
GraphQL Flow

Our application uses GraphQL for its queries and mutations. GraphQL controls the flow of data between the frontend, backend, and database for almost all requests from logged-in users. It doesn't handle anonymous requests; we have separate Controller classes for those.

GraphQL has extensive documentation which you may find helpful: https://graphql.org/learn/

This page aims to document SimpleReport's specific GraphQL implementation for engineers new to the project.

GraphQL has two kinds of operations that we use: queries and mutations. Queries only read data, while mutations change the data in our database. Fetching the test queue for a given facility is a query; adding a new patient is a mutation.

Our frontend calls queries and mutations, which are routed to various Resolver classes on the backend. These resolvers work with our database, directly or indirectly, to fetch or change data as needed. The query and mutation definitions form a contract between the frontend and backend, written in .graphqls schema files. The frontend must call these operations exactly as the schema specifies, and the backend must provide a method that implements each one.

For more on how requests actually reach the resolvers, see the discussion of Spring wiring at the end of this page.

We define our GraphQL queries, mutations, and types in three schema files: main.graphqls, admin.graphqls, and wiring.graphqls.

main.graphqls defines most of the interactions seen by end users, such as adding patients, facilities, tests, and API users, as well as fetching data like tests, the organization name, and the patient list. It also defines several types used across different queries and mutations, such as Organizations and Facilities. These types usually mirror our database tables closely, but that's only a convention; nothing requires them to be the same.

admin.graphqls defines queries and mutations for superadmins and support staff. These include resending a group of results to ReportStream, creating or identity-verifying a new organization, and marking API users or facilities as deleted or undeleted.

wiring.graphqls defines some common types for GraphQL usage, including permission levels, roles, and some statuses. It does not currently define any mutations or queries.

Whenever these .graphqls files change, run yarn codegen in the frontend directory. This regenerates graphql.tsx, which converts all our queries, mutations, and types into TypeScript types and hooks. Before the team adopted this generated file, using a query or mutation on the frontend meant first re-defining it as a TypeScript type inside your component file. That was very annoying, so just use the generated types instead!

Frontend component folders also have an operations.graphql file that defines the queries and mutations those components use. When you add an operation there and run yarn codegen, a matching typed hook (for example, useGetUsersAndStatusQuery) is generated in graphql.tsx.

Let's follow the path of a GraphQL query all the way through our application. (This was written on 12/1/2021; apologies if it's out of date by the time you read it.)

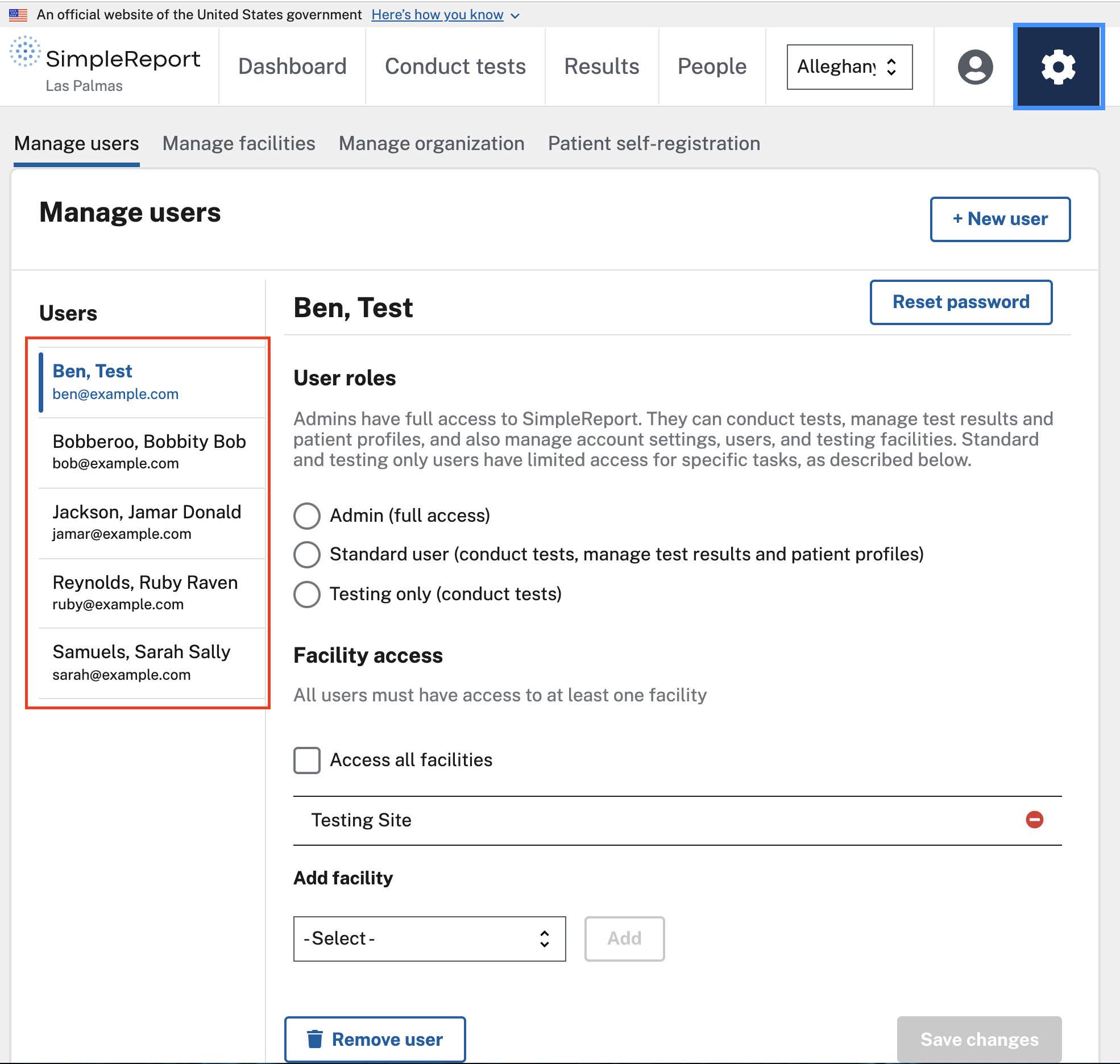

In this example, we'll look at fetching the list of users on the Manage Users page.

ManageUsersContainer.tsx and ManageUsers.tsx hold most of the frontend logic for this page. The query we're interested in here is getUsersAndStatus, which fetches basic user and account status information for the user list. For each user in an organization, it returns the user's id, name, email address, and account status (as a string).

The query's schema definition lives in main.graphqls, but on the frontend we use its generated TypeScript version in graphql.tsx.

Notice that the query called in ManageUsersContainer returns a refetch function, which gets passed to ManageUsers. This refetch lets us run the query again when the underlying data changes, e.g., when a user is added to or removed from the organization.

The initial state within ManageUsers also contains a list of users, which is the output of the initial call to getUsersAndStatus. This list is sorted and passed into the UsersSideNav component.
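As an illustration of the sorting step, here is a minimal sketch that orders users alphabetically by last name, then first name. The sort key and the `UserSummary` shape are assumptions; the actual comparator in ManageUsers may sort differently.

```typescript
// Hypothetical shape of one entry in the user list; field names are assumptions.
interface UserSummary {
  id: string;
  firstName: string;
  lastName: string;
  email: string;
  status: string;
}

// Returns a new array sorted by last name, then first name, ignoring case.
// The input is copied first so component state is never mutated in place.
function sortUsersForSideNav(users: readonly UserSummary[]): UserSummary[] {
  return [...users].sort((a, b) => {
    const byLast = a.lastName.localeCompare(b.lastName, undefined, { sensitivity: "base" });
    if (byLast !== 0) return byLast;
    return a.firstName.localeCompare(b.firstName, undefined, { sensitivity: "base" });
  });
}
```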

On the backend, the usersWithStatus field that this query requests is resolved by the getUsersWithStatus method in UserResolver.java. That method in turn calls the ApiUserService method getUsersAndStatusInCurrentOrg.

Unlike most of our GraphQL operations, this query doesn't read only from the database; it also queries Okta to retrieve each user's account status. The Okta calls go to LiveOktaRepository, which fetches the list of all users in the given organization and returns a map from each user's email to their account status. The database is then queried directly through ApiUserRepository, which returns the database records for that set of login emails.

The results of these two lookups are merged into ApiUserWithStatus objects, which contain all the information the original GraphQL query is expected to return.
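The merge can be pictured with a minimal sketch. It's written in TypeScript for consistency with the other examples on this page, although the real logic is Java in ApiUserService. The `DbUser` and `UserWithStatus` shapes, the case-insensitive email matching, and the `"UNKNOWN"` fallback status are all assumptions for illustration.

```typescript
// Hypothetical database row (ApiUserRepository returns the real equivalent).
interface DbUser {
  id: string;
  email: string;
  firstName: string;
  lastName: string;
}

// Hypothetical merged shape, standing in for ApiUserWithStatus.
interface UserWithStatus extends DbUser {
  status: string;
}

// Joins database users with the email -> status map fetched from Okta.
// Emails are compared case-insensitively (an assumption), and a user missing
// from the Okta map gets a placeholder status instead of being dropped.
function mergeUsersWithStatus(
  dbUsers: readonly DbUser[],
  oktaStatusByEmail: ReadonlyMap<string, string>,
): UserWithStatus[] {
  const statusByLowerEmail = new Map<string, string>();
  oktaStatusByEmail.forEach((status, email) => statusByLowerEmail.set(email.toLowerCase(), status));
  return dbUsers.map((user) => ({
    ...user,
    status: statusByLowerEmail.get(user.email.toLowerCase()) ?? "UNKNOWN",
  }));
}
```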

To add a new query or mutation, the first step is finding the appropriate .graphqls file. In most cases you'll be adding to main.graphqls.

main.graphqls and admin.graphqls each have separate sections for queries and mutations, so make sure you're editing the right one.

To add a new query, give it a name and define its parameters and return type. You may also need to specify the permission level required to use it. In the example below, user is the name of the query, id is the required parameter, User is the return type (defined further up in the .graphqls file), and the MANAGE_USERS permission is required. Required parameters are marked with an exclamation point.

```graphql
user(id: ID!): User @requiredPermissions(allOf: ["MANAGE_USERS"])
```

Once you've defined the query in the schema, you'll need to write the backend logic for it (or at least a placeholder method). Find the appropriate QueryResolver or Resolver Java class; it should implement GraphQLQueryResolver. Then add a method for the new query. This method effectively defines the request and response for any HTTP call made to your new query.

Continuing the user example, the backend method to fetch a user given their id is defined in UserResolver as:

```java
public User getUser(UUID userId) {
  return new User(_userService.getUser(userId));
}
```

You'll notice that the parameters and return type of this method are the same as those defined in the schema. (The User defined here is the Java object, but it maps directly to the User defined in the graphQL schema.) If the parameters and return type don't match the schema, you'll see an error message on startup to the effect that graphQL cannot find a resolver with the appropriate method.
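To make that startup check concrete, here is a simplified model of matching a schema field to a resolver method: look for a method named after the field, or the field name with an `is` (Boolean fields only), `get`, or `getField` prefix, that takes the same number of arguments. This is loosely based on the naming rules described in the graphql-java-tools documentation and is not the library's actual code; the real matcher also allows extra parameters such as a DataFetchingEnvironment and checks types, which this sketch ignores. `ResolverMethod`, `SchemaField`, and `findResolverMethod` are hypothetical.

```typescript
// Hypothetical description of a Java method found on a resolver class.
interface ResolverMethod {
  name: string;
  parameterCount: number;
}

// Hypothetical description of a field declared in a .graphqls file.
interface SchemaField {
  name: string;
  argumentCount: number;
  returnsBoolean: boolean;
}

const capitalize = (s: string): string => s.charAt(0).toUpperCase() + s.slice(1);

// Returns the first method whose name and arity fit the field, or throws,
// mimicking the "cannot find a resolver method" failure seen on startup.
function findResolverMethod(field: SchemaField, methods: readonly ResolverMethod[]): ResolverMethod {
  const cap = capitalize(field.name);
  const candidates = [
    field.name,
    ...(field.returnsBoolean ? [`is${cap}`] : []),
    `get${cap}`,
    `getField${cap}`,
  ];
  for (const candidate of candidates) {
    const match = methods.find((m) => m.name === candidate && m.parameterCount === field.argumentCount);
    if (match) return match;
  }
  throw new Error(`No resolver method for field '${field.name}' (tried: ${candidates.join(", ")})`);
}
```

Under this model, `getUser(UUID userId)` in UserResolver satisfies `user(id: ID!)` because the `get` prefix is accepted and both take exactly one argument.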

Once you have the backend resolver method written, you'll want to add logic to it. The logic for most backend components is kept in Service classes, like ApiUserService.

Once the backend is complete, you'll want to wire up the frontend. Start by adding the new query or mutation to the operations.graphql file in the same directory as your frontend component.

Then run yarn codegen in the frontend directory to update the generated files, and import the generated hooks into the frontend component you want to update. To call a new query, follow this format:

```typescript
const { data, loading, error, refetch: getUsers } = useGetUsersAndStatusQuery({ fetchPolicy: "no-cache" });
```

You can access the returned data through data.fieldName, e.g., data.usersWithStatus. You'll usually want to check the loading and error states before attempting to read the data.
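A minimal sketch of that check, written as a plain function so it runs outside React. `QueryState` mirrors the `data`, `loading`, and `error` fields the generated hook returns, but the type itself, `UserRow`, and `toViewState` are simplified, hypothetical stand-ins.

```typescript
// Simplified stand-in for the fields returned by a generated query hook.
interface QueryState<TData> {
  data?: TData;
  loading: boolean;
  error?: { message: string };
}

// Hypothetical, trimmed-down row type for the GetUsersAndStatus result.
interface UserRow {
  id: string;
  email: string;
  status: string;
}

interface UsersAndStatusData {
  usersWithStatus: UserRow[] | null;
}

type ViewState =
  | { kind: "loading" }
  | { kind: "error"; message: string }
  | { kind: "ready"; users: UserRow[] };

// Checks loading and error first, and only then reads data.usersWithStatus.
function toViewState(result: QueryState<UsersAndStatusData>): ViewState {
  if (result.loading) return { kind: "loading" };
  if (result.error) return { kind: "error", message: result.error.message };
  return { kind: "ready", users: result.data?.usersWithStatus ?? [] };
}
```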

To call a mutation, use the generated use{MutationName}Mutation hook, such as useResendActivationEmailMutation(). The hook returns a tuple whose first element is the mutation function; once you've assigned it to a variable, call it with any required variables, like so:

```typescript
const [resendUserActivationEmail] = useResendActivationEmailMutation();
await resendUserActivationEmail({ variables: { id: userId } });
```
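A mutation that changes the user list is often paired with the refetch function from the query hook, as ManageUsersContainer does. The sketch below takes both functions as parameters so it can run without React or the GraphQL client; `MutateFn`, `RefetchFn`, and `mutateThenRefetch` are hypothetical names, and the real generated mutation function accepts more options than shown here.

```typescript
// Minimal stand-in for the function returned by a generated use...Mutation hook.
type MutateFn<TVariables> = (options: { variables: TVariables }) => Promise<unknown>;

// Minimal stand-in for the refetch function returned by a generated query hook.
type RefetchFn = () => Promise<unknown>;

// Runs the mutation, then refetches so the UI reflects the change.
// Returns false (instead of throwing) when the mutation fails, and skips
// the refetch in that case, so the caller can show an error message.
async function mutateThenRefetch<TVariables>(
  mutate: MutateFn<TVariables>,
  variables: TVariables,
  refetch: RefetchFn,
): Promise<boolean> {
  try {
    await mutate({ variables });
  } catch {
    return false;
  }
  await refetch();
  return true;
}
```

In a component, this would be called with the destructured mutation function, its variables, and the renamed refetch (getUsers in the query example above).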

Editing queries and mutations follows much the same path as adding new ones, with one caveat: your changes must be backwards-compatible on both the frontend and the backend. Be prepared for new backend code to run alongside old frontend code, and for new frontend code to run against old backend code. If that breaks, the on-call engineer will almost certainly be paged and we'll need to roll back, so please be careful!

To maintain backwards compatibility, think carefully about the data passed through the GraphQL schema. Removing a required field or argument, for example, may need two steps: one to make it optional and a second to remove it entirely. Otherwise a stale frontend may keep sending the removed argument, and the backend will reject the request because its schema no longer declares it.
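The reasoning behind the two-step removal can be checked with a toy model of argument validation. Each schema version lists its arguments and whether they are required; a request fails if it sends an argument the schema doesn't declare or omits a required one, which mirrors two of GraphQL's standard validation rules. This is a sketch, not how our backend validates requests, and the `reason` argument and version names are hypothetical.

```typescript
// Toy model of one schema version: argument name -> whether it is required (`!`).
type ArgSpec = Record<string, boolean>;

// Every argument sent must be declared, and every required argument must be sent.
function validateArgs(spec: ArgSpec, sent: readonly string[]): string[] {
  const errors: string[] = [];
  for (const name of sent) {
    if (!Object.prototype.hasOwnProperty.call(spec, name)) errors.push(`Unknown argument '${name}'`);
  }
  for (const [name, required] of Object.entries(spec)) {
    if (required && !sent.includes(name)) errors.push(`Missing required argument '${name}'`);
  }
  return errors;
}

// Hypothetical mutation going from `reason: String!` to no `reason` at all.
const v1: ArgSpec = { id: true, reason: true }; // original schema
const v2: ArgSpec = { id: true, reason: false }; // step 1: make it optional
const v3: ArgSpec = { id: true }; // step 2: remove it

const oldClient = ["id", "reason"]; // stale frontend still sends `reason`
const newClient = ["id"]; // updated frontend no longer sends it

// Jumping straight from v1 to v3 breaks whichever side deploys second:
console.log(validateArgs(v3, oldClient)); // old frontend vs. new backend: unknown argument
console.log(validateArgs(v1, newClient)); // new frontend vs. old backend: missing argument
// Shipping v2 first means both frontends are accepted while they coexist:
console.log(validateArgs(v2, oldClient), validateArgs(v2, newClient));
```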

All of the above is helpful development information, but doesn't really tell you how graphQL ~ magically ~ integrates with our Spring application. There's no Controller class, no bean that seems to define the wiring in SpringBootApplication, so how does it all work?

The TL;DR is that we don't really control any of the wiring ourselves; it's all taken care of by the graphql-java-spring library. We set up the basic Spring application, installed that library, and configured our GraphQL settings in application.yaml to start using it. I believe (but haven't fully verified) that we're using that library's GraphQLController class to actually catch the HTTP requests, which we've specified as anything that hits the /graphql endpoint. (This endpoint isn't included in our WebConfiguration list of endpoints because it's specified under graphql > servlet > mapping in application.yaml instead.)

That library has a couple of other interesting classes, including DefaultGraphQLInvocation, which actually executes the requests, and GraphQLRequestBody, which seems to model a GraphQL operation together with its variables.
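To make the operation-plus-variables idea concrete, here is roughly what a client sends to the /graphql endpoint: an HTTP POST whose JSON body carries the operation text, its name, and its variables, which is the conventional GraphQL-over-HTTP shape. This sketch builds such a request by hand. `GraphQLPostBody` and `buildGraphQLRequest` are hypothetical, the field selection in the query is illustrative, and in the app the client library behind the generated hooks does all of this for you.

```typescript
// Body of a conventional GraphQL-over-HTTP POST request.
interface GraphQLPostBody {
  operationName: string;
  query: string;
  variables: Record<string, unknown>;
}

// Illustrative operation; the real field selection lives in operations.graphql.
const GET_USERS_AND_STATUS = `
  query GetUsersAndStatus {
    usersWithStatus {
      id
      email
      status
    }
  }
`;

// Hypothetical helper: builds the method, headers, and JSON body a client
// would POST to the /graphql endpoint mapped in application.yaml.
function buildGraphQLRequest(body: GraphQLPostBody): {
  method: "POST";
  headers: Record<string, string>;
  body: string;
} {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(body),
  };
}

const request = buildGraphQLRequest({
  operationName: "GetUsersAndStatus",
  query: GET_USERS_AND_STATUS,
  variables: {},
});
// In a browser this could be sent with fetch("/graphql", request),
// plus whatever authentication headers the app requires.
```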

We do have some configuration in our own code: RequiredPermissionsDirectiveWiring, DefaultArgumentValidation, ExceptionWrappingManager, and the various DataResolver classes that implement GraphQLResolver (see TestResultDataResolver for an example). The latter three are fairly standard: DefaultArgumentValidation gives arguments a length limit to guard against injection attempts and the like, ExceptionWrappingManager catches exceptions, and the DataResolvers transform our backend data models into their GraphQL type equivalents.

RequiredPermissionsDirectiveWiring is a little more unusual. It implements graphql-java's SchemaDirectiveWiring (confusingly, from a slightly different GraphQL library than the other classes mentioned here) and validates that a given user has permission to access the objects, arguments, and fields in any GraphQL request. This is one piece of the magic that links our Okta-granted group permissions to the permissions declared in the GraphQL schema, and decides when to grant or deny access based on group membership. (The userPermissions part of that file is where we get into the nitty-gritty.)
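Stripped of the graphql-java plumbing, the core decision behind `@requiredPermissions(allOf: [...])` is a set-containment check. The sketch below models only that decision, plus an argument-length guard in the spirit of DefaultArgumentValidation. It's TypeScript for consistency with the other examples (the real code is Java), and `canAccess`, `validateArgumentLengths`, the "no directive means unrestricted" rule, and the 256-character limit are assumptions, not values taken from the codebase.

```typescript
// Permissions a field declares via @requiredPermissions(allOf: [...]).
interface RequiredPermissions {
  allOf: readonly string[];
}

// True only if the user holds every permission listed in allOf.
// A field with no directive (undefined) is treated as unrestricted in this sketch.
function canAccess(required: RequiredPermissions | undefined, userPermissions: ReadonlySet<string>): boolean {
  if (!required) return true;
  return required.allOf.every((permission) => userPermissions.has(permission));
}

// Hypothetical limit; the real maximum in DefaultArgumentValidation may differ.
const MAX_ARGUMENT_LENGTH = 256;

// Returns the names of string arguments that exceed the length limit.
function validateArgumentLengths(args: Record<string, unknown>): string[] {
  return Object.entries(args)
    .filter(([, value]) => typeof value === "string" && value.length > MAX_ARGUMENT_LENGTH)
    .map(([name]) => name);
}
```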
