diff --git a/pika/README_pika.md b/pika/README_pika.md

new file mode 100644

index 000000000..2e8c4729c

--- /dev/null

+++ b/pika/README_pika.md

@@ -0,0 +1,91 @@

+# Codis & Pika guide (sharding mode)

+

+## Prepare

+

+### Before you start, learn about [Codis](https://github.com/CodisLabs/codis) and [Pika](https://github.com/OpenAtomFoundation/pika)

+

+### Build codis image

+```

+cd codis_dir

+docker build -f Dockerfile -t codis-image:v3.2 .

+```

+

+### Configure Pika

+Make sure **instance-mode** in pika.conf is set to `sharding` and **default-slot-num** is set to 1024, because Codis uses 1024 slots. If you need a different slot count, you must change **default-slot-num** and rebuild Codis with the same slot number.

+

+```

+instance-mode : sharding

+default-slot-num : 1024

+```
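You can check both settings before starting any containers by reading them back out of the file. The following is a sketch. It assumes the `key : value` layout of the `pika.conf` in this PR. `conf_value` and `check_pika_conf` are hypothetical helper names and are not commands provided by Pika or Codis.

```shell
# Hypothetical helpers (not part of Pika or Codis) for checking pika.conf.
# Assumes the "key : value" layout used by the pika.conf in this PR.

# Print the value of KEY from FILE (empty if the key is absent or commented out).
conf_value() {
  local file="$1" key="$2"
  awk -v k="$key" '
    $0 ~ ("^" k "[ \t]*:") {
      sub("^" k "[ \t]*:[ \t]*", "")   # drop the "key :" prefix
      sub(/[ \t]+$/, "")               # drop trailing whitespace
      print
      exit
    }' "$file"
}

# Succeed only if FILE is set up for Codis sharding (1024 slots).
check_pika_conf() {
  local file="$1" mode slots
  mode="$(conf_value "$file" instance-mode)"
  slots="$(conf_value "$file" default-slot-num)"
  if [ "$mode" != "sharding" ]; then
    echo "instance-mode is '${mode}', expected 'sharding'" >&2
    return 1
  fi
  if [ "$slots" != "1024" ]; then
    echo "default-slot-num is '${slots}', expected 1024" >&2
    return 1
  fi
  echo "ok: ${file}"
}
```

For example, run `check_pika_conf ../pika/pika.conf` from `codis_dir/scripts` before `docker_pika.sh pika` mounts the file into the containers.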

+---

+## Start

+

+### 1. Make it run

+- Run the following commands one by one:

+```

+cd codis_dir/scripts

+sudo bash docker_pika.sh zookeeper

+sudo bash docker_pika.sh dashboard

+sudo bash docker_pika.sh proxy

+sudo bash docker_pika.sh fe

+sudo bash docker_pika.sh pika

+```

+

+- Or run them in one line, with a pause so that ZooKeeper is up before the dashboard starts:

+```

+sudo bash docker_pika.sh zookeeper && sleep 10s && sudo bash docker_pika.sh dashboard && sudo bash docker_pika.sh proxy && sudo bash docker_pika.sh fe && sudo bash docker_pika.sh pika

+```
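`docker_pika.sh` returns as soon as `docker run` has started a container, not when the service inside is ready, so the fixed `sleep 10s` is only a guess. A more robust pattern is to retry a real readiness check. The following is a sketch that assumes bash. `retry` and `RETRY_DELAY` are hypothetical names. A plain TCP probe of a published port is a weak check, because Docker's userland proxy may accept the connection before the service in the container is listening. An application-level probe such as `redis-cli ... ping` avoids that problem.

```shell
# Hypothetical helper: run a command until it succeeds, at most ATTEMPTS times,
# sleeping RETRY_DELAY seconds (default 1) between attempts.
retry() {
  local attempts="$1" i
  shift
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "${RETRY_DELAY:-1}"
    fi
  done
  echo "gave up after ${attempts} attempts: $*" >&2
  return 1
}

# Example (from codis_dir/scripts, assuming redis-cli on the host): wait until
# the first Pika instance answers PING through its published port.
# sudo bash docker_pika.sh pika && retry 30 redis-cli -h 127.0.0.1 -p 29221 ping
```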

+

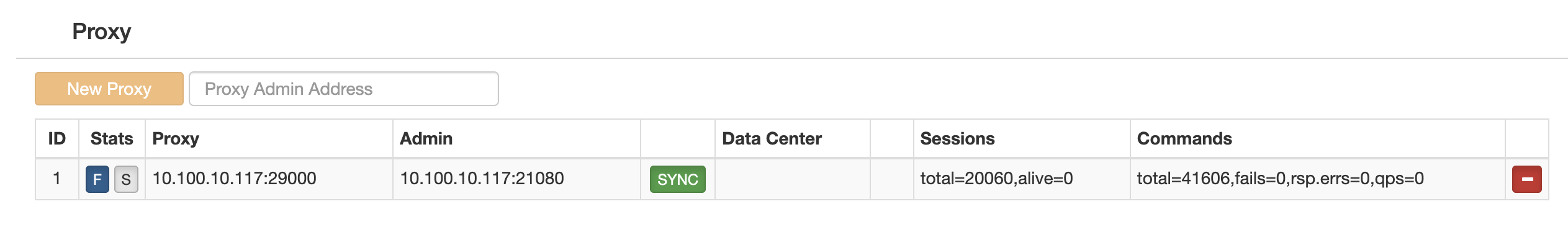

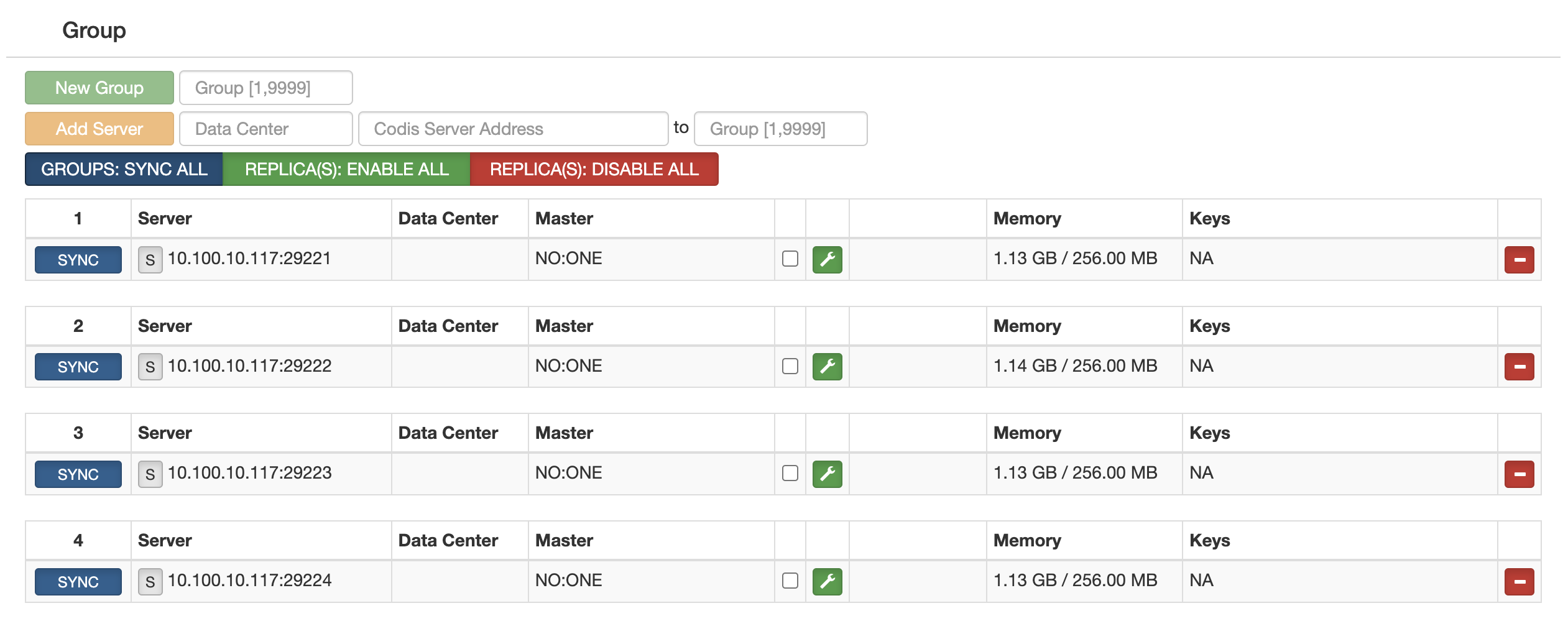

+### 2. Configure Codis in codis-fe

+#### Configure the cluster in codis-fe (http://fehost:8080/)

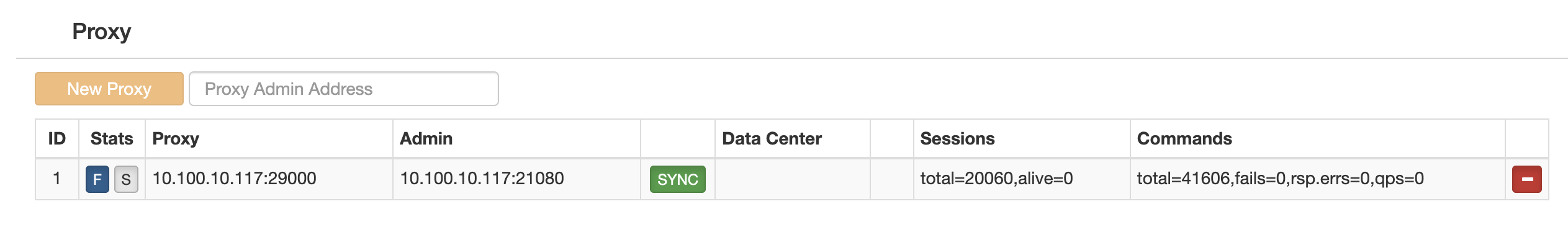

+- Add the proxy

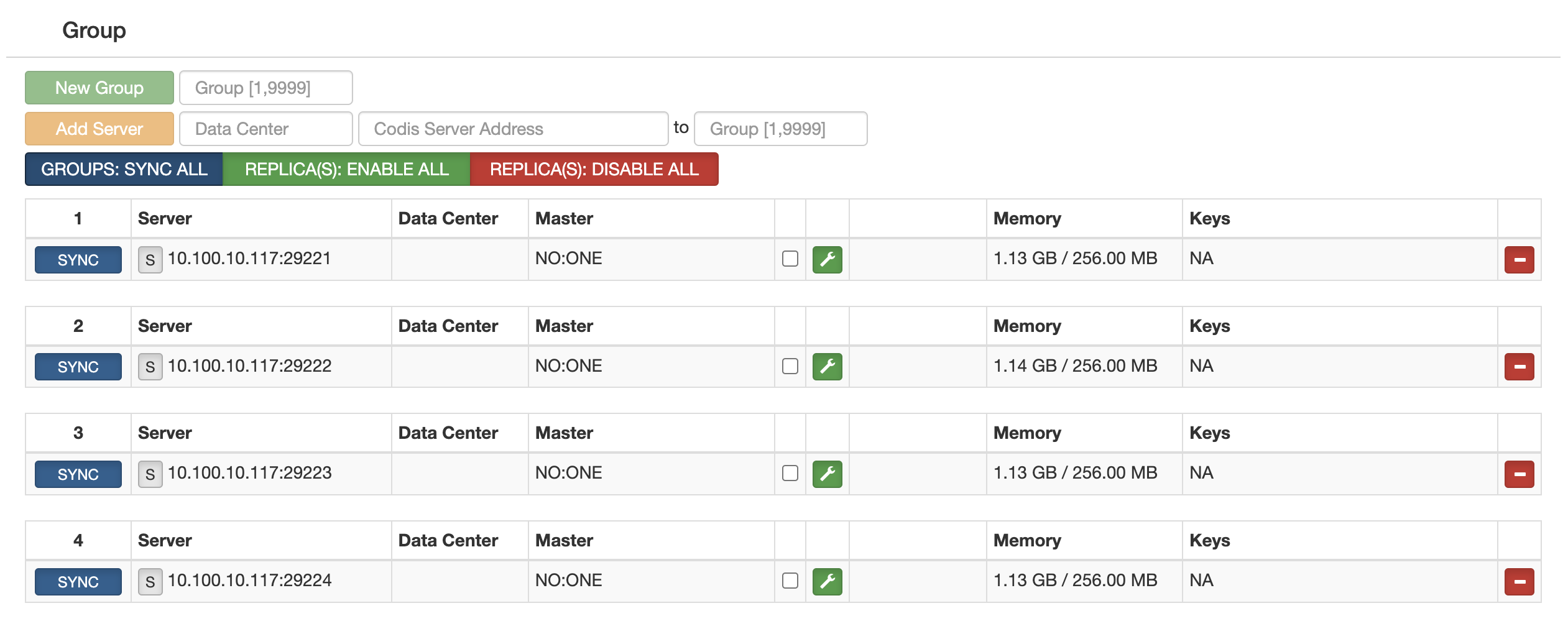

+- Create the groups

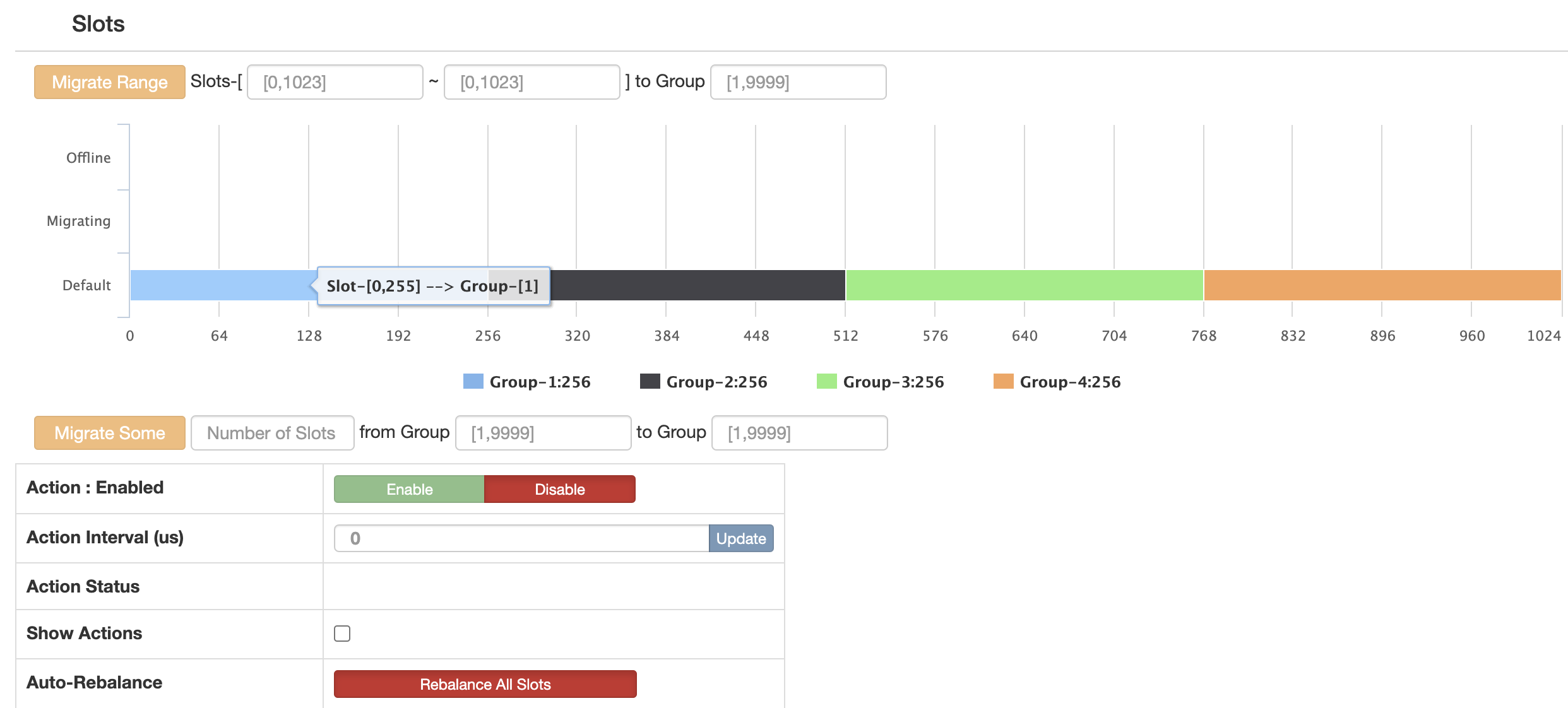

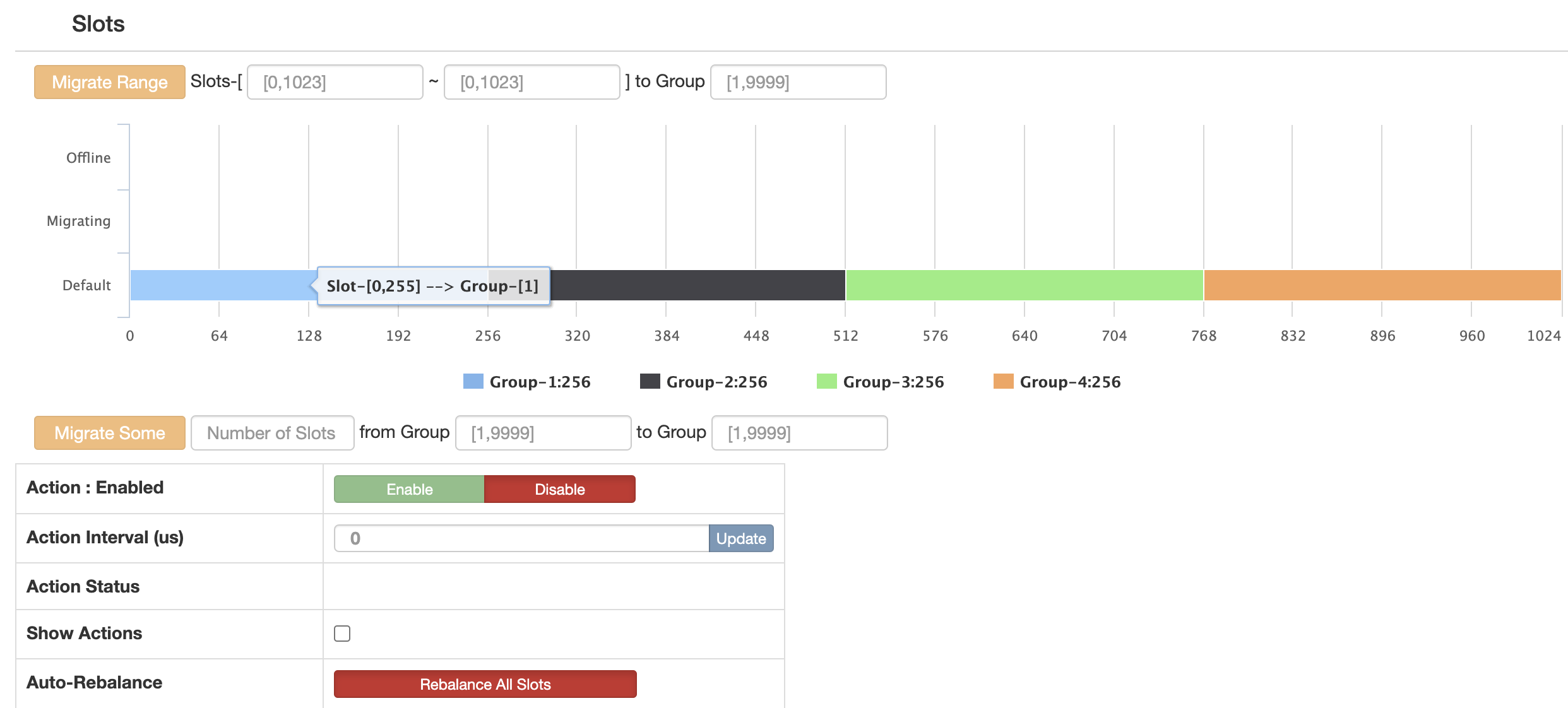

+- Rebalance the slots

+

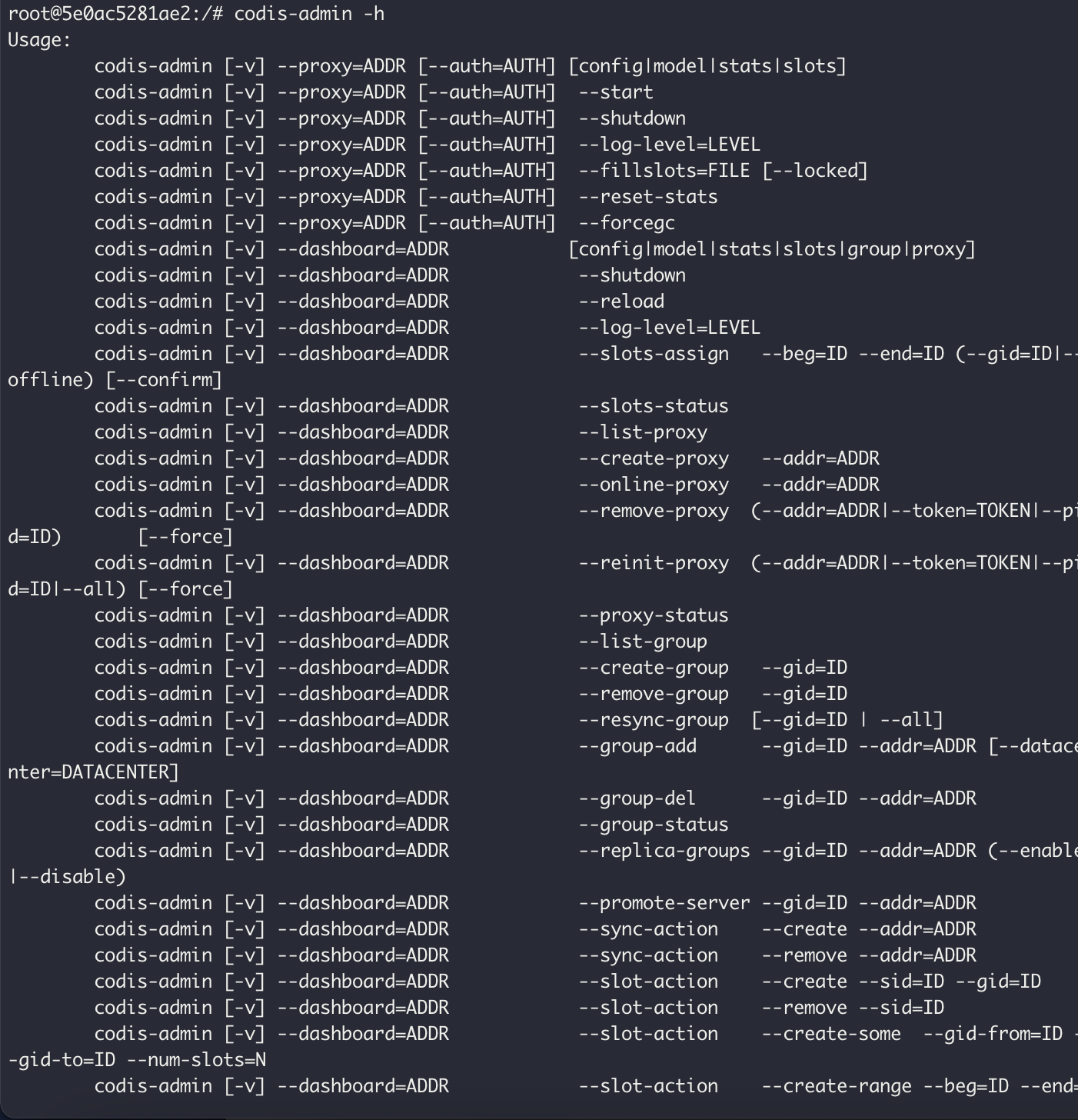

+### 2. Or configure Codis by command

+- Log in to the dashboard container and use `codis-admin` to perform the operations above

+

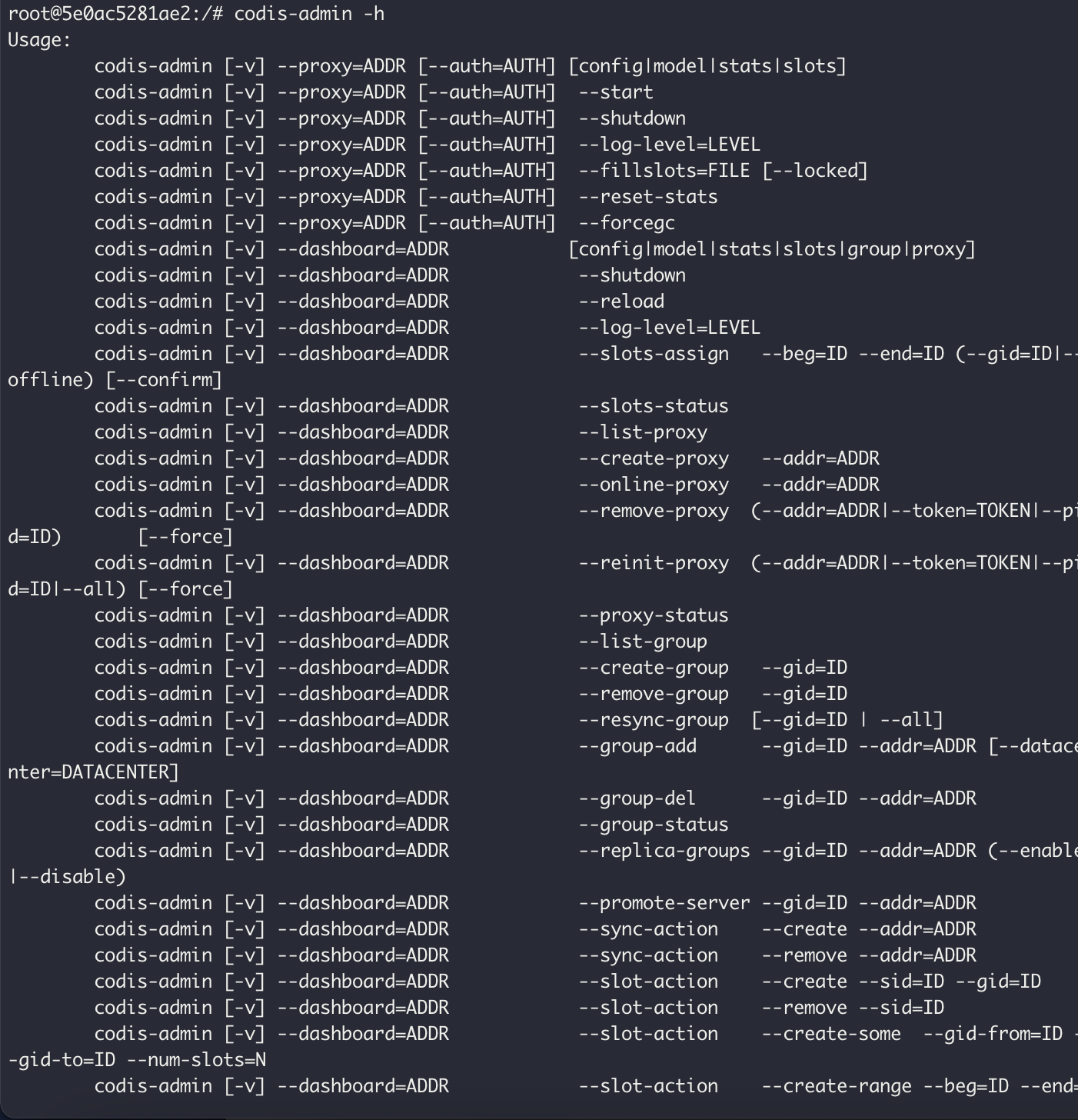
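The same operations can be scripted with `codis-admin`, the Codis command-line admin tool. This is a sketch under several assumptions:

- `codis-admin` is on the `PATH` inside the dashboard container, as `codis-dashboard` is in `docker_pika.sh`.
- The dashboard admin address is `${hostip}:28080` and the proxy admin address is `${hostip}:21080`.
- There is one Pika instance per group, on host ports 29221 to 29224.

The flag names are recalled from the `codis-admin` usage text of Codis 3.x, so check them with `codis-admin --help` before running anything. `codis_setup_cmds` and `run_codis_setup` are hypothetical names. By default the script only prints the commands.

```shell
# Hypothetical helpers that script the codis-fe steps with codis-admin.
# Flag names are as recalled from the codis-admin usage text (Codis 3.x);
# verify them with `codis-admin --help` first.

# Print the commands for HOSTIP and GROUPS groups (one Pika instance per group).
codis_setup_cmds() {
  local hostip="$1" groups="${2:-4}" gid
  local dashboard="${hostip}:28080"   # --host-admin of codis-dashboard in docker_pika.sh
  echo "codis-admin --dashboard=${dashboard} --create-proxy -x ${hostip}:21080"
  for ((gid = 1; gid <= groups; gid++)); do
    echo "codis-admin --dashboard=${dashboard} --create-group --gid=${gid}"
    # Pika containers are published on 29221, 29222, ... by docker_pika.sh.
    echo "codis-admin --dashboard=${dashboard} --group-add --gid=${gid} --addr=${hostip}:$((29220 + gid))"
  done
  echo "codis-admin --dashboard=${dashboard} --rebalance --confirm"
}

# Show each command; execute it only when RUN=1, stopping at the first failure.
run_codis_setup() {
  local cmd
  while IFS= read -r cmd; do
    echo "+ ${cmd}"
    if [ "${RUN:-0}" = "1" ]; then
      eval "$cmd" || return 1
    fi
  done < <(codis_setup_cmds "$@")
}
```

Inside the container, `run_codis_setup <hostip> 4` prints the plan for review, and `RUN=1 run_codis_setup <hostip> 4` executes it.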

+

+### 3. Initialize Pika

+- Add slots to each Pika instance

+

+Suppose you have 4 groups, with one Pika instance assigned to each. Each Pika instance then owns 256 slots (1024 / 4 = 256); the start and end of its range depend on which group it belongs to.

+```

+# pika in group 1

+pkcluster addslots 0-255

+# pika in group 2

+pkcluster addslots 256-511

+# pika in group 3

+pkcluster addslots 512-767

+# pika in group 4

+pkcluster addslots 768-1023

+```
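The ranges above come from splitting the 1024 slots into equal contiguous blocks, one per group. The sketch below computes them for any number of groups and prints one `redis-cli` call per Pika instance. Pika speaks the Redis protocol, so `pkcluster` can be sent like any other command.

- It assumes the host ports 29221, 29222, ... that `docker_pika.sh` publishes, with one instance per group in that order.
- `slot_range` and `addslots_cmds` are hypothetical helpers.
- When the slot count does not divide evenly, this sketch gives the remainder to the last group. That may not match how Codis rebalances, so compare the result with the slot assignment shown in codis-fe.

```shell
# Hypothetical helpers for splitting Codis's slots across Pika groups.

# Print the "start-end" range owned by GROUP (1-based) out of GROUPS groups.
# Equal contiguous blocks; the last group also takes any remainder.
slot_range() {
  local group="$1" groups="$2" total="${3:-1024}"
  local size=$((total / groups))
  local start=$(( (group - 1) * size ))
  local end=$(( start + size - 1 ))
  if [ "$group" -eq "$groups" ]; then
    end=$(( total - 1 ))
  fi
  echo "${start}-${end}"
}

# Print one redis-cli call per Pika instance (host ports 29221, 29222, ...).
addslots_cmds() {
  local hostip="$1" groups="${2:-4}" total="${3:-1024}" g
  for ((g = 1; g <= groups; g++)); do
    echo "redis-cli -h ${hostip} -p $((29220 + g)) pkcluster addslots $(slot_range "$g" "$groups" "$total")"
  done
}
```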

+

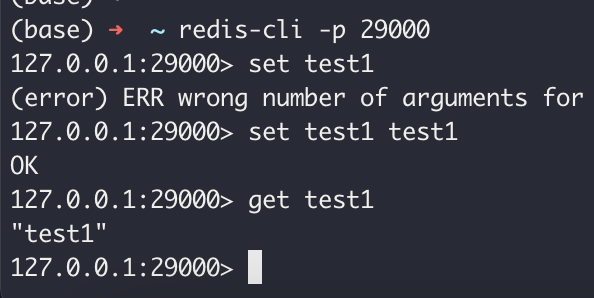

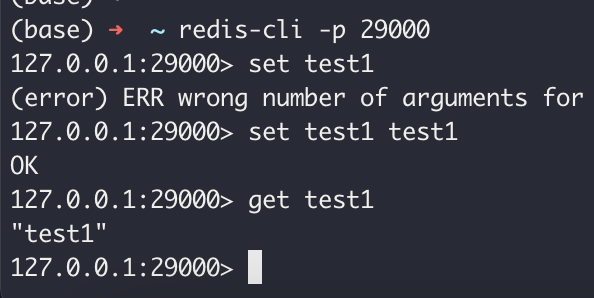

+### 4. Test proxy

+- Connect a client to the Codis proxy and test it.
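A minimal round trip through the proxy is usually enough to confirm that requests reach Pika. The following is a sketch. It assumes `redis-cli` is installed on the host and that the proxy is reachable at the `--host-proxy` address from `docker_pika.sh` (host port 29000). `proxy_smoke_test` is a hypothetical helper. The `REDIS_CLI` variable lets you substitute another client binary.

```shell
# Hypothetical helper: SET a key through the Codis proxy, read it back, then
# delete it. Returns non-zero if the value does not round-trip.
proxy_smoke_test() {
  local host="$1" port="${2:-29000}"
  local cli="${REDIS_CLI:-redis-cli}"
  local key="codis-pika-smoke:$$" val="hello-pika"
  # redis-cli prints raw replies (no quotes) when stdout is not a terminal.
  [ "$("$cli" -h "$host" -p "$port" SET "$key" "$val")" = "OK" ] || return 1
  [ "$("$cli" -h "$host" -p "$port" GET "$key")" = "$val" ] || return 1
  "$cli" -h "$host" -p "$port" DEL "$key" > /dev/null
  echo "proxy ${host}:${port} ok"
}
```

For example, run `proxy_smoke_test <hostip> 29000` after the slots have been added.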

+

+## DevOps

+

+### Make a Pika instance a slave of a master

+```

+pkcluster slotsslaveof masterIp masterPort slotOffset-slotEnd

+```
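With the containers from `docker_pika.sh`, the master address should probably be the host IP and the published port (29221, ...), not 9221 inside the container. The script publishes the Pika port and the ports 1000 and 2000 above it (rsync and replication), and it appears to be set up so that a slave can reach all three on the host. The following is a sketch. `slaveof_cmd` is a hypothetical helper, and 29225 in the example is a hypothetical fifth instance.

```shell
# Hypothetical helper: print the redis-cli call that makes the Pika instance at
# SLAVE_HOST:SLAVE_PORT replicate SLOTS from MASTER_IP:MASTER_PORT.
# Use the host IP and the published port (29221, ...) for the master, since
# docker_pika.sh also publishes the +1000 and +2000 ports next to it.
slaveof_cmd() {
  local slave_host="$1" slave_port="$2" master_ip="$3" master_port="$4" slots="$5"
  echo "redis-cli -h ${slave_host} -p ${slave_port}" \
    "pkcluster slotsslaveof ${master_ip} ${master_port} ${slots}"
}

# Example: slaveof_cmd 10.0.0.1 29225 10.0.0.1 29221 0-255
```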

+

+### Migrate group 1 to group 5

+1. Create a new group 5.

+2. Make the group 5 master instance a slave of the group 1 master instance.

+3. Make the group 5 slave instances slaves of the group 5 master instance.

+4. When the lag between the group 1 master and the group 5 master is small, migrate all group 1 slots to group 5.

+5. When the lag between the group 1 master and the group 5 master is 0, make the group 5 master instance `slaveof no one`.

+6. Delete the group 1 instances.
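The following is a dry-run sketch that prints the commands for these steps in order and executes nothing. It rests on several assumptions:

- Group 1's master is at `${hostip}:29221` and owns `0-255`.
- Group 5's master is a fifth instance at `${hostip}:29225`. `docker_pika.sh pika` starts only four instances, although its cleanup cases also remove a fifth.
- The new master needs the slots added before it can replicate them.
- The `pkcluster slotsslaveof no one` form and the `codis-admin` flags are recalled rather than confirmed, so check them against your Pika and Codis versions.

Lag checks are left to the operator. `migration_plan` is a hypothetical name.

```shell
# Hypothetical dry-run planner for the six steps above. Nothing is executed.
# Assumptions: group 1 master at HOSTIP:29221 owns SLOTS; the new group 5 master
# runs at HOSTIP:29225; codis-admin flags and "slotsslaveof no one" need checking
# against your Codis/Pika versions.
migration_plan() {
  local hostip="$1" slots="${2:-0-255}"
  local dashboard="${hostip}:28080" new_master="-h ${hostip} -p 29225"
  local beg="${slots%%-*}" end="${slots##*-}"
  echo "# 1. create group 5 and add its master"
  echo "codis-admin --dashboard=${dashboard} --create-group --gid=5"
  echo "codis-admin --dashboard=${dashboard} --group-add --gid=5 --addr=${hostip}:29225"
  echo "# 2. group 5 master replicates group 1's slots (assumes slots must exist first)"
  echo "redis-cli ${new_master} pkcluster addslots ${slots}"
  echo "redis-cli ${new_master} pkcluster slotsslaveof ${hostip} 29221 ${slots}"
  echo "# 3. point each group 5 slave at ${hostip}:29225 the same way"
  echo "# 4. once the lag is small, move the slots to group 5 in Codis"
  echo "codis-admin --dashboard=${dashboard} --slot-action --create-range --beg=${beg} --end=${end} --gid=5"
  echo "# 5. once the lag is 0, stop replicating from group 1"
  echo "redis-cli ${new_master} pkcluster slotsslaveof no one ${slots}"
  echo "# 6. remove group 1 from Codis, then delete its containers"
}
```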

+

+

diff --git a/pika/pika.conf b/pika/pika.conf

new file mode 100644

index 000000000..ae13bcc00

--- /dev/null

+++ b/pika/pika.conf

@@ -0,0 +1,151 @@

+# Pika port

+port : 9221

+# Thread Number

+thread-num : 4

+# Thread Pool Size

+thread-pool-size : 12

+# Sync Thread Number

+sync-thread-num : 6

+# Pika log path

+log-path : /data/pika/log/

+# Pika db path

+db-path : /data/pika/db/

+# Pika write-buffer-size

+write-buffer-size : 268435456

+# size of one block in arena memory allocation.

+# If <= 0, a proper value is automatically calculated

+# (usually 1/8 of write-buffer-size, rounded up to a multiple of 4KB)

+arena-block-size :

+# Pika timeout

+timeout : 60

+# Requirepass

+requirepass :

+# Masterauth

+masterauth :

+# Userpass

+userpass :

+# User Blacklist

+userblacklist :

+# If this option is set to 'classic', Pika supports multiple DBs, and the

+# 'databases' option takes effect.

+# If this option is set to 'sharding', Pika supports multiple tables, and you

+# can specify the slot number for each table; the 'default-slot-num' option takes effect.

+# Pika instance mode [classic | sharding]

+instance-mode : sharding

+# Set the number of databases. The default database is DB 0, you can select

+# a different one on a per-connection basis using SELECT where

+# dbid is a number between 0 and 'databases' - 1, limited in [1, 8]

+databases : 1

+# default slot number each table in sharding mode

+default-slot-num : 1024

+# replication num defines how many followers in a single raft group, only [0, 1, 2, 3, 4] is valid

+replication-num : 0

+# consensus level defines how many confirmations the leader must get before committing this log to the client;

+# only [0, ...replication-num] is valid

+consensus-level : 0

+# Dump Prefix

+dump-prefix :

+# daemonize [yes | no]

+#daemonize : yes

+# Dump Path

+dump-path : /data/pika/dump/

+# Expire-dump-days

+dump-expire : 0

+# pidfile Path

+pidfile : /data/pika/pika.pid

+# Max Connection

+maxclients : 20000

+# the per file size of sst to compact, default is 20M

+target-file-size-base : 20971520

+# Expire-logs-days

+expire-logs-days : 7

+# Expire-logs-nums

+expire-logs-nums : 10

+# Root-connection-num

+root-connection-num : 2

+# Slowlog-write-errorlog

+slowlog-write-errorlog : no

+# Slowlog-log-slower-than

+slowlog-log-slower-than : 10000

+# Slowlog-max-len

+slowlog-max-len : 128

+# Pika db sync path

+db-sync-path : /data/pika/dbsync/

+# db sync speed (MB): max is 1024, min is 0; values below 0 or above 1024 are adjusted to 1024

+db-sync-speed : -1

+# The slave priority

+slave-priority : 100

+# network interface

+#network-interface : eth1

+# replication

+#slaveof : master-ip:master-port

+

+# CronTask, format 1: start-end/ratio, like 02-04/60: pika will check whether to schedule compaction between 2 and 4 o'clock every day

+# if the freesize/disksize > 60%.

+# format 2: week/start-end/ratio, like 3/02-04/60: pika will check whether to schedule compaction between 2 and 4 o'clock

+# every Wednesday, if the freesize/disksize > 60%.

+# NOTICE: if compact-interval is set, compact-cron is masked and disabled.

+#

+#compact-cron : 3/02-04/60

+

+# Compact-interval, format: interval/ratio, like 6/60: pika will check whether to schedule compaction every 6 hours,

+# if the freesize/disksize > 60%. NOTICE: compact-interval takes priority over compact-cron.

+#compact-interval :

+

+# the size of the flow control window while syncing binlog between master and slave. Default is 9000 and the maximum is 90000.

+sync-window-size : 9000

+# max value of connection read buffer size: configurable value 67108864(64MB) or 268435456(256MB) or 536870912(512MB)

+# default value is 268435456(256MB)

+# NOTICE: master and slave should share exactly the same value

+max-conn-rbuf-size : 268435456

+

+

+###################

+## Critical Settings

+###################

+# write_binlog [yes | no]

+write-binlog : yes

+# binlog file size: default is 100M, limited in [1K, 2G]

+binlog-file-size : 104857600

+# Automatically triggers a small compaction according to statistics.

+# Use the cache to store up to 'max-cache-statistic-keys' keys.

+# If 'max-cache-statistic-keys' is set to '0', the statistics function is turned off

+# and small compactions are not triggered automatically.

+max-cache-statistic-keys : 0

+# When a specific multi-data-structure key is deleted or overwritten 'small-compaction-threshold' times,

+# a small compaction is triggered automatically. Default is 5000, limited to [1, 100000].

+small-compaction-threshold : 5000

+# If the total size of all live memtables of all the DBs exceeds

+# the limit, a flush will be triggered in the next DB to which the next write

+# is issued.

+max-write-buffer-size : 10737418240

+# The maximum number of write buffers that are built up in memory for one ColumnFamily in DB.

+# The default and the minimum number is 2, so that when 1 write buffer

+# is being flushed to storage, new writes can continue to the other write buffer.

+# If max-write-buffer-number > 3, writing will be slowed down

+# if we are writing to the last write buffer allowed.

+max-write-buffer-number : 2

+# Limit the response size of some commands, such as SCAN and KEYS *

+max-client-response-size : 1073741824

+# Compression type supported [snappy, zlib, lz4, zstd]

+compression : snappy

+# max-background-flushes: default is 1, limited in [1, 4]

+max-background-flushes : 1

+# max-background-compactions: default is 2, limited in [1, 8]

+max-background-compactions : 2

+# maximum value of Rocksdb cached open file descriptors

+max-cache-files : 5000

+# max_bytes_for_level_multiplier: default is 10, you can change it to 5

+max-bytes-for-level-multiplier : 10

+# BlockBasedTable block_size, default 4k

+# block-size: 4096

+# block LRU cache, default 8M, 0 to disable

+# block-cache: 8388608

+# whether the block cache is shared among the RocksDB instances, default is per CF

+# share-block-cache: no

+# whether or not index and filter blocks are stored in block cache

+# cache-index-and-filter-blocks: no

+# when set to yes, bloomfilter of the last level will not be built

+# optimize-filters-for-hits: no

+# https://github.com/facebook/rocksdb/wiki/Leveled-Compaction#levels-target-size

+# level-compaction-dynamic-level-bytes: no

diff --git a/scripts/docker_pika.sh b/scripts/docker_pika.sh

new file mode 100644

index 000000000..6af8e31ed

--- /dev/null

+++ b/scripts/docker_pika.sh

@@ -0,0 +1,116 @@

+#!/bin/bash

+

+# network interface whose address is advertised; override with IFACE=... (default ens5)

+hostip=`ifconfig ${IFACE:-ens5} | grep "inet " | awk -F " " '{print $2}'`

+

+# host directory for Pika data; override with PIKA_DATA_PATH=...

+pika_out_data_path="${PIKA_DATA_PATH:-/data/chenbodeng/pika_data}"

+

+if [ "x$hostip" == "x" ]; then

+ echo "can't resolve host ip address"

+ exit 1

+fi

+

+mkdir -p log

+

+if [[ "$hostip" == *":"* ]]; then

+ echo "hostip format"

+ tmp=$hostip

+ IFS=':' read -ra ADDR <<< "$tmp"

+ for i in "${ADDR[@]}"; do

+ # process "$i"

+ hostip=$i

+ done

+fi

+

+case "$1" in

+zookeeper)

+ docker rm -f "Codis-Z2181" &> /dev/null

+ docker run --name "Codis-Z2181" -d \

+ --read-only \

+ -p 2181:2181 \

+ jplock/zookeeper

+ ;;

+

+dashboard)

+ docker rm -f "Codis-D28080" &> /dev/null

+ docker run --name "Codis-D28080" -d \

+ --read-only -v `realpath ../config/dashboard.toml`:/codis/dashboard.toml \

+ -v `realpath log`:/codis/log \

+ -p 28080:18080 \

+ codis-image:v3.2 \

+ codis-dashboard -l log/dashboard.log -c dashboard.toml --host-admin ${hostip}:28080

+ ;;

+

+proxy)

+ docker rm -f "Codis-P29000" &> /dev/null

+ docker run --name "Codis-P29000" -d \

+ --read-only -v `realpath ../config/proxy.toml`:/codis/proxy.toml \

+ -v `realpath log`:/codis/log \

+ -p 29000:19000 -p 21080:11080 \

+ codis-image:v3.2 \

+ codis-proxy -l log/proxy.log -c proxy.toml --host-admin ${hostip}:21080 --host-proxy ${hostip}:29000

+ ;;

+

+# server)

+# for ((i=0;i<4;i++)); do

+# let port="26379 + i"

+# docker rm -f "Codis-S${port}" &> /dev/null

+# docker run --name "Codis-S${port}" -d \

+# -v `realpath log`:/codis/log \

+# -p $port:6379 \

+# codis-image \

+# codis-server --logfile log/${port}.log

+# done

+# ;;

+

+pika)

+ for ((i=0;i<4;i++)); do

+ let port="29221 + i"

+ let rsync_port="30221 + i"

+ let slave_port="31221 + i"

+ docker rm -f "Codis-Pika${port}" &> /dev/null

+ docker run --name "Codis-Pika${port}" -d \

+ -p $port:9221 \

+ -p $rsync_port:10221 \

+ -p $slave_port:11221 \

+ -v "${pika_out_data_path}/Pika${port}":/data/pika \

+ -v `realpath ../pika/pika.conf`:/pika/conf/pika.conf \

+ pikadb/pika:v3.3.6

+ # docker run -dit --name pika_one_sd -p 9221:9221 -v /data2/chenbodeng/pika/conf/pika.conf:/pika/conf/pika.conf pikadb/pika:v3.3.6

+ done

+ ;;

+

+fe)

+ docker rm -f "Codis-F8080" &> /dev/null

+ docker run --name "Codis-F8080" -d \

+ -v `realpath log`:/codis/log \

+ -p 8080:8080 \

+ codis-image:v3.2 \

+ codis-fe -l log/fe.log --zookeeper ${hostip}:2181 --listen=0.0.0.0:8080 --assets=/gopath/src/github.com/CodisLabs/codis/bin/assets

+ ;;

+

+cleanup)

+ docker rm -f "Codis-D28080" &> /dev/null

+ docker rm -f "Codis-P29000" &> /dev/null

+ docker rm -f "Codis-F8080" &> /dev/null

+ for ((i=0;i<5;i++)); do

+ let port="29221 + i"

+ docker rm -f "Codis-Pika${port}" &> /dev/null

+ rm -rf "${pika_out_data_path}/Pika${port}"

+ done

+ docker rm -f "Codis-Z2181" &> /dev/null

+ ;;

+

+cleanup_pika)

+ for ((i=0;i<5;i++)); do

+ let port="29221 + i"

+ docker rm -f "Codis-Pika${port}"

+ rm -rf "${pika_out_data_path}/Pika${port}"

+ done

+ ;;

+

+*)

+ echo "usage: $0 {zookeeper|dashboard|proxy|fe|pika|cleanup|cleanup_pika}" >&2

+ exit 1

+ ;;

+

+esac

+

+
