
Vector data cubes + processes #68

Comments

Conclusion from 3rd year planning: This will not be tackled in the near future; we'll add vector-related processes once required (e.g. filter_point for the Wageningen use case, see #37). @jdries and @aljacob will explore further and may define additional processes in the future. Also related: #2.

Currently, the processes always refer to "raster data cubes". Clarify (with @edzer?) whether it would be better to just call them data cubes and handle the "type" of cube internally.

Telco: Still not needed at the moment. I'll need to go through the processes for 1.0 and check whether vector-cubes as currently used make sense. This also depends on #2.

The recent comment from @mkadunc in #2 (comment) fits better here:

What should we go for? At the moment, we have the two types

We only define vector-cube as part of aggregate_polygon and save_result and treat it as a "black box", so back-ends handle the transition. We'll dig into this again once it is needed.

Recently, the question came up of how to support vector-cubes as input data for processes. In this case, aggregate_spatial needed to be able to load more than GeoJSON. For reference, my answer:

[...] Fortunately, openEO is extensible and you can add whatever you need. The simplest option is to modify the "geometries" parameter to allow other things to be loaded. Allowing files is relatively easy. Replace:

with:

and then it also allows specifying files uploaded to the user workspace. What can actually be read then depends on your implementation. Input file formats should be exposed via GET /file_formats.

A bit more complex, but probably the way we'd standardize it later, is to use load_uploaded_files. The issue here is that we haven't really thought about how vector-cubes would work, but you could change the return value of the load_uploaded_files process as follows:

Now it supports loading vector data and returns it in a (virtual) vector data cube, which you can then accept in aggregate_spatial with the following definition for the geometries parameter:

Now you need to figure out how to pass the data between the processes, but as there's not much else that can handle vector cubes yet, you can do that in whatever way works best internally.

As far as I understand, no use case in openEO Platform requires vector processes directly. Individual processes such as aggregate_spatial may be required and can be considered on a case-by-case basis. Nevertheless, it is listed as a separate requirement in the SoW.


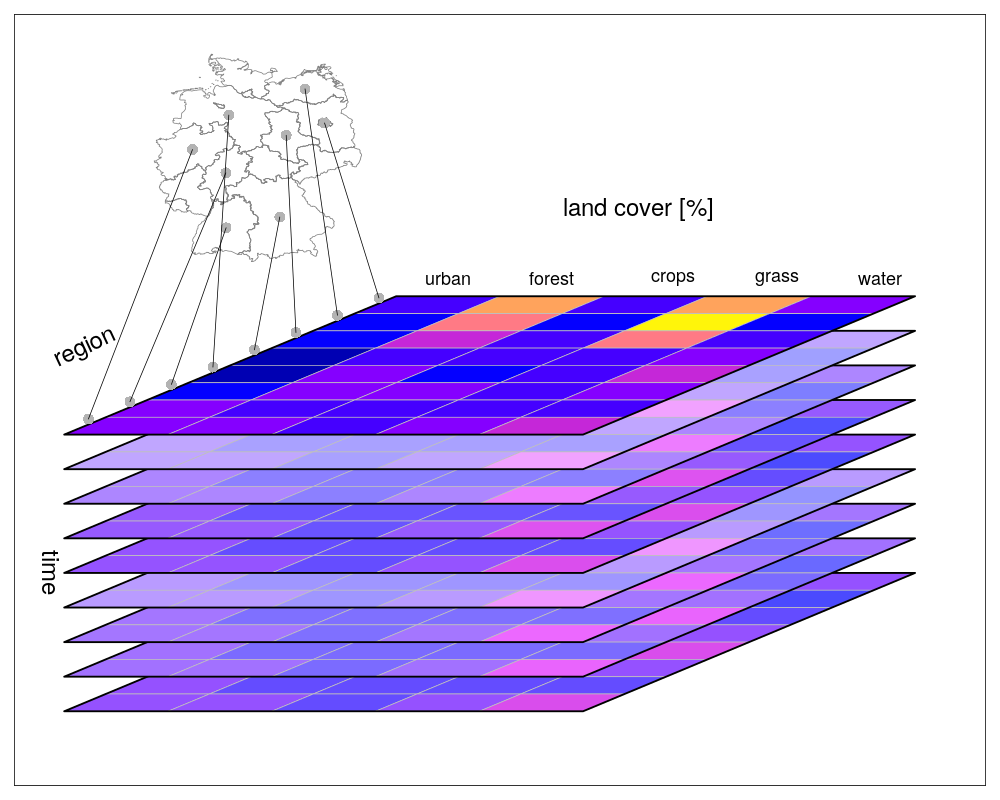

See also the confusion arising at #308. A vector data cube is an n-D cube where (at least) one of the dimensions is associated with vector geometries (points, lines, polygons, or their multi-part variants).

Example figures for 3D cubes:

Special, lower-dimensional cases:

A difficulty with this concept is that the vector data file formats we usually work with (those read/written by GDAL, from shapefile to GeoPackage to GeoJSON to geodatabase) can only cover the 2D case. To use such formats, we need to "flatten" the cube somehow to get rid of the third dimension: either in wide form over the attribute space, or in long form by repeating the geometries. A format that can properly handle vector data cubes is NetCDF, e.g. this is an example of a multipolygon x time data cube in NetCDF.
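To make the two flattening options concrete, here is a minimal sketch in plain Python. The toy cube (two point geometries x three time steps), the WKT strings, and the helper names `to_wide` and `to_long` are all made up for illustration; they are not part of openEO, GDAL, or any other library.

```python
# Hypothetical toy vector data cube: geometry x time, one value per cell.
geometries = ["POINT (0 0)", "POINT (1 1)"]   # illustrative WKT strings
times = ["2020-01", "2020-02", "2020-03"]     # illustrative time labels
values = [
    [1.0, 2.0, 3.0],  # values for geometries[0] at each time step
    [4.0, 5.0, 6.0],  # values for geometries[1] at each time step
]


def to_wide(geometries, times, values):
    """Wide form: one row per geometry, one attribute column per time step."""
    rows = []
    for geom, series in zip(geometries, values):
        row = {"geometry": geom}
        for t, v in zip(times, series):
            row[f"value_{t}"] = v
        rows.append(row)
    return rows


def to_long(geometries, times, values):
    """Long form: one row per (geometry, time) pair; geometries are repeated."""
    return [
        {"geometry": geom, "time": t, "value": v}
        for geom, series in zip(geometries, values)
        for t, v in zip(times, series)
    ]


wide_rows = to_wide(geometries, times, values)
long_rows = to_long(geometries, times, values)

for row in wide_rows:
    print(row)
for row in long_rows:
    print(row)
```

Either table fits a 2D vector format, but the time dimension is then only implicit: in wide form it is encoded in the column names, in long form it sits in a `time` column next to duplicated geometries. In both cases the reader has to know the convention to rebuild the cube.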

We basically ignored vector data cubes and related processes until now and will need to add more related processes in 1.0, which will be a major work package!

We also need to update existing processes, which currently only support raster-cubes, and add vector-cube support to processes that currently only allow GeoJSON.
