© Chris Rackauckas. Last modified: July 15, 2023.

Built with Franklin.jl and the Julia programming language.

Due October 1st, 2020 at midnight EST.

Homework 1 is a chance to get some experience implementing discrete dynamical systems techniques in a way that is parallelized, and a time to understand the fundamental behavior of the bottleneck algorithms in scientific computing.

In lecture 4 we looked at the properties of discrete dynamical systems to see that running many systems for infinitely many steps would go to a steady state. This process is used as a numerical method known as fixed point iteration to solve for the steady state of systems $x_{n+1} = f(x_{n})$. Under a transformation (which we will do in this homework), it can be used to solve rootfinding problems $f(x) = 0$ to solve for $x$.

In this problem we will look into Newton's method. Newton's method is the dynamical system defined by the update process:

\[ x_{n+1} = x_n - \left(\frac{dg}{dx}(x_n)\right)^{-1} g(x_n) \]

For these problems, assume that $\frac{dg}{dx}$ is non-singular. We will prove a few properties to show why, in practice, Newton methods are preferred for quickly calculating the steady state.

Show that if $x^\ast$ is a steady state of the equation, then $g(x^\ast) = 0$.

Take a look at the Quasi-Newton approximation:

\[ x_{n+1} = x_n - \left(\frac{dg}{dx}(x_0)\right)^{-1} g(x_n) \]

for some fixed $x_0$. Derive the stability of the Quasi-Newton approximation in the form of a matrix whose eigenvalues need to be constrained. Use this to argue that if $x_0$ is sufficiently close to $x^\ast$ then the steady state is a stable (attracting) steady state.

Relaxed Quasi-Newton is the method:

\[ x_{n+1} = x_n - \alpha \left(\frac{dg}{dx}(x_0)\right)^{-1} g(x_n) \]

Argue that for some sufficiently small $\alpha$ that the Quasi-Newton iterations will be stable if the eigenvalues of $(\left(\frac{dg}{dx}(x_0)\right)^{-1} g(x_n))^\prime$ are all positive for every $x$.

(Technically, these assumptions can be greatly relaxed, but weird cases arise. When $x \in \mathbb{C}$, this holds except on some set of Lebesgue measure zero. Feel free to explore this.)

Fixed point iteration is the dynamical system

\[ x_{n+1} = g(x_n) \]

which converges to $g(x)=x$.

What is a small change to the dynamical system that could be done such that $g(x)=0$ is the steady state?

How can you change the $\left(\frac{dg}{dx}(x_0)\right)^{-1}$ term from the Quasi-Newton iteration to get a method equivalent to fixed point iteration? What does this imply about the difference in stability between Quasi-Newton and fixed point iteration if $\frac{dg}{dx}$ has large eigenvalues?

In this problem we will practice writing fast and type-generic Julia code by producing an algorithm that will compute the quantile of any probability distribution.

Many problems can be interpreted as a rootfinding problem. For example, let's take a look at a problem in statistics. Let $X$ be a random variable with a cumulative distribution function (CDF) of $cdf(x)$. Recall that the CDF is a monotonically increasing function in $[0,1]$ which is the total probability of $X < x$. The $y$th quantile of $X$ is the value $x$ at with $X$ has a y% chance of being less than $x$. Interpret the problem of computing an arbitrary quantile $y$ as a rootfinding problem, and use Newton's method to write an algorithm for computing $x$.

(Hint: Recall that $cdf^{\prime}(x) = pdf(x)$, the probability distribution function.)

Use the types from Distributions.jl to write a function my_quantile(y,d) which uses multiple dispatch to compute the $y$th quantile for any UnivariateDistribution d from Distributions.jl. Test your function on Gamma(5, 1), Normal(0, 1), and Beta(2, 4) against the Distributions.quantile function built into the library.

(Hint: Have a keyword argument for $x_0$, and let its default be the mean or median of the distribution.)

In this problem we will write code for efficient generation of the bifurcation diagram of the logistic equation.

The logistic equation is the dynamical system given by the update relation:

\[ x_{n+1} = rx_n (1-x_n) \]

where $r$ is some parameter. Write a function which iterates the equation from $x_0 = 0.25$ enough times to be sufficiently close to its long-term behavior (400 iterations) and samples 150 points from the steady state attractor (i.e. output iterations 401:550) as a function of $r$, and mutates some vector as a solution, i.e. calc_attractor!(out,f,p,num_attract=150;warmup=400).

Test your function with $r = 2.9$. Double check that your function computes the correct result by calculating the analytical steady state value.

The bifurcation plot shows how a steady state changes as a parameter changes. Compute the long-term result of the logistic equation at the values of r = 2.9:0.001:4, and plot the steady state values for each $r$ as an r x steady_attractor scatter plot. You should get a very bizarrely awesome picture, the bifurcation graph of the logistic equation.

(Hint: Generate a single matrix for the attractor values, and use calc_attractor! on views of columns for calculating the output, or inline the calc_attractor! computation directly onto the matrix, or even give calc_attractor! an input for what column to modify.)

Multithread your bifurcation graph generator by performing different steady state calculations on different threads. Does your timing improve? Why? Be careful and check to make sure you have more than 1 thread!

Multiprocess your bifurcation graph generator first by using pmap, and then by using @distributed. Does your timing improve? Why? Be careful to add processes before doing the distributed call.

(Note: You may need to change your implementation around to be allocating differently in order for it to be compatible with multiprocessing!)

Which method is the fastest? Why?

\ No newline at end of file +Due October 1st, 2020 at midnight EST.

Homework 1 is a chance to get some experience implementing discrete dynamical systems techniques in a way that is parallelized, and a time to understand the fundamental behavior of the bottleneck algorithms in scientific computing.

In lecture 4 we looked at the properties of discrete dynamical systems to see that running many systems for infinitely many steps would go to a steady state. This process is used as a numerical method known as fixed point iteration to solve for the steady state of systems $x_{n+1} = f(x_{n})$. Under a transformation (which we will do in this homework), it can be used to solve rootfinding problems $f(x) = 0$ to solve for $x$.

In this problem we will look into Newton's method. Newton's method is the dynamical system defined by the update process:

\[ x_{n+1} = x_n - \left(\frac{dg}{dx}(x_n)\right)^{-1} g(x_n) \]

For these problems, assume that $\frac{dg}{dx}$ is non-singular. We will prove a few properties to show why, in practice, Newton methods are preferred for quickly calculating the steady state.

Show that if $x^\ast$ is a steady state of the equation, then $g(x^\ast) = 0$.

Take a look at the Quasi-Newton approximation:

\[ x_{n+1} = x_n - \left(\frac{dg}{dx}(x_0)\right)^{-1} g(x_n) \]

for some fixed $x_0$. Derive the stability of the Quasi-Newton approximation in the form of a matrix whose eigenvalues need to be constrained. Use this to argue that if $x_0$ is sufficiently close to $x^\ast$ then the steady state is a stable (attracting) steady state.

Relaxed Quasi-Newton is the method:

\[ x_{n+1} = x_n - \alpha \left(\frac{dg}{dx}(x_0)\right)^{-1} g(x_n) \]

Argue that for some sufficiently small $\alpha$ that the Quasi-Newton iterations will be stable if the eigenvalues of $(\left(\frac{dg}{dx}(x_0)\right)^{-1} g(x_n))^\prime$ are all positive for every $x$.

(Technically, these assumptions can be greatly relaxed, but weird cases arise. When $x \in \mathbb{C}$, this holds except on some set of Lebesgue measure zero. Feel free to explore this.)

Fixed point iteration is the dynamical system

\[ x_{n+1} = g(x_n) \]

which converges to $g(x)=x$.

What is a small change to the dynamical system that could be done such that $g(x)=0$ is the steady state?

How can you change the $\left(\frac{dg}{dx}(x_0)\right)^{-1}$ term from the Quasi-Newton iteration to get a method equivalent to fixed point iteration? What does this imply about the difference in stability between Quasi-Newton and fixed point iteration if $\frac{dg}{dx}$ has large eigenvalues?

In this problem we will practice writing fast and type-generic Julia code by producing an algorithm that will compute the quantile of any probability distribution.

Many problems can be interpreted as a rootfinding problem. For example, let's take a look at a problem in statistics. Let $X$ be a random variable with a cumulative distribution function (CDF) of $cdf(x)$. Recall that the CDF is a monotonically increasing function in $[0,1]$ which is the total probability of $X < x$. The $y$th quantile of $X$ is the value $x$ at with $X$ has a y% chance of being less than $x$. Interpret the problem of computing an arbitrary quantile $y$ as a rootfinding problem, and use Newton's method to write an algorithm for computing $x$.

(Hint: Recall that $cdf^{\prime}(x) = pdf(x)$, the probability distribution function.)

Use the types from Distributions.jl to write a function my_quantile(y,d) which uses multiple dispatch to compute the $y$th quantile for any UnivariateDistribution d from Distributions.jl. Test your function on Gamma(5, 1), Normal(0, 1), and Beta(2, 4) against the Distributions.quantile function built into the library.

(Hint: Have a keyword argument for $x_0$, and let its default be the mean or median of the distribution.)

In this problem we will write code for efficient generation of the bifurcation diagram of the logistic equation.

The logistic equation is the dynamical system given by the update relation:

\[ x_{n+1} = rx_n (1-x_n) \]

where $r$ is some parameter. Write a function which iterates the equation from $x_0 = 0.25$ enough times to be sufficiently close to its long-term behavior (400 iterations) and samples 150 points from the steady state attractor (i.e. output iterations 401:550) as a function of $r$, and mutates some vector as a solution, i.e. calc_attractor!(out,f,p,num_attract=150;warmup=400).

Test your function with $r = 2.9$. Double check that your function computes the correct result by calculating the analytical steady state value.

The bifurcation plot shows how a steady state changes as a parameter changes. Compute the long-term result of the logistic equation at the values of r = 2.9:0.001:4, and plot the steady state values for each $r$ as an r x steady_attractor scatter plot. You should get a very bizarrely awesome picture, the bifurcation graph of the logistic equation.

(Hint: Generate a single matrix for the attractor values, and use calc_attractor! on views of columns for calculating the output, or inline the calc_attractor! computation directly onto the matrix, or even give calc_attractor! an input for what column to modify.)

Multithread your bifurcation graph generator by performing different steady state calculations on different threads. Does your timing improve? Why? Be careful and check to make sure you have more than 1 thread!

Multiprocess your bifurcation graph generator first by using pmap, and then by using @distributed. Does your timing improve? Why? Be careful to add processes before doing the distributed call.

(Note: You may need to change your implementation around to be allocating differently in order for it to be compatible with multiprocessing!)

Which method is the fastest? Why?

\ No newline at end of file diff --git a/_weave/homework02/hw2/index.html b/_weave/homework02/hw2/index.html index 5e421156..21512bc6 100644 --- a/_weave/homework02/hw2/index.html +++ b/_weave/homework02/hw2/index.html @@ -11,4 +11,4 @@to receive two cores on two nodes. Recreate the bandwidth vs message plots and the interpretation. Does the fact that the nodes are physically disconnected cause a substantial difference?

\ No newline at end of file +mpirun julia mycode.jlto receive two cores on two nodes. Recreate the bandwidth vs message plots and the interpretation. Does the fact that the nodes are physically disconnected cause a substantial difference?

\ No newline at end of file diff --git a/_weave/homework03/hw3/index.html b/_weave/homework03/hw3/index.html index bf37b16e..ec4e60de 100644 --- a/_weave/homework03/hw3/index.html +++ b/_weave/homework03/hw3/index.html @@ -1 +1 @@ -In this homework, we will write an implementation of neural ordinary differential equations from scratch. You may use the DifferentialEquations.jl ODE solver, but not the adjoint sensitivities functionality. Optionally, a second problem is to add GPU support to your implementation.

Due December 9th, 2020 at midnight.

Please email the results to 18337.mit.psets@gmail.com.

In this problem we will work through the development of a neural ODE.

Use the definition of the pullback as a vector-Jacobian product (vjp) to show that $B_f^x(1) = \left( \nabla f(x) \right)^{T}$ for a function $f: \mathbb{R}^n \rightarrow \mathbb{R}$.

(Hint: if you put 1 into the pullback, what kind of function is it? What does the Jacobian look like?)

Implement a simple $NN: \mathbb{R}^2 \rightarrow \mathbb{R}^2$ neural network

\[ NN(u;W_i,b_i) = W_2 tanh.(W_1 u + b_1) + b_2 \]

where $W_1$ is $50 \times 2$, $b_1$ is length 50, $W_2$ is $2 \times 50$, and $b_2$ is length 2. Implement the pullback of the neural network: $B_{NN}^{u,W_i,b_i}(y)$ to calculate the derivative of the neural network with respect to each of these inputs. Check for correctness by using ForwardDiff.jl to calculate the gradient.

The adjoint of an ODE can be described as the set of vector equations:

\[ \begin{align} u' &= f(u,p,t)\\ \end{align} \]

forward, and then

\[ \begin{align} \lambda' &= -\lambda^\ast \frac{\partial f}{\partial u}\\ \mu' &= -\lambda^\ast \frac{\partial f}{\partial p}\\ \end{align} \]

solved in reverse time from $T$ to $0$ for some cost function $C(p)$. For this problem, we will use the L2 loss function.

Note that $\mu(T) = 0$ and $\lambda(T) = \frac{\partial C}{\partial u(T)}$. This is written in the form where the only data point is at time $T$. If that is not the case, the reverse solve needs to add the jump $\frac{\partial C}{\partial u(t_i)}$ to $\lambda$ at each data point $u(t_i)$. Use this example for how to add these jumps to the equation.

Using this formulation of the adjoint, it holds that $\mu(0) = \frac{\partial C}{\partial p}$, and thus solving these ODEs in reverse gives the solution for the gradient as a part of the system at time zero.

Notice that $B_f^u(\lambda) = \lambda^\ast \frac{\partial f}{\partial u}$ and similarly for $\mu$. Implement an adjoint calculation for a neural ordinary differential equation where

\[ u' = NN(u) \]

from above. Solve the ODE forwards using OrdinaryDiffEq.jl's Tsit5() integrator, then use the interpolation from the forward pass for the u values of the backpass and solve.

(Note: you will want to double check this gradient by using something like ForwardDiff! Start with only measuring the datapoint at the end, then try multiple data points.)

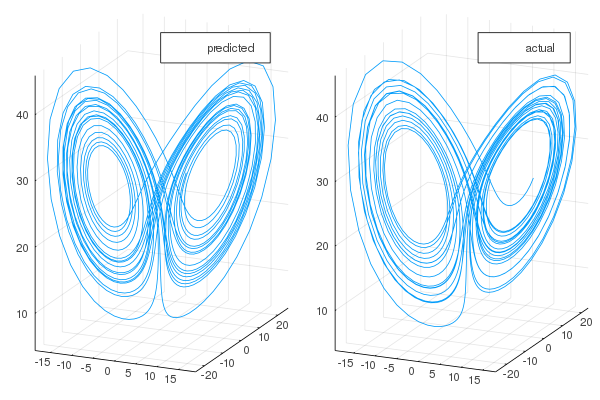

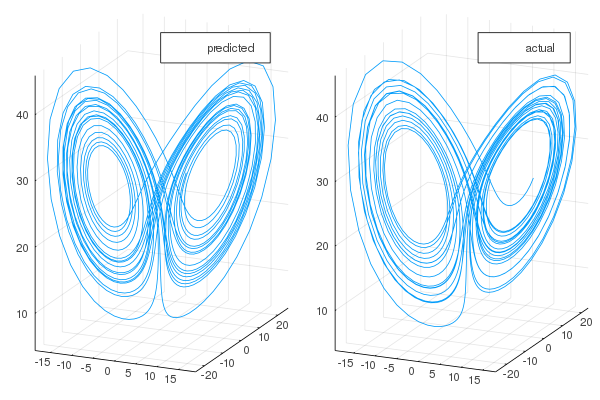

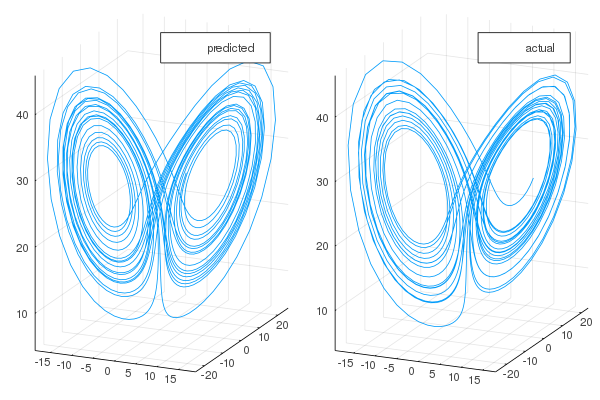

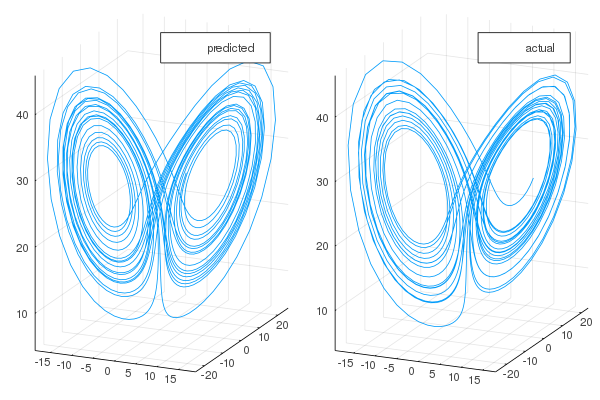

Generate data from the ODE $u' = Au$ where A = [-0.1 2.0; -2.0 -0.1] at t=0.0:0.1:1.0 (use saveat) with $u(0) = [2,0]$. Define the cost function C(θ) to be the Euclidean distance between the neural ODE's solution and the data. Optimize this cost function by using gradient descent where the gradient is your adjoint method's output.

(Note: calculate the cost and the gradient at the same time by using the forward pass to calculate the cost, and then use it in the adjoint for the interpolation. Note that you should not use saveat in the forward pass then, because otherwise the interpolation is linear. Instead, post-interpolate the data points.)

If you have access to a GPU, you may wish to try the following.

Change your neural network to be GPU-accelerated by using CuArrays.jl for the underlying array types.

Change the initial condition of the ODE solves to a CuArray to make your neural ODE GPU-accelerated.

\ No newline at end of file +In this homework, we will write an implementation of neural ordinary differential equations from scratch. You may use the DifferentialEquations.jl ODE solver, but not the adjoint sensitivities functionality. Optionally, a second problem is to add GPU support to your implementation.

Due December 9th, 2020 at midnight.

Please email the results to 18337.mit.psets@gmail.com.

In this problem we will work through the development of a neural ODE.

Use the definition of the pullback as a vector-Jacobian product (vjp) to show that $B_f^x(1) = \left( \nabla f(x) \right)^{T}$ for a function $f: \mathbb{R}^n \rightarrow \mathbb{R}$.

(Hint: if you put 1 into the pullback, what kind of function is it? What does the Jacobian look like?)

Implement a simple $NN: \mathbb{R}^2 \rightarrow \mathbb{R}^2$ neural network

\[ NN(u;W_i,b_i) = W_2 tanh.(W_1 u + b_1) + b_2 \]

where $W_1$ is $50 \times 2$, $b_1$ is length 50, $W_2$ is $2 \times 50$, and $b_2$ is length 2. Implement the pullback of the neural network: $B_{NN}^{u,W_i,b_i}(y)$ to calculate the derivative of the neural network with respect to each of these inputs. Check for correctness by using ForwardDiff.jl to calculate the gradient.

The adjoint of an ODE can be described as the set of vector equations:

\[ \begin{align} u' &= f(u,p,t)\\ \end{align} \]

forward, and then

\[ \begin{align} \lambda' &= -\lambda^\ast \frac{\partial f}{\partial u}\\ \mu' &= -\lambda^\ast \frac{\partial f}{\partial p}\\ \end{align} \]

solved in reverse time from $T$ to $0$ for some cost function $C(p)$. For this problem, we will use the L2 loss function.

Note that $\mu(T) = 0$ and $\lambda(T) = \frac{\partial C}{\partial u(T)}$. This is written in the form where the only data point is at time $T$. If that is not the case, the reverse solve needs to add the jump $\frac{\partial C}{\partial u(t_i)}$ to $\lambda$ at each data point $u(t_i)$. Use this example for how to add these jumps to the equation.

Using this formulation of the adjoint, it holds that $\mu(0) = \frac{\partial C}{\partial p}$, and thus solving these ODEs in reverse gives the solution for the gradient as a part of the system at time zero.

Notice that $B_f^u(\lambda) = \lambda^\ast \frac{\partial f}{\partial u}$ and similarly for $\mu$. Implement an adjoint calculation for a neural ordinary differential equation where

\[ u' = NN(u) \]

from above. Solve the ODE forwards using OrdinaryDiffEq.jl's Tsit5() integrator, then use the interpolation from the forward pass for the u values of the backpass and solve.

(Note: you will want to double check this gradient by using something like ForwardDiff! Start with only measuring the datapoint at the end, then try multiple data points.)

Generate data from the ODE $u' = Au$ where A = [-0.1 2.0; -2.0 -0.1] at t=0.0:0.1:1.0 (use saveat) with $u(0) = [2,0]$. Define the cost function C(θ) to be the Euclidean distance between the neural ODE's solution and the data. Optimize this cost function by using gradient descent where the gradient is your adjoint method's output.

(Note: calculate the cost and the gradient at the same time by using the forward pass to calculate the cost, and then use it in the adjoint for the interpolation. Note that you should not use saveat in the forward pass then, because otherwise the interpolation is linear. Instead, post-interpolate the data points.)

If you have access to a GPU, you may wish to try the following.

Change your neural network to be GPU-accelerated by using CuArrays.jl for the underlying array types.

Change the initial condition of the ODE solves to a CuArray to make your neural ODE GPU-accelerated.

\ No newline at end of file diff --git a/_weave/lecture02/jl_UdliHl/optimizing_16_1.png b/_weave/lecture02/jl_UdliHl/optimizing_16_1.png new file mode 100644 index 00000000..c214ca62 Binary files /dev/null and b/_weave/lecture02/jl_UdliHl/optimizing_16_1.png differ diff --git a/_weave/lecture02/jl_UdliHl/optimizing_17_1.png b/_weave/lecture02/jl_UdliHl/optimizing_17_1.png new file mode 100644 index 00000000..af3f7bcd Binary files /dev/null and b/_weave/lecture02/jl_UdliHl/optimizing_17_1.png differ diff --git a/_weave/lecture02/jl_h3cAOL/optimizing_16_1.png b/_weave/lecture02/jl_h3cAOL/optimizing_16_1.png deleted file mode 100644 index 6b4b8995..00000000 Binary files a/_weave/lecture02/jl_h3cAOL/optimizing_16_1.png and /dev/null differ diff --git a/_weave/lecture02/jl_h3cAOL/optimizing_17_1.png b/_weave/lecture02/jl_h3cAOL/optimizing_17_1.png deleted file mode 100644 index 08166f19..00000000 Binary files a/_weave/lecture02/jl_h3cAOL/optimizing_17_1.png and /dev/null differ diff --git a/_weave/lecture02/optimizing/index.html b/_weave/lecture02/optimizing/index.html index 7b178761..a95523e4 100644 --- a/_weave/lecture02/optimizing/index.html +++ b/_weave/lecture02/optimizing/index.html @@ -10,7 +10,7 @@-16.500 μs (0 allocations: 0 bytes) +21.600 μs (0 allocations: 0 bytes)

function inner_cols!(C,A,B) for j in 1:100, i in 1:100 @@ -19,7 +19,7 @@Optimizing Serial Code

Chris Rackauckas

Septe

end @btime inner_cols!(C,A,B)

-7.933 μs (0 allocations: 0 bytes) +9.500 μs (0 allocations: 0 bytes)

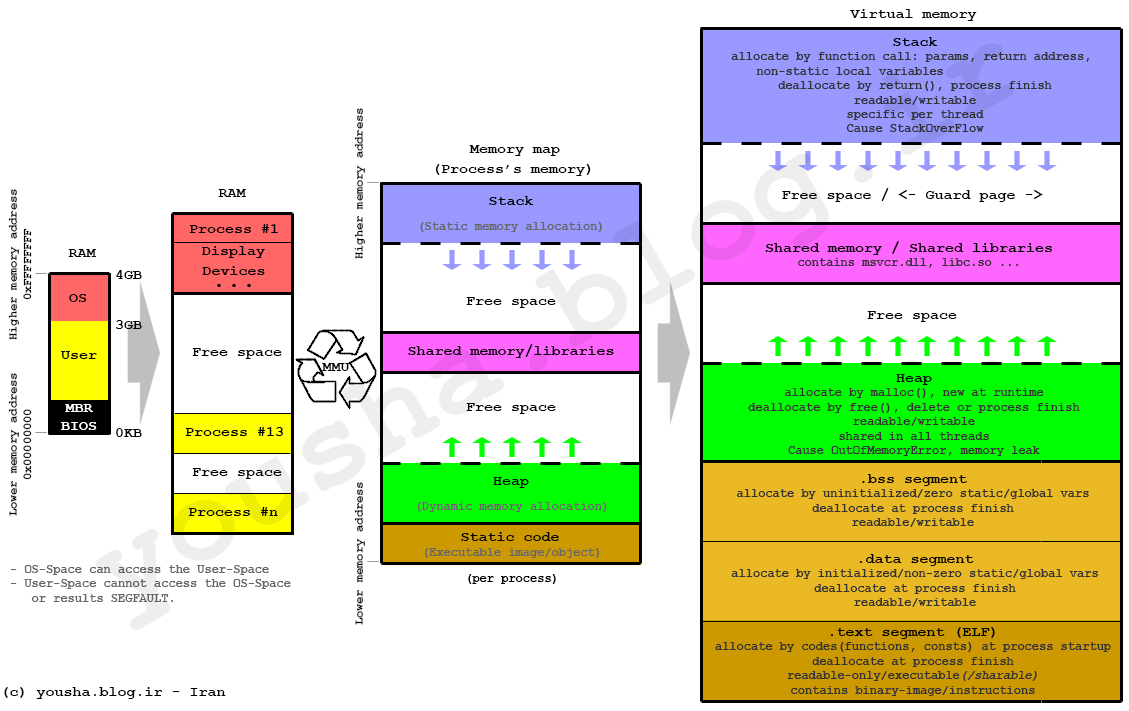

Locally, the stack is composed of a stack and a heap. The stack requires a static allocation: it is ordered. Because it's ordered, it is very clear where things are in the stack, and therefore accesses are very quick (think instantaneous). However, because this is static, it requires that the size of the variables is known at compile time (to determine all of the variable locations). Since that is not possible with all variables, there exists the heap. The heap is essentially a stack of pointers to objects in memory. When heap variables are needed, their values are pulled up the cache chain and accessed.

Heap allocations are costly because they involve this pointer indirection, so stack allocation should be done when sensible (it's not helpful for really large arrays, but for small values like scalars it's essential!)

function inner_alloc!(C,A,B) for j in 1:100, i in 1:100 @@ -29,7 +29,7 @@Optimizing Serial Code

Chris Rackauckas

Septe

end @btime inner_alloc!(C,A,B)

-314.598 μs (10000 allocations: 625.00 KiB) +363.501 μs (10000 allocations: 625.00 KiB)

function inner_noalloc!(C,A,B) for j in 1:100, i in 1:100 @@ -39,7 +39,7 @@Optimizing Serial Code

Chris Rackauckas

Septe

end @btime inner_noalloc!(C,A,B)

-7.533 μs (0 allocations: 0 bytes) +8.800 μs (0 allocations: 0 bytes)

Why does the array here get heap-allocated? It isn't able to prove/guarantee at compile-time that the array's size will always be a given value, and thus it allocates it to the heap. @btime tells us this allocation occurred and shows us the total heap memory that was taken. Meanwhile, the size of a Float64 number is known at compile-time (64-bits), and so this is stored onto the stack and given a specific location that the compiler will be able to directly address.

Note that one can use the StaticArrays.jl library to get statically-sized arrays and thus arrays which are stack-allocated:

using StaticArrays function static_inner_alloc!(C,A,B) @@ -50,7 +50,7 @@Optimizing Serial Code

Chris Rackauckas

Septe

end @btime static_inner_alloc!(C,A,B)

-8.166 μs (0 allocations: 0 bytes) +9.200 μs (0 allocations: 0 bytes)

Many times you do need to write into an array, so how can you write into an array without performing a heap allocation? The answer is mutation. Mutation is changing the values of an already existing array. In that case, no free memory has to be found to put the array (and no memory has to be freed by the garbage collector).

In Julia, functions which mutate the first value are conventionally noted by a !. See the difference between these two equivalent functions:

function inner_noalloc!(C,A,B) for j in 1:100, i in 1:100 @@ -60,7 +60,7 @@Optimizing Serial Code

Chris Rackauckas

Septe

end @btime inner_noalloc!(C,A,B)

-7.733 μs (0 allocations: 0 bytes) +9.600 μs (0 allocations: 0 bytes)

function inner_alloc(A,B) C = similar(A) @@ -71,7 +71,7 @@Optimizing Serial Code

Chris Rackauckas

Septe

end @btime inner_alloc(A,B)

-15.100 μs (2 allocations: 78.17 KiB) +16.200 μs (2 allocations: 78.17 KiB)

To use this algorithm effectively, the ! algorithm assumes that the caller already has allocated the output array to put as the output argument. If that is not true, then one would need to manually allocate. The goal of that interface is to give the caller control over the allocations to allow them to manually reduce the total number of heap allocations and thus increase the speed.

Wouldn't it be nice to not have to write the loop there? In many high level languages this is simply called vectorization. In Julia, we will call it array vectorization to distinguish it from the SIMD vectorization which is common in lower level languages like C, Fortran, and Julia.

In Julia, if you use . on an operator it will transform it to the broadcasted form. Broadcast is lazy: it will build up an entire .'d expression and then call broadcast! on composed expression. This is customizable and documented in detail. However, to a first approximation we can think of the broadcast mechanism as a mechanism for building fused expressions. For example, the Julia code:

A .+ B .+ C;

under the hood lowers to something like:

@@ -86,29 +86,29 @@Optimizing Serial Code

Chris Rackauckas

Septe end @btime unfused(A,B,C);

-31.800 μs (4 allocations: 156.34 KiB) +31.200 μs (4 allocations: 156.34 KiB)

fused(A,B,C) = A .+ B .+ C @btime fused(A,B,C);

-18.400 μs (2 allocations: 78.17 KiB) +18.600 μs (2 allocations: 78.17 KiB)

Note that we can also fuse the output by using .=. This is essentially the vectorized version of a ! function:

D = similar(A) fused!(D,A,B,C) = (D .= A .+ B .+ C) @btime fused!(D,A,B,C);

-10.800 μs (0 allocations: 0 bytes) +10.500 μs (0 allocations: 0 bytes)

Julia allows for broadcasting the call () operator as well. .() will call the function element-wise on all arguments, so sin.(A) will be the elementwise sine function. This will fuse Julia like the other operators.

In articles on MATLAB, Python, R, etc., this is where you will be told to vectorize your code. Notice from above that this isn't a performance difference between writing loops and using vectorized broadcasts. This is not abnormal! The reason why you are told to vectorize code in these other languages is because they have a high per-operation overhead (which will be discussed further down). This means that every call, like +, is costly in these languages. To get around this issue and make the language usable, someone wrote and compiled the loop for the C/Fortran function that does the broadcasted form (see numpy's Github repo). Thus A .+ B's MATLAB/Python/R equivalents are calling a single C function to generally avoid the cost of function calls and thus are faster.

But this is not an intrinsic property of vectorization. Vectorization isn't "fast" in these languages, it's just close to the correct speed. The reason vectorization is recommended is because looping is slow in these languages. Because looping isn't slow in Julia (or C, C++, Fortran, etc.), loops and vectorization generally have the same speed. So use the one that works best for your code without a care about performance.

(As a small side effect, these high level languages tend to allocate a lot of temporary variables since the individual C kernels are written for specific numbers of inputs and thus don't naturally fuse. Julia's broadcast mechanism is just generating and JIT compiling Julia functions on the fly, and thus it can accommodate the combinatorial explosion in the amount of choices just by only compiling the combinations that are necessary for a specific code)

It's important to note that slices in Julia produce copies instead of views. Thus for example:

A[50,50]

-0.6987883598884902 +0.1874936774122129

allocates a new output. This is for safety, since if it pointed to the same array then writing to it would change the original array. We can demonstrate this by asking for a view instead of a copy.

@show A[1] E = @view A[1:5,1:5] E[1] = 2.0 @show A[1]

-A[1] = 0.49711339210357286 +A[1] = 0.6237197114754515 A[1] = 2.0 2.0

However, this means that @view A[1:5,1:5] did not allocate an array (it does allocate a pointer if the escape analysis is unable to prove that it can be elided. This means that in small loops there will be no allocation, while if the view is returned from a function for example it will allocate the pointer, ~80 bytes, but not the memory of the array. This means that it is O(1) in cost but with a relatively small constant).

Heap allocations have to locate and prepare a space in RAM that is proportional to the amount of memory that is calculated, which means that the cost of a heap allocation for an array is O(n), with a large constant. As RAM begins to fill up, this cost dramatically increases. If you run out of RAM, your computer may begin to use swap, which is essentially RAM simulated on your hard drive. Generally when you hit swap your performance is so dead that you may think that your computation froze, but if you check your resource use you will notice that it's actually just filled the RAM and starting to use the swap.

But think of it as O(n) with a large constant factor. This means that for operations which only touch the data once, heap allocations can dominate the computational cost:

@@ -130,7 +130,7 @@Optimizing Serial Code

Chris Rackauckas

Septe plot(ns,alloc,label="=",xscale=:log10,yscale=:log10,legend=:bottomright, title="Micro-optimizations matter for BLAS1") plot!(ns,noalloc,label=".=") -

However, when the computation takes O(n^3), like in matrix multiplications, the high constant factor only comes into play when the matrices are sufficiently small:

+

However, when the computation takes O(n^3), like in matrix multiplications, the high constant factor only comes into play when the matrices are sufficiently small:

using LinearAlgebra, BenchmarkTools function alloc_timer(n) A = rand(n,n) @@ -149,7 +149,7 @@Optimizing Serial Code

Chris Rackauckas

Septe

plot(ns,alloc,label="*",xscale=:log10,yscale=:log10,legend=:bottomright, title="Micro-optimizations only matter for small matmuls") plot!(ns,noalloc,label="mul!") -

Though using a mutating form is never bad and always is a little bit better.

Avoid cache misses by reusing values

Iterate along columns

Avoid heap allocations in inner loops

Heap allocations occur when the size of things is not proven at compile-time

Use fused broadcasts (with mutated outputs) to avoid heap allocations

Array vectorization confers no special benefit in Julia because Julia loops are as fast as C or Fortran

Use views instead of slices when applicable

Avoiding heap allocations is most necessary for O(n) algorithms or algorithms with small arrays

Use StaticArrays.jl to avoid heap allocations of small arrays in inner loops

Many people think Julia is fast because it is JIT compiled. That is simply not true (we've already shown examples where Julia code isn't fast, but it's always JIT compiled!). Instead, the reason why Julia is fast is because the combination of two ideas:

Type inference

Type specialization in functions

These two features naturally give rise to Julia's core design feature: multiple dispatch. Let's break down these pieces.

At the core level of the computer, everything has a type. Some languages are more explicit about said types, while others try to hide the types from the user. A type tells the compiler how to to store and interpret the memory of a value. For example, if the compiled code knows that the value in the register is supposed to be interpreted as a 64-bit floating point number, then it understands that slab of memory like:

Importantly, it will know what to do for function calls. If the code tells it to add two floating point numbers, it will send them as inputs to the Floating Point Unit (FPU) which will give the output.

If the types are not known, then... ? So one cannot actually compute until the types are known, since otherwise it's impossible to interpret the memory. In languages like C, the programmer has to declare the types of variables in the program:

void add(double *a, double *b, double *c, size_t n){

+Though using a mutating form is never bad and always is a little bit better.

Avoid cache misses by reusing values

Iterate along columns

Avoid heap allocations in inner loops

Heap allocations occur when the size of things is not proven at compile-time

Use fused broadcasts (with mutated outputs) to avoid heap allocations

Array vectorization confers no special benefit in Julia because Julia loops are as fast as C or Fortran

Use views instead of slices when applicable

Avoiding heap allocations is most necessary for O(n) algorithms or algorithms with small arrays

Use StaticArrays.jl to avoid heap allocations of small arrays in inner loops

Many people think Julia is fast because it is JIT compiled. That is simply not true (we've already shown examples where Julia code isn't fast, but it's always JIT compiled!). Instead, the reason why Julia is fast is because the combination of two ideas:

Type inference

Type specialization in functions

These two features naturally give rise to Julia's core design feature: multiple dispatch. Let's break down these pieces.

At the core level of the computer, everything has a type. Some languages are more explicit about said types, while others try to hide the types from the user. A type tells the compiler how to to store and interpret the memory of a value. For example, if the compiled code knows that the value in the register is supposed to be interpreted as a 64-bit floating point number, then it understands that slab of memory like:

Importantly, it will know what to do for function calls. If the code tells it to add two floating point numbers, it will send them as inputs to the Floating Point Unit (FPU) which will give the output.

If the types are not known, then... ? So one cannot actually compute until the types are known, since otherwise it's impossible to interpret the memory. In languages like C, the programmer has to declare the types of variables in the program:

void add(double *a, double *b, double *c, size_t n){

size_t i;

for(i = 0; i < n; ++i) {

c[i] = a[i] + b[i];

@@ -172,7 +172,7 @@ Optimizing Serial Code

Chris Rackauckas

Septe

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `f`

-define i64 @julia_f_2897(i64 signext %0, i64 signext %1) #0 {

+define i64 @julia_f_2893(i64 signext %0, i64 signext %1) #0 {

top:

; ┌ @ int.jl:87 within `+`

%2 = add i64 %1, %0

@@ -184,7 +184,7 @@ Optimizing Serial Code

Chris Rackauckas

Septe

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `f`

-define double @julia_f_2899(double %0, double %1) #0 {

+define double @julia_f_2895(double %0, double %1) #0 {

top:

; ┌ @ float.jl:408 within `+`

%2 = fadd double %0, %1

@@ -204,7 +204,7 @@ Optimizing Serial Code

Chris Rackauckas

Septe

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `g`

-define i64 @julia_g_2901(i64 signext %0, i64 signext %1) #0 {

+define i64 @julia_g_2897(i64 signext %0, i64 signext %1) #0 {

top:

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

6 within `g`

@@ -249,7 +249,7 @@ Optimizing Serial Code

Chris Rackauckas

Septe

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `f`

-define double @julia_f_3427(double %0, i64 signext %1) #0 {

+define double @julia_f_3423(double %0, i64 signext %1) #0 {

top:

; ┌ @ promotion.jl:410 within `+`

; │┌ @ promotion.jl:381 within `promote`

@@ -290,7 +290,7 @@ Optimizing Serial Code

Chris Rackauckas

Septe

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `g`

-define double @julia_g_3430(double %0, i64 signext %1) #0 {

+define double @julia_g_3426(double %0, i64 signext %1) #0 {

top:

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `g`

@@ -360,7 +360,7 @@ Optimizing Serial Code

Chris Rackauckas

Septe

0.4

The + function in Julia is just defined as +(a,b), and we can actually point to that code in the Julia distribution:

@which +(2.0,5) -+(x::Number, y::Number) in Base at promotion.jl:410

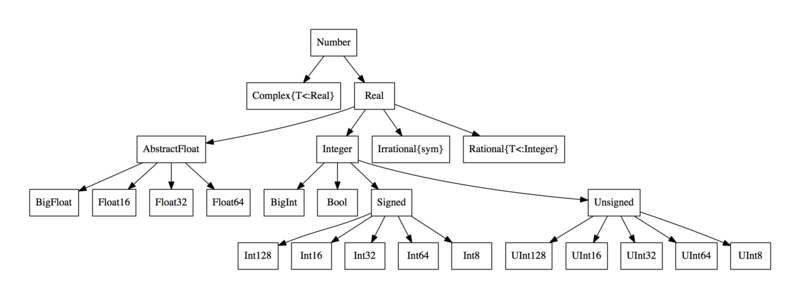

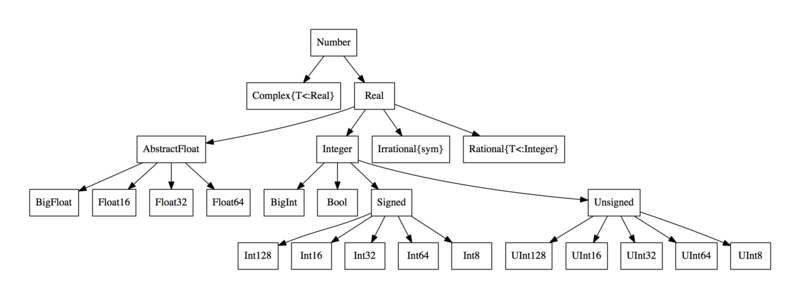

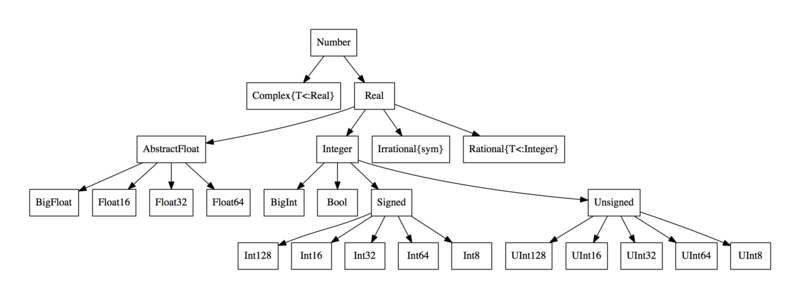

To control at a higher level, Julia uses abstract types. For example, Float64 <: AbstractFloat, meaning Float64s are a subtype of AbstractFloat. We also have that Int <: Integer, while both AbstractFloat <: Number and Integer <: Number.

Julia allows the user to define dispatches at a higher level, and the version that is called is the most strict version that is correct. For example, right now with ff we will get a MethodError if we call it between a Int and a Float64 because no such method exists:

++(x::Number, y::Number) in Base at promotion.jl:410

To control at a higher level, Julia uses abstract types. For example, Float64 <: AbstractFloat, meaning Float64s are a subtype of AbstractFloat. We also have that Int <: Integer, while both AbstractFloat <: Number and Integer <: Number.

Julia allows the user to define dispatches at a higher level, and the version that is called is the most strict version that is correct. For example, right now with ff we will get a MethodError if we call it between a Int and a Float64 because no such method exists:

ff(2.0,5)

ERROR: MethodError: no method matching ff(::Float64, ::Int64) @@ -381,7 +381,7 @@Optimizing Serial Code

Chris Rackauckas

Septe

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `ff`

-define double @julia_ff_3551(double %0, i64 signext %1) #0 {

+define double @julia_ff_3547(double %0, i64 signext %1) #0 {

top:

; ┌ @ promotion.jl:410 within `+`

; │┌ @ promotion.jl:381 within `promote`

@@ -537,7 +537,7 @@ Optimizing Serial Code

Chris Rackauckas

Septe

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `g`

-define void @julia_g_3690([2 x double]* noalias nocapture noundef nonnull s

+define void @julia_g_3686([2 x double]* noalias nocapture noundef nonnull s

ret([2 x double]) align 8 dereferenceable(16) %0, [2 x double]* nocapture n

oundef nonnull readonly align 8 dereferenceable(16) %1, [2 x double]* nocap

ture noundef nonnull readonly align 8 dereferenceable(16) %2) #0 {

@@ -653,7 +653,7 @@ Optimizing Serial Code

Chris Rackauckas

Septe

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `g`

-define [2 x float] @julia_g_3702([2 x float]* nocapture noundef nonnull rea

+define [2 x float] @julia_g_3698([2 x float]* nocapture noundef nonnull rea

donly align 4 dereferenceable(8) %0, [2 x float]* nocapture noundef nonnull

readonly align 4 dereferenceable(8) %1) #0 {

top:

@@ -754,7 +754,7 @@ Optimizing Serial Code

Chris Rackauckas

Septe

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `g`

-define void @julia_g_3721([2 x {}*]* noalias nocapture noundef nonnull sret

+define void @julia_g_3717([2 x {}*]* noalias nocapture noundef nonnull sret

([2 x {}*]) align 8 dereferenceable(16) %0, [2 x {}*]* nocapture noundef no

nnull readonly align 8 dereferenceable(16) %1, [2 x {}*]* nocapture noundef

nonnull readonly align 8 dereferenceable(16) %2) #0 {

@@ -788,22 +788,22 @@ Optimizing Serial Code

Chris Rackauckas

Septe

store {}** %13, {}*** %12, align 8

%14 = bitcast {}*** %pgcstack to {}***

store {}** %gcframe2.sub, {}*** %14, align 8

- call void @"j_+_3723"([2 x {}*]* noalias nocapture noundef nonnull sret(

-[2 x {}*]) %9, [2 x {}*]* nocapture nonnull readonly %1, i64 signext 4) #0

+ call void @"j_+_3719"([2 x {}*]* noalias nocapture noundef nonnull sret(

+[2 x {}*]) %5, [2 x {}*]* nocapture nonnull readonly %1, i64 signext 4) #0

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

6 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

- call void @"j_+_3724"([2 x {}*]* noalias nocapture noundef nonnull sret(

-[2 x {}*]) %5, i64 signext 2, [2 x {}*]* nocapture readonly %9) #0

+ call void @"j_+_3720"([2 x {}*]* noalias nocapture noundef nonnull sret(

+[2 x {}*]) %9, i64 signext 2, [2 x {}*]* nocapture readonly %5) #0

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

7 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

- call void @"j_+_3725"([2 x {}*]* noalias nocapture noundef nonnull sret(

-[2 x {}*]) %7, [2 x {}*]* nocapture readonly %5, [2 x {}*]* nocapture nonnu

+ call void @"j_+_3721"([2 x {}*]* noalias nocapture noundef nonnull sret(

+[2 x {}*]) %7, [2 x {}*]* nocapture readonly %9, [2 x {}*]* nocapture nonnu

ll readonly %2) #0

; └

%15 = bitcast [2 x {}*]* %0 to i8*

@@ -836,28 +836,28 @@ Optimizing Serial Code

Chris Rackauckas

Septe

b = MyComplex(2.0,1.0)

@btime g(a,b)

-22.189 ns (1 allocation: 32 bytes) +29.548 ns (1 allocation: 32 bytes) MyComplex(9.0, 2.0)

a = MyParameterizedComplex(1.0,1.0) b = MyParameterizedComplex(2.0,1.0) @btime g(a,b)

-22.088 ns (1 allocation: 32 bytes)

+26.835 ns (1 allocation: 32 bytes)

MyParameterizedComplex{Float64}(9.0, 2.0)

a = MySlowComplex(1.0,1.0) b = MySlowComplex(2.0,1.0) @btime g(a,b)

-130.643 ns (5 allocations: 96 bytes) +141.829 ns (5 allocations: 96 bytes) MySlowComplex(9.0, 2.0)

a = MySlowComplex2(1.0,1.0) b = MySlowComplex2(2.0,1.0) @btime g(a,b)

-871.875 ns (14 allocations: 288 bytes) +931.034 ns (14 allocations: 288 bytes) MySlowComplex2(9.0, 2.0)

Note that, because of these type specialization, value types, etc. properties, the number types, even ones such as Int, Float64, and Complex, are all themselves implemented in pure Julia! Thus even basic pieces can be implemented in Julia with full performance, given one uses the features correctly.

Note that a type which is mutable struct will not be isbits. This means that mutable structs will be a pointer to a heap allocated object, unless it's shortlived and the compiler can erase its construction. Also, note that isbits compiles down to bit operations from pure Julia, which means that these types can directly compile to GPU kernels through CUDAnative without modification.

Since functions automatically specialize on their input types in Julia, we can use this to our advantage in order to make an inner loop fully inferred. For example, take the code from above but with a loop:

function r(x) @@ -872,7 +872,7 @@Optimizing Serial Code

Chris Rackauckas

Septe

end @btime r(x)

-6.140 μs (300 allocations: 4.69 KiB) +6.725 μs (300 allocations: 4.69 KiB) 604.0

In here, the loop variables are not inferred and thus this is really slow. However, we can force a function call in the middle to end up with specialization and in the inner loop be stable:

s(x) = _s(x[1],x[2]) @@ -888,7 +888,7 @@Optimizing Serial Code

Chris Rackauckas

Septe

end @btime s(x)

-309.829 ns (1 allocation: 16 bytes) +332.200 ns (1 allocation: 16 bytes) 604.0

Notice that this algorithm still doesn't infer:

@code_warntype s(x) @@ -920,7 +920,7 @@Optimizing Serial Code

Chris Rackauckas

Septe

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `fff`

-define i64 @julia_fff_3875(i64 signext %0) #0 {

+define i64 @julia_fff_3871(i64 signext %0) #0 {

top:

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

8 within `fff`

@@ -934,7 +934,7 @@ Optimizing Serial Code

Chris Rackauckas

Septe

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `fff`

-define double @julia_fff_3877(double %0) #0 {

+define double @julia_fff_3873(double %0) #0 {

top:

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

8 within `fff`

@@ -949,7 +949,7 @@ Optimizing Serial Code

Chris Rackauckas

Septe

C[i,j] = A[i,j] + B[i,j]

end

-804.197 μs (30000 allocations: 468.75 KiB) +1.011 ms (30000 allocations: 468.75 KiB)

This is very slow because the types of A, B, and C cannot be inferred. Why can't they be inferred? Well, at any time in the dynamic REPL scope I can do something like C = "haha now a string!", and thus it cannot specialize on the types currently existing in the REPL (since asynchronous changes could also occur), and therefore it defaults back to doing a type check at every single function which slows it down. Moral of the story, Julia functions are fast but its global scope is too dynamic to be optimized.

Julia is not fast because of its JIT, it's fast because of function specialization and type inference

Type stable functions allow inference to fully occur

Multiple dispatch works within the function specialization mechanism to create overhead-free compile time controls

Julia will specialize the generic functions

Making sure values are concretely typed in inner loops is essential for performance

Now let's dig even a little deeper. Everything the processor does has a cost. A great chart to keep in mind is this classic one. A few things should immediately jump out to you:

Simple arithmetic, like floating point additions, are super cheap. ~1 clock cycle, or a few nanoseconds.

Processors do branch prediction on if statements. If the code goes down the predicted route, the if statement costs ~1-2 clock cycles. If it goes down the wrong route, then it will take ~10-20 clock cycles. This means that predictable branches, like ones with clear patterns or usually the same output, are much cheaper (almost free) than unpredictable branches.

Function calls are expensive: 15-60 clock cycles!

RAM reads are very expensive, with lower caches less expensive.

Let's check the LLVM IR on one of our earlier loops:

function inner_noalloc!(C,A,B) for j in 1:100, i in 1:100 @@ -961,7 +961,7 @@Optimizing Serial Code

Chris Rackauckas

Septe

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `inner_noalloc!`

-define nonnull {}* @"japi1_inner_noalloc!_3886"({}* %0, {}** noalias nocapt

+define nonnull {}* @"japi1_inner_noalloc!_3882"({}* %0, {}** noalias nocapt

ure noundef readonly %1, i32 %2) #0 {

top:

%3 = alloca {}**, align 8

@@ -1104,7 +1104,7 @@ Optimizing Serial Code

Chris Rackauckas

Septe

br i1 %.not18, label %L36, label %L2

L36: ; preds = %L25

- ret {}* inttoptr (i64 139639611547656 to {}*)

+ ret {}* inttoptr (i64 139979581812744 to {}*)

oob: ; preds = %L5.us.us.postl

oop, %L2.split.us.L2.split.us.split_crit_edge, %L2

@@ -1229,17 +1229,17 @@ Optimizing Serial Code

Chris Rackauckas

Septe

end

@btime inner_noalloc!(C,A,B)

-7.600 μs (0 allocations: 0 bytes) +8.500 μs (0 allocations: 0 bytes)

@btime inner_noalloc_ib!(C,A,B)

-5.016 μs (0 allocations: 0 bytes) +5.450 μs (0 allocations: 0 bytes)

Now let's inspect the LLVM IR again:

@code_llvm inner_noalloc_ib!(C,A,B)

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `inner_noalloc_ib!`

-define nonnull {}* @"japi1_inner_noalloc_ib!_3922"({}* %0, {}** noalias noc

+define nonnull {}* @"japi1_inner_noalloc_ib!_3918"({}* %0, {}** noalias noc

apture noundef readonly %1, i32 %2) #0 {

top:

%3 = alloca {}**, align 8

@@ -1374,7 +1374,7 @@ Optimizing Serial Code

Chris Rackauckas

Septe

br i1 %.not.not10, label %L36, label %L2

L36: ; preds = %L25

- ret {}* inttoptr (i64 139639611547656 to {}*)

+ ret {}* inttoptr (i64 139979581812744 to {}*)

}

If you look closely, you will see things like:

%wide.load24 = load <4 x double>, <4 x double> addrspac(13)* %46, align 8

; └

@@ -1383,7 +1383,7 @@ Optimizing Serial Code

Chris Rackauckas

Septe

@code_llvm fma(2.0,5.0,3.0)

; @ floatfuncs.jl:426 within `fma`

-define double @julia_fma_3923(double %0, double %1, double %2) #0 {

+define double @julia_fma_3919(double %0, double %1, double %2) #0 {

common.ret:

; ┌ @ floatfuncs.jl:421 within `fma_llvm`

%3 = call double @llvm.fma.f64(double %0, double %1, double %2)

@@ -1430,7 +1430,7 @@ Inlining

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

4 within `qinline`

-define double @julia_qinline_3926(double %0, double %1) #0 {

+define double @julia_qinline_3922(double %0, double %1) #0 {

top:

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

7 within `qinline`

@@ -1467,17 +1467,17 @@ Inlining

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

11 within `qnoinline`

-define double @julia_qnoinline_3928(double %0, double %1) #0 {

+define double @julia_qnoinline_3924(double %0, double %1) #0 {

top:

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

14 within `qnoinline`

- %2 = call double @j_fnoinline_3930(double %0, i64 signext 4) #0

+ %2 = call double @j_fnoinline_3926(double %0, i64 signext 4) #0

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

15 within `qnoinline`

- %3 = call double @j_fnoinline_3931(i64 signext 2, double %2) #0

+ %3 = call double @j_fnoinline_3927(i64 signext 2, double %2) #0

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

16 within `qnoinline`

- %4 = call double @j_fnoinline_3932(double %3, double %1) #0

+ %4 = call double @j_fnoinline_3928(double %3, double %1) #0

ret double %4

}

@@ -1496,7 +1496,7 @@ Inlining

-22.390 ns (1 allocation: 16 bytes)

+27.839 ns (1 allocation: 16 bytes)

9.0

@@ -1508,7 +1508,7 @@ Inlining

-26.004 ns (1 allocation: 16 bytes)

+31.690 ns (1 allocation: 16 bytes)

9.0

@@ -1536,7 +1536,7 @@ Note on Benchmarking

-1.699 ns (0 allocations: 0 bytes)

+1.900 ns (0 allocations: 0 bytes)

9.0

@@ -1553,7 +1553,7 @@ Note on Benchmarking

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `cheat`

-define double @julia_cheat_3960() #0 {

+define double @julia_cheat_3956() #0 {

top:

ret double 9.000000e+00

}

@@ -1578,6 +1578,6 @@ Discussion Questions

\ No newline at end of file

diff --git a/_weave/lecture03/jl_7PHY3B/sciml_35_1.png b/_weave/lecture03/jl_7PHY3B/sciml_35_1.png

new file mode 100644

index 00000000..6f6be881

Binary files /dev/null and b/_weave/lecture03/jl_7PHY3B/sciml_35_1.png differ

diff --git a/_weave/lecture03/jl_A7oobX/sciml_36_1.png b/_weave/lecture03/jl_7PHY3B/sciml_36_1.png

similarity index 100%

rename from _weave/lecture03/jl_A7oobX/sciml_36_1.png

rename to _weave/lecture03/jl_7PHY3B/sciml_36_1.png

diff --git a/_weave/lecture03/jl_A7oobX/sciml_37_1.png b/_weave/lecture03/jl_7PHY3B/sciml_37_1.png

similarity index 100%

rename from _weave/lecture03/jl_A7oobX/sciml_37_1.png

rename to _weave/lecture03/jl_7PHY3B/sciml_37_1.png

diff --git a/_weave/lecture03/jl_7PHY3B/sciml_41_1.png b/_weave/lecture03/jl_7PHY3B/sciml_41_1.png

new file mode 100644

index 00000000..d6df7e6b

Binary files /dev/null and b/_weave/lecture03/jl_7PHY3B/sciml_41_1.png differ

diff --git a/_weave/lecture03/jl_A7oobX/sciml_42_1.png b/_weave/lecture03/jl_7PHY3B/sciml_42_1.png

similarity index 100%

rename from _weave/lecture03/jl_A7oobX/sciml_42_1.png

rename to _weave/lecture03/jl_7PHY3B/sciml_42_1.png

diff --git a/_weave/lecture03/jl_7PHY3B/sciml_45_1.png b/_weave/lecture03/jl_7PHY3B/sciml_45_1.png

new file mode 100644

index 00000000..289c9827

Binary files /dev/null and b/_weave/lecture03/jl_7PHY3B/sciml_45_1.png differ

diff --git a/_weave/lecture03/jl_A7oobX/sciml_35_1.png b/_weave/lecture03/jl_A7oobX/sciml_35_1.png

deleted file mode 100644

index b73d3e2b..00000000

Binary files a/_weave/lecture03/jl_A7oobX/sciml_35_1.png and /dev/null differ

diff --git a/_weave/lecture03/jl_A7oobX/sciml_41_1.png b/_weave/lecture03/jl_A7oobX/sciml_41_1.png

deleted file mode 100644

index 7c82fa34..00000000

Binary files a/_weave/lecture03/jl_A7oobX/sciml_41_1.png and /dev/null differ

diff --git a/_weave/lecture03/jl_A7oobX/sciml_45_1.png b/_weave/lecture03/jl_A7oobX/sciml_45_1.png

deleted file mode 100644

index a6af4682..00000000

Binary files a/_weave/lecture03/jl_A7oobX/sciml_45_1.png and /dev/null differ

diff --git a/_weave/lecture03/sciml/index.html b/_weave/lecture03/sciml/index.html

index 97bef98a..af00466e 100644

--- a/_weave/lecture03/sciml/index.html

+++ b/_weave/lecture03/sciml/index.html

@@ -19,11 +19,11 @@ Introduction to Scientific Machine Learning through Physics-Inf

simpleNN(rand(10))

5-element Vector{Float64}:

- -2.4350849734604516

- 4.348579464751774

- -0.430223629075539

- 1.7699710631897965

- -8.170048057983601

+ -1.3383331584519713

+ -2.39034673226155

+ 5.476769960460295

+ -4.383289115950564

+ -8.639151272106952

This is our direct definition of a neural network. Notice that we choose to use tanh as our activation function between the layers.

Defining Neural Networks with Flux.jl

One of the main deep learning libraries in Julia is Flux.jl. Flux is an interesting library for scientific machine learning because it is built on top of language-wide automatic differentiation libraries, giving rise to a programming paradigm known as differentiable programming, which means that one can write a program in a manner that it has easily accessible fast derivatives. However, due to being built on a differentiable programming base, the underlying functionality is simply standard Julia code,

To learn how to use the library, consult the documentation. A Google search will bring up the Flux.jl Github repository. From there, the blue link on the README brings you to the package documentation. This is common through Julia so it's a good habit to learn!

In the documentation you will find that the way a neural network is defined is through a Chain of layers. A Dense layer is the kind we defined above, which is given by an input size, an output size, and an activation function. For example, the following recreates the neural network that we had above:

using Flux

NN2 = Chain(Dense(10 => 32,tanh),

@@ -32,11 +32,11 @@ Introduction to Scientific Machine Learning through Physics-Inf

NN2(rand(10))

5-element Vector{Float64}:

- -0.29078475578534135

- -0.36515252241118434

- 0.18666332556964638

- 0.27894106921878215

- -0.07838097075616664

+ -0.367383275136521

+ 0.09404428112599642

+ 0.24593293916044112

+ -0.19206701177467855

+ -0.1616706778424914

Notice that Flux.jl as a library is written in pure Julia, which means that every piece of this syntax is just sugar over some Julia code that we can specialize ourselves (this is the advantage of having a language fast enough for the implementation of the library and the use of the library!)

For example, the activation function is just a scalar Julia function. If we wanted to replace it by something like the quadratic function, we can just use an anonymous function to define the scalar function we would like to use:

NN3 = Chain(Dense(10 => 32,x->x^2),

Dense(32 => 32,x->max(0,x)),

@@ -44,11 +44,11 @@ Introduction to Scientific Machine Learning through Physics-Inf

NN3(rand(10))

5-element Vector{Float64}:

- -0.09374245725516782

- 0.36412359730517746

- -0.04188060346619738

- -0.0063866127237920105

- -0.07180727833605427

+ -0.0635693658681912

+ 0.10343361412920299

+ 0.23396297818125808

+ -0.0655890950970476

+ 0.05933084866332009

The second activation function there is what's known as a relu. A relu can be good to use because it's an exceptionally fast operation and satisfies a form of the universal approximation theorem (UAT). However, a downside is that its derivative is not continuous, which could impact the numerical properties of some algorithms, and thus it's widely used throughout standard machine learning but we'll see reasons why it may be disadvantageous in some cases in scientific machine learning.

Digging into the Construction of a Neural Network Library

Again, as mentioned before, this neural network NN2 is simply a function:

simpleNN(x) = W[3]*tanh.(W[2]*tanh.(W[1]*x + b[1]) + b[2]) + b[3]

@@ -96,26 +96,26 @@ Introduction to Scientific Machine Learning through Physics-Inf

denselayer_f(rand(32))

32-element Vector{Float64}:

- 0.6502745532344867

- -0.2764078547672906

- -0.33756397356649603

- 0.2905378129827325

- 0.4829203037778629

- 0.49259637695301317

- -0.7244575524152954

- 0.6201698112871467

- 0.43852802909767524

- -0.29660901905762604

+ 0.5742544502155338

+ -0.33380176214476615

+ 0.542810141740359

+ 0.14469442202480834

+ -0.29914058859543896

+ 0.6716839940944329

+ 0.2881809336509002

+ 0.5692583753772852

+ -0.5698291775734202

+ -0.6771694953190442

⋮

- -0.2999804882299587

- 0.6165336309867477

- 0.29528760817159105

- -0.4957092213910054

- 0.7795838428322517

- -0.4247540893917004

- -0.42115911678801005

- -0.5614876585119257

- -0.48468637337209475

+ 0.06555175730264437

+ -0.7822997657577385

+ -0.2509639764291457

+ -0.14439865793581114

+ -0.057541402830386

+ 0.5126940597261566

+ -0.6583375064922741

+ 0.19901747735535336

+ -0.11878186145036329

So okay, Dense objects are just functions that have weight and bias matrices inside of them. Now what does Chain do?

@which Chain(1,2,3)

Chain(xs...) in Flux at /home/runner/.julia/packages/Flux/ZdbJr/src/layers/basic.jl:39 Again, for our explanations here we will look at the slightly simpler code From and earlier version of the Flux package:

@@ -169,57 +169,53 @@ Introduction to Scientific Machine Learning through Physics-Inf

loss() = sum(abs2,sum(abs2,NN(rand(10)).-1) for i in 1:100)

loss()

-4550.070342185175

+4762.879890603975

This loss function takes 100 random points in $[0,1]^{10}$ and then computes the output of the neural network minus 1 on each of the values, and sums up the squared values (abs2). Why the squared values? This means that every computed loss value is positive, and so we know that by decreasing the loss this means that, on average our neural network outputs are closer to 1. What are the weights? Since we're using the Flux callable struct style from above, the weights are those inside of the NN chain object, which we can inspect:

NN[1].weight # The W matrix of the first layer

32×10 Matrix{Float32}:

- 0.206907 -0.293996 0.246735 … -0.316413 -0.344967 0.140159

- -0.234722 0.0792494 -0.255654 0.304856 0.301727 -0.15234

- -0.131264 -0.300535 0.34379 0.137062 -0.355142 0.292703

- -0.165884 -0.184028 -0.244061 -0.0888335 -0.0501927 -0.364835

- 0.273653 -0.27581 -0.165154 0.107254 -0.0985882 -0.131832

- -0.0837413 0.0814385 0.193289 … 0.114349 -0.0310933 0.343392

- -0.108227 -0.110772 0.155364 0.177525 0.160025 -0.017761

-1

- 0.131718 0.00724105 -0.223872 -0.00875761 0.112146 0.245469

- -0.173363 0.105232 -0.331788 0.224498 0.0817328 0.163695

- -0.129662 0.0193645 0.225084 -0.131568 0.124624 0.106667

- ⋮ ⋱

- 0.366625 0.215874 -0.284587 0.257149 0.181714 -0.244675

- 0.134637 -0.280037 0.24618 -0.276576 0.0496992 0.026246

-6

- 0.0804886 -0.0138646 0.056448 … -0.336282 -0.244829 -0.33495

- -0.0437822 -0.260398 -0.190927 0.287319 -0.192932 0.053829

- -0.0672412 0.283508 0.192685 -0.105615 -0.115523 0.037439

-8

- 0.0563317 -0.317537 0.356511 0.136938 0.349309 -0.187046

- 0.266178 0.125742 0.0387179 -0.322464 0.10805 0.268939

- -0.14143 0.315573 0.308718 … 0.357034 -0.30481 0.063483

-7

- -0.293272 0.181026 -0.101116 -0.135126 -0.249626 0.022162

-9

+ -0.042315 -0.152674 0.248828 -0.00480644 … -0.165152 -0.0683321

+ 0.0196488 -0.355363 -0.0511883 -0.275463 -0.345056 0.247659

+ 0.0696147 0.198262 -0.0505652 0.208592 0.349459 0.160529

+ 0.229841 0.181453 -0.206514 -0.165194 -0.262046 -0.123437

+ -0.298059 -0.320777 0.161661 -0.0647406 0.293637 -0.325253

+ -0.28259 -0.0159905 -0.227372 -0.26533 … -0.159701 0.215338

+ -0.0417327 0.0246552 0.282349 -0.0145864 -0.277505 0.0595062

+ 0.106574 0.0655952 0.11508 -0.0328105 -0.185263 0.12242

+ 0.316739 -0.147705 0.088275 -0.0220919 -0.180979 -0.24828

+ 0.223117 -0.0728504 0.0867307 -0.349231 0.223369 -0.147801

+ ⋮ ⋱

+ 0.156 -0.302055 0.14692 -0.189734 0.363814 0.366614

+ 0.31773 0.32263 -0.375842 -0.306479 0.26718 0.257999

+ 0.0447154 0.0959551 -0.0754782 0.32307 … 0.0750746 0.320953

+ -0.120086 -0.0858004 -0.29501 -0.0595151 0.278634 0.225435

+ -0.0773527 0.0215229 0.276879 -0.297569 0.179297 0.0024109

+5

+ -0.242057 0.116857 -0.0286312 0.12309 0.342328 -0.0437305

+ -0.0715863 -0.0341185 0.0922671 -0.0341884 -0.0671414 -0.0955032

+ -0.118132 0.0959598 0.148726 0.331655 … -0.0611473 0.377659

+ -0.36258 0.312327 -0.368223 0.118171 -0.28799 -0.102674

Now let's grab all of the parameters together:

p = Flux.params(NN)

-Params([Float32[0.20690748 -0.2939962 … -0.34496742 0.14015937; -0.23472181

- 0.07924941 … 0.30172688 -0.15234008; … ; -0.14143002 0.31557292 … -0.30481

-017 0.06348374; -0.2932724 0.18102595 … -0.24962649 0.022162892], Float32[0

-.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0 … 0.0, 0.0, 0.0, 0.0, 0.0

-, 0.0, 0.0, 0.0, 0.0, 0.0], Float32[-0.03256267 -0.13882013 … 0.19279614 0.

-05722884; -0.0030363456 0.07537417 … 0.022959018 -0.2238231; … ; 0.29075414

- 0.27948645 … -0.20741504 0.052605283; 0.21510808 -0.21531041 … -0.28893065

- -0.087447755], Float32[0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0 …

- 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0], Float32[0.03591951 0.0

-95983386 … 0.3207747 -0.08298867; -0.10454989 0.12266854 … 0.25205672 -0.02

-537506; … ; 0.12059488 0.0905982 … 0.059288114 0.2102544; 0.07917695 -0.169

-73555 … -0.2598032 -0.35143456], Float32[0.0, 0.0, 0.0, 0.0, 0.0]])

+Params([Float32[-0.042314984 -0.15267445 … -0.16515188 -0.06833213; 0.01964

+8807 -0.35536322 … -0.34505555 0.2476594; … ; -0.11813201 0.09595979 … -0.0

+61147317 0.37765864; -0.36258012 0.31232706 … -0.28799024 -0.10267412], Flo

+at32[0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0 … 0.0, 0.0, 0.0, 0.

+0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0], Float32[0.20425878 -0.076422386 … 0.08237

+911 -0.27405605; -0.28111216 0.16626641 … -0.29174885 -0.16367172; … ; 0.27

+852747 -0.23803714 … 0.2357961 0.14744177; 0.26311168 -0.2878293 … -0.03472

+6076 0.2109272], Float32[0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0

+… 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0], Float32[-0.104982175

+-0.12251709 … -0.17302983 -0.039281193; 0.3006238 0.08044029 … 0.017479276

+0.10239558; … ; 0.1948438 -0.26296428 … -0.2930175 0.0440955; 0.02304553 -0

+.34583634 … 0.19846451 0.38075408], Float32[0.0, 0.0, 0.0, 0.0, 0.0]])

That's a helper function on Chain which recursively gathers all of the defining parameters. Let's now find the optimal values p which cause the neural network to be the constant 1 function:

Flux.train!(loss, p, Iterators.repeated((), 10000), ADAM(0.1))

Now let's check the loss:

loss()

-6.637833380399612e-5

+5.824328915289363e-9

This means that NN(x) is now a very good function approximator to f(x) = ones(5)!

So Why Machine Learning? Why Neural Networks?

All we did was find parameters that made NN(x) act like a function f(x). How does that relate to machine learning? Well, in any case where one is acting on data (x,y), the idea is to assume that there exists some underlying mathematical model f(x) = y. If we had perfect knowledge of what f is, then from only the information of x we can then predict what y would be. The inference problem is to then figure out what function f should be. Therefore, machine learning on data is simply this problem of finding an approximator to some unknown function!

So why neural networks? Neural networks satisfy two properties. The first of which is known as the Universal Approximation Theorem (UAT), which in simple non-mathematical language means that, for any ϵ of accuracy, if your neural network is large enough (has enough layers, the weight matrices are large enough), then it can approximate any (nice) function f within that ϵ. Therefore, we can reduce the problem of finding missing functions, the problem of machine learning, to a problem of finding the weights of neural networks, which is a well-defined mathematical optimization problem.

Why neural networks specifically? That's a fairly good question, since there are many other functions with this property. For example, you will have learned from analysis that $a_0 + a_1 x + a_2 x^2 + \ldots$ arbitrary polynomials can be used to approximate any analytic function (this is the Taylor series). Similarly, a Fourier series

\[ f(x) = a_0 + \sum_k b_k \cos(kx) + c_k \sin(kx) \]

can approximate any continuous function f (and discontinuous functions also can have convergence, etc. these are the details of a harmonic analysis course).

That's all for one dimension. How about two dimensional functions? It turns out it's not difficult to prove that tensor products of universal approximators will give higher dimensional universal approximators. So for example, tensoring together two polynomials:

\[ a_0 + a_1 x + a_2 y + a_3 x y + a_4 x^2 y + a_5 x y^2 + a_6 x^2 y^2 + \ldots \]

will give a two-dimensional function approximator. But notice how we have to resolve every combination of terms. This means that if we used n coefficients in each dimension d, the total number of coefficients to build a d-dimensional universal approximator from one-dimensional objects would need $n^d$ coefficients. This exponential growth is known as the curse of dimensionality.

The second property of neural networks that makes them applicable to machine learning is that they overcome the curse of dimensionality. The proofs in this area can be a little difficult to parse, but what they boil down to is proving in many cases that the growth of neural networks to sufficiently approximate a d-dimensional function grows as a polynomial of d, rather than exponential. This means that there's some dimensional cutoff where for $d>cutoff$ it is more efficient to use a neural network. This can be problem-specific, but generally it tends to be the case at least by 8 or 10 dimensions.

Neural networks have a few other properties to consider as well:

The assumptions of the neural network can be encoded into the neural architectures. A neural network where the last layer has an activation function x->x^2 is a neural network where all outputs are positive. This means that if you want to find a positive function, you can make the optimization easier by enforcing this constraint. A lot of other constraints can be enforced, like tanh activation functions can make the neural network be a smooth (all derivatives finite) function, or other activations can cause finite numbers of learnable discontinuities.

Generating higher dimensional forms from one dimensional forms does not have good symmetry. For example, the two-dimensional tensor Fourier basis does not have a good way to represent $sin(xy)$. This property of the approximator is called (non)isotropy and more detail can be found in this wonderful talk about function approximation for multidimensional integration (cubature). Neural networks are naturally not aligned to a basis.

Neural networks are "easy" to compute. There's good software for them, GPU-acceleration, and all other kinds of tooling that make them particularly simple to use.

There are proofs that in many scenarios for neural networks the local minima are the global minima, meaning that local optimization is sufficient for training a neural network. Global optimization (which we will cover later in the course) is much more expensive than local methods like gradient descent, and thus this can be a good property to abuse for faster computation.

From Machine Learning to Scientific Machine Learning: Structure and Science

This understanding of a neural network and their libraries directly bridges to the understanding of scientific machine learning and the computation done in the field. In scientific machine learning, neural networks and machine learning are used as the basis to solve problems in scientific computing. Scientific computing, as a discipline also known as Computational Science, is a field of study which focuses on scientific simulation, using tools such as differential equations to investigate physical, biological, and other phenomena.

What we wish to do in scientific machine learning is use these properties of neural networks to improve the way that we investigate our scientific models.

Aside: Why Differential Equations?

Why do differential equations come up so often in as the model in the scientific context? This is a deep question with quite a simple answer. Essentially, all scientific experiments always have to test how things change. For example, you take a system now, you change it, and your measurement is how the changes you made caused changes in the system. This boils down to gather information about how, for some arbitrary system $y = f(x)$, how $\Delta x$ is related to $\Delta y$. Thus what you learn from scientific experiments, what is codified as scientific laws, is not "the answer", but the answer to how things change. This process of writing down equations by describing how they change precisely gives differential equations.

Solving ODEs with Neural Networks: The Physics-Informed Neural Network

Now let's get to our first true SciML application: solving ordinary differential equations with neural networks. The process of solving a differential equation with a neural network, or using a differential equation as a regularizer in the loss function, is known as a physics-informed neural network, since this allows for physical equations to guide the training of the neural network in circumstances where data might be lacking.

Background: A Method for Solving Ordinary Differential Equations with Neural Networks

This is a result first due to Lagaris et. al from 1998. The idea is to solve differential equations using neural networks by representing the solution by a neural network and training the resulting network to satisfy the conditions required by the differential equation.

Let's say we want to solve a system of ordinary differential equations

\[ u' = f(u,t) \]

with $t \in [0,1]$ and a known initial condition $u(0)=u_0$. To solve this, we approximate the solution by a neural network:

\[ NN(t) \approx u(t) \]

If $NN(t)$ was the true solution, then it would hold that $NN'(t) = f(NN(t),t)$ for all $t$. Thus we turn this condition into our loss function. This motivates the loss function:

\[ L(p) = \sum_i \left(\frac{dNN(t_i)}{dt} - f(NN(t_i),t_i) \right)^2 \]

The choice of $t_i$ could be done in many ways: it can be random, it can be a grid, etc. Anyways, when this loss function is minimized (gradients computed with standard reverse-mode automatic differentiation), then we have that $\frac{dNN(t_i)}{dt} \approx f(NN(t_i),t_i)$ and thus $NN(t)$ approximately solves the differential equation.

Note that we still have to handle the initial condition. One simple way to do this is to add an initial condition term to the cost function. This would look like:

\[ L(p) = (NN(0) - u_0)^2 + \sum_i \left(\frac{dNN(t_i)}{dt} - f(NN(t_i),t_i) \right)^2 \]

While that would work, it can be more efficient to encode the initial condition into the function itself so that it's trivially satisfied for any possible set of parameters. For example, instead of directly using a neural network, we can use:

\[ g(t) = u_0 + tNN(t) \]

as our solution. Notice that $g(t)$ is thus a universal approximator for all continuous functions such that $g(0)=u_0$ (this is a property one should prove!). Since $g(t)$ will always satisfy the initial condition, we can train $g(t)$ to satisfy the derivative function then it will automatically be a solution to the derivative function. In this sense, we can use the loss function:

\[ L(p) = \sum_i \left(\frac{dg(t_i)}{dt} - f(g(t_i),t_i) \right)^2 \]

where $p$ are the parameters that define $g$, which in turn are the parameters which define the neural network $NN$ that define $g$. Thus this reduces down, once again, to simply finding weights which minimize a loss function!

Coding Up the Method

Now let's implement this method with Flux. Let's define a neural network to be the NN(t) above. To make the problem easier, let's look at the ODE:

\[ u' = \cos 2\pi t \]

and approximate it with the neural network from a scalar to a scalar:

using Flux

NNODE = Chain(x -> [x], # Take in a scalar and transform it into an array

@@ -228,7 +224,7 @@ Introduction to Scientific Machine Learning through Physics-Inf

first) # Take first value, i.e. return a scalar

NNODE(1.0)

-0.12358128803653629

+-0.07905010348616885

Instead of directly approximating the neural network, we will use the transformed equation that is forced to satisfy the boundary conditions. Using u0=1.0, we have the function:

g(t) = t*NNODE(t) + 1f0

@@ -252,23 +248,23 @@ Introduction to Scientific Machine Learning through Physics-Inf

display(loss())

Flux.train!(loss, Flux.params(NNODE), data, opt; cb=cb)

-0.5292029324358418

-0.4932957105006129

-0.4516506740086385

-0.3061094653058115

-0.07083152746013988

-0.011665014535397188

-0.006347690360824062

-0.005509980046802497

-0.005214355730566553

-0.0049850620896091675

-0.004787041823734979

+0.5178025866113664

+0.5003380507674955

+0.4817250368610114

+0.4072179526120902

+0.1709448358222078

+0.020230873113184646

+0.005706788834646396

+0.004160672851654196

+0.0038591849808713866

+0.003736976081002845

+0.003611986041281208

How well did this do? Well if we take the integral of both sides of our differential equation, we see it's fairly trivial:

\[ \int g' = g = \int \cos 2\pi t = C + \frac{\sin 2\pi t}{2\pi} \]

where we defined $C = 1$. Let's take a bunch of (input,output) pairs from the neural network and plot it against the analytical solution to the differential equation:

using Plots

t = 0:0.001:1.0

plot(t,g.(t),label="NN")

plot!(t,1.0 .+ sin.(2π.*t)/2π, label = "True Solution")

-

We see that it matches very well, and we can keep improving this fit by increasing the size of the neural network, using more training points, and training for more iterations.

Example: Harmonic Oscillator Informed Training

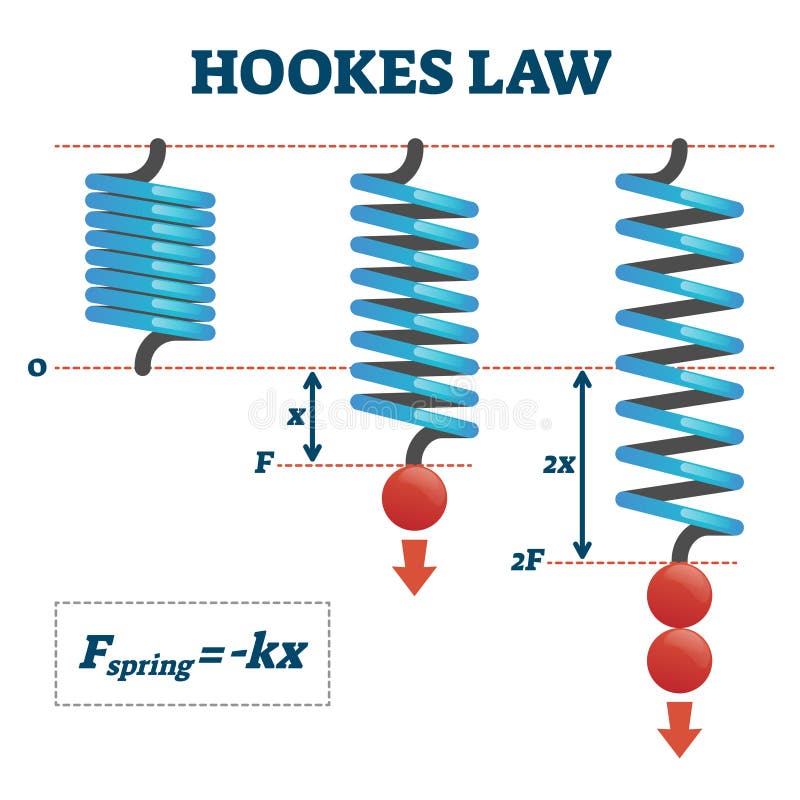

Using this idea, differential equations encoding physical laws can be utilized inside of loss functions for terms which we have some basis to believe should approximately follow some physical system. Let's investigate this last step by looking at how to inform the training of a neural network using the harmonic oscillator.

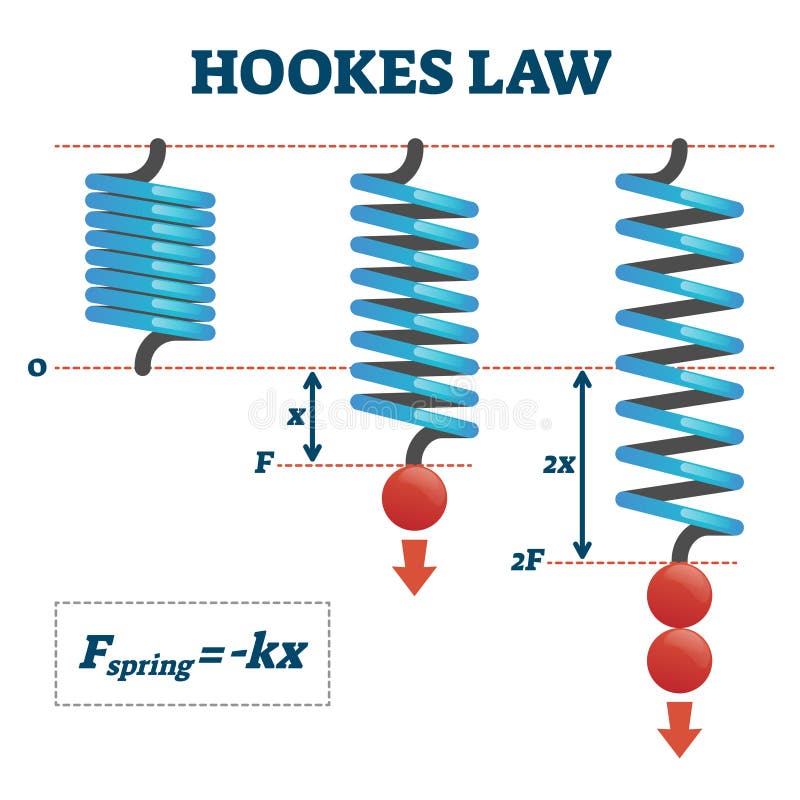

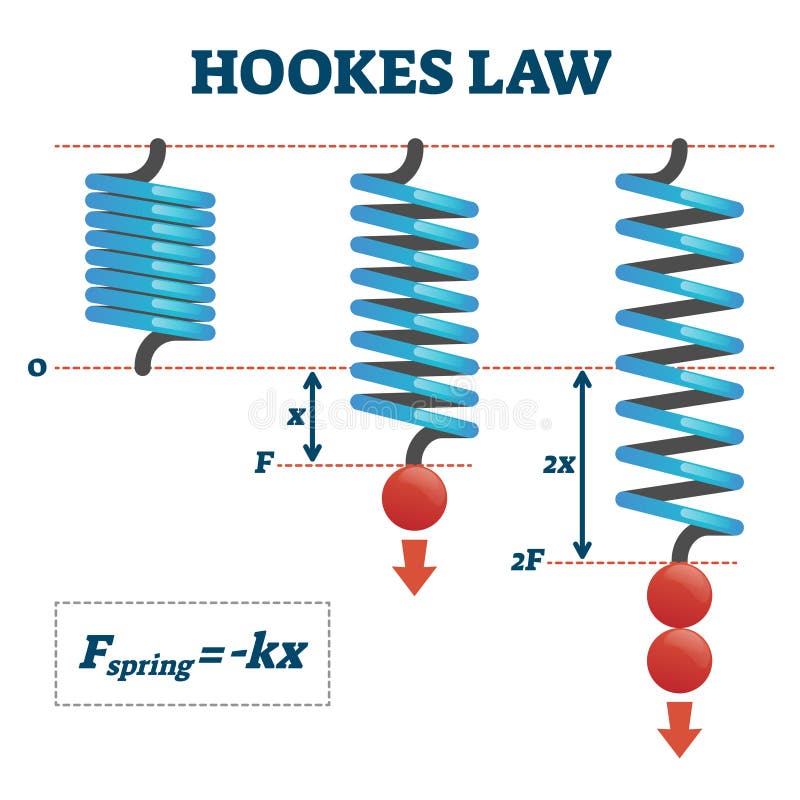

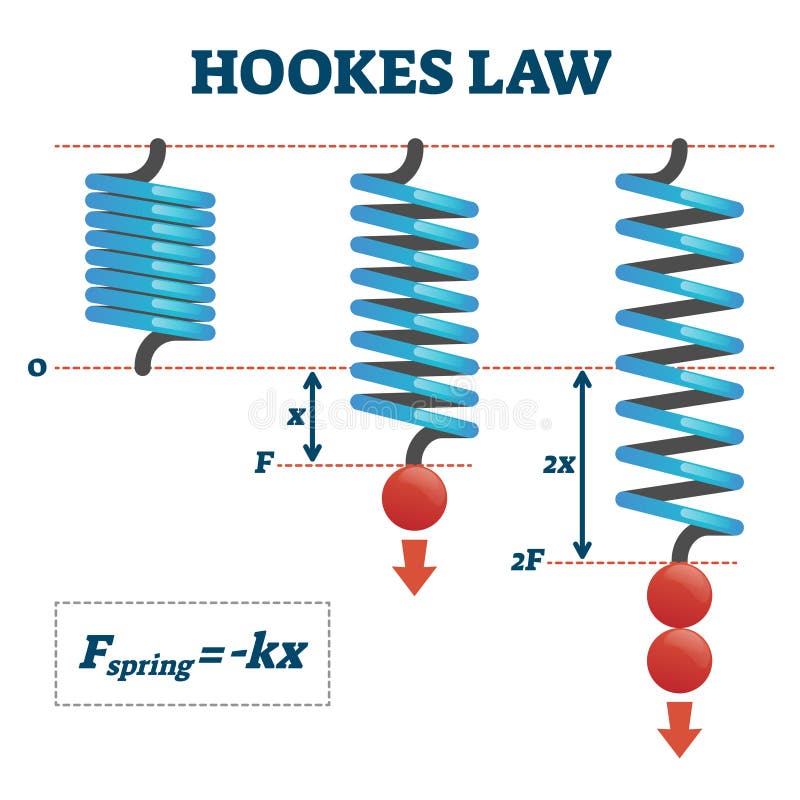

Let's assume that we are taking measurements of (position,force) in some real one-dimensional spring pushing and pulling against a wall.

But instead of the simple spring, let's assume we had a more complex spring, for example, let's say $F(x) = -kx + 0.1sin(x)$ where this extra term is due to some deformities in the metal (assume mass=1). Then by Newton's law of motion we have a second order ordinary differential equation:

\[ x'' = -kx + 0.1 \sin(x) \]

We can use the DifferentialEquations.jl package to solve this differential equation and see what this system looks like:

+

We see that it matches very well, and we can keep improving this fit by increasing the size of the neural network, using more training points, and training for more iterations.

Example: Harmonic Oscillator Informed Training