diff --git a/.gitignore b/.gitignore

index 264e72a..02a437c 100644

--- a/.gitignore

+++ b/.gitignore

@@ -16,3 +16,8 @@ verifier_log_*

.idea

*.so

release

+*.compiled

+.DS_Store

+*.csv

+*.out

+*.txt

\ No newline at end of file

diff --git a/README.md b/README.md

index 0c87c95..e0fdbb4 100644

--- a/README.md

+++ b/README.md

@@ -12,10 +12,12 @@

## What's New?

+- New activation function (sin, cos, tan, GeLU) with optimizable bounds (α-CROWN) and [branch and bound support](https://files.sri.inf.ethz.ch/wfvml23/papers/paper_24.pdf) for non-ReLU activation functions. We achieve significant improvements on verifying neural networks with non-ReLU activation functions such as Transformer and LSTM networks. (09/2023)

+- [α,β-CROWN](https://github.com/Verified-Intelligence/alpha-beta-CROWN.git) ([alpha-beta-CROWN](https://github.com/Verified-Intelligence/alpha-beta-CROWN.git)) (using `auto_LiRPA` as its core library) **won** [VNN-COMP 2023](https://sites.google.com/view/vnn2023). (08/2023)

- Bound computation for higher-order computational graphs to support bounding Jacobian, Jacobian-vector products, and [local Lipschitz constants](https://arxiv.org/abs/2210.07394). (11/2022)

-- Our neural network verification tool [α,β-CROWN](https://github.com/huanzhang12/alpha-beta-CROWN.git) ([alpha-beta-CROWN](https://github.com/huanzhang12/alpha-beta-CROWN.git)) (using `auto_LiRPA` as its core library) **won** [VNN-COMP 2022](https://sites.google.com/view/vnn2022). Our library supports the large CIFAR100, TinyImageNet and ImageNet models in VNN-COMP 2022. (09/2022)

+- Our neural network verification tool [α,β-CROWN](https://github.com/Verified-Intelligence/alpha-beta-CROWN.git) ([alpha-beta-CROWN](https://github.com/Verified-Intelligence/alpha-beta-CROWN.git)) (using `auto_LiRPA` as its core library) **won** [VNN-COMP 2022](https://sites.google.com/view/vnn2022). Our library supports the large CIFAR100, TinyImageNet and ImageNet models in VNN-COMP 2022. (09/2022)

- Implementation of **general cutting planes** ([GCP-CROWN](https://arxiv.org/pdf/2208.05740.pdf)), support of more activation functions and improved performance and scalability. (09/2022)

-- Our neural network verification tool [α,β-CROWN](https://github.com/huanzhang12/alpha-beta-CROWN.git) ([alpha-beta-CROWN](https://github.com/huanzhang12/alpha-beta-CROWN.git)) **won** [VNN-COMP 2021](https://sites.google.com/view/vnn2021) **with the highest total score**, outperforming 11 SOTA verifiers. α,β-CROWN uses the `auto_LiRPA` library as its core bound computation library. (09/2021)

+- Our neural network verification tool [α,β-CROWN](https://github.com/Verified-Intelligence/alpha-beta-CROWN.git) ([alpha-beta-CROWN](https://github.com/Verified-Intelligence/alpha-beta-CROWN.git)) **won** [VNN-COMP 2021](https://sites.google.com/view/vnn2021) **with the highest total score**, outperforming 11 SOTA verifiers. α,β-CROWN uses the `auto_LiRPA` library as its core bound computation library. (09/2021)

- [Optimized CROWN/LiRPA](https://arxiv.org/pdf/2011.13824.pdf) bound (α-CROWN) for ReLU, **sigmoid**, **tanh**, and **maxpool** activation functions, which can significantly outperform regular CROWN bounds. See [simple_verification.py](examples/vision/simple_verification.py#L59) for an example. (07/31/2021)

- Handle split constraints for ReLU neurons ([β-CROWN](https://arxiv.org/pdf/2103.06624.pdf)) for complete verifiers. (07/31/2021)

- A memory efficient GPU implementation of backward (CROWN) bounds for

@@ -46,12 +48,12 @@ Our library supports the following algorithms:

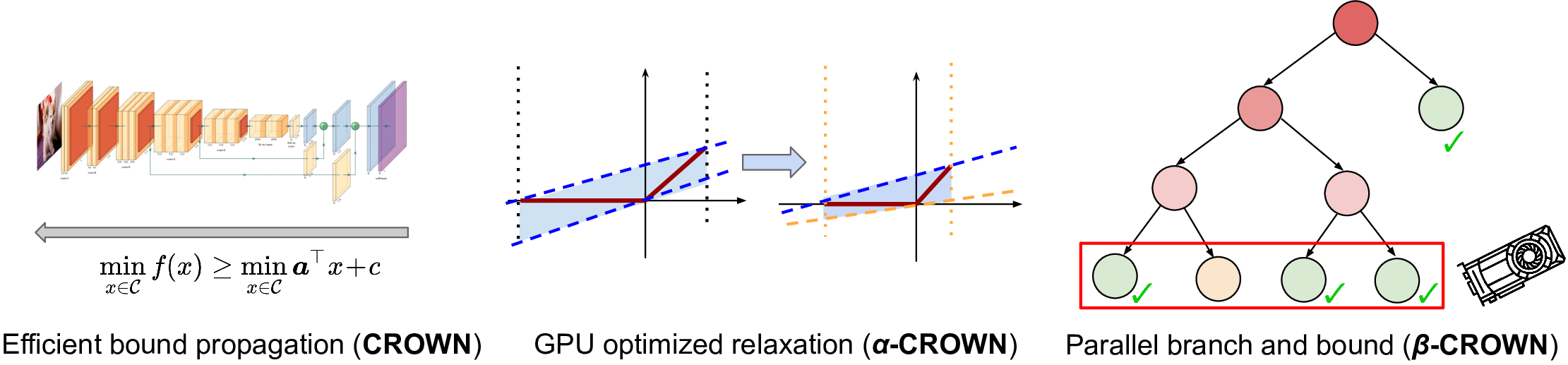

* Backward mode LiRPA bound propagation ([CROWN](https://arxiv.org/pdf/1811.00866.pdf)/[DeepPoly](https://files.sri.inf.ethz.ch/website/papers/DeepPoly.pdf))

* Backward mode LiRPA bound propagation with optimized bounds ([α-CROWN](https://arxiv.org/pdf/2011.13824.pdf))

-* Backward mode LiRPA bound propagation with split constraints ([β-CROWN](https://arxiv.org/pdf/2103.06624.pdf))

+* Backward mode LiRPA bound propagation with split constraints ([β-CROWN](https://arxiv.org/pdf/2103.06624.pdf)) for ReLU, and ([Shi et al. 2023](https://files.sri.inf.ethz.ch/wfvml23/papers/paper_24.pdf)) for general nonlinear functions

* Generalized backward mode LiRPA bound propagation with general cutting plane constraints ([GCP-CROWN](https://arxiv.org/pdf/2208.05740.pdf))

* Forward mode LiRPA bound propagation ([Xu et al., 2020](https://arxiv.org/pdf/2002.12920))

* Forward mode LiRPA bound propagation with optimized bounds (similar to [α-CROWN](https://arxiv.org/pdf/2011.13824.pdf))

* Interval bound propagation ([IBP](https://arxiv.org/pdf/1810.12715.pdf))

-* Hybrid approaches, e.g., Forward+Backward, IBP+Backward ([CROWN-IBP](https://arxiv.org/pdf/1906.06316.pdf)), [α,β-CROWN](https://github.com/huanzhang12/alpha-beta-CROWN.git) ([alpha-beta-CROWN](https://github.com/huanzhang12/alpha-beta-CROWN.git))

+* Hybrid approaches, e.g., Forward+Backward, IBP+Backward ([CROWN-IBP](https://arxiv.org/pdf/1906.06316.pdf)), [α,β-CROWN](https://github.com/Verified-Intelligence/alpha-beta-CROWN.git) ([alpha-beta-CROWN](https://github.com/Verified-Intelligence/alpha-beta-CROWN.git))

Our library allows automatic bound derivation and computation for general

computational graphs, in a similar manner that gradients are obtained in modern

@@ -99,7 +101,7 @@ See [PyTorch Get Started](https://pytorch.org/get-started).

Then you can install `auto_LiRPA` via:

```bash

-git clone https://github.com/KaidiXu/auto_LiRPA

+git clone https://github.com/Verified-Intelligence/auto_LiRPA

cd auto_LiRPA

python setup.py install

```

@@ -159,10 +161,10 @@ We provide [a wide range of examples](doc/src/examples.md) of using `auto_LiRPA`

* [Certified Adversarial Defense Training on Sequence Data with **LSTM**](doc/src/examples.md#certified-adversarial-defense-training-for-lstm-on-mnist)

* [Certifiably Robust Language Classifier using **Transformers**](doc/src/examples.md#certifiably-robust-language-classifier-with-transformer-and-lstm)

* [Certified Robustness against **Model Weight Perturbations**](doc/src/examples.md#certified-robustness-against-model-weight-perturbations-and-certified-defense)

-* [Bounding **Jacobian** and **local Lipschitz constants**](examples/vision/jacobian.py)

+* [Bounding **Jacobian** and **local Lipschitz constants**](examples/vision/jacobian_new.py)

`auto_LiRPA` has also be used in the following works:

-* [**α,β-CROWN for complete neural network verification**](https://github.com/huanzhang12/alpha-beta-CROWN)

+* [**α,β-CROWN for complete neural network verification**](https://github.com/Verified-Intelligence/alpha-beta-CROWN)

* [**Fast certified robust training**](https://github.com/shizhouxing/Fast-Certified-Robust-Training)

* [**Computing local Lipschitz constants**](https://github.com/shizhouxing/Local-Lipschitz-Constants)

@@ -177,7 +179,7 @@ For more documentations, please refer to:

## Publications

-Please kindly cite our papers if you use the `auto_LiRPA` library. Full [BibTeX entries](doc/examples.md#bibtex-entries) can be found [here](doc/examples.md#bibtex-entries).

+Please kindly cite our papers if you use the `auto_LiRPA` library. Full [BibTeX entries](doc/src/examples.md#bibtex-entries) can be found [here](doc/src/examples.md#bibtex-entries).

The general LiRPA based bound propagation algorithm was originally proposed in our paper:

@@ -207,26 +209,30 @@ Certified robust training using `auto_LiRPA` is improved to allow much shorter w

NeurIPS 2021.

Zhouxing Shi\*, Yihan Wang\*, Huan Zhang, Jinfeng Yi and Cho-Jui Hsieh (\* Equal contribution).

-## Developers and Copyright

+Branch and bound for non-ReLU and general activation functions:

+* [Formal Verification for Neural Networks with General Nonlinearities via Branch-and-Bound](https://files.sri.inf.ethz.ch/wfvml23/papers/paper_24.pdf).

+Zhouxing Shi\*, Qirui Jin\*, Zico Kolter, Suman Jana, Cho-Jui Hsieh, Huan Zhang (\* Equal contribution).

-| [Kaidi Xu](https://kaidixu.com/) | [Zhouxing Shi](https://shizhouxing.github.io/) | [Huan Zhang](https://huan-zhang.com/) | [Yihan Wang](https://yihanwang617.github.io/) | [Shiqi Wang](https://www.cs.columbia.edu/~tcwangshiqi/) |

-|:--:|:--:| :--:| :--:| :--:|

-|  |

|  |

|  |

|  |

|  |

+## Developers and Copyright

Team lead:

-* Huan Zhang (huan@huan-zhang.com), CMU

+* Huan Zhang (huan@huan-zhang.com), UIUC

-Main developers:

+Current developers:

* Zhouxing Shi (zshi@cs.ucla.edu), UCLA

+* Linyi Li (linyi2@illinois.edu), UIUC

+* Christopher Brix (brix@cs.rwth-aachen.de), RWTH Aachen University

* Kaidi Xu (kx46@drexel.edu), Drexel University

+* Xiangru Zhong (xiangruzh0915@gmail.com), Sun Yat-sen University

+* Qirui Jin (qiruijin@umich.edu), University of Michigan

+* Zhuolin Yang (zhuolin5@illinois.edu), UIUC

+* Zhuowen Yuan (realzhuowen@gmail.com), UIUC

-Contributors:

-* Yihan Wang (yihanwang@ucla.edu), UCLA

+Past developers:

* Shiqi Wang (sw3215@columbia.edu), Columbia University

-* Linyi Li (linyi2@illinois.edu), UIUC

+* Yihan Wang (yihanwang@ucla.edu), UCLA

* Jinqi (Kathryn) Chen (jinqic@cs.cmu.edu), CMU

-* Zhuolin Yang (zhuolin5@illinois.edu), UIUC

-We thank the [commits](https://github.com/KaidiXu/auto_LiRPA/commits) and [pull requests](https://github.com/KaidiXu/auto_LiRPA/pulls) from community contributors.

+We thank the [commits](https://github.com/Verified-Intelligence/auto_LiRPA/commits) and [pull requests](https://github.com/Verified-Intelligence/auto_LiRPA/pulls) from community contributors.

Our library is released under the BSD 3-Clause license.

diff --git a/README_abcrown.md b/README_abcrown.md

deleted file mode 100644

index 339f4ed..0000000

--- a/README_abcrown.md

+++ /dev/null

@@ -1,245 +0,0 @@

-α,β-CROWN (alpha-beta-CROWN): A Fast and Scalable Neural Network Verifier with Efficient Bound Propagation

-======================

-

-

|

+## Developers and Copyright

Team lead:

-* Huan Zhang (huan@huan-zhang.com), CMU

+* Huan Zhang (huan@huan-zhang.com), UIUC

-Main developers:

+Current developers:

* Zhouxing Shi (zshi@cs.ucla.edu), UCLA

+* Linyi Li (linyi2@illinois.edu), UIUC

+* Christopher Brix (brix@cs.rwth-aachen.de), RWTH Aachen University

* Kaidi Xu (kx46@drexel.edu), Drexel University

+* Xiangru Zhong (xiangruzh0915@gmail.com), Sun Yat-sen University

+* Qirui Jin (qiruijin@umich.edu), University of Michigan

+* Zhuolin Yang (zhuolin5@illinois.edu), UIUC

+* Zhuowen Yuan (realzhuowen@gmail.com), UIUC

-Contributors:

-* Yihan Wang (yihanwang@ucla.edu), UCLA

+Past developers:

* Shiqi Wang (sw3215@columbia.edu), Columbia University

-* Linyi Li (linyi2@illinois.edu), UIUC

+* Yihan Wang (yihanwang@ucla.edu), UCLA

* Jinqi (Kathryn) Chen (jinqic@cs.cmu.edu), CMU

-* Zhuolin Yang (zhuolin5@illinois.edu), UIUC

-We thank the [commits](https://github.com/KaidiXu/auto_LiRPA/commits) and [pull requests](https://github.com/KaidiXu/auto_LiRPA/pulls) from community contributors.

+We thank the [commits](https://github.com/Verified-Intelligence/auto_LiRPA/commits) and [pull requests](https://github.com/Verified-Intelligence/auto_LiRPA/pulls) from community contributors.

Our library is released under the BSD 3-Clause license.

diff --git a/README_abcrown.md b/README_abcrown.md

deleted file mode 100644

index 339f4ed..0000000

--- a/README_abcrown.md

+++ /dev/null

@@ -1,245 +0,0 @@

-α,β-CROWN (alpha-beta-CROWN): A Fast and Scalable Neural Network Verifier with Efficient Bound Propagation

-======================

-

-

- -

-

-

-α,β-CROWN (alpha-beta-CROWN) is a neural network verifier based on an efficient

-bound propagation algorithm ([CROWN](https://arxiv.org/pdf/1811.00866.pdf)) and

-branch and bound. It can be accelerated efficiently on **GPUs** and can scale

-to relatively large convolutional networks. It also supports a wide range of

-neural network architectures (e.g., **CNN**, **ResNet**, and various activation

-functions), thanks to the versatile

-[auto\_LiRPA](http://github.com/KaidiXu/auto_LiRPA) library developed by us.

-α,β-CROWN can provide **provable robustness guarantees against adversarial

-attacks** and can also verify other general properties of neural networks.

-

-α,β-CROWN is the **winning verifier** in [VNN-COMP

-2021](https://sites.google.com/view/vnn2021) and [VNN-COMP

-2022](https://sites.google.com/view/vnn2022) (International Verification of

-Neural Networks Competition) with the highest total score, outperforming many

-other neural network verifiers on a wide range of benchmarks over 2 years.

-Details of competition results can be found in [VNN-COMP 2021

-slides](https://docs.google.com/presentation/d/1oM3NqqU03EUqgQVc3bGK2ENgHa57u-W6Q63Vflkv000/edit#slide=id.ge4496ad360_14_21),

-[report](https://arxiv.org/abs/2109.00498) and [VNN-COMP 2022 slides (page

-73)](https://drive.google.com/file/d/1nnRWSq3plsPvOT3V-drAF5D8zWGu02VF/view?usp=sharing).

-

-Supported Features

-----------------------

-

-

- -

-

-

-Our verifier consists of the following core algorithms:

-

-* **β-CROWN** ([Wang et al. 2021](https://arxiv.org/pdf/2103.06624.pdf)): complete verification with **CROWN** ([Zhang et al. 2018](https://arxiv.org/pdf/1811.00866.pdf)) and branch and bound

-* **α-CROWN** ([Xu et al., 2021](https://arxiv.org/pdf/2011.13824.pdf)): incomplete verification with optimized CROWN bound

-* **GCP-CROWN** ([Zhang et al. 2021](https://arxiv.org/pdf/2208.05740.pdf)): CROWN-like bound propagation with general cutting plane constraints.

-* **BaB-Attack** ([Zhang et al. 2021](https://proceedings.mlr.press/v162/zhang22ae/zhang22ae.pdf)): Branch and bound based adversarial attack for tackling hard instances.

-* **MIP** ([Tjeng et al., 2017](https://arxiv.org/pdf/1711.07356.pdf)): mixed integer programming (slow but can be useful on small models).

-

-We support these neural network architectures:

-

-* Layers: fully connected (FC), convolutional (CNN), pooling (average pool and max pool), transposed convolution

-* Activation functions: ReLU (incomplete/complete verification); sigmoid, tanh, arctan, sin, cos, tan (incomplete verification)

-* Residual connections and other irregular graphs

-

-We support the following verification specifications:

-

-* Lp norm perturbation (p=1,2,infinity, as often used in robustness verification)

-* VNNLIB format input (at most two layers of AND/OR clause, as used in VNN-COMP 2021 and 2022)

-* Any linear specifications on neural network output (which can be added as a linear layer)

-

-We provide many example configurations in

-[`complete_verifier/exp_configs`](/complete_verifier/exp_configs) directory to

-start with:

-

-* MNIST: MLP and CNN models

-* CIFAR-10, CIFAR-100, TinyImageNet: CNN and ResNet models

-* ACASXu, NN4sys and other low input-dimension models

-

-See the [Guide on Algorithm

-Selection](/complete_verifier/docs/abcrown_usage.md#guide-on-algorithm-selection)

-to find the most suitable example to get started.

-

-Installation and Setup

-----------------------

-

-α,β-CROWN is tested on Python 3.7+ and PyTorch 1.11. It can be installed

-easily into a conda environment. If you don't have conda, you can install

-[miniconda](https://docs.conda.io/en/latest/miniconda.html).

-

-```bash

-# Remove the old environment, if necessary.

-conda deactivate; conda env remove --name alpha-beta-crown

-# install all dependents into the alpha-beta-crown environment

-conda env create -f complete_verifier/environment.yml --name alpha-beta-crown

-# activate the environment

-conda activate alpha-beta-crown

-```

-

-If you use the α-CROWN and/or β-CROWN verifiers (which covers the most use

-cases), a Gurobi license is *not needed*. If you want to use MIP based

-verification algorithms (feasible only for small MLP models), you need to

-install a Gurobi license with the `grbgetkey` command. If you don't have

-access to a license, by default the above installation procedure includes a

-free and restricted license, which is actually sufficient for many relatively

-small NNs. If you use the GCP-CROWN verifier, an installation of IBM CPlex

-solver is required. Instructions to install the CPlex solver can be found

-in the [VNN-COMP benchmark instructions](/complete_verifier/docs/vnn_comp.md#installation)

-or the [GCP-CROWN instructions](https://github.com/tcwangshiqi-columbia/GCP-CROWN).

-

-If you prefer to install packages manually rather than using a prepared conda

-environment, you can refer to this [installation

-script](/vnncomp_scripts/install_tool_general.sh).

-

-If you want to run α,β-CROWN verifier on the VNN-COMP 2021 and 2022 benchmarks

-(e.g., to make a comparison to a new verifier), you can follow [this

-guide](/complete_verifier/docs/vnn_comp.md).

-

-Instructions

-----------------------

-

-We provide a unified front-end for the verifier, `abcrown.py`. All parameters

-for the verifier are defined in a `yaml` config file. For example, to run

-robustness verification on a CIFAR-10 ResNet network, you just run:

-

-```bash

-conda activate alpha-beta-crown # activate the conda environment

-cd complete_verifier

-python abcrown.py --config exp_configs/cifar_resnet_2b.yaml

-```

-

-You can find explanations for most useful parameters in [this example config

-file](/complete_verifier/exp_configs/cifar_resnet_2b.yaml). For detailed usage

-and tutorial examples please see the [Usage

-Documentation](/complete_verifier/docs/abcrown_usage.md). We also provide a

-large range of examples in the

-[`complete_verifier/exp_configs`](/complete_verifier/exp_configs) folder.

-

-

-Publications

-----------------------

-

-If you use our verifier in your work, **please kindly cite our CROWN**([Zhang

-et al., 2018](https://arxiv.org/pdf/1811.00866.pdf)), **α-CROWN** ([Xu et al.,

-2021](https://arxiv.org/pdf/2011.13824.pdf)), **β-CROWN**([Wang et al.,

-2021](https://arxiv.org/pdf/2103.06624.pdf)) and **GCP-CROWN**([Zhang et al.,

-2022](https://arxiv.org/pdf/2208.05740.pdf)) papers. If your work involves the

-convex relaxation of the NN verification please kindly cite [Salman et al.,

-2019](https://arxiv.org/pdf/1902.08722). If your work deals with

-ResNet/DenseNet, LSTM (recurrent networks), Transformer or other complex

-architectures, or model weight perturbations please kindly cite [Xu et al.,

-2020](https://arxiv.org/pdf/2002.12920.pdf). If you use our branch and bound

-based adversarial attack (falsifier), please cite [Zhang et al.

-2022](https://proceedings.mlr.press/v162/zhang22ae/zhang22ae.pdf).

-

-α,β-CROWN combines our existing efforts on neural network verification:

-

-* **CROWN** ([Zhang et al. NeurIPS 2018](https://arxiv.org/pdf/1811.00866.pdf)) is a very efficient bound propagation based verification algorithm. CROWN propagates a linear inequality backwards through the network and utilizes linear bounds to relax activation functions.

-

-* The **"convex relaxation barrier"** ([Salman et al., NeurIPS 2019](https://arxiv.org/pdf/1902.08722)) paper concludes that optimizing the ReLU relaxation allows CROWN (referred to as a "greedy" primal space solver) to achieve the same solution as linear programming (LP) based verifiers.

-

-* **LiRPA** ([Xu et al., NeurIPS 2020](https://arxiv.org/pdf/2002.12920.pdf)) is a generalization of CROWN on general computational graphs and we also provide an efficient GPU implementation, the [auto\_LiRPA](https://github.com/KaidiXu/auto_LiRPA) library.

-

-* **α-CROWN** (sometimes referred to as optimized CROWN or optimized LiRPA) is used in the Fast-and-Complete verifier ([Xu et al., ICLR 2021](https://arxiv.org/pdf/2011.13824.pdf)), which jointly optimizes intermediate layer bounds and final layer bounds in CROWN via variable α. α-CROWN typically has greater power than LP since LP cannot cheaply tighten intermediate layer bounds.

-

-* **β-CROWN** ([Wang et al., NeurIPS 2021](https://arxiv.org/pdf/2103.06624.pdf)) incorporates split constraints in branch and bound (BaB) into the CROWN bound propagation procedure via an additional optimizable parameter β. The combination of efficient and GPU accelerated bound propagation with branch and bound produces a powerful and scalable neural network verifier.

-

-* **BaB-Attack** ([Zhang et al., ICML 2022](https://proceedings.mlr.press/v162/zhang22ae/zhang22ae.pdf)) is a strong falsifier (adversarial attack) based on branch and bound, which can find adversarial examples for hard instances where gradient or input-space-search based methods cannot succeed.

-

-* **GCP-CROWN** ([Zhang et al., NeurIPS 2022](https://arxiv.org/pdf/2208.05740.pdf)) enables the use of general cutting planes methods for neural network verification in a GPU-accelerated and very efficient bound propagation framework.

-

-We provide bibtex entries below:

-

-```

-@article{zhang2018efficient,

- title={Efficient Neural Network Robustness Certification with General Activation Functions},

- author={Zhang, Huan and Weng, Tsui-Wei and Chen, Pin-Yu and Hsieh, Cho-Jui and Daniel, Luca},

- journal={Advances in Neural Information Processing Systems},

- volume={31},

- pages={4939--4948},

- year={2018},

- url={https://arxiv.org/pdf/1811.00866.pdf}

-}

-

-@article{xu2020automatic,

- title={Automatic perturbation analysis for scalable certified robustness and beyond},

- author={Xu, Kaidi and Shi, Zhouxing and Zhang, Huan and Wang, Yihan and Chang, Kai-Wei and Huang, Minlie and Kailkhura, Bhavya and Lin, Xue and Hsieh, Cho-Jui},

- journal={Advances in Neural Information Processing Systems},

- volume={33},

- year={2020}

-}

-

-@article{salman2019convex,

- title={A Convex Relaxation Barrier to Tight Robustness Verification of Neural Networks},

- author={Salman, Hadi and Yang, Greg and Zhang, Huan and Hsieh, Cho-Jui and Zhang, Pengchuan},

- journal={Advances in Neural Information Processing Systems},

- volume={32},

- pages={9835--9846},

- year={2019}

-}

-

-@inproceedings{xu2021fast,

- title={{Fast and Complete}: Enabling Complete Neural Network Verification with Rapid and Massively Parallel Incomplete Verifiers},

- author={Kaidi Xu and Huan Zhang and Shiqi Wang and Yihan Wang and Suman Jana and Xue Lin and Cho-Jui Hsieh},

- booktitle={International Conference on Learning Representations},

- year={2021},

- url={https://openreview.net/forum?id=nVZtXBI6LNn}

-}

-

-@article{wang2021beta,

- title={{Beta-CROWN}: Efficient bound propagation with per-neuron split constraints for complete and incomplete neural network verification},

- author={Wang, Shiqi and Zhang, Huan and Xu, Kaidi and Lin, Xue and Jana, Suman and Hsieh, Cho-Jui and Kolter, J Zico},

- journal={Advances in Neural Information Processing Systems},

- volume={34},

- year={2021}

-}

-

-@InProceedings{zhang22babattack,

- title = {A Branch and Bound Framework for Stronger Adversarial Attacks of {R}e{LU} Networks},

- author = {Zhang, Huan and Wang, Shiqi and Xu, Kaidi and Wang, Yihan and Jana, Suman and Hsieh, Cho-Jui and Kolter, Zico},

- booktitle = {Proceedings of the 39th International Conference on Machine Learning},

- volume = {162},

- pages = {26591--26604},

- year = {2022},

-}

-

-@article{zhang2022general,

- title={General Cutting Planes for Bound-Propagation-Based Neural Network Verification},

- author={Zhang, Huan and Wang, Shiqi and Xu, Kaidi and Li, Linyi and Li, Bo and Jana, Suman and Hsieh, Cho-Jui and Kolter, J Zico},

- journal={Advances in Neural Information Processing Systems},

- year={2022}

-}

-```

-

-Developers and Copyright

-----------------------

-

-The α,β-CROWN verifier is developed by a team from CMU, UCLA, Drexel University, Columbia University and UIUC:

-

-Team lead:

-* Huan Zhang (huan@huan-zhang.com), CMU

-

-Main developers:

-* Kaidi Xu (kx46@drexel.edu), Drexel University

-* Zhouxing Shi (zshi@cs.ucla.edu), UCLA

-* Shiqi Wang (sw3215@columbia.edu), Columbia University

-

-Contributors:

-* Linyi Li (linyi2@illinois.edu), UIUC

-* Jinqi (Kathryn) Chen (jinqic@cs.cmu.edu), CMU

-* Zhuolin Yang (zhuolin5@illinois.edu), UIUC

-* Yihan Wang (yihanwang@ucla.edu), UCLA

-

-Advisors:

-* Zico Kolter (zkolter@cs.cmu.edu), CMU

-* Cho-Jui Hsieh (chohsieh@cs.ucla.edu), UCLA

-* Suman Jana (suman@cs.columbia.edu), Columbia University

-* Bo Li (lbo@illinois.edu), UIUC

-* Xue Lin (xue.lin@northeastern.edu), Northeastern University

-

-Our library is released under the BSD 3-Clause license. A copy of the license is included [here](LICENSE).

-

diff --git a/auto_LiRPA/__init__.py b/auto_LiRPA/__init__.py

index 42981d0..47ddb9a 100644

--- a/auto_LiRPA/__init__.py

+++ b/auto_LiRPA/__init__.py

@@ -5,4 +5,4 @@

from .wrapper import CrossEntropyWrapper, CrossEntropyWrapperMultiInput

from .bound_op_map import register_custom_op, unregister_custom_op

-__version__ = '0.3.1'

+__version__ = '0.4.0'

diff --git a/auto_LiRPA/backward_bound.py b/auto_LiRPA/backward_bound.py

index 01d973f..b862761 100644

--- a/auto_LiRPA/backward_bound.py

+++ b/auto_LiRPA/backward_bound.py

@@ -1,23 +1,34 @@

+import os

import torch

from torch import Tensor

-from collections import deque, defaultdict

+from collections import deque

from tqdm import tqdm

from .patches import Patches

from .utils import *

from .bound_ops import *

import warnings

+from typing import TYPE_CHECKING, List

+if TYPE_CHECKING:

+ from .bound_general import BoundedModule

-def batched_backward(

- self, node, C, unstable_idx, batch_size, bound_lower=True,

- bound_upper=True):

+

+def batched_backward(self: 'BoundedModule', node, C, unstable_idx, batch_size,

+ bound_lower=True, bound_upper=True, return_A=None):

+ if return_A is None: return_A = self.return_A

crown_batch_size = self.bound_opts['crown_batch_size']

- unstable_size = get_unstable_size(unstable_idx)

- print(f'Batched CROWN: unstable size {unstable_size}')

- num_batches = (unstable_size + crown_batch_size - 1) // crown_batch_size

output_shape = node.output_shape[1:]

dim = int(prod(output_shape))

+ if unstable_idx is None:

+ unstable_idx = torch.arange(dim, device=self.device)

+ dense = True

+ else:

+ dense = False

+ unstable_size = get_unstable_size(unstable_idx)

+ print(f'Batched CROWN: node {node}, unstable size {unstable_size}')

+ num_batches = (unstable_size + crown_batch_size - 1) // crown_batch_size

ret = []

+ ret_A = {} # if return_A, we will store A here

for i in tqdm(range(num_batches)):

if isinstance(unstable_idx, tuple):

unstable_idx_batch = tuple(

@@ -34,7 +45,7 @@ def batched_backward(

unstable_size_batch, batch_size, *node.output_shape[1:-2], 1, 1],

identity=1, unstable_idx=unstable_idx_batch,

output_shape=[batch_size, *node.output_shape[1:]])

- elif isinstance(node, BoundLinear) or isinstance(node, BoundMatMul):

+ elif isinstance(node, (BoundLinear, BoundMatMul)):

assert C in ['OneHot', None]

C_batch = OneHotC(

[batch_size, unstable_size_batch, *node.output_shape[1:]],

@@ -45,71 +56,154 @@ def batched_backward(

C_batch[0, torch.arange(unstable_size_batch), unstable_idx_batch] = 1.0

C_batch = C_batch.expand(batch_size, -1, -1).view(

batch_size, unstable_size_batch, *output_shape)

- ret.append(self.backward_general(

- C=C_batch, node=node,

+ # overwrite return_A options to run backward general

+ ori_return_A_option = self.return_A

+ self.return_A = return_A

+

+ batch_ret = self.backward_general(

+ node, C_batch,

bound_lower=bound_lower, bound_upper=bound_upper,

average_A=False, need_A_only=False, unstable_idx=unstable_idx_batch,

- verbose=False))

+ verbose=False)

+ ret.append(batch_ret[:2])

+

+ if len(batch_ret) > 2:

+ # A found, we merge A

+ batch_A = batch_ret[2]

+ ret_A = merge_A(batch_A, ret_A)

+

+ # restore return_A options

+ self.return_A = ori_return_A_option

if bound_lower:

lb = torch.cat([item[0].view(batch_size, -1) for item in ret], dim=1)

+ if dense:

+ # In this case, restore_sparse_bounds will not be called.

+ # And thus we restore the shape here.

+ lb = lb.reshape(batch_size, *output_shape)

else:

lb = None

if bound_upper:

ub = torch.cat([item[1].view(batch_size, -1) for item in ret], dim=1)

+ if dense:

+ # In this case, restore_sparse_bounds will not be called.

+ # And thus we restore the shape here.

+ ub = ub.reshape(batch_size, *output_shape)

else:

ub = None

- return lb, ub

+

+ if return_A:

+ return lb, ub, ret_A

+ else:

+ return lb, ub

def backward_general(

- self, C, node=None, bound_lower=True, bound_upper=True, average_A=False,

- need_A_only=False, unstable_idx=None, unstable_size=0, update_mask=None, verbose=True):

+ self: 'BoundedModule',

+ bound_node,

+ C,

+ start_backpropagation_at_node = None,

+ bound_lower=True,

+ bound_upper=True,

+ average_A=False,

+ need_A_only=False,

+ unstable_idx=None,

+ update_mask=None,

+ verbose=True,

+ apply_output_constraints_to: Optional[List[str]] = None,

+ initial_As: Optional[dict] = None,

+ initial_lb: Optional[torch.tensor] = None,

+ initial_ub: Optional[torch.tensor] = None,

+):

+ use_beta_crown = self.bound_opts['optimize_bound_args']['enable_beta_crown']

+

+ if bound_node.are_output_constraints_activated_for_layer(apply_output_constraints_to):

+ assert not use_beta_crown

+ assert not self.cut_used

+ assert initial_As is None

+ assert initial_lb is None

+ assert initial_ub is None

+ return self.backward_general_with_output_constraint(

+ bound_node=bound_node,

+ C=C,

+ start_backporpagation_at_node=start_backpropagation_at_node,

+ bound_lower=bound_lower,

+ bound_upper=bound_upper,

+ average_A=average_A,

+ need_A_only=need_A_only,

+ unstable_idx=unstable_idx,

+ update_mask=update_mask,

+ verbose=verbose,

+ )

+

+ roots = self.roots()

+

+ if start_backpropagation_at_node is None:

+ # When output constraints are used, backward_general_with_output_constraint()

+ # adds additional layers at the end, performs the backpropagation through these,

+ # and then calls backward_general() on the output layer.

+ # In this case, the layer we start from (start_backpropagation_at_node) differs

+ # from the layer that should be bounded (bound_node)

+

+ # When output constraints are not used, the bounded node is the one where

+ # backpropagation starts.

+ start_backpropagation_at_node = bound_node

+

if verbose:

- logger.debug(f'Bound backward from {node.__class__.__name__}({node.name})')

+ logger.debug(f'Bound backward from {start_backpropagation_at_node.__class__.__name__}({start_backpropagation_at_node.name}) '

+ f'to bound {bound_node.__class__.__name__}({bound_node.name})')

if isinstance(C, str):

logger.debug(f' C: {C}')

elif C is not None:

logger.debug(f' C: shape {C.shape}, type {type(C)}')

- _print_time = False

+ _print_time = bool(os.environ.get('AUTOLIRPA_PRINT_TIME', 0))

if isinstance(C, str):

# If C is a str, use batched CROWN. If batched CROWN is not intended to

# be enabled, C must be a explicitly provided non-str object for this function.

- if need_A_only or self.return_A or average_A:

+ if need_A_only or average_A:

raise ValueError(

'Batched CROWN is not compatible with '

- f'need_A_only={need_A_only}, return_A={self.return_A}, '

- f'average_A={average_A}')

- node.lower, node.upper = self.batched_backward(

- node, C, unstable_idx,

- batch_size=self.root[0].value.shape[0],

+ f'need_A_only={need_A_only}, average_A={average_A}')

+ ret = self.batched_backward(

+ bound_node, C, unstable_idx,

+ batch_size=roots[0].value.shape[0],

bound_lower=bound_lower, bound_upper=bound_upper,

)

- return node.lower, node.upper

+ bound_node.lower, bound_node.upper = ret[:2]

+ return ret

- for l in self._modules.values():

- l.lA = l.uA = None

- l.bounded = True

+ for n in self.nodes():

+ n.lA = n.uA = None

- degree_out = get_degrees(node, self.backward_from)

- all_nodes_before = list(degree_out.keys())

-

- C, batch_size, output_dim, output_shape = preprocess_C(C, node)

-

- node.lA = C if bound_lower else None

- node.uA = C if bound_upper else None

- lb = ub = torch.tensor(0., device=self.device)

+ degree_out = get_degrees(start_backpropagation_at_node)

+ C, batch_size, output_dim, output_shape = self._preprocess_C(C, bound_node)

+ if initial_As is None:

+ start_backpropagation_at_node.lA = C if bound_lower else None

+ start_backpropagation_at_node.uA = C if bound_upper else None

+ else:

+ for layer_name, (lA, uA) in initial_As.items():

+ self[layer_name].lA = lA

+ self[layer_name].uA = uA

+ assert start_backpropagation_at_node.lA is not None or start_backpropagation_at_node.uA is not None

+ if initial_lb is None:

+ lb = torch.tensor(0., device=self.device)

+ else:

+ lb = initial_lb

+ if initial_ub is None:

+ ub = torch.tensor(0., device=self.device)

+ else:

+ ub = initial_ub

# Save intermediate layer A matrices when required.

A_record = {}

- queue = deque([node])

+ queue = deque([start_backpropagation_at_node])

while len(queue) > 0:

l = queue.popleft() # backward from l

- l.bounded = True

+ self.backward_from[l.name].append(bound_node)

- if l.name in self.root_name: continue

+ if l.name in self.root_names: continue

# if all the succeeds are done, then we can turn to this node in the

# next iteration.

@@ -142,53 +236,69 @@ def backward_general(

# A matrices are all zero, no need to propagate.

continue

- if isinstance(l, BoundRelu):

- # TODO: unify this interface.

- A, lower_b, upper_b = l.bound_backward(

- l.lA, l.uA, *l.inputs, start_node=node, unstable_idx=unstable_idx,

- beta_for_intermediate_layers=self.intermediate_constr is not None)

- elif isinstance(l, BoundOptimizableActivation):

+ lA, uA = l.lA, l.uA

+ if (l.name != start_backpropagation_at_node.name and use_beta_crown

+ and getattr(l, 'sparse_betas', None)):

+ lA, uA, lbias, ubias = self.beta_crown_backward_bound(

+ l, lA, uA, start_node=start_backpropagation_at_node)

+ lb = lb + lbias

+ ub = ub + ubias

+

+ if isinstance(l, BoundOptimizableActivation):

# For other optimizable activation functions (TODO: unify with ReLU).

- if node.name != self.final_node_name:

- start_shape = node.output_shape[1:]

+ if bound_node.name != self.final_node_name:

+ start_shape = bound_node.output_shape[1:]

else:

start_shape = C.shape[0]

- A, lower_b, upper_b = l.bound_backward(

- l.lA, l.uA, *l.inputs, start_shape=start_shape, start_node=node)

+ l.preserve_mask = update_mask

else:

- A, lower_b, upper_b = l.bound_backward(l.lA, l.uA, *l.inputs)

+ start_shape = None

+ A, lower_b, upper_b = l.bound_backward(

+ lA, uA, *l.inputs,

+ start_node=bound_node, unstable_idx=unstable_idx,

+ start_shape=start_shape)

+

# After propagation through this node, we delete its lA, uA variables.

- if not self.return_A and node.name != self.final_name:

+ if bound_node.name != self.final_name:

del l.lA, l.uA

if _print_time:

+ torch.cuda.synchronize()

time_elapsed = time.time() - start_time

- if time_elapsed > 1e-3:

+ if time_elapsed > 5e-3:

print(l, time_elapsed)

if lb.ndim > 0 and type(lower_b) == Tensor and self.conv_mode == 'patches':

lb, ub, lower_b, upper_b = check_patch_biases(lb, ub, lower_b, upper_b)

lb = lb + lower_b

ub = ub + upper_b

- if self.return_A and self.needed_A_dict and node.name in self.needed_A_dict:

+ if self.return_A and self.needed_A_dict and bound_node.name in self.needed_A_dict:

# FIXME remove [0][0] and [0][1]?

- if len(self.needed_A_dict[node.name]) == 0 or l.name in self.needed_A_dict[node.name]:

- A_record.update({l.name: {

- "lA": A[0][0].transpose(0, 1).detach() if A[0][0] is not None else None,

- "uA": A[0][1].transpose(0, 1).detach() if A[0][1] is not None else None,

- # When not used, lb or ub is tensor(0).

- "lbias": lb.transpose(0, 1).detach() if lb.ndim > 1 else None,

- "ubias": ub.transpose(0, 1).detach() if ub.ndim > 1 else None,

- "unstable_idx": unstable_idx

+ if len(self.needed_A_dict[bound_node.name]) == 0 or l.name in self.needed_A_dict[bound_node.name]:

+ # A could be either patches (in this case we cannot transpose so directly return)

+ # or matrix (in this case we transpose)

+ A_record.update({

+ l.name: {

+ "lA": (

+ A[0][0] if isinstance(A[0][0], Patches)

+ else A[0][0].transpose(0, 1).detach()

+ ) if A[0][0] is not None else None,

+ "uA": (

+ A[0][1] if isinstance(A[0][1], Patches)

+ else A[0][1].transpose(0, 1).detach()

+ ) if A[0][1] is not None else None,

+ # When not used, lb or ub is tensor(0).

+ "lbias": lb.transpose(0, 1).detach() if lb.ndim > 1 else None,

+ "ubias": ub.transpose(0, 1).detach() if ub.ndim > 1 else None,

+ "unstable_idx": unstable_idx

}})

# FIXME: solve conflict with the following case

- self.A_dict.update({node.name: A_record})

- if need_A_only and set(self.needed_A_dict[node.name]) == set(A_record.keys()):

+ self.A_dict.update({bound_node.name: A_record})

+ if need_A_only and set(self.needed_A_dict[bound_node.name]) == set(A_record.keys()):

# We have collected all A matrices we need. We can return now!

- self.A_dict.update({node.name: A_record})

+ self.A_dict.update({bound_node.name: A_record})

# Do not concretize to save time. We just need the A matrices.

- # return A matrix as a dict: {node.name: [A_lower, A_upper]}

+ # return A matrix as a dict: {node_start.name: [A_lower, A_upper]}

return None, None, self.A_dict

-

for i, l_pre in enumerate(l.inputs):

add_bound(l, l_pre, lA=A[i][0], uA=A[i][1])

@@ -197,26 +307,29 @@ def backward_general(

if ub.ndim >= 2:

ub = ub.transpose(0, 1)

- if self.return_A and self.needed_A_dict and node.name in self.needed_A_dict:

+ if self.return_A and self.needed_A_dict and bound_node.name in self.needed_A_dict:

save_A_record(

- node, A_record, self.A_dict, self.root, self.needed_A_dict[node.name],

+ bound_node, A_record, self.A_dict, roots,

+ self.needed_A_dict[bound_node.name],

lb=lb, ub=ub, unstable_idx=unstable_idx)

# TODO merge into `concretize`

- if self.cut_used and getattr(self, 'cut_module', None) is not None and self.cut_module.x_coeffs is not None:

+ if (self.cut_used and getattr(self, 'cut_module', None) is not None

+ and self.cut_module.x_coeffs is not None):

# propagate input neuron in cut constraints

- self.root[0].lA, self.root[0].uA = self.cut_module.input_cut(

- node, self.root[0].lA, self.root[0].uA, self.root[0].lower.size()[1:], unstable_idx,

+ roots[0].lA, roots[0].uA = self.cut_module.input_cut(

+ bound_node, roots[0].lA, roots[0].uA, roots[0].lower.size()[1:], unstable_idx,

batch_mask=update_mask)

- lb, ub = concretize(

- lb, ub, node, self.root, batch_size, output_dim,

- bound_lower, bound_upper, average_A=average_A)

+ lb, ub = concretize(self, batch_size, output_dim, lb, ub,

+ bound_lower, bound_upper,

+ average_A=average_A, node_start=bound_node)

# TODO merge into `concretize`

- if self.cut_used and getattr(self, "cut_module", None) is not None and self.cut_module.cut_bias is not None:

+ if (self.cut_used and getattr(self, "cut_module", None) is not None

+ and self.cut_module.cut_bias is not None):

# propagate cut bias in cut constraints

- lb, ub = self.cut_module.bias_cut(node, lb, ub, unstable_idx, batch_mask=update_mask)

+ lb, ub = self.cut_module.bias_cut(bound_node, lb, ub, unstable_idx, batch_mask=update_mask)

if lb is not None and ub is not None and ((lb-ub)>0).sum().item() > 0:

# make sure there is no bug for cut constraints propagation

print(f"Warning: lb is larger than ub with diff: {(lb-ub)[(lb-ub)>0].max().item()}")

@@ -240,23 +353,26 @@ def get_unstable_size(unstable_idx):

return unstable_idx.numel()

-def check_optimized_variable_sparsity(self, node):

- alpha_sparsity = None # unknown.

+def check_optimized_variable_sparsity(self: 'BoundedModule', node):

+ alpha_sparsity = None # unknown, optimizable variables are not created for this node.

for relu in self.relus:

- if hasattr(relu, 'alpha_lookup_idx') and node.name in relu.alpha_lookup_idx:

+ # FIXME: this hardcoded for ReLUs. Need to support other optimized nonlinear functions.

+ # alpha_lookup_idx is only created for sparse-spec alphas.

+ if relu.alpha_lookup_idx is not None and node.name in relu.alpha_lookup_idx:

if relu.alpha_lookup_idx[node.name] is not None:

# This node was created with sparse alpha

alpha_sparsity = True

+ elif self.bound_opts['optimize_bound_args']['use_shared_alpha']:

+ # Shared alpha, the spec dimension is 1, and sparsity can be supported.

+ alpha_sparsity = True

else:

alpha_sparsity = False

break

- # print(f'node {node.name} alpha sparsity {alpha_sparsity}')

return alpha_sparsity

-def get_sparse_C(

- self, node, sparse_intermediate_bounds=True, ref_intermediate_lb=None,

- ref_intermediate_ub=None):

+def get_sparse_C(self: 'BoundedModule', node, sparse_intermediate_bounds=True,

+ ref_intermediate_lb=None, ref_intermediate_ub=None):

sparse_conv_intermediate_bounds = self.bound_opts.get('sparse_conv_intermediate_bounds', False)

minimum_sparsity = self.bound_opts.get('minimum_sparsity', 0.9)

crown_batch_size = self.bound_opts.get('crown_batch_size', 1e9)

@@ -276,8 +392,10 @@ def get_sparse_C(

if (isinstance(node, BoundLinear) or isinstance(node, BoundMatMul)) and int(

os.environ.get('AUTOLIRPA_USE_FULL_C', 0)) == 0:

if sparse_intermediate_bounds:

- # If we are doing bound refinement and reference bounds are given, we only refine unstable neurons.

- # Also, if we are checking against LP solver we will refine all neurons and do not use this optimization.

+ # If we are doing bound refinement and reference bounds are given,

+ # we only refine unstable neurons.

+ # Also, if we are checking against LP solver we will refine all

+ # neurons and do not use this optimization.

# For each batch element, we find the unstable neurons.

unstable_idx, unstable_size = self.get_unstable_locations(

ref_intermediate_lb, ref_intermediate_ub)

@@ -289,9 +407,12 @@ def get_sparse_C(

# Create C in batched CROWN

newC = 'OneHot'

reduced_dim = True

- elif (unstable_size <= minimum_sparsity * dim and unstable_size > 0 and alpha_is_sparse is None) or alpha_is_sparse:

- # When we already have sparse alpha for this layer, we always use sparse C. Otherwise we determine it by sparsity.

- # Create an abstract C matrix, the unstable_idx are the non-zero elements in specifications for all batches.

+ elif ((0 < unstable_size <= minimum_sparsity * dim

+ and alpha_is_sparse is None) or alpha_is_sparse):

+ # When we already have sparse alpha for this layer, we always

+ # use sparse C. Otherwise we determine it by sparsity.

+ # Create an abstract C matrix, the unstable_idx are the non-zero

+ # elements in specifications for all batches.

newC = OneHotC(

[batch_size, unstable_size, *node.output_shape[1:]],

self.device, unstable_idx, None)

@@ -300,7 +421,10 @@ def get_sparse_C(

unstable_idx = None

del ref_intermediate_lb, ref_intermediate_ub

if not reduced_dim:

- newC = eyeC([batch_size, dim, *node.output_shape[1:]], self.device)

+ if dim > crown_batch_size:

+ newC = 'eye'

+ else:

+ newC = eyeC([batch_size, dim, *node.output_shape[1:]], self.device)

elif node.patches_start and node.mode == "patches":

if sparse_intermediate_bounds:

unstable_idx, unstable_size = self.get_unstable_locations(

@@ -323,7 +447,8 @@ def get_sparse_C(

# elements in specifications for all batches.

# The shape of patches is [unstable_size, batch, C, H, W].

newC = Patches(

- shape=[unstable_size, batch_size, *node.output_shape[1:-2], 1, 1],

+ shape=[unstable_size, batch_size, *node.output_shape[1:-2],

+ 1, 1],

identity=1, unstable_idx=unstable_idx,

output_shape=[batch_size, *node.output_shape[1:]])

reduced_dim = True

@@ -336,7 +461,8 @@ def get_sparse_C(

None, 1, 0, [node.output_shape[1], batch_size, *node.output_shape[2:],

*node.output_shape[1:-2], 1, 1], 1,

output_shape=[batch_size, *node.output_shape[1:]])

- elif isinstance(node, (BoundAdd, BoundSub)) and node.mode == "patches":

+ elif (isinstance(node, (BoundAdd, BoundSub)) and node.mode == "patches"

+ and len(node.output_shape) >= 4):

# FIXME: BoundAdd does not always have patches. Need to use a better way

# to determine patches mode.

# FIXME: We should not hardcode BoundAdd here!

@@ -361,9 +487,12 @@ def get_sparse_C(

dtype=list(self.parameters())[0].dtype)).view(

num_channel, 1, 1, 1, num_channel, 1, 1)

# Expand to (out_c, 1, unstable_size, out_c, 1, 1).

- patches = patches.expand(-1, 1, node.output_shape[-2], node.output_shape[-1], -1, 1, 1)

- patches = patches[unstable_idx[0], :, unstable_idx[1], unstable_idx[2]]

- # Expand with the batch dimension. Final shape (unstable_size, batch_size, out_c, 1, 1).

+ patches = patches.expand(-1, 1, node.output_shape[-2],

+ node.output_shape[-1], -1, 1, 1)

+ patches = patches[unstable_idx[0], :,

+ unstable_idx[1], unstable_idx[2]]

+ # Expand with the batch dimension. Final shape

+ # (unstable_size, batch_size, out_c, 1, 1).

patches = patches.expand(-1, batch_size, -1, -1, -1)

newC = Patches(

patches, 1, 0, patches.shape, unstable_idx=unstable_idx,

@@ -380,8 +509,10 @@ def get_sparse_C(

dtype=list(self.parameters())[0].dtype)).view(

num_channel, 1, 1, 1, num_channel, 1, 1)

# Expand to (out_c, batch, out_h, out_w, out_c, 1, 1).

- patches = patches.expand(-1, batch_size, node.output_shape[-2], node.output_shape[-1], -1, 1, 1)

- newC = Patches(patches, 1, 0, patches.shape, output_shape=[batch_size, *node.output_shape[1:]])

+ patches = patches.expand(-1, batch_size, node.output_shape[-2],

+ node.output_shape[-1], -1, 1, 1)

+ newC = Patches(patches, 1, 0, patches.shape, output_shape=[

+ batch_size, *node.output_shape[1:]])

else:

if sparse_intermediate_bounds:

unstable_idx, unstable_size = self.get_unstable_locations(

@@ -394,28 +525,37 @@ def get_sparse_C(

# Create in C in batched CROWN

newC = 'eye'

reduced_dim = True

- elif (unstable_size <= minimum_sparsity * dim and alpha_is_sparse is None) or alpha_is_sparse:

+ elif (unstable_size <= minimum_sparsity * dim

+ and alpha_is_sparse is None) or alpha_is_sparse:

newC = torch.zeros([1, unstable_size, dim], device=self.device)

# Fill the corresponding elements to 1.0

newC[0, torch.arange(unstable_size), unstable_idx] = 1.0

- newC = newC.expand(batch_size, -1, -1).view(batch_size, unstable_size, *node.output_shape[1:])

+ newC = newC.expand(batch_size, -1, -1).view(

+ batch_size, unstable_size, *node.output_shape[1:])

reduced_dim = True

else:

unstable_idx = None

del ref_intermediate_lb, ref_intermediate_ub

if not reduced_dim:

if dim > 1000:

- warnings.warn(f"Creating an identity matrix with size {dim}x{dim} for node {node}. This may indicate poor performance for bound computation. If you see this message on a small network please submit a bug report.", stacklevel=2)

- newC = torch.eye(dim, device=self.device, dtype=list(self.parameters())[0].dtype) \

- .unsqueeze(0).expand(batch_size, -1, -1) \

- .view(batch_size, dim, *node.output_shape[1:])

+ warnings.warn(

+ f"Creating an identity matrix with size {dim}x{dim} for node {node}. "

+ "This may indicate poor performance for bound computation. "

+ "If you see this message on a small network please submit "

+ "a bug report.", stacklevel=2)

+ if dim > crown_batch_size:

+ newC = 'eye'

+ else:

+ newC = torch.eye(dim, device=self.device).unsqueeze(0).expand(

+ batch_size, -1, -1

+ ).view(batch_size, dim, *node.output_shape[1:])

return newC, reduced_dim, unstable_idx, unstable_size

-def restore_sparse_bounds(

- self, node, unstable_idx, unstable_size, ref_intermediate_lb, ref_intermediate_ub,

- new_lower=None, new_upper=None):

+def restore_sparse_bounds(self: 'BoundedModule', node, unstable_idx,

+ unstable_size, ref_intermediate_lb,

+ ref_intermediate_ub, new_lower=None, new_upper=None):

batch_size = self.batch_size

if unstable_size == 0:

# No unstable neurons. Skip the update.

@@ -447,22 +587,26 @@ def restore_sparse_bounds(

node.upper = upper.view(batch_size, *node.output_shape[1:])

-def get_degrees(node_start, backward_from):

+def get_degrees(node_start):

+ if not isinstance(node_start, list):

+ node_start = [node_start]

degrees = {}

- queue = deque([node_start])

- node_start.bounded = False

+ added = {}

+ queue = deque()

+ for node in node_start:

+ queue.append(node)

+ added[node.name] = True

while len(queue) > 0:

l = queue.popleft()

- backward_from[l.name].append(node_start)

for l_pre in l.inputs:

degrees[l_pre.name] = degrees.get(l_pre.name, 0) + 1

- if l_pre.bounded:

- l_pre.bounded = False

+ if not added.get(l_pre.name, False):

queue.append(l_pre)

+ added[l_pre.name] = True

return degrees

-def preprocess_C(C, node):

+def _preprocess_C(self: 'BoundedModule', C, node):

if isinstance(C, Patches):

if C.unstable_idx is None:

# Patches have size (out_c, batch, out_h, out_w, c, h, w).

@@ -478,9 +622,11 @@ def preprocess_C(C, node):

else:

batch_size, output_dim = C.shape[:2]

- # The C matrix specified by the user has shape (batch, spec) but internally we have (spec, batch) format.

+ # The C matrix specified by the user has shape (batch, spec)

+ # but internally we have (spec, batch) format.

if not isinstance(C, (eyeC, Patches, OneHotC)):

- C = C.transpose(0, 1)

+ C = C.transpose(0, 1).reshape(

+ output_dim, batch_size, *node.output_shape[1:])

elif isinstance(C, eyeC):

C = C._replace(shape=(C.shape[1], C.shape[0], *C.shape[2:]))

elif isinstance(C, OneHotC):

@@ -506,65 +652,61 @@ def preprocess_C(C, node):

return C, batch_size, output_dim, output_shape

-def concretize(lb, ub, node, root, batch_size, output_dim, bound_lower=True, bound_upper=True, average_A=False):

-

- for i in range(len(root)):

- if root[i].lA is None and root[i].uA is None: continue

- if average_A and isinstance(root[i], BoundParams):

- lA = root[i].lA.mean(node.batch_dim + 1, keepdim=True).expand(root[i].lA.shape) if bound_lower else None

- uA = root[i].uA.mean(node.batch_dim + 1, keepdim=True).expand(root[i].uA.shape) if bound_upper else None

+def concretize(self, batch_size, output_dim, lb, ub=None,

+ bound_lower=True, bound_upper=True,

+ average_A=False, node_start=None):

+ roots = self.roots()

+ for i in range(len(roots)):

+ if roots[i].lA is None and roots[i].uA is None: continue

+ if average_A and isinstance(roots[i], BoundParams):

+ lA = roots[i].lA.mean(

+ node_start.batch_dim + 1, keepdim=True

+ ).expand(roots[i].lA.shape) if bound_lower else None

+ uA = roots[i].uA.mean(

+ node_start.batch_dim + 1, keepdim=True

+ ).expand(roots[i].uA.shape) if bound_upper else None

else:

- lA, uA = root[i].lA, root[i].uA

- if not isinstance(root[i].lA, eyeC) and not isinstance(root[i].lA, Patches):

- lA = root[i].lA.reshape(output_dim, batch_size, -1).transpose(0, 1) if bound_lower else None

- if not isinstance(root[i].uA, eyeC) and not isinstance(root[i].uA, Patches):

- uA = root[i].uA.reshape(output_dim, batch_size, -1).transpose(0, 1) if bound_upper else None

- if hasattr(root[i], 'perturbation') and root[i].perturbation is not None:

- if isinstance(root[i], BoundParams):

+ lA, uA = roots[i].lA, roots[i].uA

+ if not isinstance(roots[i].lA, eyeC) and not isinstance(roots[i].lA, Patches):

+ lA = roots[i].lA.reshape(output_dim, batch_size, -1).transpose(0, 1) if bound_lower else None

+ if not isinstance(roots[i].uA, eyeC) and not isinstance(roots[i].uA, Patches):

+ uA = roots[i].uA.reshape(output_dim, batch_size, -1).transpose(0, 1) if bound_upper else None

+ if hasattr(roots[i], 'perturbation') and roots[i].perturbation is not None:

+ if isinstance(roots[i], BoundParams):

# add batch_size dim for weights node

- lb = lb + root[i].perturbation.concretize(

- root[i].center.unsqueeze(0), lA,

- sign=-1, aux=root[i].aux) if bound_lower else None

- ub = ub + root[i].perturbation.concretize(

- root[i].center.unsqueeze(0), uA,

- sign=+1, aux=root[i].aux) if bound_upper else None

+ lb = lb + roots[i].perturbation.concretize(

+ roots[i].center.unsqueeze(0), lA,

+ sign=-1, aux=roots[i].aux) if bound_lower else None

+ ub = ub + roots[i].perturbation.concretize(

+ roots[i].center.unsqueeze(0), uA,

+ sign=+1, aux=roots[i].aux) if bound_upper else None

else:

- lb = lb + root[i].perturbation.concretize(

- root[i].center, lA, sign=-1, aux=root[i].aux) if bound_lower else None

- ub = ub + root[i].perturbation.concretize(

- root[i].center, uA, sign=+1, aux=root[i].aux) if bound_upper else None

+ lb = lb + roots[i].perturbation.concretize(

+ roots[i].center, lA, sign=-1, aux=roots[i].aux) if bound_lower else None

+ ub = ub + roots[i].perturbation.concretize(

+ roots[i].center, uA, sign=+1, aux=roots[i].aux) if bound_upper else None

else:

- fv = root[i].forward_value

- if type(root[i]) == BoundInput:

+ fv = roots[i].forward_value

+ if type(roots[i]) == BoundInput:

# Input node with a batch dimension

batch_size_ = batch_size

else:

# Parameter node without a batch dimension

batch_size_ = 1

- if bound_lower:

- if isinstance(lA, eyeC):

- lb = lb + fv.view(batch_size_, -1)

- elif isinstance(lA, Patches):

- lb = lb + lA.matmul(fv, input_shape=root[0].center.shape)

- elif type(root[i]) == BoundInput:

- lb = lb + lA.matmul(fv.view(batch_size_, -1, 1)).squeeze(-1)

+ def _add_constant(A, b):

+ if isinstance(A, eyeC):

+ b = b + fv.view(batch_size_, -1)

+ elif isinstance(A, Patches):

+ b = b + A.matmul(fv, input_shape=roots[0].center.shape)

+ elif type(roots[i]) == BoundInput:

+ b = b + A.matmul(fv.view(batch_size_, -1, 1)).squeeze(-1)

else:

- lb = lb + lA.matmul(fv.view(-1, 1)).squeeze(-1)

- else:

- lb = None

-

- if bound_upper:

- if isinstance(uA, eyeC):

- ub = ub + fv.view(batch_size_, -1)

- elif isinstance(uA, Patches):

- ub = ub + uA.matmul(fv, input_shape=root[0].center.shape)

- elif type(root[i]) == BoundInput:

- ub = ub + uA.matmul(fv.view(batch_size_, -1, 1)).squeeze(-1)

- else:

- ub = ub + uA.matmul(fv.view(-1, 1)).squeeze(-1)

- else:

- ub = None

+ b = b + A.matmul(fv.view(-1, 1)).squeeze(-1)

+ return b

+

+ lb = _add_constant(lA, lb) if bound_lower else None

+ ub = _add_constant(uA, ub) if bound_upper else None

return lb, ub

@@ -581,7 +723,7 @@ def addA(A1, A2):

raise NotImplementedError(f'Unsupported types for A1 ({type(A1)}) and A2 ({type(A2)}')

-def add_bound(node, node_pre, lA, uA):

+def add_bound(node, node_pre, lA=None, uA=None):

"""Propagate lA and uA to a preceding node."""

if lA is not None:

if node_pre.lA is None:

@@ -602,25 +744,6 @@ def add_bound(node, node_pre, lA, uA):

node_pre.uA = addA(node_pre.uA, uA)

-def get_beta_watch_list(intermediate_constr, all_nodes_before):

- beta_watch_list = defaultdict(dict)

- if intermediate_constr is not None:

- # Intermediate layer betas are handled in two cases.

- # First, if the beta split is before this node, we don't need to do anything special;

- # it will done in BoundRelu.

- # Second, if the beta split after this node, we need to modify the A matrix

- # during bound propagation to reflect beta after this layer.

- for k in intermediate_constr:

- if k not in all_nodes_before:

- # The second case needs special care: we add all such splits in a watch list.

- # However, after first occurance of a layer in the watchlist,

- # beta_watch_list will be deleted and the A matrix from split constraints

- # has been added and will be propagated to later layers.

- for kk, vv in intermediate_constr[k].items():

- beta_watch_list[kk][k] = vv

- return beta_watch_list

-

-

def add_constant_node(lb, ub, node):

new_lb = node.get_bias(node.lA, node.forward_value)

new_ub = node.get_bias(node.uA, node.forward_value)

@@ -633,27 +756,27 @@ def add_constant_node(lb, ub, node):

return lb, ub

-def save_A_record(node, A_record, A_dict, root, needed_A_dict, lb, ub, unstable_idx):

+def save_A_record(node, A_record, A_dict, roots, needed_A_dict, lb, ub, unstable_idx):

root_A_record = {}

- for i in range(len(root)):

- if root[i].lA is None and root[i].uA is None: continue

- if root[i].name in needed_A_dict:

- if root[i].lA is not None:

- if isinstance(root[i].lA, Patches):

- _lA = root[i].lA

+ for i in range(len(roots)):

+ if roots[i].lA is None and roots[i].uA is None: continue

+ if roots[i].name in needed_A_dict:

+ if roots[i].lA is not None:

+ if isinstance(roots[i].lA, Patches):

+ _lA = roots[i].lA

else:

- _lA = root[i].lA.transpose(0, 1).detach()

+ _lA = roots[i].lA.transpose(0, 1).detach()

else:

_lA = None

- if root[i].uA is not None:

- if isinstance(root[i].uA, Patches):

- _uA = root[i].uA

+ if roots[i].uA is not None:

+ if isinstance(roots[i].uA, Patches):

+ _uA = roots[i].uA

else:

- _uA = root[i].uA.transpose(0, 1).detach()

+ _uA = roots[i].uA.transpose(0, 1).detach()

else:

_uA = None

- root_A_record.update({root[i].name: {

+ root_A_record.update({roots[i].name: {

"lA": _lA,

"uA": _uA,

# When not used, lb or ub is tensor(0). They have been transposed above.

@@ -678,8 +801,10 @@ def select_unstable_idx(ref_intermediate_lb, ref_intermediate_ub, unstable_locs,

return indices_selected

-def get_unstable_locations(

- self, ref_intermediate_lb, ref_intermediate_ub, conv=False, channel_only=False):

+def get_unstable_locations(self: 'BoundedModule', ref_intermediate_lb,

+ ref_intermediate_ub, conv=False, channel_only=False):

+ # FIXME (2023): This function should be a member class of the Bound object, since the

+ # definition of unstable neurons depends on the activation function.

max_crown_size = self.bound_opts.get('max_crown_size', int(1e9))

# For conv layer we only check the case where all neurons are active/inactive.

unstable_masks = torch.logical_and(ref_intermediate_lb < 0, ref_intermediate_ub > 0)

@@ -694,9 +819,9 @@ def get_unstable_locations(

else:

if not conv and unstable_masks.ndim > 2:

# Flatten the conv layer shape.

- unstable_masks = unstable_masks.view(unstable_masks.size(0), -1)

- ref_intermediate_lb = ref_intermediate_lb.view(ref_intermediate_lb.size(0), -1)

- ref_intermediate_ub = ref_intermediate_ub.view(ref_intermediate_ub.size(0), -1)

+ unstable_masks = unstable_masks.reshape(unstable_masks.size(0), -1)

+ ref_intermediate_lb = ref_intermediate_lb.reshape(ref_intermediate_lb.size(0), -1)

+ ref_intermediate_ub = ref_intermediate_ub.reshape(ref_intermediate_ub.size(0), -1)

unstable_locs = unstable_masks.sum(dim=0).bool()

if conv:

# Now converting it to indices for these unstable nuerons.

@@ -719,45 +844,85 @@ def get_unstable_locations(

def get_alpha_crown_start_nodes(

- self, node, c=None, share_slopes=False, final_node_name=None):

+ self: 'BoundedModule',

+ node,

+ c=None,

+ share_alphas=False,

+ final_node_name=None,

+ backward_from_node: Bound = None,

+ ):

+ """

+ Given a layer "node", return a list of following nodes after this node whose bounds

+ will propagate through this node. Each element in the list is a tuple with 3 elements:

+ (following_node_name, following_node_shape, unstable_idx)

+ """

# When use_full_conv_alpha is True, conv layers do not share alpha.

sparse_intermediate_bounds = self.bound_opts.get('sparse_intermediate_bounds', False)

use_full_conv_alpha_thresh = self.bound_opts.get('use_full_conv_alpha_thresh', 512)

start_nodes = []

- for nj in self.backward_from[node.name]: # Pre-activation layers.

+ # In most cases, backward_from_node == node

+ # Only if output constraints are used, will they differ: the node that should be

+ # bounded (node) needs alphas for *all* layers, not just those behind it.

+ # In this case, backward_from_node will be the input node

+ if backward_from_node != node:

+ assert len(self.bound_opts['optimize_bound_args']['apply_output_constraints_to']) > 0

+

+ for nj in self.backward_from[backward_from_node.name]: # Pre-activation layers.

unstable_idx = None

- use_sparse_conv = None

+ use_sparse_conv = None # Whether a sparse-spec alpha is used for a conv output node. None for non-conv output node.

use_full_conv_alpha = self.bound_opts.get('use_full_conv_alpha', False)

- if (sparse_intermediate_bounds and isinstance(node, BoundRelu)

- and nj.name != final_node_name and not share_slopes):

+

+ # Find the indices of unstable neuron, used for create sparse-feature alpha.

+ if (sparse_intermediate_bounds

+ and isinstance(node, BoundOptimizableActivation)

+ and nj.name != final_node_name and not share_alphas):

# Create sparse optimization variables for intermediate neurons.

- if ((isinstance(nj, BoundLinear) or isinstance(nj, BoundMatMul))

- and int(os.environ.get('AUTOLIRPA_USE_FULL_C', 0)) == 0):

- # unstable_idx has shape [neuron_size_of_nj]. Batch dimension is reduced.

- unstable_idx, _ = self.get_unstable_locations(nj.lower, nj.upper)

- elif isinstance(nj, (BoundConv, BoundAdd, BoundSub, BoundBatchNormalization)) and nj.mode == 'patches':

- if nj.name in node.patch_size:

- # unstable_idx has shape [channel_size_of_nj]. Batch and spatial dimensions are reduced.

- unstable_idx, _ = self.get_unstable_locations(

- nj.lower, nj.upper, channel_only=not use_full_conv_alpha, conv=True)

- use_sparse_conv = False # alpha is shared among channels. Sparse-spec alpha in hw dimension not used.

- if use_full_conv_alpha and unstable_idx[0].size(0) > use_full_conv_alpha_thresh:

- # Too many unstable neurons. Using shared alpha per channel.

- unstable_idx, _ = self.get_unstable_locations(

- nj.lower, nj.upper, channel_only=True, conv=True)

- use_full_conv_alpha = False

- else:

- # matrix mode for conv layers.

- # unstable_idx has shape [c_out * h_out * w_out]. Batch dimension is reduced.

+ # These are called "sparse-spec" alpha because we only create alpha only for

+ # the intermediate of final output nodes whose bounds are needed.

+ # "sparse-spec" alpha makes sense only for piece-wise linear functions.

+ # For other intermediate nodes, there is no "unstable" or "stable" neuron.

+ # FIXME: whether an layer has unstable/stable neurons should be in Bound obj.

+ # FIXME: get_unstable_locations should be a member class of ReLU.

+ if len(nj.output_name) == 1 and isinstance(self[nj.output_name[0]], (BoundRelu, BoundSignMerge, BoundMaxPool)):

+ if ((isinstance(nj, (BoundLinear, BoundMatMul)))

+ and int(os.environ.get('AUTOLIRPA_USE_FULL_C', 0)) == 0):

+ # unstable_idx has shape [neuron_size_of_nj]. Batch dimension is reduced.

unstable_idx, _ = self.get_unstable_locations(nj.lower, nj.upper)

- use_sparse_conv = True # alpha is not shared among channels, and is sparse in spec dimension.

+ elif isinstance(nj, (BoundConv, BoundAdd, BoundSub, BoundBatchNormalization)) and nj.mode == 'patches':

+ if nj.name in node.patch_size:

+ # unstable_idx has shape [channel_size_of_nj]. Batch and spatial dimensions are reduced.

+ unstable_idx, _ = self.get_unstable_locations(

+ nj.lower, nj.upper, channel_only=not use_full_conv_alpha, conv=True)

+ use_sparse_conv = False # alpha is shared among channels. Sparse-spec alpha in hw dimension not used.

+ if use_full_conv_alpha and unstable_idx[0].size(0) > use_full_conv_alpha_thresh:

+ # Too many unstable neurons. Using shared alpha per channel.

+ unstable_idx, _ = self.get_unstable_locations(

+ nj.lower, nj.upper, channel_only=True, conv=True)

+ use_full_conv_alpha = False

+ else:

+ # Matrix mode for conv layers. Although the bound propagation started with patches mode,

+ # when A matrix is propagated to this layer, it might become a dense matrix since patches

+ # can be come very large after many layers. In this case,

+ # unstable_idx has shape [c_out * h_out * w_out]. Batch dimension is reduced.

+ unstable_idx, _ = self.get_unstable_locations(nj.lower, nj.upper)

+ use_sparse_conv = True # alpha is not shared among channels, and is sparse in spec dimension.

+ else:

+ # FIXME: we should not check for fixed names here. Need to enable patches mode more generally.

+ if isinstance(nj, (BoundConv, BoundAdd, BoundSub, BoundBatchNormalization)) and nj.mode == 'patches':

+ use_sparse_conv = False # Sparse-spec alpha can never be used, because it is not a ReLU activation.

+

if nj.name == final_node_name:

+ # Final layer, always the number of specs as the shape.

size_final = self[final_node_name].output_shape[-1] if c is None else c.size(1)

- start_nodes.append((final_node_name, size_final, None))

+ # The 4-th element indicates that this start node is the final node,

+ # which may be utilized by operators that do not know the name of

+ # the final node.

+ start_nodes.append((final_node_name, size_final, None, True))

continue

- if share_slopes:

- # all intermediate neurons from the same layer share the same set of slopes.

+

+ if share_alphas:

+ # all intermediate neurons from the same layer share the same set of alphas.

output_shape = 1

elif isinstance(node, BoundOptimizableActivation) and node.patch_size and nj.name in node.patch_size:

# Patches mode. Use output channel size as the spec size. This still shares some alpha, but better than no sharing.

@@ -770,8 +935,75 @@ def get_alpha_crown_start_nodes(

output_shape = node.patch_size[nj.name][0]

assert not sparse_intermediate_bounds or use_sparse_conv is False # Double check our assumption holds. If this fails, then we created wrong shapes for alpha.

else:

- # Output is linear layer, or patch converted to matrix.

+ # Output is linear layer (use_sparse_conv = None), or patch converted to matrix (use_sparse_conv = True).

assert not sparse_intermediate_bounds or use_sparse_conv is not False # Double check our assumption holds. If this fails, then we created wrong shapes for alpha.

output_shape = nj.lower.shape[1:] # FIXME: for non-relu activations it's still expecting a prod.

- start_nodes.append((nj.name, output_shape, unstable_idx))

+ start_nodes.append((nj.name, output_shape, unstable_idx, False))

return start_nodes

+

+

+def merge_A(batch_A, ret_A):

+ for key0 in batch_A:

+ if key0 not in ret_A: ret_A[key0] = {}

+ for key1 in batch_A[key0]:

+ value = batch_A[key0][key1]

+ if key1 not in ret_A[key0]:

+ # create:

+ ret_A[key0].update({

+ key1: {

+ "lA": value["lA"],

+ "uA": value["uA"],

+ "lbias": value["lbias"],

+ "ubias": value["ubias"],

+ "unstable_idx": value["unstable_idx"]

+ }

+ })

+ elif key0 == node.name:

+ # merge:

+ # the batch splitting only happens for current node, i.e.,

+ # for other nodes the returned lA should be the same across different batches

+ # so no need to repeatly merge them

+ exist = ret_A[key0][key1]

+

+ if exist["unstable_idx"] is not None:

+ if isinstance(exist["unstable_idx"], torch.Tensor):

+ merged_unstable = torch.cat([

+ exist["unstable_idx"],

+ value['unstable_idx']], dim=0)

+ elif isinstance(exist["unstable_idx"], tuple):

+ if exist["unstable_idx"]:

+ merged_unstable = tuple([

+ torch.cat([exist["unstable_idx"][idx],

+ value['unstable_idx'][idx]], dim=0)

+ for idx in range(len(exist['unstable_idx']))]

+ )

+ else:

+ merged_unstable = None

+ else:

+ raise NotImplementedError(

+ f'Unsupported type {type(exist["unstable_idx"])}')

+ else:

+ merged_unstable = None

+ merge_dict = {"unstable_idx": merged_unstable}

+ for name in ["lA", "uA"]:

+ if exist[name] is not None:

+ if isinstance(exist[name], torch.Tensor):

+ # for matrix the spec dim is 1

+ merge_dict[name] = torch.cat([exist[name], value[name]], dim=1)

+ else:

+ assert isinstance(exist[name], Patches)

+ # for patches the spec dim`is 0

+ merge_dict[name] = exist[name].create_similar(

+ torch.cat([exist[name].patches, value[name].patches], dim=0),

+ unstable_idx=merged_unstable

+ )

+ else:

+ merge_dict[name] = None

+ for name in ["lbias", "ubias"]:

+ if exist[name] is not None:

+ # for bias the spec dim in 1

+ merge_dict[name] = torch.cat([exist[name], value[name]], dim=1)

+ else:

+ merge_dict[name] = None

+ ret_A[key0][key1] = merge_dict

+ return ret_A

diff --git a/auto_LiRPA/beta_crown.py b/auto_LiRPA/beta_crown.py

index 6be6dc0..ad4295c 100644

--- a/auto_LiRPA/beta_crown.py

+++ b/auto_LiRPA/beta_crown.py

@@ -1,48 +1,238 @@

+from collections import OrderedDict

import torch

+from torch import Tensor

+from .patches import Patches, inplace_unfold

+from typing import TYPE_CHECKING

+if TYPE_CHECKING:

+ from .bound_general import BoundedModule

-def beta_bias(self):

- batch_size = len(self.relus[-1].split_beta)

- batch = int(batch_size/2)

- bias = torch.zeros((batch_size, 1), device=self.device)

- for m in self.relus:

- if not m.used or not m.perturbed:

+

+class SparseBeta:

+ def __init__(self, shape, bias=False, betas=None, device='cpu'):

+ self.device = device

+ self.val = torch.zeros(shape)

+ self.loc = torch.zeros(shape, dtype=torch.long, device=device)

+ self.sign = torch.zeros(shape, device=device)

+ self.bias = torch.zeros(shape) if bias else None

+ if betas:

+ for bi in range(len(betas)):

+ if betas[bi] is not None:

+ self.val[bi, :len(betas[bi])] = betas[bi]

+ self.val = self.val.detach().to(

+ device, non_blocking=True).requires_grad_()

+

+ def apply_splits(self, history, key):

+ for bi in range(len(history)):

+ # Add history splits. (layer, neuron) is the current decision.

+ split_locs, split_coeffs = history[bi][key][:2]

+ split_len = len(split_locs)

+ if split_len > 0:

+ self.sign[bi, :split_len] = torch.as_tensor(

+ split_coeffs, device=self.device)

+ self.loc[bi, :split_len] = torch.as_tensor(

+ split_locs, device=self.device)

+ if self.bias is not None:

+ split_bias = history[bi][key][2]

+ self.bias[bi, :split_len] = torch.as_tensor(

+ split_bias, device=self.device)

+ self.loc = self.loc.to(device=self.device, non_blocking=True)

+ self.sign = self.sign.to(device=self.device, non_blocking=True)

+ if self.bias is not None:

+ self.bias = self.bias.to(device=self.device, non_blocking=True)

+

+

+def get_split_nodes(self: 'BoundedModule', input_split=False):

+ self.split_nodes = []

+ self.split_activations = {}

+ splittable_activations = self.get_splittable_activations()

+ self._set_used_nodes(self[self.final_name])

+ for layer in self.layers_requiring_bounds:

+ split_activations_ = []

+ for activation_name in layer.output_name:

+ activation = self[activation_name]

+ if activation in splittable_activations:

+ split_activations_.append(

+ (activation, activation.inputs.index(layer)))

+ if split_activations_:

+ self.split_nodes.append(layer)

+ self.split_activations[layer.name] = split_activations_

+ if input_split:

+ root = self[self.root_names[0]]

+ if root not in self.split_nodes:

+ self.split_nodes.append(root)

+ self.split_activations[root.name] = []

+ return self.split_nodes, self.split_activations

+

+

+def set_beta(self: 'BoundedModule', enable_opt_interm_bounds, parameters,

+ lr_beta, lr_cut_beta, cutter, dense_coeffs_mask):

+ """

+ Set betas, best_betas, coeffs, dense_coeffs_mask, best_coeffs, biases

+ and best_biases.

+ """

+ coeffs = None

+ betas = []

+ best_betas = OrderedDict()

+

+ # TODO compute only once

+ self.nodes_with_beta = []

+ for node in self.split_nodes:

+ if not hasattr(node, 'sparse_betas'):

continue

- if m.split_beta_used:

- bias[:batch] = bias[:batch] + m.split_bias*m.split_beta[:batch]*m.split_c[:batch]

- bias[batch:] = bias[batch:] + m.split_bias*m.split_beta[batch:]*m.split_c[batch:]

- if m.history_beta_used:

- bias = bias + (m.new_history_bias*m.new_history_beta*m.new_history_c).sum(1, keepdim=True)

- # No single node split here, because single node splits do not have bias.

- return bias

+ self.nodes_with_beta.append(node)

+ if enable_opt_interm_bounds:

+ for sparse_beta in node.sparse_betas.values():

+ if sparse_beta is not None:

+ betas.append(sparse_beta.val)

+ best_betas[node.name] = {

+ beta_m: sparse_beta.val.detach().clone()

+ for beta_m, sparse_beta in node.sparse_betas.items()

+ }

+ else:

+ betas.append(node.sparse_betas[0].val)

+ best_betas[node.name] = node.sparse_betas[0].val.detach().clone()

+

+ # Beta has shape (batch, max_splits_per_layer)

+ parameters.append({'params': betas.copy(), 'lr': lr_beta, 'batch_dim': 0})

+

+ if self.cut_used:

+ self.set_beta_cuts(parameters, lr_cut_beta, betas, best_betas, cutter)

+

+ return betas, best_betas, coeffs, dense_coeffs_mask

+

+

+def set_beta_cuts(self: 'BoundedModule', parameters, lr_cut_beta, betas,

+ best_betas, cutter):

+ # also need to optimize cut betas