diff --git a/.dev_scripts/github/update_model_index.py b/.dev_scripts/github/update_model_index.py

index bf056c9d54..6d60344744 100755

--- a/.dev_scripts/github/update_model_index.py

+++ b/.dev_scripts/github/update_model_index.py

@@ -144,22 +144,18 @@ def parse_md(md_file):

with open(md_file, 'r') as md:

lines = md.readlines()

i = 0

+ name = lines[0][2:]

+ name = name.split('(', 1)[0].strip()

+ collection['Metadata']['Architecture'].append(name)

+ collection['Name'] = name

+ collection_name = name

while i < len(lines):

# parse reference

- if lines[i][:2] == '(.*)', lines[j])[0]

- name = name.split('(', 1)[0].strip()

- # get architecture

- if 'ALGORITHM' in lines[i] or 'BACKBONE' in lines[i]:

- collection['Metadata']['Architecture'].append(name)

- collection['Name'] = name

- collection_name = name

- # get paper url

+ if lines[i].startswith('', lines[i])

+ url = url.groups()[0]

collection['Paper'].append(url)

- i = j + 1

+ i += 1

# parse table

elif lines[i][0] == '|' and i + 1 < len(lines) and \
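The new parsing in `parse_md` takes the collection name from the README's first line. Previously it came from the lines following the comment marker. Below is a minimal sketch of that step. It assumes, as the hunk does, that line 0 is a `# Name (Venue'Year)` heading. The function name `parse_collection_name` is hypothetical, and its guard clause is an addition that the script itself does not have.

```python
def parse_collection_name(first_line: str) -> str:
    """Extract the collection name from a README title line.

    Mirrors the two added lines in parse_md: drop the leading '# ',
    then cut everything from the first '(' (the venue/year tag).
    """
    # Hypothetical guard: the script slices lines[0] without checking it.
    if not first_line.startswith('# '):
        raise ValueError(f'expected a level-1 heading, got {first_line!r}')
    name = first_line[2:]
    return name.split('(', 1)[0].strip()


print(parse_collection_name("# DeepFillv1 (CVPR'2018)\n"))  # DeepFillv1
print(parse_collection_name('# BasicVSR++\n'))               # BasicVSR++
```

Titles without a venue tag, such as `# BasicVSR++`, pass through unchanged because `split('(', 1)` then returns the whole string. Without a guard like the one above, a README that begins with a blank line would silently yield an empty name.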

diff --git a/.github/workflows/build.yml b/.github/workflows/build.yml

index cf07624fc4..c4134130eb 100644

--- a/.github/workflows/build.yml

+++ b/.github/workflows/build.yml

@@ -11,6 +11,7 @@ on:

- 'docs_zh-CN/**'

- 'examples/**'

- '.dev_scripts/**'

+ - '.pre-commit-config.yaml'

pull_request:

paths-ignore:

@@ -20,6 +21,7 @@ on:

- 'docs_zh-CN/**'

- 'examples/**'

- '.dev_scripts/**'

+ - '.pre-commit-config.yaml'

concurrency:

group: ${{ github.workflow }}-${{ github.ref }}
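Adding `.pre-commit-config.yaml` to `paths-ignore` under both `push` and `pull_request` changes when the build runs. Per GitHub's documented behavior, the workflow is skipped only when every changed path matches an ignore pattern. A change that touches only the pre-commit config therefore no longer triggers the build. The sketch below approximates that rule with Python's `fnmatch`, and this is an assumption. `fnmatch`'s `*` also matches `/`, so it is looser than GitHub's own glob syntax. Only the patterns visible in this hunk are listed.

```python
from fnmatch import fnmatch

# Only the paths-ignore entries visible in this hunk; the real list is longer.
IGNORED = ['docs_zh-CN/**', 'examples/**', '.dev_scripts/**',
           '.pre-commit-config.yaml']


def workflow_skipped(changed_files, patterns=IGNORED):
    """Return True if every changed file matches some ignore pattern."""
    if not changed_files:
        # Treat an empty change set as "run": a conservative choice for this sketch.
        return False
    return all(any(fnmatch(path, pat) for pat in patterns)
               for path in changed_files)


print(workflow_skipped(['.pre-commit-config.yaml']))              # True
print(workflow_skipped(['.pre-commit-config.yaml', 'setup.py']))  # False
```

The second call shows why the change is safe: a PR that edits the pre-commit config together with any source file still runs CI.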

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index cdc1d6c986..8f4d1ca3a7 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -49,3 +49,7 @@ repos:

language: python

files: ^configs/.*\.md$

require_serial: true

+ - repo: https://github.com/open-mmlab/pre-commit-hooks

+ rev: v0.1.0 # Use the ref you want to point at

+ hooks:

+ - id: check-algo-readme
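The README diffs that follow all converge on the same layout. Each has a `# Name (Venue'Year)` title, followed by `## Abstract`, `## Citation`, and `## Results and models`. The open-mmlab/pre-commit-hooks repository defines what `check-algo-readme` actually enforces, and that definition is not shown here. The function below is a hypothetical local check for just this heading order, not the hook's implementation.

```python
# Section order adopted by the README changes in this diff.
EXPECTED_SECTIONS = ['Abstract', 'Citation', 'Results and models']


def has_expected_sections(markdown: str) -> bool:
    """Check that the level-2 headings start with the expected sequence."""
    in_fence = False
    headings = []
    for line in markdown.splitlines():
        if line.startswith('```'):
            in_fence = not in_fence  # ignore '## ' lines inside code fences
            continue
        if not in_fence and line.startswith('## '):
            headings.append(line[3:].strip())
    return headings[:len(EXPECTED_SECTIONS)] == EXPECTED_SECTIONS
```

A README still in the old layout, with `## Abstract` after the citation block and a bare `## Results` heading, fails this check.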

diff --git a/configs/inpainting/deepfillv1/README.md b/configs/inpainting/deepfillv1/README.md

index e956bc58b3..5ad68d5980 100644

--- a/configs/inpainting/deepfillv1/README.md

+++ b/configs/inpainting/deepfillv1/README.md

@@ -1,9 +1,22 @@

# DeepFillv1 (CVPR'2018)

-

+## Abstract

-

-DeepFillv1 (CVPR'2018)

+

+

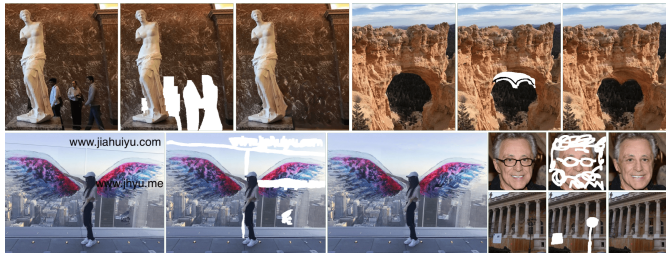

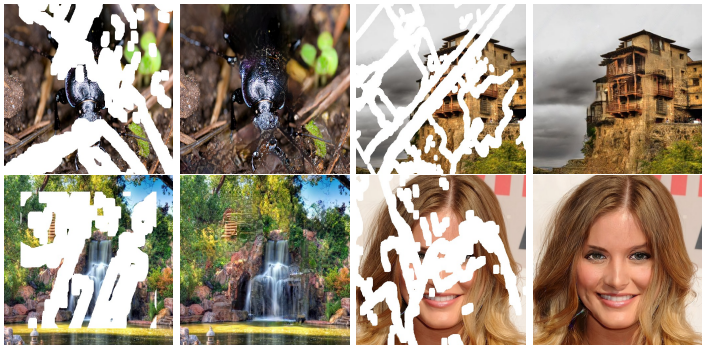

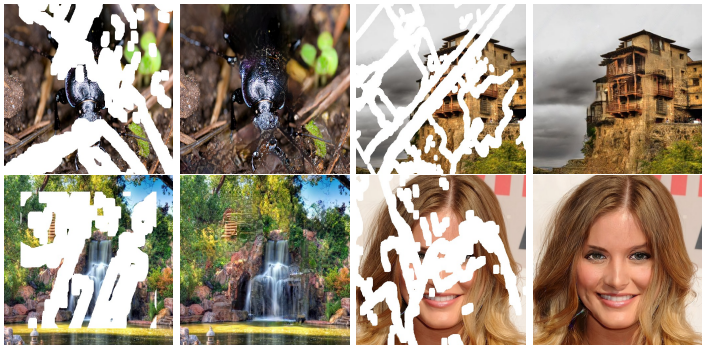

+Recent deep learning based approaches have shown promising results for the challenging task of inpainting large missing regions in an image. These methods can generate visually plausible image structures and textures, but often create distorted structures or blurry textures inconsistent with surrounding areas. This is mainly due to ineffectiveness of convolutional neural networks in explicitly borrowing or copying information from distant spatial locations. On the other hand, traditional texture and patch synthesis approaches are particularly suitable when it needs to borrow textures from the surrounding regions. Motivated by these observations, we propose a new deep generative model-based approach which can not only synthesize novel image structures but also explicitly utilize surrounding image features as references during network training to make better predictions. The model is a feed-forward, fully convolutional neural network which can process images with multiple holes at arbitrary locations and with variable sizes during the test time. Experiments on multiple datasets including faces (CelebA, CelebA-HQ), textures (DTD) and natural images (ImageNet, Places2) demonstrate that our proposed approach generates higher-quality inpainting results than existing ones.

+

+

+

+  +

+

+

+

+

+

+## Citation

+

+

```bibtex

@inproceedings{yu2018generative,

@@ -15,22 +28,7 @@

}

```

-

-

-

-

-## Abstract

-

-Recent deep learning based approaches have shown promising results for the challenging task of inpainting large missing regions in an image. These methods can generate visually plausible image structures and textures, but often create distorted structures or blurry textures inconsistent with surrounding areas. This is mainly due to ineffectiveness of convolutional neural networks in explicitly borrowing or copying information from distant spatial locations. On the other hand, traditional texture and patch synthesis approaches are particularly suitable when it needs to borrow textures from the surrounding regions. Motivated by these observations, we propose a new deep generative model-based approach which can not only synthesize novel image structures but also explicitly utilize surrounding image features as references during network training to make better predictions. The model is a feed-forward, fully convolutional neural network which can process images with multiple holes at arbitrary locations and with variable sizes during the test time. Experiments on multiple datasets including faces (CelebA, CelebA-HQ), textures (DTD) and natural images (ImageNet, Places2) demonstrate that our proposed approach generates higher-quality inpainting results than existing ones.

-

-

-  -

-

-

-

-## Results

-

-

+## Results and models

**Places365-Challenge**

diff --git a/configs/inpainting/deepfillv2/README.md b/configs/inpainting/deepfillv2/README.md

index 876c18c032..ea54f06fbe 100644

--- a/configs/inpainting/deepfillv2/README.md

+++ b/configs/inpainting/deepfillv2/README.md

@@ -1,8 +1,22 @@

# DeepFillv2 (CVPR'2019)

+## Abstract

+

+

+

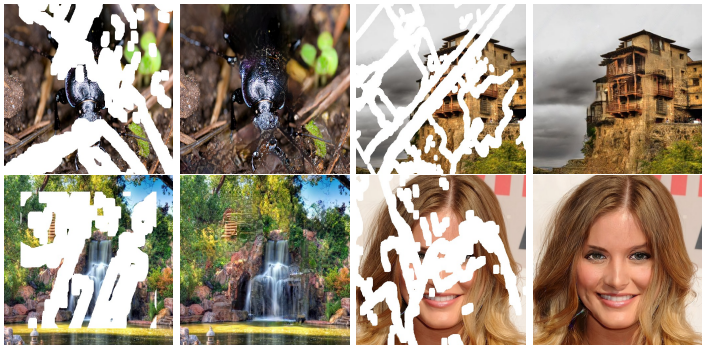

+We present a generative image inpainting system to complete images with free-form mask and guidance. The system is based on gated convolutions learned from millions of images without additional labelling efforts. The proposed gated convolution solves the issue of vanilla convolution that treats all input pixels as valid ones, generalizes partial convolution by providing a learnable dynamic feature selection mechanism for each channel at each spatial location across all layers. Moreover, as free-form masks may appear anywhere in images with any shape, global and local GANs designed for a single rectangular mask are not applicable. Thus, we also present a patch-based GAN loss, named SN-PatchGAN, by applying spectral-normalized discriminator on dense image patches. SN-PatchGAN is simple in formulation, fast and stable in training. Results on automatic image inpainting and user-guided extension demonstrate that our system generates higher-quality and more flexible results than previous methods. Our system helps user quickly remove distracting objects, modify image layouts, clear watermarks and edit faces.

+

+

+

+  +

+

+

+

+

+

+## Citation

+

-

-DeepFillv2 (CVPR'2019)

```bibtex

@inproceedings{yu2019free,

@@ -14,21 +28,7 @@

}

```

-

-

-

-

-

-## Abstract

-

-We present a generative image inpainting system to complete images with free-form mask and guidance. The system is based on gated convolutions learned from millions of images without additional labelling efforts. The proposed gated convolution solves the issue of vanilla convolution that treats all input pixels as valid ones, generalizes partial convolution by providing a learnable dynamic feature selection mechanism for each channel at each spatial location across all layers. Moreover, as free-form masks may appear anywhere in images with any shape, global and local GANs designed for a single rectangular mask are not applicable. Thus, we also present a patch-based GAN loss, named SN-PatchGAN, by applying spectral-normalized discriminator on dense image patches. SN-PatchGAN is simple in formulation, fast and stable in training. Results on automatic image inpainting and user-guided extension demonstrate that our system generates higher-quality and more flexible results than previous methods. Our system helps user quickly remove distracting objects, modify image layouts, clear watermarks and edit faces.

-

-

-  -

-

-

-

-## Results

+## Results and models

**Places365-Challenge**
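The DeepFillv2 abstract describes gated convolution as a learnable dynamic feature selection at each channel and spatial location. In the paper's formulation, a gated layer computes two responses from the same input using separate weights: a feature response and a gating response. It then outputs φ(feature) ⊙ σ(gating). The sketch below restricts this to a single output channel with a 1×1 kernel and uses ELU for φ. Both are simplifying assumptions, and the MMEditing module itself is not part of this diff.

```python
import math
from typing import List, Sequence


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def elu(v: float, alpha: float = 1.0) -> float:
    return v if v > 0 else alpha * (math.exp(v) - 1.0)


def gated_conv1x1(pixels: Sequence[Sequence[float]],
                  w_feat: Sequence[float], w_gate: Sequence[float],
                  b_feat: float = 0.0, b_gate: float = 0.0) -> List[float]:
    """Single-output-channel gated convolution with a 1x1 kernel.

    Each pixel is a vector of input channels. The feature and gating
    branches read the same input with separate weights; the sigmoid gate
    scales the activated feature at every location.
    """
    out = []
    for px in pixels:
        feature = sum(w * c for w, c in zip(w_feat, px)) + b_feat
        gating = sum(w * c for w, c in zip(w_gate, px)) + b_gate
        out.append(elu(feature) * sigmoid(gating))
    return out


print(gated_conv1x1([[1.0, 2.0]], [1.0, 1.0], [0.0, 0.0]))  # [1.5]
```

When the gate saturates near 1, the layer behaves like an ordinary convolution followed by ELU. A strongly negative gating response suppresses the location, which is how the layer can learn to discount pixels inside a free-form mask.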

diff --git a/configs/inpainting/global_local/README.md b/configs/inpainting/global_local/README.md

index 4f32df7bda..f6f613745e 100644

--- a/configs/inpainting/global_local/README.md

+++ b/configs/inpainting/global_local/README.md

@@ -1,8 +1,22 @@

# Global&Local (ToG'2017)

+## Abstract

+

+

+

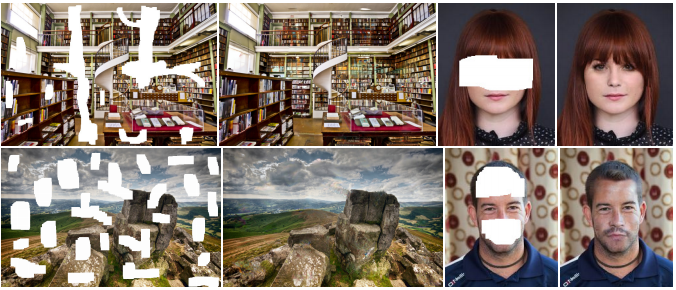

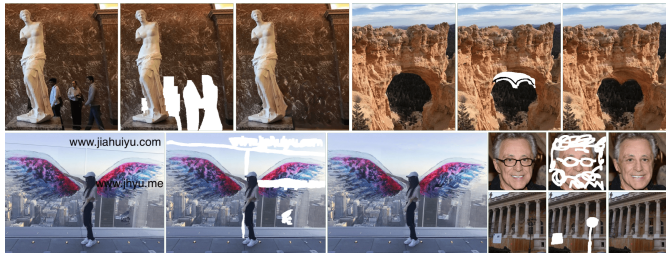

+We present a novel approach for image completion that results in images that are both locally and globally consistent. With a fully-convolutional neural network, we can complete images of arbitrary resolutions by filling in missing regions of any shape. To train this image completion network to be consistent, we use global and local context discriminators that are trained to distinguish real images from completed ones. The global discriminator looks at the entire image to assess if it is coherent as a whole, while the local discriminator looks only at a small area centered at the completed region to ensure the local consistency of the generated patches. The image completion network is then trained to fool the both context discriminator networks, which requires it to generate images that are indistinguishable from real ones with regard to overall consistency as well as in details. We show that our approach can be used to complete a wide variety of scenes. Furthermore, in contrast with the patch-based approaches such as PatchMatch, our approach can generate fragments that do not appear elsewhere in the image, which allows us to naturally complete the image.

+

+

+

+  +

+

+

+

+

+

+## Citation

+

-

-Global&Local (ToG'2017)

```bibtex

@article{iizuka2017globally,

@@ -17,20 +31,7 @@

}

```

-

-

-

-

-## Abstract

-

-We present a novel approach for image completion that results in images that are both locally and globally consistent. With a fully-convolutional neural network, we can complete images of arbitrary resolutions by flling in missing regions of any shape. To train this image completion network to be consistent, we use global and local context discriminators that are trained to distinguish real images from completed ones. The global discriminator looks at the entire image to assess if it is coherent as a whole, while the local discriminator looks only at a small area centered at the completed region to ensure the local consistency of the generated patches. The image completion network is then trained to fool the both context discriminator networks, which requires it to generate images that are indistinguishable from real ones with regard to overall consistency as well as in details. We show that our approach can be used to complete a wide variety of scenes. Furthermore, in contrast with the patch-based approaches such as PatchMatch, our approach can generate fragments that do not appear elsewhere in the image, which allows us to naturally complete the image.

-

-

-  -

-

-

-

-## Results

+## Results and models

*Note that we do not apply the post-processing module in Global&Local for a fair comparison with current deep inpainting methods.*

diff --git a/configs/inpainting/partial_conv/README.md b/configs/inpainting/partial_conv/README.md

index 5fea8b57b1..822396035c 100644

--- a/configs/inpainting/partial_conv/README.md

+++ b/configs/inpainting/partial_conv/README.md

@@ -1,9 +1,22 @@

# PConv (ECCV'2018)

-

+## Abstract

+

+

+

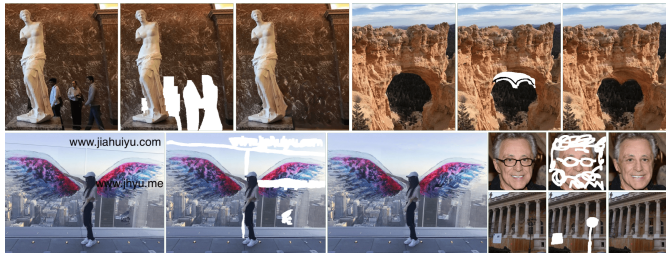

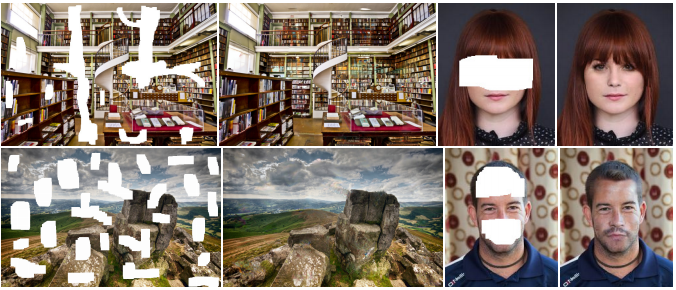

+Existing deep learning based image inpainting methods use a standard convolutional network over the corrupted image, using convolutional filter responses conditioned on both valid pixels as well as the substitute values in the masked holes (typically the mean value). This often leads to artifacts such as color discrepancy and blurriness. Post-processing is usually used to reduce such artifacts, but are expensive and may fail. We propose the use of partial convolutions, where the convolution is masked and renormalized to be conditioned on only valid pixels. We further include a mechanism to automatically generate an updated mask for the next layer as part of the forward pass. Our model outperforms other methods for irregular masks. We show qualitative and quantitative comparisons with other methods to validate our approach.

+

+

+

+  +

+

-

-PConv (ECCV'2018)

+

+

+

+## Citation

+

+

```bibtex

@inproceedings{liu2018image,

@@ -15,21 +28,7 @@

}

```

-

-

-

-

-## Abstract

-

-Existing deep learning based image inpainting methods use a standard convolutional network over the corrupted image, using convolutional filter responses conditioned on both valid pixels as well as the substitute values in the masked holes (typically the mean value). This often leads to artifacts such as color discrepancy and blurriness. Post-processing is usually used to reduce such artifacts, but are expensive and may fail. We propose the use of partial convolutions, where the convolution is masked and renormalized to be conditioned on only valid pixels. We further include a mechanism to automatically generate an updated mask for the next layer as part of the forward pass. Our model outperforms other methods for irregular masks. We show qualitative and quantitative comparisons with other methods to validate our approach.

-

-

-  -

-

-

-

-## Results

-

+## Results and models

**Places365-Challenge**
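The PConv abstract describes a convolution that is masked and renormalized so that it is conditioned only on valid pixels, plus an automatic mask update. In the paper's formulation, each window has input X, binary mask M, and kernel W. The output is Wᵀ(X ⊙ M) · sum(1)/sum(M) + b when sum(M) > 0, and 0 otherwise. The updated mask is 1 exactly where sum(M) > 0. The sketch below implements that rule for a single channel with stride 1 and no padding. Those restrictions and the function name are assumptions for illustration.

```python
from typing import List, Tuple

Grid = List[List[float]]


def partial_conv2d(x: Grid, mask: Grid, w: Grid,
                   bias: float = 0.0) -> Tuple[Grid, Grid]:
    """Single-channel partial convolution (stride 1, no padding).

    Returns the output map and the updated mask for the next layer.
    """
    kh, kw = len(w), len(w[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    window = kh * kw  # sum(1) over the kernel window
    out = [[0.0] * out_w for _ in range(out_h)]
    new_mask = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            acc = 0.0    # W^T (X * M)
            valid = 0.0  # sum(M)
            for di in range(kh):
                for dj in range(kw):
                    m = mask[i + di][j + dj]
                    acc += w[di][dj] * x[i + di][j + dj] * m
                    valid += m
            if valid > 0:
                # Rescale by sum(1)/sum(M) so holes do not dim the response.
                out[i][j] = acc * window / valid + bias
                new_mask[i][j] = 1.0
    return out, new_mask


ones = [[1.0] * 3 for _ in range(3)]
hole = [[1.0, 1.0, 1.0], [1.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
avg = [[0.25, 0.25], [0.25, 0.25]]
print(partial_conv2d(ones, hole, avg))
# ([[1.0, 1.0], [1.0, 1.0]], [[1.0, 1.0], [1.0, 1.0]])
```

A plain convolution over the same input, with the hole filled by 0, would give 0.75 in every window. The renormalization restores 1.0, and the updated mask shows the hole already closed after one layer.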

diff --git a/configs/mattors/dim/README.md b/configs/mattors/dim/README.md

index cb3cf5fee0..d7c53c33c1 100644

--- a/configs/mattors/dim/README.md

+++ b/configs/mattors/dim/README.md

@@ -1,8 +1,22 @@

# DIM (CVPR'2017)

+## Abstract

+

+

+

+Image matting is a fundamental computer vision problem and has many applications. Previous algorithms have poor performance when an image has similar foreground and background colors or complicated textures. The main reasons are prior methods 1) only use low-level features and 2) lack high-level context. In this paper, we propose a novel deep learning based algorithm that can tackle both these problems. Our deep model has two parts. The first part is a deep convolutional encoder-decoder network that takes an image and the corresponding trimap as inputs and predict the alpha matte of the image. The second part is a small convolutional network that refines the alpha matte predictions of the first network to have more accurate alpha values and sharper edges. In addition, we also create a large-scale image matting dataset including 49300 training images and 1000 testing images. We evaluate our algorithm on the image matting benchmark, our testing set, and a wide variety of real images. Experimental results clearly demonstrate the superiority of our algorithm over previous methods.

+

+

+

+  +

+

+

+

+

+

+## Citation

+

-

-DIM (CVPR'2017)

```bibtex

@inproceedings{xu2017deep,

@@ -14,21 +28,7 @@

}

```

-

-

-

-

-## Abstract

-

-Image matting is a fundamental computer vision problem and has many applications. Previous algorithms have poor performance when an image has similar foreground and background colors or complicated textures. The main reasons are prior methods 1) only use low-level features and 2) lack high-level context. In this paper, we propose a novel deep learning based algorithm that can tackle both these problems. Our deep model has two parts. The first part is a deep convolutional encoder-decoder network that takes an image and the corresponding trimap as inputs and predict the alpha matte of the image. The second part is a small convolutional network that refines the alpha matte predictions of the first network to have more accurate alpha values and sharper edges. In addition, we also create a large-scale image matting dataset including 49300 training images and 1000 testing images. We evaluate our algorithm on the image matting benchmark, our testing set, and a wide variety of real images. Experimental results clearly demonstrate the superiority of our algorithm over previous methods.

-

-

-  -

-

-

-## Results

-

-

+## Results and models

| Method | SAD | MSE | GRAD | CONN | Download |

| :------------------------------------------------------------------------: | :------: | :-------: | :------: | :------: | :-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

diff --git a/configs/mattors/gca/README.md b/configs/mattors/gca/README.md

index a65a8169be..097d772fca 100644

--- a/configs/mattors/gca/README.md

+++ b/configs/mattors/gca/README.md

@@ -1,35 +1,33 @@

# GCA (AAAI'2020)

-

-

-GCA (AAAI'2020)

-

-```bibtex

-@inproceedings{li2020natural,

- title={Natural Image Matting via Guided Contextual Attention},

- author={Li, Yaoyi and Lu, Hongtao},

- booktitle={Association for the Advancement of Artificial Intelligence (AAAI)},

- year={2020}

-}

-```

-

-

-

-

-

## Abstract

-Over the last few years, deep learning based approaches have achieved outstanding improvements in natural image matting. Many of these methods can generate visually plausible alpha estimations, but typically yield blurry structures or textures in the semitransparent area. This is due to the local ambiguity of transparent objects. One possible solution is to leverage the far-surrounding information to estimate the local opacity. Traditional affinity-based methods often suffer from the high computational complexity, which are not suitable for high resolution alpha estimation. Inspired by affinity-based method and the successes of contextual attention in inpainting, we develop a novel end-to-end approach for natural image matting with a guided contextual attention module, which is specifically designed for image matting. Guided contextual attention module directly propagates high-level opacity information globally based on the learned low-level affinity. The proposed method can mimic information flow of affinity-based methods and utilize rich features learned by deep neural networks simultaneously. Experiment results on Composition-1k testing set and this http URL benchmark dataset demonstrate that our method outperforms state-of-the-art approaches in natural image matting.

+

+Over the last few years, deep learning based approaches have achieved outstanding improvements in natural image matting. Many of these methods can generate visually plausible alpha estimations, but typically yield blurry structures or textures in the semitransparent area. This is due to the local ambiguity of transparent objects. One possible solution is to leverage the far-surrounding information to estimate the local opacity. Traditional affinity-based methods often suffer from the high computational complexity, which are not suitable for high resolution alpha estimation. Inspired by affinity-based method and the successes of contextual attention in inpainting, we develop a novel end-to-end approach for natural image matting with a guided contextual attention module, which is specifically designed for image matting. Guided contextual attention module directly propagates high-level opacity information globally based on the learned low-level affinity. The proposed method can mimic information flow of affinity-based methods and utilize rich features learned by deep neural networks simultaneously. Experiment results on Composition-1k testing set and alphamatting.com benchmark dataset demonstrate that our method outperforms state-of-the-art approaches in natural image matting.

+

+

+

+

+## Citation

-## Results

+

+```bibtex

+@inproceedings{li2020natural,

+ title={Natural Image Matting via Guided Contextual Attention},

+ author={Li, Yaoyi and Lu, Hongtao},

+ booktitle={Association for the Advancement of Artificial Intelligence (AAAI)},

+ year={2020}

+}

+```

+## Results and models

| Method | SAD | MSE | GRAD | CONN | Download |

| :--------------------------------------------------------------------: | :-------: | :--------: | :-------: | :-------: | :------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

diff --git a/configs/mattors/indexnet/README.md b/configs/mattors/indexnet/README.md

index 963a137f46..960e31218a 100644

--- a/configs/mattors/indexnet/README.md

+++ b/configs/mattors/indexnet/README.md

@@ -1,8 +1,22 @@

# IndexNet (ICCV'2019)

+## Abstract

+

+

+

+We show that existing upsampling operators can be unified with the notion of the index function. This notion is inspired by an observation in the decoding process of deep image matting where indices-guided unpooling can recover boundary details much better than other upsampling operators such as bilinear interpolation. By looking at the indices as a function of the feature map, we introduce the concept of learning to index, and present a novel index-guided encoder-decoder framework where indices are self-learned adaptively from data and are used to guide the pooling and upsampling operators, without the need of supervision. At the core of this framework is a flexible network module, termed IndexNet, which dynamically predicts indices given an input. Due to its flexibility, IndexNet can be used as a plug-in applying to any off-the-shelf convolutional networks that have coupled downsampling and upsampling stages.

+

+

+

+  +

+

+

+

+

+

+## Citation

+

-

-IndexNet (ICCV'2019)

```bibtex

@inproceedings{hao2019indexnet,

@@ -13,23 +27,7 @@

}

```

-

-

-

-

-

-## Abstract

-

-We show that existing upsampling operators can be unified with the notion of the index function. This notion is inspired by an observation in the decoding process of deep image matting where indices-guided unpooling can recover boundary details much better than other upsampling operators such as bilinear interpolation. By looking at the indices as a function of the feature map, we introduce the concept of learning to index, and present a novel index-guided encoder-decoder framework where indices are self-learned adaptively from data and are used to guide the pooling and upsampling operators, without the need of supervision. At the core of this framework is a flexible network module, termed IndexNet, which dynamically predicts indices given an input. Due to its flexibility, IndexNet can be used as a plug-in applying to any off-the-shelf convolutional networks that have coupled downsampling and upsampling stages.

-We demonstrate the effectiveness of IndexNet on the task of natural image matting where the quality of learned indices can be visually observed from predicted alpha mattes. Results on the Composition-1k matting dataset show that our model built on MobileNetv2 exhibits at least 16.1% improvement over the seminal VGG-16 based deep matting baseline, with less training data and lower model capacity.

-

-

-

-  -

-

-

-## Results

-

+## Results and models

| Method | SAD | MSE | GRAD | CONN | Download |

| :--------------------------------------------------------------------------: | :------: | :-------: | :------: | :------: | :-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

diff --git a/configs/restorers/basicvsr/README.md b/configs/restorers/basicvsr/README.md

index ae44cac647..4a3f38cb7e 100644

--- a/configs/restorers/basicvsr/README.md

+++ b/configs/restorers/basicvsr/README.md

@@ -1,9 +1,22 @@

# BasicVSR (CVPR'2021)

-

+## Abstract

+

+

+

+Video super-resolution (VSR) approaches tend to have more components than the image counterparts as they need to exploit the additional temporal dimension. Complex designs are not uncommon. In this study, we wish to untangle the knots and reconsider some most essential components for VSR guided by four basic functionalities, i.e., Propagation, Alignment, Aggregation, and Upsampling. By reusing some existing components added with minimal redesigns, we show a succinct pipeline, BasicVSR, that achieves appealing improvements in terms of speed and restoration quality in comparison to many state-of-the-art algorithms. We conduct systematic analysis to explain how such gain can be obtained and discuss the pitfalls. We further show the extensibility of BasicVSR by presenting an information-refill mechanism and a coupled propagation scheme to facilitate information aggregation. The BasicVSR and its extension, IconVSR, can serve as strong baselines for future VSR approaches.

+

+

+

+  +

+

+

+

+

-

-BasicVSR (CVPR'2021)

+## Citation

+

+

```bibtex

@InProceedings{chan2021basicvsr,

@@ -14,16 +27,8 @@

}

```

-

-

-## Abstract

-Video super-resolution (VSR) approaches tend to have more components than the image counterparts as they need to exploit the additional temporal dimension. Complex designs are not uncommon. In this study, we wish to untangle the knots and reconsider some most essential components for VSR guided by four basic functionalities, i.e., Propagation, Alignment, Aggregation, and Upsampling. By reusing some existing components added with minimal redesigns, we show a succinct pipeline, BasicVSR, that achieves appealing improvements in terms of speed and restoration quality in comparison to many state-of-the-art algorithms. We conduct systematic analysis to explain how such gain can be obtained and discuss the pitfalls. We further show the extensibility of BasicVSR by presenting an information-refill mechanism and a coupled propagation scheme to facilitate information aggregation. The BasicVSR and its extension, IconVSR, can serve as strong baselines for future VSR approaches.

-

-

-  -

-

+## Results and models

-## Results and Models

Evaluated on RGB channels for REDS4 and Y channel for others. The metrics are `PSNR` / `SSIM` .

The pretrained weights of SPyNet can be found [here](https://download.openmmlab.com/mmediting/restorers/basicvsr/spynet_20210409-c6c1bd09.pth).

diff --git a/configs/restorers/basicvsr_plusplus/README.md b/configs/restorers/basicvsr_plusplus/README.md

index ed8952c7e1..927e297373 100644

--- a/configs/restorers/basicvsr_plusplus/README.md

+++ b/configs/restorers/basicvsr_plusplus/README.md

@@ -1,9 +1,22 @@

# BasicVSR++

-

+## Abstract

-

-BasicVSR++

+

+

+A recurrent structure is a popular framework choice for the task of video super-resolution. The state-of-the-art method BasicVSR adopts bidirectional propagation with feature alignment to effectively exploit information from the entire input video. In this study, we redesign BasicVSR by proposing second-order grid propagation and flow-guided deformable alignment. We show that by empowering the recurrent framework with the enhanced propagation and alignment, one can exploit spatiotemporal information across misaligned video frames more effectively. The new components lead to an improved performance under a similar computational constraint. In particular, our model BasicVSR++ surpasses BasicVSR by 0.82 dB in PSNR with similar number of parameters. In addition to video super-resolution, BasicVSR++ generalizes well to other video restoration tasks such as compressed video enhancement. In NTIRE 2021, BasicVSR++ obtains three champions and one runner-up in the Video Super-Resolution and Compressed Video Enhancement Challenges. Codes and models will be released to MMEditing.

+

+

+

+  +

+

+

+

+

+

+## Citation

+

+

```bibtex

@article{chan2021basicvsr++,

@@ -14,16 +27,8 @@

}

```

-

-

-## Abstract

-A recurrent structure is a popular framework choice for the task of video super-resolution. The state-of-the-art method BasicVSR adopts bidirectional propagation with feature alignment to effectively exploit information from the entire input video. In this study, we redesign BasicVSR by proposing second-order grid propagation and flow-guided deformable alignment. We show that by empowering the recurrent framework with the enhanced propagation and alignment, one can exploit spatiotemporal information across misaligned video frames more effectively. The new components lead to an improved performance under a similar computational constraint. In particular, our model BasicVSR++ surpasses BasicVSR by 0.82 dB in PSNR with similar number of parameters. In addition to video super-resolution, BasicVSR++ generalizes well to other video restoration tasks such as compressed video enhancement. In NTIRE 2021, BasicVSR++ obtains three champions and one runner-up in the Video Super-Resolution and Compressed Video Enhancement Challenges. Codes and models will be released to MMEditing.

-

-

-  -

-

+## Results and models

-## Results and Models

The pretrained weights of SPyNet can be found [here](https://download.openmmlab.com/mmediting/restorers/basicvsr/spynet_20210409-c6c1bd09.pth).

| Method | REDS4 (BIx4) PSNR/SSIM (RGB) | Vimeo-90K-T (BIx4) PSNR/SSIM (Y) | Vid4 (BIx4) PSNR/SSIM (Y) | UDM10 (BDx4) PSNR/SSIM (Y) | Vimeo-90K-T (BDx4) PSNR/SSIM (Y) | Vid4 (BDx4) PSNR/SSIM (Y) | Download |

diff --git a/configs/restorers/dic/README.md b/configs/restorers/dic/README.md

index 4fe29526d1..23d31dd425 100644

--- a/configs/restorers/dic/README.md

+++ b/configs/restorers/dic/README.md

@@ -1,9 +1,22 @@

# DIC (CVPR'2020)

-

-

+## Abstract

+

+

+

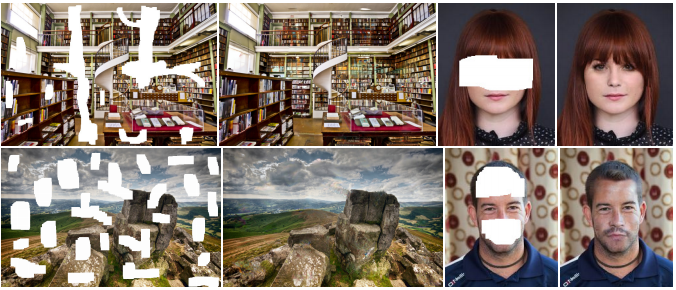

+Recent works based on deep learning and facial priors have succeeded in super-resolving severely degraded facial images. However, the prior knowledge is not fully exploited in existing methods, since facial priors such as landmark and component maps are always estimated by low-resolution or coarsely super-resolved images, which may be inaccurate and thus affect the recovery performance. In this paper, we propose a deep face super-resolution (FSR) method with iterative collaboration between two recurrent networks which focus on facial image recovery and landmark estimation respectively. In each recurrent step, the recovery branch utilizes the prior knowledge of landmarks to yield higher-quality images which facilitate more accurate landmark estimation in turn. Therefore, the iterative information interaction between two processes boosts the performance of each other progressively. Moreover, a new attentive fusion module is designed to strengthen the guidance of landmark maps, where facial components are generated individually and aggregated attentively for better restoration. Quantitative and qualitative experimental results show the proposed method significantly outperforms state-of-the-art FSR methods in recovering high-quality face images.

+

+

+

+  +

+

+

+

+

-DIC (CVPR'2020)

+## Citation

+

+

```bibtex

@inproceedings{ma2020deep,

@@ -15,16 +28,8 @@

}

```

-

-

-## Abstract

-Recent works based on deep learning and facial priors have succeeded in super-resolving severely degraded facial images. However, the prior knowledge is not fully exploited in existing methods, since facial priors such as landmark and component maps are always estimated by low-resolution or coarsely super-resolved images, which may be inaccurate and thus affect the recovery performance. In this paper, we propose a deep face super-resolution (FSR) method with iterative collaboration between two recurrent networks which focus on facial image recovery and landmark estimation respectively. In each recurrent step, the recovery branch utilizes the prior knowledge of landmarks to yield higher-quality images which facilitate more accurate landmark estimation in turn. Therefore, the iterative information interaction between two processes boosts the performance of each other progressively. Moreover, a new attentive fusion module is designed to strengthen the guidance of landmark maps, where facial components are generated individually and aggregated attentively for better restoration. Quantitative and qualitative experimental results show the proposed method significantly outperforms state-of-the-art FSR methods in recovering high-quality face images.

-

-

-  -

-

+## Results and models

-## Results and Models

Evaluated on RGB channels, `scale` pixels in each border are cropped before evaluation.

The metrics are `PSNR / SSIM` .
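The DIC note above says evaluation runs on RGB channels with `scale` pixels cropped from each border before PSNR is computed. The NumPy sketch below shows that protocol. It is illustrative only, not MMEditing's own metric code; the function name `psnr_with_crop` and the 0 to 255 value range are assumptions.

```python
import numpy as np


def psnr_with_crop(img1, img2, crop_border, max_value=255.0):
    """PSNR between two HxWxC images after cropping `crop_border` pixels per side."""
    img1 = np.asarray(img1, dtype=np.float64)
    img2 = np.asarray(img2, dtype=np.float64)
    if img1.shape != img2.shape:
        raise ValueError('images must have the same shape')
    if crop_border > 0:
        # Drop `crop_border` rows and columns from every side (the `scale` border).
        img1 = img1[crop_border:-crop_border, crop_border:-crop_border, ...]
        img2 = img2[crop_border:-crop_border, crop_border:-crop_border, ...]
    mse = np.mean((img1 - img2) ** 2)
    if mse == 0:
        return float('inf')
    return float(10.0 * np.log10(max_value ** 2 / mse))


# Example: a x4 model, so 4 pixels are cropped from each border.
gt = np.full((32, 32, 3), 128.0)
sr = gt + 1.0  # uniform error of one gray level, so MSE = 1
print(round(psnr_with_crop(sr, gt, crop_border=4), 2))  # 48.13
```

Cropping matters because errors near the image border (from padding in the network) would otherwise dominate the comparison between methods.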

diff --git a/configs/restorers/edsr/README.md b/configs/restorers/edsr/README.md

index 19c2f05d83..b7b8022811 100644

--- a/configs/restorers/edsr/README.md

+++ b/configs/restorers/edsr/README.md

@@ -1,9 +1,22 @@

# EDSR (CVPR'2017)

-

+## Abstract

+

+

+

+Recent research on super-resolution has progressed with the development of deep convolutional neural networks (DCNN). In particular, residual learning techniques exhibit improved performance. In this paper, we develop an enhanced deep super-resolution network (EDSR) with performance exceeding those of current state-of-the-art SR methods. The significant performance improvement of our model is due to optimization by removing unnecessary modules in conventional residual networks. The performance is further improved by expanding the model size while we stabilize the training procedure. We also propose a new multi-scale deep super-resolution system (MDSR) and training method, which can reconstruct high-resolution images of different upscaling factors in a single model. The proposed methods show superior performance over the state-of-the-art methods on benchmark datasets and prove its excellence by winning the NTIRE2017 Super-Resolution Challenge.

+

+

+

+  +

+

+

+

+

-

-EDSR (CVPR'2017)

+## Citation

+

+

```bibtex

@inproceedings{lim2017enhanced,

@@ -15,16 +28,8 @@

}

```

-

-

-## Abstract

-Recent research on super-resolution has progressed with the development of deep convolutional neural networks (DCNN). In particular, residual learning techniques exhibit improved performance. In this paper, we develop an enhanced deep super-resolution network (EDSR) with performance exceeding those of current state-of-the-art SR methods. The significant performance improvement of our model is due to optimization by removing unnecessary modules in conventional residual networks. The performance is further improved by expanding the model size while we stabilize the training procedure. We also propose a new multi-scale deep super-resolution system (MDSR) and training method, which can reconstruct high-resolution images of different upscaling factors in a single model. The proposed methods show superior performance over the state-of-the-art methods on benchmark datasets and prove its excellence by winning the NTIRE2017 Super-Resolution Challenge.

-

-

-  -

-

+## Results and models

-## Results and Models

Evaluated on RGB channels, `scale` pixels in each border are cropped before evaluation.

The metrics are `PSNR / SSIM` .
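The EDSR abstract attributes much of its gain to removing unnecessary modules from conventional residual blocks (the paper identifies these as batch-normalization layers) and to stabilizing training of a larger model (the paper uses residual scaling with factor 0.1 for its large model). A minimal NumPy sketch of such a block follows; the helper names `conv3x3` and `edsr_res_block` are hypothetical, and the random weights stand in for trained ones.

```python
import numpy as np


def conv3x3(x, weight, bias):
    """'Same'-padded 3x3 convolution (cross-correlation, as in PyTorch).

    x: (C_in, H, W); weight: (C_out, C_in, 3, 3); bias: (C_out,).
    """
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))  # zero padding
    out = np.zeros((weight.shape[0], h, w))
    for dy in range(3):
        for dx in range(3):
            window = padded[:, dy:dy + h, dx:dx + w]
            out += np.einsum('oi,ihw->ohw', weight[:, :, dy, dx], window)
    return out + bias[:, None, None]


def edsr_res_block(x, w1, b1, w2, b2, res_scale=1.0):
    """Conv-ReLU-Conv with an identity shortcut and no batch normalization."""
    residual = conv3x3(np.maximum(conv3x3(x, w1, b1), 0.0), w2, b2)
    return x + res_scale * residual


rng = np.random.default_rng(0)
c = 8
x = rng.standard_normal((c, 16, 16))
w1 = 0.1 * rng.standard_normal((c, c, 3, 3))
w2 = 0.1 * rng.standard_normal((c, c, 3, 3))
b1, b2 = np.zeros(c), np.zeros(c)
y = edsr_res_block(x, w1, b1, w2, b2, res_scale=0.1)
print(y.shape)  # (8, 16, 16)
```

Scaling the residual branch keeps each block close to the identity at initialization, which is why it helps when the number of feature channels grows.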

diff --git a/configs/restorers/edvr/README.md b/configs/restorers/edvr/README.md

index 90724c8b81..8d3403a4bf 100644

--- a/configs/restorers/edvr/README.md

+++ b/configs/restorers/edvr/README.md

@@ -1,9 +1,22 @@

# EDVR (CVPRW'2019)

-

+## Abstract

+

+

+

+Video restoration tasks, including super-resolution, deblurring, etc, are drawing increasing attention in the computer vision community. A challenging benchmark named REDS is released in the NTIRE19 Challenge. This new benchmark challenges existing methods from two aspects: (1) how to align multiple frames given large motions, and (2) how to effectively fuse different frames with diverse motion and blur. In this work, we propose a novel Video Restoration framework with Enhanced Deformable networks, termed EDVR, to address these challenges. First, to handle large motions, we devise a Pyramid, Cascading and Deformable (PCD) alignment module, in which frame alignment is done at the feature level using deformable convolutions in a coarse-to-fine manner. Second, we propose a Temporal and Spatial Attention (TSA) fusion module, in which attention is applied both temporally and spatially, so as to emphasize important features for subsequent restoration. Thanks to these modules, our EDVR wins the champions and outperforms the second place by a large margin in all four tracks in the NTIRE19 video restoration and enhancement challenges. EDVR also demonstrates superior performance to state-of-the-art published methods on video super-resolution and deblurring.

+

+

+

+  +

+

+

+

+

-

-EDVR (CVPRW'2019)

+## Citation

+

+

```bibtex

@InProceedings{wang2019edvr,

@@ -15,16 +28,8 @@

}

```

-

-

-## Abstract

-Video restoration tasks, including super-resolution, deblurring, etc, are drawing increasing attention in the computer vision community. A challenging benchmark named REDS is released in the NTIRE19 Challenge. This new benchmark challenges existing methods from two aspects: (1) how to align multiple frames given large motions, and (2) how to effectively fuse different frames with diverse motion and blur. In this work, we propose a novel Video Restoration framework with Enhanced Deformable networks, termed EDVR, to address these challenges. First, to handle large motions, we devise a Pyramid, Cascading and Deformable (PCD) alignment module, in which frame alignment is done at the feature level using deformable convolutions in a coarse-to-fine manner. Second, we propose a Temporal and Spatial Attention (TSA) fusion module, in which attention is applied both temporally and spatially, so as to emphasize important features for subsequent restoration. Thanks to these modules, our EDVR wins the champions and outperforms the second place by a large margin in all four tracks in the NTIRE19 video restoration and enhancement challenges. EDVR also demonstrates superior performance to state-of-the-art published methods on video super-resolution and deblurring.

-

-

-  -

-

+## Results and models

-## Results and Models

Evaluated on RGB channels.

The metrics are `PSNR / SSIM` .
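The EDVR abstract describes a Temporal and Spatial Attention (TSA) fusion module. Its temporal part weights each aligned neighbor frame, per pixel, by the sigmoid of its similarity to the reference frame. The sketch below shows only that temporal weighting; as a simplifying assumption, EDVR's learned embedding convolutions are replaced by the identity, and the subsequent fusion convolution and spatial attention are omitted. The function name `temporal_attention` is hypothetical.

```python
import numpy as np


def temporal_attention(aligned_feats, ref_index):
    """Weight each aligned frame by its per-pixel similarity to the reference frame.

    aligned_feats: (T, C, H, W) features already aligned to the reference frame.
    The learned embedding convolutions of EDVR are replaced by the identity here.
    """
    ref = aligned_feats[ref_index]  # (C, H, W)
    # Dot product over channels at every pixel gives (T, H, W) similarity maps.
    similarity = np.einsum('tchw,chw->thw', aligned_feats, ref)
    weights = 1.0 / (1.0 + np.exp(-similarity))  # sigmoid, values in (0, 1)
    # Broadcast the per-pixel weight over the channel dimension.
    return aligned_feats * weights[:, None, :, :], weights


rng = np.random.default_rng(0)
feats = rng.standard_normal((5, 4, 8, 8))  # 5 frames, 4 channels, 8x8 pixels
weighted, w = temporal_attention(feats, ref_index=2)
print(weighted.shape, w.shape)  # (5, 4, 8, 8) (5, 8, 8)
```

Frames (or regions) that disagree with the reference after alignment get weights near zero, so poorly aligned content contributes less to the fused feature.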

diff --git a/configs/restorers/esrgan/README.md b/configs/restorers/esrgan/README.md

index 6fe03f3e09..bb47dac83a 100644

--- a/configs/restorers/esrgan/README.md

+++ b/configs/restorers/esrgan/README.md

@@ -1,9 +1,22 @@

# ESRGAN (ECCVW'2018)

-

+## Abstract

+

+

+

+The Super-Resolution Generative Adversarial Network (SRGAN) is a seminal work that is capable of generating realistic textures during single image super-resolution. However, the hallucinated details are often accompanied with unpleasant artifacts. To further enhance the visual quality, we thoroughly study three key components of SRGAN - network architecture, adversarial loss and perceptual loss, and improve each of them to derive an Enhanced SRGAN (ESRGAN). In particular, we introduce the Residual-in-Residual Dense Block (RRDB) without batch normalization as the basic network building unit. Moreover, we borrow the idea from relativistic GAN to let the discriminator predict relative realness instead of the absolute value. Finally, we improve the perceptual loss by using the features before activation, which could provide stronger supervision for brightness consistency and texture recovery. Benefiting from these improvements, the proposed ESRGAN achieves consistently better visual quality with more realistic and natural textures than SRGAN and won the first place in the PIRM2018-SR Challenge.

+

+

+

+  +

+

+

+

+

-

-ESRGAN (ECCVW'2018)

+## Citation

+

+

```bibtex

@inproceedings{wang2018esrgan,

@@ -15,16 +28,8 @@

}

```

-

-

-## Abstract

-The Super-Resolution Generative Adversarial Network (SRGAN) is a seminal work that is capable of generating realistic textures during single image super-resolution. However, the hallucinated details are often accompanied with unpleasant artifacts. To further enhance the visual quality, we thoroughly study three key components of SRGAN - network architecture, adversarial loss and perceptual loss, and improve each of them to derive an Enhanced SRGAN (ESRGAN). In particular, we introduce the Residual-in-Residual Dense Block (RRDB) without batch normalization as the basic network building unit. Moreover, we borrow the idea from relativistic GAN to let the discriminator predict relative realness instead of the absolute value. Finally, we improve the perceptual loss by using the features before activation, which could provide stronger supervision for brightness consistency and texture recovery. Benefiting from these improvements, the proposed ESRGAN achieves consistently better visual quality with more realistic and natural textures than SRGAN and won the first place in the PIRM2018-SR Challenge.

-

-

-  -

-

+## Results and models

-## Results and Models

Evaluated on RGB channels, `scale` pixels in each border are cropped before evaluation.

The metrics are `PSNR / SSIM` .
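The ESRGAN abstract mentions a relativistic discriminator that predicts relative rather than absolute realness. In the relativistic average form used by ESRGAN, the critic output is compared against the mean output on the other class, D_Ra(x_r, x_f) = sigmoid(C(x_r) - mean C(x_f)). The NumPy sketch below computes both losses from raw critic scores; the function names are hypothetical, and the log-sigmoid is computed via `np.logaddexp` for numerical stability.

```python
import numpy as np


def log_sigmoid(z):
    # log(sigmoid(z)) = -log(1 + exp(-z)), computed stably.
    return -np.logaddexp(0.0, -z)


def relativistic_losses(c_real, c_fake):
    """Return (discriminator_loss, generator_loss) from raw critic outputs C(x)."""
    c_real = np.asarray(c_real, dtype=np.float64)
    c_fake = np.asarray(c_fake, dtype=np.float64)
    # D_Ra(x_r, x_f) = sigmoid(C(x_r) - mean C(x_f)), and symmetrically for fakes.
    real_vs_fake = c_real - c_fake.mean()
    fake_vs_real = c_fake - c_real.mean()
    # log(1 - sigmoid(z)) equals log_sigmoid(-z).
    d_loss = -(log_sigmoid(real_vs_fake).mean() + log_sigmoid(-fake_vs_real).mean())
    g_loss = -(log_sigmoid(-real_vs_fake).mean() + log_sigmoid(fake_vs_real).mean())
    return float(d_loss), float(g_loss)


d_loss, g_loss = relativistic_losses([0.0, 0.0], [0.0, 0.0])
print(round(d_loss, 4), round(g_loss, 4))  # 1.3863 1.3863
```

Unlike a standard GAN loss, the generator loss here also depends on the critic's scores for real images, so the generator receives gradients from both real and generated data.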

diff --git a/configs/restorers/glean/README.md b/configs/restorers/glean/README.md

index 02b9d9732f..6236dac1e9 100644

--- a/configs/restorers/glean/README.md

+++ b/configs/restorers/glean/README.md

@@ -1,8 +1,22 @@

# GLEAN (CVPR'2021)

+## Abstract

+

+

+

+We show that pre-trained Generative Adversarial Networks (GANs), e.g., StyleGAN, can be used as a latent bank to improve the restoration quality of large-factor image super-resolution (SR). While most existing SR approaches attempt to generate realistic textures through learning with adversarial loss, our method, Generative LatEnt bANk (GLEAN), goes beyond existing practices by directly leveraging rich and diverse priors encapsulated in a pre-trained GAN. But unlike prevalent GAN inversion methods that require expensive image-specific optimization at runtime, our approach only needs a single forward pass to generate the upscaled image. GLEAN can be easily incorporated in a simple encoder-bank-decoder architecture with multi-resolution skip connections. Switching the bank allows the method to deal with images from diverse categories, e.g., cat, building, human face, and car. Images upscaled by GLEAN show clear improvements in terms of fidelity and texture faithfulness in comparison to existing methods.

+

+

+

+  +

+

+

+

+

+

+## Citation

+

-

-GLEAN (CVPR'2021)

```bibtex

@InProceedings{chan2021glean,

@@ -13,18 +27,8 @@

}

```

-

-

-## Abstract

-We show that pre-trained Generative Adversarial Networks (GANs), e.g., StyleGAN, can be used as a latent bank to improve the restoration quality of large-factor image super-resolution (SR). While most existing SR approaches attempt to generate realistic textures through learning with adversarial loss, our method, Generative LatEnt bANk (GLEAN), goes beyond existing practices by directly leveraging rich and diverse priors encapsulated in a pre-trained GAN. But unlike prevalent GAN inversion methods that require expensive image-specific optimization at runtime, our approach only needs a single forward pass to generate the upscaled image. GLEAN can be easily incorporated in a simple encoder-bank-decoder architecture with multi-resolution skip connections. Switching the bank allows the method to deal with images from diverse categories, e.g., cat, building, human face, and car. Images upscaled by GLEAN show clear improvements in terms of fidelity and texture faithfulness in comparison to existing methods.

-

-

-

-  -

-

-

+## Results and models

-## Results and Models

For the meta info used in training and test, please refer to [here](https://github.com/ckkelvinchan/GLEAN). The results are evaluated on RGB channels.

| Method | PSNR | Download |

diff --git a/configs/restorers/iconvsr/README.md b/configs/restorers/iconvsr/README.md

index 83ecf14124..13ed5b7249 100644

--- a/configs/restorers/iconvsr/README.md

+++ b/configs/restorers/iconvsr/README.md

@@ -1,9 +1,24 @@

# IconVSR (CVPR'2021)

-

+## Abstract

+

+

+

+Video super-resolution (VSR) approaches tend to have more components than the image counterparts as they need to exploit the additional temporal dimension. Complex designs are not uncommon. In this study, we wish to untangle the knots and reconsider some most essential components for VSR guided by four basic functionalities, i.e., Propagation, Alignment, Aggregation, and Upsampling. By reusing some existing components added with minimal redesigns, we show a succinct pipeline, BasicVSR, that achieves appealing improvements in terms of speed and restoration quality in comparison to many state-of-the-art algorithms. We conduct systematic analysis to explain how such gain can be obtained and discuss the pitfalls. We further show the extensibility of BasicVSR by presenting an information-refill mechanism and a coupled propagation scheme to facilitate information aggregation. The BasicVSR and its extension, IconVSR, can serve as strong baselines for future VSR approaches.

+

+

+

+  +

+  +

+

+

+

+

+

+

-

-IconVSR (CVPR'2021)

+## Citation

+

+

```bibtex

@InProceedings{chan2021basicvsr,

@@ -14,18 +29,8 @@

}

```

-

-

-## Abstract

-Video super-resolution (VSR) approaches tend to have more components than the image counterparts as they need to exploit the additional temporal dimension. Complex designs are not uncommon. In this study, we wish to untangle the knots and reconsider some most essential components for VSR guided by four basic functionalities, i.e., Propagation, Alignment, Aggregation, and Upsampling. By reusing some existing components added with minimal redesigns, we show a succinct pipeline, BasicVSR, that achieves appealing improvements in terms of speed and restoration quality in comparison to many state-of-the-art algorithms. We conduct systematic analysis to explain how such gain can be obtained and discuss the pitfalls. We further show the extensibility of BasicVSR by presenting an information-refill mechanism and a coupled propagation scheme to facilitate information aggregation. The BasicVSR and its extension, IconVSR, can serve as strong baselines for future VSR approaches.

-

-

-  -

-  -

-

-

-

+## Results and models

-## Results and Models

Evaluated on RGB channels for REDS4 and Y channel for others. The metrics are `PSNR` / `SSIM` .

The pretrained weights of the IconVSR components can be found here: [SPyNet](https://download.openmmlab.com/mmediting/restorers/basicvsr/spynet_20210409-c6c1bd09.pth), [EDVR-M for REDS](https://download.openmmlab.com/mmediting/restorers/iconvsr/edvrm_reds_20210413-3867262f.pth), and [EDVR-M for Vimeo-90K](https://download.openmmlab.com/mmediting/restorers/iconvsr/edvrm_vimeo90k_20210413-e40e99a8.pth).
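The BasicVSR/IconVSR abstract organizes VSR around propagation, alignment, aggregation, and upsampling. The sketch below isolates the bidirectional propagation pattern: one recurrent pass runs from the last frame to the first, another from the first to the last, and the two hidden states are concatenated per frame (aggregation). It is a sketch under stated assumptions: `step` is a hypothetical placeholder for BasicVSR's flow-based alignment plus residual blocks, upsampling is omitted, and IconVSR's information-refill and coupled propagation are not shown.

```python
import numpy as np


def bidirectional_propagation(frames, step):
    """Run a backward and a forward recurrent pass and pair their states per frame.

    frames: sequence of per-frame arrays.
    step(x, h) -> new hidden state (placeholder for alignment + residual blocks).
    """
    n = len(frames)
    backward = [None] * n
    h = np.zeros_like(frames[0])
    for i in reversed(range(n)):  # last frame to first frame
        h = step(frames[i], h)
        backward[i] = h
    outputs = []
    h = np.zeros_like(frames[0])
    for i in range(n):  # first frame to last frame
        h = step(frames[i], h)
        # Aggregation: concatenate backward and forward states for frame i.
        outputs.append(np.concatenate([backward[i], h]))
    return outputs


def ema_step(x, h):
    # Hypothetical toy step: an exponential moving average of the inputs.
    return 0.5 * x + 0.5 * h


frames = [np.array([float(t)]) for t in range(4)]  # values 0, 1, 2, 3
outs = bidirectional_propagation(frames, ema_step)
print(outs[0])  # backward state for frame 0 has seen frames 3, 2, 1, 0
```

Because each output pairs a state that has seen all later frames with one that has seen all earlier frames, every frame can draw on the whole sequence.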

diff --git a/configs/restorers/liif/README.md b/configs/restorers/liif/README.md

index 21cc2b9344..004b03cfc2 100644

--- a/configs/restorers/liif/README.md

+++ b/configs/restorers/liif/README.md

@@ -1,9 +1,22 @@

# LIIF (CVPR'2021)

-

+## Abstract

+

+

+

+How to represent an image? While the visual world is presented in a continuous manner, machines store and see the images in a discrete way with 2D arrays of pixels. In this paper, we seek to learn a continuous representation for images. Inspired by the recent progress in 3D reconstruction with implicit neural representation, we propose Local Implicit Image Function (LIIF), which takes an image coordinate and the 2D deep features around the coordinate as inputs, predicts the RGB value at a given coordinate as an output. Since the coordinates are continuous, LIIF can be presented in arbitrary resolution. To generate the continuous representation for images, we train an encoder with LIIF representation via a self-supervised task with super-resolution. The learned continuous representation can be presented in arbitrary resolution even extrapolate to x30 higher resolution, where the training tasks are not provided. We further show that LIIF representation builds a bridge between discrete and continuous representation in 2D, it naturally supports the learning tasks with size-varied image ground-truths and significantly outperforms the method with resizing the ground-truths.

+

+

+

+  +

+

+

+

+

-

-LIIF (CVPR'2021)

+## Citation

+

+

```bibtex

@inproceedings{chen2021learning,

@@ -15,17 +28,8 @@

}

```

-

-

-## Abstract

-How to represent an image? While the visual world is presented in a continuous manner, machines store and see the images in a discrete way with 2D arrays of pixels. In this paper, we seek to learn a continuous representation for images. Inspired by the recent progress in 3D reconstruction with implicit neural representation, we propose Local Implicit Image Function (LIIF), which takes an image coordinate and the 2D deep features around the coordinate as inputs, predicts the RGB value at a given coordinate as an output. Since the coordinates are continuous, LIIF can be presented in arbitrary resolution. To generate the continuous representation for images, we train an encoder with LIIF representation via a self-supervised task with super-resolution. The learned continuous representation can be presented in arbitrary resolution even extrapolate to x30 higher resolution, where the training tasks are not provided. We further show that LIIF representation builds a bridge between discrete and continuous representation in 2D, it naturally supports the learning tasks with size-varied image ground-truths and significantly outperforms the method with resizing the ground-truths.

-

-

-

-  -

-

+## Results and models

-## Results and Models

| Method | scale | Set5 PSNR / SSIM | Set14 PSNR / SSIM | DIV2K PSNR / SSIM | Download |

| :--------------------------------------------------------------------------------------------------------------: | :---: | :-----------------: | :------------------: | :-------------------: | :-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

| [liif_edsr_norm_c64b16_g1_1000k_div2k](/configs/restorers/liif/liif_edsr_norm_c64b16_g1_1000k_div2k.py) | x2 | 35.7131 / 0.9366 | 31.5579 / 0.8889 | 34.6647 / 0.9355 | [model](https://download.openmmlab.com/mmediting/restorers/liif/liif_edsr_norm_c64b16_g1_1000k_div2k_20210715-ab7ce3fc.pth) \| [log](https://download.openmmlab.com/mmediting/restorers/liif/liif_edsr_norm_c64b16_g1_1000k_div2k_20210715-ab7ce3fc.log.json) |
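The LIIF abstract describes a function that takes an image coordinate and the deep features around it and predicts the RGB value there. The sketch below shows the core query step under simplifying assumptions: it finds the nearest latent code in a feature grid spanning [-1, 1] x [-1, 1] and concatenates it with the query's offset from that code's cell center. The decoding MLP, feature unfolding, local ensemble, and cell decoding used by the full method are omitted, and the function name `liif_query_inputs` is hypothetical.

```python
import numpy as np


def liif_query_inputs(feat, coord):
    """Build LIIF-style MLP inputs for one query coordinate.

    feat: (H, W, C) latent feature grid covering [-1, 1] x [-1, 1].
    coord: (y, x) continuous coordinate in [-1, 1].
    Returns (mlp_input, nearest_index).
    """
    h, w, _ = feat.shape
    # The centre of cell i along an axis of length n is -1 + (2 * i + 1) / n.
    iy = int(np.clip(np.floor((coord[0] + 1) / 2 * h), 0, h - 1))
    ix = int(np.clip(np.floor((coord[1] + 1) / 2 * w), 0, w - 1))
    center = np.array([-1 + (2 * iy + 1) / h, -1 + (2 * ix + 1) / w])
    rel_coord = np.asarray(coord, dtype=np.float64) - center
    # A decoding MLP (not shown) would map [z*, x - v*] to an RGB value.
    return np.concatenate([feat[iy, ix], rel_coord]), (iy, ix)


feat = np.arange(4 * 4 * 2, dtype=np.float64).reshape(4, 4, 2)
inp, idx = liif_query_inputs(feat, (0.25, -0.75))
print(idx, inp)
```

Because the coordinate is continuous, the same grid can be queried at any output resolution, which is what allows arbitrary-scale upsampling.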

diff --git a/configs/restorers/rdn/README.md b/configs/restorers/rdn/README.md

index d0d6ddef7b..4c082787a7 100644

--- a/configs/restorers/rdn/README.md

+++ b/configs/restorers/rdn/README.md

@@ -1,8 +1,22 @@

# RDN (CVPR'2018)

+## Abstract

+

+

+

+A very deep convolutional neural network (CNN) has recently achieved great success for image super-resolution (SR) and offered hierarchical features as well. However, most deep CNN based SR models do not make full use of the hierarchical features from the original low-resolution (LR) images, thereby achieving relatively-low performance. In this paper, we propose a novel residual dense network (RDN) to address this problem in image SR. We fully exploit the hierarchical features from all the convolutional layers. Specifically, we propose residual dense block (RDB) to extract abundant local features via dense connected convolutional layers. RDB further allows direct connections from the state of preceding RDB to all the layers of current RDB, leading to a contiguous memory (CM) mechanism. Local feature fusion in RDB is then used to adaptively learn more effective features from preceding and current local features and stabilizes the training of wider network. After fully obtaining dense local features, we use global feature fusion to jointly and adaptively learn global hierarchical features in a holistic way. Extensive experiments on benchmark datasets with different degradation models show that our RDN achieves favorable performance against state-of-the-art methods.

+

+

+

+  +

+

+

+

+

+

+## Citation

+

-

-RDN (CVPR'2018)

```bibtex

@inproceedings{zhang2018residual,

@@ -14,16 +28,8 @@

}

```

-

-

-## Abstract

-A very deep convolutional neural network (CNN) has recently achieved great success for image super-resolution (SR) and offered hierarchical features as well. However, most deep CNN based SR models do not make full use of the hierarchical features from the original low-resolution (LR) images, thereby achieving relatively-low performance. In this paper, we propose a novel residual dense network (RDN) to address this problem in image SR. We fully exploit the hierarchical features from all the convolutional layers. Specifically, we propose residual dense block (RDB) to extract abundant local features via dense connected convolutional layers. RDB further allows direct connections from the state of preceding RDB to all the layers of current RDB, leading to a contiguous memory (CM) mechanism. Local feature fusion in RDB is then used to adaptively learn more effective features from preceding and current local features and stabilizes the training of wider network. After fully obtaining dense local features, we use global feature fusion to jointly and adaptively learn global hierarchical features in a holistic way. Extensive experiments on benchmark datasets with different degradation models show that our RDN achieves favorable performance against state-of-the-art methods.

-

-

-  -

-

+## Results and models

-## Results and Models

Evaluated on RGB channels, `scale` pixels in each border are cropped before evaluation.

The metrics are `PSNR / SSIM` .
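The RDN abstract describes a residual dense block (RDB): every layer receives the block input and all preceding layer outputs (dense connections), a fusion layer merges them (local feature fusion), and the result is added back to the input (local residual learning). In the minimal NumPy sketch below, the block input `x` plays the role of the preceding RDB's output. As a stated simplification, the 3x3 convolutions inside the block are replaced by 1x1 convolutions (channel mixing) to keep the code short; the function names and the sizes `g0`, `g`, `n_layers` are hypothetical.

```python
import numpy as np


def conv1x1(x, weight):
    """1x1 convolution without bias. x: (C_in, H, W), weight: (C_out, C_in)."""
    return np.einsum('oi,ihw->ohw', weight, x)


def residual_dense_block(x, layer_weights, fusion_weight):
    """Dense connections + local feature fusion + local residual learning.

    layer_weights[k]: (G, G0 + k * G) weights of the k-th layer.
    fusion_weight: (G0, G0 + len(layer_weights) * G) weights of the fusion layer.
    """
    features = [x]
    for weight in layer_weights:
        # Each layer sees the block input and all preceding layer outputs.
        stacked = np.concatenate(features, axis=0)
        features.append(np.maximum(conv1x1(stacked, weight), 0.0))
    # Local feature fusion: a 1x1 conv back to G0 channels.
    fused = conv1x1(np.concatenate(features, axis=0), fusion_weight)
    return x + fused  # local residual learning


rng = np.random.default_rng(0)
g0, g, n_layers = 8, 4, 3
x = rng.standard_normal((g0, 6, 6))
layers = [0.1 * rng.standard_normal((g, g0 + k * g)) for k in range(n_layers)]
fusion = 0.1 * rng.standard_normal((g0, g0 + n_layers * g))
print(residual_dense_block(x, layers, fusion).shape)  # (8, 6, 6)
```

The input width of layer k grows by `g` channels per preceding layer, and the fusion layer brings the width back to `g0` so that blocks can be stacked.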

diff --git a/configs/restorers/real_esrgan/README.md b/configs/restorers/real_esrgan/README.md

index 17ad1c3d10..fc299836d3 100644

--- a/configs/restorers/real_esrgan/README.md

+++ b/configs/restorers/real_esrgan/README.md

@@ -1,9 +1,22 @@

# Real-ESRGAN (ICCVW'2021)

-

+## Abstract

+

+

+

+Though many attempts have been made in blind super-resolution to restore low-resolution images with unknown and complex degradations, they are still far from addressing general real-world degraded images. In this work, we extend the powerful ESRGAN to a practical restoration application (namely, Real-ESRGAN), which is trained with pure synthetic data. Specifically, a high-order degradation modeling process is introduced to better simulate complex real-world degradations. We also consider the common ringing and overshoot artifacts in the synthesis process. In addition, we employ a U-Net discriminator with spectral normalization to increase discriminator capability and stabilize the training dynamics. Extensive comparisons have shown its superior visual performance than prior works on various real datasets. We also provide efficient implementations to synthesize training pairs on the fly.

+

+

+

+  +

+

+

+

+

-

-Real-ESRGAN (ICCVW'2021)

+## Citation

+

+

```bibtex

@inproceedings{wang2021real,

@@ -15,16 +28,8 @@

}

```

-

-

-## Abstract

-Though many attempts have been made in blind super-resolution to restore low-resolution images with unknown and complex degradations, they are still far from addressing general real-world degraded images. In this work, we extend the powerful ESRGAN to a practical restoration application (namely, Real-ESRGAN), which is trained with pure synthetic data. Specifically, a high-order degradation modeling process is introduced to better simulate complex real-world degradations. We also consider the common ringing and overshoot artifacts in the synthesis process. In addition, we employ a U-Net discriminator with spectral normalization to increase discriminator capability and stabilize the training dynamics. Extensive comparisons have shown its superior visual performance than prior works on various real datasets. We also provide efficient implementations to synthesize training pairs on the fly.

-

-

-  -

-

+## Results and models

-## Results and Models

Evaluated on RGB channels. The metrics are `PSNR/SSIM`.

| Method | Set5 | Download |

diff --git a/configs/restorers/srcnn/README.md b/configs/restorers/srcnn/README.md

index 420b9e9a90..0adc4a8ecc 100644

--- a/configs/restorers/srcnn/README.md

+++ b/configs/restorers/srcnn/README.md

@@ -1,8 +1,22 @@

# SRCNN (TPAMI'2015)

+## Abstract

+

+

+

+We propose a deep learning method for single image super-resolution (SR). Our method directly learns an end-to-end mapping between the low/high-resolution images. The mapping is represented as a deep convolutional neural network (CNN) that takes the low-resolution image as the input and outputs the high-resolution one. We further show that traditional sparse-coding-based SR methods can also be viewed as a deep convolutional network. But unlike traditional methods that handle each component separately, our method jointly optimizes all layers. Our deep CNN has a lightweight structure, yet demonstrates state-of-the-art restoration quality, and achieves fast speed for practical on-line usage. We explore different network structures and parameter settings to achieve trade-offs between performance and speed. Moreover, we extend our network to cope with three color channels simultaneously, and show better overall reconstruction quality.

+

+

+

+  +

+

+

+

+

+

+## Citation

+

-

-SRCNN (TPAMI'2015)

```bibtex

@article{dong2015image,

@@ -17,16 +31,8 @@

}

```

-

-

-## Abstract

-We propose a deep learning method for single image super-resolution (SR). Our method directly learns an end-to-end mapping between the low/high-resolution images. The mapping is represented as a deep convolutional neural network (CNN) that takes the low-resolution image as the input and outputs the high-resolution one. We further show that traditional sparse-coding-based SR methods can also be viewed as a deep convolutional network. But unlike traditional methods that handle each component separately, our method jointly optimizes all layers. Our deep CNN has a lightweight structure, yet demonstrates state-of-the-art restoration quality, and achieves fast speed for practical on-line usage. We explore different network structures and parameter settings to achieve trade-offs between performance and speed. Moreover, we extend our network to cope with three color channels simultaneously, and show better overall reconstruction quality.

-

-

-  -

-

+## Results and models

-## Results and Models

Evaluated on RGB channels, `scale` pixels in each border are cropped before evaluation.

The metrics are `PSNR / SSIM` .
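The SRCNN abstract stresses that the network has a lightweight structure. A quick parameter count makes this concrete. The sketch assumes the paper's base setting (not stated in this README) of three layers with kernel sizes 9, 1, 5 and 64 and 32 filters on a single channel, and also shows a 3-channel variant in the spirit of the abstract's colour extension. The function name `srcnn_num_params` is hypothetical.

```python
def srcnn_num_params(channels=(1, 64, 32, 1), kernel_sizes=(9, 1, 5), bias=True):
    """Count the parameters of a three-layer SRCNN-style network."""
    total = 0
    for c_in, c_out, k in zip(channels[:-1], channels[1:], kernel_sizes):
        total += c_in * c_out * k * k  # convolution weights
        if bias:
            total += c_out  # one bias per output channel
    return total


print(srcnn_num_params(bias=False))              # 8032
print(srcnn_num_params(channels=(3, 64, 32, 3)))  # 20099
```

For comparison, a single residual block of a 64-channel EDSR-style network (two 3x3 convolutions) already has about 74K weights.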

diff --git a/configs/restorers/srresnet_srgan/README.md b/configs/restorers/srresnet_srgan/README.md

index b24949097c..e08fdbf3b1 100644

--- a/configs/restorers/srresnet_srgan/README.md

+++ b/configs/restorers/srresnet_srgan/README.md

@@ -1,8 +1,22 @@

# SRGAN (CVPR'2016)

+## Abstract

+

+

+

+Despite the breakthroughs in accuracy and speed of single image super-resolution using faster and deeper convolutional neural networks, one central problem remains largely unsolved: how do we recover the finer texture details when we super-resolve at large upscaling factors? The behavior of optimization-based super-resolution methods is principally driven by the choice of the objective function. Recent work has largely focused on minimizing the mean squared reconstruction error. The resulting estimates have high peak signal-to-noise ratios, but they are often lacking high-frequency details and are perceptually unsatisfying in the sense that they fail to match the fidelity expected at the higher resolution. In this paper, we present SRGAN, a generative adversarial network (GAN) for image super-resolution (SR). To our knowledge, it is the first framework capable of inferring photo-realistic natural images for 4x upscaling factors. To achieve this, we propose a perceptual loss function which consists of an adversarial loss and a content loss. The adversarial loss pushes our solution to the natural image manifold using a discriminator network that is trained to differentiate between the super-resolved images and original photo-realistic images. In addition, we use a content loss motivated by perceptual similarity instead of similarity in pixel space. Our deep residual network is able to recover photo-realistic textures from heavily downsampled images on public benchmarks. An extensive mean-opinion-score (MOS) test shows hugely significant gains in perceptual quality using SRGAN. The MOS scores obtained with SRGAN are closer to those of the original high-resolution images than to those obtained with any state-of-the-art method.

+

+

+

+  +

+

+

+

+

+

+## Citation

+

-

-SRGAN (CVPR'2016)

```bibtex

@inproceedings{ledig2016photo,

@@ -13,16 +27,8 @@

}

```

-

-

-## Abstract

-Despite the breakthroughs in accuracy and speed of single image super-resolution using faster and deeper convolutional neural networks, one central problem remains largely unsolved: how do we recover the finer texture details when we super-resolve at large upscaling factors? The behavior of optimization-based super-resolution methods is principally driven by the choice of the objective function. Recent work has largely focused on minimizing the mean squared reconstruction error. The resulting estimates have high peak signal-to-noise ratios, but they are often lacking high-frequency details and are perceptually unsatisfying in the sense that they fail to match the fidelity expected at the higher resolution. In this paper, we present SRGAN, a generative adversarial network (GAN) for image super-resolution (SR). To our knowledge, it is the first framework capable of inferring photo-realistic natural images for 4x upscaling factors. To achieve this, we propose a perceptual loss function which consists of an adversarial loss and a content loss. The adversarial loss pushes our solution to the natural image manifold using a discriminator network that is trained to differentiate between the super-resolved images and original photo-realistic images. In addition, we use a content loss motivated by perceptual similarity instead of similarity in pixel space. Our deep residual network is able to recover photo-realistic textures from heavily downsampled images on public benchmarks. An extensive mean-opinion-score (MOS) test shows hugely significant gains in perceptual quality using SRGAN. The MOS scores obtained with SRGAN are closer to those of the original high-resolution images than to those obtained with any state-of-the-art method.

-

-

-  -

-

+## Results and models

-## Results and Models

Evaluated on RGB channels, `scale` pixels in each border are cropped before evaluation.

The metrics are `PSNR / SSIM` .
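The SRGAN abstract defines a perceptual loss made of a content loss (similarity in a feature space rather than pixel space) and an adversarial loss. The sketch below combines them with the 1e-3 adversarial weight used in the SRGAN paper. Assumptions: the feature maps are passed in precomputed (SRGAN uses VGG features), means are used instead of sums, and `eps` guards against `log(0)`. The function name is hypothetical.

```python
import numpy as np


def srgan_perceptual_loss(feat_sr, feat_hr, d_sr, adv_weight=1e-3, eps=1e-12):
    """Perceptual loss = content loss + adv_weight * adversarial loss.

    feat_sr, feat_hr: feature maps of the SR and HR images from a fixed network
    (VGG in SRGAN; any extractor can be plugged in for this sketch).
    d_sr: discriminator probabilities D(G(I_LR)) for a batch of SR images.
    """
    content = np.mean((np.asarray(feat_sr) - np.asarray(feat_hr)) ** 2)
    # Generator adversarial term: -log D(G(I_LR)), averaged over the batch.
    adversarial = np.mean(-np.log(np.asarray(d_sr, dtype=np.float64) + eps))
    return float(content + adv_weight * adversarial)


loss = srgan_perceptual_loss(np.ones(4), np.zeros(4), [0.5])
print(round(loss, 6))  # 1.000693
```

The small adversarial weight keeps the content term dominant, while the adversarial term pushes outputs toward the natural image manifold.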

diff --git a/configs/restorers/tdan/README.md b/configs/restorers/tdan/README.md

index 573d564059..ace3594ab0 100644

--- a/configs/restorers/tdan/README.md

+++ b/configs/restorers/tdan/README.md

@@ -1,8 +1,22 @@

# TDAN (CVPR'2020)

+## Abstract

+

+

+

+Video super-resolution (VSR) aims to restore a photo-realistic high-resolution (HR) video frame from both its corresponding low-resolution (LR) frame (reference frame) and multiple neighboring frames (supporting frames). Due to varying motion of cameras or objects, the reference frame and each support frame are not aligned. Therefore, temporal alignment is a challenging yet important problem for VSR. Previous VSR methods usually utilize optical flow between the reference frame and each supporting frame to wrap the supporting frame for temporal alignment. Therefore, the performance of these image-level wrapping-based models will highly depend on the prediction accuracy of optical flow, and inaccurate optical flow will lead to artifacts in the wrapped supporting frames, which also will be propagated into the reconstructed HR video frame. To overcome the limitation, in this paper, we propose a temporal deformable alignment network (TDAN) to adaptively align the reference frame and each supporting frame at the feature level without computing optical flow. The TDAN uses features from both the reference frame and each supporting frame to dynamically predict offsets of sampling convolution kernels. By using the corresponding kernels, TDAN transforms supporting frames to align with the reference frame. To predict the HR video frame, a reconstruction network taking aligned frames and the reference frame is utilized. Experimental results demonstrate the effectiveness of the proposed TDAN-based VSR model.

+

+

+

+

+

+

+

+

+

+## Citation

+

-

-TDAN (CVPR'2020)

```bibtex

@InProceedings{tian2020tdan,

@@ -13,16 +27,8 @@

}

```

-

-

-## Abstract

-Video super-resolution (VSR) aims to restore a photo-realistic high-resolution (HR) video frame from both its corresponding low-resolution (LR) frame (reference frame) and multiple neighboring frames (supporting frames). Due to varying motion of cameras or objects, the reference frame and each support frame are not aligned. Therefore, temporal alignment is a challenging yet important problem for VSR. Previous VSR methods usually utilize optical flow between the reference frame and each supporting frame to wrap the supporting frame for temporal alignment. Therefore, the performance of these image-level wrapping-based models will highly depend on the prediction accuracy of optical flow, and inaccurate optical flow will lead to artifacts in the wrapped supporting frames, which also will be propagated into the reconstructed HR video frame. To overcome the limitation, in this paper, we propose a temporal deformable alignment network (TDAN) to adaptively align the reference frame and each supporting frame at the feature level without computing optical flow. The TDAN uses features from both the reference frame and each supporting frame to dynamically predict offsets of sampling convolution kernels. By using the corresponding kernels, TDAN transforms supporting frames to align with the reference frame. To predict the HR video frame, a reconstruction network taking aligned frames and the reference frame is utilized. Experimental results demonstrate the effectiveness of the proposed TDAN-based VSR model.

-

-

-

-

+## Results and models

-## Results and Models

Evaluated on Y-channel. 8 pixels in each border are cropped before evaluation.

The metrics are `PSNR / SSIM` .

diff --git a/configs/restorers/tof/README.md b/configs/restorers/tof/README.md

index 50fc1e1459..0f49bf0326 100644

--- a/configs/restorers/tof/README.md

+++ b/configs/restorers/tof/README.md

@@ -1,8 +1,22 @@

# TOFlow (IJCV'2019)

+## Abstract

+

+

+

+Many video enhancement algorithms rely on optical flow to register frames in a video sequence. Precise flow estimation is however intractable; and optical flow itself is often a sub-optimal representation for particular video processing tasks. In this paper, we propose task-oriented flow (TOFlow), a motion representation learned in a self-supervised, task-specific manner. We design a neural network with a trainable motion estimation component and a video processing component, and train them jointly to learn the task-oriented flow. For evaluation, we build Vimeo-90K, a large-scale, high-quality video dataset for low-level video processing. TOFlow outperforms traditional optical flow on standard benchmarks as well as our Vimeo-90K dataset in three video processing tasks: frame interpolation, video denoising/deblocking, and video super-resolution.

+

+

+

+

+

+

+

+

+

+## Citation

+

-

-TOFlow (IJCV'2019)

```bibtex

@article{xue2019video,

@@ -17,16 +31,8 @@

}

```

-

-

-## Abstract

-Many video enhancement algorithms rely on optical flow to register frames in a video sequence. Precise flow estimation is however intractable; and optical flow itself is often a sub-optimal representation for particular video processing tasks. In this paper, we propose task-oriented flow (TOFlow), a motion representation learned in a self-supervised, task-specific manner. We design a neural network with a trainable motion estimation component and a video processing component, and train them jointly to learn the task-oriented flow. For evaluation, we build Vimeo-90K, a large-scale, high-quality video dataset for low-level video processing. TOFlow outperforms traditional optical flow on standard benchmarks as well as our Vimeo-90K dataset in three video processing tasks: frame interpolation, video denoising/deblocking, and video super-resolution.

-

-

-

-

+## Results and models

-## Results and Models

Evaluated on RGB channels.

The metrics are `PSNR / SSIM` .

diff --git a/configs/restorers/ttsr/README.md b/configs/restorers/ttsr/README.md

index df82a9c4d6..8e8fb261c4 100644

--- a/configs/restorers/ttsr/README.md

+++ b/configs/restorers/ttsr/README.md

@@ -1,8 +1,22 @@

# TTSR (CVPR'2020)

+## Abstract

+

+

+

+We study on image super-resolution (SR), which aims to recover realistic textures from a low-resolution (LR) image. Recent progress has been made by taking high-resolution images as references (Ref), so that relevant textures can be transferred to LR images. However, existing SR approaches neglect to use attention mechanisms to transfer high-resolution (HR) textures from Ref images, which limits these approaches in challenging cases. In this paper, we propose a novel Texture Transformer Network for Image Super-Resolution (TTSR), in which the LR and Ref images are formulated as queries and keys in a transformer, respectively. TTSR consists of four closely-related modules optimized for image generation tasks, including a learnable texture extractor by DNN, a relevance embedding module, a hard-attention module for texture transfer, and a soft-attention module for texture synthesis. Such a design encourages joint feature learning across LR and Ref images, in which deep feature correspondences can be discovered by attention, and thus accurate texture features can be transferred. The proposed texture transformer can be further stacked in a cross-scale way, which enables texture recovery from different levels (e.g., from 1x to 4x magnification). Extensive experiments show that TTSR achieves significant improvements over state-of-the-art approaches on both quantitative and qualitative evaluations.

+

+

+

+

+

+

+

+

+

+## Citation

+

-

-TTSR (CVPR'2020)

```bibtex

@inproceedings{yang2020learning,

@@ -14,16 +28,8 @@

}

```

-

-

-## Abstract

-We study on image super-resolution (SR), which aims to recover realistic textures from a low-resolution (LR) image. Recent progress has been made by taking high-resolution images as references (Ref), so that relevant textures can be transferred to LR images. However, existing SR approaches neglect to use attention mechanisms to transfer high-resolution (HR) textures from Ref images, which limits these approaches in challenging cases. In this paper, we propose a novel Texture Transformer Network for Image Super-Resolution (TTSR), in which the LR and Ref images are formulated as queries and keys in a transformer, respectively. TTSR consists of four closely-related modules optimized for image generation tasks, including a learnable texture extractor by DNN, a relevance embedding module, a hard-attention module for texture transfer, and a soft-attention module for texture synthesis. Such a design encourages joint feature learning across LR and Ref images, in which deep feature correspondences can be discovered by attention, and thus accurate texture features can be transferred. The proposed texture transformer can be further stacked in a cross-scale way, which enables texture recovery from different levels (e.g., from 1x to 4x magnification). Extensive experiments show that TTSR achieves significant improvements over state-of-the-art approaches on both quantitative and qualitative evaluations.

-

-

-

-

+## Results and models

-## Results and Models

Evaluated on RGB channels, `scale` pixels in each border are cropped before evaluation.

The metrics are `PSNR / SSIM` .

diff --git a/configs/synthesizers/cyclegan/README.md b/configs/synthesizers/cyclegan/README.md

index 2a2de24cfe..64ed9bb113 100644

--- a/configs/synthesizers/cyclegan/README.md

+++ b/configs/synthesizers/cyclegan/README.md

@@ -1,8 +1,22 @@

# CycleGAN (ICCV'2017)

+## Abstract

+

+

+

+Image-to-image translation is a class of vision and graphics problems where the goal is to learn the mapping between an input image and an output image using a training set of aligned image pairs. However, for many tasks, paired training data will not be available. We present an approach for learning to translate an image from a source domain X to a target domain Y in the absence of paired examples. Our goal is to learn a mapping G:X→Y such that the distribution of images from G(X) is indistinguishable from the distribution Y using an adversarial loss. Because this mapping is highly under-constrained, we couple it with an inverse mapping F:Y→X and introduce a cycle consistency loss to push F(G(X))≈X (and vice versa). Qualitative results are presented on several tasks where paired training data does not exist, including collection style transfer, object transfiguration, season transfer, photo enhancement, etc. Quantitative comparisons against several prior methods demonstrate the superiority of our approach.

+

+

+

+

+

+

+

+

+

+## Citation

+

-

-CycleGAN (ICCV'2017)

```bibtex

@inproceedings{zhu2017unpaired,

@@ -14,19 +28,7 @@

}

```

-

-

-

-

-## Abstract

-Image-to-image translation is a class of vision and graphics problems where the goal is to learn the mapping between an input image and an output image using a training set of aligned image pairs. However, for many tasks, paired training data will not be available. We present an approach for learning to translate an image from a source domain X to a target domain Y in the absence of paired examples. Our goal is to learn a mapping G:X→Y such that the distribution of images from G(X) is indistinguishable from the distribution Y using an adversarial loss. Because this mapping is highly under-constrained, we couple it with an inverse mapping F:Y→X and introduce a cycle consistency loss to push F(G(X))≈X (and vice versa). Qualitative results are presented on several tasks where paired training data does not exist, including collection style transfer, object transfiguration, season transfer, photo enhancement, etc. Quantitative comparisons against several prior methods demonstrate the superiority of our approach.

-

-

-

-

-

-

-## Results

+## Results and models

We use `FID` and `IS` metrics to evaluate the generation performance of CycleGAN.