diff --git a/docs/development/gateway/api.mdx b/_DEPRECATED/gateway/api.mdx

similarity index 100%

rename from docs/development/gateway/api.mdx

rename to _DEPRECATED/gateway/api.mdx

diff --git a/docs/development/gateway/caching-system.mdx b/_DEPRECATED/gateway/caching-system.mdx

similarity index 100%

rename from docs/development/gateway/caching-system.mdx

rename to _DEPRECATED/gateway/caching-system.mdx

diff --git a/docs/development/gateway/device-management.mdx b/_DEPRECATED/gateway/device-management.mdx

similarity index 100%

rename from docs/development/gateway/device-management.mdx

rename to _DEPRECATED/gateway/device-management.mdx

diff --git a/docs/development/gateway/enterprise-management.mdx b/_DEPRECATED/gateway/enterprise-management.mdx

similarity index 100%

rename from docs/development/gateway/enterprise-management.mdx

rename to _DEPRECATED/gateway/enterprise-management.mdx

diff --git a/docs/development/gateway/index.mdx b/_DEPRECATED/gateway/index.mdx

similarity index 100%

rename from docs/development/gateway/index.mdx

rename to _DEPRECATED/gateway/index.mdx

diff --git a/docs/development/gateway/mcp.mdx b/_DEPRECATED/gateway/mcp.mdx

similarity index 100%

rename from docs/development/gateway/mcp.mdx

rename to _DEPRECATED/gateway/mcp.mdx

diff --git a/docs/development/gateway/meta.json b/_DEPRECATED/gateway/meta.json

similarity index 100%

rename from docs/development/gateway/meta.json

rename to _DEPRECATED/gateway/meta.json

diff --git a/docs/development/gateway/oauth.mdx b/_DEPRECATED/gateway/oauth.mdx

similarity index 100%

rename from docs/development/gateway/oauth.mdx

rename to _DEPRECATED/gateway/oauth.mdx

diff --git a/docs/development/gateway/process-management.mdx b/_DEPRECATED/gateway/process-management.mdx

similarity index 100%

rename from docs/development/gateway/process-management.mdx

rename to _DEPRECATED/gateway/process-management.mdx

diff --git a/docs/development/gateway/security.mdx b/_DEPRECATED/gateway/security.mdx

similarity index 100%

rename from docs/development/gateway/security.mdx

rename to _DEPRECATED/gateway/security.mdx

diff --git a/docs/development/gateway/session-management.mdx b/_DEPRECATED/gateway/session-management.mdx

similarity index 100%

rename from docs/development/gateway/session-management.mdx

rename to _DEPRECATED/gateway/session-management.mdx

diff --git a/docs/development/gateway/sse-transport.mdx b/_DEPRECATED/gateway/sse-transport.mdx

similarity index 100%

rename from docs/development/gateway/sse-transport.mdx

rename to _DEPRECATED/gateway/sse-transport.mdx

diff --git a/docs/development/gateway/structure.mdx b/_DEPRECATED/gateway/structure.mdx

similarity index 100%

rename from docs/development/gateway/structure.mdx

rename to _DEPRECATED/gateway/structure.mdx

diff --git a/docs/development/gateway/teams.mdx b/_DEPRECATED/gateway/teams.mdx

similarity index 100%

rename from docs/development/gateway/teams.mdx

rename to _DEPRECATED/gateway/teams.mdx

diff --git a/docs/development/gateway/tech-stack.mdx b/_DEPRECATED/gateway/tech-stack.mdx

similarity index 100%

rename from docs/development/gateway/tech-stack.mdx

rename to _DEPRECATED/gateway/tech-stack.mdx

diff --git a/docs/development/gateway/testing.mdx b/_DEPRECATED/gateway/testing.mdx

similarity index 100%

rename from docs/development/gateway/testing.mdx

rename to _DEPRECATED/gateway/testing.mdx

diff --git a/docs/architecture.mdx b/docs/architecture.mdx

index 2b00572..e6fcd5e 100644

--- a/docs/architecture.mdx

+++ b/docs/architecture.mdx

@@ -11,7 +11,7 @@ import { Zap, Shield, Monitor, Cloud, Settings, Users } from 'lucide-react';

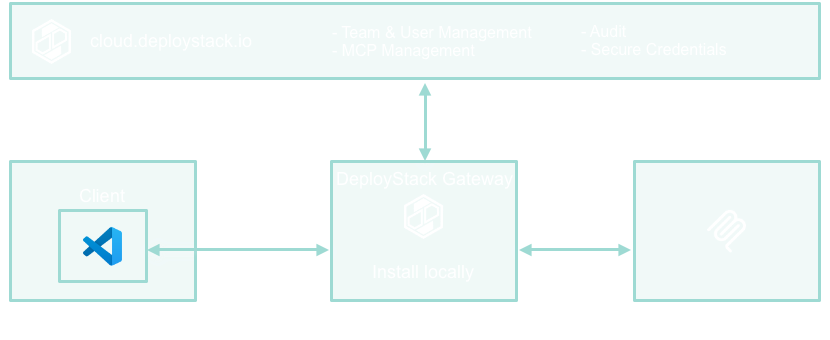

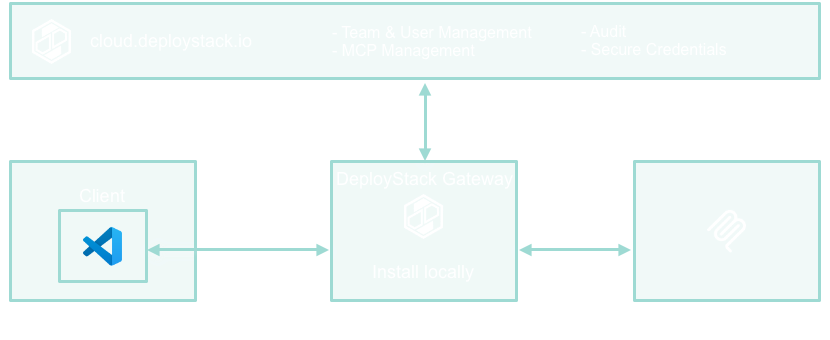

# DeployStack Architecture

-DeployStack transforms MCP from individual developer tools into enterprise-ready infrastructure through a sophisticated **Control Plane / Data Plane architecture**. Our platform eliminates configuration complexity, provides secure credential management, and offers complete organizational visibility for teams of any size.

+DeployStack transforms MCP from individual developer tools into enterprise-ready infrastructure through a sophisticated **Control Plane / Satellite architecture**. Our platform eliminates installation friction, provides secure credential management, and offers complete organizational visibility for teams of any size.

## The Problem: MCP Without Management

@@ -40,17 +40,17 @@ Traditional MCP implementation creates significant organizational challenges:

- **Tool Discovery**: Developers waste time finding and configuring tools individually

- **No Standardization**: No central catalog or approved tool list for organizational use

-## The Solution: Enterprise Control Plane

+## The Solution: MCP-as-a-Service

-DeployStack introduces a **Control Plane / Data Plane architecture** that brings enterprise-grade management to the MCP ecosystem while maintaining the performance and flexibility developers expect.

+DeployStack introduces a **Control Plane / Satellite architecture** that brings enterprise-grade management to the MCP ecosystem with zero installation friction through managed satellite infrastructure.

## Core Components

@@ -64,16 +64,16 @@ DeployStack introduces a **Control Plane / Data Plane architecture** that brings

}

- title="Data Plane"

+ title="Satellite Infrastructure"

>

- **DeployStack Gateway** - Local secure proxy managing persistent MCP server processes with credential injection

+ **Global & Team Satellites** - Managed MCP infrastructure providing instant access with zero installation

}

title="Developer Interface"

>

- **Agent Integration** - VS Code, CLI tools, and other MCP clients connect seamlessly through the gateway

+ **Simple URL Configuration** - VS Code, Claude, and other MCP clients connect via an HTTPS URL with OAuth

@@ -99,78 +99,61 @@ The cloud-based control plane provides centralized management for all MCP infras

- **Cost Tracking**: Monitor expensive API usage across teams and optimize spending

- **Audit Trails**: Complete logging of all MCP server interactions for compliance

-### Data Plane: DeployStack Gateway

+### Satellite Infrastructure: Global & Team Satellites

-The local gateway acts as an intelligent proxy and process manager running on each developer's machine:

+The satellite infrastructure provides managed MCP services through two deployment models:

-#### Persistent Process Management

-- **Background Processes**: All configured MCP servers run as [persistent background processes](/development/gateway/process-management) when the gateway starts

-- **Instant Availability**: Tools are immediately available without process spawning delays

-- **Language Agnostic**: Supports MCP servers written in Node.js, Python, Go, Rust, or any language

+#### Global Satellites (Managed by DeployStack)

+- **Zero Installation**: Access via simple HTTPS URL configuration

+- **Auto-Scaling**: Handles traffic spikes automatically

+- **Multi-Region**: Low-latency global availability

+- **Fully Featured**: Complete MCP server access and team management

-#### Dual Transport Architecture

-The gateway implements sophisticated transport protocols for maximum compatibility:

+#### Team Satellites (Customer-Deployed)

+- **On-Premises Deployment**: Runs within corporate networks for access to internal resources

+- **Complete Team Isolation**: Linux namespaces and cgroups for security

+- **Internal Resources**: Connect to company databases, APIs, and file systems

+- **Enterprise Security**: Full compliance and governance controls

-**SSE Transport (VS Code Compatibility)**:

-```

-VS Code → GET /sse → DeployStack Gateway

- ← SSE Stream with session endpoint

-VS Code → POST /message?session=xyz → Gateway → MCP Server (stdio)

- ← JSON-RPC response via SSE

-```

-

-**stdio Transport (CLI Compatibility)**:

-```

-CLI Tool → DeployStack Gateway → MCP Server (stdio)

- ← Direct JSON-RPC over stdio

-```

-

-#### Secure Credential Injection

-- **Runtime Injection**: Credentials are injected directly into MCP server process environments at startup

-- **Zero Disk Exposure**: No credentials written to disk in plain text

-- **Process Isolation**: Each MCP server runs in its own isolated environment

+#### OAuth Authentication

+- **Standard OAuth Flow**: Client credentials are generated in the dashboard

+- **Secure Token Exchange**: Standard Bearer Token authentication

+- **Team-Aware Access**: Credentials scoped to specific teams and permissions

+- **Zero Credential Storage**: No local credential management required

## Protocol Flow

-### 1. Developer Authentication

-```bash

-deploystack login

-```

-- Gateway authenticates with cloud.deploystack.io using OAuth2

-- Downloads team configurations and access policies

-- Caches encrypted configurations locally

-

-### 2. Gateway Startup

-```bash

-deploystack start

-```

-- **Configuration Sync**: Downloads latest team MCP server configurations

-- **Process Spawning**: Starts all configured MCP servers as background processes

-- **Credential Injection**: Securely injects team credentials into process environments

-- **Service Discovery**: Discovers and caches all available tools from running processes

-- **HTTP Server**: Starts local server at `http://localhost:9095/sse` for client connections

+### 1. OAuth Client Setup

+- Developer creates OAuth client credentials in the cloud.deploystack.io dashboard

+- A client ID and secret are generated for secure satellite access

+- No software installation or local authentication required

-### 3. Client Connection

-**VS Code Configuration**:

+### 2. VS Code Configuration

+**Simple URL Configuration**:

```json

{

"mcpServers": {

"deploystack": {

- "url": "http://localhost:9095/sse"

+ "url": "https://satellite.deploystack.io/mcp",

+ "oauth": {

+ "client_id": "deploystack_mcp_client_abc123def456ghi789",

+ "client_secret": "deploystack_mcp_secret_xyz789abc123def456ghi789jkl012"

+ }

}

}

}

```

+### 3. Satellite Connection

**Connection Flow**:

-1. **SSE Establishment**: VS Code connects to `/sse` endpoint

-2. **Session Creation**: Gateway generates cryptographically secure session ID

-3. **Tool Discovery**: Client calls `tools/list` to discover available MCP servers

-4. **Request Routing**: All tool requests routed through gateway to persistent MCP processes

+1. **OAuth Authentication**: Client credentials are validated against the control plane

+2. **Team Resolution**: The user's team memberships and permissions are retrieved

+3. **Tool Discovery**: Available MCP tools are resolved from the team configuration

+4. **Request Processing**: All tool requests are processed through the managed satellite infrastructure

### 4. Request Processing

```

-Client Request → Gateway Session Validation → Route to MCP Process → Return Response

+Client Request → OAuth Validation → Team Authorization → Satellite MCP Processing → Response

```
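The request pipeline above can be read as a chain of checks in which each stage may stop the request. The sketch below is illustrative only: `validateOAuth`, `authorizeTeam`, `processOnSatellite` and the in-memory token map are hypothetical stand-ins, not DeployStack's satellite code.

```typescript
// Illustrative types; none of these names come from the DeployStack codebase.
interface TokenInfo {
  userId: string;
  teamIds: string[];
}

interface McpRequest {
  authorization?: string; // raw Authorization header
  teamId: string;
  tool: string;
}

interface McpResponse {
  status: number;
  body: string;
}

// Hypothetical stand-in for the control plane's token lookup.
const issuedTokens = new Map<string, TokenInfo>([
  ['token-abc', { userId: 'user-1', teamIds: ['team-1'] }],
]);

// Stage 1: OAuth validation of the Bearer token.
function validateOAuth(header: string | undefined): TokenInfo | null {
  if (!header || !header.startsWith('Bearer ')) return null;
  return issuedTokens.get(header.slice('Bearer '.length)) ?? null;
}

// Stage 2: team authorization against the token's team memberships.
function authorizeTeam(info: TokenInfo, teamId: string): boolean {
  return info.teamIds.includes(teamId);
}

// Stage 3: placeholder for forwarding the call to an MCP server on the satellite.
function processOnSatellite(tool: string): string {
  return `executed ${tool}`;
}

function handleRequest(req: McpRequest): McpResponse {
  const info = validateOAuth(req.authorization);
  if (!info) return { status: 401, body: 'invalid or missing token' };
  if (!authorizeTeam(info, req.teamId)) return { status: 403, body: 'not a member of this team' };
  return { status: 200, body: processOnSatellite(req.tool) };
}
```

Authentication is checked before team authorization, so a request without a valid token is rejected with 401 before any team membership is evaluated, while a valid token used against the wrong team gets 403.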

## Security Architecture

@@ -178,68 +161,60 @@ Client Request → Gateway Session Validation → Route to MCP Process → Retur

DeployStack implements enterprise-grade security across all components of the platform. For comprehensive security details including credential management, access control, and compliance features, see our [Security Documentation](/security).

Key security principles:

-- **Zero-Trust Credential Model**: Credentials never stored on developer machines

-- **Process Isolation**: Each MCP server runs in complete isolation

-- **Cryptographic Sessions**: 256-bit entropy for all client connections

+- **OAuth Bearer Token Authentication**: Standard OAuth flow with secure credential management

+- **Team Isolation**: Complete separation between team resources and data

+- **Managed Infrastructure**: Enterprise-grade security controls in satellite infrastructure

## Performance Optimization

-### Persistent Process Model

-Unlike on-demand spawning, DeployStack uses persistent background processes:

+### Managed Satellite Infrastructure

+Unlike local installations, DeployStack uses managed satellite infrastructure:

-- **Zero Latency**: All tools immediately available from running processes

-- **Resource Efficiency**: No spawn/cleanup overhead during development workflows

-- **Memory Stability**: Consistent resource usage patterns

-- **Parallel Processing**: Concurrent handling of multiple requests across processes

+- **Instant Availability**: All tools immediately available without local setup

+- **Auto-Scaling**: Satellite infrastructure scales automatically with demand

+- **Global Distribution**: Multiple regions for low-latency access worldwide

+- **Zero Maintenance**: No local processes to manage or update

### Caching Strategy

-DeployStack implements sophisticated caching mechanisms to optimize performance and enable offline operation. For detailed information about the caching architecture, implementation, and team isolation strategies, see our [Gateway Caching System Documentation](/development/gateway/caching-system).

+DeployStack implements caching within the satellite infrastructure to improve performance. Caching is managed transparently by the satellites, with no local configuration required.

## Enterprise Features

-### Organizational Visibility

+### Organizational Visibility (Coming soon)

- **Real-Time Analytics**: Live dashboard showing MCP server usage across the organization

- **Cost Optimization**: Track expensive API usage and identify optimization opportunities

- **Resource Planning**: Understand which tools drive the most value for different teams

-### Compliance & Governance

+### Compliance & Governance (Coming soon)

- **Audit Logging**: Complete trails of all MCP server interactions

-- **Policy Enforcement**: Centralized policies automatically enforced at the gateway level

+- **Policy Enforcement**: Centralized policies automatically enforced at the satellite level

- **Access Reviews**: Regular reviews of team access to sensitive MCP servers

-### Operational Controls

+### Operational Controls (Coming soon)

- **Centralized Updates**: Push MCP server configuration changes to all team members

- **Emergency Disable**: Instantly disable problematic MCP servers across the organization

- **Health Monitoring**: Real-time monitoring of MCP server performance and availability

## Team Context Switching

-DeployStack supports multiple team memberships with seamless context switching:

-

-```bash

-# List available teams

-deploystack teams

-

-# Switch to different team

-deploystack teams --switch 2

-```

+DeployStack supports multiple team memberships with instant context switching:

-**Context Switch Process**:

-1. **Graceful Shutdown**: Stop all current team's MCP server processes

-2. **Configuration Refresh**: Download new team's configurations and credentials

-3. **Process Restart**: Start all MCP servers for the new team

-4. **State Synchronization**: Update local cache and runtime state

+**Team Switch Process** (via dashboard):

+1. **Select Team**: Choose a different team in the cloud.deploystack.io dashboard

+2. **Generate New OAuth Credentials**: Create new client credentials for the team

+3. **Update Configuration**: Replace the OAuth credentials in the VS Code configuration

+4. **Instant Access**: New team's MCP tools immediately available

## Deployment Models

### Cloud-Native (Default)

- **Control Plane**: Hosted at cloud.deploystack.io

-- **Data Plane**: Local gateway on developer machines

-- **Benefits**: Zero infrastructure management, automatic updates, shared team configurations

+- **Satellite Infrastructure**: Managed global satellites with optional team satellites

+- **Benefits**: Zero installation friction, automatic updates, shared team configurations

### Self-Hosted Enterprise

- **Control Plane**: Deployed in customer's infrastructure

-- **Data Plane**: Local gateways connect to private control plane

+- **Team Satellites**: Customer-deployed satellites within corporate networks

- **Benefits**: Complete data sovereignty, custom compliance requirements, air-gapped environments

## Development Workflow

@@ -257,10 +232,11 @@ deploystack teams --switch 2

### After DeployStack

```bash

-# One-time setup for entire team

-1. npm install -g @deploystack/gateway

-2. deploystack login

-3. # Done! All authorized tools available immediately

+# Zero installation setup for entire team

+1. Register at cloud.deploystack.io

+2. Create OAuth client credentials

+3. Add URL to VS Code configuration

+4. # Done! All authorized tools available immediately

```

**VS Code Configuration**:

@@ -268,48 +244,52 @@ deploystack teams --switch 2

{

"mcpServers": {

"deploystack": {

- "url": "http://localhost:9095/sse"

+ "url": "https://satellite.deploystack.io/mcp",

+ "oauth": {

+ "client_id": "your_client_id",

+ "client_secret": "your_client_secret"

+ }

}

}

}

```

-## Monitoring & Observability (comming soon)

+## Monitoring & Observability (Coming soon)

-### Gateway Metrics

-- **Process Health**: Real-time status of all MCP server processes

+### Satellite Metrics

+- **Infrastructure Health**: Real-time status of satellite infrastructure

- **Request Throughput**: Performance metrics for tool usage

- **Error Rates**: Failure detection and automatic recovery

-- **Resource Usage**: CPU, memory, and network consumption

+- **Resource Usage**: Satellite resource consumption and scaling

### Cloud Metrics

- **Team Activity**: Organization-wide usage patterns and trends

- **Cost Analysis**: API usage costs and optimization recommendations

- **Security Events**: Authentication, authorization, and policy violations

-- **Performance Analytics**: Gateway and MCP server performance across teams

+- **Performance Analytics**: Satellite performance across teams and regions

## Benefits Summary

### For Developers

-- **Zero Configuration**: One command setup, then everything works

+- **Zero Installation**: Configure one URL, then everything works

- **Instant Access**: All team tools immediately available

- **Consistent Environment**: Identical setup across all team members

-- **No Credential Management**: Never handle API keys or tokens

+- **No Credential Management**: OAuth handles all authentication securely

-### for Organizations

+### For Organizations

-- **Complete Visibility**: Know what MCP tools are used, by whom, and how often

+- **Complete Visibility**: Know what MCP tools are used, by whom, and how often (coming soon)

- **Security Control**: Centralized credential management and access policies

-- **Cost Optimization**: Track and optimize expensive API usage

-- **Compliance Ready**: Full audit trails and governance controls

+- **Cost Optimization**: Track and optimize expensive API usage (coming soon)

+- **Compliance Ready**: Full audit trails and governance controls (coming soon)

### For Administrators

- **Central Management**: Single dashboard for entire MCP ecosystem

- **Policy Enforcement**: Granular control over tool access by team and role

-- **Instant Deployment**: Push configuration changes to all team members

-- **Operational Insights**: Real-time monitoring and analytics

+- **Instant Deployment**: Push configuration changes to all team members (coming soon)

+- **Operational Insights**: Real-time monitoring and analytics (coming soon)

- **Enterprise Transformation**: DeployStack transforms MCP from individual developer tools into enterprise-ready infrastructure, providing the security, governance, and operational control that organizations need while maintaining the developer experience that teams love.

+ **MCP-as-a-Service**: DeployStack transforms MCP from individual developer tools into enterprise-ready infrastructure with zero installation friction, providing the security, governance, and operational control that organizations need while maintaining the developer experience that teams love.

---

diff --git a/docs/development/backend/api-security.mdx b/docs/development/backend/api-security.mdx

index 05994b3..5f2ece2 100644

--- a/docs/development/backend/api-security.mdx

+++ b/docs/development/backend/api-security.mdx

@@ -234,6 +234,10 @@ requireOwnershipOrAdmin(getUserIdFromRequest) // User owns resource OR is admin

// Dual authentication (Cookie + OAuth2)

requireAuthenticationAny() // Accept either cookie or OAuth2 Bearer token

requireOAuthScope('scope.name') // Enforce OAuth2 scope requirements

+

+// Satellite authentication (API key-based)

+requireSatelliteAuth() // Validates satellite API keys using argon2

+requireUserOrSatelliteAuth() // Accept either user auth or satellite API key

```

### Dual Authentication Support

@@ -265,6 +269,79 @@ export default async function dualAuthRoute(server: FastifyInstance) {

For detailed OAuth2 implementation, see the [Backend OAuth Implementation Guide](/development/backend/oauth-providers) and [Backend Security Policy](/development/backend/security#oauth2-server-security).

+### Satellite Authentication

+

+For endpoints that need to authenticate DeployStack Satellite instances, use the satellite authentication middleware. Satellites use API key-based authentication with argon2 hash verification.

+

+```typescript

+import { requireSatelliteAuth, requireUserOrSatelliteAuth } from '../../middleware/satelliteAuthMiddleware';

+

+export default async function satelliteRoute(server: FastifyInstance) {

+ // Satellite-only endpoint

+ server.post('/satellites/:satelliteId/heartbeat', {

+ preValidation: [requireSatelliteAuth()], // Only satellites can access

+ schema: {

+ security: [{ bearerAuth: [] }] // API key via Bearer token

+ }

+ }, async (request, reply) => {

+ // Access satellite context

+ const satellite = request.satellite!;

+ const satelliteId = satellite.id;

+ const satelliteType = satellite.satellite_type; // 'global' or 'team'

+ });

+

+ // Hybrid endpoint (users OR satellites)

+ server.get('/satellites/:satelliteId/status', {

+ preValidation: [requireUserOrSatelliteAuth()], // Accept either auth method

+ schema: {

+ security: [

+ { cookieAuth: [] }, // User authentication

+ { bearerAuth: [] } // Satellite API key

+ ]

+ }

+ }, async (request, reply) => {

+ // Check authentication type

+ if (request.satellite) {

+ // Authenticated as satellite

+ const satelliteId = request.satellite.id;

+ } else if (request.user) {

+ // Authenticated as user

+ const userId = request.user.id;

+ }

+ });

+}

+```

+

+#### Satellite Authentication Flow

+

+The satellite authentication middleware performs these steps:

+

+1. **Bearer Token Extraction**: Extracts the API key from the `Authorization` header

+2. **Database Lookup**: Retrieves all satellite records from the database

+3. **Hash Verification**: Uses `argon2.verify()` to check the API key against the stored hashes

+4. **Context Setting**: Attaches the satellite information to the request object (`request.satellite`) for route handlers

+
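The four steps above can be sketched as a framework-independent function. `SatelliteRecord`, `loadSatellites` and `verifyHash` are hypothetical names for this illustration, not the middleware's real internals; in the backend the verification role is played by `argon2.verify(hash, apiKey)` from the `argon2` package, and the records come from the database.

```typescript
// Shape documented below as request.satellite.
interface SatelliteContext {
  id: string;
  name: string;
  satellite_type: 'global' | 'team';
  team_id: string | null;
  status: 'active' | 'inactive' | 'maintenance' | 'error';
}

// Hypothetical database row: the context plus the stored argon2 hash.
interface SatelliteRecord extends SatelliteContext {
  api_key_hash: string;
}

// In the backend this would be argon2.verify(hash, apiKey).
type VerifyHash = (hash: string, apiKey: string) => Promise<boolean>;

// Step 1: extract the key from "Authorization: Bearer <key>".
function extractBearerToken(header: string | undefined): string | null {
  const match = header?.match(/^Bearer\s+(\S+)$/);
  return match ? match[1] : null;
}

async function authenticateSatellite(
  authorization: string | undefined,
  loadSatellites: () => Promise<SatelliteRecord[]>,
  verifyHash: VerifyHash,
): Promise<SatelliteContext | null> {
  const apiKey = extractBearerToken(authorization);
  if (!apiKey) return null;

  // Step 2: load the stored satellite records.
  const satellites = await loadSatellites();

  // Step 3: check the key against each stored hash until one matches.
  for (const sat of satellites) {
    if (await verifyHash(sat.api_key_hash, apiKey)) {
      // Step 4: build the context the middleware would attach as request.satellite,
      // leaving the hash out.
      return {
        id: sat.id,
        name: sat.name,
        satellite_type: sat.satellite_type,
        team_id: sat.team_id,
        status: sat.status,
      };
    }
  }
  return null;
}
```

Argon2 hashes are salted, so a presented key cannot be hashed once and used as a database lookup value; that is why the flow loads the satellite records and verifies the key against each hash in turn. The cost of a failed authentication therefore grows with the number of registered satellites.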

+#### Satellite Context Object

+

+When satellite authentication succeeds, the middleware sets `request.satellite` with:

+

+```typescript

+interface SatelliteContext {

+ id: string; // Satellite unique identifier

+ name: string; // Human-readable satellite name

+ satellite_type: 'global' | 'team'; // Deployment type

+ team_id: string | null; // Associated team (null for global)

+ status: 'active' | 'inactive' | 'maintenance' | 'error'; // Current status

+}

+```

+

+#### Security Considerations

+

+- **API Key Storage**: Satellite API keys are stored as argon2 hashes in the database

+- **Key Generation**: 32-byte cryptographically secure random keys (base64url encoded)

+- **Key Rotation**: New API key generated on each satellite registration

+- **Scope Isolation**: Satellites can only access their own resources and endpoints

+
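The key format described above (32 cryptographically secure random bytes, base64url-encoded) can be produced with Node's built-in `crypto` module. The helper name is illustrative, not the backend's actual function; only the argon2 hash of the key would be stored, for example via `argon2.hash(key)` from the `argon2` package.

```typescript
import { randomBytes } from 'node:crypto';

// Hypothetical helper name; 32 bytes = 256 bits from the OS CSPRNG.
function generateSatelliteApiKey(): string {
  // base64url uses '-' and '_' and Node omits '=' padding, so 32 bytes encode to 43 chars.
  return randomBytes(32).toString('base64url');
}

const apiKey = generateSatelliteApiKey();
console.log(apiKey.length); // 43
```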

### Team-Aware Permission System

For endpoints that operate within team contexts (e.g., `/teams/:teamId/resource`), use the team-aware permission middleware:

diff --git a/docs/development/backend/gateway-client-config.mdx b/docs/development/backend/gateway-client-config.mdx

deleted file mode 100644

index 06f42d4..0000000

--- a/docs/development/backend/gateway-client-config.mdx

+++ /dev/null

@@ -1,78 +0,0 @@

----

-title: Gateway Client Configuration API

-description: Developer guide for the Gateway Client Configuration endpoint that provides pre-formatted configuration files for MCP clients to connect to the DeployStack Gateway

----

-

-# Gateway Client Configuration API

-

-The Gateway Client Configuration API provides pre-formatted configuration files for various MCP clients to connect to the local DeployStack Gateway. This eliminates manual configuration steps and reduces setup errors for developers.

-

-## Overview

-

-The endpoint generates client-specific JSON configurations that users can directly use in their MCP clients (Claude Desktop, Cline, VSCode, Cursor, Windsurf) to connect to their local DeployStack Gateway running on `http://localhost:9095/sse`.

-

-## API Endpoint

-

-**Route:** `GET /api/gateway/config/:client`

-

-**Parameters:**

-- `:client` - The MCP client type (required)

- - Supported values: `claude-desktop`, `cline`, `vscode`, `cursor`, `windsurf`

-

-**Authentication:** Dual authentication support

-- Cookie-based authentication (web users)

-- OAuth2 Bearer token authentication (CLI users)

-

-**Required Permission:** `gateway.config:read`

-**Required OAuth2 Scope:** `gateway:config:read`

-

-## Client Configurations

-

-The endpoint supports multiple MCP client types, each with its own optimized configuration format. All configurations use the DeployStack Gateway's SSE endpoint: `http://localhost:9095/sse`

-

-**Supported Clients:**

-- `claude-desktop` - Claude Desktop application

-- `cline` - Cline VS Code extension

-- `vscode` - VS Code MCP extension

-- `cursor` - Cursor IDE

-- `windsurf` - Windsurf AI IDE

-

-**Configuration Source:** For the current client configuration formats and JSON structures, see the `generateClientConfig()` function in:

-

-```bash

-services/backend/src/routes/gateway/config/get-client-config.ts

-```

-

-## Permission System

-

-### Role Assignments

-

-The `gateway.config:read` permission is assigned to:

-- **global_admin** - Basic access to get gateway configs they need

-- **global_user** - Basic access to get gateway configs they need

-

-### OAuth2 Scope

-

-The `gateway:config:read` OAuth2 scope:

-- **Purpose:** Enables CLI tools and OAuth2 clients to fetch gateway configurations

-- **Description:** "Generate client-specific gateway configuration files"

-- **Gateway Integration:** Automatically requested during gateway OAuth login

-

-## Future Enhancements

-

-### Additional Client Types

-- **Zed Editor** - Growing popularity in developer community

-- **Neovim** - Popular among command-line developers

-- **Custom Clients** - Generic JSON format for custom integrations

-

-### Configuration Customization

-- **Environment Variables** - Support for different gateway URLs

-- **TLS/SSL Support** - When gateway supports secure connections

-- **Authentication Tokens** - If gateway adds authentication in future

-

-## Related Documentation

-

-- [Backend API Security](/development/backend/api-security) - Security patterns and authorization

-- [Backend OAuth2 Server](/development/backend/oauth2-server) - OAuth2 implementation details

-- [Gateway OAuth Implementation](/development/gateway/oauth) - Gateway OAuth client

-- [Gateway SSE Transport](/development/gateway/sse-transport) - Gateway SSE architecture

diff --git a/docs/development/backend/index.mdx b/docs/development/backend/index.mdx

index 12cb1eb..b90bd55 100644

--- a/docs/development/backend/index.mdx

+++ b/docs/development/backend/index.mdx

@@ -9,7 +9,7 @@ import { Database, Shield, Plug, Settings, Mail, TestTube, Wrench, BookOpen, Ter

# DeployStack Backend Development

-The DeployStack backend is a modern, high-performance Node.js application built with **Fastify**, **TypeScript**, and **Drizzle ORM**. It's specifically designed for managing MCP (Model Context Protocol) server configurations with enterprise-grade features including authentication, role-based access control, and an extensible plugin system.

+The DeployStack backend is a modern, high-performance Node.js application built with **Fastify**, **TypeScript**, and **Drizzle ORM**. It serves as the central control plane managing MCP server catalogs, team configurations, satellite orchestration, and user authentication with enterprise-grade features.

## Technology Stack

@@ -18,7 +18,7 @@ The DeployStack backend is a modern, high-performance Node.js application built

- **Database**: SQLite (default) or PostgreSQL with Drizzle ORM

- **Validation**: Zod for request/response validation and OpenAPI generation

- **Plugin System**: Extensible architecture with security isolation

-- **Authentication**: Cookie-based sessions with role-based access control

+- **Authentication**: Dual authentication system - cookie-based sessions for the frontend and OAuth 2.1 for satellite access

## Quick Start

@@ -99,10 +99,10 @@ The development server starts at `http://localhost:3000` with API documentation

}

- href="/deploystack/development/backend/gateway-client-config"

- title="Gateway Client Configuration"

+ href="/deploystack/development/backend/satellite-communication"

+ title="Satellite Communication"

>

- API endpoint for generating client-specific gateway configuration files with dual authentication support.

+ API endpoints for satellite registration, configuration management, and command orchestration with polling-based communication.

diff --git a/docs/development/backend/logging.mdx b/docs/development/backend/logging.mdx

index 95e78d1..ecacf35 100644

--- a/docs/development/backend/logging.mdx

+++ b/docs/development/backend/logging.mdx

@@ -1,7 +1,7 @@

---

-title: Backend Logging & Log Level Configuration

+title: Backend Logging & Log Level Configuration

description: Complete guide to configuring and using log levels in the DeployStack backend for development and production environments.

-sidebar: Backend Development

+sidebar: Logging

---

import { Callout } from 'fumadocs-ui/components/callout';

diff --git a/docs/development/backend/oauth2-server.mdx b/docs/development/backend/oauth2-server.mdx

index 3c7494c..9c2c485 100644

--- a/docs/development/backend/oauth2-server.mdx

+++ b/docs/development/backend/oauth2-server.mdx

@@ -9,17 +9,19 @@ This document describes the OAuth2 authorization server implementation in the De

## Overview

-The OAuth2 server provides RFC 6749 compliant authorization for programmatic API access. This enables the DeployStack Gateway CLI and other tools to authenticate users and access APIs on their behalf using Bearer tokens instead of cookies.

+The OAuth2 server provides RFC 6749 compliant authorization for programmatic API access with RFC 7591 Dynamic Client Registration support. This enables the DeployStack Gateway CLI, MCP clients (VS Code, Cursor, Claude.ai), and other tools to authenticate users and access APIs on their behalf using Bearer tokens instead of cookies.

## Architecture

The OAuth2 server implementation includes:

- **Authorization Server** - Handles OAuth2 authorization flow with PKCE

+- **Dynamic Client Registration** - RFC 7591 compliant client registration for MCP clients

- **Token Management** - Issues and validates access/refresh tokens

- **Consent System** - User authorization interface

- **Dual Authentication** - Supports both cookies and Bearer tokens

- **Scope-based Access** - Fine-grained permission control

+- **Database Storage** - Persistent client and token storage

## OAuth2 Flow

@@ -34,9 +36,19 @@ The implementation follows the OAuth2 Authorization Code flow enhanced with PKCE

5. **Token exchange** - Client exchanges code for tokens

6. **API access** - Client uses Bearer token for requests

+### Dynamic Client Registration Flow (RFC 7591)

+

+MCP clients can automatically register themselves:

+

+1. **Client registration** - POST to `/api/oauth2/register` with metadata

+2. **Client validation** - Server validates redirect URIs and grants

+3. **Client ID generation** - Server generates a unique client_id (format: `dyn_<timestamp>_<random>`)

+4. **Database storage** - Client metadata stored in `dynamic_oauth_clients` table

+5. **OAuth flow** - Client proceeds with standard authorization flow
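A minimal client-side sketch of step 1, assuming Node.js 18+ (global `fetch`). The request fields are standard RFC 7591 client metadata and mirror the columns stored in `dynamic_oauth_clients`; the helper names, client name, and base URL are hypothetical and not taken from the DeployStack codebase.

```typescript
// RFC 7591 client metadata fields used by a public MCP client (no client secret).
interface ClientRegistrationRequest {
  client_name: string;
  redirect_uris: string[];
  grant_types: string[];
  response_types: string[];
  token_endpoint_auth_method: 'none';
  scope: string;
}

// Hypothetical helper: builds the registration body for a public client.
function buildRegistrationRequest(clientName: string, redirectUris: string[]): ClientRegistrationRequest {
  return {
    client_name: clientName,
    redirect_uris: redirectUris,
    grant_types: ['authorization_code', 'refresh_token'],
    response_types: ['code'],
    token_endpoint_auth_method: 'none',
    scope: 'mcp:read mcp:tools:execute offline_access',
  };
}

// Hypothetical helper: POSTs the metadata to the registration endpoint and returns
// the parsed JSON body (per RFC 7591, the issued client_id plus the registered metadata).
async function registerClient(
  baseUrl: string,
  body: ClientRegistrationRequest,
): Promise<Record<string, unknown>> {
  const response = await fetch(`${baseUrl}/api/oauth2/register`, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(body),
  });
  if (!response.ok) {
    throw new Error(`Client registration failed: HTTP ${response.status}`);
  }
  return (await response.json()) as Record<string, unknown>;
}
```

Setting `token_endpoint_auth_method` to `none` declares a public client, which is why PKCE carries the security of the subsequent authorization flow.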

+

### PKCE Implementation

-PKCE provides security for public clients (like CLI tools):

+PKCE provides security for public clients (like CLI tools and MCP clients):

#### Code Verifier

- 128 random bytes encoded as base64url

@@ -60,34 +72,70 @@ PKCE provides security for public clients (like CLI tools):

Manages the authorization flow:

#### Client Validation

-- Validates client_id against whitelist

-- Currently supports `deploystack-gateway-cli`

-- Extensible for additional clients

+- Validates dynamic clients against database (`dynamic_oauth_clients` table)

+- Supports both pre-registered and dynamically registered clients

+- Extensible for additional client types

#### Redirect URI Validation

-- Checks URI against allowed list

-- Supports localhost callbacks for CLI

+- Checks URI against allowed patterns for MCP clients

+- Supports localhost callbacks for CLI tools

+- Supports VS Code-specific patterns (`http://127.0.0.1:<port>/`, `vscode://`)

+- Supports Cursor patterns (`cursor://`)

+- Supports Claude.ai patterns (`https://claude.ai/mcp/auth/callback`)

- Prevents redirect attacks

#### Scope Validation

-- Validates requested scopes

+- Validates requested scopes against MCP scope patterns

+- Supports `mcp:read`, `mcp:tools:execute`, `offline_access`

- Ensures scopes are recognized

- Limits access appropriately

#### Authorization Storage

-- Stores authorization requests

+- Stores authorization requests in database

- Links PKCE challenges

-- Manages request lifecycle

+- Manages request lifecycle with expiration

+- Supports team-scoped authorization

#### Code Generation

- Creates authorization codes

-- Associates with user session

-- Implements expiration

+- Associates with user session and team

+- Implements 10-minute expiration

+- Prevents replay attacks

#### Code Verification

-- Validates authorization codes

+- Validates authorization codes against database

- Verifies PKCE challenge

- Ensures single use

+- Validates client and redirect URI match

+

+### Dynamic Client Registration

+

+Implements RFC 7591 Dynamic Client Registration:

+

+#### Registration Endpoint

+- **File**: `services/backend/src/routes/oauth2/register.ts`

+- **Endpoint**: `POST /api/oauth2/register`

+- **Purpose**: Allows MCP clients to self-register

+

+#### Client Metadata Validation

+- Validates `redirect_uris` against MCP client patterns

+- Supports VS Code: `http://127.0.0.1:<port>/`, `https://vscode.dev/redirect`

+- Supports Cursor: `cursor://` schemes

+- Supports Claude.ai: `https://claude.ai/mcp/auth/callback`

+- Validates `grant_types` (authorization_code, refresh_token)

+- Validates `response_types` (code)
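The redirect URI checks above could look roughly like the following sketch. The allow-list is transcribed from the patterns listed on this page and is an assumption about how the backend actually matches URIs; the function names are hypothetical.

```typescript
// Exact-match redirect URIs transcribed from this page (an assumption about the real rules).
const EXACT_REDIRECT_URIS = new Set<string>([
  'https://vscode.dev/redirect',
  'https://claude.ai/mcp/auth/callback',
]);

// Hypothetical check for a single redirect URI.
function isAllowedRedirectUri(raw: string): boolean {
  let uri: URL;
  try {
    uri = new URL(raw);
  } catch {
    return false; // not an absolute URI
  }
  if (EXACT_REDIRECT_URIS.has(uri.href)) return true;
  // Cursor and VS Code use their own custom URI schemes.
  if (uri.protocol === 'cursor:' || uri.protocol === 'vscode:') return true;
  // Loopback callbacks (VS Code, CLI tools) on any port, plain HTTP only.
  return uri.protocol === 'http:' && (uri.hostname === '127.0.0.1' || uri.hostname === 'localhost');
}

// Registration requires at least one redirect URI, and every URI must pass.
function validateRedirectUris(uris: unknown): uris is string[] {
  return (
    Array.isArray(uris) &&
    uris.length > 0 &&
    uris.every((u) => typeof u === 'string' && isAllowedRedirectUri(u))
  );
}
```

Parsing with `URL` instead of comparing string prefixes means a look-alike host such as `https://claude.ai.evil.example/...` fails the exact-match check rather than slipping past a `startsWith('https://claude.ai')` test.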

+

+#### Client ID Generation

+- Format: `dyn_<timestamp>_<random>`

+- Timestamp: Unix timestamp in milliseconds (e.g. `1757880447836`)

+- Random suffix: 9-character base36 string

+- Example: `dyn_1757880447836_uvze3d0yc`
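A sketch of a generator for this format, assuming Node.js `crypto.randomInt` as the random source for the suffix (the backend may use a different one); `generateDynamicClientId` is a hypothetical name.

```typescript
import { randomInt } from 'node:crypto';

const BASE36_ALPHABET = '0123456789abcdefghijklmnopqrstuvwxyz';
const SUFFIX_LENGTH = 9;

// Hypothetical helper producing `dyn_<timestamp>_<random>`:
// millisecond Unix timestamp plus a 9-character base36 suffix.
function generateDynamicClientId(nowMs: number = Date.now()): string {
  let suffix = '';
  for (let i = 0; i < SUFFIX_LENGTH; i++) {
    // randomInt(36) returns an integer in [0, 36) from a CSPRNG.
    suffix += BASE36_ALPHABET[randomInt(BASE36_ALPHABET.length)];
  }
  return `dyn_${nowMs}_${suffix}`;
}
```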

+

+#### Database Storage

+- **Table**: `dynamic_oauth_clients`

+- **Schema**: See `services/backend/src/db/schema.sqlite.ts`

+- **Fields**: client_id, client_name, redirect_uris, grant_types, response_types, scope, token_endpoint_auth_method, client_id_issued_at, expires_at

+- **Persistence**: Survives server restarts and supports multiple instances

### TokenService

@@ -96,50 +144,80 @@ Handles token lifecycle:

#### Token Generation

- Creates cryptographically secure tokens

- Generates appropriate expiration

-- Stores hashed versions

+- Stores hashed versions in database
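This page does not name the hash function. The sketch below assumes SHA-256 over a 32-byte random token and uses only Node.js `crypto`; the function names are hypothetical. It also shows the constant-time comparison mentioned in the security section.

```typescript
import { createHash, randomBytes, timingSafeEqual } from 'node:crypto';

// Opaque bearer token: 32 random bytes, base64url-encoded (43 characters).
function generateToken(): string {
  return randomBytes(32).toString('base64url');
}

// Only this hex digest is persisted; the plaintext token is returned to the client once.
function hashToken(token: string): string {
  return createHash('sha256').update(token).digest('hex');
}

// Compares a presented token with a stored digest in constant time.
function matchesStoredHash(presented: string, storedHex: string): boolean {
  const presentedDigest = createHash('sha256').update(presented).digest();
  const storedDigest = Buffer.from(storedHex, 'hex');
  // timingSafeEqual throws on length mismatch, so compare lengths first.
  return storedDigest.length === presentedDigest.length && timingSafeEqual(presentedDigest, storedDigest);
}
```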

#### Access Token Management

-- Issues 1-hour access tokens

-- Includes user and scope data

+- Issues 1-week access tokens for MCP clients

+- Issues 1-hour access tokens for CLI tools

+- Includes user, team, and scope data

- Enables API authentication
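A sketch of how the lifetimes listed above could be applied. How the backend tells MCP clients from CLI tools is not documented here; this sketch assumes dynamically registered clients (the `dyn_` prefix) are MCP clients. All names are hypothetical.

```typescript
const HOUR_SECONDS = 60 * 60;
const DAY_SECONDS = 24 * HOUR_SECONDS;

// Lifetimes from the lists on this page.
const MCP_ACCESS_TTL_SECONDS = 7 * DAY_SECONDS; // 1 week
const CLI_ACCESS_TTL_SECONDS = HOUR_SECONDS; // 1 hour
const REFRESH_TTL_SECONDS = 30 * DAY_SECONDS; // 30 days

// Assumption: dynamically registered clients (dyn_ prefix) are MCP clients.
function accessTokenTtlSeconds(clientId: string): number {
  return clientId.startsWith('dyn_') ? MCP_ACCESS_TTL_SECONDS : CLI_ACCESS_TTL_SECONDS;
}

// Computes both expiry timestamps for a token pair issued at issuedAtMs.
function tokenExpiries(clientId: string, issuedAtMs: number = Date.now()) {
  return {
    accessExpiresAt: new Date(issuedAtMs + accessTokenTtlSeconds(clientId) * 1000),
    refreshExpiresAt: new Date(issuedAtMs + REFRESH_TTL_SECONDS * 1000),
  };
}
```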

#### Refresh Token Handling

- Issues 30-day refresh tokens

- Allows token renewal

- Maintains session continuity

+- Supports offline access

#### Token Verification

- Validates token format

- Checks expiration

- Verifies against database

+- Supports introspection endpoint

#### Token Refresh

- Exchanges refresh for access token

- Validates client identity

- Maintains scope consistency

+- Supports both static and dynamic clients

#### Token Revocation

- Invalidates tokens on demand

- Cleans up related tokens

- Ensures immediate effect

-### OAuthCleanupService

+### Database Schema

+

+#### Dynamic OAuth Clients Table

+- **File**: `services/backend/src/db/schema.sqlite.ts`

+- **Table**: `dynamic_oauth_clients`

+- **Migration**: `0006_keen_firestar.sql`

+- **Purpose**: Persistent storage for dynamically registered MCP clients

+

+#### OAuth Authorization Codes Table

+- **Table**: `oauth_authorization_codes`

+- **Purpose**: Stores authorization requests and codes

+- **Features**: PKCE challenge storage, team context, expiration

+

+#### OAuth Access Tokens Table

+- **Table**: `oauth_access_tokens`

+- **Purpose**: Stores issued access tokens

+- **Features**: Hashed storage, scope tracking, team context

+

+#### OAuth Refresh Tokens Table

+- **Table**: `oauth_refresh_tokens`

+- **Purpose**: Stores refresh tokens for token renewal

+- **Features**: Long-term storage, client association

-Automatic maintenance:

+### OAuthCleanupService (TODO)

+

+The automatic maintenance system is not yet implemented. Planned behavior:

#### Scheduled Cleanup

-- Runs hourly via cron

-- Removes expired tokens

-- Prevents database bloat

+- Should run hourly via cron

+- Remove expired authorization codes (>10 minutes)

+- Remove expired access tokens

+- Remove expired refresh tokens

+- Clean up unused dynamic client registrations

#### Cleanup Scope

-- Authorization codes > 10 minutes

+- Authorization codes > 10 minutes old

- Expired access tokens

- Expired refresh tokens

+- Dynamic clients unused for >90 days (configurable)
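Since the service does not exist yet, the sketch below only expresses the planned cleanup criteria as pure predicates that a scheduled job could apply. The row shapes are hypothetical; in particular, `lastUsedAt` is an assumed column, since the documented `dynamic_oauth_clients` fields do not include a last-used timestamp.

```typescript
// Hypothetical row shapes; the real tables live in schema.sqlite.ts.
interface AuthorizationCodeRow { createdAt: Date }
interface TokenRow { expiresAt: Date }
interface DynamicClientRow { lastUsedAt: Date | null; clientIdIssuedAt: Date }

const AUTH_CODE_MAX_AGE_MS = 10 * 60 * 1000; // 10 minutes
const DEFAULT_CLIENT_IDLE_DAYS = 90; // configurable per the list above
const DAY_MS = 24 * 60 * 60 * 1000;

// Authorization codes older than 10 minutes are removable.
function isStaleAuthorizationCode(row: AuthorizationCodeRow, now: Date): boolean {
  return now.getTime() - row.createdAt.getTime() > AUTH_CODE_MAX_AGE_MS;
}

// Access and refresh tokens are removable once their expiry has passed.
function isExpiredToken(row: TokenRow, now: Date): boolean {
  return row.expiresAt.getTime() <= now.getTime();
}

// A client is unused if its last activity (or, if never used, its issue time)
// is older than the idle window.
function isUnusedDynamicClient(row: DynamicClientRow, now: Date, idleDays = DEFAULT_CLIENT_IDLE_DAYS): boolean {
  const lastActivity = row.lastUsedAt ?? row.clientIdIssuedAt;
  return now.getTime() - lastActivity.getTime() > idleDays * DAY_MS;
}
```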

## OAuth2 Endpoints Overview

-The OAuth2 server implements standard OAuth2 endpoints following RFC 6749:

+The OAuth2 server implements standard OAuth2 endpoints following RFC 6749 and RFC 7591:

### Authorization Flow Endpoints

@@ -147,6 +225,11 @@ The OAuth2 server implements standard OAuth2 endpoints following RFC 6749:

- **Consent Endpoints** (`/api/oauth2/consent`) - Displays and processes user authorization consent

- **Token Endpoint** (`/api/oauth2/token`) - Exchanges authorization codes for access tokens and handles token refresh

- **User Info Endpoint** (`/api/oauth2/userinfo`) - Returns authenticated user information

+- **Introspection Endpoint** (`/api/oauth2/introspect`) - Token validation for resource servers

+

+### Dynamic Client Registration Endpoints

+

+- **Registration Endpoint** (`/api/oauth2/register`) - RFC 7591 compliant client registration

For complete API specifications including request parameters, response schemas, and examples, see the [Backend API Documentation](/development/backend/api). The API documentation provides OpenAPI specifications for all OAuth2 endpoints.

@@ -154,7 +237,13 @@ For complete API specifications including request parameters, response schemas,

### Available Scopes

-For the current list of supported scopes, see the source code at `services/backend/src/services/oauth/authorizationService.ts` in the `validateScope()` method.

+**MCP Client Scopes:**

+- `mcp:read` - Tool discovery and MCP server access

+- `mcp:tools:execute` - Tool execution permissions

+- `offline_access` - Refresh token issuance

+

+**CLI Tool Scopes:**

+For the current list of CLI-supported scopes, see the source code at `services/backend/src/services/oauth/authorizationService.ts` in the `validateScope()` method.

### Scope Enforcement

@@ -170,6 +259,38 @@ Scopes are enforced at the endpoint level:

- Returns 403 for insufficient scope

- Provides clear error messages
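The enforcement middleware itself is not shown on this page. As a hypothetical sketch, the core check parses the space-delimited scope string (RFC 6749, section 3.3) and reports missing scopes using the `insufficient_scope` error code that RFC 6750 pairs with HTTP 403.

```typescript
// Parses a space-delimited OAuth scope string into a set, ignoring extra spaces.
function parseScopes(scope: string): Set<string> {
  return new Set(scope.split(' ').filter((s) => s.length > 0));
}

type ScopeCheck =
  | { ok: true }
  | { ok: false; status: 403; error: 'insufficient_scope'; missing: string[] };

// Hypothetical helper: verifies that a token grants every scope an endpoint requires.
function checkScopes(grantedScope: string, required: string[]): ScopeCheck {
  const granted = parseScopes(grantedScope);
  const missing = required.filter((s) => !granted.has(s));
  if (missing.length === 0) return { ok: true };
  return { ok: false, status: 403, error: 'insufficient_scope', missing };
}
```

Returning the `missing` list lets the 403 response name exactly which scope the client must request.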

+## Client Types and Configuration

+

+### Dynamic Clients (RFC 7591)

+

+**VS Code MCP Extension:**

+- **Client ID**: Auto-generated (e.g., `dyn_1757880447836_uvze3d0yc`)

+- **Registration**: Automatic via RFC 7591

+- **Redirect URIs**: `http://127.0.0.1:<port>/`, `https://vscode.dev/redirect`

+- **Scopes**: `mcp:read mcp:tools:execute offline_access`

+- **Token Lifetime**: 1-week access, 30-day refresh

+

+**Cursor MCP Client:**

+- **Client ID**: Auto-generated

+- **Registration**: Automatic via RFC 7591

+- **Redirect URIs**: `cursor://` schemes

+- **Scopes**: `mcp:read mcp:tools:execute offline_access`

+

+**Claude.ai MCP Client:**

+- **Client ID**: Auto-generated

+- **Registration**: Automatic via RFC 7591

+- **Redirect URIs**: `https://claude.ai/mcp/auth/callback`

+- **Scopes**: `mcp:read mcp:tools:execute offline_access`

+

+### Adding New Static Clients

+

+To support additional pre-registered OAuth2 clients:

+

+1. Add client_id to validation whitelist in `AuthorizationService.validateClient()`

+2. Configure allowed redirect URIs in `AuthorizationService.validateRedirectUri()`

+3. Define client-specific settings

+4. Update documentation

+

## Dual Authentication

### Supporting Both Methods

@@ -200,29 +321,6 @@ The system maintains context about authentication:

- **Type Detection**: Check for `tokenPayload` presence

- **Unified Interface**: Same user object structure

-## Client Configuration

-

-### DeployStack Gateway CLI

-

-Pre-registered OAuth2 client:

-

-- **Client ID**: `deploystack-gateway-cli`

-- **Client Type**: Public (no secret)

-- **Redirect URIs**:

- - `http://localhost:8976/oauth/callback`

- - `http://127.0.0.1:8976/oauth/callback`

-- **Required**: PKCE with SHA256

-- **Token Lifetime**: 1-hour access, 30-day refresh

-

-### Adding New Clients

-

-To support additional OAuth2 clients:

-

-1. Add client_id to validation whitelist

-2. Configure allowed redirect URIs

-3. Define client-specific settings

-4. Update documentation

-

## Security Implementation

### PKCE Security

@@ -240,12 +338,13 @@ Multiple layers of token protection:

- Constant-time comparison

- Secure random generation

- Automatic expiration

-- Regular cleanup

+- Regular cleanup (TODO: implement)

### Authorization Security

Secure authorization flow:

- CSRF protection via state parameter

+- Proper URL encoding of state parameter

- Session requirement for authorization

- Validated redirect URIs

- Clear consent interface

@@ -258,8 +357,28 @@ API access security:

- Scope-based access control

- Automatic token refresh

+### Dynamic Client Security

+

+RFC 7591 security measures:

+- Redirect URI validation against MCP patterns

+- Client metadata validation

+- Automatic client ID generation

+- Database persistence with proper indexing

+- No client secrets for public clients

+

## Integration Examples

+### MCP Client Registration Flow

+

+Example dynamic client registration for MCP clients:

+

+1. **Client registration request**

+2. **Server validates metadata**

+3. **Client ID generated and stored**

+4. **Client proceeds with OAuth flow**

+5. **User authorizes in browser**

+6. **Tokens issued for MCP access**

+

### CLI Authentication Flow

Example OAuth2 flow for CLI tools:

@@ -293,14 +412,16 @@ Content-Type: application/json

{

"grant_type": "refresh_token",

"refresh_token": "",

- "client_id": "deploystack-gateway-cli"

+ "client_id": "dyn_1757880447836_uvze3d0yc"

}

```

+

## Monitoring

### Metrics to Track (TODO)

- Authorization requests

+- Dynamic client registrations

- Token issuance rate

- Refresh token usage

- Failed authentication attempts

@@ -310,6 +431,7 @@ Content-Type: application/json

Comprehensive logging for debugging:

- Authorization flow steps

+- Dynamic client registration events

- Token operations

- Scope validations

- Error conditions

@@ -317,20 +439,19 @@ Comprehensive logging for debugging:

## OAuth Scope Management

-The backend validates OAuth scopes to control API access. Scope configuration must stay synchronized between the backend and gateway.

+The backend validates OAuth scopes to control API access. Scope configuration must stay synchronized between the backend and clients.

### Current Scopes

For the current list of supported scopes, check the source code at:

- **Backend validation**: `services/backend/src/services/oauth/authorizationService.ts` in the `validateScope()` method

-- **Gateway requests**: `services/gateway/src/utils/auth-config.ts` in the `scopes` array

### Adding New Scopes

When adding support for a new OAuth scope in the backend:

1. **Add the scope** to the `allowedScopes` array in `services/backend/src/services/oauth/authorizationService.ts`

-2. **Update the gateway** to request the new scope (see [Gateway OAuth Implementation](/development/gateway/oauth))

+2. **Update clients** to request the new scope (Gateway, MCP clients)

3. **Apply scope enforcement** to relevant API endpoints using middleware

4. **Test the complete flow** to ensure proper scope validation

@@ -341,7 +462,8 @@ static validateScope(scope: string): boolean {

const requestedScopes = scope.split(' ');

const allowedScopes = [

'mcp:read',

- 'mcp:categories:read',

+ 'mcp:tools:execute',

+ 'offline_access',

'your-new-scope', // Add new scope here

// ... other scopes

];

@@ -378,29 +500,58 @@ server.get('/api/another-endpoint', {

### Scope Synchronization

-**Critical**: The backend and gateway must have matching scope configurations:

-- If backend supports a scope but gateway doesn't request it, users won't get that permission

-- If gateway requests a scope but backend doesn't support it, authentication will fail

+**Critical**: The backend and clients must have matching scope configurations:

+- If backend supports a scope but client doesn't request it, users won't get that permission

+- If client requests a scope but backend doesn't support it, authentication will fail

+

+Always coordinate scope changes between backend and client implementations.

+

+

+## MCP Client Integration

-Always coordinate scope changes between both services.

+The OAuth2 server supports MCP clients through dynamic registration:

-## Gateway Integration

+### Supported MCP Clients

-The OAuth2 server integrates with the DeployStack Gateway:

+- **VS Code MCP Extension** - Automatic registration and authentication

+- **Cursor MCP Client** - Dynamic client registration support

+- **Claude.ai Custom Connector** - OAuth2 integration

+- **Cline MCP Client** - VS Code extension support

+

+### MCP Client Flow

+

+1. **Dynamic Registration** - Client registers via RFC 7591

+2. **OAuth Authorization** - User authorizes in browser

+3. **Token Issuance** - Long-lived tokens for MCP access

+4. **MCP Communication** - Bearer tokens for satellite access

+

+## Implementation Status

+

+### Completed Features

+

+- RFC 6749 OAuth2 Authorization Server

+- RFC 7591 Dynamic Client Registration

+- PKCE support for public clients

+- Database-backed client and token storage

+- Team-scoped authorization

+- MCP client support (VS Code, Cursor, Claude.ai)

+- Dual authentication (cookies + Bearer tokens)

+- Scope-based access control

+- Token introspection endpoint

+- Correct URL encoding of the `state` parameter

-### Gateway OAuth Client

+### TODO Items

-See [Gateway OAuth Implementation](/development/gateway/oauth) for:

-- Client-side PKCE generation

-- Browser integration

-- Callback server

-- Token storage

-- Automatic refresh

+- **OAuthCleanupService Implementation** - Automated cleanup of expired tokens and clients

+- **Comprehensive Logging** - Enhanced logging for monitoring and debugging

+- **Metrics Collection** - Performance and usage metrics

+- **Rate Limiting** - Protection against abuse

+- **Client Management UI** - Admin interface for client management

## Related Documentation

- [Backend Authentication System](/development/backend/auth) - Core authentication

-- [Gateway OAuth Implementation](/development/gateway/oauth) - Client-side OAuth

+- [Satellite OAuth Authentication](/development/satellite/oauth-authentication) - MCP client authentication

- [Security Policy](/development/backend/security) - Security details

- [API Documentation](/development/backend/api) - API reference

-- [OAuth Provider Implementation](/development/backend/oauth-providers) - Third-party OAuth login setup

+- [OAuth Provider Implementation](/development/backend/oauth-providers) - Third-party OAuth login setup

\ No newline at end of file

diff --git a/docs/development/backend/plugins.mdx b/docs/development/backend/plugins.mdx

index 63851e4..5f5e928 100644

--- a/docs/development/backend/plugins.mdx

+++ b/docs/development/backend/plugins.mdx

@@ -444,7 +444,7 @@ class MyPlugin implements Plugin {

}

```

-For complete event documentation, see the [Global Event Bus](./events) guide.

+For complete event documentation, see the [Global Event Bus](/development/backend/events) guide.

### Access to Core Services

diff --git a/docs/development/backend/satellite-communication.mdx b/docs/development/backend/satellite-communication.mdx

new file mode 100644

index 0000000..e17f7cd

--- /dev/null

+++ b/docs/development/backend/satellite-communication.mdx

@@ -0,0 +1,486 @@

+---

+title: Satellite Communication

+description: Backend API endpoints for satellite registration, command orchestration, and configuration management with team-aware MCP server distribution.

+---

+

+# Satellite Communication

+

+The DeployStack backend implements satellite management APIs that handle registration, command orchestration, and configuration distribution. The system supports both global satellites (serving all teams) and team satellites (serving specific teams) through a polling-based communication architecture.

+

+## Implementation Status

+

+**Current Status**: Fully implemented and operational

+

+The satellite communication system includes:

+

+- **Satellite Registration**: Working registration endpoint with API key generation

+- **Command Orchestration**: Complete command polling and result reporting endpoints

+- **Configuration Management**: Team-aware MCP server configuration distribution

+- **Status Monitoring**: Heartbeat collection with automatic satellite activation

+- **Authentication**: Argon2-based API key validation middleware

+

+### MCP Server Distribution Architecture

+

+**Global Satellite Model**: The currently implemented approach, in which global satellites serve all teams and run each team installation in its own isolated process.

+

+**Team-Aware Configuration Distribution**:

+- Global satellites receive ALL team MCP server installations

+- Each team installation becomes a separate process with unique identifier

+- Process ID format: `{server_slug}-{team_slug}-{installation_id}`

+- Team-specific configurations (args, environment, headers) are merged per installation
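A sketch of the process ID scheme described above. The `TeamInstallation` shape and function names are hypothetical; only the ID format comes from this page.

```typescript
// Hypothetical view of one team installation as returned by the configuration query.
interface TeamInstallation {
  serverSlug: string;
  teamSlug: string;
  installationId: string;
}

// Builds the `{server_slug}-{team_slug}-{installation_id}` process identifier.
function processIdFor(installation: TeamInstallation): string {
  return `${installation.serverSlug}-${installation.teamSlug}-${installation.installationId}`;
}

// A global satellite receives every team's installations; each becomes its own process.
function processesForGlobalSatellite(installations: TeamInstallation[]): Map<string, TeamInstallation> {
  return new Map(installations.map((i) => [processIdFor(i), i] as [string, TeamInstallation]));
}
```

Slugs may themselves contain hyphens, so it is safer to treat the ID as opaque and look installations up by ID than to split the string on `-`.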

+

+**Configuration Merging Process**:

+1. Template-level configuration (from MCP server definition)

+2. Team-level configuration (from team installation)

+3. User-level configuration (from user preferences)

+4. Final merged configuration sent to satellite
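A sketch of the three-level merge, assuming the same rules as the template/team merge in the Configuration Query Implementation section (arguments appended in order, later environment values overriding earlier ones) extend to the user level. `ConfigLayer` and `mergeLayers` are hypothetical names.

```typescript
interface ConfigLayer {
  args?: string[];
  env?: Record<string, string>;
}

// Hypothetical merge: arguments accumulate in layer order, while environment
// variables from later layers override earlier ones (template < team < user).
function mergeLayers(...layers: ConfigLayer[]): Required<ConfigLayer> {
  const merged: Required<ConfigLayer> = { args: [], env: {} };
  for (const layer of layers) {
    merged.args.push(...(layer.args ?? []));
    Object.assign(merged.env, layer.env ?? {});
  }
  return merged;
}
```

Usage: `mergeLayers(templateConfig, teamConfig, userConfig)` yields the final configuration sent to the satellite.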

+

+**Multi-Transport Support**:

+- `stdio` transport: Command and arguments for subprocess execution

+- `http` transport: URL and headers for HTTP proxy

+- `sse` transport: URL and headers for Server-Sent Events

+

+### Satellite Lifecycle Management

+

+**Registration Process**:

+- Satellites register with backend and receive API keys

+- Initial status set to 'inactive' for security

+- API keys stored as Argon2 hashes in database

+

+**Activation Process**:

+- Satellites send heartbeat after registration

+- Backend automatically sets status to 'active' on first heartbeat

+- Active satellites begin receiving actual commands
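A minimal sketch of the activation rule, assuming a two-state status field; `SatelliteRecord` is a hypothetical subset of a `satellites` row, not its actual schema.

```typescript
type SatelliteStatus = 'inactive' | 'active';

// Hypothetical subset of a `satellites` row.
interface SatelliteRecord {
  id: string;
  status: SatelliteStatus;
  lastHeartbeatAt: Date | null;
}

// Records a heartbeat. Any heartbeat leaves the satellite active; for a newly
// registered (inactive) satellite this is the activation step.
// Returns a new object and leaves the input unchanged.
function applyHeartbeat(satellite: SatelliteRecord, receivedAt: Date): SatelliteRecord {
  return { ...satellite, status: 'active', lastHeartbeatAt: receivedAt };
}
```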

+

+**Command Processing**:

+- Inactive satellites receive empty command arrays instead of 403 errors

+- Active satellites receive pending commands based on priority

+- Command results reported back to backend for status tracking

+

+## Architecture Pattern

+

+### Polling-Based Communication

+

+Satellites use **outbound-only HTTPS polling** to communicate with the backend, making them compatible with restrictive corporate firewalls:

+

+```
+┌─────────────────┐    Outbound HTTPS    ┌─────────────────┐
+│    Satellite    │ ───────────────────► │   DeployStack   │
+│     (Edge)      │                      │     Backend     │
+│                 │ ◄─────────────────── │     (Cloud)     │
+└─────────────────┘   Command Response   └─────────────────┘
+```

+

+### Dual Deployment Models

+

+**Global Satellites**: Cloud-hosted by DeployStack team

+- Serve all teams with resource isolation

+- Managed through global satellite management endpoints

+

+**Team Satellites**: Customer-deployed within corporate networks

+- Serve specific teams exclusively

+- Managed through team-scoped satellite management endpoints

+

+## Satellite Pairing Process

+

+### Registration Token Flow

+

+The satellite pairing process follows a secure two-phase approach:

+

+**Phase 1: Token Generation**

+- Administrators generate temporary registration tokens

+- Global tokens for global satellites (global_admin only)

+- Team tokens for team satellites (team_admin for specific teams)

+- Tokens have scope-specific prefixes and expiration times

+

+**Phase 2: Satellite Registration**

+- Satellites use registration tokens to authenticate initial pairing

+- Backend validates token scope and expiration

+- Permanent API keys issued after successful registration

+- Registration tokens marked as used (single-use security)

+

+### Token Security Model

+

+**Registration Token Prefixes**:

+- Global satellites: `deploystack_satellite_global_...`

+- Team satellites: `deploystack_satellite_team_...`

+

+**Token Characteristics**:

+- JWT-based with cryptographic signatures

+- Short expiration times (1 hour global, 24 hours team)

+- Single-use to prevent replay attacks

+- Audit trail for compliance
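A sketch of a cheap prefix pre-check using the prefixes listed above. The function names are hypothetical, and this does not replace verifying the JWT signature, expiry, and single-use status.

```typescript
type RegistrationScope = 'global' | 'team';

// Prefixes from the list above.
const REGISTRATION_TOKEN_PREFIXES: Record<RegistrationScope, string> = {
  global: 'deploystack_satellite_global_',
  team: 'deploystack_satellite_team_',
};

// Infers the token's scope from its prefix, or null if the prefix is unknown.
function scopeFromRegistrationToken(token: string): RegistrationScope | null {
  for (const scope of ['global', 'team'] as const) {
    if (token.startsWith(REGISTRATION_TOKEN_PREFIXES[scope])) return scope;
  }
  return null;
}

// Rejects, for example, a team token used to register a global satellite.
function tokenMatchesRequestedType(token: string, requested: RegistrationScope): boolean {
  return scopeFromRegistrationToken(token) === requested;
}
```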

+

+**Operational API Keys**:

+- Permanent keys for ongoing satellite communication

+- Scope-specific prefixes for security validation

+- Argon2 hashed storage in database

+- Support for key rotation and revocation

+

+## Command Orchestration

+

+### Command Queue Architecture

+

+The backend maintains a priority-based command queue system:

+

+**Command Types**:

+- `spawn`: Start new MCP server process

+- `kill`: Terminate MCP server process

+- `restart`: Restart existing MCP server

+- `configure`: Update MCP server configuration

+- `health_check`: Request process health status

+

+**Priority Levels**:

+- `immediate`: High-priority commands requiring instant execution

+- `high`: Important commands processed within minutes

+- `normal`: Standard commands processed during regular polling

+- `low`: Background maintenance commands
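A sketch of delivery ordering for the command types and priorities above. The tie-break (oldest first within a priority) is an assumption, and the type and function names are hypothetical.

```typescript
type CommandPriority = 'immediate' | 'high' | 'normal' | 'low';

interface QueuedCommand {
  id: string;
  type: 'spawn' | 'kill' | 'restart' | 'configure' | 'health_check';
  priority: CommandPriority;
  createdAt: Date;
}

// Lower rank is delivered first.
const PRIORITY_RANK: Record<CommandPriority, number> = { immediate: 0, high: 1, normal: 2, low: 3 };

// Orders commands by priority, then oldest first within the same priority.
// Returns a new array; the input is not mutated.
function orderForDelivery(commands: QueuedCommand[]): QueuedCommand[] {
  return [...commands].sort(
    (a, b) =>
      PRIORITY_RANK[a.priority] - PRIORITY_RANK[b.priority] ||
      a.createdAt.getTime() - b.createdAt.getTime(),
  );
}
```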

+

+### Adaptive Polling Strategy

+

+Satellites adjust polling behavior based on backend signals:

+

+**Polling Modes**:

+- **Immediate Mode**: 2-second intervals for urgent commands

+- **Normal Mode**: 30-second intervals for standard operations

+- **Backoff Mode**: Exponential backoff during errors or low activity
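A sketch of how a satellite could pick its next polling delay. The 2-second and 30-second intervals come from the list above; the backoff base (the normal interval) and the 5-minute cap are assumptions, and the names are hypothetical.

```typescript
type PollingMode = 'immediate' | 'normal' | 'backoff';

const IMMEDIATE_INTERVAL_MS = 2_000;
const NORMAL_INTERVAL_MS = 30_000;
const MAX_BACKOFF_MS = 5 * 60_000; // assumed cap

// Returns the delay before the next poll. In backoff mode the delay doubles
// with each consecutive failure, starting at the normal interval, up to the cap.
function nextPollDelayMs(mode: PollingMode, consecutiveFailures = 0): number {
  switch (mode) {
    case 'immediate':
      return IMMEDIATE_INTERVAL_MS;
    case 'normal':
      return NORMAL_INTERVAL_MS;
    case 'backoff':
      return Math.min(NORMAL_INTERVAL_MS * 2 ** consecutiveFailures, MAX_BACKOFF_MS);
  }
}
```

In a fleet with many satellites, adding random jitter to the backoff delay would keep them from retrying in lockstep after a shared outage.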

+

+**Optimization Features**:

+- Conditional polling based on last poll timestamp

+- Command batching to reduce API calls

+- Cache headers for efficient bandwidth usage

+- Circuit breaker patterns for error recovery

+

+### Command Lifecycle

+

+**Command Flow**:

+1. User action triggers command creation in backend

+2. Command added to priority queue with team context

+3. Satellite polls and retrieves pending commands

+4. Satellite executes command with team isolation

+5. Satellite reports execution results back to backend

+6. Backend updates command status and notifies user interface

+

+**Team Context Integration**:

+- All commands include team scope information

+- Team satellites only receive commands for their team

+- Global satellites process commands with team isolation

+- Audit trail with team attribution
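A sketch of the selection step behind command polling, combining the inactive-satellite rule from the lifecycle section with the team rules above. It assumes commands are addressed to a specific satellite and carry a team ID; the field names and status values are hypothetical.

```typescript
interface SatelliteContext {
  id: string;
  type: 'global' | 'team';
  teamId: string | null; // set only for team satellites
  status: 'inactive' | 'active';
}

interface PendingCommand {
  id: string;
  satelliteId: string;
  teamId: string;
  status: 'pending' | 'acknowledged' | 'completed' | 'failed';
}

// Inactive satellites get an empty array (not a 403). Active satellites get their
// own pending commands; a team satellite never sees another team's commands.
function commandsForPoll(satellite: SatelliteContext, queue: PendingCommand[]): PendingCommand[] {
  if (satellite.status !== 'active') return [];
  return queue.filter(
    (cmd) =>
      cmd.satelliteId === satellite.id &&
      cmd.status === 'pending' &&
      (satellite.type === 'global' || cmd.teamId === satellite.teamId),
  );
}
```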

+

+## Status Monitoring

+

+### Heartbeat System

+

+Satellites report health and performance metrics:

+

+**System Metrics**:

+- CPU usage percentage and memory consumption

+- Disk usage and network connectivity status

+- Process count and resource utilization

+- Uptime and stability indicators

+

+**Process Metrics**:

+- Individual MCP server process status

+- Health indicators (healthy/unhealthy/unknown)

+- Performance metrics (request count, response times)

+- Resource consumption per process

+

+### Real-Time Status Tracking

+

+The backend provides real-time satellite status information:

+

+**Satellite Health Monitoring**:

+- Connection status and last heartbeat timestamps

+- System resource usage trends

+- Process health aggregation

+- Alert generation for issues

+

+**Performance Analytics**:

+- Historical performance data collection

+- Usage pattern analysis for capacity planning

+- Team-specific metrics and reporting

+- Audit trail generation

+

+## Configuration Management

+

+### Dynamic Configuration Updates

+

+Satellites retrieve configuration updates without requiring restarts:

+

+**Configuration Categories**:

+- **Polling Settings**: Interval configuration and optimization parameters

+- **Resource Limits**: CPU, memory, and process count restrictions

+- **Team Settings**: Team-specific policies and allowed MCP servers

+- **Security Policies**: Access control and compliance requirements

+

+**Configuration Distribution**:

+- Push-based updates through command queue

+- Pull-based configuration refresh during polling

+- Version-controlled configuration management

+- Rollback capabilities for configuration errors

+

+### Team-Aware Configuration

+

+Configuration respects team boundaries and isolation:

+

+**Global Satellite Configuration**:

+- Platform-wide settings and resource allocation

+- Multi-tenant isolation policies

+- Global resource limits and quotas

+- Cross-team security boundaries

+

+**Team Satellite Configuration**:

+- Team-specific MCP server configurations

+- Custom resource limits per team

+- Team-defined security policies

+- Internal resource access settings

+

+## Database Schema Integration

+

+### Core Table Structure

+

+The satellite system integrates with existing DeployStack schema through 5 specialized tables. For detailed schema definitions, see [`services/backend/src/db/schema.sqlite.ts`](https://github.com/deploystackio/deploystack/blob/main/services/backend/src/db/schema.sqlite.ts).

+

+**Satellite Registry** (`satellites`):

+- Central registration of all satellites

+- Type classification (global/team) and ownership

+- Capability tracking and status monitoring

+- API key management and authentication

+

+**Command Queue** (`satelliteCommands`):

+- Priority-based command orchestration

+- Team context and correlation tracking

+- Expiration and retry management

+- Command lifecycle tracking

+

+**Process Tracking** (`satelliteProcesses`):

+- Real-time MCP server process monitoring

+- Health status and performance metrics

+- Team isolation and resource usage

+- Integration with existing MCP configuration system

+

+**Usage Analytics** (`satelliteUsageLogs`):

+- Audit trail for compliance

+- User attribution and team tracking

+- Performance analytics and billing data

+- Device tracking for enterprise security

+

+**Health Monitoring** (`satelliteHeartbeats`):

+- System metrics and resource monitoring

+- Process health aggregation

+- Alert generation and notification triggers

+- Historical health trend analysis

+

+### Team Isolation in Data Model

+

+All satellite data respects team boundaries:

+

+**Team-Scoped Data**:

+- Team satellites linked to specific teams

+- Process isolation per team context

+- Usage logs with team attribution

+- Configuration scoped to team access

+

+**Global Data with Team Context**:

+- Global satellites serve all teams with isolation

+- Cross-team usage tracking and analytics

+- Team-aware resource allocation

+- Compliance reporting per team

+

+## Authentication & Security

+

+### Multi-Layer Security Model

+

+**Registration Security**:

+- Temporary JWT tokens for initial pairing

+- Scope validation preventing privilege escalation

+- Single-use tokens with automatic expiration

+- Audit trail for security compliance

+

+**Operational Security**:

+- Permanent API keys for ongoing communication

+- Request authentication and authorization

+- Rate limiting and abuse prevention

+- IP whitelisting support for team satellites

+

+**Team Isolation Security**:

+- Team boundary enforcement

+- Resource isolation and access control

+- Cross-team data leakage prevention

+- Compliance with enterprise security policies

+

+### Role-Based Access Control Integration

+

+The satellite system integrates with DeployStack's existing role framework:

+

+**global_admin**:

+- Satellite system oversight

+- Global satellite registration and management

+- Cross-team analytics and monitoring

+- System-wide configuration control

+

+**team_admin**:

+- Team satellite registration and management

+- Team-scoped MCP server installation

+- Team resource monitoring and configuration

+- Team member access control

+

+**team_user**:

+- Satellite-hosted MCP server usage

+- Team satellite status visibility

+- Personal usage analytics access

+

+**global_user**:

+- Team satellite registration within memberships

+- Cross-team satellite usage through teams

+- Limited administrative capabilities

+

+## Integration Points

+

+### Existing DeployStack Systems

+

+**User Management Integration**:

+- Leverages existing authentication and session management

+- Integrates with current permission and role systems

+- Uses established user and team membership APIs

+- Maintains consistency with platform security model

+

+**MCP Configuration Integration**:

+- Builds on existing MCP server installation system

+- Extends current team-based configuration management

+- Integrates with established credential management

+- Maintains compatibility with existing MCP workflows

+

+**Monitoring Integration**:

+- Uses existing structured logging infrastructure

+- Integrates with current metrics collection system

+- Leverages established alerting and notification systems

+- Maintains consistency with platform observability

+

+## Development Implementation

+

+### Route Structure

+

+Satellite communication endpoints are organized in `services/backend/src/routes/satellites/`:

+

+```
+satellites/
+├── index.ts          # Route registration
+├── register.ts       # Satellite registration endpoint
+├── commands.ts       # Command polling and result reporting
+├── config.ts         # Configuration distribution
+├── heartbeat.ts      # Health monitoring and status updates
+└── manage/           # Management endpoints for frontend
+    ├── list.ts       # Satellite listing
+    └── status.ts     # Satellite status queries
+```

+

+### Authentication Middleware

+

+Satellite authentication uses dedicated middleware in `services/backend/src/middleware/satelliteAuthMiddleware.ts`:

+

+**Key Features**:

+- Argon2 hash verification for API key validation

+- Satellite context injection for route handlers

+- Dual authentication support (user cookies + satellite API keys)

+- Comprehensive error handling and logging

+

+**Usage Pattern**:

+```typescript
+import { requireSatelliteAuth } from '../../middleware/satelliteAuthMiddleware';
+
+server.get('/satellites/:satelliteId/commands', {
+  preValidation: [requireSatelliteAuth()],
+}, async (request, reply) => {
+  // Route implementation
+});
+```

+

+### Database Integration

+

+The satellite system extends the existing database schema with 5 specialized tables:

+

+**Schema Location**: `services/backend/src/db/schema.sqlite.ts`

+

+**Table Relationships**:

+- `satellites` table links to existing `teams` and `authUser` tables

+- `satelliteProcesses` table references `mcpServerInstallations` for team context

+- `satelliteCommands` table includes team context for command execution

+- All tables use foreign keys to existing tables to enforce referential integrity

+

+### Configuration Query Implementation

+

+The configuration endpoint joins installation, server, and team data and merges template and team-specific MCP server configurations:

+

+**Query Strategy**:

+- Join `mcpServerInstallations`, `mcpServers`, and `teams` tables

+- Global satellites: Query ALL team installations

+- Team satellites: Query only specific team installations

+- JSON field parsing with comprehensive error handling
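+
+A minimal sketch of the global/team scoping rule, written as an in-memory filter over rows that have already been joined. The `InstallationRow` and `SatelliteScope` shapes and the `'global' | 'team'` values are assumptions; the real query presumably applies the equivalent condition in its SQL `WHERE` clause so that other teams' rows are never loaded.
+
+```typescript
+// Hypothetical joined row (mcpServerInstallations + mcpServers + teams).
+interface InstallationRow {
+  installation_name: string;
+  team_id: string;
+}
+
+// Hypothetical satellite shape; the 'global' and 'team' values are assumed.
+interface SatelliteScope {
+  satellite_type: 'global' | 'team';
+  team_id: string | null;
+}
+
+// In-memory equivalent of the filter the configuration query would apply.
+function selectInstallationsForSatellite<T extends InstallationRow>(
+  rows: T[],
+  satellite: SatelliteScope,
+): T[] {
+  if (satellite.satellite_type === 'global') {
+    return rows; // global satellites serve every team's installations
+  }
+  if (satellite.team_id === null) {
+    return []; // a team satellite with no team sees nothing (fail closed)
+  }
+  return rows.filter((row) => row.team_id === satellite.team_id);
+}
+```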

+

+**Configuration Merging Logic**:

+```typescript

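+// `installation` is one row returned by the configuration query.
+// JSON.parse throws on malformed input, so invalid configurations must be caught and skipped.
+const templateArgs: string[] = JSON.parse(installation.template_args || '[]');
+const teamArgs: string[] = JSON.parse(installation.team_args || '[]');
+const templateEnv: Record<string, string> = JSON.parse(installation.template_env || '{}');
+const teamEnv: Record<string, string> = JSON.parse(installation.team_env || '{}');
+
+// Arguments: team args are appended after the template args; nothing is replaced
+const finalArgs = [...templateArgs, ...teamArgs];
+
+// Environment: the later spread wins, so team values override template values per key
+const finalEnv = { ...templateEnv, ...teamEnv };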

+```
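+
+The merging snippet handles a single row and lets `JSON.parse` errors propagate. One way to get the "skip invalid JSON with a warning" behavior listed under Error Handling Patterns is to wrap the merge per row, as in this hypothetical sketch; `InstallationConfigRow`, `MergedConfig`, `mergeInstallationConfig`, `buildConfigs`, and the injected `warn` callback are illustrative names, not the backend's actual code.
+
+```typescript
+// Hypothetical row shape: the four JSON text columns used by the merging logic.
+interface InstallationConfigRow {
+  installation_name: string;
+  template_args: string | null;
+  team_args: string | null;
+  template_env: string | null;
+  team_env: string | null;
+}
+
+interface MergedConfig {
+  installation_name: string;
+  args: string[];
+  env: Record<string, string>;
+}
+
+// Merges one row; returns null and emits a warning if any column is unusable.
+function mergeInstallationConfig(
+  row: InstallationConfigRow,
+  warn: (message: string) => void,
+): MergedConfig | null {
+  try {
+    const templateArgs: unknown = JSON.parse(row.template_args || '[]');
+    const teamArgs: unknown = JSON.parse(row.team_args || '[]');
+    if (!Array.isArray(templateArgs) || !Array.isArray(teamArgs)) {
+      throw new Error('args columns must contain JSON arrays');
+    }
+    const templateEnv: Record<string, string> = JSON.parse(row.template_env || '{}');
+    const teamEnv: Record<string, string> = JSON.parse(row.team_env || '{}');
+    return {
+      installation_name: row.installation_name,
+      args: [...templateArgs, ...teamArgs], // team args appended
+      env: { ...templateEnv, ...teamEnv }, // team env wins on key conflicts
+    };
+  } catch (err) {
+    warn(`Skipping ${row.installation_name}: ${err instanceof Error ? err.message : String(err)}`);
+    return null;
+  }
+}
+
+// Builds the configuration list, dropping rows that failed to parse.
+function buildConfigs(
+  rows: InstallationConfigRow[],
+  warn: (message: string) => void,
+): MergedConfig[] {
+  return rows
+    .map((row) => mergeInstallationConfig(row, warn))
+    .filter((config): config is MergedConfig => config !== null);
+}
+```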

+

+### Error Handling Patterns

+

+**Graceful Degradation**:

+- Inactive satellites receive empty command arrays instead of 403 errors

+- Invalid JSON configurations are skipped with warning logs

+- Failed satellite authentication returns 401 with structured error messages
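+
+A small sketch of how a command-polling handler might map these rules to responses. The status values, the `PendingCommand` fields, the `buildPollResponse` name, and the `{ error }` body shape are assumptions; the text above only states that inactive satellites get an empty command array and failed authentication gets a 401 with a structured error.
+
+```typescript
+// Hypothetical types; status values and body shapes are assumptions.
+type SatelliteStatus = 'active' | 'inactive';
+
+interface PendingCommand {
+  id: string;
+  command_type: string;
+}
+
+interface PollResponse {
+  statusCode: number;
+  body: { commands: PendingCommand[] } | { error: string };
+}
+
+function buildPollResponse(
+  authenticated: boolean,
+  status: SatelliteStatus,
+  pending: PendingCommand[],
+): PollResponse {
+  // Failed authentication: 401 with a structured error body
+  if (!authenticated) {
+    return { statusCode: 401, body: { error: 'Invalid satellite credentials' } };
+  }
+  // Inactive satellite: a normal 200 with no commands instead of a 403
+  if (status !== 'active') {
+    return { statusCode: 200, body: { commands: [] } };
+  }
+  return { statusCode: 200, body: { commands: pending } };
+}
+```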

+

+**Comprehensive Logging**:

+- Structured logging with operation identifiers

+- Error context preservation for debugging

+- Performance metrics collection (response times, success rates)
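+
+The sketch below shows one way to attach an operation identifier, duration, and outcome to each log entry and to derive a success rate from those entries. `withOperationLog`, `OperationLog`, `successRate`, and the dotted identifier style are hypothetical; in the backend the `log` callback would presumably forward to the existing structured logger rather than to an in-memory array.
+
+```typescript
+// Hypothetical structured log entry; field names are illustrative.
+interface OperationLog {
+  operation: string; // e.g. 'satellite.commands.poll' (hypothetical identifier)
+  durationMs: number;
+  success: boolean;
+  error?: string;
+}
+
+// Runs `fn` and logs its duration and outcome under one operation identifier.
+// Failures are logged with their message, then rethrown so callers keep the error.
+async function withOperationLog<T>(
+  operation: string,
+  fn: () => Promise<T>,
+  log: (entry: OperationLog) => void,
+): Promise<T> {
+  const start = Date.now();
+  try {
+    const result = await fn();
+    log({ operation, durationMs: Date.now() - start, success: true });
+    return result;
+  } catch (err) {
+    log({
+      operation,
+      durationMs: Date.now() - start,
+      success: false,
+      error: err instanceof Error ? err.message : String(err),
+    });
+    throw err;
+  }
+}
+
+// Fraction of successful entries for one operation; 0 when there are none.
+function successRate(entries: OperationLog[], operation: string): number {
+  const relevant = entries.filter((e) => e.operation === operation);
+  if (relevant.length === 0) return 0;
+  return relevant.filter((e) => e.success).length / relevant.length;
+}
+```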

+

+### Development Workflow

+

+**Local Development Setup**:

+```bash

+# Backend setup

+cd services/backend

+npm install

+npm run dev # Starts on http://localhost:3000

+

+# Satellite setup (separate terminal)

+cd services/satellite

+npm install

+npm run dev # Starts on http://localhost:3001

+```

+

+**Testing Satellite Communication**:

+1. Start the backend server.
+2. Start the satellite; it registers with the backend automatically.
+3. Watch the logs for successful command polling and configuration retrieval.
+4. Inspect the satellite tables and command queue with a SQLite client.

+

+**Database Inspection**:

+```bash

+# View registered satellites
+sqlite3 services/backend/persistent_data/database/deploystack.db \
+  "SELECT id, name, satellite_type, status FROM satellites;"
+
+# View MCP server installations
+sqlite3 services/backend/persistent_data/database/deploystack.db \
+  "SELECT installation_name, team_id FROM mcpServerInstallations;"

+```

+

+## API Documentation

+

+For detailed API endpoints, request/response formats, and authentication patterns, see the [API Specification](/development/backend/api) generated from the backend OpenAPI schema.

+

+## Related Documentation

+

+For related backend documentation:

+

+- [API Security](/development/backend/api-security) - Security patterns and authorization

+- [Database Management](/development/backend/database) - Schema and data management

+- [OAuth2 Server](/development/backend/oauth2-server) - OAuth2 implementation details

diff --git a/docs/development/index.mdx b/docs/development/index.mdx

index aa4e461..3d8111a 100644

--- a/docs/development/index.mdx

+++ b/docs/development/index.mdx

@@ -1,25 +1,25 @@

---

title: Development Guide

-description: Complete development documentation for DeployStack - covering frontend, backend, and contribution guidelines for the MCP server management platform.

+description: Complete development documentation for DeployStack - the first MCP-as-a-Service platform with satellite infrastructure and cloud control plane.

icon: FileCode

---

import { Card, Cards } from 'fumadocs-ui/components/card';

-import { Code2, Server, GitBranch, Users, Shield } from 'lucide-react';

+import { Code2, Server, Cloud, Users } from 'lucide-react';

# DeployStack Development

-Welcome to the DeployStack development documentation! DeployStack is a comprehensive enterprise platform for managing Model Context Protocol (MCP) servers, featuring a cloud control plane, local gateway proxy, and modern web interface for team-based MCP server orchestration.

+Welcome to the DeployStack development documentation! DeployStack eliminates MCP adoption friction by transforming complex installations into simple URL configurations through managed satellite infrastructure.

## Architecture Overview

-DeployStack implements a sophisticated Control Plane / Data Plane architecture for enterprise MCP server management:

+DeployStack implements an MCP-as-a-Service architecture built from the following components:

-- **Frontend**: Vue 3 + TypeScript web application providing the management interface for MCP server configurations

-- **Backend**: Fastify-based cloud control plane handling authentication, team management, and configuration distribution

-- **Gateway**: Local secure proxy that runs on developer machines, managing MCP server processes and credential injection

+- **Frontend**: Vue 3 + TypeScript web application (cloud.deploystack.io) for team management and configuration

+- **Backend**: Fastify-based cloud control plane handling authentication, teams, and satellite coordination

+- **Satellite Infrastructure**: Managed MCP servers accessible via HTTPS URLs - no installation required

- **Shared**: Common utilities and TypeScript types used across all services

-- **Dual Transport**: Supports both stdio (CLI tools) and SSE (VS Code) protocols for maximum compatibility

+- **Dual Deployment**: Global satellites (DeployStack-managed) and team satellites (customer-deployed)

## Development Areas

@@ -29,7 +29,7 @@ DeployStack implements a sophisticated Control Plane / Data Plane architecture f

href="/development/frontend"

title="Frontend Development"

>

- Vue 3 web application with TypeScript, Vite, and shadcn-vue components. Direct fetch API patterns, SFC components, and internationalization.

+ Vue 3 web application with TypeScript, Vite, and shadcn-vue components. Team management interface for satellite configuration and usage analytics.

- Fastify cloud control plane with Drizzle ORM, plugin architecture, role-based access control, and OpenAPI documentation generation.

+ Fastify cloud control plane with Drizzle ORM, JWT authentication, team management, and satellite coordination APIs.

}

- href="/development/gateway"

- title="Gateway Development"

+ icon={}

+ href="/development/satellite"

+ title="Satellite Development"

>

- Local secure proxy managing MCP server processes, credential injection, dual transport protocols (stdio/SSE), and team-based access control.

+ MCP-as-a-Service infrastructure with global satellites, team satellites, zero-installation access, and enterprise-grade security.

@@ -56,7 +56,7 @@ DeployStack implements a sophisticated Control Plane / Data Plane architecture f

- Node.js 18 or higher

- npm 8 or higher

- Git for version control

-- DeployStack account at [cloud.deploystack.io](https://cloud.deploystack.io) (for gateway development)

+- DeployStack account at [cloud.deploystack.io](https://cloud.deploystack.io) (for satellite testing)

### Quick Setup

@@ -68,7 +68,7 @@ cd deploystack

# Install dependencies for all services

cd services/frontend && npm install

cd ../backend && npm install

-cd ../gateway && npm install

+cd ../satellite && npm install

# Start development servers (in separate terminals)

# Terminal 1 - Backend

@@ -77,16 +77,16 @@ cd services/backend && npm run dev # http://localhost:3000

# Terminal 2 - Frontend

cd services/frontend && npm run dev # http://localhost:5173

-# Terminal 3 - Gateway (optional, for local MCP testing)

-cd services/gateway && npm run dev # http://localhost:9095

+# Terminal 3 - Satellite (for local satellite testing)