
Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

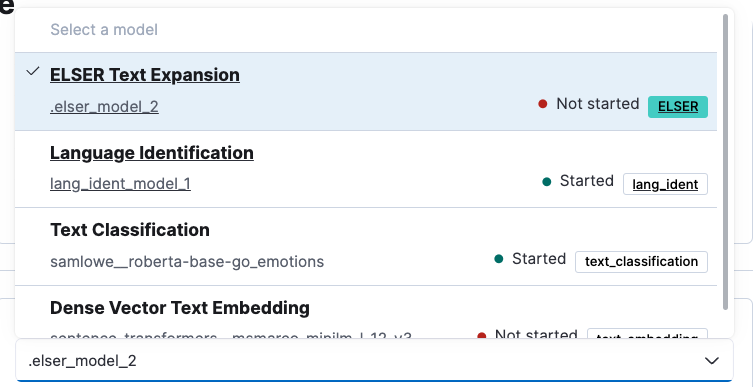

[Enterprise Search] Replace model selection dropdown with list (#171436)

## Summary

This PR replaces the model selection dropdown in the ML inference pipeline configuration flyout with a cleaner selection list. The model cards also contain fast deploy action buttons for promoted models (ELSER, E5). The list is periodically updated.

Old:

New:
<img width="1442" alt="Screenshot 2023-11-30 at 15 13 46" src="https://github.com/elastic/kibana/assets/14224983/fd439280-6dce-4973-b622-08ad3e34e665">

### Checklist

- [x] [Unit or functional tests](https://www.elastic.co/guide/en/kibana/master/development-tests.html) were updated or added to match the most common scenarios
- [x] Any UI touched in this PR is usable by keyboard only (learn more about [keyboard accessibility](https://webaim.org/techniques/keyboard/))
- [ ] Any UI touched in this PR does not create any new axe failures (run axe in browser: [FF](https://addons.mozilla.org/en-US/firefox/addon/axe-devtools/), [Chrome](https://chrome.google.com/webstore/detail/axe-web-accessibility-tes/lhdoppojpmngadmnindnejefpokejbdd?hl=en-US))
- [x] This renders correctly on smaller devices using a responsive layout. (You can test this [in your browser](https://www.browserstack.com/guide/responsive-testing-on-local-server))

---------

Co-authored-by: kibanamachine <42973632+kibanamachine@users.noreply.github.com>
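The summary says the model list is periodically updated. The refresh logic lives in `ModelSelectLogic`, which is not part of the diff shown here, so the following is only a generic polling sketch under that caveat, not the PR's implementation. `startPolling` and `fetchModels` are hypothetical names, not Kibana or EUI APIs.

```typescript
// Hypothetical helper (not from the PR): runs `refresh` once right away,
// then every `intervalMs` milliseconds until the returned stop function is called.
function startPolling(refresh: () => void, intervalMs: number): () => void {
  if (!(intervalMs > 0)) {
    throw new RangeError('intervalMs must be a positive number');
  }
  refresh(); // load the list immediately instead of waiting for the first tick
  const timer = setInterval(refresh, intervalMs);
  return () => clearInterval(timer);
}

// Usage sketch: `fetchModels` stands in for whatever reloads the trained models.
let fetchCount = 0;
const fetchModels = () => {
  fetchCount += 1;
};
const stop = startPolling(fetchModels, 5000);
stop(); // e.g. when the flyout closes
```

In a React component, returning the stop function from a `useEffect` callback would tie the polling to the component's lifetime.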


1 parent 1533f30, commit 2c4d0a3

Showing 13 changed files with 942 additions and 99 deletions.


`...prise_search_content/components/search_index/pipelines/ml_inference/model_select.test.tsx` (136 changes: 136 additions & 0 deletions)


`@@ -0,0 +1,136 @@`

```tsx
/*
 * Copyright Elasticsearch B.V. and/or licensed to Elasticsearch B.V. under one
 * or more contributor license agreements. Licensed under the Elastic License
 * 2.0; you may not use this file except in compliance with the Elastic License
 * 2.0.
 */

import { setMockActions, setMockValues } from '../../../../../__mocks__/kea_logic';

import React from 'react';

import { shallow } from 'enzyme';

import { EuiSelectable } from '@elastic/eui';

import { ModelSelect } from './model_select';

const DEFAULT_VALUES = {
  addInferencePipelineModal: {
    configuration: {},
  },
  selectableModels: [
    {
      modelId: 'model_1',
    },
    {
      modelId: 'model_2',
    },
  ],
  indexName: 'my-index',
};
const MOCK_ACTIONS = {
  setInferencePipelineConfiguration: jest.fn(),
};

describe('ModelSelect', () => {
  beforeEach(() => {
    jest.clearAllMocks();
    setMockValues({});
    setMockActions(MOCK_ACTIONS);
  });
  it('renders model select with no options', () => {
    setMockValues({
      ...DEFAULT_VALUES,
      selectableModels: null,
    });

    const wrapper = shallow(<ModelSelect />);
    expect(wrapper.find(EuiSelectable)).toHaveLength(1);
    const selectable = wrapper.find(EuiSelectable);
    expect(selectable.prop('options')).toEqual([]);
  });
  it('renders model select with options', () => {
    setMockValues(DEFAULT_VALUES);

    const wrapper = shallow(<ModelSelect />);
    expect(wrapper.find(EuiSelectable)).toHaveLength(1);
    const selectable = wrapper.find(EuiSelectable);
    expect(selectable.prop('options')).toEqual([
      {
        modelId: 'model_1',
        label: 'model_1',
      },
      {
        modelId: 'model_2',
        label: 'model_2',
      },
    ]);
  });
  it('selects the chosen option', () => {
    setMockValues({
      ...DEFAULT_VALUES,
      addInferencePipelineModal: {
        configuration: {
          ...DEFAULT_VALUES.addInferencePipelineModal.configuration,
          modelID: 'model_2',
        },
      },
    });

    const wrapper = shallow(<ModelSelect />);
    expect(wrapper.find(EuiSelectable)).toHaveLength(1);
    const selectable = wrapper.find(EuiSelectable);
    expect(selectable.prop('options')[1].checked).toEqual('on');
  });
  it('sets model ID on selecting an item and clears config', () => {
    setMockValues(DEFAULT_VALUES);

    const wrapper = shallow(<ModelSelect />);
    expect(wrapper.find(EuiSelectable)).toHaveLength(1);
    const selectable = wrapper.find(EuiSelectable);
    selectable.simulate('change', [{ modelId: 'model_1' }, { modelId: 'model_2', checked: 'on' }]);
    expect(MOCK_ACTIONS.setInferencePipelineConfiguration).toHaveBeenCalledWith(
      expect.objectContaining({
        inferenceConfig: undefined,
        modelID: 'model_2',
        fieldMappings: undefined,
      })
    );
  });
  it('generates pipeline name on selecting an item', () => {
    setMockValues(DEFAULT_VALUES);

    const wrapper = shallow(<ModelSelect />);
    expect(wrapper.find(EuiSelectable)).toHaveLength(1);
    const selectable = wrapper.find(EuiSelectable);
    selectable.simulate('change', [{ modelId: 'model_1' }, { modelId: 'model_2', checked: 'on' }]);
    expect(MOCK_ACTIONS.setInferencePipelineConfiguration).toHaveBeenCalledWith(
      expect.objectContaining({
        pipelineName: 'my-index-model_2',
      })
    );
  });
  it('does not generate pipeline name on selecting an item if a name was supplied by the user', () => {
    setMockValues({
      ...DEFAULT_VALUES,
      addInferencePipelineModal: {
        configuration: {
          ...DEFAULT_VALUES.addInferencePipelineModal.configuration,
          pipelineName: 'user-pipeline',
          isPipelineNameUserSupplied: true,
        },
      },
    });

    const wrapper = shallow(<ModelSelect />);
    expect(wrapper.find(EuiSelectable)).toHaveLength(1);
    const selectable = wrapper.find(EuiSelectable);
    selectable.simulate('change', [{ modelId: 'model_1' }, { modelId: 'model_2', checked: 'on' }]);
    expect(MOCK_ACTIONS.setInferencePipelineConfiguration).toHaveBeenCalledWith(
      expect.objectContaining({
        pipelineName: 'user-pipeline',
      })
    );
  });
});
```

`...enterprise_search_content/components/search_index/pipelines/ml_inference/model_select.tsx` (81 changes: 81 additions & 0 deletions)


`@@ -0,0 +1,81 @@`

```tsx
/*
 * Copyright Elasticsearch B.V. and/or licensed to Elasticsearch B.V. under one
 * or more contributor license agreements. Licensed under the Elastic License
 * 2.0; you may not use this file except in compliance with the Elastic License
 * 2.0.
 */

import React from 'react';

import { useActions, useValues } from 'kea';

import { EuiSelectable, useIsWithinMaxBreakpoint } from '@elastic/eui';

import { MlModel } from '../../../../../../../common/types/ml';
import { IndexNameLogic } from '../../index_name_logic';
import { IndexViewLogic } from '../../index_view_logic';

import { MLInferenceLogic } from './ml_inference_logic';
import { ModelSelectLogic } from './model_select_logic';
import { ModelSelectOption, ModelSelectOptionProps } from './model_select_option';
import { normalizeModelName } from './utils';

export const ModelSelect: React.FC = () => {
  const { indexName } = useValues(IndexNameLogic);
  const { ingestionMethod } = useValues(IndexViewLogic);
  const {
    addInferencePipelineModal: { configuration },
  } = useValues(MLInferenceLogic);
  const { selectableModels, isLoading } = useValues(ModelSelectLogic);
  const { setInferencePipelineConfiguration } = useActions(MLInferenceLogic);

  const { modelID, pipelineName, isPipelineNameUserSupplied } = configuration;

  const getModelSelectOptionProps = (models: MlModel[]): ModelSelectOptionProps[] =>
    (models ?? []).map((model) => ({
      ...model,
      label: model.modelId,
      checked: model.modelId === modelID ? 'on' : undefined,
    }));

  const onChange = (options: ModelSelectOptionProps[]) => {
    const selectedOption = options.find((option) => option.checked === 'on');
    setInferencePipelineConfiguration({
      ...configuration,
      inferenceConfig: undefined,
      modelID: selectedOption?.modelId ?? '',
      fieldMappings: undefined,
      pipelineName: isPipelineNameUserSupplied
        ? pipelineName
        : indexName + '-' + normalizeModelName(selectedOption?.modelId ?? ''),
    });
  };

  const renderOption = (option: ModelSelectOptionProps) => <ModelSelectOption {...option} />;

  return (
    <EuiSelectable
      data-telemetry-id={`entSearchContent-${ingestionMethod}-pipelines-configureInferencePipeline-selectTrainedModel`}
      options={getModelSelectOptionProps(selectableModels)}
      singleSelection="always"
      listProps={{
        bordered: true,
        rowHeight: useIsWithinMaxBreakpoint('s') ? 180 : 90,
        showIcons: false,
        onFocusBadge: false,
      }}
      height={360}
      onChange={onChange}
      renderOption={renderOption}
      isLoading={isLoading}
      searchable
    >
      {(list, search) => (
        <>
          {search}
          {list}
        </>
      )}
    </EuiSelectable>
  );
};
```
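The selection behavior of `ModelSelect` (mark the configured model as checked, clear model-specific settings on change, regenerate the pipeline name unless the user supplied one) can be restated as plain functions, which may make the tests in `model_select.test.tsx` easier to follow. This is a framework-free sketch, not the component's code: `Model`, `ModelOption`, `PipelineConfig`, `toOptions`, and `applySelection` are hypothetical names, and the `normalizeModelName` below is an assumed stand-in because the real helper in `./utils` is not part of this diff.

```typescript
// Simplified stand-ins for MlModel / ModelSelectOptionProps (assumptions).
interface Model {
  modelId: string;
}

interface ModelOption extends Model {
  label: string;
  checked?: 'on';
}

interface PipelineConfig {
  modelID: string;
  pipelineName: string;
  isPipelineNameUserSupplied?: boolean;
  inferenceConfig?: unknown;
  fieldMappings?: unknown;
}

// Assumed normalization: lower-case, replace anything outside [a-z0-9_-] with "_".
// The real normalizeModelName in ./utils may behave differently.
function normalizeModelName(modelId: string): string {
  return modelId.toLowerCase().replace(/[^a-z0-9_-]/g, '_');
}

// Mirrors getModelSelectOptionProps: tolerates null, labels by ID,
// and checks the option whose ID matches the configured model.
function toOptions(models: Model[] | null, selectedId: string): ModelOption[] {
  const list: Model[] = models ?? [];
  return list.map(
    (model): ModelOption => ({
      ...model,
      label: model.modelId,
      checked: model.modelId === selectedId ? 'on' : undefined,
    })
  );
}

// Mirrors onChange: take the checked option, reset model-specific settings,
// and derive "<index>-<model>" unless the pipeline name came from the user.
function applySelection(
  config: PipelineConfig,
  options: ModelOption[],
  indexName: string
): PipelineConfig {
  const selectedId = options.find((option) => option.checked === 'on')?.modelId ?? '';
  return {
    ...config,
    inferenceConfig: undefined,
    modelID: selectedId,
    fieldMappings: undefined,
    pipelineName: config.isPipelineNameUserSupplied
      ? config.pipelineName
      : `${indexName}-${normalizeModelName(selectedId)}`,
  };
}

// Usage, matching the "generates pipeline name" test scenario:
const options = toOptions([{ modelId: 'model_1' }, { modelId: 'model_2' }], 'model_2');
const next = applySelection({ modelID: '', pipelineName: '' }, options, 'my-index');
console.log(next.modelID, next.pipelineName); // model_2 my-index-model_2
```

Keeping this logic in pure functions would let it be unit-tested without Enzyme or Kea mocks; whether that split suits the Kibana codebase is a judgment call the PR does not address.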
