From aacd285f2fcfdf102a803d28dd143fbea0cb8b31 Mon Sep 17 00:00:00 2001

From: Matt Dodson <47385188+MattDodsonEnglish@users.noreply.github.com>

Date: Wed, 26 Apr 2023 15:39:59 -0300

Subject: [PATCH 01/17] wip: stub content for metrics restructure

---

.../en/02 Using k6/02 Metrics.md | 359 +-----------------

.../02 Metrics/100 Create custom metrics.md | 234 ++++++++++++

.../200 Calculate metric timings.md | 40 ++

.../02 Using k6/02 Metrics/999-reference.md | 167 ++++++++

4 files changed, 451 insertions(+), 349 deletions(-)

create mode 100644 src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md

create mode 100644 src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/200 Calculate metric timings.md

create mode 100644 src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/999-reference.md

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

index 41ffcb2b37..324211b916 100644

--- a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

+++ b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

@@ -13,362 +13,23 @@ Metrics fall into four broad types:

- _Rates_ track how frequently a non-zero value occurs.

- _Trends_ calculate statistics for multiple values (like mean or mode).

-## Built-in metrics

+If you want a test to fail when a metric doesn't meet certain criteria, you can write a [threshold](/using-k6/thresholds) based on that metric (the specifics of the expression depend on the metric type).

-The _built-in_ metrics output to `stdout` when you run the simplest possible k6 test:

-

+| On this page... | Read about... |

+|------------------------------------------------------------------------|--------------------------------------------------------------------------------|

+| [Create custom metrics](/using-k6/metrics/create-custom-metrics) | How to build your own metric for each metric type |

+| [Calculate metric timings](/using-k6/metrics/calculate-http-timings) | How to get timings from an individual request |

+| [Metrics reference](/using-k6/metrics/reference) | Each built-in metric for each supported [protocol](/using-k6/protocols) |

-```javascript

-import http from 'k6/http';

-export default function () {

- http.get('https://test-api.k6.io/');

-}

-```

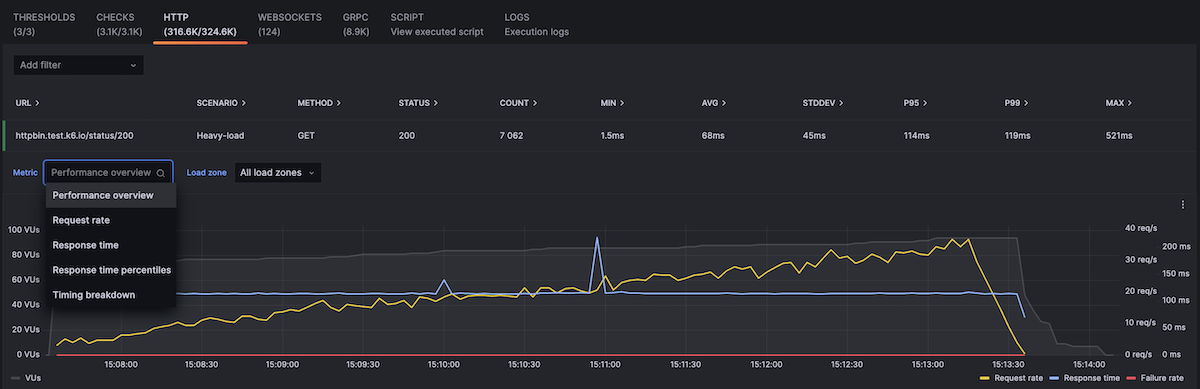

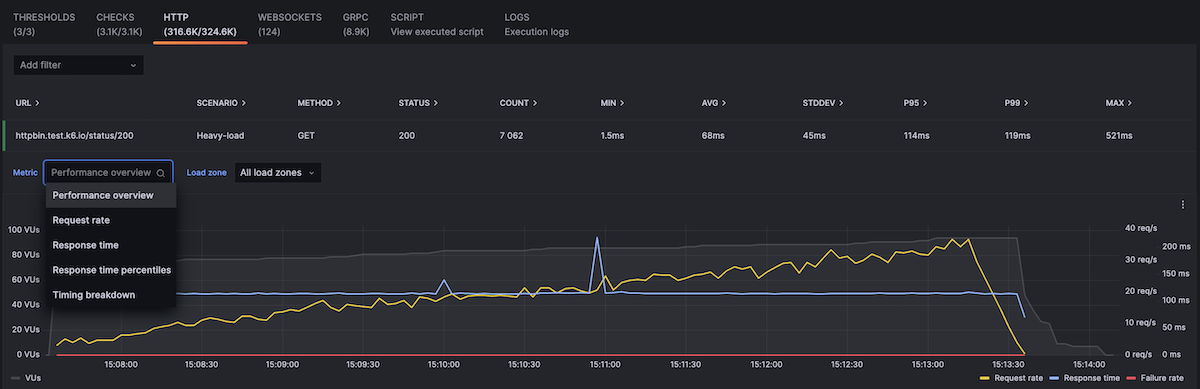

+## Metric graphs in Grafana Cloud k6

-

-

-Running the preceding script outputs something like this:

-

-

-

-```bash

-$ k6 run script.js

-

- /\ |‾‾| /‾‾/ /‾‾/

- /\ / \ | |/ / / /

- / \/ \ | ( / ‾‾\

- / \ | |\ \ | (‾) |

- / __________ \ |__| \__\ \_____/ .io

-

- execution: local

- script: http_get.js

- output: -

-

- scenarios: (100.00%) 1 scenario, 1 max VUs, 10m30s max duration (incl. graceful stop):

- * default: 1 iterations for each of 1 VUs (maxDuration: 10m0s, gracefulStop: 30s)

-

-

-running (00m03.8s), 0/1 VUs, 1 complete and 0 interrupted iterations

-default ✓ [======================================] 1 VUs 00m03.8s/10m0s 1/1 iters, 1 per VU

-

- data_received..................: 22 kB 5.7 kB/s

- data_sent......................: 742 B 198 B/s

- http_req_blocked...............: avg=1.05s min=1.05s med=1.05s max=1.05s p(90)=1.05s p(95)=1.05s

- http_req_connecting............: avg=334.26ms min=334.26ms med=334.26ms max=334.26ms p(90)=334.26ms p(95)=334.26ms

- http_req_duration..............: avg=2.7s min=2.7s med=2.7s max=2.7s p(90)=2.7s p(95)=2.7s

- { expected_response:true }...: avg=2.7s min=2.7s med=2.7s max=2.7s p(90)=2.7s p(95)=2.7s

- http_req_failed................: 0.00% ✓ 0 ✗ 1

- http_req_receiving.............: avg=112.41µs min=112.41µs med=112.41µs max=112.41µs p(90)=112.41µs p(95)=112.41µs

- http_req_sending...............: avg=294.48µs min=294.48µs med=294.48µs max=294.48µs p(90)=294.48µs p(95)=294.48µs

- http_req_tls_handshaking.......: avg=700.6ms min=700.6ms med=700.6ms max=700.6ms p(90)=700.6ms p(95)=700.6ms

- http_req_waiting...............: avg=2.7s min=2.7s med=2.7s max=2.7s p(90)=2.7s p(95)=2.7s

- http_reqs......................: 1 0.266167/s

- iteration_duration.............: avg=3.75s min=3.75s med=3.75s max=3.75s p(90)=3.75s p(95)=3.75s

- iterations.....................: 1 0.266167/s

- vus............................: 1 min=1 max=1

- vus_max........................: 1 min=1 max=1

-```

-

-

-

-In that output, all the metrics that start with `http`, `iteration`, and `vu` are _built-in_ metrics, which get written to stdout at the end of a test.

-

-k6 always collects the following built-in metrics:

-

-| Metric Name | Type | Description |

-|--------------------|---------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| vus | Gauge | Current number of active virtual users |

-| vus_max | Gauge | Max possible number of virtual users (VU resources are pre-allocated, ensuring performance will not be affected when scaling up the load level) |

-| iterations | Counter | The aggregate number of times the VUs executed the JS script (the `default` function). |

-| iteration_duration | Trend | The time it took to complete one full iteration, including time spent in `setup` and `teardown`. To calculate the duration of the iteration's function for the specific scenario, [try this workaround](/using-k6/workaround-to-calculate-iteration_duration) |

-| dropped_iterations | Counter | The number of iterations that weren't started due to lack of VUs (for the arrival-rate executors) or lack of time (expired maxDuration in the iteration-based executors). [About dropped iterations](/using-k6/scenarios/concepts/dropped-iterations/) |

-| data_received | Counter | The amount of received data. [This example covers how to track data for an individual URL](/examples/track-transmitted-data-per-url). |

-| data_sent | Counter | The amount of data sent. [Track data for an individual URL](/examples/track-transmitted-data-per-url) to track data for an individual URL. |

-| checks | Rate | The rate of successful checks. |

-

-## HTTP-specific built-in metrics

-

-These metrics are generated only when the test makes HTTP requests.

-

-

-

-For all `http_req_*` metrics, **the timestamp is emitted the end of the request.**

-In other words, the timestamp happens when k6 receives the end of the response body, or the request times out.

-

-

-

-

-| Metric Name | Type | Description |

-|--------------------------|---------|----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| http_reqs | Counter | How many total HTTP requests k6 generated. |

-| http_req_blocked | Trend | Time spent blocked (waiting for a free TCP connection slot) before initiating the request. `float` |

-| http_req_connecting | Trend | Time spent establishing TCP connection to the remote host. `float` |

-| http_req_tls_handshaking | Trend | Time spent handshaking TLS session with remote host |

-| http_req_sending | Trend | Time spent sending data to the remote host. `float` |

-| http_req_waiting | Trend | Time spent waiting for response from remote host (a.k.a. “time to first byte”, or “TTFB”). `float` |

-| http_req_receiving | Trend | Time spent receiving response data from the remote host. `float` |

-| http_req_duration | Trend | Total time for the request. It's equal to `http_req_sending + http_req_waiting + http_req_receiving` (i.e. how long did the remote server take to process the request and respond, without the initial DNS lookup/connection times). `float` |

-| http_req_failed | Rate | The rate of failed requests according to [setResponseCallback](/javascript-api/k6-http/setresponsecallback). |

-| | | |

-

-### Accessing HTTP timings from a script

-

-To access the timing information from an individual HTTP request, the [Response.timings](/javascript-api/k6-http/response) object provides the time spent on the various phases in `ms`:

-

-- blocked: equals to `http_req_blocked`.

-- connecting: equals to `http_req_connecting`.

-- tls_handshaking: equals to `http_req_tls_handshaking`.

-- sending: equals to `http_req_sending`.

-- waiting: equals to `http_req_waiting`.

-- receiving: equals to `http_req_receiving`.

-- duration: equals to `http_req_duration`.

-

-

-

-```javascript

-import http from 'k6/http';

-

-export default function () {

- const res = http.get('http://httpbin.test.k6.io');

- console.log('Response time was ' + String(res.timings.duration) + ' ms');

-}

-```

-

-

-

-The expected (partial) output looks like this:

-

-

-

-```bash

-$ k6 run script.js

-

- INFO[0001] Response time was 337.962473 ms source=console

-```

-

-

-

-## Custom metrics

-

-You can also create custom metrics.

-They are reported at the end of a load test, just like HTTP timings:

-

-

-

-```javascript

-import http from 'k6/http';

-import { Trend } from 'k6/metrics';

-

-const myTrend = new Trend('waiting_time');

-

-export default function () {

- const r = http.get('https://httpbin.test.k6.io');

- myTrend.add(r.timings.waiting);

- console.log(myTrend.name); // waiting_time

-}

-```

-

-

-

-The preceding code creates a Trend metric called `waiting_time`.

-In the code, it's referred to with the variable name `myTrend`.

-

-Custom metrics are reported at the end of a test.

-Here's how the output might look:

-

-

-

-```bash

-$ k6 run script.js

-

- ...

- INFO[0001] waiting_time source=console

-

- ...

- iteration_duration.............: avg=1.15s min=1.15s med=1.15s max=1.15s p(90)=1.15s p(95)=1.15s

- iterations.....................: 1 0.864973/s

- waiting_time...................: avg=265.245396 min=265.245396 med=265.245396 max=265.245396 p(90)=265.245396 p(95)=265.245396

-```

-

-

-

-You can optionally [tag](/using-k6/tags-and-groups) any value for a custom metric.

-This can be useful when analyzing test results.

-

-

-

-Custom metrics are collected from VU threads only at the end of a VU iteration.

-For long-running scripts, custom metrics might appear only after the test runs a while.

-

-

-

-

-## Metric types

-

-All metrics (both built-in and custom) have a _type_. The four different metric types in k6 are:

-

-| Metric type | Description |

-| --------------------------------------------- | -------------------------------------------------------------------------------------------------------- |

-| [Counter](/javascript-api/k6-metrics/counter) | A metric that cumulatively sums added values. |

-| [Gauge](/javascript-api/k6-metrics/gauge) | A metric that stores the min, max and last values added to it. |

-| [Rate](/javascript-api/k6-metrics/rate) | A metric that tracks the percentage of added values that are non-zero. |

-| [Trend](/javascript-api/k6-metrics/trend) | A metric that allows for calculating statistics on the added values (min, max, average and percentiles). |

-

-

-### Counter _(cumulative metric)_

-

-

-

-```javascript

-import { Counter } from 'k6/metrics';

-

-const myCounter = new Counter('my_counter');

-

-export default function () {

- myCounter.add(1);

- myCounter.add(2);

-}

-```

-

-

-

-The preceding code generates something like the following output:

-

-

-

-```bash

-$ k6 run script.js

-

- ...

- iteration_duration...: avg=16.48µs min=16.48µs med=16.48µs max=16.48µs p(90)=16.48µs p(95)=16.48µs

- iterations...........: 1 1327.67919/s

- my_counter...........: 3 3983.037571/s

-```

-

-

-

-If you run the script for one iteration—without specifying `--iterations` or `--duration`—the value of `my_counter` will be three.

-

-Note that there is currently no way to access the value of any custom metric from within JavaScript.

-Note also that counters that have a value of zero (`0`) at the end of a test are a special case.

-They will _NOT_ print to the stdout summary.

-

-### Gauge _(keep the latest value only)_

-

-

-

-```javascript

-import { Gauge } from 'k6/metrics';

-

-const myGauge = new Gauge('my_gauge');

-

-export default function () {

- myGauge.add(3);

- myGauge.add(1);

- myGauge.add(2);

-}

-```

-

-

-

-The preceding code results in output like this:

-

-

-

-```bash

-$ k6 run script.js

-

- ...

- iteration_duration...: avg=21.74µs min=21.74µs med=21.74µs max=21.74µs p(90)=21.74µs p(95)=21.74µs

- iterations...........: 1 1293.475322/s

- my_gauge.............: 2 min=1 max=3

-```

-

-

-

-The value of `my_gauge` will be 2 at the end of the test.

-As with the Counter metric, a Gauge with a value of zero (`0`) will *NOT* be printed to the `stdout` summary at the end of the test.

-

-### Trend _(collect trend statistics (min/max/avg/percentiles) for a series of values)_

-

-

-

-```javascript

-import { Trend } from 'k6/metrics';

-

-const myTrend = new Trend('my_trend');

-

-export default function () {

- myTrend.add(1);

- myTrend.add(2);

-}

-```

-

-

-

-The preceding code outputs something like this:

-

-

-

-```bash

-$ k6 run script.js

-

- ...

- iteration_duration...: avg=20.78µs min=20.78µs med=20.78µs max=20.78µs p(90)=20.78µs p(95)=20.78µs

- iterations...........: 1 1217.544821/s

- my_trend.............: avg=1.5 min=1 med=1.5 max=2 p(90)=1.9 p(95)=1.95

-```

-

-

-

-A _trend metric_ holds a set of sample values, which it can output statistics about (min, max, average, median, or percentiles).

-By default, k6 prints `average`, `min`, `max`, `median`, `90th percentile`, and `95th percentile`.

-

-### Rate _(keeps track of the percentage of values in a series that are non-zero)_

-

-

-

-```javascript

-import { Rate } from 'k6/metrics';

-

-const myRate = new Rate('my_rate');

-

-export default function () {

- myRate.add(true);

- myRate.add(false);

- myRate.add(1);

- myRate.add(0);

-}

-```

-

-

-

-The preceding code outputs something like this:

-

-

-

-```bash

-$ k6 run script.js

-

- ...

- iteration_duration...: avg=22.12µs min=22.12µs med=22.12µs max=22.12µs p(90)=22.12µs p(95)=22.12µs

- iterations...........: 1 1384.362792/s

- my_rate..............: 50.00% ✓ 2 ✗ 2

-```

-

-

-

-The value of `my_rate` at the end of the test will be 50%, indicating that half of the values

-added to the metric were non-zero.

-

-## Metric graphs in k6 Cloud Results

-

-If you use [k6 Cloud Results](/cloud/analyzing-results/overview), you can access all test

+If you use [Grafana Cloud k6](https://grafana.com/docs/grafana-cloud/k6), you can access all test

metrics within the [Analysis Tab](/cloud/analyzing-results/analysis-tab).

+

You can use this tab to analyze, compare, and look for meaningful correlations in your test result data.

-

+

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md

new file mode 100644

index 0000000000..ecf901eee7

--- /dev/null

+++ b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md

@@ -0,0 +1,234 @@

+---

+title: Create custom metrics

+excerpt: How to build custom k6 metrics for each metric type.

+---

+

+Besides the [built-in metrics](/using-k6/metrics/reference), you can create custom metrics.

+k6 reports custom metrics at the end of a load test, just like HTTP timings.

+

+Each metric type has a [constructor](https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Classes/constructor) to create a custom metric.

+The constructor creates a metric object of the declared type. Each type has methods to take metric measurements.

+

+If you need help constructing a custom metric, read the following sections of this document.

+They provide a walkthrough of the procedure, along with examples for each metric type.

+If you're comfortable with these JavaScript conventions already, you might prefer to read the [reference documentation for each metric constructor](/javascript-api/k6-metrics).

+Each topic has examples to make a custom metric and create thresholds from it.

+

+

+## Create a custom metric

+

+The generic procedure to create a custom metric is as follows:

+

+1. In [init context](/using-k6/test-lifecycle), construct a new custom-metric object.

+

+ For example, the following creates a custom trend. The object in the script is called `myTrend`, and its metric appears in the results output as `waiting_time`.

+

+ ```javascript

+ import { Trend } from 'k6/metrics';

+

+ const myTrend = new Trend('waiting_time');

+ ```

+

+1. In the VU iteration code, use the `add` method to take a measurement.

+

+For example, this VU code makes a request, then adds the request's `waiting` timing to the `myTrend` object.

+

+

+

+

+```javascript

+import http from 'k6/http';

+import { Trend } from 'k6/metrics';

+

+const myTrend = new Trend('waiting_time');

+

+export default function () {

+ const r = http.get('https://httpbin.test.k6.io');

+ myTrend.add(r.timings.waiting);

+ console.log(myTrend.name); // waiting_time

+}

+```

+

+

+

+Custom metrics appear both in the end-of-test summary and in the granular data points of the [Results output](/results-output).

+Here's how the output of the preceding script might look. Since the metric is a Trend, k6 calculates statistics (average, minimum, median, maximum, and percentiles) from the added values.

+

+

+

+```bash

+$ k6 run script.js

+

+ ...

+ INFO[0001] waiting_time source=console

+

+ ...

+ iteration_duration.............: avg=1.15s min=1.15s med=1.15s max=1.15s p(90)=1.15s p(95)=1.15s

+ iterations.....................: 1 0.864973/s

+ waiting_time...................: avg=265.245396 min=265.245396 med=265.245396 max=265.245396 p(90)=265.245396 p(95)=265.245396

+```

+

+

+

+You can optionally [tag](/using-k6/tags-and-groups) any value for a custom metric.

+This can be useful when analyzing test results.

+

+

+

+Custom metrics are collected from VU threads only at the end of a VU iteration.

+For long-running scripts, custom metrics might appear only after the test runs a while.

+

+

+

+## Examples for each type

+### Create a counter

+

+A counter is a _cumulative metric_: it sums all the values added to it.

+

+

+

+```javascript

+import { Counter } from 'k6/metrics';

+

+const myCounter = new Counter('my_counter');

+

+export default function () {

+ myCounter.add(1);

+ myCounter.add(2);

+}

+```

+

+

+

+The preceding code generates something like the following output:

+

+

+

+```bash

+$ k6 run script.js

+

+ ...

+ iteration_duration...: avg=16.48µs min=16.48µs med=16.48µs max=16.48µs p(90)=16.48µs p(95)=16.48µs

+ iterations...........: 1 1327.67919/s

+ my_counter...........: 3 3983.037571/s

+```

+

+

+

+If you run the script for one iteration (the default when you don't specify `--iterations` or `--duration`), the value of `my_counter` is three.

+

+Note that there is currently no way to access the value of any custom metric from within JavaScript.

+Note also that counters that have a value of zero (`0`) at the end of a test are a special case.

+They will _NOT_ print to the stdout summary.
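To make the arithmetic concrete, here is a plain-JavaScript model of how a counter aggregates the values in the preceding script. It is a sketch for illustration only, not k6's implementation, and it runs in Node.js rather than in k6. `CounterModel` and `summary()` are hypothetical names, and the per-second figure assumes that the second column of the summary line is the sum divided by the test duration.

```javascript
// Plain-JavaScript model of Counter aggregation (illustration only, not k6 source).
class CounterModel {
  constructor(name) {
    this.name = name;
    this.count = 0; // cumulative sum of every value passed to add()
  }

  add(value) {
    this.count += value;
  }

  // Assumption: the summary's second column is the sum divided by the test duration.
  summary(testDurationSeconds) {
    return { count: this.count, perSecond: this.count / testDurationSeconds };
  }
}

const myCounter = new CounterModel('my_counter');
myCounter.add(1);
myCounter.add(2);

// With a hypothetical 2-second test run:
console.log(myCounter.summary(2)); // { count: 3, perSecond: 1.5 }
```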

+

+### Create a gauge

+

+A gauge keeps the minimum, maximum, and latest values added to it.

+

+

+

+```javascript

+import { Gauge } from 'k6/metrics';

+

+const myGauge = new Gauge('my_gauge');

+

+export default function () {

+ myGauge.add(3);

+ myGauge.add(1);

+ myGauge.add(2);

+}

+```

+

+

+

+The preceding code results in output like this:

+

+

+

+```bash

+$ k6 run script.js

+

+ ...

+ iteration_duration...: avg=21.74µs min=21.74µs med=21.74µs max=21.74µs p(90)=21.74µs p(95)=21.74µs

+ iterations...........: 1 1293.475322/s

+ my_gauge.............: 2 min=1 max=3

+```

+

+

+

+The value of `my_gauge` will be 2 at the end of the test.

+As with the Counter metric, a Gauge with a value of zero (`0`) will *NOT* be printed to the `stdout` summary at the end of the test.
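The following plain-JavaScript model shows why `my_gauge` ends at 2 while still reporting `min=1` and `max=3`. `GaugeModel` is a hypothetical name; the sketch runs in Node.js and is not k6's implementation.

```javascript
// Plain-JavaScript model of Gauge aggregation (illustration only, not k6 source).
class GaugeModel {
  constructor(name) {
    this.name = name;
    this.value = null; // latest value added
    this.min = Infinity;
    this.max = -Infinity;
  }

  add(value) {
    this.value = value; // each add() overwrites the latest value
    this.min = Math.min(this.min, value);
    this.max = Math.max(this.max, value);
  }
}

const myGauge = new GaugeModel('my_gauge');
[3, 1, 2].forEach((v) => myGauge.add(v)); // same order as the k6 script above

console.log(`${myGauge.name}: ${myGauge.value} min=${myGauge.min} max=${myGauge.max}`);
// my_gauge: 2 min=1 max=3
```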

+

+### Create a trend

+

+This custom trend collects trend statistics (min/max/avg/percentiles) for a series of values.

+

+

+

+```javascript

+import { Trend } from 'k6/metrics';

+

+const myTrend = new Trend('my_trend');

+

+export default function () {

+ myTrend.add(1);

+ myTrend.add(2);

+}

+```

+

+

+

+The preceding code outputs something like this:

+

+

+

+```bash

+$ k6 run script.js

+

+ ...

+ iteration_duration...: avg=20.78µs min=20.78µs med=20.78µs max=20.78µs p(90)=20.78µs p(95)=20.78µs

+ iterations...........: 1 1217.544821/s

+ my_trend.............: avg=1.5 min=1 med=1.5 max=2 p(90)=1.9 p(95)=1.95

+```

+

+

+

+A _trend metric_ holds a set of sample values, from which k6 can calculate statistics (min, max, average, median, or percentiles).

+By default, k6 prints `average`, `min`, `max`, `median`, `90th percentile`, and `95th percentile`.
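As a rough sketch of where the trend numbers come from, this plain-JavaScript model stores every value and computes percentiles by linear interpolation between the nearest sorted values. That interpolation reproduces the `my_trend` line above for the values 1 and 2, but it is an assumption: k6's own percentile algorithm may differ in detail. `TrendModel` is a hypothetical name, and the code runs in Node.js, not in k6.

```javascript
// Plain-JavaScript model of Trend statistics (illustration only, not k6 source).
class TrendModel {
  constructor(name) {
    this.name = name;
    this.values = []; // a trend keeps every sample value
  }

  add(value) {
    this.values.push(value);
  }

  // Assumed method: linear interpolation between the two nearest sorted values.
  percentile(p) {
    const sorted = [...this.values].sort((a, b) => a - b);
    const rank = (p / 100) * (sorted.length - 1);
    const lower = Math.floor(rank);
    const upper = Math.ceil(rank);
    return sorted[lower] + (rank - lower) * (sorted[upper] - sorted[lower]);
  }

  summary() {
    const sum = this.values.reduce((acc, v) => acc + v, 0);
    return {
      avg: sum / this.values.length,
      min: Math.min(...this.values),
      med: this.percentile(50),
      max: Math.max(...this.values),
      p90: this.percentile(90),
      p95: this.percentile(95),
    };
  }
}

const myTrend = new TrendModel('my_trend');
myTrend.add(1);
myTrend.add(2);

console.log(myTrend.name, myTrend.summary());
```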

+

+### Create a rate

+

+This custom rate keeps track of the percentage of values in a series that are non-zero.

+

+

+

+```javascript

+import { Rate } from 'k6/metrics';

+

+const myRate = new Rate('my_rate');

+

+export default function () {

+ myRate.add(true);

+ myRate.add(false);

+ myRate.add(1);

+ myRate.add(0);

+}

+```

+

+

+

+The preceding code outputs something like this:

+

+

+

+```bash

+$ k6 run script.js

+

+ ...

+ iteration_duration...: avg=22.12µs min=22.12µs med=22.12µs max=22.12µs p(90)=22.12µs p(95)=22.12µs

+ iterations...........: 1 1384.362792/s

+ my_rate..............: 50.00% ✓ 2 ✗ 2

+```

+

+

+

+The value of `my_rate` at the end of the test will be 50%, indicating that half of the values added to the metric were non-zero.
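This plain-JavaScript model counts each added value as a pass if it is non-zero and as a fail otherwise, treating `true` as 1 and `false` as 0, which reproduces the `my_rate` line above. `RateModel` is a hypothetical name; the sketch runs in Node.js and is not k6's implementation.

```javascript
// Plain-JavaScript model of Rate aggregation (illustration only, not k6 source).
class RateModel {
  constructor(name) {
    this.name = name;
    this.passes = 0; // non-zero values
    this.fails = 0; // zero values
  }

  add(value) {
    // Number(true) is 1 and Number(false) is 0, so booleans follow the same rule.
    if (Number(value) !== 0) {
      this.passes += 1;
    } else {
      this.fails += 1;
    }
  }

  rate() {
    return this.passes / (this.passes + this.fails);
  }
}

const myRate = new RateModel('my_rate');
[true, false, 1, 0].forEach((v) => myRate.add(v));

const percent = (myRate.rate() * 100).toFixed(2);
console.log(`${myRate.name}: ${percent}% ✓ ${myRate.passes} ✗ ${myRate.fails}`);
// my_rate: 50.00% ✓ 2 ✗ 2
```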

+

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/200 Calculate metric timings.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/200 Calculate metric timings.md

new file mode 100644

index 0000000000..233713bcb4

--- /dev/null

+++ b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/200 Calculate metric timings.md

@@ -0,0 +1,40 @@

+---

+title: Calculate HTTP timings

+excerpt: How to calculate timings for an individual k6 metric

+---

+

+To access the timing information from an individual HTTP request, the [Response.timings](/javascript-api/k6-http/response) object provides the time spent on the various phases in `ms`:

+

+- blocked: equals `http_req_blocked`.

+- connecting: equals `http_req_connecting`.

+- tls_handshaking: equals `http_req_tls_handshaking`.

+- sending: equals `http_req_sending`.

+- waiting: equals `http_req_waiting`.

+- receiving: equals `http_req_receiving`.

+- duration: equals `http_req_duration`.
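The metrics reference describes `http_req_duration` as `http_req_sending + http_req_waiting + http_req_receiving`. The following plain-JavaScript sketch applies that relation to a hypothetical `timings` object with made-up values, to show that the `blocked`, `connecting`, and `tls_handshaking` phases are not part of `duration`. It runs in Node.js; in a k6 script, you would read the same fields from `res.timings`. `durationFromPhases` is a hypothetical helper name.

```javascript
// Hypothetical timings object with made-up values, in milliseconds.
// In a k6 script, the same fields are available on res.timings.
const timings = {
  blocked: 10,
  connecting: 5,
  tls_handshaking: 20,
  sending: 0.5,
  waiting: 250,
  receiving: 1.5,
};

// duration covers only sending, waiting, and receiving;
// blocked, connecting, and tls_handshaking are not included.
function durationFromPhases(t) {
  return t.sending + t.waiting + t.receiving;
}

console.log(durationFromPhases(timings)); // 252
```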

+

+

+

+```javascript

+import http from 'k6/http';

+

+export default function () {

+ const res = http.get('http://httpbin.test.k6.io');

+ console.log('Response time was ' + String(res.timings.duration) + ' ms');

+}

+```

+

+

+

+The expected (partial) output looks like this:

+

+

+

+```bash

+$ k6 run script.js

+

+ INFO[0001] Response time was 337.962473 ms source=console

+```

+

+

+

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/999-reference.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/999-reference.md

new file mode 100644

index 0000000000..c802effcd5

--- /dev/null

+++ b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/999-reference.md

@@ -0,0 +1,167 @@

+---

+title: Metrics reference

+slug: '/using-k6/metrics/reference'

+excerpt: Built-in k6 metrics for each protocol

+---

+

+Every k6 test emits built-in metrics, and a test can also emit custom metrics that you define.

+Each supported protocol also has its own built-in metrics.

+

+## Built-in metrics

+

+The _built-in_ metrics output to `stdout` when you run the simplest possible k6 test:

+

+

+

+```javascript

+import http from 'k6/http';

+

+export default function () {

+ http.get('https://test-api.k6.io/');

+}

+```

+

+

+

+Running the preceding script outputs something like this:

+

+

+

+```bash

+$ k6 run script.js

+

+ /\ |‾‾| /‾‾/ /‾‾/

+ /\ / \ | |/ / / /

+ / \/ \ | ( / ‾‾\

+ / \ | |\ \ | (‾) |

+ / __________ \ |__| \__\ \_____/ .io

+

+ execution: local

+ script: script.js

+ output: -

+

+ scenarios: (100.00%) 1 scenario, 1 max VUs, 10m30s max duration (incl. graceful stop):

+ * default: 1 iterations for each of 1 VUs (maxDuration: 10m0s, gracefulStop: 30s)

+

+

+running (00m03.8s), 0/1 VUs, 1 complete and 0 interrupted iterations

+default ✓ [======================================] 1 VUs 00m03.8s/10m0s 1/1 iters, 1 per VU

+

+ data_received..................: 22 kB 5.7 kB/s

+ data_sent......................: 742 B 198 B/s

+ http_req_blocked...............: avg=1.05s min=1.05s med=1.05s max=1.05s p(90)=1.05s p(95)=1.05s

+ http_req_connecting............: avg=334.26ms min=334.26ms med=334.26ms max=334.26ms p(90)=334.26ms p(95)=334.26ms

+ http_req_duration..............: avg=2.7s min=2.7s med=2.7s max=2.7s p(90)=2.7s p(95)=2.7s

+ { expected_response:true }...: avg=2.7s min=2.7s med=2.7s max=2.7s p(90)=2.7s p(95)=2.7s

+ http_req_failed................: 0.00% ✓ 0 ✗ 1

+ http_req_receiving.............: avg=112.41µs min=112.41µs med=112.41µs max=112.41µs p(90)=112.41µs p(95)=112.41µs

+ http_req_sending...............: avg=294.48µs min=294.48µs med=294.48µs max=294.48µs p(90)=294.48µs p(95)=294.48µs

+ http_req_tls_handshaking.......: avg=700.6ms min=700.6ms med=700.6ms max=700.6ms p(90)=700.6ms p(95)=700.6ms

+ http_req_waiting...............: avg=2.7s min=2.7s med=2.7s max=2.7s p(90)=2.7s p(95)=2.7s

+ http_reqs......................: 1 0.266167/s

+ iteration_duration.............: avg=3.75s min=3.75s med=3.75s max=3.75s p(90)=3.75s p(95)=3.75s

+ iterations.....................: 1 0.266167/s

+ vus............................: 1 min=1 max=1

+ vus_max........................: 1 min=1 max=1

+```

+

+

+

+In that output, every metric is a _built-in_ metric, including those that start with `data`, `http`, `iteration`, and `vu`. k6 writes built-in metrics to stdout at the end of a test.

+

+k6 always collects the following built-in metrics:

+

+| Metric Name | Type | Description |

+|--------------------|---------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| vus | Gauge | Current number of active virtual users |

+| vus_max | Gauge | Max possible number of virtual users (VU resources are pre-allocated, ensuring performance will not be affected when scaling up the load level) |

+| iterations | Counter | The aggregate number of times the VUs executed the JS script (the `default` function). |

+| iteration_duration | Trend | The time it took to complete one full iteration, including time spent in `setup` and `teardown`. To calculate the duration of the iteration's function for the specific scenario, [try this workaround](/using-k6/workaround-to-calculate-iteration_duration) |

+| dropped_iterations | Counter | The number of iterations that weren't started due to lack of VUs (for the arrival-rate executors) or lack of time (expired maxDuration in the iteration-based executors). [About dropped iterations](/using-k6/scenarios/concepts/dropped-iterations/) |

+| data_received | Counter | The amount of received data. [This example covers how to track data for an individual URL](/examples/track-transmitted-data-per-url). |

+| data_sent | Counter | The amount of data sent. [Track data for an individual URL](/examples/track-transmitted-data-per-url). |

+| checks | Rate | The rate of successful checks. |

+

+## HTTP-specific built-in metrics

+

+These metrics are generated only when the test makes HTTP requests.

+

+

+

+For all `http_req_*` metrics, **the timestamp is emitted at the end of the request.**

+In other words, k6 emits the timestamp when it receives the end of the response body, or when the request times out.

+

+

+

+| Metric Name | Type | Description |

+|--------------------------|---------|----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| http_reqs | Counter | How many total HTTP requests k6 generated. |

+| http_req_blocked | Trend | Time spent blocked (waiting for a free TCP connection slot) before initiating the request. `float` |

+| http_req_connecting | Trend | Time spent establishing TCP connection to the remote host. `float` |

+| http_req_tls_handshaking | Trend | Time spent handshaking TLS session with remote host. `float` |

+| http_req_sending | Trend | Time spent sending data to the remote host. `float` |

+| http_req_waiting | Trend | Time spent waiting for response from remote host (a.k.a. “time to first byte”, or “TTFB”). `float` |

+| http_req_receiving | Trend | Time spent receiving response data from the remote host. `float` |

+| http_req_duration | Trend | Total time for the request. It's equal to `http_req_sending + http_req_waiting + http_req_receiving` (that is, how long the remote server took to process the request and respond, excluding the initial DNS lookup and connection times). `float` |

+| http_req_failed | Rate | The rate of failed requests according to [setResponseCallback](/javascript-api/k6-http/setresponsecallback). |


+

+### Accessing HTTP timings from a script

+

+To access the timing information from an individual HTTP request, the [Response.timings](/javascript-api/k6-http/response) object provides the time spent on the various phases in `ms`:

+

+- blocked: equals `http_req_blocked`.

+- connecting: equals `http_req_connecting`.

+- tls_handshaking: equals `http_req_tls_handshaking`.

+- sending: equals `http_req_sending`.

+- waiting: equals `http_req_waiting`.

+- receiving: equals `http_req_receiving`.

+- duration: equals `http_req_duration`.

+

+

+

+```javascript

+import http from 'k6/http';

+

+export default function () {

+ const res = http.get('http://httpbin.test.k6.io');

+ console.log('Response time was ' + String(res.timings.duration) + ' ms');

+}

+```

+

+

+

+The expected (partial) output looks like this:

+

+

+

+```bash

+$ k6 run script.js

+

+ INFO[0001] Response time was 337.962473 ms source=console

+```

+

+

+

+

+## Built-in WebSocket metrics

+

+`k6` emits the following metrics when interacting with a WebSocket service through the [`experimental`](/javascript-api/k6-experimental/websockets) or legacy `k6/ws` WebSocket API.

+

+| Metric name | Type | Description |

+| ------------------- | ------- | -------------------------------------------------------------------------------------------------------------------------- |

+| ws_connecting | Trend | Total duration for the WebSocket connection request. |

+| ws_session_duration | Trend | Duration of the WebSocket session. Time between the start of the connection and the end of the VU execution. |

+| ws_sessions | Counter | Total number of started WebSocket sessions. |

+| ws_ping | Trend | Duration between a ping request and its pong reception |

+| ws_msgs_sent | Counter | Total number of messages sent through [Socket.send(data)](/javascript-api/k6-ws/socket/socket-send) |

+| ws_msgs_received | Counter | Total number of messages received through [Socket.on('message', callback)](/javascript-api/k6-ws/socket/socket-on). |

+

+## Built-in gRPC metrics

+

+k6 emits the following metrics when it interacts with a service through the [`gRPC`](/javascript-api/k6-net-grpc/) API.

+

+| Metric Name | Type | Description |

+|---------------------|-------|-------------------------------------------|

+| `grpc_req_duration` | Trend | Time to receive response from remote host |

+

From 69b12a0a0c6b5981a6d73dcf26f49bc53c5e7a88 Mon Sep 17 00:00:00 2001

From: Matt Dodson <47385188+MattDodsonEnglish@users.noreply.github.com>

Date: Thu, 27 Apr 2023 12:28:53 -0300

Subject: [PATCH 02/17] More metrics refactoring and example

---

.../23 get-timings-for-an-http-metric.md} | 11 +-

.../en/02 Using k6/02 Metrics.md | 102 +++++++++--

.../02 Using k6/02 Metrics/000-reference.md | 53 ++++++

.../02 Metrics/100 Create custom metrics.md | 3 +-

.../02 Using k6/02 Metrics/999-reference.md | 167 ------------------

5 files changed, 156 insertions(+), 180 deletions(-)

rename src/data/markdown/{translated-guides/en/02 Using k6/02 Metrics/200 Calculate metric timings.md => docs/05 Examples/01 Examples/23 get-timings-for-an-http-metric.md} (72%)

create mode 100644 src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/000-reference.md

delete mode 100644 src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/999-reference.md

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/200 Calculate metric timings.md b/src/data/markdown/docs/05 Examples/01 Examples/23 get-timings-for-an-http-metric.md

similarity index 72%

rename from src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/200 Calculate metric timings.md

rename to src/data/markdown/docs/05 Examples/01 Examples/23 get-timings-for-an-http-metric.md

index 233713bcb4..2b63831210 100644

--- a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/200 Calculate metric timings.md

+++ b/src/data/markdown/docs/05 Examples/01 Examples/23 get-timings-for-an-http-metric.md

@@ -1,9 +1,14 @@

---

-title: Calculate HTTP timings

+title: Get timings for an HTTP metric

excerpt: How to get timings for an individual HTTP request

---

-To access the timing information from an individual HTTP request, the [Response.timings](/javascript-api/k6-http/response) object provides the time spent on the various phases in `ms`:

+

+To access timing information for an individual HTTP request, use the [Response.timings](/javascript-api/k6-http/response) object, which provides the time spent in each phase of the request, in milliseconds.

+For example, you can add these timings to a [custom metric](/using-k6/metrics/create-custom-metrics) to build a trend for a specific endpoint.

+

+The timings are as follows:

+

- blocked: equals `http_req_blocked`.

- connecting: equals `http_req_connecting`.

@@ -13,6 +18,8 @@ To access the timing information from an individual HTTP request, the [Response.

- receiving: equals `http_req_receiving`.

- duration: equals `http_req_duration`.

+This script gets the request duration timing for a specific GET request and logs it to the console.

+

```javascript

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

index 324211b916..8f56a619ce 100644

--- a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

+++ b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

@@ -16,20 +16,102 @@ Metrics fall into four broad types:

If you want a test to fail when it doesn't meet certain criteria, you can write a [threshold](/using-k6/thresholds) based on a metric (the specifics of the expression depend on the metric type).

-| On this page... | Read about... |

-|------------------------------------------------------------------------|--------------------------------------------------------------------------------|

-| [Create custom metrics](/using-k6/metrics/create-custom-metrics) | How to build your own metric for each metric type |

-| [Calculate metric timings](/using-k6/metrics/calculate-http-timings) | How to get timings from an individual request |

-| [Metrics reference](/using-k6/metrics/reference) | Each built-in metric for each supported [protocol](/using-k6/protocols) |

+| On this page... | Read about... |

+|----------------------------------------------------------------------|-------------------------------------------------------------------------|

+| [Built-in metrics](/using-k6/metrics/reference) | Each built-in metric for each supported [protocol](/using-k6/protocols) |

+| [Create custom metrics](/using-k6/metrics/create-custom-metrics) | How to build your own metric for each metric type |

+## What metrics to look at?

-## Metric graphs in Grafana cloud k6

+Each metric provides a different perspective on performance.

+So the best metric for your analysis depends on your goals.

-If you use [Grafana cloud k6](https://grafana.com/docs/grafana-cloud/k6), you can access all test

-metrics within the [Analysis Tab](/cloud/analyzing-results/analysis-tab).

+However, if you're not sure what metrics to focus on, a good place to start is with the metrics that measure the requests, errors, and duration (the criteria of the [RED method](https://grafana.com/blog/2018/08/02/the-red-method-how-to-instrument-your-services/)).

+- `http_reqs`, to measure requests

+- `http_req_failed`, to measure error rate

+- `http_req_duration`, to measure duration

-You can use this tab to analyze, compare, and look for meaningful correlations in your test result data.

+

-

+In other terminology, these metrics measure traffic (in requests), availability (in error rate), and latency (in request duration).

+SREs might recognize these metrics as three of the [four Golden Signals](https://sre.google/sre-book/monitoring-distributed-systems/#xref_monitoring_golden-signals).
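As a hedged sketch, the three RED metrics above could be turned into pass/fail criteria with [thresholds](/using-k6/thresholds). The numeric limits below are arbitrary placeholders for illustration, not recommended values; this is an `options` fragment to merge into your own script.

```javascript
// Illustrative thresholds for the three RED metrics.
// The numeric limits are placeholders; choose values that match your own goals.
export const options = {
  thresholds: {
    // Requests (Counter): pass only if the test made more than 100 requests in total.
    http_reqs: ['count>100'],
    // Errors (Rate): pass only if fewer than 1% of requests failed.
    http_req_failed: ['rate<0.01'],
    // Duration (Trend): pass only if the 95th percentile is below 500 ms.
    http_req_duration: ['p(95)<500'],
  },
};
```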

+

+

+

+

+## Example output

+

+When you run a test, k6 outputs an aggregated summary of all _built-in_ and custom metrics to `stdout`. For example, take this minimal script:

+

+

+

+```javascript

+import http from 'k6/http';

+

+export default function () {

+ http.get('https://test-api.k6.io/');

+}

+```

+

+

+

+Running the preceding script outputs something like this:

+

+

+

+```bash

+$ k6 run script.js

+

+ /\ |‾‾| /‾‾/ /‾‾/

+ /\ / \ | |/ / / /

+ / \/ \ | ( / ‾‾\

+ / \ | |\ \ | (‾) |

+ / __________ \ |__| \__\ \_____/ .io

+

+ execution: local

+ script: script.js

+ output: -

+

+ scenarios: (100.00%) 1 scenario, 1 max VUs, 10m30s max duration (incl. graceful stop):

+ * default: 1 iterations for each of 1 VUs (maxDuration: 10m0s, gracefulStop: 30s)

+

+

+running (00m03.8s), 0/1 VUs, 1 complete and 0 interrupted iterations

+default ✓ [======================================] 1 VUs 00m03.8s/10m0s 1/1 iters, 1 per VU

+

+ data_received..................: 22 kB 5.7 kB/s

+ data_sent......................: 742 B 198 B/s

+ http_req_blocked...............: avg=1.05s min=1.05s med=1.05s max=1.05s p(90)=1.05s p(95)=1.05s

+ http_req_connecting............: avg=334.26ms min=334.26ms med=334.26ms max=334.26ms p(90)=334.26ms p(95)=334.26ms

+ http_req_duration..............: avg=2.7s min=2.7s med=2.7s max=2.7s p(90)=2.7s p(95)=2.7s

+ { expected_response:true }...: avg=2.7s min=2.7s med=2.7s max=2.7s p(90)=2.7s p(95)=2.7s

+ http_req_failed................: 0.00% ✓ 0 ✗ 1

+ http_req_receiving.............: avg=112.41µs min=112.41µs med=112.41µs max=112.41µs p(90)=112.41µs p(95)=112.41µs

+ http_req_sending...............: avg=294.48µs min=294.48µs med=294.48µs max=294.48µs p(90)=294.48µs p(95)=294.48µs

+ http_req_tls_handshaking.......: avg=700.6ms min=700.6ms med=700.6ms max=700.6ms p(90)=700.6ms p(95)=700.6ms

+ http_req_waiting...............: avg=2.7s min=2.7s med=2.7s max=2.7s p(90)=2.7s p(95)=2.7s

+ http_reqs......................: 1 0.266167/s

+ iteration_duration.............: avg=3.75s min=3.75s med=3.75s max=3.75s p(90)=3.75s p(95)=3.75s

+ iterations.....................: 1 0.266167/s

+ vus............................: 1 min=1 max=1

+ vus_max........................: 1 min=1 max=1

+```

+

+

+

+The script defines no custom metrics, so every metric in that output is a _built-in_ metric. k6 writes this summary to `stdout` at the end of the test.
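The Trend rows in that summary report `avg`, `min`, `med`, `max`, `p(90)`, and `p(95)`. To make those columns easier to read, here is a minimal plain-JavaScript sketch (it does not use the k6 API) that computes the same six statistics from a list of made-up durations. It assumes percentiles are computed by linear interpolation between sorted samples; k6's internal implementation may differ in details.

```javascript
// Plain-JavaScript sketch of the statistics shown for a Trend metric
// (avg, min, med, max, p(90), p(95)). Not part of the k6 API.

// Percentile by linear interpolation between the two nearest sorted samples.
// Assumption: this approximates how k6 computes p(N); details may differ.
function percentile(sorted, pct) {
  if (sorted.length === 0) return 0;
  const pos = (pct / 100) * (sorted.length - 1);
  const lower = sorted[Math.floor(pos)];
  const upper = sorted[Math.ceil(pos)];
  return lower + (upper - lower) * (pos - Math.floor(pos));
}

function summarizeTrend(values) {
  if (values.length === 0) {
    return { avg: 0, min: 0, med: 0, max: 0, 'p(90)': 0, 'p(95)': 0 };
  }
  // Sort a copy numerically so the caller's array stays untouched.
  const sorted = [...values].sort((a, b) => a - b);
  const sum = sorted.reduce((acc, v) => acc + v, 0);
  return {
    avg: sum / sorted.length,
    min: sorted[0],
    med: percentile(sorted, 50),
    max: sorted[sorted.length - 1],
    'p(90)': percentile(sorted, 90),
    'p(95)': percentile(sorted, 95),
  };
}

// Made-up http_req_duration samples, in milliseconds.
const durations = [120, 95, 310, 180, 150, 200, 99, 105, 400, 130];
console.log(summarizeTrend(durations));
```

With a single sample, as in the one-iteration run above, every statistic equals that sample, which is why each `http_req_*` row repeats the same value.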

+

+k6 always collects the following built-in metrics:

+

+| Metric Name | Type | Description |

+|--------------------|---------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| vus | Gauge | Current number of active virtual users |

+| vus_max | Gauge | Maximum possible number of virtual users (k6 pre-allocates VU resources, so performance isn't affected when the load level scales up) |

+| iterations | Counter | The aggregate number of times the VUs executed the JS script (the `default` function). |

+| iteration_duration | Trend | The time it took to complete one full iteration, including time spent in `setup` and `teardown`. To calculate the duration of the iteration's function for the specific scenario, [try this workaround](/using-k6/workaround-to-calculate-iteration_duration). |

+| dropped_iterations | Counter | The number of iterations that weren't started due to lack of VUs (for the arrival-rate executors) or lack of time (expired maxDuration in the iteration-based executors). [About dropped iterations](/using-k6/scenarios/concepts/dropped-iterations/) |

+| data_received | Counter | The amount of received data. [This example covers how to track data for an individual URL](/examples/track-transmitted-data-per-url). |

+| data_sent | Counter | The amount of data sent. [This example covers how to track data for an individual URL](/examples/track-transmitted-data-per-url). |

+| checks | Rate | The rate of successful checks. |

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/000-reference.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/000-reference.md

new file mode 100644

index 0000000000..457d2505bd

--- /dev/null

+++ b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/000-reference.md

@@ -0,0 +1,53 @@

+---

+title: Built-in metrics

+slug: '/using-k6/metrics/reference'

+excerpt: A reference of built-in metrics for different supported protocols.

+---

+

+Every k6 test emits built-in metrics, plus any custom metrics that the script defines.

+Each supported protocol also has its own specific metrics.

+

+## HTTP-specific built-in metrics

+

+These metrics are generated only when the test makes HTTP requests.

+

+

+

+For all `http_req_*` metrics, **the timestamp is emitted at the end of the request.**

+In other words, the timestamp happens when k6 receives the end of the response body, or the request times out.

+

+

+

+| Metric Name | Type | Description |

+|--------------------------|---------|----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| http_reqs | Counter | How many total HTTP requests k6 generated. |

+| http_req_blocked | Trend | Time spent blocked (waiting for a free TCP connection slot) before initiating the request. `float` |

+| http_req_connecting | Trend | Time spent establishing TCP connection to the remote host. `float` |

+| http_req_tls_handshaking | Trend | Time spent handshaking the TLS session with the remote host. `float` |

+| http_req_sending | Trend | Time spent sending data to the remote host. `float` |

+| http_req_waiting | Trend | Time spent waiting for a response from the remote host (a.k.a. “time to first byte”, or “TTFB”). `float` |

+| http_req_receiving | Trend | Time spent receiving response data from the remote host. `float` |

+| http_req_duration | Trend | Total time for the request. It's equal to `http_req_sending + http_req_waiting + http_req_receiving` (that is, how long the remote server took to process the request and respond, without the initial DNS lookup and connection times). `float` |

+| http_req_failed | Rate | The rate of failed requests according to [setResponseCallback](/javascript-api/k6-http/setresponsecallback). |
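Two relationships in this table can be illustrated with a short plain-JavaScript sketch (no k6 API involved): `http_req_duration` is the sum of the sending, waiting, and receiving phases, and `http_req_failed` is the share of requests that count as failed. The timing values and status codes are made up, and the sketch assumes k6's default expected statuses of 200 to 399; a custom [setResponseCallback](/javascript-api/k6-http/setresponsecallback) would change that range.

```javascript
// Plain-JavaScript sketch (not the k6 API) of two relationships from the table.

// 1. http_req_duration = sending + waiting + receiving.
//    The object mimics the shape of Response.timings; values are made-up milliseconds.
const timings = {
  blocked: 1.2,
  connecting: 30.5,
  tls_handshaking: 60.1,
  sending: 0.5,
  waiting: 180,
  receiving: 2.5,
};

function requestDuration(t) {
  // blocked, connecting, and tls_handshaking are not part of the duration.
  return t.sending + t.waiting + t.receiving;
}

// 2. http_req_failed as a Rate: the fraction of requests that count as failed.
//    Assumption: the default expected statuses are 200 to 399 inclusive.
function isExpectedStatus(status, min = 200, max = 399) {
  return status >= min && status <= max;
}

function failedRate(statuses) {
  if (statuses.length === 0) return 0;
  const failed = statuses.filter((s) => !isExpectedStatus(s)).length;
  return failed / statuses.length;
}

console.log(requestDuration(timings)); // 183
console.log(failedRate([200, 200, 301, 404, 500, 200, 204, 200])); // 0.25
```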

+

+## Built-in WebSocket metrics

+

+k6 emits the following metrics when it interacts with a WebSocket service through the [`experimental`](/javascript-api/k6-experimental/websockets) or legacy WebSocket API.

+

+| Metric name | Type | Description |

+| ------------------- | ------- | -------------------------------------------------------------------------------------------------------------------------- |

+| ws_connecting | Trend | Total duration for the WebSocket connection request. |

+| ws_session_duration | Trend | Duration of the WebSocket session. Time between the start of the connection and the end of the VU execution. |

+| ws_sessions | Counter | Total number of started WebSocket sessions. |

+| ws_ping | Trend | Duration between a ping request and the reception of its pong. |

+| ws_msgs_sent | Counter | Total number of messages sent through [Socket.send(data)](/javascript-api/k6-ws/socket/socket-send). |

+| ws_msgs_received | Counter | Total number of messages received through [Socket.on('message', callback)](/javascript-api/k6-ws/socket/socket-on). |

+

+## Built-in gRPC metrics

+

+k6 emits the following metrics when it interacts with a service through the [`gRPC`](https://k6.io/docs/javascript-api/k6-net-grpc/) API.

+

+| Metric Name | Type | Description |

+|---------------------|-------|-------------------------------------------|

+| `grpc_req_duration` | Trend | Time to receive response from remote host |

+

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md

index ecf901eee7..adb2724431 100644

--- a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md

+++ b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md

@@ -4,7 +4,7 @@ description: How to build custom k6 metrics for each metric type.

---

Besides the [built-in metrics](/results-output/metrics-reference), you can create custom metrics.

-k6 reports custom metrics at the end of a load test, just like HTTP timings.

+For example, you can create some derived metrics from the built-in metrics, or you might use the the [Response.timings](/javascript-api/k6-http/response) object to create a metric for a specific set of endpoints.

Each metric type has a [constructor](https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Classes/constructor) to create a custom metric.

The constructor creates a metric object of the declared type. Each type has methods to take metric measurements.

@@ -14,6 +14,7 @@ They provide a walkthrough of the procedure, along with examples for each metric

If you're comfortable with these JavaScript conventions already, you might prefer to read the [reference documentation for each metric constructor](/javascript-api/k6-metrics).

Each topic has examples to make a custom metric and create thresholds from it.

+k6 reports custom metrics at the end of a load test, just like HTTP timings.

## Create a custom metric

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/999-reference.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/999-reference.md

deleted file mode 100644

index c802effcd5..0000000000

--- a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/999-reference.md

+++ /dev/null

@@ -1,167 +0,0 @@

----

-title: Metrics reference

-slug: '/using-k6/metrics/reference'

-excerpt: Built-in k6 metrics for each protocol

----

-

-Every k6 test emits built-in and custom metrics.

-Each supported protocol also has its own metrics.

-

-## Built-in metrics

-

-The _built-in_ metrics output to `stdout` when you run the simplest possible k6 test:

-

-

-

-```javascript

-import http from 'k6/http';

-

-export default function () {

- http.get('https://test-api.k6.io/');

-}

-```

-

-

-

-Running the preceding script outputs something like this:

-

-

-

-```bash

-$ k6 run script.js

-

- /\ |‾‾| /‾‾/ /‾‾/

- /\ / \ | |/ / / /

- / \/ \ | ( / ‾‾\

- / \ | |\ \ | (‾) |

- / __________ \ |__| \__\ \_____/ .io

-

- execution: local

- script: http_get.js

- output: -

-

- scenarios: (100.00%) 1 scenario, 1 max VUs, 10m30s max duration (incl. graceful stop):

- * default: 1 iterations for each of 1 VUs (maxDuration: 10m0s, gracefulStop: 30s)

-

-

-running (00m03.8s), 0/1 VUs, 1 complete and 0 interrupted iterations

-default ✓ [======================================] 1 VUs 00m03.8s/10m0s 1/1 iters, 1 per VU

-

- data_received..................: 22 kB 5.7 kB/s

- data_sent......................: 742 B 198 B/s

- http_req_blocked...............: avg=1.05s min=1.05s med=1.05s max=1.05s p(90)=1.05s p(95)=1.05s

- http_req_connecting............: avg=334.26ms min=334.26ms med=334.26ms max=334.26ms p(90)=334.26ms p(95)=334.26ms

- http_req_duration..............: avg=2.7s min=2.7s med=2.7s max=2.7s p(90)=2.7s p(95)=2.7s

- { expected_response:true }...: avg=2.7s min=2.7s med=2.7s max=2.7s p(90)=2.7s p(95)=2.7s

- http_req_failed................: 0.00% ✓ 0 ✗ 1

- http_req_receiving.............: avg=112.41µs min=112.41µs med=112.41µs max=112.41µs p(90)=112.41µs p(95)=112.41µs

- http_req_sending...............: avg=294.48µs min=294.48µs med=294.48µs max=294.48µs p(90)=294.48µs p(95)=294.48µs

- http_req_tls_handshaking.......: avg=700.6ms min=700.6ms med=700.6ms max=700.6ms p(90)=700.6ms p(95)=700.6ms

- http_req_waiting...............: avg=2.7s min=2.7s med=2.7s max=2.7s p(90)=2.7s p(95)=2.7s

- http_reqs......................: 1 0.266167/s

- iteration_duration.............: avg=3.75s min=3.75s med=3.75s max=3.75s p(90)=3.75s p(95)=3.75s

- iterations.....................: 1 0.266167/s

- vus............................: 1 min=1 max=1

- vus_max........................: 1 min=1 max=1

-```

-

-

-

-In that output, all the metrics that start with `http`, `iteration`, and `vu` are _built-in_ metrics, which get written to stdout at the end of a test.

-

-k6 always collects the following built-in metrics:

-

-| Metric Name | Type | Description |

-|--------------------|---------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| vus | Gauge | Current number of active virtual users |

-| vus_max | Gauge | Max possible number of virtual users (VU resources are pre-allocated, ensuring performance will not be affected when scaling up the load level) |

-| iterations | Counter | The aggregate number of times the VUs executed the JS script (the `default` function). |

-| iteration_duration | Trend | The time it took to complete one full iteration, including time spent in `setup` and `teardown`. To calculate the duration of the iteration's function for the specific scenario, [try this workaround](/using-k6/workaround-to-calculate-iteration_duration) |

-| dropped_iterations | Counter | The number of iterations that weren't started due to lack of VUs (for the arrival-rate executors) or lack of time (expired maxDuration in the iteration-based executors). [About dropped iterations](/using-k6/scenarios/concepts/dropped-iterations/) |

-| data_received | Counter | The amount of received data. [This example covers how to track data for an individual URL](/examples/track-transmitted-data-per-url). |

-| data_sent | Counter | The amount of data sent. [Track data for an individual URL](/examples/track-transmitted-data-per-url) to track data for an individual URL. |

-| checks | Rate | The rate of successful checks. |

-

-## HTTP-specific built-in metrics

-

-These metrics are generated only when the test makes HTTP requests.

-

-

-

-For all `http_req_*` metrics, **the timestamp is emitted the end of the request.**

-In other words, the timestamp happens when k6 receives the end of the response body, or the request times out.

-

-

-

-| Metric Name | Type | Description |

-|--------------------------|---------|----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| http_reqs | Counter | How many total HTTP requests k6 generated. |

-| http_req_blocked | Trend | Time spent blocked (waiting for a free TCP connection slot) before initiating the request. `float` |

-| http_req_connecting | Trend | Time spent establishing TCP connection to the remote host. `float` |

-| http_req_tls_handshaking | Trend | Time spent handshaking TLS session with remote host |

-| http_req_sending | Trend | Time spent sending data to the remote host. `float` |

-| http_req_waiting | Trend | Time spent waiting for response from remote host (a.k.a. “time to first byte”, or “TTFB”). `float` |

-| http_req_receiving | Trend | Time spent receiving response data from the remote host. `float` |

-| http_req_duration | Trend | Total time for the request. It's equal to `http_req_sending + http_req_waiting + http_req_receiving` (i.e. how long did the remote server take to process the request and respond, without the initial DNS lookup/connection times). `float` |

-| http_req_failed | Rate | The rate of failed requests according to [setResponseCallback](/javascript-api/k6-http/setresponsecallback). |

-| | | |

-

-### Accessing HTTP timings from a script

-

-To access the timing information from an individual HTTP request, the [Response.timings](/javascript-api/k6-http/response) object provides the time spent on the various phases in `ms`:

-

-- blocked: equals to `http_req_blocked`.

-- connecting: equals to `http_req_connecting`.

-- tls_handshaking: equals to `http_req_tls_handshaking`.

-- sending: equals to `http_req_sending`.

-- waiting: equals to `http_req_waiting`.

-- receiving: equals to `http_req_receiving`.

-- duration: equals to `http_req_duration`.

-

-

-

-```javascript

-import http from 'k6/http';

-

-export default function () {

- const res = http.get('http://httpbin.test.k6.io');

- console.log('Response time was ' + String(res.timings.duration) + ' ms');

-}

-```

-

-

-

-The expected (partial) output looks like this:

-

-

-

-```bash

-$ k6 run script.js

-

- INFO[0001] Response time was 337.962473 ms source=console

-```

-

-

-

-

-## Built-in WebSocket metrics

-

-`k6` emits the following metrics when interacting with a WebSocket service through the [`experimental`](/javascript-api/k6-experimental/websockets) or legacy websockets API.

-

-| Metric name | Type | Description |

-| ------------------- | ------- | -------------------------------------------------------------------------------------------------------------------------- |

-| ws_connecting | Trend | Total duration for the WebSocket connection request. |

-| ws_session_duration | Trend | Duration of the WebSocket session. Time between the start of the connection and the end of the VU execution. |

-| ws_sessions | Counter | Total number of started WebSocket sessions. |

-| ws_ping | Trend | Duration between a ping request and its pong reception |

-| ws_msgs_sent | Counter | Total number of messages sent through [Socket.send(data)](/javascript-api/k6-ws/socket/socket-send) |

-| ws_msgs_received | Counter | Total number of received messages [Socket.on('message', callback)](/javascript-api/k6-ws/socket/socket-on). |

-

-## Built-in gRPC metrics

-

-k6 emits the following metrics when it interacts with a service through the [`gRPC`](https://k6.io/docs/javascript-api/k6-net-grpc/) API.

-

-| Metric Name | Type | Description |

-|---------------------|-------|-------------------------------------------|

-| `grpc_req_duration` | Trend | Time to receive response from remote host |

-

From 961a1a2093f79b723dfcef65dee011c4fe12b0c1 Mon Sep 17 00:00:00 2001

From: Matt Dodson <47385188+MattDodsonEnglish@users.noreply.github.com>

Date: Thu, 27 Apr 2023 15:17:51 -0300

Subject: [PATCH 03/17] prose trimming

---

.../markdown/translated-guides/en/02 Using k6/02 Metrics.md | 2 +-

.../en/02 Using k6/02 Metrics/100 Create custom metrics.md | 2 +-

2 files changed, 2 insertions(+), 2 deletions(-)

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

index 8f56a619ce..ab579fb9b0 100644

--- a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

+++ b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

@@ -26,7 +26,7 @@ If you want to make a test fail a certain criteria, you can write [Threshold](/u

Each metric provides a different perspective on performance.

So the best metric for your analysis depends on your goals.

-However, if you're not sure what metrics to focus on, a good place to start is with the metrics that measure the requests, errors, and duration (the criteria of the [RED method](https://grafana.com/blog/2018/08/02/the-red-method-how-to-instrument-your-services/)).

+However, if you're unsure which metrics to focus on, you can start with the metrics that measure requests, errors, and duration (the criteria of the [RED method](https://grafana.com/blog/2018/08/02/the-red-method-how-to-instrument-your-services/)):

- `http_reqs`, to measure requests

- `http_req_failed`, to measure error rate

- `http_req_duration`, to measure duration

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md

index adb2724431..1271ce2c9a 100644

--- a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md

+++ b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md

@@ -4,7 +4,7 @@ description: How to build custom k6 metrics for each metric type.

---

Besides the [built-in metrics](/results-output/metrics-reference), you can create custom metrics.

-For example, you can create some derived metrics from the built-in metrics, or you might use the the [Response.timings](/javascript-api/k6-http/response) object to create a metric for a specific set of endpoints.

+For example, you can create some derived metrics from the built-in metrics, or you might use the [Response.timings](/javascript-api/k6-http/response) object to create a metric for a specific set of endpoints.

Each metric type has a [constructor](https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Classes/constructor) to create a custom metric.

The constructor creates a metric object of the declared type. Each type has methods to take metric measurements.

From d809aeb158574f8304f0455b64c060b5b596867a Mon Sep 17 00:00:00 2001

From: Matt Dodson <47385188+MattDodsonEnglish@users.noreply.github.com>

Date: Mon, 1 May 2023 19:33:31 -0300

Subject: [PATCH 04/17] Add non-proto metrics

---

.../en/02 Using k6/02 Metrics/000-reference.md | 15 +++++++++++++++

.../02 Metrics/100 Create custom metrics.md | 11 +++++------

2 files changed, 20 insertions(+), 6 deletions(-)

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/000-reference.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/000-reference.md

index 457d2505bd..9b237ca84a 100644

--- a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/000-reference.md

+++ b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/000-reference.md

@@ -7,6 +7,21 @@ excerpt: A reference of built-in metrics for different supported protocols.

Every k6 test emits built-in metrics, plus any custom metrics that the script defines.

Each supported protocol also has its own specific metrics.

+## Standard built-in metrics

+

+k6 always collects the following metrics, no matter what protocol the test uses:

+

+| Metric Name | Type | Description |

+|--------------------|---------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| vus | Gauge | Current number of active virtual users |

+| vus_max | Gauge | Maximum possible number of virtual users (k6 pre-allocates VU resources, so performance isn't affected when the load level scales up) |

+| iterations | Counter | The aggregate number of times the VUs executed the JS script (the `default` function). |

+| iteration_duration | Trend | The time it took to complete one full iteration, including time spent in `setup` and `teardown`. To calculate the duration of the iteration's function for the specific scenario, [try this workaround](/using-k6/workaround-to-calculate-iteration_duration). |

+| dropped_iterations | Counter | The number of iterations that weren't started due to lack of VUs (for the arrival-rate executors) or lack of time (expired maxDuration in the iteration-based executors). [About dropped iterations](/using-k6/scenarios/concepts/dropped-iterations/) |

+| data_received | Counter | The amount of received data. [This example covers how to track data for an individual URL](/examples/track-transmitted-data-per-url). |

+| data_sent | Counter | The amount of data sent. [This example covers how to track data for an individual URL](/examples/track-transmitted-data-per-url). |

+| checks | Rate | The rate of successful checks. |

+

## HTTP-specific built-in metrics

These metrics are generated only when the test makes HTTP requests.

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md

index 1271ce2c9a..c96f6e39ac 100644

--- a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md

+++ b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md

@@ -4,18 +4,17 @@ description: How to build custom k6 metrics for each metric type.

---

Besides the [built-in metrics](/results-output/metrics-reference), you can create custom metrics.

-For example, you can create some derived metrics from the built-in metrics, or you might use the [Response.timings](/javascript-api/k6-http/response) object to create a metric for a specific set of endpoints.

+For example, you can create some derived metrics from the built-in metrics, or use the [Response.timings](/javascript-api/k6-http/response) object to create a metric for a specific set of endpoints.

Each metric type has a [constructor](https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Classes/constructor) to create a custom metric.

The constructor creates a metric object of the declared type. Each type has methods to take metric measurements.

If you need help constructing a custom metric, read the following sections of this document.

-They provide a walkthrough of the procedure, along with examples for each metric type.

+They document the procedure and provide examples.

+

If you're comfortable with these JavaScript conventions already, you might prefer to read the [reference documentation for each metric constructor](/javascript-api/k6-metrics).

Each topic has examples to make a custom metric and create thresholds from it.

-k6 reports custom metrics at the end of a load test, just like HTTP timings.

-

## Create a custom metric

The generic procedure to create a custom metric is as follows:

@@ -70,7 +69,7 @@ $ k6 run script.js

You can optionally [tag](/using-k6/tags-and-groups) any value for a custom metric.

-This can be useful when analyzing test results.

+You can use these tags to filter test results.

@@ -82,7 +81,7 @@ For long-running scripts, custom metrics might appear only after the test runs a

## Examples for each type

### Create a counter

-This counter metric is a _cumulative metric_

+This counter metric is a cumulative metric

From 7de88156c32976d3b9f8c5bb76c3a1f39c7260be Mon Sep 17 00:00:00 2001

From: Matt Dodson <47385188+MattDodsonEnglish@users.noreply.github.com>

Date: Mon, 1 May 2023 19:35:43 -0300

Subject: [PATCH 05/17] Adding links

---

.../markdown/translated-guides/en/02 Using k6/02 Metrics.md | 3 +++

1 file changed, 3 insertions(+)

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

index ab579fb9b0..a4ffdbc249 100644

--- a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

+++ b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

@@ -14,6 +14,9 @@ Metrics fall into four broad types:

- _Trends_ calculate statistics for multiple values (like mean or mode).

If you want to make a test fail a certain criteria, you can write [Threshold](/using-k6/thresholds) based on the metric criteria (the specifics of the expression depend on the test type.

+To filter metrics, you can use [Tags and groups](/using-k6/tags-and-groups).

+You can also export metrics in various summary and granular formats, as documented in [Results output](/results-output).

+

| On this page... | Read about... |

From dd4d1af226151610e4ce0dcd1eee23746ea2270b Mon Sep 17 00:00:00 2001

From: Matt Dodson <47385188+MattDodsonEnglish@users.noreply.github.com>

Date: Tue, 2 May 2023 11:55:32 -0300

Subject: [PATCH 06/17] Lang fixes on the top page

---

.../en/02 Using k6/02 Metrics.md | 19 +++----------------

1 file changed, 3 insertions(+), 16 deletions(-)

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

index a4ffdbc249..71ac232f3a 100644

--- a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

+++ b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics.md

@@ -44,7 +44,7 @@ SREs might recognize these metrics as three of the [four Golden Signals](https:/

## Example output

-An aggregated summary of all _built-in_ and custom metrics outputs to `stdout` when you run a test.:

+An aggregated summary of all _built-in_ and custom metrics outputs to `stdout` when you run a test:

@@ -58,7 +58,7 @@ export default function () {

-Running the preceding script outputs something like this:

+The preceding script outputs something like this:

@@ -103,18 +103,5 @@ default ✓ [======================================] 1 VUs 00m03.8s/10m0s 1/1

In that output, all the metrics that start with `http`, `iteration`, and `vu` are _built-in_ metrics, which get written to stdout at the end of a test.

-

-k6 always collects the following built-in metrics:

-

-| Metric Name | Type | Description |

-|--------------------|---------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| vus | Gauge | Current number of active virtual users |

-| vus_max | Gauge | Max possible number of virtual users (VU resources are pre-allocated, ensuring performance will not be affected when scaling up the load level) |

-| iterations | Counter | The aggregate number of times the VUs executed the JS script (the `default` function). |

-| iteration_duration | Trend | The time it took to complete one full iteration, including time spent in `setup` and `teardown`. To calculate the duration of the iteration's function for the specific scenario, [try this workaround](/using-k6/workaround-to-calculate-iteration_duration) |

-| dropped_iterations | Counter | The number of iterations that weren't started due to lack of VUs (for the arrival-rate executors) or lack of time (expired maxDuration in the iteration-based executors). [About dropped iterations](/using-k6/scenarios/concepts/dropped-iterations/) |

-| data_received | Counter | The amount of received data. [This example covers how to track data for an individual URL](/examples/track-transmitted-data-per-url). |

-| data_sent | Counter | The amount of data sent. [Track data for an individual URL](/examples/track-transmitted-data-per-url) to track data for an individual URL. |

-| checks | Rate | The rate of successful checks. |

-

+For details of all metrics, refer to the [Metrics reference](/using-k6/metrics/reference/).

From dc80629ebff72774dce613e9809fa8fe7c36ffa6 Mon Sep 17 00:00:00 2001

From: Matt Dodson <47385188+MattDodsonEnglish@users.noreply.github.com>

Date: Tue, 2 May 2023 12:08:05 -0300

Subject: [PATCH 07/17] clean up reference a bit

---

.../en/02 Using k6/02 Metrics/000-reference.md | 8 ++++----

1 file changed, 4 insertions(+), 4 deletions(-)

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/000-reference.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/000-reference.md

index 9b237ca84a..dc8c90c7b9 100644

--- a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/000-reference.md

+++ b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/000-reference.md

@@ -4,7 +4,7 @@ slug: '/using-k6/metrics/reference'

excerpt: A reference of built-in metrics for different supported protocols.

---

-Every k6 test emits built-in and custom metrics.

+Every k6 test emits built-in and [custom metrics](/using-k6/metrics/create-custom).

Each supported protocol also has its specific metrics.

## Standard built-in metrics.

@@ -14,9 +14,9 @@ k6 always collects the following metrics, no matter what protocol the test uses:

| Metric Name | Type | Description |

|--------------------|---------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| vus | Gauge | Current number of active virtual users |

-| vus_max | Gauge | Max possible number of virtual users (VU resources are pre-allocated, ensuring performance will not be affected when scaling up the load level) |

-| iterations | Counter | The aggregate number of times the VUs executed the JS script (the `default` function). |

-| iteration_duration | Trend | The time it took to complete one full iteration, including time spent in `setup` and `teardown`. To calculate the duration of the iteration's function for the specific scenario, [try this workaround](/using-k6/workaround-to-calculate-iteration_duration) |

+| vus_max            | Gauge   | Max possible number of virtual users (VU resources are [pre-allocated](/using-k6/scenarios/concepts/arrival-rate-vu-allocation/) to avoid affecting performance when scaling up load) |

+| iterations | Counter | The aggregate number of times the VUs execute the JS script (the `default` function). |

+| iteration_duration | Trend | The time to complete one full iteration, including time spent in `setup` and `teardown`. To calculate the duration of the iteration's function for the specific scenario, [try this workaround](/using-k6/workaround-to-calculate-iteration_duration) |

| dropped_iterations | Counter | The number of iterations that weren't started due to lack of VUs (for the arrival-rate executors) or lack of time (expired maxDuration in the iteration-based executors). [About dropped iterations](/using-k6/scenarios/concepts/dropped-iterations/) |

| data_received | Counter | The amount of received data. [This example covers how to track data for an individual URL](/examples/track-transmitted-data-per-url). |

| data_sent | Counter | The amount of data sent. [Track data for an individual URL](/examples/track-transmitted-data-per-url) to track data for an individual URL. |

From 597cece2f8c6dfda266aebdb3f803bcaa989c048 Mon Sep 17 00:00:00 2001

From: Matt Dodson <47385188+MattDodsonEnglish@users.noreply.github.com>

Date: Tue, 2 May 2023 12:14:10 -0300

Subject: [PATCH 08/17] specify add metric

Co-authored-by: Ivan <2103732+codebien@users.noreply.github.com>

---

.../en/02 Using k6/02 Metrics/100 Create custom metrics.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md b/src/data/markdown/translated-guides/en/02 Using k6/02 Metrics/100 Create custom metrics.md

index c96f6e39ac..7ad7801665 100644