diff --git a/README.md b/README.md

index c461d786fd2e..908d2e690faa 100644

--- a/README.md

+++ b/README.md

@@ -244,6 +244,7 @@ Current number of checkpoints: ** (from Google Research) released with the paper [ByT5: Towards a token-free future with pre-trained byte-to-byte models](https://arxiv.org/abs/2105.13626) by Linting Xue, Aditya Barua, Noah Constant, Rami Al-Rfou, Sharan Narang, Mihir Kale, Adam Roberts, Colin Raffel.

1. **[CamemBERT](https://huggingface.co/docs/transformers/model_doc/camembert)** (from Inria/Facebook/Sorbonne) released with the paper [CamemBERT: a Tasty French Language Model](https://arxiv.org/abs/1911.03894) by Louis Martin*, Benjamin Muller*, Pedro Javier Ortiz Suárez*, Yoann Dupont, Laurent Romary, Éric Villemonte de la Clergerie, Djamé Seddah and Benoît Sagot.

1. **[CANINE](https://huggingface.co/docs/transformers/model_doc/canine)** (from Google Research) released with the paper [CANINE: Pre-training an Efficient Tokenization-Free Encoder for Language Representation](https://arxiv.org/abs/2103.06874) by Jonathan H. Clark, Dan Garrette, Iulia Turc, John Wieting.

+1. **[ConvNeXT](https://huggingface.co/docs/transformers/master/model_doc/convnext)** (from Facebook AI) released with the paper [A ConvNet for the 2020s](https://arxiv.org/abs/2201.03545) by Zhuang Liu, Hanzi Mao, Chao-Yuan Wu, Christoph Feichtenhofer, Trevor Darrell, Saining Xie.

1. **[CLIP](https://huggingface.co/docs/transformers/model_doc/clip)** (from OpenAI) released with the paper [Learning Transferable Visual Models From Natural Language Supervision](https://arxiv.org/abs/2103.00020) by Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, Ilya Sutskever.

1. **[ConvBERT](https://huggingface.co/docs/transformers/model_doc/convbert)** (from YituTech) released with the paper [ConvBERT: Improving BERT with Span-based Dynamic Convolution](https://arxiv.org/abs/2008.02496) by Zihang Jiang, Weihao Yu, Daquan Zhou, Yunpeng Chen, Jiashi Feng, Shuicheng Yan.

1. **[CPM](https://huggingface.co/docs/transformers/model_doc/cpm)** (from Tsinghua University) released with the paper [CPM: A Large-scale Generative Chinese Pre-trained Language Model](https://arxiv.org/abs/2012.00413) by Zhengyan Zhang, Xu Han, Hao Zhou, Pei Ke, Yuxian Gu, Deming Ye, Yujia Qin, Yusheng Su, Haozhe Ji, Jian Guan, Fanchao Qi, Xiaozhi Wang, Yanan Zheng, Guoyang Zeng, Huanqi Cao, Shengqi Chen, Daixuan Li, Zhenbo Sun, Zhiyuan Liu, Minlie Huang, Wentao Han, Jie Tang, Juanzi Li, Xiaoyan Zhu, Maosong Sun.

diff --git a/README_ko.md b/README_ko.md

index 1bf1270c03b1..d6bc4ae44a4a 100644

--- a/README_ko.md

+++ b/README_ko.md

@@ -227,6 +227,7 @@ Flax, PyTorch, TensorFlow 설치 페이지에서 이들을 conda로 설치하는

1. **[CANINE](https://huggingface.co/docs/transformers/model_doc/canine)** (from Google Research) released with the paper [CANINE: Pre-training an Efficient Tokenization-Free Encoder for Language Representation](https://arxiv.org/abs/2103.06874) by Jonathan H. Clark, Dan Garrette, Iulia Turc, John Wieting.

1. **[CLIP](https://huggingface.co/docs/transformers/model_doc/clip)** (from OpenAI) released with the paper [Learning Transferable Visual Models From Natural Language Supervision](https://arxiv.org/abs/2103.00020) by Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, Ilya Sutskever.

1. **[ConvBERT](https://huggingface.co/docs/transformers/model_doc/convbert)** (from YituTech) released with the paper [ConvBERT: Improving BERT with Span-based Dynamic Convolution](https://arxiv.org/abs/2008.02496) by Zihang Jiang, Weihao Yu, Daquan Zhou, Yunpeng Chen, Jiashi Feng, Shuicheng Yan.

+1. **[ConvNeXT](https://huggingface.co/docs/transformers/master/model_doc/convnext)** (from Facebook AI) released with the paper [A ConvNet for the 2020s](https://arxiv.org/abs/2201.03545) by Zhuang Liu, Hanzi Mao, Chao-Yuan Wu, Christoph Feichtenhofer, Trevor Darrell, Saining Xie.

1. **[CPM](https://huggingface.co/docs/transformers/model_doc/cpm)** (from Tsinghua University) released with the paper [CPM: A Large-scale Generative Chinese Pre-trained Language Model](https://arxiv.org/abs/2012.00413) by Zhengyan Zhang, Xu Han, Hao Zhou, Pei Ke, Yuxian Gu, Deming Ye, Yujia Qin, Yusheng Su, Haozhe Ji, Jian Guan, Fanchao Qi, Xiaozhi Wang, Yanan Zheng, Guoyang Zeng, Huanqi Cao, Shengqi Chen, Daixuan Li, Zhenbo Sun, Zhiyuan Liu, Minlie Huang, Wentao Han, Jie Tang, Juanzi Li, Xiaoyan Zhu, Maosong Sun.

1. **[CTRL](https://huggingface.co/docs/transformers/model_doc/ctrl)** (from Salesforce) released with the paper [CTRL: A Conditional Transformer Language Model for Controllable Generation](https://arxiv.org/abs/1909.05858) by Nitish Shirish Keskar*, Bryan McCann*, Lav R. Varshney, Caiming Xiong and Richard Socher.

1. **[DeBERTa](https://huggingface.co/docs/transformers/model_doc/deberta)** (from Microsoft) released with the paper [DeBERTa: Decoding-enhanced BERT with Disentangled Attention](https://arxiv.org/abs/2006.03654) by Pengcheng He, Xiaodong Liu, Jianfeng Gao, Weizhu Chen.

diff --git a/README_zh-hans.md b/README_zh-hans.md

index fe69542ddd8f..c5878ca2004f 100644

--- a/README_zh-hans.md

+++ b/README_zh-hans.md

@@ -251,6 +251,7 @@ conda install -c huggingface transformers

1. **[CANINE](https://huggingface.co/docs/transformers/model_doc/canine)** (来自 Google Research) 伴随论文 [CANINE: Pre-training an Efficient Tokenization-Free Encoder for Language Representation](https://arxiv.org/abs/2103.06874) 由 Jonathan H. Clark, Dan Garrette, Iulia Turc, John Wieting 发布。

1. **[CLIP](https://huggingface.co/docs/transformers/model_doc/clip)** (来自 OpenAI) 伴随论文 [Learning Transferable Visual Models From Natural Language Supervision](https://arxiv.org/abs/2103.00020) 由 Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, Ilya Sutskever 发布。

1. **[ConvBERT](https://huggingface.co/docs/transformers/model_doc/convbert)** (来自 YituTech) 伴随论文 [ConvBERT: Improving BERT with Span-based Dynamic Convolution](https://arxiv.org/abs/2008.02496) 由 Zihang Jiang, Weihao Yu, Daquan Zhou, Yunpeng Chen, Jiashi Feng, Shuicheng Yan 发布。

+1. **[ConvNeXT](https://huggingface.co/docs/transformers/master/model_doc/convnext)** (来自 Facebook AI) 伴随论文 [A ConvNet for the 2020s](https://arxiv.org/abs/2201.03545) 由 Zhuang Liu, Hanzi Mao, Chao-Yuan Wu, Christoph Feichtenhofer, Trevor Darrell, Saining Xie 发布。

1. **[CPM](https://huggingface.co/docs/transformers/model_doc/cpm)** (来自 Tsinghua University) 伴随论文 [CPM: A Large-scale Generative Chinese Pre-trained Language Model](https://arxiv.org/abs/2012.00413) 由 Zhengyan Zhang, Xu Han, Hao Zhou, Pei Ke, Yuxian Gu, Deming Ye, Yujia Qin, Yusheng Su, Haozhe Ji, Jian Guan, Fanchao Qi, Xiaozhi Wang, Yanan Zheng, Guoyang Zeng, Huanqi Cao, Shengqi Chen, Daixuan Li, Zhenbo Sun, Zhiyuan Liu, Minlie Huang, Wentao Han, Jie Tang, Juanzi Li, Xiaoyan Zhu, Maosong Sun 发布。

1. **[CTRL](https://huggingface.co/docs/transformers/model_doc/ctrl)** (来自 Salesforce) 伴随论文 [CTRL: A Conditional Transformer Language Model for Controllable Generation](https://arxiv.org/abs/1909.05858) 由 Nitish Shirish Keskar*, Bryan McCann*, Lav R. Varshney, Caiming Xiong and Richard Socher 发布。

1. **[DeBERTa](https://huggingface.co/docs/transformers/model_doc/deberta)** (来自 Microsoft) 伴随论文 [DeBERTa: Decoding-enhanced BERT with Disentangled Attention](https://arxiv.org/abs/2006.03654) 由 Pengcheng He, Xiaodong Liu, Jianfeng Gao, Weizhu Chen 发布。

diff --git a/README_zh-hant.md b/README_zh-hant.md

index f65b9a82a997..bd9cb706aa05 100644

--- a/README_zh-hant.md

+++ b/README_zh-hant.md

@@ -263,6 +263,7 @@ conda install -c huggingface transformers

1. **[CANINE](https://huggingface.co/docs/transformers/model_doc/canine)** (from Google Research) released with the paper [CANINE: Pre-training an Efficient Tokenization-Free Encoder for Language Representation](https://arxiv.org/abs/2103.06874) by Jonathan H. Clark, Dan Garrette, Iulia Turc, John Wieting.

1. **[CLIP](https://huggingface.co/docs/transformers/model_doc/clip)** (from OpenAI) released with the paper [Learning Transferable Visual Models From Natural Language Supervision](https://arxiv.org/abs/2103.00020) by Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, Ilya Sutskever.

1. **[ConvBERT](https://huggingface.co/docs/transformers/model_doc/convbert)** (from YituTech) released with the paper [ConvBERT: Improving BERT with Span-based Dynamic Convolution](https://arxiv.org/abs/2008.02496) by Zihang Jiang, Weihao Yu, Daquan Zhou, Yunpeng Chen, Jiashi Feng, Shuicheng Yan.

+1. **[ConvNeXT](https://huggingface.co/docs/transformers/master/model_doc/convnext)** (from Facebook AI) released with the paper [A ConvNet for the 2020s](https://arxiv.org/abs/2201.03545) by Zhuang Liu, Hanzi Mao, Chao-Yuan Wu, Christoph Feichtenhofer, Trevor Darrell, Saining Xie.

1. **[CPM](https://huggingface.co/docs/transformers/model_doc/cpm)** (from Tsinghua University) released with the paper [CPM: A Large-scale Generative Chinese Pre-trained Language Model](https://arxiv.org/abs/2012.00413) by Zhengyan Zhang, Xu Han, Hao Zhou, Pei Ke, Yuxian Gu, Deming Ye, Yujia Qin, Yusheng Su, Haozhe Ji, Jian Guan, Fanchao Qi, Xiaozhi Wang, Yanan Zheng, Guoyang Zeng, Huanqi Cao, Shengqi Chen, Daixuan Li, Zhenbo Sun, Zhiyuan Liu, Minlie Huang, Wentao Han, Jie Tang, Juanzi Li, Xiaoyan Zhu, Maosong Sun.

1. **[CTRL](https://huggingface.co/docs/transformers/model_doc/ctrl)** (from Salesforce) released with the paper [CTRL: A Conditional Transformer Language Model for Controllable Generation](https://arxiv.org/abs/1909.05858) by Nitish Shirish Keskar*, Bryan McCann*, Lav R. Varshney, Caiming Xiong and Richard Socher.

1. **[DeBERTa](https://huggingface.co/docs/transformers/model_doc/deberta)** (from Microsoft) released with the paper [DeBERTa: Decoding-enhanced BERT with Disentangled Attention](https://arxiv.org/abs/2006.03654) by Pengcheng He, Xiaodong Liu, Jianfeng Gao, Weizhu Chen.

diff --git a/docs/source/_toctree.yml b/docs/source/_toctree.yml

index f1cb9a80f31a..70d2455d0aea 100644

--- a/docs/source/_toctree.yml

+++ b/docs/source/_toctree.yml

@@ -22,7 +22,7 @@

- local: model_summary

title: Summary of the models

- local: training

- title: Fine-tuning a pretrained model

+ title: Fine-tune a pretrained model

- local: accelerate

title: Distributed training with 🤗 Accelerate

- local: model_sharing

@@ -33,6 +33,8 @@

title: Multi-lingual models

title: Tutorials

- sections:

+ - local: create_a_model

+ title: Create a custom model

- local: examples

title: Examples

- local: troubleshooting

@@ -150,6 +152,8 @@

title: CamemBERT

- local: model_doc/canine

title: CANINE

+ - local: model_doc/convnext

+ title: ConvNeXT

- local: model_doc/clip

title: CLIP

- local: model_doc/convbert

diff --git a/docs/source/create_a_model.mdx b/docs/source/create_a_model.mdx

new file mode 100644

index 000000000000..8a1b80b09303

--- /dev/null

+++ b/docs/source/create_a_model.mdx

@@ -0,0 +1,323 @@

+

+

+# Create a custom model

+

+An [`AutoClass`](model_doc/auto) automatically infers the model architecture and downloads pretrained configuration and weights. Generally, we recommend using an `AutoClass` to produce checkpoint-agnostic code. But users who want more control over specific model parameters can create a custom 🤗 Transformers model from just a few base classes. This could be particularly useful for anyone who is interested in studying, training or experimenting with a 🤗 Transformers model. In this guide, dive deeper into creating a custom model without an `AutoClass`. Learn how to:

+

+- Load and customize a model configuration.

+- Create a model architecture.

+- Create a slow and fast tokenizer for text.

+- Create a feature extractor for audio or image tasks.

+- Create a processor for multimodal tasks.

+

+## Configuration

+

+A [configuration](main_classes/configuration) refers to a model's specific attributes. Each model configuration has different attributes; for instance, all NLP models have the `hidden_size`, `num_attention_heads`, `num_hidden_layers` and `vocab_size` attributes in common. These attributes specify the number of attention heads or hidden layers to construct a model with.

+

+Get a closer look at [DistilBERT](model_doc/distilbert) by accessing [`DistilBertConfig`] to inspect it's attributes:

+

+```py

+>>> from transformers import DistilBertConfig

+

+>>> config = DistilBertConfig()

+>>> print(config)

+DistilBertConfig {

+ "activation": "gelu",

+ "attention_dropout": 0.1,

+ "dim": 768,

+ "dropout": 0.1,

+ "hidden_dim": 3072,

+ "initializer_range": 0.02,

+ "max_position_embeddings": 512,

+ "model_type": "distilbert",

+ "n_heads": 12,

+ "n_layers": 6,

+ "pad_token_id": 0,

+ "qa_dropout": 0.1,

+ "seq_classif_dropout": 0.2,

+ "sinusoidal_pos_embds": false,

+ "transformers_version": "4.16.2",

+ "vocab_size": 30522

+}

+```

+

+[`DistilBertConfig`] displays all the default attributes used to build a base [`DistilBertModel`]. All attributes are customizable, creating space for experimentation. For example, you can customize a default model to:

+

+- Try a different activation function with the `activation` parameter.

+- Use a higher dropout ratio for the attention probabilities with the `attention_dropout` parameter.

+

+```py

+>>> my_config = DistilBertConfig(activation="relu", attention_dropout=0.4)

+>>> print(my_config)

+DistilBertConfig {

+ "activation": "relu",

+ "attention_dropout": 0.4,

+ "dim": 768,

+ "dropout": 0.1,

+ "hidden_dim": 3072,

+ "initializer_range": 0.02,

+ "max_position_embeddings": 512,

+ "model_type": "distilbert",

+ "n_heads": 12,

+ "n_layers": 6,

+ "pad_token_id": 0,

+ "qa_dropout": 0.1,

+ "seq_classif_dropout": 0.2,

+ "sinusoidal_pos_embds": false,

+ "transformers_version": "4.16.2",

+ "vocab_size": 30522

+}

+```

+

+Pretrained model attributes can be modified in the [`~PretrainedConfig.from_pretrained`] function:

+

+```py

+>>> my_config = DistilBertConfig.from_pretrained("distilbert-base-uncased", activation="relu", attention_dropout=0.4)

+```

+

+Once you are satisfied with your model configuration, you can save it with [`~PretrainedConfig.save_pretrained`]. Your configuration file is stored as a JSON file in the specified save directory:

+

+```py

+>>> my_config.save_pretrained(save_directory="./your_model_save_path")

+```

+

+To reuse the configuration file, load it with [`~PretrainedConfig.from_pretrained`]:

+

+```py

+>>> my_config = DistilBertConfig.from_pretrained("./your_model_save_path/my_config.json")

+```

+

+

+

+You can also save your configuration file as a dictionary or even just the difference between your custom configuration attributes and the default configuration attributes! See the [configuration](main_classes/configuration) documentation for more details.

+

+

+

+## Model

+

+The next step is to create a [model](main_classes/models). The model - also loosely referred to as the architecture - defines what each layer is doing and what operations are happening. Attributes like `num_hidden_layers` from the configuration are used to define the architecture. Every model shares the base class [`PreTrainedModel`] and a few common methods like resizing input embeddings and pruning self-attention heads. In addition, all models are also either a [`torch.nn.Module`](https://pytorch.org/docs/stable/generated/torch.nn.Module.html), [`tf.keras.Model`](https://www.tensorflow.org/api_docs/python/tf/keras/Model) or [`flax.linen.Module`](https://flax.readthedocs.io/en/latest/flax.linen.html#module) subclass. This means models are compatible with each of their respective framework's usage.

+

+Load your custom configuration attributes into the model:

+

+```py

+>>> from transformers import DistilBertModel

+

+>>> my_config = DistilBertConfig.from_pretrained("./your_model_save_path/my_config.json")

+>>> model = DistilBertModel(my_config)

+===PT-TF-SPLIT===

+>>> from transformers import TFDistilBertModel

+

+>>> my_config = DistilBertConfig.from_pretrained("./your_model_save_path/my_config.json")

+>>> tf_model = TFDistilBertModel(my_config)

+```

+

+This creates a model with random values instead of pretrained weights. You won't be able to use this model for anything useful yet until you train it. Training is a costly and time-consuming process. It is generally better to use a pretrained model to obtain better results faster, while using only a fraction of the resources required for training.

+

+Create a pretrained model with [`~PreTrainedModel.from_pretrained`]:

+

+```py

+>>> model = DistilBertModel.from_pretrained("distilbert-base-uncased")

+===PT-TF-SPLIT===

+>>> tf_model = TFDistilBertModel.from_pretrained("distilbert-base-uncased")

+```

+

+When you load pretrained weights, the default model configuration is automatically loaded if the model is provided by 🤗 Transformers. However, you can still replace - some or all of - the default model configuration attributes with your own if you'd like:

+

+```py

+>>> model = DistilBertModel.from_pretrained("distilbert-base-uncased", config=my_config)

+===PT-TF-SPLIT===

+>>> tf_model = TFDistilBertModel.from_pretrained("distilbert-base-uncased", config=my_config)

+```

+

+### Model heads

+

+At this point, you have a base DistilBERT model which outputs the *hidden states*. The hidden states are passed as inputs to a model head to produce the final output. 🤗 Transformers provides a different model head for each task as long as a model supports the task (i.e., you can't use DistilBERT for a sequence-to-sequence task like translation).

+

+For example, [`DistilBertForSequenceClassification`] is a base DistilBERT model with a sequence classification head. The sequence classification head is a linear layer on top of the pooled outputs.

+

+```py

+>>> from transformers import DistilBertForSequenceClassification

+

+>>> model = DistilBertForSequenceClassification.from_pretrained("distilbert-base-uncased")

+===PT-TF-SPLIT===

+>>> from transformers import TFDistilBertForSequenceClassification

+

+>>> tf_model = TFDistilBertForSequenceClassification.from_pretrained("distilbert-base-uncased")

+```

+

+Easily reuse this checkpoint for another task by switching to a different model head. For a question answering task, you would use the [`DistilBertForQuestionAnswering`] model head. The question answering head is similar to the sequence classification head except it is a linear layer on top of the hidden states output.

+

+```py

+>>> from transformers import DistilBertForQuestionAnswering

+

+>>> model = DistilBertForQuestionAnswering.from_pretrained("distilbert-base-uncased")

+===PT-TF-SPLIT===

+>>> from transformers import TFDistilBertForQuestionAnswering

+

+>>> tf_model = TFDistilBertForQuestionAnswering.from_pretrained("distilbert-base-uncased")

+```

+

+## Tokenizer

+

+The last base class you need before using a model for textual data is a [tokenizer](main_classes/tokenizer) to convert raw text to tensors. There are two types of tokenizers you can use with 🤗 Transformers:

+

+- [`PreTrainedTokenizer`]: a Python implementation of a tokenizer.

+- [`PreTrainedTokenizerFast`]: a tokenizer from our Rust-based [🤗 Tokenizer](https://huggingface.co/docs/tokenizers/python/latest/) library. This tokenizer type is significantly faster - especially during batch tokenization - due to it's Rust implementation. The fast tokenizer also offers additional methods like *offset mapping* which maps tokens to their original words or characters.

+

+Both tokenizers support common methods such as encoding and decoding, adding new tokens, and managing special tokens.

+

+

+

+Not every model supports a fast tokenizer. Take a look at this [table](index#supported-frameworks) to check if a model has fast tokenizer support.

+

+

+

+If you trained your own tokenizer, you can create one from your *vocabulary* file:

+

+```py

+>>> from transformers import DistilBertTokenizer

+

+>>> my_tokenizer = DistilBertTokenizer(vocab_file="my_vocab_file.txt", do_lower_case=False, padding_side="left")

+```

+

+It is important to remember the vocabulary from a custom tokenizer will be different from the vocabulary generated by a pretrained model's tokenizer. You need to use a pretrained model's vocabulary if you are using a pretrained model, otherwise the inputs won't make sense. Create a tokenizer with a pretrained model's vocabulary with the [`DistilBertTokenizer`] class:

+

+```py

+>>> from transformers import DistilBertTokenizer

+

+>>> slow_tokenizer = DistilBertTokenizer.from_pretrained("distilbert-base-uncased")

+```

+

+Create a fast tokenizer with the [`DistilBertTokenizerFast`] class:

+

+```py

+>>> from transformers import DistilBertTokenizerFast

+

+>>> fast_tokenizer = DistilBertTokenizerFast.from_pretrained("distilbert-base-uncased")

+```

+

+

+

+By default, [`AutoTokenizer`] will try to load a fast tokenizer. You can disable this behavior by setting `use_fast=False` in `from_pretrained`.

+

+

+

+## Feature Extractor

+

+A feature extractor processes audio or image inputs. It inherits from the base [`~feature_extraction_utils.FeatureExtractionMixin`] class, and may also inherit from the [`ImageFeatureExtractionMixin`] class for processing image features or the [`SequenceFeatureExtractor`] class for processing audio inputs.

+

+Depending on whether you are working on an audio or vision task, create a feature extractor associated with the model you're using. For example, create a default [`ViTFeatureExtractor`] if you are using [ViT](model_doc/vit) for image classification:

+

+```py

+>>> from transformers import ViTFeatureExtractor

+

+>>> vit_extractor = ViTFeatureExtractor()

+>>> print(vit_extractor)

+ViTFeatureExtractor {

+ "do_normalize": true,

+ "do_resize": true,

+ "feature_extractor_type": "ViTFeatureExtractor",

+ "image_mean": [

+ 0.5,

+ 0.5,

+ 0.5

+ ],

+ "image_std": [

+ 0.5,

+ 0.5,

+ 0.5

+ ],

+ "resample": 2,

+ "size": 224

+}

+```

+

+

+

+If you aren't looking for any customization, just use the `from_pretrained` method to load a model's default feature extractor parameters.

+

+

+

+Modify any of the [`ViTFeatureExtractor`] parameters to create your custom feature extractor:

+

+```py

+>>> from transformers import ViTFeatureExtractor

+

+>>> my_vit_extractor = ViTFeatureExtractor(resample="PIL.Image.BOX", do_normalize=False, image_mean=[0.3, 0.3, 0.3])

+>>> print(my_vit_extractor)

+ViTFeatureExtractor {

+ "do_normalize": false,

+ "do_resize": true,

+ "feature_extractor_type": "ViTFeatureExtractor",

+ "image_mean": [

+ 0.3,

+ 0.3,

+ 0.3

+ ],

+ "image_std": [

+ 0.5,

+ 0.5,

+ 0.5

+ ],

+ "resample": "PIL.Image.BOX",

+ "size": 224

+}

+```

+

+For audio inputs, you can create a [`Wav2Vec2FeatureExtractor`] and customize the parameters in a similar way:

+

+```py

+>>> from transformers import Wav2Vec2FeatureExtractor

+

+>>> w2v2_extractor = Wav2Vec2FeatureExtractor()

+>>> print(w2v2_extractor)

+Wav2Vec2FeatureExtractor {

+ "do_normalize": true,

+ "feature_extractor_type": "Wav2Vec2FeatureExtractor",

+ "feature_size": 1,

+ "padding_side": "right",

+ "padding_value": 0.0,

+ "return_attention_mask": false,

+ "sampling_rate": 16000

+}

+```

+

+## Processor

+

+For models that support multimodal tasks, 🤗 Transformers offers a processor class that conveniently wraps a feature extractor and tokenizer into a single object. For example, let's use the [`Wav2Vec2Processor`] for an automatic speech recognition task (ASR). ASR transcribes audio to text, so you will need a feature extractor and a tokenizer.

+

+Create a feature extractor to handle the audio inputs:

+

+```py

+>>> from transformers import Wav2Vec2FeatureExtractor

+

+>>> feature_extractor = Wav2Vec2FeatureExtractor(padding_value=1.0, do_normalize=True)

+```

+

+Create a tokenizer to handle the text inputs:

+

+```py

+>>> from transformers import Wav2Vec2CTCTokenizer

+

+>>> tokenizer = Wav2Vec2CTCTokenizer(vocab_file="my_vocab_file.txt")

+```

+

+Combine the feature extractor and tokenizer in [`Wav2Vec2Processor`]:

+

+```py

+>>> from transformers import Wav2Vec2Processor

+

+>>> processor = Wav2Vec2Processor(feature_extractor=feature_extractor, tokenizer=tokenizer)

+```

+

+With two basic classes - configuration and model - and an additional preprocessing class (tokenizer, feature extractor, or processor), you can create any of the models supported by 🤗 Transformers. Each of these base classes are configurable, allowing you to use the specific attributes you want. You can easily setup a model for training or modify an existing pretrained model to fine-tune.

\ No newline at end of file

diff --git a/docs/source/index.mdx b/docs/source/index.mdx

index 6f97693065c8..f6fc659ed47a 100644

--- a/docs/source/index.mdx

+++ b/docs/source/index.mdx

@@ -70,6 +70,7 @@ conversion utilities for the following models.

1. **[ByT5](model_doc/byt5)** (from Google Research) released with the paper [ByT5: Towards a token-free future with pre-trained byte-to-byte models](https://arxiv.org/abs/2105.13626) by Linting Xue, Aditya Barua, Noah Constant, Rami Al-Rfou, Sharan Narang, Mihir Kale, Adam Roberts, Colin Raffel.

1. **[CamemBERT](model_doc/camembert)** (from Inria/Facebook/Sorbonne) released with the paper [CamemBERT: a Tasty French Language Model](https://arxiv.org/abs/1911.03894) by Louis Martin*, Benjamin Muller*, Pedro Javier Ortiz Suárez*, Yoann Dupont, Laurent Romary, Éric Villemonte de la Clergerie, Djamé Seddah and Benoît Sagot.

1. **[CANINE](model_doc/canine)** (from Google Research) released with the paper [CANINE: Pre-training an Efficient Tokenization-Free Encoder for Language Representation](https://arxiv.org/abs/2103.06874) by Jonathan H. Clark, Dan Garrette, Iulia Turc, John Wieting.

+1. **[ConvNeXT](model_doc/convnext)** (from Facebook AI) released with the paper [A ConvNet for the 2020s](https://arxiv.org/abs/2201.03545) by Zhuang Liu, Hanzi Mao, Chao-Yuan Wu, Christoph Feichtenhofer, Trevor Darrell, Saining Xie.

1. **[CLIP](model_doc/clip)** (from OpenAI) released with the paper [Learning Transferable Visual Models From Natural Language Supervision](https://arxiv.org/abs/2103.00020) by Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, Ilya Sutskever.

1. **[ConvBERT](model_doc/convbert)** (from YituTech) released with the paper [ConvBERT: Improving BERT with Span-based Dynamic Convolution](https://arxiv.org/abs/2008.02496) by Zihang Jiang, Weihao Yu, Daquan Zhou, Yunpeng Chen, Jiashi Feng, Shuicheng Yan.

1. **[CPM](model_doc/cpm)** (from Tsinghua University) released with the paper [CPM: A Large-scale Generative Chinese Pre-trained Language Model](https://arxiv.org/abs/2012.00413) by Zhengyan Zhang, Xu Han, Hao Zhou, Pei Ke, Yuxian Gu, Deming Ye, Yujia Qin, Yusheng Su, Haozhe Ji, Jian Guan, Fanchao Qi, Xiaozhi Wang, Yanan Zheng, Guoyang Zeng, Huanqi Cao, Shengqi Chen, Daixuan Li, Zhenbo Sun, Zhiyuan Liu, Minlie Huang, Wentao Han, Jie Tang, Juanzi Li, Xiaoyan Zhu, Maosong Sun.

@@ -176,6 +177,7 @@ Flax), PyTorch, and/or TensorFlow.

| Canine | ✅ | ❌ | ✅ | ❌ | ❌ |

| CLIP | ✅ | ✅ | ✅ | ✅ | ✅ |

| ConvBERT | ✅ | ✅ | ✅ | ✅ | ❌ |

+| ConvNext | ❌ | ❌ | ✅ | ❌ | ❌ |

| CTRL | ✅ | ❌ | ✅ | ✅ | ❌ |

| DeBERTa | ✅ | ✅ | ✅ | ✅ | ❌ |

| DeBERTa-v2 | ✅ | ❌ | ✅ | ✅ | ❌ |

diff --git a/docs/source/main_classes/deepspeed.mdx b/docs/source/main_classes/deepspeed.mdx

index 3fdf694629ac..a558cf5390e8 100644

--- a/docs/source/main_classes/deepspeed.mdx

+++ b/docs/source/main_classes/deepspeed.mdx

@@ -1805,6 +1805,170 @@ Please note that if you're not using the [`Trainer`] integration, you're complet

[[autodoc]] deepspeed.HfDeepSpeedConfig

- all

+### DeepSpeed ZeRO Inference

+

+Here is an example of how one could do DeepSpeed ZeRO Inference without using [`Trainer`] when one can't fit a model onto a single GPU. The solution includes using additional GPUs or/and offloading GPU memory to CPU memory.

+

+The important nuance to understand here is that the way ZeRO is designed you can process different inputs on different GPUs in parallel.

+

+The example has copious notes and is self-documenting.

+

+Make sure to:

+

+1. disable CPU offload if you have enough GPU memory (since it slows things down)

+2. enable bf16 if you own an Ampere or a newer GPU to make things faster. If you don't have that hardware you may enable fp16 as long as you don't use any model that was pre-trained in bf16 mixed precision (such as most t5 models). These usually overflow in fp16 and you will see garbage as output.

+

+```python

+#!/usr/bin/env python

+

+# This script demonstrates how to use Deepspeed ZeRO in an inference mode when one can't fit a model

+# into a single GPU

+#

+# 1. Use 1 GPU with CPU offload

+# 2. Or use multiple GPUs instead

+#

+# First you need to install deepspeed: pip install deepspeed

+#

+# Here we use a 3B "bigscience/T0_3B" model which needs about 15GB GPU RAM - so 1 largish or 2

+# small GPUs can handle it. or 1 small GPU and a lot of CPU memory.

+#

+# To use a larger model like "bigscience/T0" which needs about 50GB, unless you have an 80GB GPU -

+# you will need 2-4 gpus. And then you can adapt the script to handle more gpus if you want to

+# process multiple inputs at once.

+#

+# The provided deepspeed config also activates CPU memory offloading, so chances are that if you

+# have a lot of available CPU memory and you don't mind a slowdown you should be able to load a

+# model that doesn't normally fit into a single GPU. If you have enough GPU memory the program will

+# run faster if you don't want offload to CPU - so disable that section then.

+#

+# To deploy on 1 gpu:

+#

+# deepspeed --num_gpus 1 t0.py

+# or:

+# python -m torch.distributed.run --nproc_per_node=1 t0.py

+#

+# To deploy on 2 gpus:

+#

+# deepspeed --num_gpus 2 t0.py

+# or:

+# python -m torch.distributed.run --nproc_per_node=2 t0.py

+

+

+from transformers import AutoTokenizer, AutoConfig, AutoModelForSeq2SeqLM

+from transformers.deepspeed import HfDeepSpeedConfig

+import deepspeed

+import os

+import torch

+

+os.environ["TOKENIZERS_PARALLELISM"] = "false" # To avoid warnings about parallelism in tokenizers

+

+# distributed setup

+local_rank = int(os.getenv("LOCAL_RANK", "0"))

+world_size = int(os.getenv("WORLD_SIZE", "1"))

+torch.cuda.set_device(local_rank)

+deepspeed.init_distributed()

+

+model_name = "bigscience/T0_3B"

+

+config = AutoConfig.from_pretrained(model_name)

+model_hidden_size = config.d_model

+

+# batch size has to be divisible by world_size, but can be bigger than world_size

+train_batch_size = 1 * world_size

+

+# ds_config notes

+#

+# - enable bf16 if you use Ampere or higher GPU - this will run in mixed precision and will be

+# faster.

+#

+# - for older GPUs you can enable fp16, but it'll only work for non-bf16 pretrained models - e.g.

+# all official t5 models are bf16-pretrained

+#

+# - set offload_param.device to "none" or completely remove the `offload_param` section if you don't

+# - want CPU offload

+#

+# - if using `offload_param` you can manually finetune stage3_param_persistence_threshold to control

+# - which params should remain on gpus - the larger the value the smaller the offload size

+#

+# For indepth info on Deepspeed config see

+# https://huggingface.co/docs/transformers/master/main_classes/deepspeed

+

+# keeping the same format as json for consistency, except it uses lower case for true/false

+# fmt: off

+ds_config = {

+ "fp16": {

+ "enabled": False

+ },

+ "bf16": {

+ "enabled": False

+ },

+ "zero_optimization": {

+ "stage": 3,

+ "offload_param": {

+ "device": "cpu",

+ "pin_memory": True

+ },

+ "overlap_comm": True,

+ "contiguous_gradients": True,

+ "reduce_bucket_size": model_hidden_size * model_hidden_size,

+ "stage3_prefetch_bucket_size": 0.9 * model_hidden_size * model_hidden_size,

+ "stage3_param_persistence_threshold": 10 * model_hidden_size

+ },

+ "steps_per_print": 2000,

+ "train_batch_size": train_batch_size,

+ "train_micro_batch_size_per_gpu": 1,

+ "wall_clock_breakdown": False

+}

+# fmt: on

+

+# next line instructs transformers to partition the model directly over multiple gpus using

+# deepspeed.zero.Init when model's `from_pretrained` method is called.

+#

+# **it has to be run before loading the model AutoModelForSeq2SeqLM.from_pretrained(model_name)**

+#

+# otherwise the model will first be loaded normally and only partitioned at forward time which is

+# less efficient and when there is little CPU RAM may fail

+dschf = HfDeepSpeedConfig(ds_config) # keep this object alive

+

+# now a model can be loaded.

+model = AutoModelForSeq2SeqLM.from_pretrained(model_name)

+

+# initialise Deepspeed ZeRO and store only the engine object

+ds_engine = deepspeed.initialize(model=model, config_params=ds_config)[0]

+ds_engine.module.eval() # inference

+

+# Deepspeed ZeRO can process unrelated inputs on each GPU. So for 2 gpus you process 2 inputs at once.

+# If you use more GPUs adjust for more.

+# And of course if you have just one input to process you then need to pass the same string to both gpus

+# If you use only one GPU, then you will have only rank 0.

+rank = torch.distributed.get_rank()

+if rank == 0:

+ text_in = "Is this review positive or negative? Review: this is the best cast iron skillet you will ever buy"

+elif rank == 1:

+ text_in = "Is this review positive or negative? Review: this is the worst restaurant ever"

+

+tokenizer = AutoTokenizer.from_pretrained(model_name)

+inputs = tokenizer.encode(text_in, return_tensors="pt").to(device=local_rank)

+with torch.no_grad():

+ outputs = ds_engine.module.generate(inputs, synced_gpus=True)

+text_out = tokenizer.decode(outputs[0], skip_special_tokens=True)

+print(f"rank{rank}:\n in={text_in}\n out={text_out}")

+```

+

+Let's save it as `t0.py` and run it:

+```

+$ deepspeed --num_gpus 2 t0.py

+rank0:

+ in=Is this review positive or negative? Review: this is the best cast iron skillet you will ever buy

+ out=Positive

+rank1:

+ in=Is this review positive or negative? Review: this is the worst restaurant ever

+ out=negative

+```

+

+This was a very basic example and you will want to adapt it to your needs.

+

+

## Main DeepSpeed Resources

- [Project's github](https://github.com/microsoft/deepspeed)

diff --git a/docs/source/model_doc/auto.mdx b/docs/source/model_doc/auto.mdx

index ab7d02d5498e..3588445b09ec 100644

--- a/docs/source/model_doc/auto.mdx

+++ b/docs/source/model_doc/auto.mdx

@@ -150,6 +150,10 @@ Likewise, if your `NewModel` is a subclass of [`PreTrainedModel`], make sure its

[[autodoc]] AutoModelForImageSegmentation

+## AutoModelForSemanticSegmentation

+

+[[autodoc]] AutoModelForSemanticSegmentation

+

## TFAutoModel

[[autodoc]] TFAutoModel

diff --git a/docs/source/model_doc/convnext.mdx b/docs/source/model_doc/convnext.mdx

new file mode 100644

index 000000000000..e3a04d371e64

--- /dev/null

+++ b/docs/source/model_doc/convnext.mdx

@@ -0,0 +1,66 @@

+

+

+# ConvNeXT

+

+## Overview

+

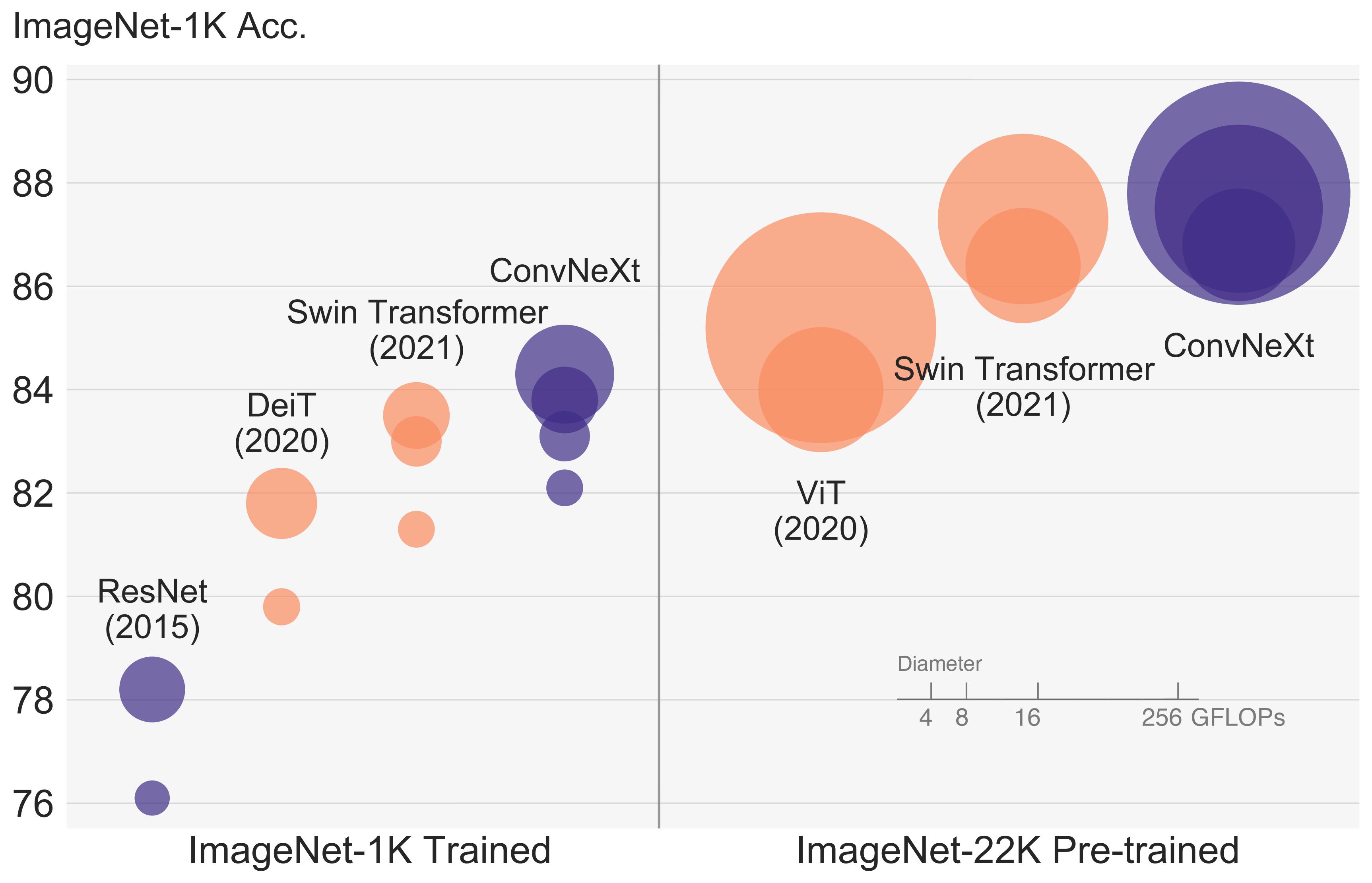

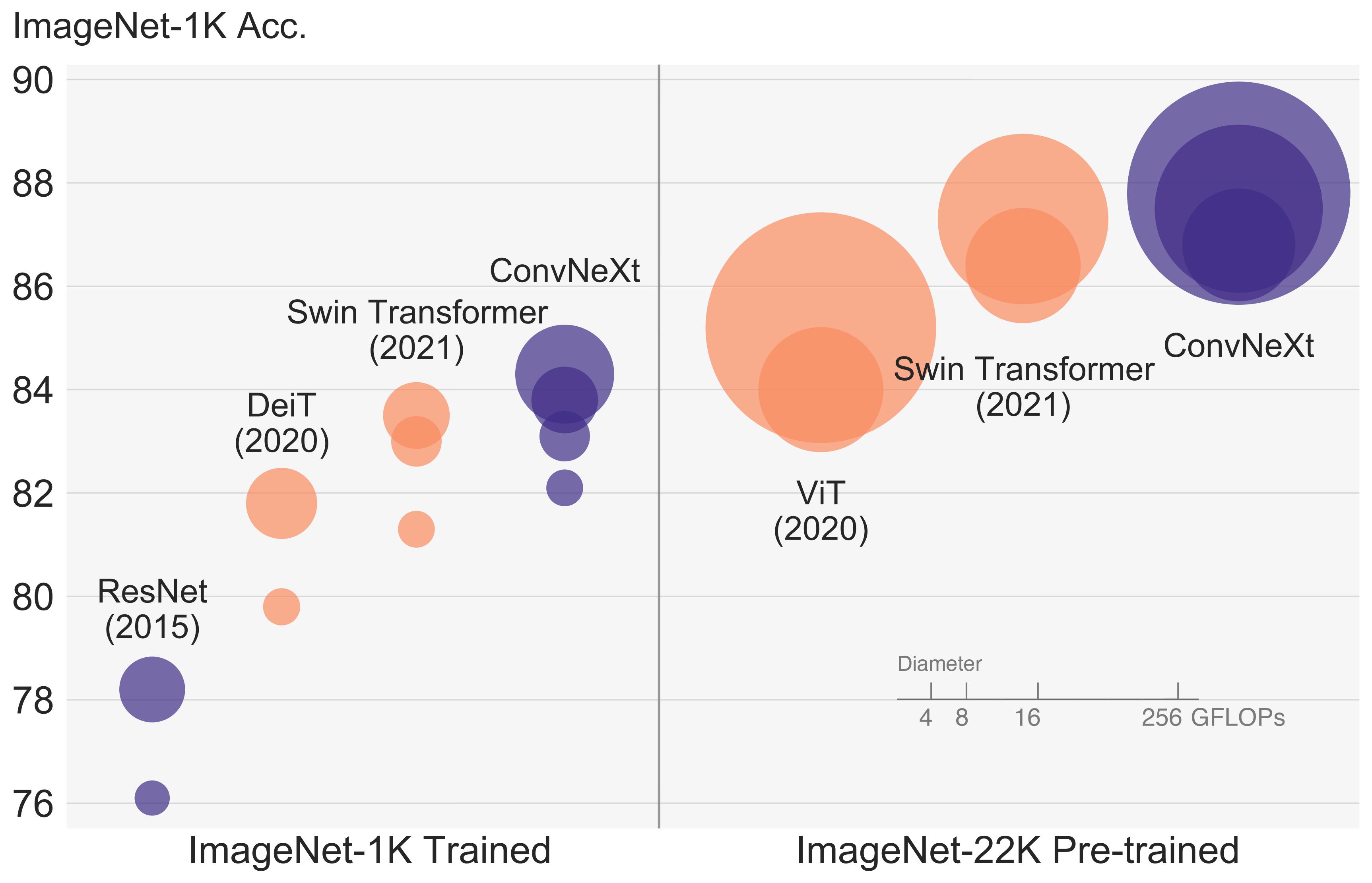

+The ConvNeXT model was proposed in [A ConvNet for the 2020s](https://arxiv.org/abs/2201.03545) by Zhuang Liu, Hanzi Mao, Chao-Yuan Wu, Christoph Feichtenhofer, Trevor Darrell, Saining Xie.

+ConvNeXT is a pure convolutional model (ConvNet), inspired by the design of Vision Transformers, that claims to outperform them.

+

+The abstract from the paper is the following:

+

+*The "Roaring 20s" of visual recognition began with the introduction of Vision Transformers (ViTs), which quickly superseded ConvNets as the state-of-the-art image classification model.

+A vanilla ViT, on the other hand, faces difficulties when applied to general computer vision tasks such as object detection and semantic segmentation. It is the hierarchical Transformers

+(e.g., Swin Transformers) that reintroduced several ConvNet priors, making Transformers practically viable as a generic vision backbone and demonstrating remarkable performance on a wide

+variety of vision tasks. However, the effectiveness of such hybrid approaches is still largely credited to the intrinsic superiority of Transformers, rather than the inherent inductive

+biases of convolutions. In this work, we reexamine the design spaces and test the limits of what a pure ConvNet can achieve. We gradually "modernize" a standard ResNet toward the design

+of a vision Transformer, and discover several key components that contribute to the performance difference along the way. The outcome of this exploration is a family of pure ConvNet models

+dubbed ConvNeXt. Constructed entirely from standard ConvNet modules, ConvNeXts compete favorably with Transformers in terms of accuracy and scalability, achieving 87.8% ImageNet top-1 accuracy

+and outperforming Swin Transformers on COCO detection and ADE20K segmentation, while maintaining the simplicity and efficiency of standard ConvNets.*

+

+Tips:

+

+- See the code examples below each model regarding usage.

+

+ +

+ ConvNeXT architecture. Taken from the original paper.

+

+This model was contributed by [nielsr](https://huggingface.co/nielsr). The original code can be found [here](https://github.com/facebookresearch/ConvNeXt).

+

+## ConvNeXT specific outputs

+

+[[autodoc]] models.convnext.modeling_convnext.ConvNextModelOutput

+

+

+## ConvNextConfig

+

+[[autodoc]] ConvNextConfig

+

+

+## ConvNextFeatureExtractor

+

+[[autodoc]] ConvNextFeatureExtractor

+

+

+## ConvNextModel

+

+[[autodoc]] ConvNextModel

+ - forward

+

+

+## ConvNextForImageClassification

+

+[[autodoc]] ConvNextForImageClassification

+ - forward

\ No newline at end of file

diff --git a/docs/source/parallelism.mdx b/docs/source/parallelism.mdx

index 61af187136b6..c832c740ffdf 100644

--- a/docs/source/parallelism.mdx

+++ b/docs/source/parallelism.mdx

@@ -308,9 +308,14 @@ ZeRO stage 3 is not a good choice either for the same reason - more inter-node c

And since we have ZeRO, the other benefit is ZeRO-Offload. Since this is stage 1 optimizer states can be offloaded to CPU.

Implementations:

-- [Megatron-DeepSpeed](https://github.com/microsoft/Megatron-DeepSpeed)

+- [Megatron-DeepSpeed](https://github.com/microsoft/Megatron-DeepSpeed) and [Megatron-Deepspeed from BigScience](https://github.com/bigscience-workshop/Megatron-DeepSpeed), which is the fork of the former repo.

- [OSLO](https://github.com/tunib-ai/oslo)

+Important papers:

+

+- [Using DeepSpeed and Megatron to Train Megatron-Turing NLG 530B, A Large-Scale Generative Language Model](

+https://arxiv.org/abs/2201.11990)

+

🤗 Transformers status: not yet implemented, since we have no PP and TP.

## FlexFlow

diff --git a/docs/source/serialization.mdx b/docs/source/serialization.mdx

index 83d291f7672e..2be3fc3735ad 100644

--- a/docs/source/serialization.mdx

+++ b/docs/source/serialization.mdx

@@ -43,17 +43,17 @@ and are designed to be easily extendable to other architectures.

Ready-made configurations include the following architectures:

-

+

- ALBERT

- BART

- BERT

- CamemBERT

- DistilBERT

+- ELECTRA

- GPT Neo

- I-BERT

- LayoutLM

-- Longformer

- Marian

- mBART

- OpenAI GPT-2

diff --git a/examples/pytorch/speech-recognition/README.md b/examples/pytorch/speech-recognition/README.md

index 7db7811ba2c5..a462cf338576 100644

--- a/examples/pytorch/speech-recognition/README.md

+++ b/examples/pytorch/speech-recognition/README.md

@@ -127,6 +127,62 @@ python -m torch.distributed.launch \

On 8 V100 GPUs, this script should run in *ca.* 18 minutes and yield a CTC loss of **0.39** and word error rate

of **0.36**.

+

+### Multi GPU CTC with Dataset Streaming

+

+The following command shows how to use [Dataset Streaming mode](https://huggingface.co/docs/datasets/dataset_streaming.html)

+to fine-tune [XLS-R](https://huggingface.co/transformers/master/model_doc/xls_r.html)

+on [Common Voice](https://huggingface.co/datasets/common_voice) using 4 GPUs in half-precision.

+

+Streaming mode imposes several constraints on training:

+1. We need to construct a tokenizer beforehand and define it via `--tokenizer_name_or_path`.

+2. `--num_train_epochs` has to be replaced by `--max_steps`. Similarly, all other epoch-based arguments have to be

+replaced by step-based ones.

+3. Full dataset shuffling on each epoch is not possible, since we don't have the whole dataset available at once.

+However, the `--shuffle_buffer_size` argument controls how many examples we can pre-download before shuffling them.

+

+

+```bash

+**python -m torch.distributed.launch \

+ --nproc_per_node 4 run_speech_recognition_ctc_streaming.py \

+ --dataset_name="common_voice" \

+ --model_name_or_path="facebook/wav2vec2-xls-r-300m" \

+ --tokenizer_name_or_path="anton-l/wav2vec2-tokenizer-turkish" \

+ --dataset_config_name="tr" \

+ --train_split_name="train+validation" \

+ --eval_split_name="test" \

+ --output_dir="wav2vec2-xls-r-common_voice-tr-ft" \

+ --overwrite_output_dir \

+ --max_steps="5000" \

+ --per_device_train_batch_size="8" \

+ --gradient_accumulation_steps="2" \

+ --learning_rate="5e-4" \

+ --warmup_steps="500" \

+ --evaluation_strategy="steps" \

+ --text_column_name="sentence" \

+ --save_steps="500" \

+ --eval_steps="500" \

+ --logging_steps="1" \

+ --layerdrop="0.0" \

+ --eval_metrics wer cer \

+ --save_total_limit="1" \

+ --mask_time_prob="0.3" \

+ --mask_time_length="10" \

+ --mask_feature_prob="0.1" \

+ --mask_feature_length="64" \

+ --freeze_feature_encoder \

+ --chars_to_ignore , ? . ! - \; \: \" “ % ‘ ” � \

+ --max_duration_in_seconds="20" \

+ --shuffle_buffer_size="500" \

+ --fp16 \

+ --push_to_hub \

+ --do_train --do_eval \

+ --gradient_checkpointing**

+```

+

+On 4 V100 GPUs, this script should run in *ca.* 3h 31min and yield a CTC loss of **0.35** and word error rate

+of **0.29**.

+

### Examples CTC

The following tables present a couple of example runs on the most popular speech-recognition datasets.

@@ -175,6 +231,7 @@ they can serve as a baseline to improve upon.

| [Common Voice](https://huggingface.co/datasets/common_voice)| `"tr"` | [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) | 0.35 | - | 1 GPU V100 | 1h20min | [here](https://huggingface.co/patrickvonplaten/wav2vec2-common_voice-tr-demo) | [run.sh](https://huggingface.co/patrickvonplaten/wav2vec2-common_voice-tr-demo/blob/main/run.sh) |

| [Common Voice](https://huggingface.co/datasets/common_voice)| `"tr"` | [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) | 0.31 | - | 8 GPU V100 | 1h05 | [here](https://huggingface.co/patrickvonplaten/wav2vec2-large-xls-r-300m-common_voice-tr-ft) | [run.sh](https://huggingface.co/patrickvonplaten/wav2vec2-large-xls-r-300m-common_voice-tr-ft/blob/main/run.sh) |

| [Common Voice](https://huggingface.co/datasets/common_voice)| `"tr"` | [facebook/wav2vec2-xls-r-1b](https://huggingface.co/facebook/wav2vec2-xls-r-1b) | 0.21 | - | 2 GPU Titan 24 GB RAM | 15h10 | [here](https://huggingface.co/patrickvonplaten/wav2vec2-xls-r-1b-common_voice-tr-ft) | [run.sh](https://huggingface.co/patrickvonplaten/wav2vec2-large-xls-r-1b-common_voice-tr-ft/blob/main/run.sh) |

+| [Common Voice](https://huggingface.co/datasets/common_voice)| `"tr"` in streaming mode | [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) | 0.29 | - | 4 GPU V100 | 3h31 | [here](https://huggingface.co/anton-l/wav2vec2-xls-r-common_voice-tr-ft-stream) | [run.sh](https://huggingface.co/anton-l/wav2vec2-xls-r-common_voice-tr-ft-stream/blob/main/run.sh) |

#### Multilingual Librispeech CTC

diff --git a/examples/pytorch/speech-recognition/requirements.txt b/examples/pytorch/speech-recognition/requirements.txt

index b8c475e40a1e..219959a4b267 100644

--- a/examples/pytorch/speech-recognition/requirements.txt

+++ b/examples/pytorch/speech-recognition/requirements.txt

@@ -1,4 +1,4 @@

-datasets >= 1.13.3

+datasets >= 1.18.0

torch >= 1.5

torchaudio

librosa

diff --git a/examples/pytorch/speech-recognition/run_speech_recognition_ctc.py b/examples/pytorch/speech-recognition/run_speech_recognition_ctc.py

index eea78f58ae7c..b09b0b33f270 100755

--- a/examples/pytorch/speech-recognition/run_speech_recognition_ctc.py

+++ b/examples/pytorch/speech-recognition/run_speech_recognition_ctc.py

@@ -51,7 +51,7 @@

# Will error if the minimal version of Transformers is not installed. Remove at your own risks.

check_min_version("4.17.0.dev0")

-require_version("datasets>=1.13.3", "To fix: pip install -r examples/pytorch/text-classification/requirements.txt")

+require_version("datasets>=1.18.0", "To fix: pip install -r examples/pytorch/speech-recognition/requirements.txt")

logger = logging.getLogger(__name__)

@@ -146,7 +146,8 @@ class DataTrainingArguments:

train_split_name: str = field(

default="train+validation",

metadata={

- "help": "The name of the training data set split to use (via the datasets library). Defaults to 'train+validation'"

+ "help": "The name of the training data set split to use (via the datasets library). Defaults to "

+ "'train+validation'"

},

)

eval_split_name: str = field(

diff --git a/examples/pytorch/speech-recognition/run_speech_recognition_seq2seq.py b/examples/pytorch/speech-recognition/run_speech_recognition_seq2seq.py

index 40bc9aeb9a56..ee5530caebd1 100755

--- a/examples/pytorch/speech-recognition/run_speech_recognition_seq2seq.py

+++ b/examples/pytorch/speech-recognition/run_speech_recognition_seq2seq.py

@@ -49,7 +49,7 @@

# Will error if the minimal version of Transformers is not installed. Remove at your own risks.

check_min_version("4.17.0.dev0")

-require_version("datasets>=1.8.0", "To fix: pip install -r examples/pytorch/summarization/requirements.txt")

+require_version("datasets>=1.18.0", "To fix: pip install -r examples/pytorch/speech-recognition/requirements.txt")

logger = logging.getLogger(__name__)

diff --git a/examples/research_projects/robust-speech-event/run_speech_recognition_ctc_streaming.py b/examples/research_projects/robust-speech-event/run_speech_recognition_ctc_streaming.py

new file mode 100644

index 000000000000..9e69178088f6

--- /dev/null

+++ b/examples/research_projects/robust-speech-event/run_speech_recognition_ctc_streaming.py

@@ -0,0 +1,659 @@

+#!/usr/bin/env python

+# coding=utf-8

+# Copyright 2022 The HuggingFace Inc. team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+

+""" Fine-tuning a 🤗 Transformers CTC model for automatic speech recognition in streaming mode"""

+

+import logging

+import os

+import re

+import sys

+import warnings

+from dataclasses import dataclass, field

+from typing import Dict, List, Optional, Union

+

+import datasets

+import numpy as np

+import torch

+from datasets import IterableDatasetDict, interleave_datasets, load_dataset, load_metric

+from torch.utils.data import IterableDataset

+

+import transformers

+from transformers import (

+ AutoConfig,

+ AutoFeatureExtractor,

+ AutoModelForCTC,

+ AutoProcessor,

+ AutoTokenizer,

+ HfArgumentParser,

+ Trainer,

+ TrainerCallback,

+ TrainingArguments,

+ Wav2Vec2Processor,

+ set_seed,

+)

+from transformers.trainer_pt_utils import IterableDatasetShard

+from transformers.trainer_utils import get_last_checkpoint, is_main_process

+from transformers.utils import check_min_version

+from transformers.utils.versions import require_version

+

+

+# Will error if the minimal version of Transformers is not installed. Remove at your own risk.

+check_min_version("4.17.0.dev0")

+

+require_version("datasets>=1.18.2", "To fix: pip install 'datasets>=1.18.2'")

+

+

+logger = logging.getLogger(__name__)

+

+

+def list_field(default=None, metadata=None):

+ return field(default_factory=lambda: default, metadata=metadata)

+

+

+@dataclass

+class ModelArguments:

+ """

+ Arguments pertaining to which model/config/tokenizer we are going to fine-tune from.

+ """

+

+ model_name_or_path: str = field(

+ metadata={"help": "Path to pretrained model or model identifier from huggingface.co/models"}

+ )

+ tokenizer_name_or_path: Optional[str] = field(

+ default=None,

+ metadata={"help": "Path to pretrained tokenizer or tokenizer identifier from huggingface.co/models"},

+ )

+ cache_dir: Optional[str] = field(

+ default=None,

+ metadata={"help": "Where do you want to store the pretrained models downloaded from huggingface.co"},

+ )

+ freeze_feature_encoder: bool = field(

+ default=True, metadata={"help": "Whether to freeze the feature encoder layers of the model."}

+ )

+ attention_dropout: float = field(

+ default=0.0, metadata={"help": "The dropout ratio for the attention probabilities."}

+ )

+ activation_dropout: float = field(

+ default=0.0, metadata={"help": "The dropout ratio for activations inside the fully connected layer."}

+ )

+ feat_proj_dropout: float = field(default=0.0, metadata={"help": "The dropout ratio for the projected features."})

+ hidden_dropout: float = field(

+ default=0.0,

+ metadata={

+ "help": "The dropout probability for all fully connected layers in the embeddings, encoder, and pooler."

+ },

+ )

+ final_dropout: float = field(

+ default=0.0,

+ metadata={"help": "The dropout probability for the final projection layer."},

+ )

+ mask_time_prob: float = field(

+ default=0.05,

+ metadata={

+ "help": "Probability of each feature vector along the time axis to be chosen as the start of the vector"

+ "span to be masked. Approximately ``mask_time_prob * sequence_length // mask_time_length`` feature"

+ "vectors will be masked along the time axis."

+ },

+ )

+ mask_time_length: int = field(

+ default=10,

+ metadata={"help": "Length of vector span to mask along the time axis."},

+ )

+ mask_feature_prob: float = field(

+ default=0.0,

+ metadata={

+ "help": "Probability of each feature vector along the feature axis to be chosen as the start of the vector"

+ "span to be masked. Approximately ``mask_feature_prob * sequence_length // mask_feature_length`` feature bins will be masked along the time axis."

+ },

+ )

+ mask_feature_length: int = field(

+ default=10,

+ metadata={"help": "Length of vector span to mask along the feature axis."},

+ )

+ layerdrop: float = field(default=0.0, metadata={"help": "The LayerDrop probability."})

+ ctc_loss_reduction: Optional[str] = field(

+ default="mean", metadata={"help": "The way the ctc loss should be reduced. Should be one of 'mean' or 'sum'."}

+ )

+

+

+@dataclass

+class DataTrainingArguments:

+ """

+ Arguments pertaining to what data we are going to input our model for training and eval.

+

+ Using `HfArgumentParser` we can turn this class

+ into argparse arguments to be able to specify them on

+ the command line.

+ """

+

+ dataset_name: str = field(

+ metadata={"help": "The configuration name of the dataset to use (via the datasets library)."}

+ )

+ dataset_config_name: str = field(

+ default=None, metadata={"help": "The configuration name of the dataset to use (via the datasets library)."}

+ )

+ train_split_name: str = field(

+ default="train+validation",

+ metadata={

+ "help": "The name of the training data set split to use (via the datasets library). Defaults to "

+ "'train+validation'"

+ },

+ )

+ eval_split_name: str = field(

+ default="test",

+ metadata={

+ "help": "The name of the training data set split to use (via the datasets library). Defaults to 'test'"

+ },

+ )

+ audio_column_name: str = field(

+ default="audio",

+ metadata={"help": "The name of the dataset column containing the audio data. Defaults to 'audio'"},

+ )

+ text_column_name: str = field(

+ default="text",

+ metadata={"help": "The name of the dataset column containing the text data. Defaults to 'text'"},

+ )

+ overwrite_cache: bool = field(

+ default=False, metadata={"help": "Overwrite the cached preprocessed datasets or not."}

+ )

+ preprocessing_num_workers: Optional[int] = field(

+ default=None,

+ metadata={"help": "The number of processes to use for the preprocessing."},

+ )

+ max_train_samples: Optional[int] = field(

+ default=None,

+ metadata={

+ "help": "For debugging purposes or quicker training, truncate the number of training examples to this "

+ "value if set."

+ },

+ )

+ max_eval_samples: Optional[int] = field(

+ default=None,

+ metadata={

+ "help": "For debugging purposes or quicker training, truncate the number of validation examples to this "

+ "value if set."

+ },

+ )

+ shuffle_buffer_size: Optional[int] = field(

+ default=500,

+ metadata={

+ "help": "The number of streamed examples to download before shuffling them. The large the buffer, "

+ "the closer it is to real offline shuffling."

+ },

+ )

+ chars_to_ignore: Optional[List[str]] = list_field(

+ default=None,

+ metadata={"help": "A list of characters to remove from the transcripts."},

+ )

+ eval_metrics: List[str] = list_field(

+ default=["wer"],

+ metadata={"help": "A list of metrics the model should be evaluated on. E.g. `'wer cer'`"},

+ )

+ max_duration_in_seconds: float = field(

+ default=20.0,

+ metadata={"help": "Filter audio files that are longer than `max_duration_in_seconds` seconds."},

+ )

+ preprocessing_only: bool = field(

+ default=False,

+ metadata={

+ "help": "Whether to only do data preprocessing and skip training. "

+ "This is especially useful when data preprocessing errors out in distributed training due to timeout. "

+ "In this case, one should run the preprocessing in a non-distributed setup with `preprocessing_only=True` "

+ "so that the cached datasets can consequently be loaded in distributed training"

+ },

+ )

+ use_auth_token: bool = field(

+ default=False,

+ metadata={

+ "help": "If :obj:`True`, will use the token generated when running"

+ ":obj:`transformers-cli login` as HTTP bearer authorization for remote files."

+ },

+ )

+ phoneme_language: Optional[str] = field(

+ default=None,

+ metadata={

+ "help": "The target language that should be used be"

+ " passed to the tokenizer for tokenization. Note that"

+ " this is only relevant if the model classifies the"

+ " input audio to a sequence of phoneme sequences."

+ },

+ )

+

+

+@dataclass

+class DataCollatorCTCWithPadding:

+ """

+ Data collator that will dynamically pad the inputs received.

+ Args:

+ processor (:class:`~transformers.AutoProcessor`)

+ The processor used for proccessing the data.

+ padding (:obj:`bool`, :obj:`str` or :class:`~transformers.tokenization_utils_base.PaddingStrategy`, `optional`, defaults to :obj:`True`):

+ Select a strategy to pad the returned sequences (according to the model's padding side and padding index)

+ among:

+ * :obj:`True` or :obj:`'longest'`: Pad to the longest sequence in the batch (or no padding if only a single

+ sequence if provided).

+ * :obj:`'max_length'`: Pad to a maximum length specified with the argument :obj:`max_length` or to the

+ maximum acceptable input length for the model if that argument is not provided.

+ * :obj:`False` or :obj:`'do_not_pad'` (default): No padding (i.e., can output a batch with sequences of

+ different lengths).

+ max_length (:obj:`int`, `optional`):

+ Maximum length of the ``input_values`` of the returned list and optionally padding length (see above).

+ max_length_labels (:obj:`int`, `optional`):

+ Maximum length of the ``labels`` returned list and optionally padding length (see above).

+ pad_to_multiple_of (:obj:`int`, `optional`):

+ If set will pad the sequence to a multiple of the provided value.

+ This is especially useful to enable the use of Tensor Cores on NVIDIA hardware with compute capability >=

+ 7.5 (Volta).

+ """

+

+ processor: AutoProcessor

+ padding: Union[bool, str] = "longest"

+ max_length: Optional[int] = None

+ pad_to_multiple_of: Optional[int] = None

+ pad_to_multiple_of_labels: Optional[int] = None

+

+ def __call__(self, features: List[Dict[str, Union[List[int], torch.Tensor]]]) -> Dict[str, torch.Tensor]:

+ # split inputs and labels since they have to be of different lenghts and need

+ # different padding methods

+ input_features = []

+ label_features = []

+ for feature in features:

+ if self.max_length and feature["input_values"].shape[-1] > self.max_length:

+ continue

+ input_features.append({"input_values": feature["input_values"]})

+ label_features.append({"input_ids": feature["labels"]})

+

+ batch = self.processor.pad(

+ input_features,

+ padding=self.padding,

+ pad_to_multiple_of=self.pad_to_multiple_of,

+ return_tensors="pt",

+ )

+

+ with self.processor.as_target_processor():

+ labels_batch = self.processor.pad(

+ label_features,

+ padding=self.padding,

+ pad_to_multiple_of=self.pad_to_multiple_of_labels,

+ return_tensors="pt",

+ )

+

+ # replace padding with -100 to ignore loss correctly

+ labels = labels_batch["input_ids"].masked_fill(labels_batch.attention_mask.ne(1), -100)

+

+ batch["labels"] = labels

+

+ return batch

+

+

+def main():

+ # See all possible arguments in src/transformers/training_args.py

+ # or by passing the --help flag to this script.

+ # We now keep distinct sets of args, for a cleaner separation of concerns.

+

+ parser = HfArgumentParser((ModelArguments, DataTrainingArguments, TrainingArguments))

+ if len(sys.argv) == 2 and sys.argv[1].endswith(".json"):

+ # If we pass only one argument to the script and it's the path to a json file,

+ # let's parse it to get our arguments.

+ model_args, data_args, training_args = parser.parse_json_file(json_file=os.path.abspath(sys.argv[1]))

+ else:

+ model_args, data_args, training_args = parser.parse_args_into_dataclasses()

+

+ # Detecting last checkpoint.

+ last_checkpoint = None

+ if os.path.isdir(training_args.output_dir) and training_args.do_train and not training_args.overwrite_output_dir:

+ last_checkpoint = get_last_checkpoint(training_args.output_dir)

+ if last_checkpoint is None and len(os.listdir(training_args.output_dir)) > 0:

+ raise ValueError(

+ f"Output directory ({training_args.output_dir}) already exists and is not empty. "

+ "Use --overwrite_output_dir to overcome."

+ )

+ elif last_checkpoint is not None:

+ logger.info(

+ f"Checkpoint detected, resuming training at {last_checkpoint}. To avoid this behavior, change "

+ "the `--output_dir` or add `--overwrite_output_dir` to train from scratch."

+ )

+

+ # Setup logging

+ logging.basicConfig(

+ format="%(asctime)s - %(levelname)s - %(name)s - %(message)s",

+ datefmt="%m/%d/%Y %H:%M:%S",

+ handlers=[logging.StreamHandler(sys.stdout)],

+ )

+ logger.setLevel(logging.INFO if is_main_process(training_args.local_rank) else logging.WARN)

+

+ # Log on each process the small summary:

+ logger.warning(

+ f"Process rank: {training_args.local_rank}, device: {training_args.device}, n_gpu: {training_args.n_gpu}"

+ f"distributed training: {bool(training_args.local_rank != -1)}, 16-bits training: {training_args.fp16}"

+ )

+ # Set the verbosity to info of the Transformers logger (on main process only):

+ if is_main_process(training_args.local_rank):

+ transformers.utils.logging.set_verbosity_info()

+ logger.info("Training/evaluation parameters %s", training_args)

+

+ # Set seed before initializing model.

+ set_seed(training_args.seed)

+

+ # 1. First, let's load the dataset

+ raw_datasets = IterableDatasetDict()

+ raw_column_names = {}

+

+ def load_streaming_dataset(split, sampling_rate, **kwargs):

+ if "+" in split:

+ dataset_splits = [load_dataset(split=split_name, **kwargs) for split_name in split.split("+")]

+ # `features` and `cast_column` won't be available after interleaving, so we'll use them here

+ features = dataset_splits[0].features

+ # make sure that the dataset decodes audio with a correct sampling rate

+ dataset_splits = [

+ dataset.cast_column(data_args.audio_column_name, datasets.features.Audio(sampling_rate=sampling_rate))

+ for dataset in dataset_splits

+ ]

+

+ interleaved_dataset = interleave_datasets(dataset_splits)

+ return interleaved_dataset, features

+ else:

+ dataset = load_dataset(split=split, **kwargs)

+ features = dataset.features

+ # make sure that the dataset decodes audio with a correct sampling rate

+ dataset = dataset.cast_column(

+ data_args.audio_column_name, datasets.features.Audio(sampling_rate=sampling_rate)

+ )

+ return dataset, features

+

+ # `datasets` takes care of automatically loading and resampling the audio,

+ # so we just need to set the correct target sampling rate and normalize the input

+ # via the `feature_extractor`

+ feature_extractor = AutoFeatureExtractor.from_pretrained(

+ model_args.model_name_or_path, cache_dir=model_args.cache_dir, use_auth_token=data_args.use_auth_token

+ )

+

+ if training_args.do_train:

+ raw_datasets["train"], train_features = load_streaming_dataset(

+ path=data_args.dataset_name,

+ name=data_args.dataset_config_name,

+ split=data_args.train_split_name,

+ use_auth_token=data_args.use_auth_token,

+ streaming=True,

+ sampling_rate=feature_extractor.sampling_rate,

+ )

+ raw_column_names["train"] = list(train_features.keys())

+

+ if data_args.audio_column_name not in raw_column_names["train"]:

+ raise ValueError(

+ f"--audio_column_name '{data_args.audio_column_name}' not found in dataset '{data_args.dataset_name}'. "

+ "Make sure to set `--audio_column_name` to the correct audio column - one of "

+ f"{', '.join(raw_column_names['train'])}."

+ )

+

+ if data_args.text_column_name not in raw_column_names["train"]:

+ raise ValueError(

+ f"--text_column_name {data_args.text_column_name} not found in dataset '{data_args.dataset_name}'. "

+ "Make sure to set `--text_column_name` to the correct text column - one of "

+ f"{', '.join(raw_column_names['train'])}."

+ )

+

+ if data_args.max_train_samples is not None:

+ raw_datasets["train"] = raw_datasets["train"].take(range(data_args.max_train_samples))

+

+ if training_args.do_eval:

+ raw_datasets["eval"], eval_features = load_streaming_dataset(

+ path=data_args.dataset_name,

+ name=data_args.dataset_config_name,

+ split=data_args.eval_split_name,

+ use_auth_token=data_args.use_auth_token,

+ streaming=True,

+ sampling_rate=feature_extractor.sampling_rate,

+ )

+ raw_column_names["eval"] = list(eval_features.keys())

+

+ if data_args.max_eval_samples is not None:

+ raw_datasets["eval"] = raw_datasets["eval"].take(range(data_args.max_eval_samples))

+

+ # 2. We remove some special characters from the datasets

+ # that make training complicated and do not help in transcribing the speech

+ # E.g. characters, such as `,` and `.` do not really have an acoustic characteristic

+ # that could be easily picked up by the model

+ chars_to_ignore_regex = (

+ f'[{"".join(data_args.chars_to_ignore)}]' if data_args.chars_to_ignore is not None else None

+ )

+ text_column_name = data_args.text_column_name

+

+ def remove_special_characters(batch):

+ if chars_to_ignore_regex is not None:

+ batch["target_text"] = re.sub(chars_to_ignore_regex, "", batch[text_column_name]).lower() + " "

+ else:

+ batch["target_text"] = batch[text_column_name].lower() + " "

+ return batch

+

+ with training_args.main_process_first(desc="dataset map special characters removal"):

+ for split, dataset in raw_datasets.items():

+ raw_datasets[split] = dataset.map(

+ remove_special_characters,

+ ).remove_columns([text_column_name])

+

+ # 3. Next, let's load the config as we might need it to create

+ # the tokenizer

+ config = AutoConfig.from_pretrained(

+ model_args.model_name_or_path, cache_dir=model_args.cache_dir, use_auth_token=data_args.use_auth_token

+ )

+

+ # 4. Now we can instantiate the tokenizer and model

+ # Note for distributed training, the .from_pretrained methods guarantee that only

+ # one local process can concurrently download model & vocab.

+

+ tokenizer_name_or_path = model_args.tokenizer_name_or_path

+ if tokenizer_name_or_path is None:

+ raise ValueError(

+ "Tokenizer has to be created before training in streaming mode. Please specify --tokenizer_name_or_path"

+ )

+ # load feature_extractor and tokenizer

+ tokenizer = AutoTokenizer.from_pretrained(

+ tokenizer_name_or_path,

+ config=config,

+ use_auth_token=data_args.use_auth_token,

+ )

+

+ # adapt config

+ config.update(

+ {

+ "feat_proj_dropout": model_args.feat_proj_dropout,

+ "attention_dropout": model_args.attention_dropout,

+ "hidden_dropout": model_args.hidden_dropout,

+ "final_dropout": model_args.final_dropout,

+ "mask_time_prob": model_args.mask_time_prob,

+ "mask_time_length": model_args.mask_time_length,

+ "mask_feature_prob": model_args.mask_feature_prob,

+ "mask_feature_length": model_args.mask_feature_length,

+ "gradient_checkpointing": training_args.gradient_checkpointing,

+ "layerdrop": model_args.layerdrop,

+ "ctc_loss_reduction": model_args.ctc_loss_reduction,

+ "pad_token_id": tokenizer.pad_token_id,

+ "vocab_size": len(tokenizer),

+ "activation_dropout": model_args.activation_dropout,

+ }

+ )

+

+ # create model

+ model = AutoModelForCTC.from_pretrained(

+ model_args.model_name_or_path,

+ cache_dir=model_args.cache_dir,

+ config=config,

+ use_auth_token=data_args.use_auth_token,

+ )

+

+ # freeze encoder

+ if model_args.freeze_feature_encoder:

+ model.freeze_feature_encoder()

+

+ # 5. Now we preprocess the datasets including loading the audio, resampling and normalization

+ audio_column_name = data_args.audio_column_name

+

+ # `phoneme_language` is only relevant if the model is fine-tuned on phoneme classification

+ phoneme_language = data_args.phoneme_language

+

+ # Preprocessing the datasets.

+ # We need to read the audio files as arrays and tokenize the targets.

+ def prepare_dataset(batch):

+ # load audio

+ sample = batch[audio_column_name]

+

+ inputs = feature_extractor(sample["array"], sampling_rate=sample["sampling_rate"])

+ batch["input_values"] = inputs.input_values[0]

+ batch["input_length"] = len(batch["input_values"])

+

+ # encode targets

+ additional_kwargs = {}

+ if phoneme_language is not None:

+ additional_kwargs["phonemizer_lang"] = phoneme_language

+

+ batch["labels"] = tokenizer(batch["target_text"], **additional_kwargs).input_ids

+ return batch

+

+ vectorized_datasets = IterableDatasetDict()

+ with training_args.main_process_first(desc="dataset map preprocessing"):

+ for split, dataset in raw_datasets.items():

+ vectorized_datasets[split] = (

+ dataset.map(prepare_dataset)

+ .remove_columns(raw_column_names[split] + ["target_text"])

+ .with_format("torch")

+ )

+ if split == "train":

+ vectorized_datasets[split] = vectorized_datasets[split].shuffle(

+ buffer_size=data_args.shuffle_buffer_size,

+ seed=training_args.seed,

+ )

+

+ # 6. Next, we can prepare the training.

+ # Let's use word error rate (WER) as our evaluation metric,

+ # instantiate a data collator and the trainer

+

+ # Define evaluation metrics during training, *i.e.* word error rate, character error rate

+ eval_metrics = {metric: load_metric(metric) for metric in data_args.eval_metrics}

+

+ def compute_metrics(pred):

+ pred_logits = pred.predictions

+ pred_ids = np.argmax(pred_logits, axis=-1)

+

+ pred.label_ids[pred.label_ids == -100] = tokenizer.pad_token_id

+

+ pred_str = tokenizer.batch_decode(pred_ids)

+ # we do not want to group tokens when computing the metrics

+ label_str = tokenizer.batch_decode(pred.label_ids, group_tokens=False)

+

+ metrics = {k: v.compute(predictions=pred_str, references=label_str) for k, v in eval_metrics.items()}

+

+ return metrics

+

+ # Now save everything to be able to create a single processor later

+ if is_main_process(training_args.local_rank):

+ # save feature extractor, tokenizer and config

+ feature_extractor.save_pretrained(training_args.output_dir)

+ tokenizer.save_pretrained(training_args.output_dir)

+ config.save_pretrained(training_args.output_dir)

+

+ try:

+ processor = AutoProcessor.from_pretrained(training_args.output_dir)

+ except (OSError, KeyError):

+ warnings.warn(

+ "Loading a processor from a feature extractor config that does not"

+ " include a `processor_class` attribute is deprecated and will be removed in v5. Please add the following "

+ " attribute to your `preprocessor_config.json` file to suppress this warning: "

+ " `'processor_class': 'Wav2Vec2Processor'`",

+ FutureWarning,

+ )

+ processor = Wav2Vec2Processor.from_pretrained(training_args.output_dir)

+

+ # Instantiate custom data collator

+ max_input_length = data_args.max_duration_in_seconds * feature_extractor.sampling_rate

+ data_collator = DataCollatorCTCWithPadding(processor=processor, max_length=max_input_length)

+

+ # trainer callback to reinitialize and reshuffle the streamable datasets at the beginning of each epoch

+ class ShuffleCallback(TrainerCallback):

+ def on_epoch_begin(self, args, state, control, train_dataloader, **kwargs):

+ if isinstance(train_dataloader.dataset, IterableDatasetShard):

+ pass # set_epoch() is handled by the Trainer

+ elif isinstance(train_dataloader.dataset, IterableDataset):

+ train_dataloader.dataset.set_epoch(train_dataloader.dataset._epoch + 1)

+

+ # Initialize Trainer

+ trainer = Trainer(

+ model=model,

+ data_collator=data_collator,

+ args=training_args,

+ compute_metrics=compute_metrics,

+ train_dataset=vectorized_datasets["train"] if training_args.do_train else None,

+ eval_dataset=vectorized_datasets["eval"] if training_args.do_eval else None,

+ tokenizer=processor,

+ callbacks=[ShuffleCallback()],

+ )

+

+ # 7. Finally, we can start training

+

+ # Training

+ if training_args.do_train:

+

+ # use last checkpoint if exist

+ if last_checkpoint is not None:

+ checkpoint = last_checkpoint

+ elif os.path.isdir(model_args.model_name_or_path):

+ checkpoint = model_args.model_name_or_path

+ else:

+ checkpoint = None

+

+ train_result = trainer.train(resume_from_checkpoint=checkpoint)

+ trainer.save_model()

+

+ metrics = train_result.metrics

+ if data_args.max_train_samples:

+ metrics["train_samples"] = data_args.max_train_samples

+

+ trainer.log_metrics("train", metrics)

+ trainer.save_metrics("train", metrics)

+ trainer.save_state()

+

+ # Evaluation

+ results = {}

+ if training_args.do_eval:

+ logger.info("*** Evaluate ***")

+ metrics = trainer.evaluate()

+ if data_args.max_eval_samples:

+ metrics["eval_samples"] = data_args.max_eval_samples

+

+ trainer.log_metrics("eval", metrics)

+ trainer.save_metrics("eval", metrics)

+

+ # Write model card and (optionally) push to hub

+ config_name = data_args.dataset_config_name if data_args.dataset_config_name is not None else "na"

+ kwargs = {

+ "finetuned_from": model_args.model_name_or_path,

+ "tasks": "speech-recognition",

+ "tags": ["automatic-speech-recognition", data_args.dataset_name],

+ "dataset_args": f"Config: {config_name}, Training split: {data_args.train_split_name}, Eval split: {data_args.eval_split_name}",

+ "dataset": f"{data_args.dataset_name.upper()} - {config_name.upper()}",

+ }