diff --git a/README.md b/README.md

index c67a2aa8e..5fe1a8002 100644

--- a/README.md

+++ b/README.md

@@ -1,4 +1,4 @@

-

+

A Toolkit for Evaluating Large Vision-Language Models.

-

**VLMEvalKit** (the python package name is **vlmeval**) is an **open-source evaluation toolkit** for **large vision-language models (LVLMs)**. It enables **one-command evaluation** of LVLMs on various benchmarks, without the heavy workload of preparing data across multiple repositories. In VLMEvalKit, we adopt **generation-based evaluation** for all LVLMs (answers are obtained via the `generate` / `chat` interface), and provide evaluation results obtained with both **exact matching** and **LLM (ChatGPT)-based answer extraction**.

## 🆕 News

-- **[2024-01-14]** We have supported [**LLaVABench (in-the-wild)**](https://huggingface.co/datasets/liuhaotian/llava-bench-in-the-wild).

+- **[2024-01-21]** We have updated results for [**LLaVABench (in-the-wild)**](/results/LLaVABench.md) and [**AI2D**](/results/AI2D.md).

- **[2024-01-14]** We have supported [**AI2D**](https://allenai.org/data/diagrams) and provided the [**script**](/scripts/AI2D_preproc.ipynb) for data pre-processing. 🔥🔥🔥

- **[2024-01-13]** We have supported [**EMU2 / EMU2-Chat**](https://github.com/baaivision/Emu) and [**DocVQA**](https://www.docvqa.org). 🔥🔥🔥

- **[2024-01-11]** We have supported [**Monkey**](https://github.com/Yuliang-Liu/Monkey). 🔥🔥🔥

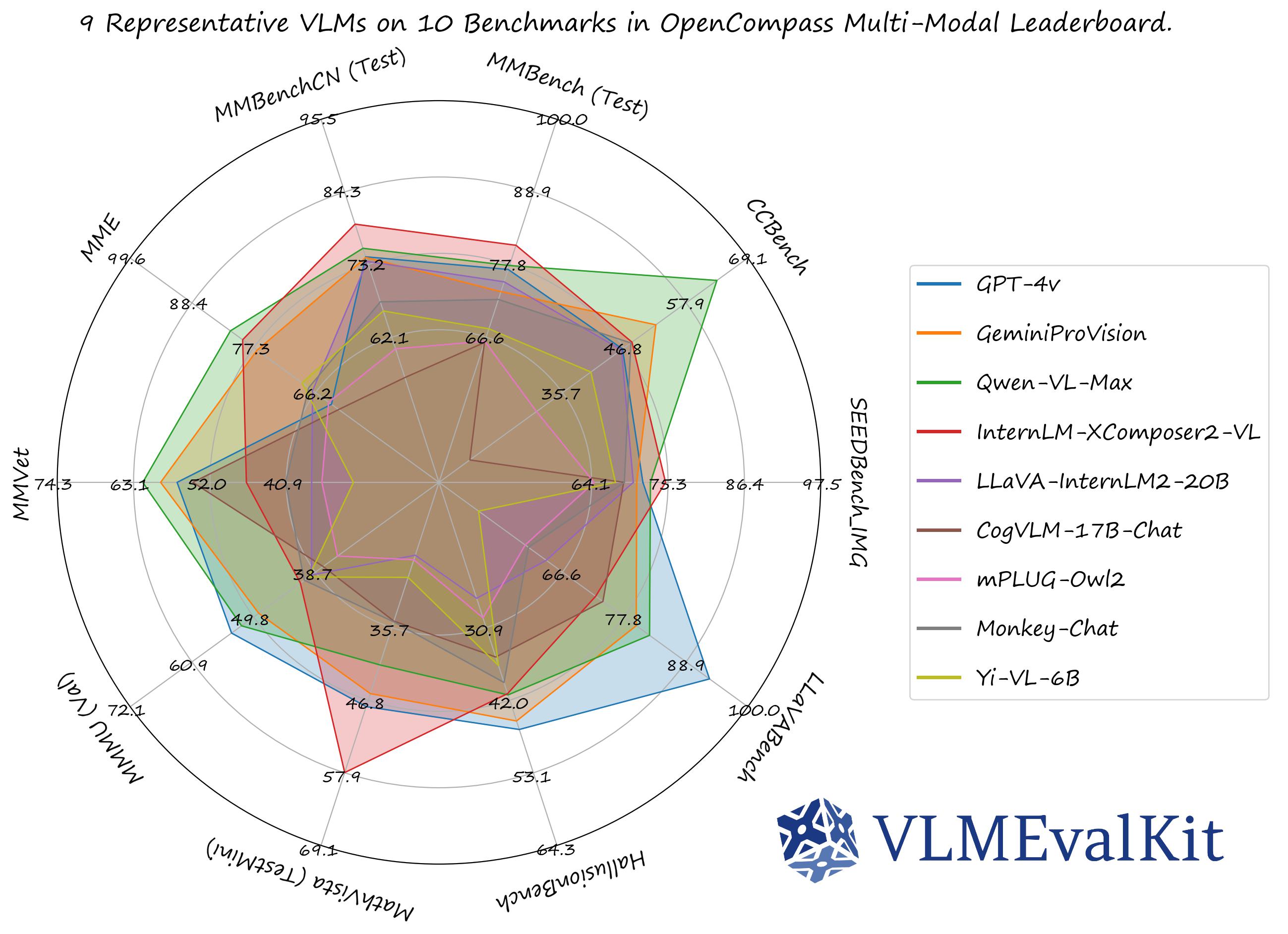

-- **[2024-01-09]** The performance numbers on our official multi-modal leaderboards can be downloaded in json files: [**MMBench Leaderboard**](http://opencompass.openxlab.space/utils/MMBench.json), [**OpenCompass Multi-Modal Leaderboard**](http://opencompass.openxlab.space/utils/MMLB.json). We also add a [notebook](scripts/visualize.ipynb) to visualize these results.🔥🔥🔥

+- **[2024-01-09]** The performance numbers on our official multi-modal leaderboards can be downloaded as JSON files: [**MMBench Leaderboard**](http://opencompass.openxlab.space/utils/MMBench.json), [**OpenCompass Multi-Modal Leaderboard**](http://opencompass.openxlab.space/utils/MMLB.json). We also added a [**notebook**](scripts/visualize.ipynb) to visualize these results. 🔥🔥🔥

- **[2024-01-03]** We support **ScienceQA (Img)** (Dataset Name: ScienceQA_[VAL/TEST], [**eval results**](results/ScienceQA.md)), **HallusionBench** (Dataset Name: HallusionBench, [**eval results**](/results/HallusionBench.md)), and **MathVista** (Dataset Name: MathVista_MINI, [**eval results**](/results/MathVista.md)). 🔥🔥🔥

- **[2023-12-31]** We release the [**preliminary results**](/results/VQA.md) of three VQA datasets (**OCRVQA**, **TextVQA**, **ChartQA**). The results are obtained by exact matching and may not faithfully reflect the real performance of VLMs on the corresponding tasks.

@@ -46,9 +45,9 @@

| [**OCRVQA**](https://ocr-vqa.github.io) | OCRVQA_[TESTCORE/TEST] | ✅ | ✅ | [**VQA**](/results/VQA.md) |

| [**TextVQA**](https://textvqa.org) | TextVQA_VAL | ✅ | ✅ | [**VQA**](/results/VQA.md) |

| [**ChartQA**](https://github.com/vis-nlp/ChartQA) | ChartQA_VALTEST_HUMAN | ✅ | ✅ | [**VQA**](/results/VQA.md) |

+| [**AI2D**](https://allenai.org/data/diagrams) | AI2D | ✅ | ✅ | [**AI2D**](/results/AI2D.md) |

+| [**LLaVABench**](https://huggingface.co/datasets/liuhaotian/llava-bench-in-the-wild) | LLaVABench | ✅ | ✅ | [**LLaVABench**](/results/LLaVABench.md) |

| [**DocVQA**](https://www.docvqa.org) | DocVQA_VAL | ✅ | ✅ | |

-| [**AI2D**](https://allenai.org/data/diagrams) | AI2D | ✅ | ✅ | |

-| [**LLaVABench**](https://huggingface.co/datasets/liuhaotian/llava-bench-in-the-wild) | LLaVABench | ✅ | ✅ | |

| [**Core-MM**](https://github.com/core-mm/core-mm) | CORE_MM | ✅ | | |

**Supported API Models**
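The 2023-12-31 news entry notes that the VQA results are obtained by exact matching and may underestimate real performance. The sketch below is a hypothetical exact-match scorer that shows why. The names `normalize`, `exact_match` and `accuracy`, and the normalization rules, are assumptions for illustration and are not VLMEvalKit's actual implementation.

```python
import string


def normalize(text: str) -> str:
    """Lowercase, strip ASCII punctuation, and collapse whitespace (assumed rules)."""
    text = text.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())


def exact_match(prediction: str, answers: list[str]) -> bool:
    """True if the normalized prediction equals any normalized reference answer."""
    pred = normalize(prediction)
    return any(pred == normalize(ans) for ans in answers)


def accuracy(predictions: list[str], references: list[list[str]]) -> float:
    """Percentage of predictions that exactly match one of their references."""
    hits = sum(exact_match(p, refs) for p, refs in zip(predictions, references))
    return 100.0 * hits / len(predictions)
```

Here `exact_match("The Eiffel Tower", ["eiffel tower"])` returns `False` even though the answer is right. This is the kind of miss that LLM-based answer extraction is meant to recover.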

diff --git a/results/AI2D.md b/results/AI2D.md

new file mode 100644

index 000000000..026fee888

--- /dev/null

+++ b/results/AI2D.md

@@ -0,0 +1,39 @@

+# AI2D Evaluation Results

+

+> During evaluation, we use `GPT-3.5-Turbo-0613` as the choice extractor for all VLMs if the choice cannot be extracted via heuristic matching. **Zero-shot** inference is adopted.

+

+## AI2D Accuracy

+

+| Model | overall |

+|:----------------------------|----------:|

+| Monkey-Chat | 72.6 |

+| GPT-4v (detail: low) | 71.3 |

+| Qwen-VL-Chat | 68.5 |

+| Monkey | 67.6 |

+| GeminiProVision | 66.7 |

+| QwenVLPlus | 63.7 |

+| Qwen-VL | 63.4 |

+| LLaVA-InternLM2-20B (QLoRA) | 61.4 |

+| CogVLM-17B-Chat | 60.3 |

+| ShareGPT4V-13B | 59.3 |

+| TransCore-M | 59.2 |

+| LLaVA-v1.5-13B (QLoRA) | 59 |

+| LLaVA-v1.5-13B | 57.9 |

+| ShareGPT4V-7B | 56.7 |

+| InternLM-XComposer-VL | 56.1 |

+| LLaVA-InternLM-7B (QLoRA) | 56 |

+| LLaVA-v1.5-7B (QLoRA) | 55.2 |

+| mPLUG-Owl2 | 55.2 |

+| SharedCaptioner | 55.1 |

+| IDEFICS-80B-Instruct | 54.4 |

+| LLaVA-v1.5-7B | 54.1 |

+| PandaGPT-13B | 49.2 |

+| LLaVA-v1-7B | 47.8 |

+| IDEFICS-9B-Instruct | 42.7 |

+| InstructBLIP-7B | 40.2 |

+| VisualGLM | 40.2 |

+| InstructBLIP-13B | 38.6 |

+| MiniGPT-4-v1-13B | 33.4 |

+| OpenFlamingo v2 | 30.7 |

+| MiniGPT-4-v2 | 29.4 |

+| MiniGPT-4-v1-7B | 28.7 |

\ No newline at end of file
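The AI2D note describes a two-stage scheme: heuristic matching runs first, and `GPT-3.5-Turbo-0613` is used only when no choice can be extracted. Below is a minimal sketch of the heuristic stage that assumes single-letter option keys. `extract_choice` is a hypothetical name, and a `None` result marks the point where the LLM extractor would take over.

```python
import re
from typing import Optional


def extract_choice(response: str, options: dict[str, str]) -> Optional[str]:
    """Heuristically map a free-form response to one option letter.

    `options` maps single-letter keys to option text, e.g. {"A": "cat", "B": "dog"}.
    Returns None when no unique choice is found; that is where an LLM-based
    extractor would be consulted instead.
    """
    letters = "".join(options)
    # Uppercase option letters standing alone, e.g. "B", "(B)" or "B.".
    # Naive: a sentence-initial article "A" is also counted as option A.
    found = set(re.findall(rf"\b([{letters}])\b", response))
    if len(found) == 1:
        return found.pop()
    if not found:
        # No letter found: look for the option text itself (case-insensitive).
        text = response.lower()
        hits = [key for key, value in options.items() if value.lower() in text]
        if len(hits) == 1:
            return hits[0]
    return None
```

The matching is deliberately simple. A response such as "Either A or B could be right" yields `None`, and ambiguous cases like this are where a judge LLM adds value.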

diff --git a/results/Caption.md b/results/Caption.md

index 12f753d10..75b4970c7 100644

--- a/results/Caption.md

+++ b/results/Caption.md

@@ -10,34 +10,36 @@

### Evaluation Results

-| Model | BLEU-4 | BLEU-1 | ROUGE-L | CIDEr | Word_cnt mean. | Word_cnt std. |

-|:------------------------------|---------:|---------:|----------:|--------:|-----------------:|----------------:|

-| Qwen-VL-Chat | 34 | 75.8 | 54.9 | 98.9 | 10 | 1.7 |

-| IDEFICS-80B-Instruct | 32.5 | 76.1 | 54.1 | 94.9 | 9.7 | 3.2 |

-| IDEFICS-9B-Instruct | 29.4 | 72.7 | 53.4 | 90.4 | 10.5 | 4.4 |

-| InstructBLIP-7B | 20.9 | 56.8 | 39.9 | 58.1 | 11.6 | 5.9 |

-| InstructBLIP-13B | 16.9 | 50 | 37 | 52.4 | 11.8 | 12.8 |

-| InternLM-XComposer-VL | 12.4 | 38.3 | 37.9 | 41 | 26.3 | 22.2 |

-| TransCore-M | 8.8 | 30.3 | 36.1 | 34.7 | 39.9 | 27.9 |

-| GeminiProVision | 8.4 | 33.2 | 31.2 | 9.7 | 35.2 | 15.7 |

-| LLaVA-v1.5-7B (QLoRA, XTuner) | 7.2 | 25 | 36.6 | 43.2 | 48.8 | 42.9 |

-| mPLUG-Owl2 | 7.1 | 25.8 | 33.6 | 35 | 45.8 | 32.1 |

-| LLaVA-v1-7B | 6.7 | 27.3 | 26.7 | 6.1 | 40.9 | 16.1 |

-| VisualGLM | 5.4 | 28.6 | 23.6 | 0.2 | 41.5 | 11.5 |

-| LLaVA-v1.5-13B (QLoRA, XTuner) | 5.3 | 19.6 | 25.8 | 17.8 | 72.2 | 39.4 |

-| LLaVA-v1.5-13B | 5.1 | 20.7 | 21.2 | 0.3 | 70.6 | 22.3 |

-| LLaVA-v1.5-7B | 4.6 | 19.6 | 19.9 | 0.1 | 72.5 | 21.7 |

-| PandaGPT-13B | 4.6 | 19.9 | 19.3 | 0.1 | 65.4 | 16.6 |

-| MiniGPT-4-v1-13B | 4.4 | 20 | 19.8 | 1.3 | 64.4 | 30.5 |

-| MiniGPT-4-v1-7B | 4.3 | 19.6 | 17.5 | 0.8 | 61.9 | 30.6 |

-| LLaVA-InternLM-7B (QLoRA) | 4 | 17.3 | 17.2 | 0.1 | 82.3 | 21 |

-| CogVLM-17B-Chat | 3.6 | 21.3 | 20 | 0.1 | 56.2 | 13.7 |

-| Qwen-VL | 3.5 | 11.6 | 30 | 41.1 | 46.6 | 105.2 |

-| GPT-4v (detail: low) | 3.3 | 18 | 18.1 | 0 | 77.8 | 20.4 |

-| ShareGPT4V-7B | 1.4 | 9.7 | 10.6 | 0.1 | 147.9 | 45.4 |

-| MiniGPT-4-v2 | 1.4 | 12.6 | 13.3 | 0.1 | 83 | 27.1 |

-| OpenFlamingo v2 | 1.3 | 6.4 | 15.8 | 14.9 | 60 | 81.9 |

-| SharedCaptioner | 1 | 8.8 | 9.2 | 0 | 164.2 | 31.6 |

+| Model | BLEU-4 | BLEU-1 | ROUGE-L | CIDEr | Word_cnt mean. | Word_cnt std. |

+|:----------------------------|---------:|---------:|----------:|--------:|-----------------:|----------------:|

+| EMU2-Chat | 38.7 | 78.2 | 56.9 | 109.2 | 9.6 | 1.1 |

+| Qwen-VL-Chat | 34 | 75.8 | 54.9 | 98.9 | 10 | 1.7 |

+| IDEFICS-80B-Instruct | 32.5 | 76.1 | 54.1 | 94.9 | 9.7 | 3.2 |

+| IDEFICS-9B-Instruct | 29.4 | 72.7 | 53.4 | 90.4 | 10.5 | 4.4 |

+| InstructBLIP-7B | 20.9 | 56.8 | 39.9 | 58.1 | 11.6 | 5.9 |

+| InstructBLIP-13B | 16.9 | 50 | 37 | 52.4 | 11.8 | 12.8 |

+| InternLM-XComposer-VL | 12.4 | 38.3 | 37.9 | 41 | 26.3 | 22.2 |

+| GeminiProVision | 8.4 | 33.2 | 31.2 | 9.7 | 35.2 | 15.7 |

+| LLaVA-v1.5-7B (QLoRA) | 7.2 | 25 | 36.6 | 43.2 | 48.8 | 42.9 |

+| mPLUG-Owl2 | 7.1 | 25.8 | 33.6 | 35 | 45.8 | 32.1 |

+| LLaVA-v1-7B | 6.7 | 27.3 | 26.7 | 6.1 | 40.9 | 16.1 |

+| VisualGLM | 5.4 | 28.6 | 23.6 | 0.2 | 41.5 | 11.5 |

+| LLaVA-v1.5-13B (QLoRA) | 5.3 | 19.6 | 25.8 | 17.8 | 72.2 | 39.4 |

+| LLaVA-v1.5-13B | 5.1 | 20.7 | 21.2 | 0.3 | 70.6 | 22.3 |

+| LLaVA-v1.5-7B | 4.6 | 19.6 | 19.9 | 0.1 | 72.5 | 21.7 |

+| PandaGPT-13B | 4.6 | 19.9 | 19.3 | 0.1 | 65.4 | 16.6 |

+| MiniGPT-4-v1-13B | 4.4 | 20 | 19.8 | 1.3 | 64.4 | 30.5 |

+| MiniGPT-4-v1-7B | 4.3 | 19.6 | 17.5 | 0.8 | 61.9 | 30.6 |

+| LLaVA-InternLM-7B (QLoRA) | 4 | 17.3 | 17.2 | 0.1 | 82.3 | 21 |

+| LLaVA-InternLM2-20B (QLoRA) | 4 | 17.9 | 17.3 | 0 | 83.2 | 20.4 |

+| CogVLM-17B-Chat | 3.6 | 21.3 | 20 | 0.1 | 56.2 | 13.7 |

+| Qwen-VL | 3.5 | 11.6 | 30 | 41.1 | 46.6 | 105.2 |

+| GPT-4v (detail: low) | 3.3 | 18 | 18.1 | 0 | 77.8 | 20.4 |

+| TransCore-M | 2.1 | 14.2 | 13.8 | 0.2 | 92 | 6.7 |

+| ShareGPT4V-7B | 1.4 | 9.7 | 10.6 | 0.1 | 147.9 | 45.4 |

+| MiniGPT-4-v2 | 1.4 | 12.6 | 13.3 | 0.1 | 83 | 27.1 |

+| OpenFlamingo v2 | 1.3 | 6.4 | 15.8 | 14.9 | 60 | 81.9 |

+| SharedCaptioner | 1 | 8.8 | 9.2 | 0 | 164.2 | 31.6 |

We notice that VLMs that generate long image descriptions tend to achieve inferior scores across all of these caption metrics.
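The `Word_cnt mean.` and `Word_cnt std.` columns summarize caption length. The sketch below shows one way to compute such statistics. It assumes whitespace tokenization and the population standard deviation, because the toolkit's exact tokenization and estimator are not stated here. `word_count_stats` is a hypothetical helper.

```python
import statistics


def word_count_stats(captions: list[str]) -> tuple[float, float]:
    """Return (mean, population std) of whitespace-delimited word counts."""
    counts = [len(caption.split()) for caption in captions]
    return statistics.mean(counts), statistics.pstdev(counts)
```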

diff --git a/results/HallusionBench.md b/results/HallusionBench.md

index 65d69b5f9..ff9f3e5cd 100644

--- a/results/HallusionBench.md

+++ b/results/HallusionBench.md

@@ -29,31 +29,36 @@

> Models are sorted in **descending order of qAcc**.

-| Model | aAcc | fAcc | qAcc |

-|:------------------------------|-------:|-------:|-------:|

-| GPT-4v (detail: low) | 65.8 | 38.4 | 35.2 |

-| GeminiProVision | 63.9 | 37.3 | 34.3 |

-| Qwen-VL-Chat | 56.4 | 27.7 | 26.4 |

-| MiniGPT-4-v1-7B | 52.4 | 17.3 | 25.9 |

-| CogVLM-17B-Chat | 55.1 | 26.3 | 24.8 |

-| InternLM-XComposer-VL | 57 | 26.3 | 24.6 |

-| MiniGPT-4-v1-13B | 51.3 | 16.2 | 24.6 |

-| SharedCaptioner | 55.6 | 22.8 | 24.2 |

-| MiniGPT-4-v2 | 52.6 | 16.5 | 21.1 |

-| InstructBLIP-7B | 53.6 | 20.2 | 19.8 |

-| Qwen-VL | 57.6 | 12.4 | 19.6 |

-| OpenFlamingo v2 | 52.7 | 17.6 | 18 |

-| mPLUG-Owl2 | 48.9 | 22.5 | 16.7 |

-| VisualGLM | 47.2 | 11.3 | 16.5 |

-| IDEFICS-9B-Instruct | 50.1 | 16.2 | 15.6 |

-| ShareGPT4V-7B | 48.2 | 21.7 | 15.6 |

-| LLaVA-InternLM-7B (QLoRA) | 49.1 | 22.3 | 15.4 |

-| InstructBLIP-13B | 47.9 | 17.3 | 15.2 |

-| LLaVA-v1.5-7B | 48.3 | 19.9 | 14.1 |

-| LLaVA-v1.5-13B (QLoRA, XTuner) | 46.9 | 17.6 | 14.1 |

-| LLaVA-v1.5-7B (QLoRA, XTuner) | 46.2 | 16.2 | 13.2 |

-| LLaVA-v1.5-13B | 46.7 | 17.3 | 13 |

-| IDEFICS-80B-Instruct | 46.1 | 13.3 | 11 |

-| TransCore-M | 44.7 | 16.5 | 10.1 |

-| LLaVA-v1-7B | 44.1 | 13.6 | 9.5 |

-| PandaGPT-13B | 43.1 | 9.2 | 7.7 |

\ No newline at end of file

+| Model | aAcc | fAcc | qAcc |

+|:----------------------------|-------:|-------:|-------:|

+| GPT-4v (detail: low) | 65.8 | 38.4 | 35.2 |

+| GeminiProVision | 63.9 | 37.3 | 34.3 |

+| Monkey-Chat | 58.4 | 30.6 | 29 |

+| Qwen-VL-Chat | 56.4 | 27.7 | 26.4 |

+| MiniGPT-4-v1-7B | 52.4 | 17.3 | 25.9 |

+| Monkey | 55.1 | 24 | 25.5 |

+| CogVLM-17B-Chat | 55.1 | 26.3 | 24.8 |

+| MiniGPT-4-v1-13B | 51.3 | 16.2 | 24.6 |

+| InternLM-XComposer-VL | 57 | 26.3 | 24.6 |

+| SharedCaptioner | 55.6 | 22.8 | 24.2 |

+| MiniGPT-4-v2 | 52.6 | 16.5 | 21.1 |

+| InstructBLIP-7B | 53.6 | 20.2 | 19.8 |

+| Qwen-VL | 57.6 | 12.4 | 19.6 |

+| OpenFlamingo v2 | 52.7 | 17.6 | 18 |

+| EMU2-Chat | 49.4 | 22.3 | 16.9 |

+| mPLUG-Owl2 | 48.9 | 22.5 | 16.7 |

+| ShareGPT4V-13B | 49.8 | 21.7 | 16.7 |

+| VisualGLM | 47.2 | 11.3 | 16.5 |

+| TransCore-M | 49.7 | 21.4 | 15.8 |

+| IDEFICS-9B-Instruct | 50.1 | 16.2 | 15.6 |

+| ShareGPT4V-7B | 48.2 | 21.7 | 15.6 |

+| LLaVA-InternLM-7B (QLoRA) | 49.1 | 22.3 | 15.4 |

+| InstructBLIP-13B | 47.9 | 17.3 | 15.2 |

+| LLaVA-InternLM2-20B (QLoRA) | 47.7 | 17.1 | 14.3 |

+| LLaVA-v1.5-13B (QLoRA) | 46.9 | 17.6 | 14.1 |

+| LLaVA-v1.5-7B | 48.3 | 19.9 | 14.1 |

+| LLaVA-v1.5-7B (QLoRA) | 46.2 | 16.2 | 13.2 |

+| LLaVA-v1.5-13B | 46.7 | 17.3 | 13 |

+| IDEFICS-80B-Instruct | 46.1 | 13.3 | 11 |

+| LLaVA-v1-7B | 44.1 | 13.6 | 9.5 |

+| PandaGPT-13B | 43.1 | 9.2 | 7.7 |

\ No newline at end of file
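HallusionBench reports three accuracies. As we understand the benchmark's definitions, they work as follows:

- **aAcc** counts every question independently.
- **fAcc** credits a figure only if all questions about it are answered correctly.
- **qAcc** credits a question only if it is answered correctly for every figure it is paired with.

The sketch below assumes records of the form `(question_id, figure_id, correct)`. Both `hallusion_metrics` and this record layout are hypothetical. The stricter grouped metrics are consistent with qAcc and fAcc sitting well below aAcc in the table.

```python
from collections import defaultdict


def hallusion_metrics(records: list[tuple[str, str, bool]]) -> dict[str, float]:
    """Compute aAcc, fAcc and qAcc (in percent) from per-answer records.

    Each record is (question_id, figure_id, correct); this layout is assumed.
    """
    by_question: dict[str, list[bool]] = defaultdict(list)
    by_figure: dict[str, list[bool]] = defaultdict(list)
    for question_id, figure_id, correct in records:
        by_question[question_id].append(correct)
        by_figure[figure_id].append(correct)

    def all_correct_rate(groups: dict[str, list[bool]]) -> float:
        # A group is credited only if every answer in it is correct.
        return 100.0 * sum(all(answers) for answers in groups.values()) / len(groups)

    a_acc = 100.0 * sum(correct for _, _, correct in records) / len(records)
    return {
        "aAcc": a_acc,
        "fAcc": all_correct_rate(by_figure),
        "qAcc": all_correct_rate(by_question),
    }
```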

diff --git a/results/LLaVABench.md b/results/LLaVABench.md

new file mode 100644

index 000000000..17e53efd3

--- /dev/null

+++ b/results/LLaVABench.md

@@ -0,0 +1,43 @@

+# LLaVABench Evaluation Results

+

+> - In LLaVABench Evaluation, we use GPT-4-Turbo (gpt-4-1106-preview) as the judge LLM to assign scores to the VLM outputs. We only perform the evaluation once, since the originally reported results of multiple evaluation passes show limited variance.

+> - No specific prompt template is adopted for **ALL VLMs**.

+> - We also include the official results (obtained by gpt-4-0314) for applicable models.

+

+

+## Results

+

+| Model | complex | detail | conv | overall | overall (GPT-4-0314) |

+|:----------------------------|----------:|---------:|-------:|----------:|:-----------------------|

+| GPT-4v (detail: low) | 102.3 | 90.1 | 82.2 | 93.1 | N/A |

+| GeminiProVision | 78.8 | 67.3 | 90.8 | 79.9 | N/A |

+| CogVLM-17B-Chat | 77.6 | 67.3 | 73.6 | 73.9 | N/A |

+| QwenVLPlus | 77.1 | 65.7 | 74.3 | 73.7 | N/A |

+| Qwen-VL-Chat | 71.6 | 54.9 | 71.5 | 67.7 | N/A |

+| ShareGPT4V-13B | 70.1 | 58.3 | 66.9 | 66.6 | 72.6 |

+| ShareGPT4V-7B | 69.5 | 56 | 64.5 | 64.9 | N/A |

+| LLaVA-v1.5-13B | 70.3 | 52.5 | 66.2 | 64.6 | 70.7 |

+| LLaVA-InternLM2-20B (QLoRA) | 70.5 | 50.8 | 63.6 | 63.7 | N/A |

+| LLaVA-v1.5-13B (QLoRA) | 77 | 52.9 | 53.8 | 63.6 | N/A |

+| TransCore-M | 60.6 | 57.4 | 66 | 61.7 | N/A |

+| LLaVA-v1.5-7B | 68.6 | 51.7 | 56 | 60.7 | 63.4 |

+| InstructBLIP-7B | 59.3 | 48.2 | 69.3 | 59.8 | 60.9 |

+| LLaVA-InternLM-7B (QLoRA) | 61.3 | 52.8 | 62.7 | 59.7 | N/A |

+| LLaVA-v1-7B | 67.6 | 43.8 | 58.7 | 58.9 | N/A |

+| LLaVA-v1.5-7B (QLoRA) | 64.4 | 45.2 | 56.2 | 57.2 | N/A |

+| IDEFICS-80B-Instruct | 57.7 | 49.6 | 61.7 | 56.9 | N/A |

+| EMU2-Chat | 48.5 | 38.4 | 82.2 | 56.4 | N/A |

+| InternLM-XComposer-VL | 61.7 | 52.5 | 44.3 | 53.8 | N/A |

+| InstructBLIP-13B | 57.3 | 41.7 | 56.9 | 53.5 | 58.2 |

+| SharedCaptioner | 38.3 | 44.2 | 62.1 | 47.4 | N/A |

+| MiniGPT-4-v1-13B | 57.3 | 44.4 | 32.5 | 46.2 | N/A |

+| MiniGPT-4-v1-7B | 48 | 44.4 | 41.4 | 45.1 | N/A |

+| IDEFICS-9B-Instruct | 49.3 | 45.2 | 38.6 | 45 | N/A |

+| Monkey | 30.3 | 29.1 | 74.5 | 43.1 | N/A |

+| VisualGLM | 43.6 | 36.6 | 28.4 | 37.3 | N/A |

+| PandaGPT-13B | 46.2 | 29.2 | 31 | 37.1 | N/A |

+| OpenFlamingo v2 | 26.1 | 29.6 | 50.3 | 34.2 | N/A |

+| Monkey-Chat | 19.5 | 18.9 | 67.3 | 33.5 | N/A |

+| MiniGPT-4-v2 | 36.8 | 15.5 | 28.9 | 28.8 | N/A |

+| mPLUG-Owl2 | 13 | 17.1 | 51 | 25 | N/A |

+| Qwen-VL | 5.6 | 26.5 | 13.7 | 12.9 | N/A |

\ No newline at end of file
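Some scores exceed 100 (e.g. 102.3 for GPT-4v on `complex`), which suggests the numbers are relative. The sketch below assumes the LLaVA-Bench convention: the judge scores both the VLM answer and a reference answer, and the reported number is the ratio of their mean scores times 100. `llavabench_scores` and the sample keys are hypothetical. If `overall` pools all questions as it does here, it need not equal the mean of the three category columns.

```python
from collections import defaultdict
from statistics import mean


def relative_score(vlm_scores: list[float], ref_scores: list[float]) -> float:
    """Mean judge score of the VLM answers as a percentage of the reference answers' mean."""
    return 100.0 * mean(vlm_scores) / mean(ref_scores)


def llavabench_scores(samples: list[dict]) -> dict[str, float]:
    """Per-category and pooled relative scores.

    Each sample is a dict with (assumed) keys "category", "vlm_score", "ref_score".
    """
    groups: dict[str, tuple[list[float], list[float]]] = defaultdict(lambda: ([], []))
    for sample in samples:
        vlm, ref = groups[sample["category"]]
        vlm.append(sample["vlm_score"])
        ref.append(sample["ref_score"])
    scores = {cat: relative_score(vlm, ref) for cat, (vlm, ref) in groups.items()}
    # "overall" pools every question rather than averaging the category scores.
    scores["overall"] = relative_score(
        [s["vlm_score"] for s in samples], [s["ref_score"] for s in samples]
    )
    return scores
```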

diff --git a/results/MME.md b/results/MME.md

index 9e195d83a..44449a3d2 100644

--- a/results/MME.md

+++ b/results/MME.md

@@ -8,34 +8,40 @@ In each cell, we list `vanilla score / ChatGPT Answer Extraction Score` if the t

VLMs are sorted in descending order of Total score.

-| Model | Total | Perception | Reasoning |

-|:------------------------------|:------------|:-------------|:------------|

-| GeminiProVision | 2131 / 2149 | 1601 / 1609 | 530 / 540 |

-| InternLM-XComposer-VL | 1874 | 1497 | 377 |

-| ShareGPT4V-7B | 1872 / 1874 | 1530 | 342 / 344 |

-| Qwen-VL-Chat | 1849 / 1860 | 1457 / 1468 | 392 |

-| LLaVA-v1.5-13B | 1800 / 1805 | 1485 / 1490 | 315 |

-| mPLUG-Owl2 | 1781 / 1786 | 1435 / 1436 | 346 / 350 |

-| LLaVA-v1.5-7B | 1775 | 1490 | 285 |

-| GPT-4v (detail: low) | 1737 / 1771 | 1300 / 1334 | 437 |

-| LLaVA-v1.5-13B (QLoRA, XTuner) | 1766 | 1475 | 291 |

-| CogVLM-17B-Chat | 1727 / 1737 | 1437 / 1438 | 290 / 299 |

-| LLaVA-v1.5-7B (QLoRA, XTuner) | 1716 | 1434 | 282 |

-| TransCore-M | 1681 / 1701 | 1427 / 1429 | 254 / 272 |

-| instructblip_13b | 1624 / 1646 | 1381 / 1383 | 243 / 263 |

-| SharedCaptioner | 1592 / 1643 | 1247 / 1295 | 345 / 348 |

-| LLaVA-InternLM-7B (QLoRA) | 1637 | 1393 | 244 |

-| IDEFICS-80B-Instruct | 1507 / 1519 | 1276 / 1285 | 231 / 234 |

-| InstructBLIP-7B | 1313 / 1391 | 1084 / 1137 | 229 / 254 |

-| IDEFICS-9B-Instruct | 1177 | 942 | 235 |

-| PandaGPT-13B | 1072 | 826 | 246 |

-| MiniGPT-4-v1-13B | 648 / 1067 | 533 / 794 | 115 / 273 |

-| MiniGPT-4-v1-7B | 806 / 1048 | 622 / 771 | 184 / 277 |

-| LLaVA-v1-7B | 1027 / 1044 | 793 / 807 | 234 / 237 |

-| MiniGPT-4-v2 | 968 | 708 | 260 |

-| VisualGLM | 738 | 628 | 110 |

-| OpenFlamingo v2 | 607 | 535 | 72 |

-| Qwen-VL | 6 / 483 | 0 / 334 | 6 / 149 |

+| Model | Total | Perception | Reasoning |

+|:----------------------------|:------------|:-------------|:------------|

+| QwenVLPlus | 2152 / 2229 | 1684 / 1692 | 468 / 537 |

+| GeminiProVision | 2131 / 2149 | 1601 / 1609 | 530 / 540 |

+| TransCore-M | 1890 / 1898 | 1593 / 1594 | 297 / 304 |

+| Monkey-Chat | 1888 | 1506 | 382 |

+| ShareGPT4V-7B | 1872 / 1874 | 1530 | 342 / 344 |

+| InternLM-XComposer-VL | 1874 | 1497 | 377 |

+| LLaVA-InternLM2-20B (QLoRA) | 1867 | 1512 | 355 |

+| Qwen-VL-Chat | 1849 / 1860 | 1457 / 1468 | 392 |

+| ShareGPT4V-13B | 1828 | 1559 | 269 |

+| LLaVA-v1.5-13B | 1800 / 1805 | 1485 / 1490 | 315 |

+| mPLUG-Owl2 | 1781 / 1786 | 1435 / 1436 | 346 / 350 |

+| LLaVA-v1.5-7B | 1775 | 1490 | 285 |

+| GPT-4v (detail: low) | 1737 / 1771 | 1300 / 1334 | 437 |

+| LLaVA-v1.5-13B (QLoRA) | 1766 | 1475 | 291 |

+| Monkey | 1760 | 1472 | 288 |

+| CogVLM-17B-Chat | 1727 / 1737 | 1437 / 1438 | 290 / 299 |

+| LLaVA-v1.5-7B (QLoRA) | 1716 | 1434 | 282 |

+| EMU2-Chat | 1653 / 1678 | 1322 / 1345 | 331 / 333 |

+| InstructBLIP-13B | 1624 / 1646 | 1381 / 1383 | 243 / 263 |

+| SharedCaptioner | 1592 / 1643 | 1247 / 1295 | 345 / 348 |

+| LLaVA-InternLM-7B (QLoRA) | 1637 | 1393 | 244 |

+| IDEFICS-80B-Instruct | 1507 / 1519 | 1276 / 1285 | 231 / 234 |

+| InstructBLIP-7B | 1313 / 1391 | 1084 / 1137 | 229 / 254 |

+| IDEFICS-9B-Instruct | 1177 | 942 | 235 |

+| PandaGPT-13B | 1072 | 826 | 246 |

+| MiniGPT-4-v1-13B | 648 / 1067 | 533 / 794 | 115 / 273 |

+| MiniGPT-4-v1-7B | 806 / 1048 | 622 / 771 | 184 / 277 |

+| LLaVA-v1-7B | 1027 / 1044 | 793 / 807 | 234 / 237 |

+| MiniGPT-4-v2 | 968 | 708 | 260 |

+| VisualGLM | 738 | 628 | 110 |

+| OpenFlamingo v2 | 607 | 535 | 72 |

+| Qwen-VL | 6 / 483 | 0 / 334 | 6 / 149 |

### Comments

@@ -43,7 +49,6 @@ For most VLMs, using ChatGPT as the answer extractor or not may not significantl

| MME Score Improvement with ChatGPT Answer Extractor | Models |

| --------------------------------------------------- | ------------------------------------------------------------ |

-| **No (0)** | XComposer, llava_v1.5_7b, idefics_9b_instruct, PandaGPT_13B, MiniGPT-4-v2, VisualGLM_6b, flamingov2, LLaVA-XTuner Series |

-| **Minor (1~20)** | qwen_chat (11), llava_v1.5_13b (5), mPLUG-Owl2 (5), idefics_80b_instruct (12), llava_v1_7b (17), sharegpt4v_7b (2), TransCore_M (19), GeminiProVision (18), CogVLM-17B-Chat (10) |

-| **Moderate (21~100)** | instructblip_13b (22), instructblip_7b (78), GPT-4v (34), SharedCaptioner (51) |

-| **Huge (> 100)** | MiniGPT-4-v1-7B (242), MiniGPT-4-v1-13B (419), qwen_base (477) |

\ No newline at end of file

+| **Minor (1~20)** | Qwen-VL-Chat (11), LLaVA-v1.5-13B (5), mPLUG-Owl2 (5), IDEFICS-80B-Instruct (12), LLaVA-v1-7B (17), ShareGPT4V-7B (2), TransCore-M (8), GeminiProVision (18), CogVLM-17B-Chat (10) |

+| **Moderate (21~100)** | InstructBLIP-13B (22), InstructBLIP-7B (78), GPT-4v (34), SharedCaptioner (51), QwenVLPlus (77), EMU2-Chat (25) |

+| **Huge (> 100)** | MiniGPT-4-v1-7B (242), MiniGPT-4-v1-13B (419), Qwen-VL (477) |

\ No newline at end of file
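The buckets in this table are defined by the gap between the two Total scores in each `vanilla score / ChatGPT Answer Extraction Score` cell. The sketch below reproduces the bucketing. The helper names are hypothetical, and a cell with a single number is treated as a gap of 0.

```python
def parse_cell(cell: str) -> tuple[int, int]:
    """Split a 'vanilla / extracted' cell; a single number means both scores are equal."""
    parts = [int(part) for part in cell.split("/")]
    return parts[0], parts[-1]


def improvement_bucket(vanilla: int, extracted: int) -> str:
    """Classify the score gain from ChatGPT answer extraction into the table's buckets."""
    gain = extracted - vanilla
    if gain < 0:
        raise ValueError("extraction is not expected to lower the score")
    if gain == 0:
        return "No (0)"
    if gain <= 20:
        return "Minor (1~20)"
    if gain <= 100:
        return "Moderate (21~100)"
    return "Huge (> 100)"
```

For example, Qwen-VL's `6 / 483` gives a gap of 477 and lands in **Huge (> 100)**, which matches the table.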

diff --git a/results/MMMU.md b/results/MMMU.md

index c80db9761..74ea1bca5 100644

--- a/results/MMMU.md

+++ b/results/MMMU.md

@@ -11,32 +11,38 @@

### MMMU Scores

-| Model | Overall | Art & Design | Business | Science | Health & Medicine | Humanities & Social Science | Tech & Engineering |

-|:------------------------------|----------:|---------------:|-----------:|----------:|--------------------:|------------------------------:|---------------------:|

-| GPT-4v (detail: low) | 53.8 | 67.5 | 59.3 | 46 | 54.7 | 70.8 | 37.1 |

-| GeminiProVision | 48.9 | 59.2 | 36.7 | 42.7 | 52 | 66.7 | 43.8 |

-| CogVLM-17B-Chat | 37.3 | 51.7 | 34 | 36 | 35.3 | 41.7 | 31.4 |

-| Qwen-VL-Chat | 37 | 49.2 | 35.3 | 28 | 31.3 | 54.2 | 31.9 |

-| LLaVA-InternLM-7B (QLoRA) | 36.9 | 44.2 | 32 | 29.3 | 38.7 | 46.7 | 34.8 |

-| LLaVA-v1.5-13B | 36.9 | 49.2 | 24 | 37.3 | 33.3 | 50.8 | 33.3 |

-| TransCore-M | 36.9 | 54.2 | 32.7 | 28 | 32 | 48.3 | 33.3 |

-| ShareGPT4V-7B | 36.6 | 50 | 28.7 | 26 | 37.3 | 49.2 | 34.3 |

-| SharedCaptioner | 36.3 | 44.2 | 28.7 | 29.3 | 37.3 | 45.8 | 36.2 |

-| LLaVA-v1.5-7B | 36.2 | 45.8 | 26 | 34 | 32.7 | 47.5 | 35.7 |

-| InternLM-XComposer-VL | 35.6 | 45.8 | 28.7 | 22.7 | 30.7 | 52.5 | 37.6 |

-| LLaVA-v1.5-13B (QLoRA, XTuner) | 35.2 | 40.8 | 30.7 | 27.3 | 35.3 | 44.2 | 35.7 |

-| mPLUG-Owl2 | 34.7 | 47.5 | 26 | 21.3 | 38 | 50 | 31.9 |

-| LLaVA-v1.5-7B (QLoRA, XTuner) | 33.7 | 48.3 | 23.3 | 30.7 | 32.7 | 45.8 | 28.6 |

-| InstructBLIP-13B | 33.2 | 37.5 | 30 | 32.7 | 30 | 36.7 | 33.8 |

-| PandaGPT-13B | 32.9 | 42.5 | 36 | 30.7 | 30 | 43.3 | 22.9 |

-| LLaVA-v1-7B | 32.3 | 31.7 | 26 | 31.3 | 32.7 | 35.8 | 35.7 |

-| InstructBLIP-7B | 30.6 | 38.3 | 28.7 | 22 | 30.7 | 39.2 | 28.6 |

-| VisualGLM | 29.9 | 30.8 | 27.3 | 28.7 | 29.3 | 40.8 | 26.2 |

-| Qwen-VL | 29.6 | 45 | 18.7 | 26.7 | 32.7 | 42.5 | 21 |

-| OpenFlamingo v2 | 28.8 | 27.5 | 30.7 | 29.3 | 28.7 | 33.3 | 25.2 |

-| MiniGPT-4-v1-13B | 26.3 | 31.7 | 20.7 | 28 | 25.3 | 35 | 21.9 |

-| Frequent Choice | 25.8 | 26.7 | 28.4 | 24 | 24.4 | 25.2 | 26.5 |

-| MiniGPT-4-v2 | 25 | 27.5 | 23.3 | 22 | 27.3 | 32.5 | 21 |

-| IDEFICS-80B-Instruct | 24 | 39.2 | 18 | 20 | 22 | 46.7 | 11 |

-| MiniGPT-4-v1-7B | 23.6 | 33.3 | 28.7 | 19.3 | 18 | 15 | 26.2 |

-| IDEFICS-9B-Instruct | 18.4 | 22.5 | 11.3 | 17.3 | 21.3 | 30 | 13.3 |

\ No newline at end of file

+| Model | Overall | Art & Design | Business | Science | Health & Medicine | Humanities & Social Science | Tech & Engineering |

+|:----------------------------|----------:|---------------:|-----------:|----------:|--------------------:|------------------------------:|---------------------:|

+| GPT-4v (detail: low) | 53.8 | 67.5 | 59.3 | 46 | 54.7 | 70.8 | 37.1 |

+| GeminiProVision | 48.9 | 59.2 | 36.7 | 42.7 | 52 | 66.7 | 43.8 |

+| QwenVLPlus | 40.9 | 56.7 | 32 | 33.3 | 36.7 | 59.2 | 36.2 |

+| Monkey-Chat | 40.7 | 50 | 34 | 43.3 | 36 | 51.7 | 35.2 |

+| LLaVA-InternLM2-20B (QLoRA) | 39.4 | 52.5 | 30 | 34.7 | 40 | 54.2 | 33.3 |

+| Monkey | 38.9 | 55 | 31.3 | 35.3 | 37.3 | 45.8 | 34.8 |

+| CogVLM-17B-Chat | 37.3 | 51.7 | 34 | 36 | 35.3 | 41.7 | 31.4 |

+| Qwen-VL-Chat | 37 | 49.2 | 35.3 | 28 | 31.3 | 54.2 | 31.9 |

+| LLaVA-v1.5-13B | 36.9 | 49.2 | 24 | 37.3 | 33.3 | 50.8 | 33.3 |

+| LLaVA-InternLM-7B (QLoRA) | 36.9 | 44.2 | 32 | 29.3 | 38.7 | 46.7 | 34.8 |

+| ShareGPT4V-7B | 36.6 | 50 | 28.7 | 26 | 37.3 | 49.2 | 34.3 |

+| TransCore-M | 36.4 | 45.8 | 33.3 | 28.7 | 38 | 51.7 | 29 |

+| SharedCaptioner | 36.3 | 44.2 | 28.7 | 29.3 | 37.3 | 45.8 | 36.2 |

+| LLaVA-v1.5-7B | 36.2 | 45.8 | 26 | 34 | 32.7 | 47.5 | 35.7 |

+| InternLM-XComposer-VL | 35.6 | 45.8 | 28.7 | 22.7 | 30.7 | 52.5 | 37.6 |

+| LLaVA-v1.5-13B (QLoRA) | 35.2 | 40.8 | 30.7 | 27.3 | 35.3 | 44.2 | 35.7 |

+| EMU2-Chat | 35 | 44.2 | 33.3 | 32 | 32 | 41.7 | 31.4 |

+| ShareGPT4V-13B | 34.8 | 45.8 | 26 | 30.7 | 34.7 | 46.7 | 31 |

+| mPLUG-Owl2 | 34.7 | 47.5 | 26 | 21.3 | 38 | 50 | 31.9 |

+| LLaVA-v1.5-7B (QLoRA) | 33.7 | 48.3 | 23.3 | 30.7 | 32.7 | 45.8 | 28.6 |

+| InstructBLIP-13B | 33.2 | 37.5 | 30 | 32.7 | 30 | 36.7 | 33.8 |

+| PandaGPT-13B | 32.9 | 42.5 | 36 | 30.7 | 30 | 43.3 | 22.9 |

+| LLaVA-v1-7B | 32.3 | 31.7 | 26 | 31.3 | 32.7 | 35.8 | 35.7 |

+| InstructBLIP-7B | 30.6 | 38.3 | 28.7 | 22 | 30.7 | 39.2 | 28.6 |

+| VisualGLM | 29.9 | 30.8 | 27.3 | 28.7 | 29.3 | 40.8 | 26.2 |

+| Qwen-VL | 29.6 | 45 | 18.7 | 26.7 | 32.7 | 42.5 | 21 |

+| OpenFlamingo v2 | 28.8 | 27.5 | 30.7 | 29.3 | 28.7 | 33.3 | 25.2 |

+| MiniGPT-4-v1-13B | 26.3 | 31.7 | 20.7 | 28 | 25.3 | 35 | 21.9 |

+| Frequent Choice | 25.8 | 26.7 | 28.4 | 24 | 24.4 | 25.2 | 26.5 |

+| MiniGPT-4-v2 | 25 | 27.5 | 23.3 | 22 | 27.3 | 32.5 | 21 |

+| IDEFICS-80B-Instruct | 24 | 39.2 | 18 | 20 | 22 | 46.7 | 11 |

+| MiniGPT-4-v1-7B | 23.6 | 33.3 | 28.7 | 19.3 | 18 | 15 | 26.2 |

+| IDEFICS-9B-Instruct | 18.4 | 22.5 | 11.3 | 17.3 | 21.3 | 30 | 13.3 |

\ No newline at end of file
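The `Frequent Choice` row is a non-learning baseline. The sketch below assumes it means always predicting the most common ground-truth option, computed separately for each discipline. The helper names and the `(discipline, answer)` input layout are hypothetical.

```python
from collections import Counter, defaultdict


def frequent_choice_accuracy(answers: list[str]) -> float:
    """Accuracy (%) of always predicting the most common ground-truth option."""
    _, count = Counter(answers).most_common(1)[0]
    return 100.0 * count / len(answers)


def frequent_choice_by_group(samples: list[tuple[str, str]]) -> dict[str, float]:
    """Apply the baseline separately to each (discipline, answer) group."""
    groups: dict[str, list[str]] = defaultdict(list)
    for discipline, answer in samples:
        groups[discipline].append(answer)
    return {name: frequent_choice_accuracy(answers) for name, answers in groups.items()}
```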

diff --git a/results/MMVet.md b/results/MMVet.md

index 1d61f1dfc..2bb6d9860 100644

--- a/results/MMVet.md

+++ b/results/MMVet.md

@@ -2,35 +2,42 @@

> - In MMVet Evaluation, we use GPT-4-Turbo (gpt-4-1106-preview) as the judge LLM to assign scores to the VLM outputs. We only perform the evaluation once, since the originally reported results of multiple evaluation passes show limited variance.

> - No specific prompt template is adopted for **ALL VLMs**.

+> - We also provide performance on the [**Official Leaderboard**](https://paperswithcode.com/sota/visual-question-answering-on-mm-vet) for applicable models. Those results were obtained with the GPT-4-0314 evaluator (which has been deprecated for new users).

### MMVet Scores

-| Model | ocr | math | spat | rec | know | gen | Overall | [**Overall (Official)**](https://paperswithcode.com/sota/visual-question-answering-on-mm-vet) |

-| :---------------------------- | ---: | ---: | ---: | ---: | ---: | ---: | ------: | -----------------------------------------------------------: |

-| GeminiProVision | 63.6 | 41.5 | 61.2 | 59.8 | 51 | 48 | 59.2 | 64.3±0.4 |

-| GPT-4v (detail: low) | 59.4 | 61.2 | 52.5 | 59.7 | 48 | 46.5 | 56.8 | 60.2±0.3 |

-| CogVLM-17B-Chat | 46.4 | 10.8 | 46.1 | 64.7 | 52.4 | 50.6 | 54.5 ||

-| Qwen-VL-Chat | 37.2 | 22.3 | 42.8 | 52.5 | 45.4 | 40.3 | 47.3 | N/A |

-| IDEFICS-80B-Instruct | 29.9 | 15 | 30.7 | 45.6 | 38.6 | 37.1 | 39.7 | N/A |

-| LLaVA-v1.5-13B | 28.8 | 11.5 | 31.5 | 42 | 23.1 | 23 | 38.3 | 36.3±0.2 |

-| LLaVA-v1.5-13B (QLoRA, XTuner) | 31.3 | 15 | 28 | 46.3 | 25.6 | 27.3 | 35.9 | N/A |

-| mPLUG-Owl2 | 29.5 | 7.7 | 32.1 | 47.3 | 23.8 | 20.9 | 35.7 | 36.3±0.1 |

-| InternLM-XComposer-VL | 21.8 | 3.8 | 24.7 | 43.1 | 28.9 | 27.5 | 35.2 | N/A |

-| ShareGPT4V-7B | 30.2 | 18.5 | 30 | 36.1 | 20.2 | 18.1 | 34.7 | 37.6 |

-| TransCore-M | 27.3 | 15.4 | 32.7 | 36.7 | 23 | 23.5 | 33.9 | N/A |

-| InstructBLIP-7B | 25.5 | 11.5 | 23.5 | 39.3 | 24.3 | 23.6 | 33.1 | 26.2±0.2 |

-| LLaVA-v1.5-7B | 25 | 7.7 | 26.3 | 36.9 | 22 | 21.5 | 32.7 | 31.1±0.2 |

-| LLaVA-InternLM-7B (QLoRA) | 29.2 | 7.7 | 27.5 | 41.1 | 21.7 | 18.5 | 32.4 | N/A |

-| LLaVA-v1.5-7B (QLoRA, XTuner) | 28.2 | 11.5 | 26.8 | 41.1 | 21.7 | 17 | 32.2 | N/A |

-| InstructBLIP-13B | 25.4 | 11.2 | 26.9 | 33.4 | 19 | 18.2 | 30.1 | 25.6±0.3 |

-| SharedCaptioner | 26 | 11.2 | 31.1 | 39.5 | 17.1 | 12 | 30.1 |

-| IDEFICS-9B-Instruct | 21.7 | 11.5 | 22.4 | 34.6 | 27.4 | 26.9 | 30 | N/A |

-| LLaVA-v1-7B | 19 | 11.5 | 25.6 | 31.4 | 18.1 | 16.2 | 27.4 | 23.8±0.6 |

-| OpenFlamingo v2 | 19.5 | 7.7 | 21.7 | 24.7 | 21.7 | 19 | 23.3 | 24.8±0.2 |

-| PandaGPT-13B | 6.8 | 6.5 | 16.5 | 26.3 | 13.7 | 13.9 | 19.6 | N/A |

-| MiniGPT-4-v1-13B | 10.3 | 7.7 | 12.5 | 19.9 | 14.9 | 13.8 | 16.9 | 24.4±0.4 |

-| MiniGPT-4-v1-7B | 9.2 | 3.8 | 10.1 | 19.4 | 13.3 | 12.5 | 15.6 | 22.1±0.1 |

-| VisualGLM | 8.5 | 6.5 | 9.1 | 18 | 8.1 | 7.1 | 14.8 | N/A |

-| Qwen-VL | 7.4 | 0 | 3.9 | 16.5 | 18.6 | 18.1 | 13 | N/A |

-| MiniGPT-4-v2 | 7.1 | 7.3 | 9.6 | 12.2 | 9.2 | 8 | 10.5 | N/A |

+| Model | ocr | math | spat | rec | know | gen | Overall (GPT-4-Turbo) | Overall (Official) |

+|:----------------------------|------:|-------:|-------:|------:|-------:|------:|------------------------:|:---------------------|

+| GeminiProVision | 63.6 | 41.5 | 61.2 | 59.8 | 51 | 48 | 59.2 | 64.3±0.4 |

+| GPT-4v (detail: low) | 59.4 | 61.2 | 52.5 | 59.7 | 48 | 46.5 | 56.8 | 60.2±0.3 |

+| QwenVLPlus | 59 | 45.8 | 48.7 | 58.4 | 49.2 | 49.3 | 55.7 | N/A |

+| CogVLM-17B-Chat | 46.4 | 10.8 | 46.1 | 64.7 | 52.4 | 50.6 | 54.5 | N/A |

+| Qwen-VL-Chat | 37.2 | 22.3 | 42.8 | 52.5 | 45.4 | 40.3 | 47.3 | N/A |

+| IDEFICS-80B-Instruct | 29.9 | 15 | 30.7 | 45.6 | 38.6 | 37.1 | 39.7 | N/A |

+| ShareGPT4V-13B | 37.3 | 18.8 | 39.1 | 45.2 | 23.7 | 22.4 | 39.2 | 43.1 |

+| TransCore-M | 35.8 | 11.2 | 36.8 | 47.3 | 26.1 | 27.3 | 38.8 | N/A |

+| LLaVA-v1.5-13B | 28.8 | 11.5 | 31.5 | 42 | 23.1 | 23 | 38.3 | 36.3±0.2 |

+| Monkey | 30.9 | 7.7 | 33.6 | 50.3 | 21.4 | 16.5 | 37.5 | N/A |

+| LLaVA-InternLM2-20B (QLoRA) | 30.6 | 7.7 | 32.4 | 49.1 | 29.2 | 31.9 | 37.2 | N/A |

+| LLaVA-v1.5-13B (QLoRA) | 31.3 | 15 | 28 | 46.3 | 25.6 | 27.3 | 35.9 | N/A |

+| mPLUG-Owl2 | 29.5 | 7.7 | 32.1 | 47.3 | 23.8 | 20.9 | 35.7 | 36.3±0.1 |

+| InternLM-XComposer-VL | 21.8 | 3.8 | 24.7 | 43.1 | 28.9 | 27.5 | 35.2 | N/A |

+| ShareGPT4V-7B | 30.2 | 18.5 | 30 | 36.1 | 20.2 | 18.1 | 34.7 | 37.6 |

+| InstructBLIP-7B | 25.5 | 11.5 | 23.5 | 39.3 | 24.3 | 23.6 | 33.1 | 26.2±0.2 |

+| Monkey-Chat | 26.9 | 3.8 | 28 | 44.5 | 17.3 | 13 | 33 | N/A |

+| LLaVA-v1.5-7B | 25 | 7.7 | 26.3 | 36.9 | 22 | 21.5 | 32.7 | 31.1±0.2 |

+| LLaVA-InternLM-7B (QLoRA) | 29.2 | 7.7 | 27.5 | 41.1 | 21.7 | 18.5 | 32.4 | N/A |

+| LLaVA-v1.5-7B (QLoRA) | 28.2 | 11.5 | 26.8 | 41.1 | 21.7 | 17 | 32.2 | N/A |

+| EMU2-Chat | 30.1 | 11.5 | 31.7 | 38.4 | 16 | 11.7 | 31 | 48.5 |

+| SharedCaptioner | 26 | 11.2 | 31.1 | 39.5 | 17.1 | 12 | 30.1 | N/A |

+| InstructBLIP-13B | 25.4 | 11.2 | 26.9 | 33.4 | 19 | 18.2 | 30.1 | 25.6±0.3 |

+| IDEFICS-9B-Instruct | 21.7 | 11.5 | 22.4 | 34.6 | 27.4 | 26.9 | 30 | N/A |

+| LLaVA-v1-7B | 19 | 11.5 | 25.6 | 31.4 | 18.1 | 16.2 | 27.4 | 23.8±0.6 |

+| OpenFlamingo v2 | 19.5 | 7.7 | 21.7 | 24.7 | 21.7 | 19 | 23.3 | 24.8±0.2 |

+| PandaGPT-13B | 6.8 | 6.5 | 16.5 | 26.3 | 13.7 | 13.9 | 19.6 | N/A |

+| MiniGPT-4-v1-13B | 10.3 | 7.7 | 12.5 | 19.9 | 14.9 | 13.8 | 16.9 | 24.4±0.4 |

+| MiniGPT-4-v1-7B | 9.2 | 3.8 | 10.1 | 19.4 | 13.3 | 12.5 | 15.6 | 22.1±0.1 |

+| VisualGLM | 8.5 | 6.5 | 9.1 | 18 | 8.1 | 7.1 | 14.8 | N/A |

+| Qwen-VL | 7.4 | 0 | 3.9 | 16.5 | 18.6 | 18.1 | 13 | N/A |

+| MiniGPT-4-v2 | 7.1 | 7.3 | 9.6 | 12.2 | 9.2 | 8 | 10.5 | N/A |
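MM-Vet tags each sample with one or more capabilities (the `ocr`, `math`, `spat`, `rec`, `know` and `gen` columns), and the judge assigns each output a score. The sketch below shows one way to aggregate these scores. It assumes judge scores in [0, 1] and capability scores averaged over the samples that require that capability. `mmvet_scores` and the input layout are hypothetical. Because one sample can count toward several capabilities, `Overall` is not a simple average of the capability columns.

```python
from collections import defaultdict


def mmvet_scores(samples: list[tuple[set[str], float]]) -> dict[str, float]:
    """Aggregate (capabilities, judge_score) pairs into percentages.

    judge_score is assumed to lie in [0, 1]; a sample contributes to every
    capability it is tagged with, and once to "Overall".
    """
    per_capability: dict[str, list[float]] = defaultdict(list)
    for capabilities, score in samples:
        for capability in capabilities:
            per_capability[capability].append(score)
    result = {cap: 100.0 * sum(s) / len(s) for cap, s in per_capability.items()}
    result["Overall"] = 100.0 * sum(score for _, score in samples) / len(samples)
    return result
```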

diff --git a/results/MathVista.md b/results/MathVista.md

index 929fac489..98a97c891 100644

--- a/results/MathVista.md

+++ b/results/MathVista.md

@@ -9,33 +9,38 @@

## Evaluation Results

-| Model | ALL | SCI | TQA | NUM | ARI | VQA | GEO | ALG | GPS | MWP | LOG | FQA | STA |

-|:------------------------------|------:|------:|------:|------:|------:|------:|------:|------:|------:|------:|------:|------:|------:|

-| **Human (High School)** | 60.3 | 64.9 | 63.2 | 53.8 | 59.2 | 55.9 | 51.4 | 50.9 | 48.4 | 73 | 40.7 | 59.7 | 63.9 |

-| GPT-4v (detail: low) | 47.8 | 63.9 | 67.1 | 22.9 | 45.9 | 38.5 | 49.8 | 53 | 49.5 | 57.5 | 18.9 | 34.6 | 46.5 |

-| GeminiProVision | 45.8 | 58.2 | 60.8 | 27.1 | 41.9 | 40.2 | 39.7 | 42.3 | 38.5 | 45.7 | 10.8 | 46.5 | 52.8 |

-| CogVLM-17B-Chat | 34.7 | 51.6 | 44.3 | 23.6 | 30.9 | 36.3 | 26.8 | 28.1 | 26.4 | 26.9 | 16.2 | 39.8 | 42.9 |

-| Qwen-VL-Chat | 33.8 | 41.8 | 39.2 | 24.3 | 28.3 | 33 | 28.5 | 30.2 | 29.8 | 25.8 | 13.5 | 39.8 | 41.5 |

-| InternLM-XComposer-VL | 29.5 | 37.7 | 37.3 | 27.8 | 28.6 | 34.1 | 31.8 | 28.1 | 28.8 | 29.6 | 13.5 | 22.3 | 22.3 |

-| SharedCaptioner | 29 | 37.7 | 37.3 | 35.4 | 28.3 | 33 | 25.9 | 23.8 | 22.1 | 36.6 | 16.2 | 21.6 | 20.9 |

-| LLaVA-v1.5-13B | 26.4 | 37.7 | 38.6 | 22.9 | 24.9 | 32.4 | 22.6 | 24.2 | 22.6 | 18.8 | 21.6 | 23.4 | 23.6 |

-| LLaVA-InternLM-7B (QLoRA) | 26.3 | 32 | 34.8 | 20.8 | 22.4 | 30.2 | 27.6 | 28.1 | 27.9 | 21 | 24.3 | 21.2 | 19.6 |

-| IDEFICS-80B-Instruct | 26.2 | 37.7 | 34.8 | 22.2 | 25.2 | 33 | 23.4 | 22.8 | 23.1 | 21.5 | 18.9 | 22.3 | 21.3 |

-| LLaVA-v1.5-13B (QLoRA, XTuner) | 26.2 | 44.3 | 39.2 | 20.1 | 24.1 | 32.4 | 21.3 | 22.4 | 22.1 | 18.8 | 18.9 | 22.7 | 22.6 |

-| ShareGPT4V-7B | 25.8 | 41 | 38.6 | 19.4 | 25.5 | 36.3 | 19.7 | 21.4 | 20.2 | 16.1 | 13.5 | 22.3 | 21.6 |

-| TransCore-M | 25.4 | 41 | 44.3 | 19.4 | 24.4 | 34.1 | 20.9 | 24.2 | 20.2 | 17.2 | 13.5 | 18.2 | 19.3 |

-| mPLUG-Owl2 | 25.3 | 44.3 | 41.8 | 18.8 | 23.5 | 31.8 | 18.8 | 20.3 | 17.8 | 16.7 | 13.5 | 23 | 23.9 |

-| PandaGPT-13B | 24.6 | 36.1 | 30.4 | 17.4 | 21 | 27.4 | 23.8 | 23.8 | 25.5 | 18.8 | 16.2 | 22.7 | 21.9 |

-| LLaVA-v1.5-7B (QLoRA, XTuner) | 24.2 | 39.3 | 36.1 | 17.4 | 22.1 | 30.2 | 21.3 | 21.4 | 21.6 | 16.1 | 24.3 | 20.8 | 20.3 |

-| InstructBLIP-7B | 23.7 | 33.6 | 31.6 | 13.9 | 23.5 | 29.6 | 19.7 | 20.6 | 20.2 | 15.6 | 13.5 | 23.4 | 21.3 |

-| LLaVA-v1-7B | 23.7 | 32.8 | 34.2 | 13.9 | 20.7 | 28.5 | 22.2 | 24.6 | 24 | 13.4 | 10.8 | 21.2 | 19.9 |

-| LLaVA-v1.5-7B | 23.6 | 33.6 | 36.7 | 11.1 | 21 | 28.5 | 18.8 | 23.1 | 19.2 | 14.5 | 13.5 | 22.3 | 21.6 |

-| MiniGPT-4-v2 | 22.9 | 29.5 | 32.3 | 13.2 | 17 | 25.7 | 22.6 | 26.7 | 24.5 | 10.8 | 16.2 | 22.7 | 20.3 |

-| VisualGLM | 21.5 | 36.9 | 29.7 | 15.3 | 18.1 | 30.2 | 22.2 | 22.8 | 24 | 7 | 2.7 | 19 | 18.6 |

-| InstructBLIP-13B | 21.5 | 28.7 | 27.8 | 19.4 | 21.5 | 31.8 | 17.6 | 18.5 | 18.3 | 13.4 | 13.5 | 19 | 17.9 |

-| IDEFICS-9B-Instruct | 20.4 | 29.5 | 31 | 13.2 | 17.8 | 29.6 | 15.1 | 18.9 | 15.9 | 8.1 | 13.5 | 20.1 | 18.6 |

-| MiniGPT-4-v1-13B | 20.4 | 27 | 24.7 | 9 | 18.1 | 27.4 | 20.9 | 22.8 | 22.6 | 9.7 | 10.8 | 19 | 16.9 |

-| MiniGPT-4-v1-7B | 20.2 | 27 | 29.1 | 7.6 | 16.7 | 21.8 | 20.9 | 23.1 | 22.1 | 14 | 5.4 | 16.7 | 17.3 |

-| OpenFlamingo v2 | 18.6 | 22.1 | 24.7 | 5.6 | 16.4 | 24 | 21.3 | 23.8 | 23.6 | 8.1 | 10.8 | 14.9 | 13.3 |

-| **Random Chance** | 17.9 | 15.8 | 23.4 | 8.8 | 13.8 | 24.3 | 22.7 | 25.8 | 24.1 | 4.5 | 13.4 | 15.5 | 14.3 |

-| Qwen-VL | 15.5 | 34.4 | 29.7 | 10.4 | 12.2 | 22.9 | 9.6 | 10.7 | 9.1 | 5.4 | 16.2 | 14.1 | 11.6 |

+| Model | ALL | SCI | TQA | NUM | ARI | VQA | GEO | ALG | GPS | MWP | LOG | FQA | STA |

+|:----------------------------|------:|------:|------:|------:|------:|------:|------:|------:|------:|------:|------:|------:|------:|

+| **Human (High School)** | 60.3 | 64.9 | 63.2 | 53.8 | 59.2 | 55.9 | 51.4 | 50.9 | 48.4 | 73 | 40.7 | 59.7 | 63.9 |

+| GPT-4v (detail: low) | 47.8 | 63.9 | 67.1 | 22.9 | 45.9 | 38.5 | 49.8 | 53 | 49.5 | 57.5 | 18.9 | 34.6 | 46.5 |

+| GeminiProVision | 45.8 | 58.2 | 60.8 | 27.1 | 41.9 | 40.2 | 39.7 | 42.3 | 38.5 | 45.7 | 10.8 | 46.5 | 52.8 |

+| Monkey-Chat | 34.8 | 48.4 | 42.4 | 22.9 | 29.7 | 33.5 | 25.9 | 26.3 | 26.9 | 28.5 | 13.5 | 41.6 | 41.5 |

+| CogVLM-17B-Chat | 34.7 | 51.6 | 44.3 | 23.6 | 30.9 | 36.3 | 26.8 | 28.1 | 26.4 | 26.9 | 16.2 | 39.8 | 42.9 |

+| Qwen-VL-Chat | 33.8 | 41.8 | 39.2 | 24.3 | 28.3 | 33 | 28.5 | 30.2 | 29.8 | 25.8 | 13.5 | 39.8 | 41.5 |

+| Monkey | 32.5 | 38.5 | 36.1 | 21.5 | 28.6 | 35.2 | 26.8 | 27.4 | 27.4 | 22 | 18.9 | 39.8 | 38.9 |

+| EMU2-Chat | 30 | 36.9 | 36.7 | 25 | 30.6 | 36.3 | 31.4 | 29.9 | 30.8 | 30.1 | 8.1 | 21.2 | 23.6 |

+| InternLM-XComposer-VL | 29.5 | 37.7 | 37.3 | 27.8 | 28.6 | 34.1 | 31.8 | 28.1 | 28.8 | 29.6 | 13.5 | 22.3 | 22.3 |

+| SharedCaptioner | 29 | 37.7 | 37.3 | 35.4 | 28.3 | 33 | 25.9 | 23.8 | 22.1 | 36.6 | 16.2 | 21.6 | 20.9 |

+| ShareGPT4V-13B | 27.8 | 42.6 | 43.7 | 19.4 | 26.6 | 31.3 | 28.5 | 28.1 | 26.9 | 23.7 | 16.2 | 19.7 | 22.3 |

+| TransCore-M | 27.8 | 43.4 | 44.9 | 20.8 | 28.6 | 36.9 | 24.3 | 25.6 | 23.6 | 21.5 | 8.1 | 19.3 | 22.3 |

+| LLaVA-v1.5-13B | 26.4 | 37.7 | 38.6 | 22.9 | 24.9 | 32.4 | 22.6 | 24.2 | 22.6 | 18.8 | 21.6 | 23.4 | 23.6 |

+| LLaVA-InternLM-7B (QLoRA) | 26.3 | 32 | 34.8 | 20.8 | 22.4 | 30.2 | 27.6 | 28.1 | 27.9 | 21 | 24.3 | 21.2 | 19.6 |

+| LLaVA-v1.5-13B (QLoRA) | 26.2 | 44.3 | 39.2 | 20.1 | 24.1 | 32.4 | 21.3 | 22.4 | 22.1 | 18.8 | 18.9 | 22.7 | 22.6 |

+| IDEFICS-80B-Instruct | 26.2 | 37.7 | 34.8 | 22.2 | 25.2 | 33 | 23.4 | 22.8 | 23.1 | 21.5 | 18.9 | 22.3 | 21.3 |

+| ShareGPT4V-7B | 25.8 | 41 | 38.6 | 19.4 | 25.5 | 36.3 | 19.7 | 21.4 | 20.2 | 16.1 | 13.5 | 22.3 | 21.6 |

+| mPLUG-Owl2 | 25.3 | 44.3 | 41.8 | 18.8 | 23.5 | 31.8 | 18.8 | 20.3 | 17.8 | 16.7 | 13.5 | 23 | 23.9 |

+| PandaGPT-13B | 24.6 | 36.1 | 30.4 | 17.4 | 21 | 27.4 | 23.8 | 23.8 | 25.5 | 18.8 | 16.2 | 22.7 | 21.9 |

+| LLaVA-InternLM2-20B (QLoRA) | 24.6 | 45.1 | 44.3 | 20.8 | 20.7 | 35.8 | 24.3 | 26 | 24 | 9.7 | 16.2 | 16.4 | 15.9 |

+| LLaVA-v1.5-7B (QLoRA) | 24.2 | 39.3 | 36.1 | 17.4 | 22.1 | 30.2 | 21.3 | 21.4 | 21.6 | 16.1 | 24.3 | 20.8 | 20.3 |

+| LLaVA-v1-7B | 23.7 | 32.8 | 34.2 | 13.9 | 20.7 | 28.5 | 22.2 | 24.6 | 24 | 13.4 | 10.8 | 21.2 | 19.9 |

+| InstructBLIP-7B | 23.7 | 33.6 | 31.6 | 13.9 | 23.5 | 29.6 | 19.7 | 20.6 | 20.2 | 15.6 | 13.5 | 23.4 | 21.3 |

+| LLaVA-v1.5-7B | 23.6 | 33.6 | 36.7 | 11.1 | 21 | 28.5 | 18.8 | 23.1 | 19.2 | 14.5 | 13.5 | 22.3 | 21.6 |

+| MiniGPT-4-v2 | 22.9 | 29.5 | 32.3 | 13.2 | 17 | 25.7 | 22.6 | 26.7 | 24.5 | 10.8 | 16.2 | 22.7 | 20.3 |

+| VisualGLM | 21.5 | 36.9 | 29.7 | 15.3 | 18.1 | 30.2 | 22.2 | 22.8 | 24 | 7 | 2.7 | 19 | 18.6 |

+| InstructBLIP-13B | 21.5 | 28.7 | 27.8 | 19.4 | 21.5 | 31.8 | 17.6 | 18.5 | 18.3 | 13.4 | 13.5 | 19 | 17.9 |

+| IDEFICS-9B-Instruct | 20.4 | 29.5 | 31 | 13.2 | 17.8 | 29.6 | 15.1 | 18.9 | 15.9 | 8.1 | 13.5 | 20.1 | 18.6 |

+| MiniGPT-4-v1-13B | 20.4 | 27 | 24.7 | 9 | 18.1 | 27.4 | 20.9 | 22.8 | 22.6 | 9.7 | 10.8 | 19 | 16.9 |

+| MiniGPT-4-v1-7B | 20.2 | 27 | 29.1 | 7.6 | 16.7 | 21.8 | 20.9 | 23.1 | 22.1 | 14 | 5.4 | 16.7 | 17.3 |

+| OpenFlamingo v2 | 18.6 | 22.1 | 24.7 | 5.6 | 16.4 | 24 | 21.3 | 23.8 | 23.6 | 8.1 | 10.8 | 14.9 | 13.3 |

+| **Random Chance** | 17.9 | 15.8 | 23.4 | 8.8 | 13.8 | 24.3 | 22.7 | 25.8 | 24.1 | 4.5 | 13.4 | 15.5 | 14.3 |

+| Qwen-VL | 15.5 | 34.4 | 29.7 | 10.4 | 12.2 | 22.9 | 9.6 | 10.7 | 9.1 | 5.4 | 16.2 | 14.1 | 11.6 |

diff --git a/results/SEEDBench_IMG.md b/results/SEEDBench_IMG.md

index 2e9941112..1d5265f99 100644

--- a/results/SEEDBench_IMG.md

+++ b/results/SEEDBench_IMG.md

@@ -4,40 +4,45 @@

### SEEDBench_IMG Scores (Vanilla / ChatGPT Answer Extraction / Official Leaderboard)

-- **ExactMatchRate**: The success rate of extracting the option label with heuristic matching.

-- **MatchedAcc:** The overall accuracy across questions with predictions successfully matched, **with exact matching**.

-- **ExactMatchAcc:** The overall accuracy across all questions with **exact matching** (if prediction not successfully matched with an option, count it as wrong).

-- **LLMMatchAcc:** The overall accuracy across all questions with **ChatGPT answer matching**.

-- **OfficialAcc**: SEEDBench_IMG acc on the official leaderboard (if applicable).

+- **Acc w/o. ChatGPT Extraction**: The overall accuracy across all questions with **exact matching** (a prediction that cannot be matched to an option counts as wrong).

+- **Acc w. ChatGPT Extraction**: The overall accuracy across all questions with **ChatGPT answer matching**.

+- **Official**: SEEDBench_IMG accuracy on the official leaderboard (if applicable), with the leaderboard's evaluation method in parentheses.
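The two accuracy columns differ only in what happens when a prediction cannot be matched to an option label. The sketch below illustrates that difference under stated assumptions. The regex heuristic in `exact_match_option` and the `fallback_by_option_text` function are hypothetical stand-ins, not VLMEvalKit's actual matching code. The fallback also replaces the ChatGPT extractor with a plain option-text lookup so that the example runs offline.

```python
import re
from typing import Callable, Dict, List, Optional

# Hypothetical heuristic: accept a prediction only if it starts with a bare
# option label such as "B", "B.", "B)", "B:" or "(B)".
_LABEL_RE = re.compile(r"^\(?([A-D])(?:[.):]|$)")


def exact_match_option(prediction: str) -> Optional[str]:
    """Return the option label if the heuristic matches, else None."""
    m = _LABEL_RE.match(prediction.strip())
    return m.group(1) if m else None


def fallback_by_option_text(prediction: str, choices: Dict[str, str]) -> Optional[str]:
    """Offline stand-in for ChatGPT extraction (no API call): return the
    single option whose text appears in the prediction, else None."""
    hits = [label for label, text in choices.items() if text.lower() in prediction.lower()]
    return hits[0] if len(hits) == 1 else None


def accuracy(predictions: List[str], answers: List[str], choices: List[Dict[str, str]],
             extractor: Optional[Callable[[str, Dict[str, str]], Optional[str]]] = None) -> float:
    """Accuracy in percent over ALL questions; unmatched predictions count as wrong."""
    correct = 0
    for pred, ans, opts in zip(predictions, answers, choices):
        label = exact_match_option(pred)
        if label is None and extractor is not None:
            label = extractor(pred, opts)  # only consulted when exact matching fails
        correct += int(label == ans)
    return 100.0 * correct / len(answers)


opts = {"A": "cat", "B": "dog", "C": "red", "D": "blue"}
preds = ["B.", "The answer is dog", "(C) red", "I am not sure"]
answers = ["B", "B", "C", "A"]
print(accuracy(preds, answers, [opts] * 4))                                     # 50.0
print(accuracy(preds, answers, [opts] * 4, extractor=fallback_by_option_text))  # 75.0
```

Because the fallback runs only when exact matching fails, the extraction-based accuracy can never be lower than the exact-matching one for the same predictions, which is consistent with every row in the table.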

-| Model | ExactMatchRate | MatchedAcc | ExactMatchAcc | LLMMatchAcc | [**Official Leaderboard (Eval Method)**](https://huggingface.co/spaces/AILab-CVC/SEED-Bench_Leaderboard) |

-| :---------------------------- | -------------: | ---------: | ------------: | ----------: | ------------------------------------------------------------ |

-| GPT-4v (detail: low) | 95.58 | 73.92 | 70.65 | 71.59 | 69.1 (Gen) |

-| GeminiProVision | 99.38 | 71.09 | 70.65 | 70.74 | N/A |

-| ShareGPT4V-7B | 100 | 69.25 | 69.25 | 69.25 | 69.7 (Gen) |

-| CogVLM-17B-Chat | 99.93 | 68.76 | 68.71 | 68.76 |

-| LLaVA-v1.5-13B | 100 | 68.11 | 68.11 | 68.11 | 68.2 (Gen) |

-| LLaVA-v1.5-13B (QLoRA, XTuner) | 100 | 67.95 | 67.95 | 67.95 | N/A |

-| TransCore-M | 100 | 66.77 | 66.77 | 66.77 | N/A |

-| LLaVA-v1.5-7B (QLoRA, XTuner) | 100 | 66.39 | 66.39 | 66.39 | N/A |

-| InternLM-XComposer-VL | 100 | 66.07 | 66.07 | 66.07 | 66.9 (PPL) |

-| LLaVA-InternLM-7B (QLoRA) | 100 | 65.75 | 65.75 | 65.75 | N/A |

-| LLaVA-v1.5-7B | 100 | 65.59 | 65.59 | 65.59 | N/A |

-| Qwen-VL-Chat | 96.21 | 66.61 | 64.08 | 64.83 | 65.4 (PPL) |

-| mPLUG-Owl2 | 100 | 64.52 | 64.52 | 64.52 | 64.1 (Not Given) |

-| SharedCaptioner | 84.57 | 64.7 | 54.71 | 61.22 |

-| Qwen-VL | 99.28 | 52.69 | 52.31 | 52.53 | 62.3 (PPL) |

-| IDEFICS-80B-Instruct | 99.84 | 51.96 | 51.88 | 51.96 | 53.2 (Not Given) |

-| LLaVA-v1-7B | 82.51 | 50.18 | 41.41 | 49.48 | N/A |

-| PandaGPT_13B | 82.02 | 48.41 | 39.71 | 47.63 | N/A |

-| InstructBLIP_13B | 99.07 | 47.5 | 47.06 | 47.26 | N/A |

-| VisualGLM | 74.15 | 47.66 | 35.34 | 47.02 | N/A |

-| IDEFICS-9B-Instruct | 99.52 | 44.97 | 44.75 | 45 | 44.5 (Not Given) |

-| InstructBLIP_7B | 88.35 | 49.63 | 43.84 | 44.51 | 58.8 (PPL) |

-| MiniGPT-4-v1-13B | 67.71 | 39.37 | 26.66 | 34.91 | N/A |

-| MiniGPT-4-v1-7B | 69.25 | 33.62 | 23.29 | 31.56 | 47.4 (PPL) |

-| MiniGPT-4-v2 | 81.4 | 31.81 | 25.89 | 29.38 | N/A |

-| OpenFlamingo v2 | 99.84 | 28.83 | 28.79 | 28.84 | 42.7 (PPL) |
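In the removed table, the exact-matching columns are linked: because ExactMatchAcc counts unmatched predictions as wrong, it should equal ExactMatchRate × MatchedAcc / 100 up to rounding. The check below recomputes a few rows copied from that table; the 0.02 tolerance is a choice made here to absorb the two-decimal rounding of the inputs. For rows that were not re-evaluated, the value reappears as **Acc w/o. ChatGPT Extraction** in the new table (e.g. 70.65 for GPT-4v (detail: low)).

```python
# (ExactMatchRate, MatchedAcc, ExactMatchAcc), all in percent, copied from the removed table
ROWS = {
    "GPT-4v (detail: low)": (95.58, 73.92, 70.65),
    "Qwen-VL-Chat": (96.21, 66.61, 64.08),
    "SharedCaptioner": (84.57, 64.7, 54.71),
    "LLaVA-v1-7B": (82.51, 50.18, 41.41),
    "MiniGPT-4-v1-7B": (69.25, 33.62, 23.29),
}


def implied_exact_match_acc(match_rate: float, matched_acc: float) -> float:
    """Accuracy over all questions when unmatched predictions count as wrong."""
    return match_rate * matched_acc / 100.0


for model, (rate, matched, reported) in ROWS.items():
    implied = implied_exact_match_acc(rate, matched)
    print(f"{model}: implied {implied:.2f}, reported {reported:.2f}")
    # Allow for two-decimal rounding of the published inputs.
    assert abs(implied - reported) <= 0.02, model
```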

+| Model | Acc w/o. ChatGPT Extraction | Acc w. ChatGPT Extraction | [**Official (Eval Method)**](https://huggingface.co/spaces/AILab-CVC/SEED-Bench_Leaderboard) |

+|:----------------------------|------------------------------:|----------------------------:|:-----------------------------------------------------------------------------------------------|

+| GPT-4v (detail: low) | 70.65 | 71.59 | 69.1 (Gen) |

+| TransCore-M | 71.22 | 71.22 | N/A |

+| LLaVA-InternLM2-7B (QLoRA) | 71.2 | 71.2 | N/A |

+| GeminiProVision | 70.65 | 70.74 | N/A |

+| ShareGPT4V-13B | 70.66 | 70.66 | 70.8 (Gen) |

+| LLaVA-InternLM2-20B (QLoRA) | 70.24 | 70.24 | N/A |

+| ShareGPT4V-7B | 69.25 | 69.25 | 69.7 (Gen) |

+| EMU2-Chat | 68.35 | 68.89 | N/A |

+| Monkey-Chat | 68.89 | 68.89 | N/A |

+| CogVLM-17B-Chat | 68.71 | 68.76 | N/A |

+| LLaVA-v1.5-13B | 68.11 | 68.11 | 68.2 (Gen) |

+| LLaVA-v1.5-13B (QLoRA) | 67.95 | 67.95 | N/A |

+| LLaVA-v1.5-7B (QLoRA) | 66.39 | 66.39 | N/A |

+| InternLM-XComposer-VL | 66.07 | 66.07 | 66.9 (PPL) |

+| LLaVA-InternLM-7B (QLoRA) | 65.75 | 65.75 | N/A |

+| QwenVLPlus | 55.27 | 65.73 | N/A |

+| LLaVA-v1.5-7B | 65.59 | 65.59 | N/A |

+| Qwen-VL-Chat | 64.08 | 64.83 | 65.4 (PPL) |

+| mPLUG-Owl2 | 64.52 | 64.52 | 64.1 (Not Given) |

+| Monkey | 64.3 | 64.3 | N/A |

+| SharedCaptioner | 54.71 | 61.22 | N/A |

+| Qwen-VL | 52.31 | 52.53 | 62.3 (PPL) |

+| IDEFICS-80B-Instruct | 51.88 | 51.96 | 53.2 (Not Given) |

+| LLaVA-v1-7B | 41.41 | 49.48 | N/A |

+| PandaGPT-13B | 39.71 | 47.63 | N/A |

+| InstructBLIP-13B | 47.06 | 47.26 | N/A |

+| VisualGLM | 35.34 | 47.02 | N/A |

+| IDEFICS-9B-Instruct | 44.75 | 45 | 44.5 (Not Given) |

+| InstructBLIP-7B | 43.84 | 44.51 | 58.8 (PPL) |

+| MiniGPT-4-v1-13B | 26.66 | 34.91 | N/A |

+| MiniGPT-4-v1-7B | 23.29 | 31.56 | 47.4 (PPL) |

+| MiniGPT-4-v2 | 25.89 | 29.38 | N/A |

+| OpenFlamingo v2 | 28.79 | 28.84 | 42.7 (PPL) |

### Comments

diff --git a/results/ScienceQA.md b/results/ScienceQA.md

index f8ba8ef31..7d8cb55ed 100644

--- a/results/ScienceQA.md

+++ b/results/ScienceQA.md

@@ -6,32 +6,35 @@

## ScienceQA Accuracy

-| Model | ScienceQA-Image Val | ScienceQA-Image Test |

-|:------------------------------|----------------------:|-----------------------:|

-| InternLM-XComposer-VL | 88 | 89.8 |

-| Human Performance | | 87.5 |

-| SharedCaptioner | 81 | 82.3 |

-| GPT-4v (detail: low) | 84.6 | 82.1 |

-| GeminiProVision | 80.1 | 81.4 |

-| TransCore-M | 68.9 | 72.1 |

-| LLaVA-v1.5-13B | 69.2 | 72 |

-| LLaVA-v1.5-13B (QLoRA, XTuner) | 68.9 | 70.3 |

-| mPLUG-Owl2 | 69.5 | 69.5 |

-| ShareGPT4V-7B | 68.1 | 69.4 |

-| LLaVA-v1.5-7B | 66.6 | 68.9 |

-| Qwen-VL-Chat | 65.5 | 68.8 |

-| LLaVA-v1.5-7B (QLoRA, XTuner) | 68.8 | 68.7 |

-| LLaVA-InternLM-7B (QLoRA) | 65.3 | 68.4 |

-| CogVLM-17B-Chat | 65.6 | 66.2 |

-| PandaGPT-13B | 60.9 | 63.2 |

-| IDEFICS-80B-Instruct | 59.9 | 61.8 |

-| Qwen-VL | 57.7 | 61.1 |

-| LLaVA-v1-7B | 59.9 | 60.5 |

-| InstructBLIP-13B | 53.3 | 58.3 |

-| VisualGLM | 53.4 | 56.1 |

-| MiniGPT-4-v2 | 54.1 | 54.7 |

-| InstructBLIP-7B | 54.7 | 54.1 |

-| IDEFICS-9B-Instruct | 51.6 | 53.5 |

-| MiniGPT-4-v1-13B | 44.3 | 46 |

-| OpenFlamingo v2 | 45.7 | 44.8 |

-| MiniGPT-4-v1-7B | 39 | 39.6 |

+| Model | ScienceQA-Image Val | ScienceQA-Image Test |

+|:----------------------------|----------------------:|-----------------------:|

+| InternLM-XComposer-VL | 88.0 | 89.8 |

+| Human Performance | N/A | 87.5 |

+| SharedCaptioner | 81.0 | 82.3 |

+| GPT-4v (detail: low) | 84.6 | 82.1 |

+| GeminiProVision | 80.1 | 81.4 |

+| LLaVA-InternLM2-20B (QLoRA) | 72.7 | 73.7 |

+| Monkey | 68.2 | 72.1 |

+| LLaVA-v1.5-13B | 69.2 | 72 |

+| TransCore-M | 68.8 | 71.2 |

+| LLaVA-v1.5-13B (QLoRA) | 68.9 | 70.3 |

+| mPLUG-Owl2 | 69.5 | 69.5 |

+| ShareGPT4V-7B | 68.1 | 69.4 |

+| LLaVA-v1.5-7B | 66.6 | 68.9 |

+| Qwen-VL-Chat | 65.5 | 68.8 |

+| LLaVA-v1.5-7B (QLoRA) | 68.8 | 68.7 |

+| LLaVA-InternLM-7B (QLoRA) | 65.3 | 68.4 |

+| EMU2-Chat | 65.3 | 68.2 |

+| CogVLM-17B-Chat | 65.6 | 66.2 |

+| PandaGPT-13B | 60.9 | 63.2 |

+| IDEFICS-80B-Instruct | 59.9 | 61.8 |

+| Qwen-VL | 57.7 | 61.1 |

+| LLaVA-v1-7B | 59.9 | 60.5 |

+| InstructBLIP-13B | 53.3 | 58.3 |

+| VisualGLM | 53.4 | 56.1 |

+| MiniGPT-4-v2 | 54.1 | 54.7 |

+| InstructBLIP-7B | 54.7 | 54.1 |

+| IDEFICS-9B-Instruct | 51.6 | 53.5 |

+| MiniGPT-4-v1-13B | 44.3 | 46 |

+| OpenFlamingo v2 | 45.7 | 44.8 |

+| MiniGPT-4-v1-7B | 39.0 | 39.6 |

diff --git a/vlmeval/vlm/emu.py b/vlmeval/vlm/emu.py

index 4c6479316..3b7113d42 100644

--- a/vlmeval/vlm/emu.py

+++ b/vlmeval/vlm/emu.py

@@ -12,7 +12,7 @@ def __init__(self,

name,

model_path_map={

"emu2":"BAAI/Emu2",

- "emu2_chat":"BAAI/Emu2_Chat"

+ "emu2_chat":"BAAI/Emu2-Chat"

},

**kwargs):