diff --git a/.dev_scripts/github/update_model_index.py b/.dev_scripts/github/update_model_index.py

index 3c24055060..f6721f7790 100755

--- a/.dev_scripts/github/update_model_index.py

+++ b/.dev_scripts/github/update_model_index.py

@@ -151,6 +151,7 @@ def parse_config_path(path):

'3d_kpt_mview_rgb_img': '3D Keypoint',

'3d_kpt_sview_rgb_vid': '3D Keypoint',

'3d_mesh_sview_rgb_img': '3D Mesh',

+ 'gesture_sview_rgbd_vid': 'Gesture',

None: None

}

task_readable = task2readable.get(task)

diff --git a/configs/_base_/datasets/nvgesture.py b/configs/_base_/datasets/nvgesture.py

new file mode 100644

index 0000000000..7d5a3df7b9

--- /dev/null

+++ b/configs/_base_/datasets/nvgesture.py

@@ -0,0 +1,42 @@

+dataset_info = dict(

+ dataset_name='nvgesture',

+ paper_info=dict(

+ author='Pavlo Molchanov and Xiaodong Yang and Shalini Gupta '

+ 'and Kihwan Kim and Stephen Tyree and Jan Kautz',

+ title='Online Detection and Classification of Dynamic Hand Gestures '

+ 'with Recurrent 3D Convolutional Neural Networks',

+ container='Proceedings of the IEEE Conference on '

+ 'Computer Vision and Pattern Recognition',

+ year='2016',

+ homepage='https://research.nvidia.com/publication/2016-06_online-'

+ 'detection-and-classification-dynamic-hand-gestures-recurrent-3d',

+ ),

+ category_info={

+ 0: 'five fingers move right',

+ 1: 'five fingers move left',

+ 2: 'five fingers move up',

+ 3: 'five fingers move down',

+ 4: 'two fingers move right',

+ 5: 'two fingers move left',

+ 6: 'two fingers move up',

+ 7: 'two fingers move down',

+ 8: 'click',

+ 9: 'beckoned',

+ 10: 'stretch hand',

+ 11: 'shake hand',

+ 12: 'one',

+ 13: 'two',

+ 14: 'three',

+ 15: 'lift up',

+ 16: 'press down',

+ 17: 'push',

+ 18: 'shrink',

+ 19: 'levorotation',

+ 20: 'dextrorotation',

+ 21: 'two fingers prod',

+ 22: 'grab',

+ 23: 'thumbs up',

+ 24: 'OK'

+ },

+ flip_pairs=[(0, 1), (4, 5), (19, 20)],

+ fps=30)

diff --git a/configs/hand/gesture_sview_rgbd_vid/README.md b/configs/hand/gesture_sview_rgbd_vid/README.md

new file mode 100644

index 0000000000..fb5ce51f9a

--- /dev/null

+++ b/configs/hand/gesture_sview_rgbd_vid/README.md

@@ -0,0 +1,7 @@

+# Gesture Recognition

+

+Gesture recognition aims to recognize the hand gestures in the video, such as thumbs up.

+

+## Data preparation

+

+Please follow [DATA Preparation](/docs/en/tasks/2d_hand_gesture.md) to prepare data.

diff --git a/configs/hand/gesture_sview_rgbd_vid/mtut/README.md b/configs/hand/gesture_sview_rgbd_vid/mtut/README.md

new file mode 100644

index 0000000000..80e0e8f0b0

--- /dev/null

+++ b/configs/hand/gesture_sview_rgbd_vid/mtut/README.md

@@ -0,0 +1,8 @@

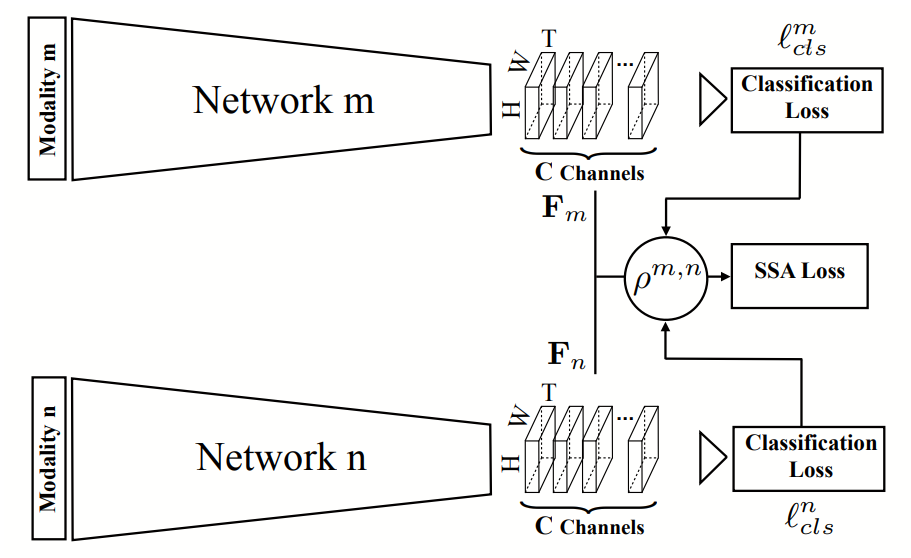

+# Multi-modal Training and Uni-modal Testing (MTUT) for gesture recognition

+

+MTUT method uses multi-modal data in the training phase, such as RGB videos and depth videos.

+For each modality, an I3D network is trained to conduct gesture recognition. The property

+of spatial-temporal semantic alignment across multi-modal data is utilized to supervise the

+learning, in order to improve the performance of each I3D network for a single modality.

+

+In the testing phase, uni-modal data, generally RGB video, is used.

diff --git a/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture.md b/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture.md

new file mode 100644

index 0000000000..297c6b95c6

--- /dev/null

+++ b/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture.md

@@ -0,0 +1,60 @@

+

+

+

+MTUT (CVPR'2019)

+

+```bibtex

+@InProceedings{Abavisani_2019_CVPR,

+ author = {Abavisani, Mahdi and Joze, Hamid Reza Vaezi and Patel, Vishal M.},

+ title = {Improving the Performance of Unimodal Dynamic Hand-Gesture Recognition With Multimodal Training},

+ booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

+ month = {June},

+ year = {2019}

+}

+```

+

+

+

+

+

+

+I3D (CVPR'2017)

+

+```bibtex

+@InProceedings{Carreira_2017_CVPR,

+ author = {Carreira, Joao and Zisserman, Andrew},

+ title = {Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset},

+ booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

+ month = {July},

+ year = {2017}

+}

+```

+

+

+

+

+

+

+NVGesture (CVPR'2016)

+

+```bibtex

+@InProceedings{Molchanov_2016_CVPR,

+ author = {Molchanov, Pavlo and Yang, Xiaodong and Gupta, Shalini and Kim, Kihwan and Tyree, Stephen and Kautz, Jan},

+ title = {Online Detection and Classification of Dynamic Hand Gestures With Recurrent 3D Convolutional Neural Network},

+ booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

+ month = {June},

+ year = {2016}

+}

+```

+

+

+

+Results on NVGesture test set

+

+| Arch | Input Size | fps | bbox | AP_rgb | AP_depth | ckpt | log |

+| :------------------------------------------------------ | :--------: | :-: | :-------: | :----: | :------: | :-----------------------------------------------------: | :----------------------------------------------------: |

+| [I3D+MTUT](/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_bbox_112x112_fps15.py)$^\*$ | 112x112 | 15 | $\\surd$ | 0.725 | 0.730 | [ckpt](https://download.openmmlab.com/mmpose/gesture/mtut/i3d_nvgesture/i3d_nvgesture_bbox_112x112_fps15-363b5956_20220530.pth) | [log](https://download.openmmlab.com/mmpose/gesture/mtut/i3d_nvgesture/i3d_nvgesture_bbox_112x112_fps15-20220530.log.json) |

+| [I3D+MTUT](/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_bbox_224x224_fps30.py) | 224x224 | 30 | $\\surd$ | 0.782 | 0.811 | [ckpt](https://download.openmmlab.com/mmpose/gesture/mtut/i3d_nvgesture/i3d_nvgesture_bbox_224x224_fps30-98a8f288_20220530.pthh) | [log](https://download.openmmlab.com/mmpose/gesture/mtut/i3d_nvgesture/i3d_nvgesture_bbox_224x224_fps30-20220530.log.json) |

+| [I3D+MTUT](/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_224x224_fps30.py) | 224x224 | 30 | $\\times$ | 0.739 | 0.809 | [ckpt](https://download.openmmlab.com/mmpose/gesture/mtut/i3d_nvgesture/i3d_nvgesture_224x224_fps30-b7abf574_20220530.pth) | [log](https://download.openmmlab.com/mmpose/gesture/mtut/i3d_nvgesture/i3d_nvgesture_224x224_fps30-20220530.log.json) |

+

+$^\*$: MTUT supports multi-modal training and uni-modal testing. Model trained with this config can be used to recognize gestures in rgb videos with [inference config](/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_bbox_112x112_fps15_rgb.py).

diff --git a/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture.yml b/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture.yml

new file mode 100644

index 0000000000..26e6f58f78

--- /dev/null

+++ b/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture.yml

@@ -0,0 +1,49 @@

+Collections:

+- Name: MTUT

+ Paper:

+ Title: Improving the Performance of Unimodal Dynamic Hand-Gesture Recognition

+ With Multimodal Training

+ URL: https://openaccess.thecvf.com/content_CVPR_2019/html/Abavisani_Improving_the_Performance_of_Unimodal_Dynamic_Hand-Gesture_Recognition_With_Multimodal_CVPR_2019_paper.html

+ README: https://github.com/open-mmlab/mmpose/blob/master/docs/en/papers/algorithms/mtut.md

+Models:

+- Config: configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_bbox_112x112_fps15.py

+ In Collection: MTUT

+ Metadata:

+ Architecture: &id001

+ - MTUT

+ - I3D

+ Training Data: NVGesture

+ Name: mtut_i3d_nvgesture_bbox_112x112_fps15

+ Results:

+ - Dataset: NVGesture

+ Metrics:

+ AP depth: 0.73

+ AP rgb: 0.725

+ Task: Hand Gesture

+ Weights: https://download.openmmlab.com/mmpose/gesture/mtut/i3d_nvgesture/i3d_nvgesture_bbox_112x112_fps15-363b5956_20220530.pth

+- Config: configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_bbox_224x224_fps30.py

+ In Collection: MTUT

+ Metadata:

+ Architecture: *id001

+ Training Data: NVGesture

+ Name: mtut_i3d_nvgesture_bbox_224x224_fps30

+ Results:

+ - Dataset: NVGesture

+ Metrics:

+ AP depth: 0.811

+ AP rgb: 0.782

+ Task: Hand Gesture

+ Weights: https://download.openmmlab.com/mmpose/gesture/mtut/i3d_nvgesture/i3d_nvgesture_bbox_224x224_fps30-98a8f288_20220530.pthh

+- Config: configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_224x224_fps30.py

+ In Collection: MTUT

+ Metadata:

+ Architecture: *id001

+ Training Data: NVGesture

+ Name: mtut_i3d_nvgesture_224x224_fps30

+ Results:

+ - Dataset: NVGesture

+ Metrics:

+ AP depth: 0.809

+ AP rgb: 0.739

+ Task: Hand Gesture

+ Weights: https://download.openmmlab.com/mmpose/gesture/mtut/i3d_nvgesture/i3d_nvgesture_224x224_fps30-b7abf574_20220530.pth

diff --git a/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_224x224_fps30.py b/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_224x224_fps30.py

new file mode 100644

index 0000000000..4c2e2b400f

--- /dev/null

+++ b/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_224x224_fps30.py

@@ -0,0 +1,128 @@

+_base_ = [

+ '../../../../_base_/default_runtime.py',

+ '../../../../_base_/datasets/nvgesture.py'

+]

+

+checkpoint_config = dict(interval=5)

+evaluation = dict(interval=5, metric='AP', save_best='AP_rgb')

+

+optimizer = dict(

+ type='SGD',

+ lr=1e-2,

+ momentum=0.9,

+)

+optimizer_config = dict(grad_clip=None)

+# learning policy

+lr_config = dict(policy='step', gamma=0.1, step=[30, 50])

+total_epochs = 75

+log_config = dict(interval=10)

+

+custom_hooks_config = [dict(type='ModelSetEpochHook')]

+

+model = dict(

+ type='GestureRecognizer',

+ modality=['rgb', 'depth'],

+ pretrained=dict(

+ rgb='https://github.com/hassony2/kinetics_i3d_pytorch/'

+ 'raw/master/model/model_rgb.pth',

+ depth='https://github.com/hassony2/kinetics_i3d_pytorch/'

+ 'raw/master/model/model_rgb.pth',

+ ),

+ backbone=dict(

+ rgb=dict(

+ type='I3D',

+ in_channels=3,

+ expansion=1,

+ ),

+ depth=dict(

+ type='I3D',

+ in_channels=1,

+ expansion=1,

+ ),

+ ),

+ cls_head=dict(

+ type='MultiModalSSAHead',

+ num_classes=25,

+ ),

+ train_cfg=dict(

+ beta=2,

+ lambda_=5e-3,

+ ssa_start_epoch=61,

+ ),

+ test_cfg=dict(),

+)

+

+data_cfg = dict(

+ video_size=[320, 240],

+ modality=['rgb', 'depth'],

+)

+

+train_pipeline = [

+ dict(type='LoadVideoFromFile'),

+ dict(type='ModalWiseChannelProcess'),

+ dict(type='CropValidClip'),

+ dict(type='TemporalPooling', length=64, ref_fps=30),

+ dict(type='ResizeGivenShortEdge', length=256),

+ dict(type='RandomAlignedSpatialCrop', length=224),

+ dict(type='GestureRandomFlip'),

+ dict(type='MultiModalVideoToTensor'),

+ dict(

+ type='VideoNormalizeTensor',

+ mean=[0.485, 0.456, 0.406],

+ std=[0.229, 0.224, 0.225]),

+ dict(

+ type='Collect', keys=['video', 'label'], meta_keys=['fps',

+ 'modality']),

+]

+

+val_pipeline = [

+ dict(type='LoadVideoFromFile'),

+ dict(type='ModalWiseChannelProcess'),

+ dict(type='CropValidClip'),

+ dict(type='TemporalPooling', length=-1, ref_fps=30),

+ dict(type='ResizeGivenShortEdge', length=256),

+ dict(type='CenterSpatialCrop', length=224),

+ dict(type='MultiModalVideoToTensor'),

+ dict(

+ type='VideoNormalizeTensor',

+ mean=[0.485, 0.456, 0.406],

+ std=[0.229, 0.224, 0.225]),

+ dict(

+ type='Collect', keys=['video', 'label'], meta_keys=['fps',

+ 'modality']),

+]

+

+test_pipeline = val_pipeline

+

+data_root = 'data/nvgesture'

+data = dict(

+ samples_per_gpu=6,

+ workers_per_gpu=2,

+ val_dataloader=dict(samples_per_gpu=6),

+ test_dataloader=dict(samples_per_gpu=6),

+ train=dict(

+ type='NVGestureDataset',

+ ann_file=f'{data_root}/annotations/'

+ 'nvgesture_train_correct_cvpr2016_v2.lst',

+ vid_prefix=f'{data_root}/',

+ data_cfg=data_cfg,

+ pipeline=train_pipeline,

+ dataset_info={{_base_.dataset_info}}),

+ val=dict(

+ type='NVGestureDataset',

+ ann_file=f'{data_root}/annotations/'

+ 'nvgesture_test_correct_cvpr2016_v2.lst',

+ vid_prefix=f'{data_root}/',

+ data_cfg=data_cfg,

+ pipeline=val_pipeline,

+ test_mode=True,

+ dataset_info={{_base_.dataset_info}}),

+ test=dict(

+ type='NVGestureDataset',

+ ann_file=f'{data_root}/annotations/'

+ 'nvgesture_test_correct_cvpr2016_v2.lst',

+ vid_prefix=f'{data_root}/',

+ data_cfg=data_cfg,

+ pipeline=test_pipeline,

+ test_mode=True,

+ dataset_info={{_base_.dataset_info}}))

diff --git a/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_bbox_112x112_fps15.py b/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_bbox_112x112_fps15.py

new file mode 100644

index 0000000000..ae2fe2e960

--- /dev/null

+++ b/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_bbox_112x112_fps15.py

@@ -0,0 +1,136 @@

+_base_ = [

+ '../../../../_base_/default_runtime.py',

+ '../../../../_base_/datasets/nvgesture.py'

+]

+

+checkpoint_config = dict(interval=5)

+evaluation = dict(interval=5, metric='AP', save_best='AP_rgb')

+

+optimizer = dict(

+ type='SGD',

+ lr=1e-1,

+ momentum=0.9,

+)

+optimizer_config = dict(grad_clip=None)

+# learning policy

+lr_config = dict(policy='step', gamma=0.1, step=[30, 60, 90, 110])

+total_epochs = 130

+log_config = dict(interval=10)

+

+custom_hooks_config = [dict(type='ModelSetEpochHook')]

+

+model = dict(

+ type='GestureRecognizer',

+ modality=['rgb', 'depth'],

+ pretrained=dict(

+ rgb='https://github.com/hassony2/kinetics_i3d_pytorch/'

+ 'raw/master/model/model_rgb.pth',

+ depth='https://github.com/hassony2/kinetics_i3d_pytorch/'

+ 'raw/master/model/model_rgb.pth',

+ ),

+ backbone=dict(

+ rgb=dict(

+ type='I3D',

+ in_channels=3,

+ expansion=1,

+ ),

+ depth=dict(

+ type='I3D',

+ in_channels=1,

+ expansion=1,

+ ),

+ ),

+ cls_head=dict(

+ type='MultiModalSSAHead',

+ num_classes=25,

+ avg_pool_kernel=(1, 2, 2),

+ ),

+ train_cfg=dict(

+ beta=2,

+ lambda_=1e-3,

+ ssa_start_epoch=111,

+ ),

+ test_cfg=dict(),

+)

+

+data_root = 'data/nvgesture'

+data_cfg = dict(

+ video_size=[320, 240],

+ modality=['rgb', 'depth'],

+ bbox_file=f'{data_root}/annotations/bboxes.json',

+)

+

+train_pipeline = [

+ dict(type='LoadVideoFromFile'),

+ dict(type='ModalWiseChannelProcess'),

+ dict(type='CropValidClip'),

+ dict(type='TemporalPooling', length=16, ref_fps=15),

+ dict(type='MultiFrameBBoxMerge'),

+ dict(

+ type='ResizedCropByBBox',

+ size=112,

+ scale=(0.8, 1.25),

+ ratio=(0.75, 1.33),

+ shift=0.3),

+ dict(type='GestureRandomFlip'),

+ dict(type='VideoColorJitter', brightness=0.4, contrast=0.3),

+ dict(type='MultiModalVideoToTensor'),

+ dict(

+ type='VideoNormalizeTensor',

+ mean=[0.485, 0.456, 0.406],

+ std=[0.229, 0.224, 0.225]),

+ dict(

+ type='Collect', keys=['video', 'label'], meta_keys=['fps',

+ 'modality']),

+]

+

+val_pipeline = [

+ dict(type='LoadVideoFromFile'),

+ dict(type='ModalWiseChannelProcess'),

+ dict(type='CropValidClip'),

+ dict(type='TemporalPooling', length=-1, ref_fps=15),

+ dict(type='MultiFrameBBoxMerge'),

+ dict(type='ResizedCropByBBox', size=112),

+ dict(type='MultiModalVideoToTensor'),

+ dict(

+ type='VideoNormalizeTensor',

+ mean=[0.485, 0.456, 0.406],

+ std=[0.229, 0.224, 0.225]),

+ dict(

+ type='Collect', keys=['video', 'label'], meta_keys=['fps',

+ 'modality']),

+]

+

+test_pipeline = val_pipeline

+

+data = dict(

+ samples_per_gpu=6,

+ workers_per_gpu=2,

+ val_dataloader=dict(samples_per_gpu=6),

+ test_dataloader=dict(samples_per_gpu=6),

+ train=dict(

+ type='NVGestureDataset',

+ ann_file=f'{data_root}/annotations/'

+ 'nvgesture_train_correct_cvpr2016_v2.lst',

+ vid_prefix=f'{data_root}/',

+ data_cfg=data_cfg,

+ pipeline=train_pipeline,

+ dataset_info={{_base_.dataset_info}}),

+ val=dict(

+ type='NVGestureDataset',

+ ann_file=f'{data_root}/annotations/'

+ 'nvgesture_test_correct_cvpr2016_v2.lst',

+ vid_prefix=f'{data_root}/',

+ data_cfg=data_cfg,

+ pipeline=val_pipeline,

+ test_mode=True,

+ dataset_info={{_base_.dataset_info}}),

+ test=dict(

+ type='NVGestureDataset',

+ ann_file=f'{data_root}/annotations/'

+ 'nvgesture_test_correct_cvpr2016_v2.lst',

+ vid_prefix=f'{data_root}/',

+ data_cfg=data_cfg,

+ pipeline=test_pipeline,

+ test_mode=True,

+ dataset_info={{_base_.dataset_info}}))

diff --git a/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_bbox_112x112_fps15_rgb.py b/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_bbox_112x112_fps15_rgb.py

new file mode 100644

index 0000000000..9777dda67e

--- /dev/null

+++ b/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_bbox_112x112_fps15_rgb.py

@@ -0,0 +1,124 @@

+# Copyright (c) OpenMMLab. All rights reserved.

+_base_ = [

+ '../../../../_base_/default_runtime.py',

+ '../../../../_base_/datasets/nvgesture.py'

+]

+

+checkpoint_config = dict(interval=5)

+evaluation = dict(interval=5, metric='AP', save_best='AP_rgb')

+

+optimizer = dict(

+ type='SGD',

+ lr=1e-1,

+ momentum=0.9,

+)

+optimizer_config = dict(grad_clip=None)

+# learning policy

+lr_config = dict(policy='step', gamma=0.1, step=[30, 60, 90, 110])

+total_epochs = 130

+log_config = dict(interval=10)

+

+custom_hooks_config = [dict(type='ModelSetEpochHook')]

+

+model = dict(

+ type='GestureRecognizer',

+ modality=['rgb'],

+ backbone=dict(rgb=dict(

+ type='I3D',

+ in_channels=3,

+ expansion=1,

+ ), ),

+ cls_head=dict(

+ type='MultiModalSSAHead',

+ num_classes=25,

+ avg_pool_kernel=(1, 2, 2),

+ ),

+ train_cfg=dict(

+ beta=2,

+ lambda_=1e-3,

+ ssa_start_epoch=111,

+ ),

+ test_cfg=dict(),

+)

+

+data_root = 'data/nvgesture'

+data_cfg = dict(

+ video_size=[320, 240],

+ modality=['rgb'],

+ bbox_file=f'{data_root}/annotations/bboxes.json',

+)

+

+train_pipeline = [

+ dict(type='LoadVideoFromFile'),

+ dict(type='ModalWiseChannelProcess'),

+ dict(type='CropValidClip'),

+ dict(type='TemporalPooling', length=16, ref_fps=15),

+ dict(type='MultiFrameBBoxMerge'),

+ dict(

+ type='ResizedCropByBBox',

+ size=112,

+ scale=(0.8, 1.25),

+ ratio=(0.75, 1.33),

+ shift=0.3),

+ dict(type='GestureRandomFlip'),

+ dict(type='VideoColorJitter', brightness=0.4, contrast=0.3),

+ dict(type='MultiModalVideoToTensor'),

+ dict(

+ type='VideoNormalizeTensor',

+ mean=[0.485, 0.456, 0.406],

+ std=[0.229, 0.224, 0.225]),

+ dict(

+ type='Collect', keys=['video', 'label'], meta_keys=['fps',

+ 'modality']),

+]

+

+val_pipeline = [

+ dict(type='LoadVideoFromFile'),

+ dict(type='ModalWiseChannelProcess'),

+ dict(type='CropValidClip'),

+ dict(type='TemporalPooling', length=-1, ref_fps=15),

+ dict(type='MultiFrameBBoxMerge'),

+ dict(type='ResizedCropByBBox', size=112),

+ dict(type='MultiModalVideoToTensor'),

+ dict(

+ type='VideoNormalizeTensor',

+ mean=[0.485, 0.456, 0.406],

+ std=[0.229, 0.224, 0.225]),

+ dict(

+ type='Collect', keys=['video', 'label'], meta_keys=['fps',

+ 'modality']),

+]

+

+test_pipeline = val_pipeline

+

+data = dict(

+ samples_per_gpu=6,

+ workers_per_gpu=2,

+ val_dataloader=dict(samples_per_gpu=6),

+ test_dataloader=dict(samples_per_gpu=6),

+ train=dict(

+ type='NVGestureDataset',

+ ann_file=f'{data_root}/annotations/'

+ 'nvgesture_train_correct_cvpr2016_v2.lst',

+ vid_prefix=f'{data_root}/',

+ data_cfg=data_cfg,

+ pipeline=train_pipeline,

+ dataset_info={{_base_.dataset_info}}),

+ val=dict(

+ type='NVGestureDataset',

+ ann_file=f'{data_root}/annotations/'

+ 'nvgesture_test_correct_cvpr2016_v2.lst',

+ vid_prefix=f'{data_root}/',

+ data_cfg=data_cfg,

+ pipeline=val_pipeline,

+ test_mode=True,

+ dataset_info={{_base_.dataset_info}}),

+ test=dict(

+ type='NVGestureDataset',

+ ann_file=f'{data_root}/annotations/'

+ 'nvgesture_test_correct_cvpr2016_v2.lst',

+ vid_prefix=f'{data_root}/',

+ data_cfg=data_cfg,

+ pipeline=test_pipeline,

+ test_mode=True,

+ dataset_info={{_base_.dataset_info}}))

diff --git a/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_bbox_224x224_fps30.py b/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_bbox_224x224_fps30.py

new file mode 100644

index 0000000000..8a00d1e9f4

--- /dev/null

+++ b/configs/hand/gesture_sview_rgbd_vid/mtut/nvgesture/i3d_nvgesture_bbox_224x224_fps30.py

@@ -0,0 +1,134 @@

+_base_ = [

+ '../../../../_base_/default_runtime.py',

+ '../../../../_base_/datasets/nvgesture.py'

+]

+

+checkpoint_config = dict(interval=5)

+evaluation = dict(interval=5, metric='AP', save_best='AP_rgb')

+

+optimizer = dict(

+ type='SGD',

+ lr=1e-2,

+ momentum=0.9,

+)

+optimizer_config = dict(grad_clip=None)

+# learning policy

+lr_config = dict(policy='step', gamma=0.1, step=[30, 50])

+total_epochs = 75

+log_config = dict(interval=10)

+

+custom_hooks_config = [dict(type='ModelSetEpochHook')]

+

+model = dict(

+ type='GestureRecognizer',

+ modality=['rgb', 'depth'],

+ pretrained=dict(

+ rgb='https://github.com/hassony2/kinetics_i3d_pytorch/'

+ 'raw/master/model/model_rgb.pth',

+ depth='https://github.com/hassony2/kinetics_i3d_pytorch/'

+ 'raw/master/model/model_rgb.pth',

+ ),

+ backbone=dict(

+ rgb=dict(

+ type='I3D',

+ in_channels=3,

+ expansion=1,

+ ),

+ depth=dict(

+ type='I3D',

+ in_channels=1,

+ expansion=1,

+ ),

+ ),

+ cls_head=dict(

+ type='MultiModalSSAHead',

+ num_classes=25,

+ ),

+ train_cfg=dict(

+ beta=2,

+ lambda_=5e-3,

+ ssa_start_epoch=61,

+ ),

+ test_cfg=dict(),

+)

+

+data_root = 'data/nvgesture'

+data_cfg = dict(

+ video_size=[320, 240],

+ modality=['rgb', 'depth'],

+ bbox_file=f'{data_root}/annotations/bboxes.json',

+)

+

+train_pipeline = [

+ dict(type='LoadVideoFromFile'),

+ dict(type='ModalWiseChannelProcess'),

+ dict(type='CropValidClip'),

+ dict(type='TemporalPooling', length=64, ref_fps=30),

+ dict(type='MultiFrameBBoxMerge'),

+ dict(

+ type='ResizedCropByBBox',

+ size=224,

+ scale=(0.8, 1.25),

+ ratio=(0.75, 1.33),

+ shift=0.3),

+ dict(type='GestureRandomFlip'),

+ dict(type='MultiModalVideoToTensor'),

+ dict(

+ type='VideoNormalizeTensor',

+ mean=[0.485, 0.456, 0.406],

+ std=[0.229, 0.224, 0.225]),

+ dict(

+ type='Collect', keys=['video', 'label'], meta_keys=['fps',

+ 'modality']),

+]

+

+val_pipeline = [

+ dict(type='LoadVideoFromFile'),

+ dict(type='ModalWiseChannelProcess'),

+ dict(type='CropValidClip'),

+ dict(type='TemporalPooling', length=-1, ref_fps=30),

+ dict(type='MultiFrameBBoxMerge'),

+ dict(type='ResizedCropByBBox', size=224),

+ dict(type='MultiModalVideoToTensor'),

+ dict(

+ type='VideoNormalizeTensor',

+ mean=[0.485, 0.456, 0.406],

+ std=[0.229, 0.224, 0.225]),

+ dict(

+ type='Collect', keys=['video', 'label'], meta_keys=['fps',

+ 'modality']),

+]

+

+test_pipeline = val_pipeline

+

+data = dict(

+ samples_per_gpu=6,

+ workers_per_gpu=2,

+ val_dataloader=dict(samples_per_gpu=6),

+ test_dataloader=dict(samples_per_gpu=6),

+ train=dict(

+ type='NVGestureDataset',

+ ann_file=f'{data_root}/annotations/'

+ 'nvgesture_train_correct_cvpr2016_v2.lst',

+ vid_prefix=f'{data_root}/',

+ data_cfg=data_cfg,

+ pipeline=train_pipeline,

+ dataset_info={{_base_.dataset_info}}),

+ val=dict(

+ type='NVGestureDataset',

+ ann_file=f'{data_root}/annotations/'

+ 'nvgesture_test_correct_cvpr2016_v2.lst',

+ vid_prefix=f'{data_root}/',

+ data_cfg=data_cfg,

+ pipeline=val_pipeline,

+ test_mode=True,

+ dataset_info={{_base_.dataset_info}}),

+ test=dict(

+ type='NVGestureDataset',

+ ann_file=f'{data_root}/annotations/'

+ 'nvgesture_test_correct_cvpr2016_v2.lst',

+ vid_prefix=f'{data_root}/',

+ data_cfg=data_cfg,

+ pipeline=test_pipeline,

+ test_mode=True,

+ dataset_info={{_base_.dataset_info}}))

diff --git a/demo/docs/mmdet_modelzoo.md b/demo/docs/mmdet_modelzoo.md

index a1d9a025aa..ae6b24df28 100644

--- a/demo/docs/mmdet_modelzoo.md

+++ b/demo/docs/mmdet_modelzoo.md

@@ -14,6 +14,7 @@ For hand bounding box detection, we simply train our hand box models on onehand1

| Arch | Box AP | ckpt | log |

| :---------------------------------------------------------------- | :----: | :---------------------------------------------------------------: | :--------------------------------------------------------------: |

| [Cascade_R-CNN X-101-64x4d-FPN-1class](/demo/mmdetection_cfg/cascade_rcnn_x101_64x4d_fpn_1class.py) | 0.817 | [ckpt](https://download.openmmlab.com/mmpose/mmdet_pretrained/cascade_rcnn_x101_64x4d_fpn_20e_onehand10k-dac19597_20201030.pth) | [log](https://download.openmmlab.com/mmpose/mmdet_pretrained/cascade_rcnn_x101_64x4d_fpn_20e_onehand10k_20201030.log.json) |

+| [ssdlite_mobilenetv2-1class](/demo/mmdetection_cfg/ssdlite_mobilenetv2_scratch_600e_onehand.py) | 0.779 | [ckpt](https://download.openmmlab.com/mmpose/mmdet_pretrained/ssdlite_mobilenetv2_scratch_600e_onehand-4f9f8686_20220523.pth) | [log](https://download.openmmlab.com/mmpose/mmdet_pretrainedssdlite_mobilenetv2_scratch_600e_onehand_20220523.log.json) |

### Animal Bounding Box Detection Models

diff --git a/demo/mmdetection_cfg/ssdlite_mobilenetv2_scratch_600e_onehand.py b/demo/mmdetection_cfg/ssdlite_mobilenetv2_scratch_600e_onehand.py

new file mode 100644

index 0000000000..b7e37964cf

--- /dev/null

+++ b/demo/mmdetection_cfg/ssdlite_mobilenetv2_scratch_600e_onehand.py

@@ -0,0 +1,240 @@

+# =========================================================

+# from 'mmdetection/configs/_base_/default_runtime.py'

+# =========================================================

+checkpoint_config = dict(interval=1)

+# yapf:disable

+log_config = dict(

+ interval=50,

+ hooks=[

+ dict(type='TextLoggerHook'),

+ # dict(type='TensorboardLoggerHook')

+ ])

+# yapf:enable

+custom_hooks = [dict(type='NumClassCheckHook')]

+# =========================================================

+

+# =========================================================

+# from 'mmdetection/configs/_base_/datasets/coco_detection.py'

+# =========================================================

+# dataset settings

+dataset_type = 'CocoDataset'

+data_root = 'data/coco/'

+img_norm_cfg = dict(

+ mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(type='LoadAnnotations', with_bbox=True),

+ dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),

+ dict(type='RandomFlip', flip_ratio=0.5),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='DefaultFormatBundle'),

+ dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels']),

+]

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='MultiScaleFlipAug',

+ img_scale=(1333, 800),

+ flip=False,

+ transforms=[

+ dict(type='Resize', keep_ratio=True),

+ dict(type='RandomFlip'),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='ImageToTensor', keys=['img']),

+ dict(type='Collect', keys=['img']),

+ ])

+]

+data = dict(

+ samples_per_gpu=2,

+ workers_per_gpu=2,

+ train=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/instances_train2017.json',

+ img_prefix=data_root + 'train2017/',

+ pipeline=train_pipeline),

+ val=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/instances_val2017.json',

+ img_prefix=data_root + 'val2017/',

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/instances_val2017.json',

+ img_prefix=data_root + 'val2017/',

+ pipeline=test_pipeline))

+evaluation = dict(interval=1, metric='bbox')

+# =========================================================

+

+model = dict(

+ type='SingleStageDetector',

+ backbone=dict(

+ type='MobileNetV2',

+ out_indices=(4, 7),

+ norm_cfg=dict(type='BN', eps=0.001, momentum=0.03),

+ init_cfg=dict(type='TruncNormal', layer='Conv2d', std=0.03)),

+ neck=dict(

+ type='SSDNeck',

+ in_channels=(96, 1280),

+ out_channels=(96, 1280, 512, 256, 256, 128),

+ level_strides=(2, 2, 2, 2),

+ level_paddings=(1, 1, 1, 1),

+ l2_norm_scale=None,

+ use_depthwise=True,

+ norm_cfg=dict(type='BN', eps=0.001, momentum=0.03),

+ act_cfg=dict(type='ReLU6'),

+ init_cfg=dict(type='TruncNormal', layer='Conv2d', std=0.03)),

+ bbox_head=dict(

+ type='SSDHead',

+ in_channels=(96, 1280, 512, 256, 256, 128),

+ num_classes=1,

+ use_depthwise=True,

+ norm_cfg=dict(type='BN', eps=0.001, momentum=0.03),

+ act_cfg=dict(type='ReLU6'),

+ init_cfg=dict(type='Normal', layer='Conv2d', std=0.001),

+

+ # set anchor size manually instead of using the predefined

+ # SSD300 setting.

+ anchor_generator=dict(

+ type='SSDAnchorGenerator',

+ scale_major=False,

+ strides=[16, 32, 64, 107, 160, 320],

+ ratios=[[2, 3], [2, 3], [2, 3], [2, 3], [2, 3], [2, 3]],

+ min_sizes=[48, 100, 150, 202, 253, 304],

+ max_sizes=[100, 150, 202, 253, 304, 320]),

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[.0, .0, .0, .0],

+ target_stds=[0.1, 0.1, 0.2, 0.2])),

+ # model training and testing settings

+ train_cfg=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.5,

+ neg_iou_thr=0.5,

+ min_pos_iou=0.,

+ ignore_iof_thr=-1,

+ gt_max_assign_all=False),

+ smoothl1_beta=1.,

+ allowed_border=-1,

+ pos_weight=-1,

+ neg_pos_ratio=3,

+ debug=False),

+ test_cfg=dict(

+ nms_pre=1000,

+ nms=dict(type='nms', iou_threshold=0.45),

+ min_bbox_size=0,

+ score_thr=0.02,

+ max_per_img=200))

+cudnn_benchmark = True

+

+# dataset settings

+file_client_args = dict(

+ backend='petrel',

+ path_mapping=dict({

+ '.data/onehand10k/':

+ 'openmmlab:s3://openmmlab/datasets/pose/OneHand10K/',

+ 'data/onehand10k/':

+ 'openmmlab:s3://openmmlab/datasets/pose/OneHand10K/'

+ }))

+

+dataset_type = 'CocoDataset'

+data_root = 'data/onehand10k/'

+classes = ('hand', )

+img_norm_cfg = dict(

+ mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

+train_pipeline = [

+ dict(type='LoadImageFromFile', file_client_args=file_client_args),

+ dict(type='LoadAnnotations', with_bbox=True),

+ dict(

+ type='Expand',

+ mean=img_norm_cfg['mean'],

+ to_rgb=img_norm_cfg['to_rgb'],

+ ratio_range=(1, 4)),

+ dict(

+ type='MinIoURandomCrop',

+ min_ious=(0.1, 0.3, 0.5, 0.7, 0.9),

+ min_crop_size=0.3),

+ dict(type='Resize', img_scale=(320, 320), keep_ratio=False),

+ dict(type='RandomFlip', flip_ratio=0.5),

+ dict(

+ type='PhotoMetricDistortion',

+ brightness_delta=32,

+ contrast_range=(0.5, 1.5),

+ saturation_range=(0.5, 1.5),

+ hue_delta=18),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=320),

+ dict(type='DefaultFormatBundle'),

+ dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels']),

+]

+test_pipeline = [

+ dict(type='LoadImageFromFile', file_client_args=file_client_args),

+ dict(

+ type='MultiScaleFlipAug',

+ img_scale=(320, 320),

+ flip=False,

+ transforms=[

+ dict(type='Resize', keep_ratio=False),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=320),

+ dict(type='ImageToTensor', keys=['img']),

+ dict(type='Collect', keys=['img']),

+ ])

+]

+data = dict(

+ samples_per_gpu=24,

+ workers_per_gpu=4,

+ train=dict(

+ _delete_=True,

+ type='RepeatDataset', # use RepeatDataset to speed up training

+ times=5,

+ dataset=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/onehand10k_train.json',

+ img_prefix=data_root,

+ classes=classes,

+ pipeline=train_pipeline)),

+ val=dict(

+ ann_file=data_root + 'annotations/onehand10k_test.json',

+ img_prefix=data_root,

+ classes=classes,

+ pipeline=test_pipeline),

+ test=dict(

+ ann_file=data_root + 'annotations/onehand10k_test.json',

+ img_prefix=data_root,

+ classes=classes,

+ pipeline=test_pipeline))

+

+# optimizer

+optimizer = dict(type='SGD', lr=0.015, momentum=0.9, weight_decay=4.0e-5)

+optimizer_config = dict(grad_clip=None)

+

+# learning policy

+lr_config = dict(

+ policy='CosineAnnealing',

+ warmup='linear',

+ warmup_iters=500,

+ warmup_ratio=0.001,

+ min_lr=0)

+runner = dict(type='EpochBasedRunner', max_epochs=120)

+

+# Avoid evaluation and saving weights too frequently

+evaluation = dict(interval=5, metric='bbox')

+checkpoint_config = dict(interval=5)

+custom_hooks = [

+ dict(type='NumClassCheckHook'),

+ dict(type='CheckInvalidLossHook', interval=50, priority='VERY_LOW')

+]

+

+log_config = dict(interval=5)

+

+# NOTE: `auto_scale_lr` is for automatically scaling LR,

+# USER SHOULD NOT CHANGE ITS VALUES.

+# base_batch_size = (8 GPUs) x (24 samples per GPU)

+auto_scale_lr = dict(base_batch_size=192)

+

+load_from = 'https://download.openmmlab.com/mmdetection/'

+'v2.0/ssd/ssdlite_mobilenetv2_scratch_600e_coco/'

+'ssdlite_mobilenetv2_scratch_600e_coco_20210629_110627-974d9307.pth'

diff --git a/docs/en/papers/algorithms/mtut.md b/docs/en/papers/algorithms/mtut.md

new file mode 100644

index 0000000000..7cfefeef2f

--- /dev/null

+++ b/docs/en/papers/algorithms/mtut.md

@@ -0,0 +1,30 @@

+# Improving the Performance of Unimodal Dynamic Hand-Gesture Recognition with Multimodal Training

+

+

+

+

+MTUT (CVPR'2019)

+

+```bibtex

+@InProceedings{Abavisani_2019_CVPR,

+author = {Abavisani, Mahdi and Joze, Hamid Reza Vaezi and Patel, Vishal M.},

+title = {Improving the Performance of Unimodal Dynamic Hand-Gesture Recognition With Multimodal Training},

+booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

+month = {June},

+year = {2019}

+}

+```

+

+

+

+## Abstract

+

+

+

+We present an efficient approach for leveraging the knowledge from multiple modalities in training unimodal 3D convolutional neural networks (3D-CNNs) for the task of dynamic hand gesture recognition. Instead of explicitly combining multimodal information, which is commonplace in many state-of-the-art methods, we propose a different framework in which we embed the knowledge of multiple modalities in individual networks so that each unimodal network can achieve an improved performance. In particular, we dedicate separate networks per available modality and enforce them to collaborate and learn to develop networks with common semantics and better representations. We introduce a "spatiotemporal semantic alignment" loss (SSA) to align the content of the features from different networks. In addition, we regularize this loss with our proposed "focal regularization parameter" to avoid negative knowledge transfer. Experimental results show that our framework improves the test time recognition accuracy of unimodal networks, and provides the state-of-the-art performance on various dynamic hand gesture recognition datasets.

+

+

+

+

+

+

+I3D (CVPR'2017)

+

+```bibtex

+@InProceedings{Carreira_2017_CVPR,

+ author = {Carreira, Joao and Zisserman, Andrew},

+ title = {Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset},

+ booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

+ month = {July},

+ year = {2017}

+}

+```

+

+

+

+## Abstract

+

+

+

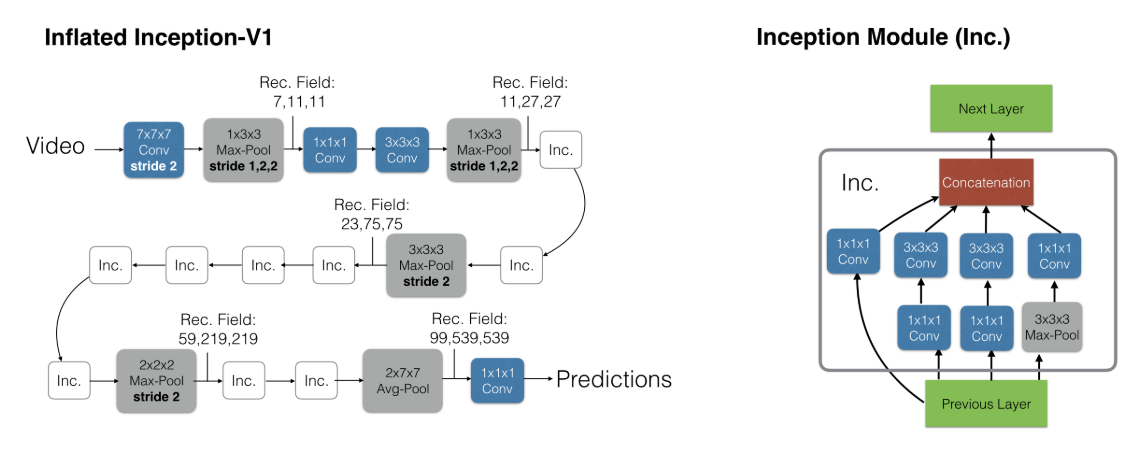

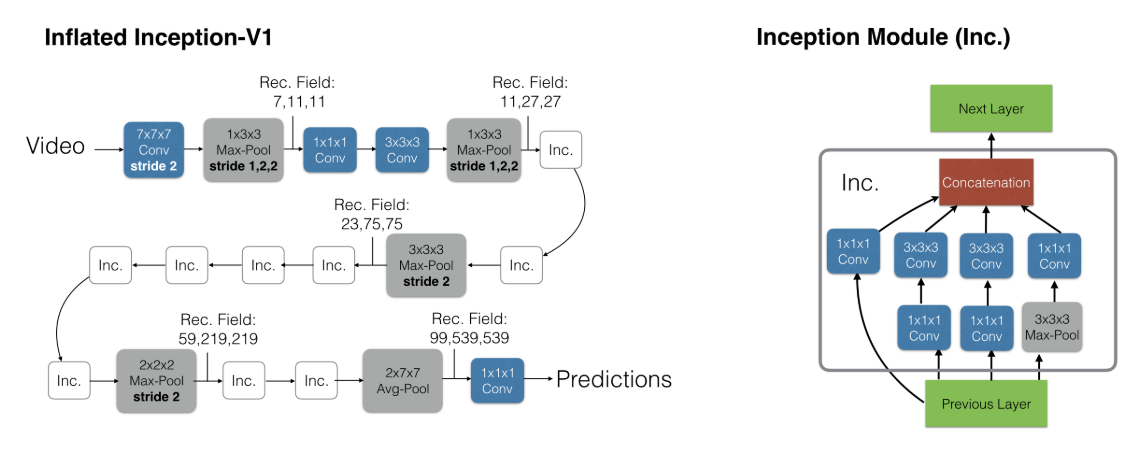

+The paucity of videos in current action classification datasets (UCF-101 and HMDB-51) has made it difficult to identify good video architectures, as most methods obtain similar performance on existing small-scale benchmarks. This paper re-evaluates state-of-the-art architectures in light of the new Kinetics Human Action Video dataset. Kinetics has two orders of magnitude more data, with 400 human action classes and over 400 clips per class, and is collected from realistic, challenging YouTube videos. We provide an analysis on how current architectures fare on the task of action classification on this dataset and how much performance improves on the smaller benchmark datasets after pre-training on Kinetics. We also introduce a new Two-Stream Inflated 3D ConvNet (I3D) that is based on 2D ConvNet inflation: filters and pooling kernels of very deep image classification ConvNets are expanded into 3D, making it possible to learn seamless spatio-temporal feature extractors from video while leveraging successful ImageNet architecture designs and even their parameters. We show that, after pre-training on Kinetics, I3D models considerably improve upon the state-of-the-art in action classification, reaching 80.2% on HMDB-51 and 97.9% on UCF-101.

+

+

+

+

+

+

+NVGesture (CVPR'2016)

+

+```bibtex

+@InProceedings{Molchanov_2016_CVPR,

+ author = {Molchanov, Pavlo and Yang, Xiaodong and Gupta, Shalini and Kim, Kihwan and Tyree, Stephen and Kautz, Jan},

+ title = {Online Detection and Classification of Dynamic Hand Gestures With Recurrent 3D Convolutional Neural Network},

+ booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

+ month = {June},

+ year = {2016}

+}

+```

+

+

diff --git a/docs/en/tasks/2d_hand_gesture.md b/docs/en/tasks/2d_hand_gesture.md

new file mode 100644

index 0000000000..6161b401f7

--- /dev/null

+++ b/docs/en/tasks/2d_hand_gesture.md

@@ -0,0 +1,60 @@

+# 2D Hand Keypoint Datasets

+

+It is recommended to symlink the dataset root to `$MMPOSE/data`.

+If your folder structure is different, you may need to change the corresponding paths in config files.

+

+MMPose supported datasets:

+

+- [NVGesture](#nvgesture) \[ [Homepage](https://www.v7labs.com/open-datasets/nvgesture) \]

+

+## NVGesture

+

+

+

+

+OneHand10K (CVPR'2016)

+

+```bibtex

+@inproceedings{molchanov2016online,

+ title={Online detection and classification of dynamic hand gestures with recurrent 3d convolutional neural network},

+ author={Molchanov, Pavlo and Yang, Xiaodong and Gupta, Shalini and Kim, Kihwan and Tyree, Stephen and Kautz, Jan},

+ booktitle={Proceedings of the IEEE conference on computer vision and pattern recognition},

+ pages={4207--4215},

+ year={2016}

+}

+```

+

+

+

+For [NVGesture](https://www.v7labs.com/open-datasets/nvgesture) data and annotation, please download from [NVGesture Dataset](https://drive.google.com/drive/folders/0ByhYoRYACz9cMUk0QkRRMHM3enc?resourcekey=0-cJe9M3PZy2qCbfGmgpFrHQ&usp=sharing).

+Extract them under {MMPose}/data, and make them look like this:

+

+```text

+mmpose

+├── mmpose

+├── docs

+├── tests

+├── tools

+├── configs

+`── data

+ │── nvgesture

+ |── annotations

+ | |── nvgesture_train_correct_cvpr2016_v2.lst

+ | |── nvgesture_test_correct_cvpr2016_v2.lst

+ | ...

+ `── Video_data

+ |── class_01

+ | |── subject1_r0

+ | | |── sk_color.avi

+ | | |── sk_depth.avi

+ | | ...

+ | |── subject1_r1

+ | |── subject2_r0

+ | ...

+ |── class_02

+ |── class_03

+ ...

+

+```

+

+The hand bounding box is computed by the hand detection model described in [det model zoo](/demo/docs/mmdet_modelzoo.md). The detected bounding box can be downloaded from [GoogleDrive](https://drive.google.com/drive/folders/1AGOeX0iHhaigBVRicjetieNRC7Zctuz4?usp=sharing). It is recommended to place it at `data/nvgesture/annotations/bboxes.json`.

diff --git a/mmpose/apis/__init__.py b/mmpose/apis/__init__.py

index 772341fb02..2a7f70fbec 100644

--- a/mmpose/apis/__init__.py

+++ b/mmpose/apis/__init__.py

@@ -1,7 +1,8 @@

# Copyright (c) OpenMMLab. All rights reserved.

from .inference import (collect_multi_frames, inference_bottom_up_pose_model,

- inference_top_down_pose_model, init_pose_model,

- process_mmdet_results, vis_pose_result)

+ inference_gesture_model, inference_top_down_pose_model,

+ init_pose_model, process_mmdet_results,

+ vis_pose_result)

from .inference_3d import (extract_pose_sequence, inference_interhand_3d_model,

inference_mesh_model, inference_pose_lifter_model,

vis_3d_mesh_result, vis_3d_pose_result)

@@ -10,11 +11,23 @@

from .train import init_random_seed, train_model

__all__ = [

- 'train_model', 'init_pose_model', 'inference_top_down_pose_model',

- 'inference_bottom_up_pose_model', 'multi_gpu_test', 'single_gpu_test',

- 'vis_pose_result', 'get_track_id', 'vis_pose_tracking_result',

- 'inference_pose_lifter_model', 'vis_3d_pose_result',

- 'inference_interhand_3d_model', 'extract_pose_sequence',

- 'inference_mesh_model', 'vis_3d_mesh_result', 'process_mmdet_results',

- 'init_random_seed', 'collect_multi_frames'

+ 'train_model',

+ 'init_pose_model',

+ 'inference_top_down_pose_model',

+ 'inference_bottom_up_pose_model',

+ 'multi_gpu_test',

+ 'single_gpu_test',

+ 'vis_pose_result',

+ 'get_track_id',

+ 'vis_pose_tracking_result',

+ 'inference_pose_lifter_model',

+ 'vis_3d_pose_result',

+ 'inference_interhand_3d_model',

+ 'extract_pose_sequence',

+ 'inference_mesh_model',

+ 'vis_3d_mesh_result',

+ 'process_mmdet_results',

+ 'init_random_seed',

+ 'collect_multi_frames',

+ 'inference_gesture_model',

]

diff --git a/mmpose/apis/inference.py b/mmpose/apis/inference.py

index 6d7f4dacaf..20c6d0d007 100644

--- a/mmpose/apis/inference.py

+++ b/mmpose/apis/inference.py

@@ -2,6 +2,7 @@

import copy

import os

import warnings

+from collections import defaultdict

import mmcv

import numpy as np

@@ -800,6 +801,61 @@ def vis_pose_result(model,

return img

+def inference_gesture_model(

+ model,

+ videos_or_paths,

+ bboxes=None,

+ dataset_info=None,

+):

+

+ cfg = model.cfg

+ device = next(model.parameters()).device

+ if device.type == 'cpu':

+ device = -1

+

+ # build the data pipeline

+ test_pipeline = Compose(cfg.test_pipeline)

+ _pipeline_gpu_speedup(test_pipeline, next(model.parameters()).device)

+

+ # data preprocessing

+ data = defaultdict(list)

+ data['label'] = -1

+

+ if not isinstance(videos_or_paths, (tuple, list)):

+ videos_or_paths = [videos_or_paths]

+ if isinstance(videos_or_paths[0], str):

+ data['video_file'] = videos_or_paths

+ else:

+ data['video'] = videos_or_paths

+

+ if bboxes is not None:

+ data['bbox'] = bboxes

+

+ if isinstance(dataset_info, dict):

+ data['modality'] = dataset_info.get('modality', ['rgb'])

+ data['fps'] = dataset_info.get('fps', None)

+ if not isinstance(data['fps'], (tuple, list)):

+ data['fps'] = [data['fps']]

+

+ data = test_pipeline(data)

+ batch_data = collate([data], samples_per_gpu=1)

+ batch_data = scatter(batch_data, [device])[0]

+

+ # inference

+ with torch.no_grad():

+ output = model.forward(return_loss=False, **batch_data)

+ scores = []

+ for modal, logit in output['logits'].items():

+ while logit.ndim > 2:

+ logit = logit.mean(dim=2)

+ score = torch.softmax(logit, dim=1)

+ scores.append(score)

+ score = torch.stack(scores, dim=2).mean(dim=2)

+ pred_score, pred_label = torch.max(score, dim=1)

+

+ return pred_label, pred_score

+

+

def process_mmdet_results(mmdet_results, cat_id=1):

"""Process mmdet results, and return a list of bboxes.

diff --git a/mmpose/core/utils/__init__.py b/mmpose/core/utils/__init__.py

index d059d21422..512e7680bc 100644

--- a/mmpose/core/utils/__init__.py

+++ b/mmpose/core/utils/__init__.py

@@ -1,5 +1,9 @@

# Copyright (c) OpenMMLab. All rights reserved.

from .dist_utils import allreduce_grads, sync_random_seed

+from .model_util_hooks import ModelSetEpochHook

from .regularizations import WeightNormClipHook

-__all__ = ['allreduce_grads', 'WeightNormClipHook', 'sync_random_seed']

+__all__ = [

+ 'allreduce_grads', 'WeightNormClipHook', 'sync_random_seed',

+ 'ModelSetEpochHook'

+]

diff --git a/mmpose/core/utils/model_util_hooks.py b/mmpose/core/utils/model_util_hooks.py

new file mode 100644

index 0000000000..d308a8a57a

--- /dev/null

+++ b/mmpose/core/utils/model_util_hooks.py

@@ -0,0 +1,13 @@

+# Copyright (c) OpenMMLab. All rights reserved.

+from mmcv.runner import HOOKS, Hook

+

+

+@HOOKS.register_module()

+class ModelSetEpochHook(Hook):

+ """The hook that tells model the current epoch in training."""

+

+ def __init__(self):

+ pass

+

+ def before_epoch(self, runner):

+ runner.model.module.set_train_epoch(runner.epoch + 1)

diff --git a/mmpose/datasets/__init__.py b/mmpose/datasets/__init__.py

index 650d7e9480..fd58f3ea6d 100644

--- a/mmpose/datasets/__init__.py

+++ b/mmpose/datasets/__init__.py

@@ -21,7 +21,7 @@

TopDownOCHumanDataset, TopDownOneHand10KDataset, TopDownPanopticDataset,

TopDownPoseTrack18Dataset, TopDownPoseTrack18VideoDataset,

Body3DMviewDirectPanopticDataset, Body3DMviewDirectShelfDataset,

- Body3DMviewDirectCampusDataset)

+ Body3DMviewDirectCampusDataset, NVGestureDataset)

__all__ = [

'TopDownCocoDataset', 'BottomUpCocoDataset', 'BottomUpMhpDataset',

@@ -42,5 +42,5 @@

'TopDownPoseTrack18VideoDataset', 'build_dataloader', 'build_dataset',

'Compose', 'DistributedSampler', 'DATASETS', 'PIPELINES', 'DatasetInfo',

'Body3DMviewDirectPanopticDataset', 'Body3DMviewDirectShelfDataset',

- 'Body3DMviewDirectCampusDataset'

+ 'Body3DMviewDirectCampusDataset', 'NVGestureDataset'

]

diff --git a/mmpose/datasets/datasets/__init__.py b/mmpose/datasets/datasets/__init__.py

index 603f840206..f44fc8e198 100644

--- a/mmpose/datasets/datasets/__init__.py

+++ b/mmpose/datasets/datasets/__init__.py

@@ -13,6 +13,7 @@

from .face import (Face300WDataset, FaceAFLWDataset, FaceCocoWholeBodyDataset,

FaceCOFWDataset, FaceWFLWDataset)

from .fashion import DeepFashionDataset

+from .gesture import NVGestureDataset

from .hand import (FreiHandDataset, HandCocoWholeBodyDataset,

InterHand2DDataset, InterHand3DDataset, OneHand10KDataset,

PanopticDataset)

@@ -44,5 +45,5 @@

'AnimalATRWDataset', 'AnimalPoseDataset', 'TopDownH36MDataset',

'TopDownHalpeDataset', 'TopDownPoseTrack18VideoDataset',

'Body3DMviewDirectPanopticDataset', 'Body3DMviewDirectShelfDataset',

- 'Body3DMviewDirectCampusDataset'

+ 'Body3DMviewDirectCampusDataset', 'NVGestureDataset'

]

diff --git a/mmpose/datasets/datasets/gesture/__init__.py b/mmpose/datasets/datasets/gesture/__init__.py

new file mode 100644

index 0000000000..22c85afd7c

--- /dev/null

+++ b/mmpose/datasets/datasets/gesture/__init__.py

@@ -0,0 +1,4 @@

+# Copyright (c) OpenMMLab. All rights reserved.

+from .nvgesture_dataset import NVGestureDataset

+

+__all__ = ['NVGestureDataset']

diff --git a/mmpose/datasets/datasets/gesture/gesture_base_dataset.py b/mmpose/datasets/datasets/gesture/gesture_base_dataset.py

new file mode 100644

index 0000000000..e81d972ece

--- /dev/null

+++ b/mmpose/datasets/datasets/gesture/gesture_base_dataset.py

@@ -0,0 +1,86 @@

+# Copyright (c) OpenMMLab. All rights reserved.

+import copy

+from abc import ABCMeta, abstractmethod

+

+import numpy as np

+from torch.utils.data import Dataset

+

+from mmpose.datasets.pipelines import Compose

+

+

+class GestureBaseDataset(Dataset, metaclass=ABCMeta):

+ """Base class for gesture recognition datasets with Multi-Modal video as

+ the input.

+

+ All gesture datasets should subclass it.

+ All subclasses should overwrite:

+ Methods:`_get_single`, 'evaluate'

+

+ Args:

+ ann_file (str): Path to the annotation file.

+ vid_prefix (str): Path to a directory where videos are held.

+ data_cfg (dict): config

+ pipeline (list[dict | callable]): A sequence of data transforms.

+ dataset_info (DatasetInfo): A class containing all dataset info.

+ test_mode (bool): Store True when building test or

+ validation dataset. Default: False.

+ """

+

+ def __init__(self,

+ ann_file,

+ vid_prefix,

+ data_cfg,

+ pipeline,

+ dataset_info=None,

+ test_mode=False):

+

+ self.video_info = {}

+ self.ann_info = {}

+

+ self.ann_file = ann_file

+ self.vid_prefix = vid_prefix

+ self.pipeline = pipeline

+ self.test_mode = test_mode

+

+ self.ann_info['video_size'] = np.array(data_cfg['video_size'])

+ self.ann_info['flip_pairs'] = dataset_info.flip_pairs

+ self.modality = data_cfg['modality']

+ if isinstance(self.modality, (list, tuple)):

+ self.modality = self.modality

+ else:

+ self.modality = (self.modality, )

+ self.bbox_file = data_cfg.get('bbox_file', None)

+ self.dataset_name = dataset_info.dataset_name

+ self.pipeline = Compose(self.pipeline)

+

+ @abstractmethod

+ def _get_single(self, idx):

+ """Get anno for a single video."""

+ raise NotImplementedError

+

+ @abstractmethod

+ def evaluate(self, results, *args, **kwargs):

+ """Evaluate recognition results."""

+

+ def prepare_train_vid(self, idx):

+ """Prepare video for training given the index."""

+ results = copy.deepcopy(self._get_single(idx))

+ results['ann_info'] = self.ann_info

+ return self.pipeline(results)

+

+ def prepare_test_vid(self, idx):

+ """Prepare video for testing given the index."""

+ results = copy.deepcopy(self._get_single(idx))

+ results['ann_info'] = self.ann_info

+ return self.pipeline(results)

+

+ def __len__(self):

+ """Get dataset length."""

+ return len(self.vid_ids)

+

+ def __getitem__(self, idx):

+ """Get the sample for either training or testing given index."""

+ if self.test_mode:

+ return self.prepare_test_vid(idx)

+

+ return self.prepare_train_vid(idx)

diff --git a/mmpose/datasets/datasets/gesture/nvgesture_dataset.py b/mmpose/datasets/datasets/gesture/nvgesture_dataset.py

new file mode 100644

index 0000000000..83f5e0df06

--- /dev/null

+++ b/mmpose/datasets/datasets/gesture/nvgesture_dataset.py

@@ -0,0 +1,185 @@

+# Copyright (c) OpenMMLab. All rights reserved.

+import os

+import os.path as osp

+import tempfile

+import warnings

+from collections import defaultdict

+

+import json_tricks as json

+import numpy as np

+from mmcv import Config

+

+from ...builder import DATASETS

+from .gesture_base_dataset import GestureBaseDataset

+

+

+@DATASETS.register_module()

+class NVGestureDataset(GestureBaseDataset):

+ """NVGesture dataset for gesture recognition.

+

+ "Online Detection and Classification of Dynamic Hand Gestures

+ With Recurrent 3D Convolutional Neural Network",

+ Conference on Computer Vision and Pattern Recognition (CVPR) 2016.

+

+ The dataset loads raw videos and apply specified transforms

+ to return a dict containing the image tensors and other information.

+

+ Args:

+ ann_file (str): Path to the annotation file.

+ vid_prefix (str): Path to a directory where videos are held.

+ data_cfg (dict): config

+ pipeline (list[dict | callable]): A sequence of data transforms.

+ dataset_info (DatasetInfo): A class containing all dataset info.

+ test_mode (bool): Store True when building test or

+ validation dataset. Default: False.

+ """

+

+ def __init__(self,

+ ann_file,

+ vid_prefix,

+ data_cfg,

+ pipeline,

+ dataset_info=None,

+ test_mode=False):

+

+ if dataset_info is None:

+ warnings.warn(

+ 'dataset_info is missing. '

+ 'Check https://github.com/open-mmlab/mmpose/pull/663 '

+ 'for details.', DeprecationWarning)

+ cfg = Config.fromfile('configs/_base_/datasets/nvgesture.py')

+ dataset_info = cfg._cfg_dict['dataset_info']

+

+ super().__init__(

+ ann_file,

+ vid_prefix,

+ data_cfg,

+ pipeline,

+ dataset_info=dataset_info,

+ test_mode=test_mode)

+

+ self.db = self._get_db()

+ self.vid_ids = list(range(len(self.db)))

+ print(f'=> load {len(self.db)} samples')

+

+ def _get_db(self):

+ """Load dataset."""

+ db = []

+ with open(self.ann_file, 'r') as f:

+ samples = f.readlines()

+

+ use_bbox = bool(self.bbox_file)

+ if use_bbox:

+ with open(self.bbox_file, 'r') as f:

+ bboxes = json.load(f)

+

+ for sample in samples:

+ sample = sample.strip().split()

+ sample = {

+ item.split(':', 1)[0]: item.split(':', 1)[1]

+ for item in sample

+ }

+ path = sample['path'][2:]

+ for key in ('depth', 'color'):

+ fname, start, end = sample[key].split(':')

+ sample[key] = {

+ 'path': os.path.join(path, fname + '.avi'),

+ 'valid_frames': (eval(start), eval(end))

+ }

+ sample['flow'] = {

+ 'path': sample['color']['path'].replace('color', 'flow'),

+ 'valid_frames': sample['color']['valid_frames']

+ }

+ sample['rgb'] = sample['color']

+ sample['label'] = eval(sample['label']) - 1

+

+ if use_bbox:

+ sample['bbox'] = bboxes[path]

+

+ del sample['path'], sample['duo_left'], sample['color']

+ db.append(sample)

+

+ return db

+

+ def _get_single(self, idx):

+ """Get anno for a single video."""

+ anno = defaultdict(list)

+ sample = self.db[self.vid_ids[idx]]

+

+ anno['label'] = sample['label']

+ anno['modality'] = self.modality

+ if 'bbox' in sample:

+ anno['bbox'] = sample['bbox']

+

+ for modal in self.modality:

+ anno['video_file'].append(

+ os.path.join(self.vid_prefix, sample[modal]['path']))

+ anno['valid_frames'].append(sample[modal]['valid_frames'])

+

+ return anno

+

+ def evaluate(self, results, res_folder=None, metric='AP', **kwargs):

+ """Evaluate nvgesture recognition results. The gesture prediction

+ results will be saved in ``${res_folder}/result_gesture.json``.

+

+ Note:

+ - batch_size: N

+ - heatmap length: L

+

+ Args:

+ results (dict): Testing results containing the following

+ items:

+ - logits (dict[str, torch.tensor[N,25,L]]): For each item, \

+ the key represents the modality of input video, while \

+ the value represents the prediction of gesture. Three \

+ dimensions represent batch, category and temporal \

+ length, respectively.

+ - label (np.ndarray[N]): [center[0], center[1], scale[0], \

+ scale[1],area, score]

+ res_folder (str, optional): The folder to save the testing

+ results. If not specified, a temp folder will be created.

+ Default: None.

+ metric (str | list[str]): Metric to be performed.

+ Options: 'AP'.

+

+ Returns:

+ dict: Evaluation results for evaluation metric.

+ """

+ metrics = metric if isinstance(metric, list) else [metric]

+ allowed_metrics = ['AP']

+ for metric in metrics:

+ if metric not in allowed_metrics:

+ raise KeyError(f'metric {metric} is not supported')

+

+ if res_folder is not None:

+ tmp_folder = None

+ res_file = osp.join(res_folder, 'result_gesture.json')

+ else:

+ tmp_folder = tempfile.TemporaryDirectory()

+ res_file = osp.join(tmp_folder.name, 'result_gesture.json')

+

+ predictions = defaultdict(list)

+ label = []

+ for result in results:

+ label.append(result['label'].cpu().numpy())

+ for modal in result['logits']:

+ logit = result['logits'][modal].mean(dim=2)

+ pred = logit.argmax(dim=1).cpu().numpy()

+ predictions[modal].append(pred)

+

+ label = np.concatenate(label, axis=0)

+ for modal in predictions:

+ predictions[modal] = np.concatenate(predictions[modal], axis=0)

+

+ with open(res_file, 'w') as f:

+ json.dump(predictions, f, indent=4)

+

+ results = dict()

+ if 'AP' in metrics:

+ APs = []

+ for modal in predictions:

+ results[f'AP_{modal}'] = (predictions[modal] == label).mean()

+ APs.append(results[f'AP_{modal}'])

+ results['AP_mean'] = sum(APs) / len(APs)

+

+ return results

diff --git a/mmpose/datasets/pipelines/__init__.py b/mmpose/datasets/pipelines/__init__.py

index cf06db1c9d..e619b339f6 100644

--- a/mmpose/datasets/pipelines/__init__.py

+++ b/mmpose/datasets/pipelines/__init__.py

@@ -1,7 +1,8 @@

# Copyright (c) OpenMMLab. All rights reserved.

from .bottom_up_transform import * # noqa

+from .gesture_transform import * # noqa

from .hand_transform import * # noqa

-from .loading import LoadImageFromFile # noqa

+from .loading import * # noqa

from .mesh_transform import * # noqa

from .pose3d_transform import * # noqa

from .shared_transform import * # noqa

diff --git a/mmpose/datasets/pipelines/gesture_transform.py b/mmpose/datasets/pipelines/gesture_transform.py

new file mode 100644

index 0000000000..28a3e568cc

--- /dev/null

+++ b/mmpose/datasets/pipelines/gesture_transform.py

@@ -0,0 +1,414 @@

+# Copyright (c) OpenMMLab. All rights reserved.

+import mmcv

+import numpy as np

+import torch

+

+from mmpose.core import bbox_xywh2xyxy, bbox_xyxy2xywh

+from mmpose.datasets.builder import PIPELINES

+

+

+@PIPELINES.register_module()

+class CropValidClip:

+ """Generate the clip from complete video with valid frames.

+

+ Required keys: 'video', 'modality', 'valid_frames', 'num_frames'.

+

+ Modified keys: 'video', 'valid_frames', 'num_frames'.

+ """

+

+ def __init__(self):

+ pass

+

+ def __call__(self, results):

+ """Crop the valid part from the video."""

+ if 'valid_frames' not in results:

+ results['valid_frames'] = [[0, n - 1]

+ for n in results['num_frames']]

+ lengths = [(end - start) for start, end in results['valid_frames']]

+ length = min(lengths)

+ for i, modal in enumerate(results['modality']):

+ start = results['valid_frames'][i][0]

+ results['video'][i] = results['video'][i][start:start + length]

+ results['num_frames'] = length

+ del results['valid_frames']

+ if 'bbox' in results:

+ results['bbox'] = results['bbox'][start:start + length]

+ return results

+

+

+@PIPELINES.register_module()

+class TemporalPooling:

+ """Pick frames according to either stride or reference fps.

+

+ Required keys: 'video', 'modality', 'num_frames', 'fps'.

+

+ Modified keys: 'video', 'num_frames'.

+

+ Args:

+ length (int): output video length. If unset, the entire video will

+ be pooled.

+ stride (int): temporal pooling stride. If unset, the stride will be

+ computed with video fps and `ref_fps`. If both `stride` and

+ `ref_fps` are unset, the stride will be 1.

+ ref_fps (int): expected fps of output video. If unset, the video will

+ be pooling with `stride`.

+ """

+

+ def __init__(self, length: int = -1, stride: int = -1, ref_fps: int = -1):

+ self.length = length

+ if stride == -1 and ref_fps == -1:

+ stride = 1

+ elif stride != -1 and ref_fps != -1:

+ raise ValueError('`stride` and `ref_fps` can not be assigned '

+ 'simultaneously, as they might conflict.')

+ self.stride = stride

+ self.ref_fps = ref_fps

+

+ def __call__(self, results):

+ """Implement data aumentation with random temporal crop."""

+

+ if self.ref_fps > 0 and 'fps' in results:

+ assert len(set(results['fps'])) == 1, 'Videos of different '

+ 'modality have different rate. May be misaligned after pooling.'

+ stride = results['fps'][0] // self.ref_fps

+ if stride < 1:

+ raise ValueError(f'`ref_fps` must be smaller than video '

+ f"fps {results['fps'][0]}")

+ else:

+ stride = self.stride

+

+ if self.length < 0:

+ length = results['num_frames']

+ num_frames = (results['num_frames'] - 1) // stride + 1

+ else:

+ length = (self.length - 1) * stride + 1

+ num_frames = self.length

+

+ diff = length - results['num_frames']

+ start = np.random.randint(max(1 - diff, 1))

+

+ for i, modal in enumerate(results['modality']):

+ video = results['video'][i]

+ if diff > 0:

+ video = np.pad(video, ((diff // 2, diff - (diff // 2)),

+ *(((0, 0), ) * (video.ndim - 1))),

+ 'edge')

+ results['video'][i] = video[start:start + length:stride]

+ assert results['video'][i].shape[0] == num_frames

+

+ results['num_frames'] = num_frames

+ if 'bbox' in results:

+ results['bbox'] = results['bbox'][start:start + length:stride]

+ return results

+

+

+@PIPELINES.register_module()

+class ResizeGivenShortEdge:

+ """Resize the video to make its short edge have given length.

+

+ Required keys: 'video', 'modality', 'width', 'height'.

+

+ Modified keys: 'video', 'width', 'height'.

+ """

+

+ def __init__(self, length: int = 256):

+ self.length = length

+

+ def __call__(self, results):

+ """Implement data processing with resize given short edge."""

+ for i, modal in enumerate(results['modality']):

+ width, height = results['width'][i], results['height'][i]

+ video = results['video'][i].transpose(1, 2, 3, 0)

+ num_frames = video.shape[-1]

+ video = video.reshape(height, width, -1)

+ if width < height:

+ width, height = self.length, int(self.length * height / width)

+ else:

+ width, height = int(self.length * width / height), self.length

+ video = mmcv.imresize(video,

+ (width,

+ height)).reshape(height, width, -1,

+ num_frames)

+ results['video'][i] = video.transpose(3, 0, 1, 2)

+ results['width'][i], results['height'][i] = width, height

+ return results

+

+

+@PIPELINES.register_module()

+class MultiFrameBBoxMerge:

+ """Compute the union of bboxes in selected frames.

+

+ Required keys: 'bbox'.

+

+ Modified keys: 'bbox'.

+ """

+

+ def __init__(self):

+ pass

+

+ def __call__(self, results):

+ if 'bbox' not in results:

+ return results

+

+ bboxes = list(filter(lambda x: len(x), results['bbox']))

+ if len(bboxes) == 0:

+ bbox_xyxy = np.array(

+ (0, 0, results['width'][0] - 1, results['height'][0] - 1))

+ else:

+ bboxes_xyxy = np.stack([b[0]['bbox'] for b in bboxes])

+ bbox_xyxy = np.array((

+ bboxes_xyxy[:, 0].min(),

+ bboxes_xyxy[:, 1].min(),

+ bboxes_xyxy[:, 2].max(),

+ bboxes_xyxy[:, 3].max(),

+ ))

+ results['bbox'] = bbox_xyxy

+ return results

+

+

+@PIPELINES.register_module()

+class ResizedCropByBBox:

+ """Spatial crop for spatially aligned videos by bounding box.

+

+ Required keys: 'video', 'modality', 'width', 'height', 'bbox'.

+

+ Modified keys: 'video', 'width', 'height'.

+ """

+

+ def __init__(self, size, scale=(1, 1), ratio=(1, 1), shift=0):

+ self.size = size if isinstance(size, (tuple, list)) else (size, size)

+ self.scale = scale

+ self.ratio = ratio

+ self.shift = shift

+

+ def __call__(self, results):

+ bbox_xywh = bbox_xyxy2xywh(results['bbox'][None, :])[0]

+ length = bbox_xywh[2:].max()

+ length = length * np.random.uniform(*self.scale)

+ x = bbox_xywh[0] + np.random.uniform(-self.shift, self.shift) * length

+ y = bbox_xywh[1] + np.random.uniform(-self.shift, self.shift) * length

+ w, h = length, length * np.random.uniform(*self.ratio)

+

+ bbox_xyxy = bbox_xywh2xyxy(np.array([[x, y, w, h]]))[0]

+ bbox_xyxy = bbox_xyxy.clip(min=0)

+ bbox_xyxy[2] = min(bbox_xyxy[2], results['width'][0])

+ bbox_xyxy[3] = min(bbox_xyxy[3], results['height'][0])

+ bbox_xyxy = bbox_xyxy.astype(np.int32)

+

+ for i in range(len(results['video'])):

+ video = results['video'][i].transpose(1, 2, 3, 0)

+ num_frames = video.shape[-1]

+ video = video.reshape(video.shape[0], video.shape[1], -1)

+ video = mmcv.imcrop(video, bbox_xyxy)

+ video = mmcv.imresize(video, self.size)

+

+ results['video'][i] = video.reshape(video.shape[0], video.shape[1],

+ -1, num_frames)

+ results['video'][i] = results['video'][i].transpose(3, 0, 1, 2)

+ results['width'][i], results['height'][i] = video.shape[

+ 1], video.shape[0]

+

+ return results

+

+

+@PIPELINES.register_module()

+class GestureRandomFlip:

+ """Data augmentation by randomly horizontal flip the video. The label will

+ be alternated simultaneously.

+

+ Required keys: 'video', 'label', 'ann_info'.

+

+ Modified keys: 'video', 'label'.

+ """

+

+ def __init__(self, prob=0.5):

+ self.flip_prob = prob

+

+ def __call__(self, results):

+ flip = np.random.rand() < self.flip_prob

+ if flip:

+ for i in range(len(results['video'])):

+ results['video'][i] = results['video'][i][:, :, ::-1, :]

+ for flip_pairs in results['ann_info']['flip_pairs']:

+ if results['label'] in flip_pairs:

+ results['label'] = sum(flip_pairs) - results['label']

+ break

+

+ results['flipped'] = flip

+ return results

+

+

+@PIPELINES.register_module()

+class VideoColorJitter:

+ """Data augmentation with random color transformations.

+

+ Required keys: 'video', 'modality'.

+

+ Modified keys: 'video'.

+ """

+

+ def __init__(self, brightness=0, contrast=0):

+ self.brightness = brightness

+ self.contrast = contrast

+

+ def __call__(self, results):

+ for i, modal in enumerate(results['modality']):

+ if modal == 'rgb':

+ video = results['video'][i]

+ bright = np.random.uniform(

+ max(0, 1 - self.brightness), 1 + self.brightness)

+ contrast = np.random.uniform(

+ max(0, 1 - self.contrast), 1 + self.contrast)

+ video = mmcv.adjust_brightness(video.astype(np.int32), bright)

+ num_frames = video.shape[0]

+ video = video.astype(np.uint8).reshape(-1, video.shape[2], 3)

+ video = mmcv.adjust_contrast(video, contrast).reshape(

+ num_frames, -1, video.shape[1], 3)

+ results['video'][i] = video

+ return results

+

+

+@PIPELINES.register_module()

+class RandomAlignedSpatialCrop:

+ """Data augmentation with random spatial crop for spatially aligned videos.

+

+ Required keys: 'video', 'modality', 'width', 'height'.

+

+ Modified keys: 'video', 'width', 'height'.

+ """

+

+ def __init__(self, length: int = 224):

+ self.length = length

+

+ def __call__(self, results):

+ """Implement data augmentation with random spatial crop."""

+ assert len(set(results['height'])) == 1, \

+ f"the heights {results['height']} are not identical."

+ assert len(set(results['width'])) == 1, \

+ f"the widths {results['width']} are not identical."

+ height, width = results['height'][0], results['width'][0]

+ for i, modal in enumerate(results['modality']):

+ video = results['video'][i].transpose(1, 2, 3, 0)

+ num_frames = video.shape[-1]

+ video = video.reshape(height, width, -1)

+ start_h, start_w = np.random.randint(

+ height - self.length + 1), np.random.randint(width -

+ self.length + 1)

+ video = mmcv.imcrop(

+ video,

+ np.array((start_w, start_h, start_w + self.length - 1,

+ start_h + self.length - 1)))

+ results['video'][i] = video.reshape(self.length, self.length, -1,

+ num_frames).transpose(

+ 3, 0, 1, 2)

+ results['width'][i], results['height'][

+ i] = self.length, self.length

+ return results

+

+

+@PIPELINES.register_module()

+class CenterSpatialCrop:

+ """Data processing by crop the center region of a video.

+

+ Required keys: 'video', 'modality', 'width', 'height'.

+

+ Modified keys: 'video', 'width', 'height'.

+ """

+

+ def __init__(self, length: int = 224):

+ self.length = length

+

+ def __call__(self, results):

+ """Implement data processing with center crop."""

+ for i, modal in enumerate(results['modality']):

+ height, width = results['height'][i], results['width'][i]

+ video = results['video'][i].transpose(1, 2, 3, 0)

+ num_frames = video.shape[-1]

+ video = video.reshape(height, width, -1)

+ start_h, start_w = (height - self.length) // 2, (width -

+ self.length) // 2

+ video = mmcv.imcrop(

+ video,

+ np.array((start_w, start_h, start_w + self.length - 1,

+ start_h + self.length - 1)))

+ results['video'][i] = video.reshape(self.length, self.length, -1,

+ num_frames).transpose(

+ 3, 0, 1, 2)

+ results['width'][i], results['height'][

+ i] = self.length, self.length

+ return results

+

+

+@PIPELINES.register_module()

+class ModalWiseChannelProcess:

+ """Video channel processing according to modality.

+

+ Required keys: 'video', 'modality'.

+

+ Modified keys: 'video'.

+ """

+

+ def __init__(self):

+ pass

+

+ def __call__(self, results):

+ """Implement channel processing for video array."""

+ for i, modal in enumerate(results['modality']):

+ if modal == 'rgb':

+ results['video'][i] = results['video'][i][..., ::-1]

+ elif modal == 'depth':

+ if results['video'][i].ndim == 4:

+ results['video'][i] = results['video'][i][..., :1]

+ elif results['video'][i].ndim == 3:

+ results['video'][i] = results['video'][i][..., None]

+ elif modal == 'flow':

+ results['video'][i] = results['video'][i][..., :2]

+ else:

+ raise ValueError(f'modality {modal} is invalid.')

+ return results

+

+

+@PIPELINES.register_module()

+class MultiModalVideoToTensor:

+ """Data processing by converting video arrays to pytorch tensors.

+

+ Required keys: 'video', 'modality'.

+

+ Modified keys: 'video'.

+ """

+

+ def __init__(self):

+ pass

+

+ def __call__(self, results):

+ """Implement data processing similar to ToTensor."""

+ for i, modal in enumerate(results['modality']):

+ video = results['video'][i].transpose(3, 0, 1, 2)

+ results['video'][i] = torch.tensor(

+ np.ascontiguousarray(video), dtype=torch.float) / 255.0

+ return results

+

+

+@PIPELINES.register_module()

+class VideoNormalizeTensor:

+ """Data processing by normalizing video tensors with mean and std.

+

+ Required keys: 'video', 'modality'.

+

+ Modified keys: 'video'.

+ """

+

+ def __init__(self, mean, std):

+ self.mean = torch.tensor(mean)

+ self.std = torch.tensor(std)

+

+ def __call__(self, results):

+ """Implement data normalization."""

+ for i, modal in enumerate(results['modality']):

+ if modal == 'rgb':

+ video = results['video'][i]

+ dim = video.ndim - 1

+ video = video - self.mean.view(3, *((1, ) * dim))

+ video = video / self.std.view(3, *((1, ) * dim))

+ results['video'][i] = video

+ return results

diff --git a/mmpose/datasets/pipelines/loading.py b/mmpose/datasets/pipelines/loading.py

index d6374274ad..a19d220cc9 100644

--- a/mmpose/datasets/pipelines/loading.py

+++ b/mmpose/datasets/pipelines/loading.py

@@ -99,3 +99,81 @@ def __repr__(self):

f"color_type='{self.color_type}', "

f'file_client_args={self.file_client_args})')

return repr_str

+

+