diff --git a/.env b/.env

index 0b7b7614a3..59a801f052 100644

--- a/.env

+++ b/.env

@@ -43,7 +43,7 @@ EMAIL_SERVICE_PORT=6060

EMAIL_SERVICE_ADDR=http://emailservice:${EMAIL_SERVICE_PORT}

# Feature Flag Service

-FEATURE_FLAG_SERVICE_PORT=8081

+FEATURE_FLAG_SERVICE_PORT=8881

FEATURE_FLAG_SERVICE_ADDR=featureflagservice:${FEATURE_FLAG_SERVICE_PORT}

FEATURE_FLAG_SERVICE_HOST=feature-flag-service

FEATURE_FLAG_GRPC_SERVICE_PORT=50053

@@ -53,6 +53,10 @@ FEATURE_FLAG_GRPC_SERVICE_ADDR=featureflagservice:${FEATURE_FLAG_GRPC_SERVICE_PO

FRONTEND_PORT=8080

FRONTEND_ADDR=frontend:${FRONTEND_PORT}

+# Nginx Proxy

+NGINX_PORT=90

+NGINX_ADDR=nginx:${NGINX_PORT}

+

# Frontend Proxy (Envoy)

FRONTEND_HOST=frontend

ENVOY_PORT=8080

@@ -111,3 +115,13 @@ JAEGER_SERVICE_HOST=jaeger

PROMETHEUS_SERVICE_PORT=9090

PROMETHEUS_SERVICE_HOST=prometheus

PROMETHEUS_ADDR=${PROMETHEUS_SERVICE_HOST}:${PROMETHEUS_SERVICE_PORT}

+

+# OpenSearch Node1

+OPENSEARCH1_PORT=9200

+OPENSEARCH1_HOST=opensearch-node1

+OPENSEARCH1_ADDR=${OPENSEARCH1_HOST}:${OPENSEARCH1_PORT}

+

+# OpenSearch Dashboard

+OPENSEARCH_DASHBOARD_PORT=5601

+OPENSEARCH_DASHBOARD_HOST=opensearch-dashboards

+OPENSEARCH_DASHBOARD_ADDR=${OPENSEARCH_DASHBOARD_HOST}:${OPENSEARCH_DASHBOARD_PORT}

\ No newline at end of file
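Reviewer sketch (not part of the patch): the `*_ADDR` entries above are built from `${...}` references to the matching host and port variables. One rough way to see what such an entry resolves to is to source the fragment in a subshell. This assumes the lines are also valid POSIX shell, which holds for the plain `KEY=value` lines here, although Docker Compose applies its own interpolation rules. The `resolve_env_var` helper and the temporary sample file are hypothetical names used only for illustration.

```shell
#!/bin/sh
# Print the resolved value of one variable from a .env-style file.
# $1: path to the file, $2: name of the variable to print.
resolve_env_var() {
  (
    # Source inside a subshell so the caller's environment is left untouched.
    . "$1"
    eval "printf '%s\n' \"\${$2}\""
  )
}

# Sample fragment copied from the OpenSearch entries added in this diff.
sample_env="$(mktemp)"
cat > "$sample_env" <<'EOF'
OPENSEARCH1_PORT=9200
OPENSEARCH1_HOST=opensearch-node1
OPENSEARCH1_ADDR=${OPENSEARCH1_HOST}:${OPENSEARCH1_PORT}
EOF

resolve_env_var "$sample_env" OPENSEARCH1_ADDR   # prints opensearch-node1:9200
```

Running the same check against each `*_ADDR` entry is a quick way to spot an address that was composed from another service's port variable.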

diff --git a/.github/README.md b/.github/README.md

new file mode 100644

index 0000000000..ea7c06fbc5

--- /dev/null

+++ b/.github/README.md

@@ -0,0 +1,57 @@

+# OpenTelemetry Demo with OpenSearch

+

+The following guide describes how to set up the OpenTelemetry demo with OpenSearch Observability using [Docker compose](#docker-compose) or [Kubernetes](#kubernetes).

+

+## Docker compose

+

+### Prerequisites

+

+- Docker

+- Docker Compose v2.0.0+

+- 4 GB of RAM for the application

+

+### Running this demo

+

+```bash

+git clone https://github.com/opensearch/opentelemetry-demo.git

+cd opentelemetry-demo

+docker compose up -d

+```

+

+### Services

+

+Once the images are built and containers are started you can access:

+

+- Webstore-Proxy (Via Nginx Proxy): http://localhost:90/

+- Webstore: http://localhost:8080/

+- Dashboards: http://localhost:5601/

+- Feature Flags UI: http://localhost:8080/feature/

+- Load Generator UI: http://localhost:8080/loadgen/

+

+OpenSearch has [documented](https://opensearch.org/docs/latest/observing-your-data/trace/trace-analytics-jaeger/#setting-up-opensearch-to-use-jaeger-data) the usage of the Observability plugin with Jaeger as a trace signal source.

+

+The following instructions are similar and use the same Docker Compose file.

+1. Start the demo with the following command from the repository's root directory:

+ ```

+ docker compose up -d

+ ```

+**Note:** Adding the docker compose `--no-build` flag fetches released docker images from [ghcr](http://ghcr.io/open-telemetry/demo) instead of building from source.

+Without the `--no-build` command line option, all images are rebuilt from source, which may take more than 20 minutes.

+

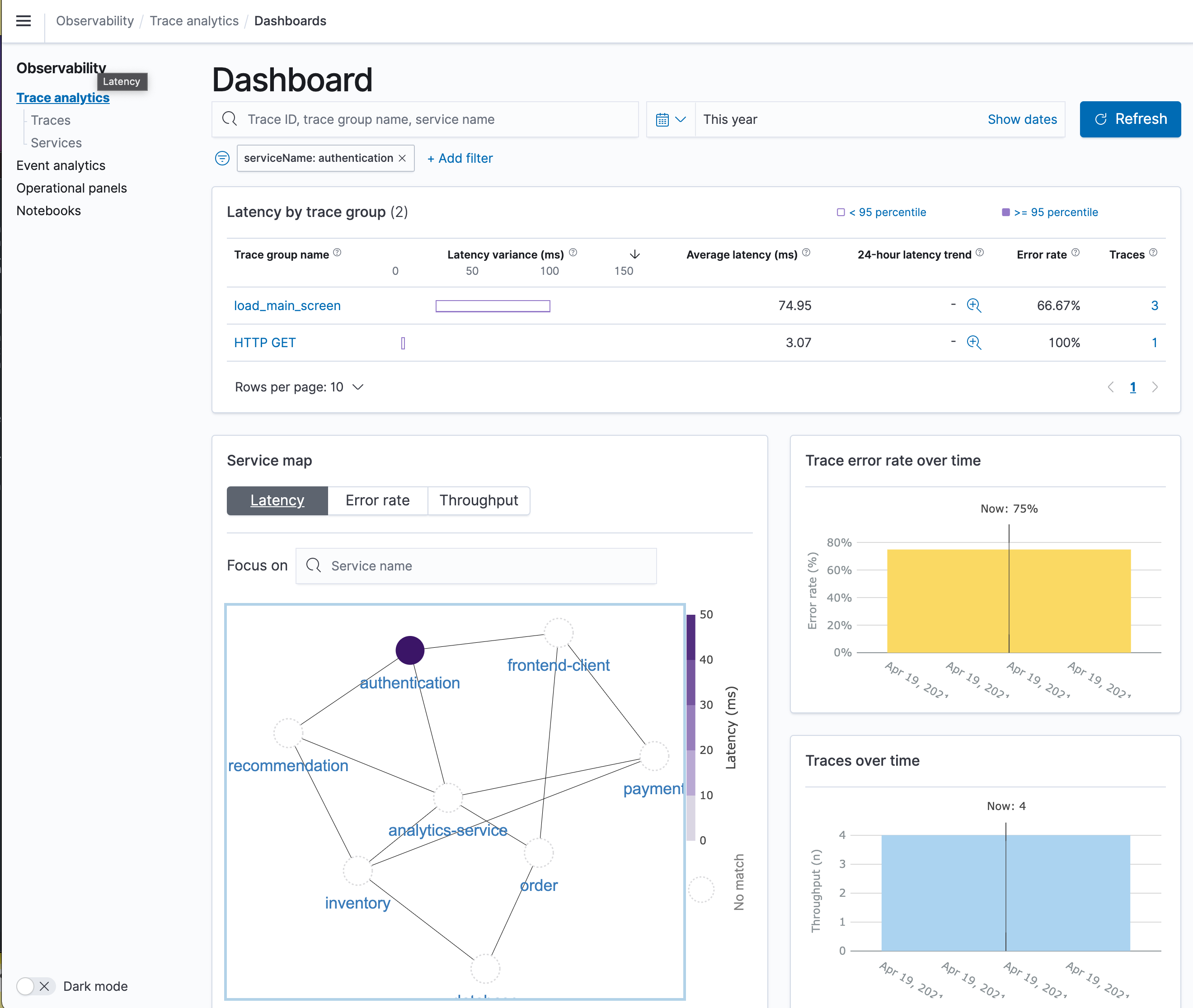

+### Explore and analyze the data with OpenSearch Observability

+Review the revised OpenSearch [Observability Architecture](architecture.md).

+

+### Start learning OpenSearch Observability using our tutorial

+[Getting started Tutorial](../tutorial/README.md)

+

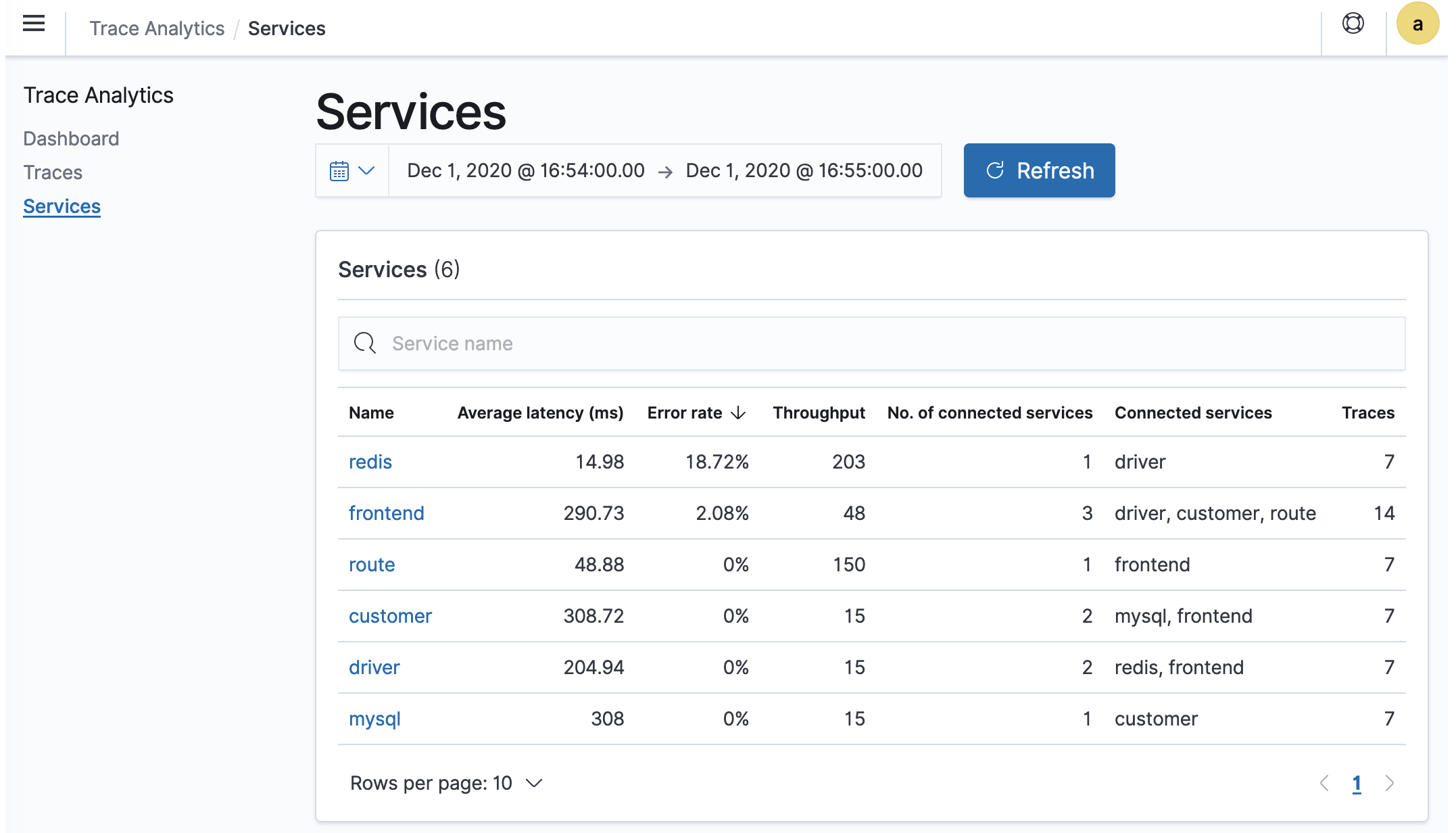

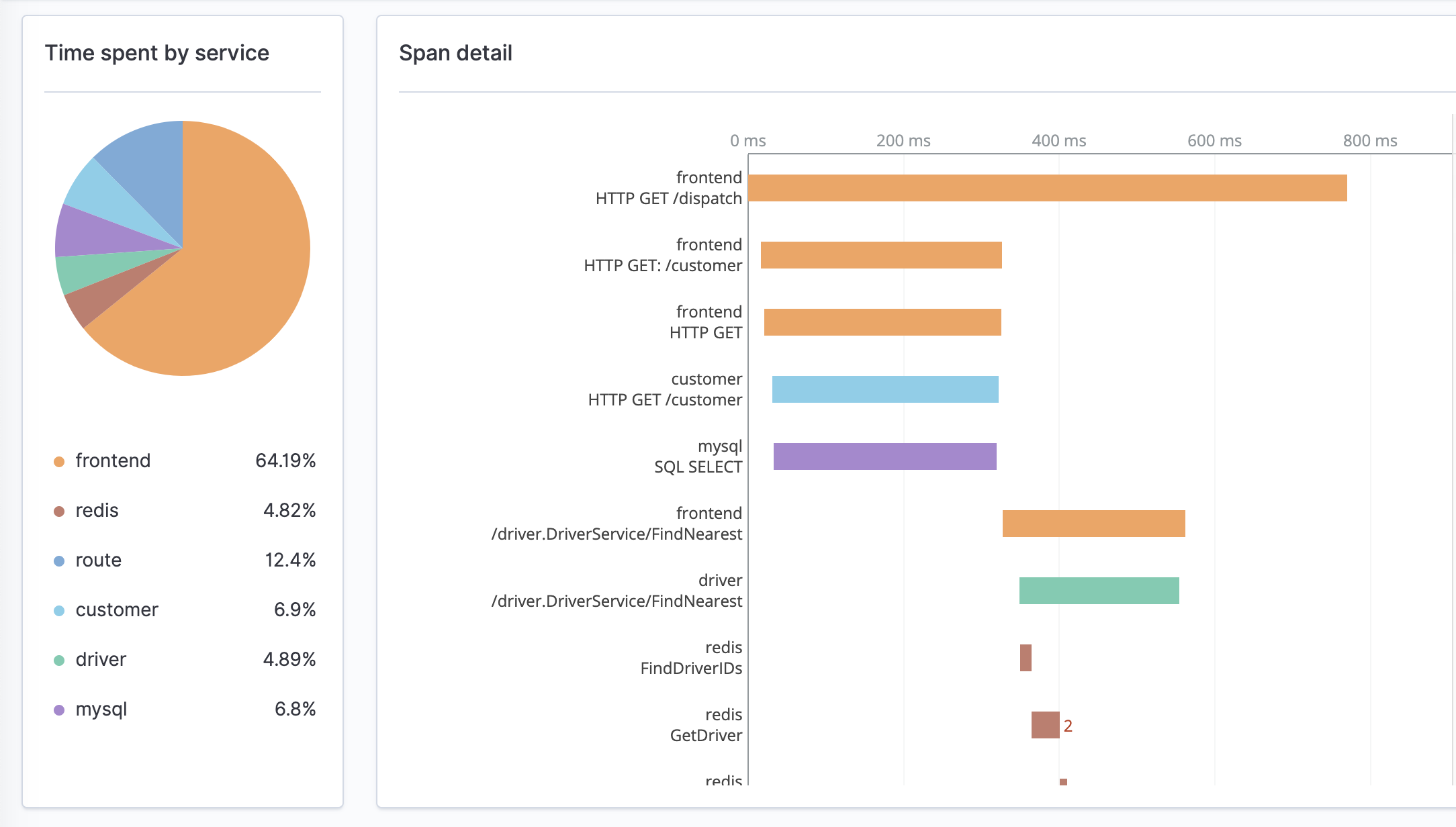

+#### Service map

+

+

+#### Traces

+

+

+#### Correlation

+

+

+#### Logs

+

\ No newline at end of file

diff --git a/.github/architecture.md b/.github/architecture.md

new file mode 100644

index 0000000000..d6ad7669d9

--- /dev/null

+++ b/.github/architecture.md

@@ -0,0 +1,104 @@

+# OpenSearch OTEL Demo Architecture

+This document reviews the OpenSearch architecture for the [OTEL demo](https://opentelemetry.io/docs/demo/) and shows how to use the new Observability capabilities

+implemented in OpenSearch.

+---

+This diagram provides an overview of the system components, showcasing the configuration derived from the OpenTelemetry Collector (otelcol) configuration file utilized by the OpenTelemetry demo application.

+

+Additionally, it highlights the observability data (traces and metrics) flow within the system.

+

+

+

+---

+The [OTEL demo](https://opentelemetry.io/docs/demo/architecture/) documentation describes the services that make up the Astronomy Shop.

+

+They consist of:

+ - [Accounting](https://opentelemetry.io/docs/demo/services/accounting/)

+ - [Ad](https://opentelemetry.io/docs/demo/services/ad/)

+ - [Cart](https://opentelemetry.io/docs/demo/services/cart/)

+ - [Checkout](https://opentelemetry.io/docs/demo/services/checkout/)

+ - [Currency](https://opentelemetry.io/docs/demo/services/currency/)

+ - [Email](https://opentelemetry.io/docs/demo/services/email/)

+ - [Feature Flag](https://opentelemetry.io/docs/demo/services/feature-flag/)

+ - [Fraud Detection](https://opentelemetry.io/docs/demo/services/fraud-detection/)

+ - [Frontend](https://opentelemetry.io/docs/demo/services/frontend/)

+ - [Kafka](https://opentelemetry.io/docs/demo/services/kafka/)

+ - [Payment](https://opentelemetry.io/docs/demo/services/payment/)

+ - [Product Catalog](https://opentelemetry.io/docs/demo/services/product-catalog/)

+ - [Quote](https://opentelemetry.io/docs/demo/services/quote/)

+ - [Recommendation](https://opentelemetry.io/docs/demo/services/recommendation/)

+ - [Shipping](https://opentelemetry.io/docs/demo/services/shipping/)

+ - [Fluent-Bit](../src/fluent-bit/README.md) *(nginx's otel log exporter)*

+ - [Integrations](../src/integrations/README.md) *(pre-canned OpenSearch assets)*

+ - [DataPrepper](../src/dataprepper/README.md) *(OpenSearch's ingestion pipeline)*

+

+Backend supporting services:

+ - [Load Generator](http://load-generator:8089)

+ - See [description](https://opentelemetry.io/docs/demo/services/load-generator/)

+ - [Frontend Nginx Proxy](http://nginx:90) *(replacement for _Frontend-Proxy_)*

+ - See [description](../src/nginx-otel/README.md)

+ - [OpenSearch](https://opensearch-node1:9200)

+ - See [description](https://github.com/YANG-DB/opentelemetry-demo/blob/12d52cbb23bbf4226f6de2dfec840482a0a7d054/docker-compose.yml#L697)

+ - [Dashboards](http://opensearch-dashboards:5601)

+ - See [description](https://github.com/YANG-DB/opentelemetry-demo/blob/12d52cbb23bbf4226f6de2dfec840482a0a7d054/docker-compose.yml#L747)

+ - [Prometheus](http://prometheus:9090)

+ - See [description](https://github.com/YANG-DB/opentelemetry-demo/blob/12d52cbb23bbf4226f6de2dfec840482a0a7d054/docker-compose.yml#L674)

+ - [Feature-Flag](http://feature-flag-service:8881)

+ - See [description](../src/featureflagservice/README.md)

+ - [Grafana](http://grafana:3000)

+ - See [description](https://github.com/YANG-DB/opentelemetry-demo/blob/12d52cbb23bbf4226f6de2dfec840482a0a7d054/docker-compose.yml#L637)

+

+### Services Topology

+The following diagram shows the dependencies between the Docker Compose services.

+

+

+---

+

+## Purpose

+The purpose of this demo is to demonstrate the capabilities of OpenSearch Observability for investigating your system and reflecting its state.

+

+### Ingestion

+OpenSearch supports ingestion through multiple pipelines:

+ - [Data-Prepper](https://github.com/opensearch-project/data-prepper/) is an OpenSearch ingestion project that allows ingestion of OTEL standard signals using Otel-Collector

+ - [Jaeger](https://opensearch.org/docs/latest/observing-your-data/trace/trace-analytics-jaeger/) is a tracing framework with a built-in capability for pushing OTEL signals into OpenSearch

+ - [Fluent-Bit](https://docs.fluentbit.io/manual/pipeline/outputs/opensearch) is an ingestion framework with a built-in capability for pushing OTEL signals into OpenSearch

+

+### Integrations

+The integration service is a set of pre-canned assets that are loaded together, giving users a simple and automatic way to discover and review their services topology.

+

+These (demo-sample) integrations contain the following assets:

+ - components & index template mapping

+ - datasources

+ - data-stream & indices

+ - queries

+ - dashboards

+

+Once they are loaded, the user can immediately review the OTEL demo services and the dashboards that reflect the system state.

+ - [Nginx Dashboard](../src/integrations/display/nginx-logs-dashboard-new.ndjson) - reflects the Nginx Proxy server that routes all the network communication to/from the frontend

+ - [Prometheus datasource](../src/integrations/datasource/prometheus.json) - reflects the connectivity to the Prometheus metric storage that allows us to federate metrics analytics queries

+ - [Logs Datastream](../src/integrations/indices/data-stream.json) - reflects the data-stream used by nginx logs ingestion and dashboards representing a well-structured [log schema](../src/integrations/mapping-templates/logs.mapping)

+

+Once these assets are loaded, the user can start reviewing the Observability dashboards and traces.

+

+

+

+

+

+

+

+

+

+

+

+

+---

+

+### **Scenarios**

+

+How can you solve problems with OpenTelemetry? These scenarios walk you through some pre-configured problems and show you how to interpret OpenTelemetry data to solve them.

+

+- Generate a Product Catalog error for GetProduct requests with product id: OLJCESPC7Z using the Feature Flag service

+- Discover a memory leak and diagnose it using metrics and traces.

+

+### **Reference**

+Project reference documentation, such as requirements and feature matrices, is available [here](https://opentelemetry.io/docs/demo/#reference).

+

diff --git a/.github/img/DemoFlow.png b/.github/img/DemoFlow.png

new file mode 100644

index 0000000000..ddd45b41b6

Binary files /dev/null and b/.github/img/DemoFlow.png differ

diff --git a/.github/img/docker-services-topology.png b/.github/img/docker-services-topology.png

new file mode 100644

index 0000000000..8e3db8fca9

Binary files /dev/null and b/.github/img/docker-services-topology.png differ

diff --git a/.github/img/nginx_dashboard.png b/.github/img/nginx_dashboard.png

new file mode 100644

index 0000000000..e88c1b2094

Binary files /dev/null and b/.github/img/nginx_dashboard.png differ

diff --git a/.github/img/otelcol-data-flow-overview.png b/.github/img/otelcol-data-flow-overview.png

new file mode 100644

index 0000000000..e8359dcbdc

Binary files /dev/null and b/.github/img/otelcol-data-flow-overview.png differ

diff --git a/.github/img/prometheus_federated_metrics.png b/.github/img/prometheus_federated_metrics.png

new file mode 100644

index 0000000000..145e232ae0

Binary files /dev/null and b/.github/img/prometheus_federated_metrics.png differ

diff --git a/.github/img/service-graph.png b/.github/img/service-graph.png

new file mode 100644

index 0000000000..028c0aafa5

Binary files /dev/null and b/.github/img/service-graph.png differ

diff --git a/.github/img/services.png b/.github/img/services.png

new file mode 100644

index 0000000000..753e298a87

Binary files /dev/null and b/.github/img/services.png differ

diff --git a/.github/img/trace_analytics.png b/.github/img/trace_analytics.png

new file mode 100644

index 0000000000..9357a2b0fb

Binary files /dev/null and b/.github/img/trace_analytics.png differ

diff --git a/.github/img/traces.png b/.github/img/traces.png

new file mode 100644

index 0000000000..d4549bab84

Binary files /dev/null and b/.github/img/traces.png differ

diff --git a/README.md b/README.md

index 77f509cef2..1558935de1 100644

--- a/README.md

+++ b/README.md

@@ -54,14 +54,14 @@ adding a link below. The community is committed to maintaining the project and

keeping it up to date for you.

| | | |

-| ----------------------------------------------------------------------------------------------------------------- | ----------------------------------------------------------------- | -------------------------------------------------------------------------------------------- |

-| [AlibabaCloud LogService](https://github.com/aliyun-sls/opentelemetry-demo) | [Grafana Labs](https://github.com/grafana/opentelemetry-demo) | [Sentry](https://github.com/getsentry/opentelemetry-demo) |

-| [AppDynamics](https://www.appdynamics.com/blog/cloud/how-to-observe-opentelemetry-demo-app-in-appdynamics-cloud/) | [Helios](https://otelsandbox.gethelios.dev) | [Splunk](https://github.com/signalfx/opentelemetry-demo) |

-| [Aspecto](https://github.com/aspecto-io/opentelemetry-demo) | [Honeycomb.io](https://github.com/honeycombio/opentelemetry-demo) | [Sumo Logic](https://www.sumologic.com/blog/common-opentelemetry-demo-application/) |

-| [Coralogix](https://coralogix.com/blog/configure-otel-demo-send-telemetry-data-coralogix) | [Instana](https://github.com/instana/opentelemetry-demo) | [TelemetryHub](https://github.com/TelemetryHub/opentelemetry-demo/tree/telemetryhub-backend) |

-| [Datadog](https://github.com/DataDog/opentelemetry-demo) | [Kloudfuse](https://github.com/kloudfuse/opentelemetry-demo) | [Teletrace](https://github.com/teletrace/opentelemetry-demo) |

+| ----------------------------------------------------------------------------------------------------------------- | ----------------------------------------------------------------- |----------------------------------------------------------------------------------------------|

+| [AlibabaCloud LogService](https://github.com/aliyun-sls/opentelemetry-demo) | [Grafana Labs](https://github.com/grafana/opentelemetry-demo) | [Sentry](https://github.com/getsentry/opentelemetry-demo) |

+| [AppDynamics](https://www.appdynamics.com/blog/cloud/how-to-observe-opentelemetry-demo-app-in-appdynamics-cloud/) | [Helios](https://otelsandbox.gethelios.dev) | [Splunk](https://github.com/signalfx/opentelemetry-demo) |

+| [Aspecto](https://github.com/aspecto-io/opentelemetry-demo) | [Honeycomb.io](https://github.com/honeycombio/opentelemetry-demo) | [Sumo Logic](https://www.sumologic.com/blog/common-opentelemetry-demo-application/) |

+| [Coralogix](https://coralogix.com/blog/configure-otel-demo-send-telemetry-data-coralogix) | [Instana](https://github.com/instana/opentelemetry-demo) | [TelemetryHub](https://github.com/TelemetryHub/opentelemetry-demo/tree/telemetryhub-backend) |

+| [Datadog](https://github.com/DataDog/opentelemetry-demo) | [Kloudfuse](https://github.com/kloudfuse/opentelemetry-demo) | [Teletrace](https://github.com/teletrace/opentelemetry-demo) |

| [Dynatrace](https://www.dynatrace.com/news/blog/opentelemetry-demo-application-with-dynatrace/) | [Lightstep](https://github.com/lightstep/opentelemetry-demo) | [Uptrace](https://github.com/uptrace/uptrace/tree/master/example/opentelemetry-demo) |

-| [Elastic](https://github.com/elastic/opentelemetry-demo) | [New Relic](https://github.com/newrelic/opentelemetry-demo) | |

+| [Elastic](https://github.com/elastic/opentelemetry-demo) | [New Relic](https://github.com/newrelic/opentelemetry-demo) | [OpenSearch](https://github.com/opensearch-project/opentelemetry-demo) |

| | | |

## Contributing

diff --git a/add_hosts_locally.sh b/add_hosts_locally.sh

new file mode 100644

index 0000000000..de17f7340e

--- /dev/null

+++ b/add_hosts_locally.sh

@@ -0,0 +1,30 @@

+#!/bin/bash

+# Copyright The OpenTelemetry Authors

+# SPDX-License-Identifier: Apache-2.0

+

+# Local hostnames used to help develop and debug the OTEL demo locally

+

+# The IP address you want to associate with the hostname

+IP="127.0.0.1"

+

+# The hostname you want to associate with the IP address

+OPENSEARCH_HOST="opensearch-node1"

+OPENSEARCH_DASHBOARD="opensearch-dashboards"

+OTEL_STORE="frontend"

+FEATURE_FLAG_SERVICE="feature-flag-service"

+NGINX="nginx"

+OTEL_LOADER="loadgenerator"

+PROMETHEUS="prometheus"

+LOAD_GENERATOR="load-generator"

+GRAFANA="grafana"

+

+# Add the entry to the /etc/hosts file

+echo "$IP $OPENSEARCH_HOST" | sudo tee -a /etc/hosts

+echo "$IP $OPENSEARCH_DASHBOARD" | sudo tee -a /etc/hosts

+echo "$IP $OTEL_STORE" | sudo tee -a /etc/hosts

+echo "$IP $PROMETHEUS" | sudo tee -a /etc/hosts

+echo "$IP $OTEL_LOADER" | sudo tee -a /etc/hosts

+echo "$IP $NGINX" | sudo tee -a /etc/hosts

+echo "$IP $LOAD_GENERATOR" | sudo tee -a /etc/hosts

+echo "$IP $FEATURE_FLAG_SERVICE" | sudo tee -a /etc/hosts

+echo "$IP $GRAFANA" | sudo tee -a /etc/hosts
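Reviewer sketch (not part of the patch): the script above appends with `tee -a` on every run, so running it twice leaves duplicate lines in `/etc/hosts`. A possible guard, shown here under assumptions, checks for an existing mapping before appending. The `add_host_entry` helper and its third parameter (the hosts file path) are hypothetical; the parameter exists so the sketch can be tried on a temporary file without `sudo`.

```shell
#!/bin/sh
# Append "IP hostname" to a hosts file only if that mapping is not already present.
# $1: IP address, $2: hostname, $3: hosts file to update (hypothetical parameter).
add_host_entry() {
  # awk exits 0 when a line starts with the IP and lists the hostname.
  if awk -v ip="$1" -v host="$2" '
       $1 == ip { for (i = 2; i <= NF; i++) if ($i == host) found = 1 }
       END { exit !found }' "$3"; then
    return 0
  fi
  printf '%s %s\n' "$1" "$2" >> "$3"
}

# Demonstration on a throwaway file rather than /etc/hosts.
demo_hosts="$(mktemp)"
add_host_entry "127.0.0.1" "opensearch-node1" "$demo_hosts"
add_host_entry "127.0.0.1" "opensearch-node1" "$demo_hosts"   # second call is a no-op
cat "$demo_hosts"   # prints a single "127.0.0.1 opensearch-node1" line
```

Writing to the real `/etc/hosts` would still need elevated privileges, for example by keeping the `sudo tee -a` form from the script for the append step.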

diff --git a/docker-compose.yml b/docker-compose.yml

index f06380dad6..8abcc62c87 100644

--- a/docker-compose.yml

+++ b/docker-compose.yml

@@ -9,6 +9,10 @@ x-default-logging: &logging

max-size: "5m"

max-file: "2"

+volumes:

+ opensearch-data1:

+ opensearch-data2:

+

networks:

default:

name: opentelemetry-demo

@@ -216,7 +220,7 @@ services:

memory: 175M

restart: unless-stopped

ports:

- - "${FEATURE_FLAG_SERVICE_PORT}" # Feature Flag Service UI

+ - "${FEATURE_FLAG_SERVICE_PORT}:${FEATURE_FLAG_SERVICE_PORT}" # Feature Flag Service UI

- "${FEATURE_FLAG_GRPC_SERVICE_PORT}" # Feature Flag Service gRPC API

environment:

- FEATURE_FLAG_SERVICE_PORT

@@ -274,7 +278,7 @@ services:

memory: 200M

restart: unless-stopped

ports:

- - "${FRONTEND_PORT}"

+ - "${FRONTEND_PORT}:${FRONTEND_PORT}"

environment:

- PORT=${FRONTEND_PORT}

- FRONTEND_ADDR

@@ -293,6 +297,7 @@ services:

- OTEL_EXPORTER_OTLP_METRICS_TEMPORALITY_PREFERENCE

- WEB_OTEL_SERVICE_NAME=frontend-web

depends_on:

+ - accountingservice

- adservice

- cartservice

- checkoutservice

@@ -302,42 +307,9 @@ services:

- quoteservice

- recommendationservice

- shippingservice

+ - paymentservice

logging: *logging

- # Frontend Proxy (Envoy)

- frontendproxy:

- image: ${IMAGE_NAME}:${IMAGE_VERSION}-frontendproxy

- container_name: frontend-proxy

- build:

- context: ./

- dockerfile: src/frontendproxy/Dockerfile

- deploy:

- resources:

- limits:

- memory: 50M

- ports:

- - "${ENVOY_PORT}:${ENVOY_PORT}"

- - 10000:10000

- environment:

- - FRONTEND_PORT

- - FRONTEND_HOST

- - FEATURE_FLAG_SERVICE_PORT

- - FEATURE_FLAG_SERVICE_HOST

- - LOCUST_WEB_HOST

- - LOCUST_WEB_PORT

- - GRAFANA_SERVICE_PORT

- - GRAFANA_SERVICE_HOST

- - JAEGER_SERVICE_PORT

- - JAEGER_SERVICE_HOST

- - OTEL_COLLECTOR_HOST

- - OTEL_COLLECTOR_PORT

- - ENVOY_PORT

- depends_on:

- - frontend

- - featureflagservice

- - loadgenerator

- - grafana

-

# Load Generator

loadgenerator:

image: ${IMAGE_NAME}:${IMAGE_VERSION}-loadgenerator

@@ -353,7 +325,7 @@ services:

memory: 120M

restart: unless-stopped

ports:

- - "${LOCUST_WEB_PORT}"

+ - "${LOCUST_WEB_PORT}:${LOCUST_WEB_PORT}"

environment:

- LOCUST_WEB_PORT

- LOCUST_USERS

@@ -395,6 +367,9 @@ services:

logging: *logging

# Product Catalog service

+ # if proxy.golang.org is experiencing i/o timeout use the next:

+ # - go env -w GOPROXY=direct

+ # - go env -w GOSUMDB=off

productcatalogservice:

image: ${IMAGE_NAME}:${IMAGE_VERSION}-productcatalogservice

container_name: product-catalog-service

@@ -479,6 +454,39 @@ services:

- featureflagservice

logging: *logging

+ # Frontend Proxy service

+ nginx:

+ image: nginx:latest

+ container_name: nginx

+ volumes:

+ - ./src/nginx-otel/default.conf:/etc/nginx/conf.d/default.conf

+ ports:

+ - 90:90

+ depends_on:

+ - frontend

+ - fluentbit

+ - otelcol

+ - loadgenerator

+ links:

+ - fluentbit

+ logging:

+ driver: "fluentd"

+ options:

+ fluentd-address: 127.0.0.1:24224

+ tag: nginx.access

+

+ # Fluent-bit logs shipper service

+ fluentbit:

+ container_name: fluentbit

+ image: fluent/fluent-bit:latest

+ volumes:

+ - ./src/fluent-bit:/fluent-bit/etc

+ ports:

+ - "24224:24224"

+ - "24224:24224/udp"

+ depends_on:

+ - opensearch-node1

+

# Shipping service

shippingservice:

image: ${IMAGE_NAME}:${IMAGE_VERSION}-shippingservice

@@ -576,17 +584,27 @@ services:

# ********************

# Telemetry Components

# ********************

+ # data-prepper

+ data-prepper:

+ image: opensearchproject/data-prepper:latest

+ container_name: data-prepper

+ restart: unless-stopped

+ volumes:

+ - ./src/dataprepper/trace_analytics_no_ssl_2x.yml:/usr/share/data-prepper/pipelines/pipelines.yaml

+ - ./src/dataprepper/data-prepper-config.yaml:/usr/share/data-prepper/config/data-prepper-config.yaml

+ ports:

+ - "21890:21890"

+ depends_on:

+ - opensearch-node1

+

# Jaeger

jaeger:

- image: jaegertracing/all-in-one

+ image: jaegertracing/jaeger-collector:latest

container_name: jaeger

command:

- - "--memory.max-traces"

- - "10000"

- - "--query.base-path"

- - "/jaeger/ui"

- - "--prometheus.server-url"

- - "http://${PROMETHEUS_ADDR}"

+ - "--metrics-backend=prometheus"

+ - "--es.server-urls=https://opensearch-node1:9200"

+ - "--es.tls.enabled=true"

deploy:

resources:

limits:

@@ -594,12 +612,43 @@ services:

restart: unless-stopped

ports:

- "${JAEGER_SERVICE_PORT}" # Jaeger UI

- - "4317" # OTLP gRPC default port

+ - "4317" # OTLP gRPC default port

+ - "14269:14269"

+ - "14268:14268"

+ - "14267:14267"

+ - "14250:14250"

+ - "9411:9411"

environment:

- COLLECTOR_OTLP_ENABLED=true

- METRICS_STORAGE_TYPE=prometheus

+ - SPAN_STORAGE_TYPE=opensearch

+ - ES_TAGS_AS_FIELDS_ALL=true

+ - ES_USERNAME=admin

+ - ES_PASSWORD=admin

+ - ES_TLS_SKIP_HOST_VERIFY=true

+ depends_on:

+ - opensearch-node1

+ - opensearch-node2

+

logging: *logging

+ jaeger-agent:

+ image: jaegertracing/jaeger-agent:latest

+ container_name: jaeger-agent

+ hostname: jaeger-agent

+ command: ["--reporter.grpc.host-port=jaeger:14250"]

+ ports:

+ - "${GRAFANA_SERVICE_PORT}"

+ - "5775:5775/udp"

+ - "6831:6831/udp"

+ - "6832:6832/udp"

+ - "5778:5778"

+ restart: on-failure

+ environment:

+ - SPAN_STORAGE_TYPE=opensearch

+ depends_on:

+ - jaeger

+

# Grafana

grafana:

image: grafana/grafana:9.4.7

@@ -612,7 +661,7 @@ services:

- ./src/grafana/grafana.ini:/etc/grafana/grafana.ini

- ./src/grafana/provisioning/:/etc/grafana/provisioning/

ports:

- - "${GRAFANA_SERVICE_PORT}"

+ - "${GRAFANA_SERVICE_PORT}:${GRAFANA_SERVICE_PORT}"

logging: *logging

# OpenTelemetry Collector

@@ -634,7 +683,8 @@ services:

- "9464" # Prometheus exporter

- "8888" # metrics endpoint

depends_on:

- - jaeger

+ - jaeger-agent

+ - data-prepper

logging: *logging

# Prometheus

@@ -660,6 +710,81 @@ services:

- "${PROMETHEUS_SERVICE_PORT}:${PROMETHEUS_SERVICE_PORT}"

logging: *logging

+ # OpenSearch store - node1

+ opensearch-node1: # This is also the hostname of the container within the Docker network (i.e. https://opensearch-node1/)

+ image: opensearchproject/opensearch:latest # Specifying the latest available image - modify if you want a specific version

+ container_name: opensearch-node1

+ environment:

+ - cluster.name=opensearch-cluster # Name the cluster

+ - node.name=opensearch-node1 # Name the node that will run in this container

+ - discovery.seed_hosts=opensearch-node1,opensearch-node2 # Nodes to look for when discovering the cluster

+ - cluster.initial_cluster_manager_nodes=opensearch-node1,opensearch-node2 # Nodes eligible to serve as cluster manager

+ - bootstrap.memory_lock=true # Disable JVM heap memory swapping

+ - "OPENSEARCH_JAVA_OPTS=-Xms512m -Xmx512m" # Set min and max JVM heap sizes to at least 50% of system RAM

+ ulimits:

+ memlock:

+ soft: -1 # Set memlock to unlimited (no soft or hard limit)

+ hard: -1

+ nofile:

+ soft: 65536 # Maximum number of open files for the opensearch user - set to at least 65536

+ hard: 65536

+ volumes:

+ - opensearch-data1:/usr/share/opensearch/data # Creates volume called opensearch-data1 and mounts it to the container

+ healthcheck:

+ test: ["CMD", "curl", "-f", "https://opensearch-node1:9200/_cluster/health?wait_for_status=yellow", "-k", "-u", "admin:admin"]

+ interval: 5s

+ timeout: 25s

+ retries: 4

+ ports:

+ - "9200:9200"

+ - "9600:9600"

+

+ # OpenSearch store - node2

+ opensearch-node2:

+ image: opensearchproject/opensearch:latest # This should be the same image used for opensearch-node1 to avoid issues

+ container_name: opensearch-node2

+ environment:

+ - cluster.name=opensearch-cluster

+ - node.name=opensearch-node2

+ - discovery.seed_hosts=opensearch-node1,opensearch-node2

+ - cluster.initial_cluster_manager_nodes=opensearch-node1,opensearch-node2

+ - bootstrap.memory_lock=true

+ - "OPENSEARCH_JAVA_OPTS=-Xms512m -Xmx512m"

+ ulimits:

+ memlock:

+ soft: -1

+ hard: -1

+ nofile:

+ soft: 65536

+ hard: 65536

+ volumes:

+ - opensearch-data2:/usr/share/opensearch/data

+

+ # OpenSearch store - dashboard

+ opensearch-dashboards:

+ image: opensearchproject/opensearch-dashboards:latest # Make sure the version of opensearch-dashboards matches the version of opensearch installed on other nodes

+ container_name: opensearch-dashboards

+ ports:

+ - 5601:5601 # Map host port 5601 to container port 5601

+ expose:

+ - "5601" # Expose port 5601 for web access to OpenSearch Dashboards

+ environment:

+ OPENSEARCH_HOSTS: '["https://opensearch-node1:9200","https://opensearch-node2:9200"]' # Define the OpenSearch nodes that OpenSearch Dashboards will query

+ depends_on:

+ - opensearch-node1

+ - opensearch-node2

+ - prometheus

+

+# Observability OSD Integrations

+ integrations:

+ build:

+ context: ./src/integrations

+ dockerfile: Dockerfile

+ volumes:

+ - ./src/integrations:/integrations

+ depends_on:

+ - opensearch-dashboards

+

# *****

# Tests

# *****
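Reviewer sketch (not part of the patch): the `opensearch-node1` healthcheck above polls `_cluster/health?wait_for_status=yellow` every 5 seconds with 4 retries. A similar wait can be approximated from the host with a small retry loop before opening the dashboards. The `wait_until` helper is a hypothetical name, and the commented `curl` call assumes the `9200:9200` port mapping and the `admin:admin` demo credentials shown in this diff.

```shell
#!/bin/sh
# Retry a command until it succeeds or the attempts run out.
# $1: maximum attempts, $2: seconds to sleep between attempts, rest: command to run.
wait_until() {
  attempts="$1"
  delay="$2"
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    i=$((i + 1))
    # Sleep only when another attempt will follow.
    if [ "$i" -le "$attempts" ]; then
      sleep "$delay"
    fi
  done
  return 1
}

# Example against the demo cluster (not executed here):
# wait_until 4 5 curl -fsk -u admin:admin \
#   "https://localhost:9200/_cluster/health?wait_for_status=yellow"
```

The helper returns the usual shell status, so it can gate a follow-up step with `&&`.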

diff --git a/local-config.md b/local-config.md

new file mode 100644

index 0000000000..10a38e7d43

--- /dev/null

+++ b/local-config.md

@@ -0,0 +1,33 @@

+# Local Dev Config

+

+Use the following configuration to locally run and test the OTEL demo:

+

+- For additional help go [here](https://opensearch.org/docs/latest/install-and-configure/install-opensearch/docker/)

+

+### Raise your host's limits:

+Raise the kernel's `vm.max_map_count` limit so that OpenSearch can handle high I/O:

+

+`sudo sysctl -w vm.max_map_count=512000`

+

+### Map service names to local DNS

+

+Run the [following script](add_hosts_locally.sh) to map the Docker Compose service names to localhost in your `/etc/hosts` file:

+

+```text

+# The hostname you want to associate with the IP address

+

+OPENSEARCH_HOST="opensearch-node1"

+OPENSEARCH_DASHBOARD="opensearch-dashboards"

+OTEL_STORE="frontend"

+OTEL_LOADER="loadgenerator"

+PROMETHEUS="prometheus"

+

+# Add the entry to the /etc/hosts file

+

+echo "$IP $OPENSEARCH_HOST" | sudo tee -a /etc/hosts

+echo "$IP $OPENSEARCH_DASHBOARD" | sudo tee -a /etc/hosts

+echo "$IP $OTEL_STORE" | sudo tee -a /etc/hosts

+echo "$IP $PROMETHEUS" | sudo tee -a /etc/hosts

+echo "$IP $OTEL_LOADER" | sudo tee -a /etc/hosts

+

+```

\ No newline at end of file
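Reviewer sketch (not part of the patch): before running the `sysctl -w` command from `local-config.md`, it can help to check whether the current value is already high enough. The helper below is a minimal sketch for Linux hosts. `needs_max_map_count_raise` is a hypothetical name, and the optional second parameter (a file holding the current value) exists only so the check can be tried on a sample file. The sample value 65530 is illustrative.

```shell
#!/bin/sh
# Succeed (exit 0) when the current vm.max_map_count is below the required minimum.
# $1: required minimum, $2: file holding the current value (defaults to the procfs path).
needs_max_map_count_raise() {
  required="$1"
  value_file="${2:-/proc/sys/vm/max_map_count}"
  current="$(cat "$value_file")"
  [ "$current" -lt "$required" ]
}

# Demonstration on a sample file instead of the live kernel setting.
sample_value_file="$(mktemp)"
echo 65530 > "$sample_value_file"
if needs_max_map_count_raise 512000 "$sample_value_file"; then
  echo "vm.max_map_count is below 512000; run: sudo sysctl -w vm.max_map_count=512000"
fi
```

On a real host the second argument would be omitted so that the value is read from `/proc/sys/vm/max_map_count`.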

diff --git a/src/accountingservice/Dockerfile b/src/accountingservice/Dockerfile

index 320b538c54..c29cfff920 100644

--- a/src/accountingservice/Dockerfile

+++ b/src/accountingservice/Dockerfile

@@ -4,11 +4,18 @@

FROM golang:1.19.2-alpine AS builder

RUN apk add build-base protobuf-dev protoc

+RUN apk add --no-cache git

+RUN apk add --no-cache curl

+

WORKDIR /usr/src/app/

# Restore dependencies

COPY ./src/accountingservice/ ./

COPY ./pb/ ./proto/

+

+ENV GOPROXY=direct

+ENV GOSUMDB=off

+

RUN go mod download

RUN go install google.golang.org/protobuf/cmd/protoc-gen-go@v1.28

RUN go install google.golang.org/grpc/cmd/protoc-gen-go-grpc@v1.2

diff --git a/src/checkoutservice/Dockerfile b/src/checkoutservice/Dockerfile

index 2a7badab96..0a113cbc2b 100644

--- a/src/checkoutservice/Dockerfile

+++ b/src/checkoutservice/Dockerfile

@@ -3,12 +3,18 @@

FROM golang:1.19.2-alpine AS builder

+RUN apk add --no-cache git

+RUN apk add --no-cache curl

RUN apk add build-base protobuf-dev protoc

WORKDIR /usr/src/app/

# Restore dependencies

COPY ./src/checkoutservice/ ./

COPY ./pb/ ./proto/

+

+ENV GOPROXY=direct

+ENV GOSUMDB=off

+

RUN go mod download

RUN go install google.golang.org/protobuf/cmd/protoc-gen-go@v1.28

RUN go install google.golang.org/grpc/cmd/protoc-gen-go-grpc@v1.2

diff --git a/src/currencyservice/Dockerfile b/src/currencyservice/Dockerfile

index 68efe19c24..74f77f1cd4 100644

--- a/src/currencyservice/Dockerfile

+++ b/src/currencyservice/Dockerfile

@@ -17,6 +17,7 @@

FROM alpine as builder

RUN apk update

+RUN apk add --no-cache curl

RUN apk add git cmake make g++ grpc-dev re2-dev protobuf-dev c-ares-dev

ARG OPENTELEMETRY_CPP_VERSION=1.9.0

diff --git a/src/currencyservice/README.md b/src/currencyservice/README.md

index 27a23f397f..be2951d9ea 100644

--- a/src/currencyservice/README.md

+++ b/src/currencyservice/README.md

@@ -9,7 +9,7 @@ To build the currency service, run the following from root directory

of opentelemetry-demo

```sh

-docker-compose build currencyservice

+docker compose build currencyservice

```

## Run the service

@@ -17,7 +17,7 @@ docker-compose build currencyservice

Execute the below command to run the service.

```sh

-docker-compose up currencyservice

+docker compose up currencyservice

```

## Run the client

diff --git a/src/dataprepper/README.md b/src/dataprepper/README.md

new file mode 100644

index 0000000000..dd0a5d1135

--- /dev/null

+++ b/src/dataprepper/README.md

@@ -0,0 +1,52 @@

+

+

+# What is Data Prepper

+

+[Data Prepper](https://github.com/opensearch-project/data-prepper/blob/main/docs/overview.md) is an open source utility service. Data Prepper is a server side data collector with abilities to filter, enrich, transform, normalize and aggregate data for downstream analytics and visualization. The broader vision for Data Prepper is to enable an end-to-end data analysis life cycle from gathering raw logs to facilitating sophisticated and actionable interactive ad-hoc analyses on the data.

+

+# What is Data Prepper Integration

+

+Data Prepper integration is concerned with the following aspects:

+

+- Allow simple and automatic generation of all schema-structured signals:

+ - traces (including specific field mappings to the SS4O schema)

+ - services (adding support for a specific service mapping category)

+ - metrics (using the standard SS4O schema)

+

+- Add Dashboard Assets for correlation between traces-services-metrics

+

+- Add correlation queries to investigate trace-based metrics

+

+# Data Prepper Trace Fields

+Data Prepper uses the following [Traces](https://github.com/opensearch-project/data-prepper/blob/main/docs/schemas/trace-analytics/otel-v1-apm-span-index-template.md) mapping file.

+The following fields are used:

+```text

+

+- traceId - A unique identifier for a trace. All spans from the same trace share the same traceId.

+- spanId - A unique identifier for a span within a trace, assigned when the span is created.

+- traceState - Conveys information about request position in multiple distributed tracing graphs.

+- parentSpanId - The spanId of this span's parent span. If this is a root span, then this field must be empty.

+- name - A description of the span's operation.

+- kind - The type of span. See OpenTelemetry - SpanKind.

+- startTime - The start time of the span.

+- endTime - The end time of the span.

+- durationInNanos - Difference in nanoseconds between startTime and endTime.

+- serviceName - Currently derived from the opentelemetry.proto.resource.v1.Resource associated with the span, the resource from which the span originates.

+- events - A list of events. See OpenTelemetry - Events.

+- links - A list of linked spans. See OpenTelemetry - Links.

+- droppedAttributesCount - The number of attributes that were discarded.

+- droppedEventsCount - The number of events that were discarded.

+- droppedLinksCount - The number of links that were dropped.

+- span.attributes.* - All span attributes are split into a list of keywords.

+- resource.attributes.* - All resource attributes are split into a list of keywords.

+- status.code - The status of the span. See OpenTelemetry - Status.

+

+```

+There are some additional `trace.group` related fields which are not part of the [OTEL spec](https://github.com/open-telemetry/opentelemetry-specification/blob/main/specification/trace/api.md) for traces

+```text

+- traceGroup - A derived field, the name of the trace's root span.

+- traceGroupFields.endTime - A derived field, the endTime of the trace's root span.

+- traceGroupFields.statusCode - A derived field, the status.code of the trace's root span.

+- traceGroupFields.durationInNanos - A derived field, the durationInNanos of the trace's root span.

+

+```
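The derived fields listed above (`durationInNanos`, `traceGroup`, `traceGroupFields.*`) can be illustrated with a minimal Python sketch. It is not Data Prepper's implementation: the function name `add_derived_fields` is hypothetical, and `startTime`/`endTime` are assumed to be integer epoch nanoseconds purely to keep the arithmetic simple.

```python
from typing import Dict, List


def add_derived_fields(spans: List[dict]) -> List[dict]:
    """Return copies of `spans` with durationInNanos and trace-group fields added.

    Assumption: startTime and endTime are integer epoch nanoseconds here;
    the real index stores them as timestamps.
    """
    def duration(span: dict) -> int:
        return span["endTime"] - span["startTime"]

    # A root span is one whose parentSpanId is empty.
    roots: Dict[str, dict] = {
        s["traceId"]: s for s in spans if not s.get("parentSpanId")
    }

    enriched = []
    for span in spans:
        out = dict(span)
        out["durationInNanos"] = duration(span)
        root = roots.get(span["traceId"])
        if root is not None:
            # Every span in a trace carries the same values, copied from the root.
            out["traceGroup"] = root["name"]
            out["traceGroupFields"] = {
                "endTime": root["endTime"],
                "statusCode": root["status"]["code"],
                "durationInNanos": duration(root),
            }
        enriched.append(out)
    return enriched


# Illustrative data only: one root span and one child span of the same trace.
spans = [
    {"traceId": "t1", "spanId": "a", "parentSpanId": "", "name": "HTTP GET /cart",
     "startTime": 1_000, "endTime": 9_000, "status": {"code": 0}},
    {"traceId": "t1", "spanId": "b", "parentSpanId": "a", "name": "redis GET",
     "startTime": 2_000, "endTime": 5_000, "status": {"code": 0}},
]
result = add_derived_fields(spans)
print(result[1]["traceGroup"], result[1]["durationInNanos"])
```

Because the trace-group values are copied from the root span onto every span of the trace, a query can group or filter child spans by root operation without a join.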

diff --git a/src/dataprepper/data-prepper-config.yaml b/src/dataprepper/data-prepper-config.yaml

new file mode 100644

index 0000000000..5544f3183b

--- /dev/null

+++ b/src/dataprepper/data-prepper-config.yaml

@@ -0,0 +1 @@

+ssl: false

\ No newline at end of file

diff --git a/src/dataprepper/dataPrepper.svg b/src/dataprepper/dataPrepper.svg

new file mode 100644

index 0000000000..2e17a10168

--- /dev/null

+++ b/src/dataprepper/dataPrepper.svg

@@ -0,0 +1,8 @@

+

\ No newline at end of file

diff --git a/src/dataprepper/root-ca.pem b/src/dataprepper/root-ca.pem

new file mode 100644

index 0000000000..4015d866e1

--- /dev/null

+++ b/src/dataprepper/root-ca.pem

@@ -0,0 +1,24 @@

+-----BEGIN CERTIFICATE-----

+MIID/jCCAuagAwIBAgIBATANBgkqhkiG9w0BAQsFADCBjzETMBEGCgmSJomT8ixk

+ARkWA2NvbTEXMBUGCgmSJomT8ixkARkWB2V4YW1wbGUxGTAXBgNVBAoMEEV4YW1w

+bGUgQ29tIEluYy4xITAfBgNVBAsMGEV4YW1wbGUgQ29tIEluYy4gUm9vdCBDQTEh

+MB8GA1UEAwwYRXhhbXBsZSBDb20gSW5jLiBSb290IENBMB4XDTE4MDQyMjAzNDM0

+NloXDTI4MDQxOTAzNDM0NlowgY8xEzARBgoJkiaJk/IsZAEZFgNjb20xFzAVBgoJ

+kiaJk/IsZAEZFgdleGFtcGxlMRkwFwYDVQQKDBBFeGFtcGxlIENvbSBJbmMuMSEw

+HwYDVQQLDBhFeGFtcGxlIENvbSBJbmMuIFJvb3QgQ0ExITAfBgNVBAMMGEV4YW1w

+bGUgQ29tIEluYy4gUm9vdCBDQTCCASIwDQYJKoZIhvcNAQEBBQADggEPADCCAQoC

+ggEBAK/u+GARP5innhpXK0c0q7s1Su1VTEaIgmZr8VWI6S8amf5cU3ktV7WT9SuV

+TsAm2i2A5P+Ctw7iZkfnHWlsC3HhPUcd6mvzGZ4moxnamM7r+a9otRp3owYoGStX

+ylVTQusAjbq9do8CMV4hcBTepCd+0w0v4h6UlXU8xjhj1xeUIz4DKbRgf36q0rv4

+VIX46X72rMJSETKOSxuwLkov1ZOVbfSlPaygXIxqsHVlj1iMkYRbQmaTib6XWHKf

+MibDaqDejOhukkCjzpptGZOPFQ8002UtTTNv1TiaKxkjMQJNwz6jfZ53ws3fh1I0

+RWT6WfM4oeFRFnyFRmc4uYTUgAkCAwEAAaNjMGEwDwYDVR0TAQH/BAUwAwEB/zAf

+BgNVHSMEGDAWgBSSNQzgDx4rRfZNOfN7X6LmEpdAczAdBgNVHQ4EFgQUkjUM4A8e

+K0X2TTnze1+i5hKXQHMwDgYDVR0PAQH/BAQDAgGGMA0GCSqGSIb3DQEBCwUAA4IB

+AQBoQHvwsR34hGO2m8qVR9nQ5Klo5HYPyd6ySKNcT36OZ4AQfaCGsk+SecTi35QF

+RHL3g2qffED4tKR0RBNGQSgiLavmHGCh3YpDupKq2xhhEeS9oBmQzxanFwWFod4T

+nnsG2cCejyR9WXoRzHisw0KJWeuNlwjUdJY0xnn16srm1zL/M/f0PvCyh9HU1mF1

+ivnOSqbDD2Z7JSGyckgKad1Omsg/rr5XYtCeyJeXUPcmpeX6erWJJNTUh6yWC/hY

+G/dFC4xrJhfXwz6Z0ytUygJO32bJG4Np2iGAwvvgI9EfxzEv/KP+FGrJOvQJAq4/

+BU36ZAa80W/8TBnqZTkNnqZV

+-----END CERTIFICATE-----

diff --git a/src/dataprepper/trace_analytics_no_ssl_2x.yml b/src/dataprepper/trace_analytics_no_ssl_2x.yml

new file mode 100644

index 0000000000..26afa364cd

--- /dev/null

+++ b/src/dataprepper/trace_analytics_no_ssl_2x.yml

@@ -0,0 +1,37 @@

+entry-pipeline:

+  delay: "100"

+  source:

+    otel_trace_source:

+      ssl: false

+  sink:

+    - pipeline:

+        name: "raw-pipeline"

+    - pipeline:

+        name: "service-map-pipeline"

+raw-pipeline:

+  source:

+    pipeline:

+      name: "entry-pipeline"

+  processor:

+    - otel_trace_raw:

+  sink:

+    - opensearch:

+        hosts: [ "https://opensearch-node1:9200" ]

+        insecure: true

+        username: "admin"

+        password: "admin"

+        index_type: trace-analytics-raw

+service-map-pipeline:

+  delay: "100"

+  source:

+    pipeline:

+      name: "entry-pipeline"

+  processor:

+    - service_map_stateful:

+  sink:

+    - opensearch:

+        hosts: ["https://opensearch-node1:9200"]

+        insecure: true

+        username: "admin"

+        password: "admin"

+        index_type: trace-analytics-service-map

\ No newline at end of file

diff --git a/src/featureflagservice/mix.exs b/src/featureflagservice/mix.exs

index febc7ab014..6f8e197322 100644

--- a/src/featureflagservice/mix.exs

+++ b/src/featureflagservice/mix.exs

@@ -63,8 +63,13 @@ defmodule Featureflagservice.MixProject do

{:opentelemetry_api, "~> 1.2.1"},

{:opentelemetry, "~> 1.2.1"},

{:opentelemetry_phoenix, "~> 1.0.0"},

- {:opentelemetry_ecto, "~> 1.0.0"}

- ]

+ #{:opentelemetry_ecto, "~> 1.0.0"},

+ {:opentelemetry_ecto, git: "https://github.com/styblope/opentelemetry_ecto.git"},

+ # for opentelemetry_ecto

+ {:telemetry, "~> 0.4 or ~> 1.0"},

+ {:ex_doc, "~> 0.28.0", only: [:dev], runtime: false},

+ {:dialyxir, "~> 1.1", only: [:dev, :test], runtime: false},

+ {:opentelemetry_process_propagator, "~> 0.1.0"} ]

end

# Aliases are shortcuts or tasks specific to the current project.

diff --git a/src/fluent-bit/README.md b/src/fluent-bit/README.md

new file mode 100644

index 0000000000..ef7371b223

--- /dev/null

+++ b/src/fluent-bit/README.md

@@ -0,0 +1,38 @@

+

+

+## Fluent-bit

+

+Fluent-bit is a lightweight and flexible data collector and forwarder, designed to handle a large volume of log data in real-time.

+It is an open-source project, part of the Cloud Native Computing Foundation (CNCF), and has gained popularity among developers for its simplicity and ease of use.

+

+Fluent-bit has a small footprint, so it can be installed in resource-constrained environments such as embedded systems or containers. It is written in C, which makes it fast and efficient while consuming minimal memory and other system resources.

+

+Fluent-bit is a versatile tool that can collect data from various sources, including files, standard input, syslog, and TCP/UDP sockets. It also supports parsing different log formats like JSON, Apache, and Syslog. Fluent-bit provides a flexible configuration system that lets users tailor log collection to their needs, which makes it easy to adapt to different use cases.

+

+One of the main advantages of Fluent-bit is its ability to forward log data to various destinations, including OpenSearch, InfluxDB, and Kafka. Its output plugins let users route log data to different destinations based on their requirements, which makes Fluent-bit well suited to distributed systems where log data must be collected into a central repository.

+

+Fluent-bit also provides a powerful filtering mechanism that allows users to manipulate log data in real-time. It supports various filter plugins, including record modifiers, parsers, and field extraction. With these filters, users can parse and enrich log data, extract fields, and modify records before sending them to their destination.

+

+## Setting Up Fluent-bit agent

+

+To set up a Fluent-bit agent for Nginx, follow these steps:

+

+- Install Fluent-bit on the Nginx server. You can download the latest package from the official Fluent-bit website or use your package manager to install it.

+

+- Once Fluent-bit is installed, create a configuration file named [fluent-bit.conf](fluent-bit.conf) in the /etc/fluent-bit/ directory, using the linked file as its contents.

+

+Here, the `forward` input plugin listens on port 24224, a `parser` filter applies the `nginx` parser to records tagged `nginx.access`, and a Lua filter reshapes each parsed record before it is sent on.

+

+For the output, we use the `opensearch` plugin to send the logs to OpenSearch. We specify the OpenSearch host, port, and index name.

+

+ - Modify the OpenSearch host and port in the configuration file to match your OpenSearch installation.

+ - Depending on the system where Fluent Bit is installed:

+ - Start the Fluent-bit service by running the following command:

+

+```text

+sudo systemctl start fluent-bit

+```

+- Verify that Fluent-bit is running by checking its status:

+```text

+sudo systemctl status fluent-bit

+```

diff --git a/src/fluent-bit/fluent-bit.conf b/src/fluent-bit/fluent-bit.conf

new file mode 100644

index 0000000000..3f87c833c6

--- /dev/null

+++ b/src/fluent-bit/fluent-bit.conf

@@ -0,0 +1,36 @@

+[SERVICE]

+ Parsers_File parsers.conf

+ Log_Level info

+ Daemon off

+[INPUT]

+ Name forward

+ Port 24224

+

+[FILTER]

+ Name parser

+ Match nginx.access

+ Key_Name log

+ Parser nginx

+

+[FILTER]

+ Name lua

+ Match nginx.access

+ code function cb_filter(a,b,c)local d={}local e=os.date("!%Y-%m-%dT%H:%M:%S.000Z")d["observerTime"]=e;d["body"]=c.remote.." "..c.host.." "..c.user.." ["..os.date("%d/%b/%Y:%H:%M:%S %z").."] \""..c.method.." "..c.path.." HTTP/1.1\" "..c.code.." "..c.size.." \""..c.referer.."\" \""..c.agent.."\""d["trace_id"]="102981ABCD2901"d["span_id"]="abcdef1010"d["attributes"]={}d["attributes"]["data_stream"]={}d["attributes"]["data_stream"]["dataset"]="nginx.access"d["attributes"]["data_stream"]["namespace"]="production"d["attributes"]["data_stream"]["type"]="logs"d["event"]={}d["event"]["category"]={"web"}d["event"]["name"]="access"d["event"]["domain"]="nginx.access"d["event"]["kind"]="event"d["event"]["result"]="success"d["event"]["type"]={"access"}d["http"]={}d["http"]["request"]={}d["http"]["request"]["method"]=c.method;d["http"]["response"]={}d["http"]["response"]["bytes"]=tonumber(c.size)d["http"]["response"]["status_code"]=c.code;d["http"]["flavor"]="1.1"d["http"]["url"]=c.path;d["communication"]={}d["communication"]["source"]={}d["communication"]["source"]["address"]="127.0.0.1"d["communication"]["source"]["ip"]=c.remote;return 1,b,d end

+ call cb_filter

+

+[OUTPUT]

+ Name opensearch

+ Match nginx.*

+ Host opensearch-node1

+ Port 9200

+ tls On

+ tls.verify Off

+ HTTP_Passwd admin

+ HTTP_User admin

+ Index sso_logs-nginx-prod

+ Generate_ID On

+ Retry_Limit False

+ Suppress_Type_Name On

+[OUTPUT]

+ Name stdout

+ Match nginx.access

\ No newline at end of file
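The minified `cb_filter` Lua callback in the config above is hard to read on one line. The Python sketch below mirrors its logic as far as it can be read from that line, for review purposes only; it is not part of the change. The function name `nginx_record_to_log` and the sample record are hypothetical, and the hard-coded `trace_id`, `span_id`, and source address are copied verbatim from the Lua code.

```python
from datetime import datetime, timezone
from typing import Optional


def nginx_record_to_log(rec: dict, now: Optional[datetime] = None) -> dict:
    """Reshape a parsed Nginx access record the way cb_filter does."""
    now = now or datetime.now(timezone.utc)
    # Lua: os.date("!%Y-%m-%dT%H:%M:%S.000Z") is UTC with zeroed milliseconds.
    observer_time = now.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.000Z")
    # Lua: os.date("%d/%b/%Y:%H:%M:%S %z") is the local processing time,
    # not the timestamp parsed from the original log line.
    access_time = now.astimezone().strftime("%d/%b/%Y:%H:%M:%S %z")
    body = (
        '{remote} {host} {user} [{access_time}] "{method} {path} HTTP/1.1" '
        '{code} {size} "{referer}" "{agent}"'
    ).format(access_time=access_time, **rec)
    return {
        "observerTime": observer_time,
        "body": body,
        "trace_id": "102981ABCD2901",  # hard-coded placeholder in the Lua code
        "span_id": "abcdef1010",       # hard-coded placeholder in the Lua code
        "attributes": {
            "data_stream": {
                "dataset": "nginx.access",
                "namespace": "production",
                "type": "logs",
            }
        },
        "event": {
            "category": ["web"],
            "name": "access",
            "domain": "nginx.access",
            "kind": "event",
            "result": "success",
            "type": ["access"],
        },
        "http": {
            "request": {"method": rec["method"]},
            # Lua uses tonumber(); int() is a stricter stand-in for this sketch.
            "response": {"bytes": int(rec["size"]), "status_code": rec["code"]},
            "flavor": "1.1",
            "url": rec["path"],
        },
        "communication": {
            "source": {"address": "127.0.0.1", "ip": rec["remote"]}
        },
    }


# Hypothetical record, shaped like the output of the `nginx` parser.
sample = {
    "remote": "10.0.0.5", "host": "-", "user": "-", "method": "GET",
    "path": "/index.html", "code": "200", "size": "612",
    "referer": "-", "agent": "curl/8.0",
}
fixed_now = datetime(2023, 1, 2, 3, 4, 5, tzinfo=timezone.utc)
log = nginx_record_to_log(sample, now=fixed_now)
print(log["observerTime"], log["http"]["response"]["bytes"])
```

Written out this way, it is easier to see that every record receives the same `trace_id` and `span_id`, and that `event.result` is always `"success"` regardless of the HTTP status code.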

diff --git a/src/fluent-bit/fluentbit.png b/src/fluent-bit/fluentbit.png

new file mode 100644

index 0000000000..770a562a6c

Binary files /dev/null and b/src/fluent-bit/fluentbit.png differ

diff --git a/src/fluent-bit/parsers.conf b/src/fluent-bit/parsers.conf

new file mode 100644

index 0000000000..d9015f210a

--- /dev/null

+++ b/src/fluent-bit/parsers.conf

@@ -0,0 +1,6 @@

+[PARSER]

+ Name nginx

+ Format regex

+ Regex ^(?[^ ]*) (?[^ ]*) (?[^ ]*) \[(?