diff --git a/.github/workflows/tests.yml b/.github/workflows/tests.yml

index c0550349b..10e6339ea 100644

--- a/.github/workflows/tests.yml

+++ b/.github/workflows/tests.yml

@@ -37,6 +37,8 @@ jobs:

run: |

python -m pip install --upgrade pip

pip install pytest

+ # sending files as form data throws an error with flask 3 while running tests

+ pip install Werkzeug==2.0.2 flask==2.0.2

pip install .

- name: Test with pytest

diff --git a/README.md b/README.md

index 47b0cfae6..8462279dd 100644

--- a/README.md

+++ b/README.md

@@ -341,7 +341,7 @@ cd scripts

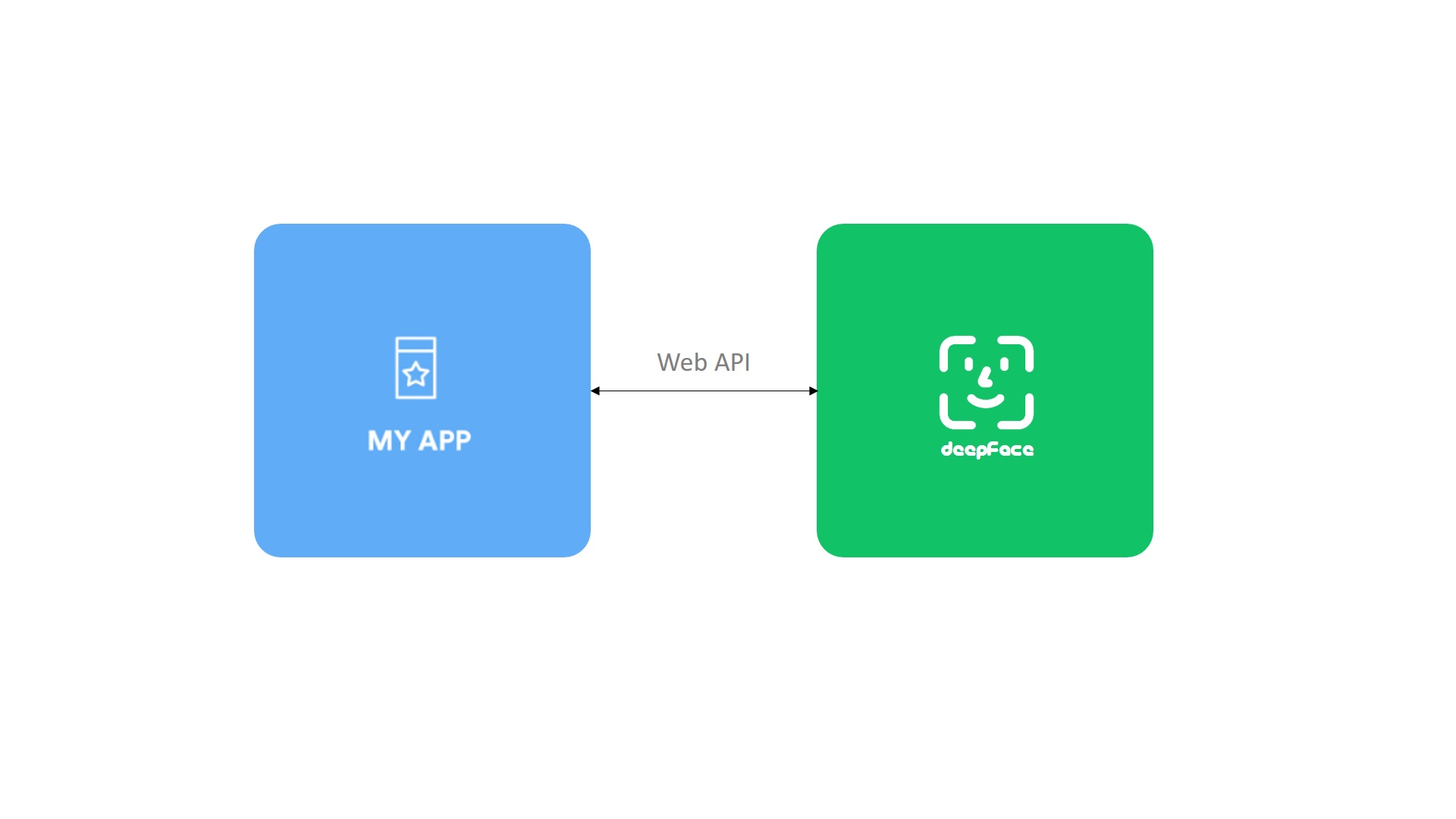

-Face recognition, facial attribute analysis and vector representation functions are covered in the API. You are expected to call these functions as http post methods. Default service endpoints will be `http://localhost:5005/verify` for face recognition, `http://localhost:5005/analyze` for facial attribute analysis, and `http://localhost:5005/represent` for vector representation. You can pass input images as exact image paths on your environment, base64 encoded strings or images on web. [Here](https://github.com/serengil/deepface/tree/master/deepface/api/postman), you can find a postman project to find out how these methods should be called.

+Face recognition, facial attribute analysis and vector representation functions are covered in the API. You are expected to call these functions as HTTP POST requests. The default service endpoints are `http://localhost:5005/verify` for face recognition, `http://localhost:5005/analyze` for facial attribute analysis, and `http://localhost:5005/represent` for vector representation. Images can be sent as file uploads (multipart form data), or as exact image paths, URLs, or base64-encoded strings (in either a JSON body or form data). The image field is `img` for `/represent` and `/analyze`, and `img1`/`img2` for `/verify`. [Here](https://github.com/serengil/deepface/tree/master/deepface/api/postman), you can find a Postman project showing how these methods should be called.

**Dockerized Service** - [`Demo`](https://youtu.be/9Tk9lRQareA)
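A minimal client sketch for the JSON route described above, assuming the service runs on the default `localhost:5005` and using only the Python standard library. The helper names `build_base64_payload` and `post_json` are hypothetical, not part of deepface. It encodes an image as the same `data:image/jpeg;base64,...` data URI that the tests send in the `img` field.

```python
import base64
import json
import urllib.request

# Assumption: the API is running locally on the default port from the README.
API_URL = "http://localhost:5005"


def build_base64_payload(image_bytes: bytes, mime: str = "image/jpeg", **options) -> dict:
    # Encode raw image bytes as a data URI: "data:image/jpeg;base64,<...>"
    encoded = base64.b64encode(image_bytes).decode("utf8")
    payload = {"img": f"data:{mime};base64,{encoded}"}
    # Extra arguments such as model_name="Facenet" or detector_backend="mtcnn"
    payload.update(options)
    return payload


def post_json(endpoint: str, payload: dict) -> dict:
    # POST the payload as application/json and decode the JSON response body
    req = urllib.request.Request(
        f"{API_URL}/{endpoint}",
        data=json.dumps(payload).encode("utf8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf8"))
```

For example, reading `dataset/img1.jpg` as bytes and calling `post_json("represent", build_base64_payload(data, model_name="Facenet"))`. Note that `urlopen` raises `urllib.error.HTTPError` for the API's 400 responses, so a caller may want to catch it and read the error body.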

diff --git a/deepface/api/postman/deepface-api.postman_collection.json b/deepface/api/postman/deepface-api.postman_collection.json

index 0cbb0a388..539993d81 100644

--- a/deepface/api/postman/deepface-api.postman_collection.json

+++ b/deepface/api/postman/deepface-api.postman_collection.json

@@ -1,12 +1,54 @@

{

"info": {

- "_postman_id": "4c0b144e-4294-4bdd-8072-bcb326b1fed2",

+ "_postman_id": "26c5ee53-1f4b-41db-9342-3617c90059d3",

"name": "deepface-api",

"schema": "https://schema.getpostman.com/json/collection/v2.1.0/collection.json"

},

"item": [

{

- "name": "Represent",

+ "name": "Represent - form data",

+ "request": {

+ "method": "POST",

+ "header": [],

+ "body": {

+ "mode": "formdata",

+ "formdata": [

+ {

+ "key": "img",

+ "type": "file",

+ "src": "/Users/sefik/Desktop/deepface/tests/dataset/img1.jpg"

+ },

+ {

+ "key": "model_name",

+ "value": "Facenet",

+ "type": "text"

+ }

+ ],

+ "options": {

+ "raw": {

+ "language": "json"

+ }

+ }

+ },

+ "url": {

+ "raw": "http://127.0.0.1:5005/represent",

+ "protocol": "http",

+ "host": [

+ "127",

+ "0",

+ "0",

+ "1"

+ ],

+ "port": "5005",

+ "path": [

+ "represent"

+ ]

+ }

+ },

+ "response": []

+ },

+ {

+ "name": "Represent - default",

"request": {

"method": "POST",

"header": [],

@@ -20,7 +62,7 @@

}

},

"url": {

- "raw": "http://127.0.0.1:5000/represent",

+ "raw": "http://127.0.0.1:5005/represent",

"protocol": "http",

"host": [

"127",

@@ -28,7 +70,7 @@

"0",

"1"

],

- "port": "5000",

+ "port": "5005",

"path": [

"represent"

]

@@ -37,13 +79,60 @@

"response": []

},

{

- "name": "Face verification",

+ "name": "Face verification - default",

"request": {

"method": "POST",

"header": [],

"body": {

"mode": "raw",

- "raw": " {\n \t\"img1_path\": \"/Users/sefik/Desktop/deepface/tests/dataset/img1.jpg\",\n \"img2_path\": \"/Users/sefik/Desktop/deepface/tests/dataset/img2.jpg\",\n \"model_name\": \"Facenet\",\n \"detector_backend\": \"mtcnn\",\n \"distance_metric\": \"euclidean\"\n }",

+ "raw": " {\n \t\"img1\": \"/Users/sefik/Desktop/deepface/tests/dataset/img1.jpg\",\n \"img2\": \"/Users/sefik/Desktop/deepface/tests/dataset/img2.jpg\",\n \"model_name\": \"Facenet\",\n \"detector_backend\": \"mtcnn\",\n \"distance_metric\": \"euclidean\"\n }",

+ "options": {

+ "raw": {

+ "language": "json"

+ }

+ }

+ },

+ "url": {

+ "raw": "http://127.0.0.1:5005/verify",

+ "protocol": "http",

+ "host": [

+ "127",

+ "0",

+ "0",

+ "1"

+ ],

+ "port": "5005",

+ "path": [

+ "verify"

+ ]

+ }

+ },

+ "response": []

+ },

+ {

+ "name": "Face verification - form data",

+ "request": {

+ "method": "POST",

+ "header": [],

+ "body": {

+ "mode": "formdata",

+ "formdata": [

+ {

+ "key": "img1",

+ "type": "file",

+ "src": "/Users/sefik/Desktop/deepface/tests/dataset/img1.jpg"

+ },

+ {

+ "key": "img2",

+ "type": "file",

+ "src": "/Users/sefik/Desktop/deepface/tests/dataset/img2.jpg"

+ },

+ {

+ "key": "model_name",

+ "value": "Facenet",

+ "type": "text"

+ }

+ ],

"options": {

"raw": {

"language": "json"

@@ -51,7 +140,7 @@

}

},

"url": {

- "raw": "http://127.0.0.1:5000/verify",

+ "raw": "http://127.0.0.1:5005/verify",

"protocol": "http",

"host": [

"127",

@@ -59,7 +148,7 @@

"0",

"1"

],

- "port": "5000",

+ "port": "5005",

"path": [

"verify"

]

@@ -68,13 +157,13 @@

"response": []

},

{

- "name": "Face analysis",

+ "name": "Face analysis - default",

"request": {

"method": "POST",

"header": [],

"body": {

"mode": "raw",

- "raw": "{\n \"img_path\": \"/Users/sefik/Desktop/deepface/tests/dataset/couple.jpg\",\n \"actions\": [\"age\", \"gender\", \"emotion\", \"race\"]\n}",

+ "raw": "{\n \"img\": \"/Users/sefik/Desktop/deepface/tests/dataset/img1.jpg\",\n \"actions\": [\"age\", \"gender\", \"emotion\", \"race\"]\n}",

"options": {

"raw": {

"language": "json"

@@ -82,7 +171,7 @@

}

},

"url": {

- "raw": "http://127.0.0.1:5000/analyze",

+ "raw": "http://127.0.0.1:5005/analyze",

"protocol": "http",

"host": [

"127",

@@ -90,7 +179,46 @@

"0",

"1"

],

- "port": "5000",

+ "port": "5005",

+ "path": [

+ "analyze"

+ ]

+ }

+ },

+ "response": []

+ },

+ {

+ "name": "Face analysis - form data",

+ "request": {

+ "method": "POST",

+ "header": [],

+ "body": {

+ "mode": "formdata",

+ "formdata": [

+ {

+ "key": "img",

+ "type": "file",

+ "src": "/Users/sefik/Desktop/deepface/tests/dataset/img1.jpg"

+ },

+ {

+ "key": "actions",

+ "value": "\"[age, gender]\"",

+ "type": "text"

+ }

+ ],

+ "options": {

+ "raw": {

+ "language": "json"

+ }

+ }

+ },

+ "url": {

+ "raw": "http://localhost:5005/analyze",

+ "protocol": "http",

+ "host": [

+ "localhost"

+ ],

+ "port": "5005",

"path": [

"analyze"

]
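The form-data requests in this collection can also be built without Postman. Below is a rough standard-library sketch of a `multipart/form-data` encoder (the `encode_multipart` name is hypothetical). It uses the same field names as the collection: `img` for `/represent` and `/analyze`, `img1`/`img2` for `/verify`, plus text fields such as `model_name` or `actions`.

```python
import uuid
from typing import Dict, Optional, Tuple


def encode_multipart(
    fields: Dict[str, str],
    files: Dict[str, Tuple[str, bytes]],
    boundary: Optional[str] = None,
) -> Tuple[bytes, str]:
    """Build a multipart/form-data body; returns (body, content_type)."""
    boundary = boundary or uuid.uuid4().hex
    crlf = b"\r\n"
    parts = []
    # Text fields, e.g. model_name="Facenet" or actions='["age", "gender"]'
    for name, value in fields.items():
        header = f'--{boundary}\r\nContent-Disposition: form-data; name="{name}"\r\n\r\n'
        parts.append(header.encode() + value.encode("utf8"))
    # File fields, e.g. img=("img1.jpg", <bytes>); the filename must be non-empty
    for name, (filename, content) in files.items():
        header = (
            f'--{boundary}\r\nContent-Disposition: form-data; name="{name}"; '
            f'filename="{filename}"\r\nContent-Type: application/octet-stream\r\n\r\n'
        )
        parts.append(header.encode() + content)
    # Parts are separated by CRLF; the body ends with the closing boundary line
    body = crlf.join(parts) + crlf + f"--{boundary}--".encode() + crlf
    return body, f"multipart/form-data; boundary={boundary}"
```

The returned body and content type can be passed to `urllib.request.Request(url, data=body, headers={"Content-Type": content_type}, method="POST")`. A non-empty `filename` matters, since `extract_image_from_request` rejects file parts whose filename is empty.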

diff --git a/deepface/api/src/modules/core/routes.py b/deepface/api/src/modules/core/routes.py

index 4830bec21..9cb2e747a 100644

--- a/deepface/api/src/modules/core/routes.py

+++ b/deepface/api/src/modules/core/routes.py

@@ -1,31 +1,86 @@

+# built-in dependencies

+from typing import Union

+

+# 3rd party dependencies

from flask import Blueprint, request

+import numpy as np

+

+# project dependencies

from deepface import DeepFace

from deepface.api.src.modules.core import service

+from deepface.commons import image_utils

from deepface.commons.logger import Logger

logger = Logger()

blueprint = Blueprint("routes", __name__)

+# pylint: disable=no-else-return, broad-except

+

@blueprint.route("/")

def home():

return f"Welcome to DeepFace API v{DeepFace.__version__}!"

+def extract_image_from_request(img_key: str) -> Union[str, np.ndarray]:

+ """

+ Extracts an image from the request either from json or a multipart/form-data file.

+

+ Args:

+ img_key (str): The key used to retrieve the image data

+ from the request (e.g., 'img1').

+

+ Returns:

+ img (str or np.ndarray): The image as passed in the request (base64 encoded string, image path or url)

+ or the decoded image as a numpy array.

+ """

+

+ # Check if the request is multipart/form-data (file input)

+ if request.files:

+ # request.files is instance of werkzeug.datastructures.ImmutableMultiDict

+ # file is instance of werkzeug.datastructures.FileStorage

+ file = request.files.get(img_key)

+

+ if file is None:

+ raise ValueError(f"Request form data doesn't have {img_key}")

+

+ if file.filename == "":

+ raise ValueError(f"No file uploaded for '{img_key}'")

+

+ img = image_utils.load_image_from_file_storage(file)

+

+ return img

+ # Check if the request is coming as base64, file path or url from json or form data

+ elif request.is_json or request.form:

+ input_args = request.get_json(silent=True) or request.form.to_dict()

+

+ if not input_args:

+ raise ValueError("empty input set passed")

+

+ # this can be a base64 encoded image, an image path or a url

+ img = input_args.get(img_key)

+

+ if not img:

+ raise ValueError(f"'{img_key}' not found in either json or form data request")

+

+ return img

+

+ # If neither JSON nor file input is present

+ raise ValueError(f"'{img_key}' not found in request in either json or form data")

+

+

@blueprint.route("/represent", methods=["POST"])

def represent():

- input_args = request.get_json()

+ input_args = request.get_json(silent=True) or request.form.to_dict()

- if input_args is None:

- return {"message": "empty input set passed"}

-

- img_path = input_args.get("img") or input_args.get("img_path")

- if img_path is None:

- return {"message": "you must pass img_path input"}

+ try:

+ img = extract_image_from_request("img")

+ except Exception as err:

+ return {"exception": str(err)}, 400

obj = service.represent(

- img_path=img_path,

+ img_path=img,

model_name=input_args.get("model_name", "VGG-Face"),

detector_backend=input_args.get("detector_backend", "opencv"),

enforce_detection=input_args.get("enforce_detection", True),

@@ -41,23 +96,21 @@ def represent():

@blueprint.route("/verify", methods=["POST"])

def verify():

- input_args = request.get_json()

-

- if input_args is None:

- return {"message": "empty input set passed"}

-

- img1_path = input_args.get("img1") or input_args.get("img1_path")

- img2_path = input_args.get("img2") or input_args.get("img2_path")

+ input_args = request.get_json(silent=True) or request.form.to_dict()

- if img1_path is None:

- return {"message": "you must pass img1_path input"}

+ try:

+ img1 = extract_image_from_request("img1")

+ except Exception as err:

+ return {"exception": str(err)}, 400

- if img2_path is None:

- return {"message": "you must pass img2_path input"}

+ try:

+ img2 = extract_image_from_request("img2")

+ except Exception as err:

+ return {"exception": str(err)}, 400

verification = service.verify(

- img1_path=img1_path,

- img2_path=img2_path,

+ img1_path=img1,

+ img2_path=img2,

model_name=input_args.get("model_name", "VGG-Face"),

detector_backend=input_args.get("detector_backend", "opencv"),

distance_metric=input_args.get("distance_metric", "cosine"),

@@ -73,18 +126,31 @@ def verify():

@blueprint.route("/analyze", methods=["POST"])

def analyze():

- input_args = request.get_json()

-

- if input_args is None:

- return {"message": "empty input set passed"}

-

- img_path = input_args.get("img") or input_args.get("img_path")

- if img_path is None:

- return {"message": "you must pass img_path input"}

+ input_args = request.get_json(silent=True) or request.form.to_dict()

+

+ try:

+ img = extract_image_from_request("img")

+ except Exception as err:

+ return {"exception": str(err)}, 400

+

+ actions = input_args.get("actions", ["age", "gender", "emotion", "race"])

+ # actions is the only argument expected to be a list or tuple

+ # form data delivers it as a plain string, so parse it into a list here

+ if isinstance(actions, str):

+ actions = (

+ actions.replace("[", "")

+ .replace("]", "")

+ .replace("(", "")

+ .replace(")", "")

+ .replace('"', "")

+ .replace("'", "")

+ .replace(" ", "")

+ .split(",")

+ )

demographies = service.analyze(

- img_path=img_path,

- actions=input_args.get("actions", ["age", "gender", "emotion", "race"]),

+ img_path=img,

+ actions=actions,

detector_backend=input_args.get("detector_backend", "opencv"),

enforce_detection=input_args.get("enforce_detection", True),

align=input_args.get("align", True),
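The string handling for `actions` above can be pulled out and checked in isolation. This is a sketch of an equivalent helper (the `parse_actions` name is hypothetical), with one deliberate difference from the route: empty entries are dropped, so `"age,"` becomes `["age"]` rather than `["age", ""]`.

```python
from typing import List, Sequence, Union


def parse_actions(actions: Union[str, Sequence[str]]) -> List[str]:
    # JSON bodies already deliver a list or tuple; pass it through as a list
    if not isinstance(actions, str):
        return list(actions)
    # Form data delivers text such as '["age", "gender"]' or '"[age, gender]"':
    # strip brackets, parentheses, quotes and spaces, as the route does
    for ch in "[]()\"' ":
        actions = actions.replace(ch, "")
    # Split on commas and drop empty entries (differs from the route)
    return [action for action in actions.split(",") if action]
```

A stricter alternative would be `json.loads` with a fallback, but the character-stripping approach also accepts unquoted input such as the Postman value `"[age, gender]"`.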

diff --git a/deepface/api/src/modules/core/service.py b/deepface/api/src/modules/core/service.py

index 299430055..45fc8c452 100644

--- a/deepface/api/src/modules/core/service.py

+++ b/deepface/api/src/modules/core/service.py

@@ -1,15 +1,22 @@

# built-in dependencies

import traceback

-from typing import Optional

+from typing import Optional, Union

+

+# 3rd party dependencies

+import numpy as np

# project dependencies

from deepface import DeepFace

+from deepface.commons.logger import Logger

+

+logger = Logger()

+

# pylint: disable=broad-except

def represent(

- img_path: str,

+ img_path: Union[str, np.ndarray],

model_name: str,

detector_backend: str,

enforce_detection: bool,

@@ -32,12 +39,14 @@ def represent(

return result

except Exception as err:

tb_str = traceback.format_exc()

+ logger.error(str(err))

+ logger.error(tb_str)

return {"error": f"Exception while representing: {str(err)} - {tb_str}"}, 400

def verify(

- img1_path: str,

- img2_path: str,

+ img1_path: Union[str, np.ndarray],

+ img2_path: Union[str, np.ndarray],

model_name: str,

detector_backend: str,

distance_metric: str,

@@ -59,11 +68,13 @@ def verify(

return obj

except Exception as err:

tb_str = traceback.format_exc()

+ logger.error(str(err))

+ logger.error(tb_str)

return {"error": f"Exception while verifying: {str(err)} - {tb_str}"}, 400

def analyze(

- img_path: str,

+ img_path: Union[str, np.ndarray],

actions: list,

detector_backend: str,

enforce_detection: bool,

@@ -85,4 +96,6 @@ def analyze(

return result

except Exception as err:

tb_str = traceback.format_exc()

+ logger.error(str(err))

+ logger.error(tb_str)

return {"error": f"Exception while analyzing: {str(err)} - {tb_str}"}, 400
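The three service functions repeat the same try/except block: call DeepFace, and on failure log the message and traceback, then return an error body with status 400. One possible way to factor that out is sketched below. `run_service` is a hypothetical helper, and it uses the standard `logging` module as a stand-in for deepface's own `Logger`.

```python
import logging
import traceback
from typing import Any, Callable, Tuple, Union

# Stand-in for deepface.commons.logger.Logger (assumption for this sketch)
logger = logging.getLogger("deepface-api")


def run_service(
    label: str, fn: Callable[..., Any], *args: Any, **kwargs: Any
) -> Union[Any, Tuple[dict, int]]:
    """Call fn; on failure log the error and traceback and return (body, 400)."""
    try:
        return fn(*args, **kwargs)
    except Exception as err:  # pylint: disable=broad-except
        tb_str = traceback.format_exc()
        logger.error(str(err))
        logger.error(tb_str)
        return {"error": f"Exception while {label}: {str(err)} - {tb_str}"}, 400
```

Each service function could then pass a small inner function that builds its result dict, which keeps the error format identical across `/represent`, `/verify` and `/analyze`.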

diff --git a/deepface/commons/image_utils.py b/deepface/commons/image_utils.py

index c2ae1ed67..b72ce0b43 100644

--- a/deepface/commons/image_utils.py

+++ b/deepface/commons/image_utils.py

@@ -11,6 +11,7 @@

import numpy as np

import cv2

from PIL import Image

+from werkzeug.datastructures import FileStorage

def list_images(path: str) -> List[str]:

@@ -133,6 +134,21 @@ def load_image_from_base64(uri: str) -> np.ndarray:

return img_bgr

+def load_image_from_file_storage(file: FileStorage) -> np.ndarray:

+ """

+ Loads an image from a FileStorage object and decodes it into an OpenCV image.

+ Args:

+ file (FileStorage): The FileStorage object containing the image file.

+ Returns:

+ img (np.ndarray): The decoded image as a numpy array (OpenCV format).

+ """

+ file_bytes = np.frombuffer(file.read(), np.uint8)

+ image = cv2.imdecode(file_bytes, cv2.IMREAD_COLOR)

+ if image is None:

+ raise ValueError("Failed to decode image")

+ return image

+

+

def load_image_from_web(url: str) -> np.ndarray:

"""

Loading an image from web

diff --git a/requirements_local b/requirements_local

index 22bbe11d1..1705b13a2 100644

--- a/requirements_local

+++ b/requirements_local

@@ -3,4 +3,4 @@ pandas==2.0.3

Pillow==9.0.0

opencv-python==4.9.0.80

tensorflow==2.13.1

-keras==2.13.1

+keras==2.13.1

\ No newline at end of file

diff --git a/tests/test_api.py b/tests/test_api.py

index ef2db73d2..0506143f8 100644

--- a/tests/test_api.py

+++ b/tests/test_api.py

@@ -1,16 +1,29 @@

# built-in dependencies

+import os

import base64

import unittest

+# 3rd party dependencies

+import gdown

+

# project dependencies

from deepface.api.src.app import create_app

from deepface.commons.logger import Logger

logger = Logger()

+IMG1_SOURCE = (

+ "https://raw.githubusercontent.com/serengil/deepface/refs/heads/master/tests/dataset/img1.jpg"

+)

+IMG2_SOURCE = (

+ "https://raw.githubusercontent.com/serengil/deepface/refs/heads/master/tests/dataset/img2.jpg"

+)

+

class TestVerifyEndpoint(unittest.TestCase):

def setUp(self):

+ download_test_images(IMG1_SOURCE)

+ download_test_images(IMG2_SOURCE)

app = create_app()

app.config["DEBUG"] = True

app.config["TESTING"] = True

@@ -18,8 +31,8 @@ def setUp(self):

def test_tp_verify(self):

data = {

- "img1_path": "dataset/img1.jpg",

- "img2_path": "dataset/img2.jpg",

+ "img1": "dataset/img1.jpg",

+ "img2": "dataset/img2.jpg",

}

response = self.app.post("/verify", json=data)

assert response.status_code == 200

@@ -40,8 +53,8 @@ def test_tp_verify(self):

def test_tn_verify(self):

data = {

- "img1_path": "dataset/img1.jpg",

- "img2_path": "dataset/img2.jpg",

+ "img1": "dataset/img1.jpg",

+ "img2": "dataset/img2.jpg",

}

response = self.app.post("/verify", json=data)

assert response.status_code == 200

@@ -83,14 +96,11 @@ def test_represent(self):

def test_represent_encoded(self):

image_path = "dataset/img1.jpg"

with open(image_path, "rb") as image_file:

- encoded_string = "data:image/jpeg;base64," + \

- base64.b64encode(image_file.read()).decode("utf8")

+ encoded_string = "data:image/jpeg;base64," + base64.b64encode(image_file.read()).decode(

+ "utf8"

+ )

- data = {

- "model_name": "Facenet",

- "detector_backend": "mtcnn",

- "img": encoded_string

- }

+ data = {"model_name": "Facenet", "detector_backend": "mtcnn", "img": encoded_string}

response = self.app.post("/represent", json=data)

assert response.status_code == 200

@@ -112,7 +122,7 @@ def test_represent_url(self):

data = {

"model_name": "Facenet",

"detector_backend": "mtcnn",

- "img": "https://github.com/serengil/deepface/blob/master/tests/dataset/couple.jpg?raw=true"

+ "img": "https://github.com/serengil/deepface/blob/master/tests/dataset/couple.jpg?raw=true",

}

response = self.app.post("/represent", json=data)

@@ -155,8 +165,9 @@ def test_analyze(self):

def test_analyze_inputformats(self):

image_path = "dataset/couple.jpg"

with open(image_path, "rb") as image_file:

- encoded_image = "data:image/jpeg;base64," + \

- base64.b64encode(image_file.read()).decode("utf8")

+ encoded_image = "data:image/jpeg;base64," + base64.b64encode(image_file.read()).decode(

+ "utf8"

+ )

image_sources = [

# image path

@@ -164,7 +175,7 @@ def test_analyze_inputformats(self):

# image url

f"https://github.com/serengil/deepface/blob/master/tests/{image_path}?raw=true",

# encoded image

- encoded_image

+ encoded_image,

]

results = []

@@ -189,25 +200,38 @@ def test_analyze_inputformats(self):

assert i.get("dominant_emotion") is not None

assert i.get("dominant_race") is not None

- assert len(results[0]["results"]) == len(results[1]["results"])\

- and len(results[0]["results"]) == len(results[2]["results"])

-

- for i in range(len(results[0]['results'])):

- assert results[0]["results"][i]["dominant_emotion"] == results[1]["results"][i]["dominant_emotion"]\

- and results[0]["results"][i]["dominant_emotion"] == results[2]["results"][i]["dominant_emotion"]

-

- assert results[0]["results"][i]["dominant_gender"] == results[1]["results"][i]["dominant_gender"]\

- and results[0]["results"][i]["dominant_gender"] == results[2]["results"][i]["dominant_gender"]

-

- assert results[0]["results"][i]["dominant_race"] == results[1]["results"][i]["dominant_race"]\

- and results[0]["results"][i]["dominant_race"] == results[2]["results"][i]["dominant_race"]

+ assert len(results[0]["results"]) == len(results[1]["results"]) and len(

+ results[0]["results"]

+ ) == len(results[2]["results"])

+

+ for i in range(len(results[0]["results"])):

+ assert (

+ results[0]["results"][i]["dominant_emotion"]

+ == results[1]["results"][i]["dominant_emotion"]

+ and results[0]["results"][i]["dominant_emotion"]

+ == results[2]["results"][i]["dominant_emotion"]

+ )

+

+ assert (

+ results[0]["results"][i]["dominant_gender"]

+ == results[1]["results"][i]["dominant_gender"]

+ and results[0]["results"][i]["dominant_gender"]

+ == results[2]["results"][i]["dominant_gender"]

+ )

+

+ assert (

+ results[0]["results"][i]["dominant_race"]

+ == results[1]["results"][i]["dominant_race"]

+ and results[0]["results"][i]["dominant_race"]

+ == results[2]["results"][i]["dominant_race"]

+ )

logger.info("✅ different inputs test is done")

def test_invalid_verify(self):

data = {

- "img1_path": "dataset/invalid_1.jpg",

- "img2_path": "dataset/invalid_2.jpg",

+ "img1": "dataset/invalid_1.jpg",

+ "img2": "dataset/invalid_2.jpg",

}

response = self.app.post("/verify", json=data)

assert response.status_code == 400

@@ -227,3 +251,87 @@ def test_invalid_analyze(self):

}

response = self.app.post("/analyze", json=data)

assert response.status_code == 400

+

+ def test_analyze_for_multipart_form_data(self):

+ with open("/tmp/img1.jpg", "rb") as img_file:

+ response = self.app.post(

+ "/analyze",

+ content_type="multipart/form-data",

+ data={

+ "img": (img_file, "test_image.jpg"),

+ "actions": '["age", "gender"]',

+ "detector_backend": "mtcnn",

+ },

+ )

+ assert response.status_code == 200

+ result = response.json

+ assert isinstance(result, dict)

+ assert result["results"][0].get("age") is not None

+ assert result["results"][0].get("dominant_gender") is not None

+ logger.info("✅ analyze api for multipart form data test is done")

+

+ def test_verify_for_multipart_form_data(self):

+ with open("/tmp/img1.jpg", "rb") as img1_file:

+ with open("/tmp/img2.jpg", "rb") as img2_file:

+ response = self.app.post(

+ "/verify",

+ content_type="multipart/form-data",

+ data={

+ "img1": (img1_file, "first_image.jpg"),

+ "img2": (img2_file, "second_image.jpg"),

+ "model_name": "Facenet",

+ "detector_backend": "mtcnn",

+ "distance_metric": "euclidean",

+ },

+ )

+ assert response.status_code == 200

+ result = response.json

+ assert isinstance(result, dict)

+ assert result.get("verified") is not None

+ assert result.get("model") == "Facenet"

+ assert result.get("similarity_metric") is not None

+ assert result.get("detector_backend") == "mtcnn"

+ assert result.get("threshold") is not None

+ assert result.get("facial_areas") is not None

+

+ logger.info("✅ verify api for multipart form data test is done")

+

+ def test_represent_for_multipart_form_data(self):

+ with open("/tmp/img1.jpg", "rb") as img_file:

+ response = self.app.post(

+ "/represent",

+ content_type="multipart/form-data",

+ data={

+ "img": (img_file, "first_image.jpg"),

+ "model_name": "Facenet",

+ "detector_backend": "mtcnn",

+ },

+ )

+ assert response.status_code == 200

+ result = response.json

+ assert isinstance(result, dict)

+ logger.info("✅ represent api for multipart form data test is done")

+

+ def test_represent_for_multipart_form_data_and_filepath(self):

+ response = self.app.post(

+ "/represent",

+ content_type="multipart/form-data",

+ data={

+ "img": "/tmp/img1.jpg",

+ "model_name": "Facenet",

+ "detector_backend": "mtcnn",

+ },

+ )

+ assert response.status_code == 200

+ result = response.json

+ assert isinstance(result, dict)

+ logger.info("✅ represent api for multipart form data and file path test is done")

+

+

+def download_test_images(url: str):

+ file_name = url.split("/")[-1]

+ target_file = f"/tmp/{file_name}"

+ if os.path.exists(target_file):

+ return

+

+ gdown.download(url, target_file, quiet=False)
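`download_test_images` writes to a hard-coded `/tmp`, which does not exist on Windows. Below is a portable sketch of the same cache-then-download idea, using `tempfile.gettempdir()` and the standard-library `urllib.request.urlretrieve` instead of `gdown`. The `download_test_image` name and the injectable `fetch` parameter are assumptions for illustration.

```python
import os
import tempfile
import urllib.request
from typing import Callable, Optional


def download_test_image(
    url: str,
    target_dir: Optional[str] = None,
    fetch: Callable[[str, str], object] = urllib.request.urlretrieve,
) -> str:
    # Use the platform temp directory instead of a hard-coded /tmp
    target_dir = target_dir or tempfile.gettempdir()
    target_file = os.path.join(target_dir, url.split("/")[-1])
    # Skip the network round trip if an earlier run already cached the file
    if not os.path.exists(target_file):
        fetch(url, target_file)
    return target_file
```

Returning the cached path would let the multipart tests open the file it reports instead of repeating `/tmp/img1.jpg`.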
