diff --git a/.buildkite/pipeline.yml b/.buildkite/pipeline.yml

index b4eb094389..ff9bd2a6c4 100644

--- a/.buildkite/pipeline.yml

+++ b/.buildkite/pipeline.yml

@@ -2,110 +2,57 @@ env:

RETENTION_DAYS: "10"

steps:

- - label: ":white_check_mark: Check Shell"

- key: "check-shell"

- command: ./ops/check.sh shell

-

- - label: ":lock: Check Security"

- key: "check-security"

- command: ./ops/check.sh security

-

- - label: ":lock: Check CSS px"

- key: "check-px"

- command: ./ops/check.sh px

-

- - label: ":lock: Deny CSS hex"

- key: "deny-css-hex-check"

- command: ./ops/check.sh hex

-

- - label: ":lock: Deny CSS rgba"

- key: "deny-css-rgba-check"

- command: ./ops/check.sh rgba

-

- - label: ":lock: Check .* in backend"

- key: "check-dot-star"

- command: ./ops/check.sh dot-star

-

- - label: ":white_check_mark: Check Backend"

- if: build.branch == "main" && build.message =~ /(?i)\[backend\]/

- key: "check-backend"

- command: ./ops/check.sh backend

-

- - label: ":white_check_mark: Check Frontend"

- if: build.branch == "main" && build.message =~ /(?i)\[frontend\]/

- key: "check-frontend"

- command: ./ops/check.sh frontend

-

- - label: ":mag: Check Frontend License"

- key: "check-frontend-license"

- commands: ./ops/check.sh frontend-license

-

- - label: ":mag: Check Backend License"

- key: "check-backend-license"

- commands: ./ops/check.sh backend-license

- plugins:

- - artifacts#v1.9.0:

- upload:

- - "backend/build/reports/dependency-license/**/*"

- name: "backend-license-report"

- expire_in: "${RETENTION_DAYS} days"

-

- label: ":cloudformation: Deploy infra"

if: build.branch == "main" && build.message =~ /(?i)\[infra\]/

key: "deploy-infra"

- depends_on:

- - "check-shell"

- - "check-security"

- - "check-frontend"

- - "check-px"

- - deny-css-rgba-check

- - deny-css-hex-check

- - "check-backend"

- - "check-frontend-license"

- - "check-backend-license"

env:

AWSHost: "$AWS_HOST"

AWSAccountId: "$AWS_ACCOUNT_ID"

AWSRegion: "$AWS_REGION"

command: ./ops/deploy.sh infra

+ - label: ":white_check_mark: GitHub Basic Check"

+ if: build.branch == "main"

+ key: "check-github-basic"

+ command: ./ops/check.sh github-basic-passed

+ env:

+ COMMIT_SHA: "$BUILDKITE_COMMIT"

+ GITHUB_TOKEN: "$E2E_TOKEN_GITHUB"

+ BRANCH: "$BUILDKITE_BRANCH"

+ depends_on:

+ - "deploy-infra"

+

- label: ":react: Build Frontend"

if: build.branch == "main" && build.message =~ /(?i)\[frontend\]/

key: "build-frontend"

- depends_on: "deploy-infra"

+ depends_on:

+ - "check-github-basic"

command: ./ops/build.sh frontend

- label: ":java: Build Backend"

if: build.branch == "main" && build.message =~ /(?i)\[backend\]/

key: "build-backend"

- depends_on: "deploy-infra"

+ depends_on:

+ - "check-github-basic"

command: ./ops/build.sh backend

- label: ":rocket: Deploy e2e"

- if: build.branch == "main" && (build.message =~ /(?i)\[frontend\]/ || build.message =~ /(?i)\[backend\]/)

+ if: build.branch == "main"

key: "deploy-e2e"

depends_on:

- "build-frontend"

- "build-backend"

+ - "check-github-basic"

command: ./ops/deploy.sh e2e

- label: ":rocket: Run e2e"

- branches: main

+ if: build.branch == "main"

key: "check-e2e"

depends_on:

- "deploy-e2e"

- - "check-shell"

- - "check-security"

- - "check-frontend"

- - "check-px"

- - deny-css-rgba-check

- - deny-css-hex-check

- - "check-backend"

- - "check-frontend-license"

- - "check-backend-license"

command: ./ops/check.sh e2e-container

plugins:

- - artifacts#v1.9.0:

+ - artifacts#v1.9.3:

upload: "./e2e-reports.tar.gz"

expire_in: "${RETENTION_DAYS} days"

diff --git a/.github/ISSUE_TEMPLATE/feature_request.yml b/.github/ISSUE_TEMPLATE/feature_request.yml

index 46b1c6c1a6..969401ce55 100644

--- a/.github/ISSUE_TEMPLATE/feature_request.yml

+++ b/.github/ISSUE_TEMPLATE/feature_request.yml

@@ -6,6 +6,8 @@ body:

- type: markdown

attributes:

value: |

+ ## Request Detail

+

The issue list is reserved exclusively for bug reports and feature requests.

For usage questions, please use the following resources:

@@ -54,8 +56,51 @@ body:

description: What tools will support your request feature?

multiple: true

options:

- - Board

- - Pipeline Tool

- - Source Control

+ - Board (like Jira)

+ - Pipeline Tool (like Buildkite)

+ - Source Control (like GitHub)

+ validations:

+ required: true

+

+ - type: markdown

+ attributes:

+ value: |

+ ## Account Detail

+

+ Tell us more about you and your account. We will evaluate all received requests against each other to adjust the priority.

+

+ **The information below is important for prioritization.**

+

+ - type: input

+ id: account_info

+ attributes:

+ label: Account name

+ description: What's your account name?

+ placeholder: Make sure it can be found in jigsaw

+ validations:

+ required: true

+

+ - type: input

+ id: account_location

+ attributes:

+ label: Account location

+ description: Which country is your account located in?

+ validations:

+ required: true

+

+ - type: input

+ id: account_size

+ attributes:

+ label: Teams in Account

+ description: How many teams will adopt heartbeat after feature release?

+ validations:

+ required: true

+

+ - type: input

+ id: expected_date

+ attributes:

+ label: Expected launch date

+ description: What is the latest possible launch date you can accept?

+ placeholder: 2024-12

validations:

- required: true

\ No newline at end of file

+ required: false

diff --git a/.github/workflows/Docs.yaml b/.github/workflows/Docs.yaml

index 9507aebc86..f691316b16 100644

--- a/.github/workflows/Docs.yaml

+++ b/.github/workflows/Docs.yaml

@@ -28,7 +28,7 @@ jobs:

- name: Build docs

run: pnpm run build

- name: Deploy to github pages

- uses: peaceiris/actions-gh-pages@v3

+ uses: peaceiris/actions-gh-pages@v4

with:

github_token: ${{ secrets.GITHUB_TOKEN }}

publish_dir: ./docs/dist

diff --git a/.github/workflows/Release.yaml b/.github/workflows/Release.yaml

index 7314fcb29d..4319387ab5 100644

--- a/.github/workflows/Release.yaml

+++ b/.github/workflows/Release.yaml

@@ -24,7 +24,7 @@ jobs:

- name: Validate Gradle wrapper

uses: gradle/wrapper-validation-action@v2

- name: Set up Gradle

- uses: gradle/gradle-build-action@v3.1.0

+ uses: gradle/gradle-build-action@v3.2.1

- name: Build

run: ./gradlew clean build

- uses: actions/upload-artifact@v4

@@ -91,10 +91,23 @@ jobs:

tags: |

ghcr.io/${{ env.LOWCASE_REPO_NAME }}_backend:${{ env.TAG_NAME }}

ghcr.io/${{ env.LOWCASE_REPO_NAME }}_backend:latest

- release:

+

+ build-sbom:

runs-on: ubuntu-latest

needs:

- build_and_push_image

+ steps:

+ - uses: actions/checkout@v4

+ - uses: anchore/sbom-action@v0

+ with:

+ path: ./

+ artifact-name: ${{ env.REPO_NAME }}.${{ env.TAG_NAME }}.sbom.spdx.json

+ - uses: anchore/sbom-action/publish-sbom@v0

+

+ release:

+ runs-on: ubuntu-latest

+ needs:

+ - build-sbom

steps:

- uses: actions/checkout@v4

- name: Download frontend artifact

@@ -119,7 +132,7 @@ jobs:

ls

echo "TAG_NAME=$(git tag --sort version:refname | tail -n 1)" >> "$GITHUB_ENV"

- name: Upload zip file

- uses: softprops/action-gh-release@v1

+ uses: softprops/action-gh-release@v2

with:

files: ${{ env.REPO_NAME }}-${{ env.TAG_NAME }}.zip

diff --git a/.github/workflows/build-and-deploy.yml b/.github/workflows/build-and-deploy.yml

index 8b30ba63a4..9fd7814e0d 100644

--- a/.github/workflows/build-and-deploy.yml

+++ b/.github/workflows/build-and-deploy.yml

@@ -91,7 +91,7 @@ jobs:

- name: Validate Gradle wrapper

uses: gradle/wrapper-validation-action@v2

- name: Set up Gradle

- uses: gradle/gradle-build-action@v3.1.0

+ uses: gradle/gradle-build-action@v3.2.1

- name: Test and check

run: ./gradlew clean check

- name: Build

@@ -120,7 +120,7 @@ jobs:

- name: Validate Gradle wrapper

uses: gradle/wrapper-validation-action@v2

- name: Set up Gradle

- uses: gradle/gradle-build-action@v3.1.0

+ uses: gradle/gradle-build-action@v3.2.1

- name: License check

run: ./gradlew clean checkLicense

- uses: actions/upload-artifact@v4

@@ -228,21 +228,23 @@ jobs:

run: |

./ops/check.sh frontend-license

- # check-buildkite-status:

- # if: ${{ github.event_name == 'pull_request' }}

- # runs-on: ubuntu-latest

- # steps:

- # - name: Checkout code

- # uses: actions/checkout@v4

- #

- # - name: Check BuildKite status

- # run: |

- # buildkite_status=$(curl -H "Authorization: Bearer ${{ secrets.BUILDKITE_TOKEN }}" "https://api.buildkite.com/v2/organizations/thoughtworks-Heartbeat/pipelines/heartbeat/builds?branch=main"| jq -r '.[0].state')

- #

- # if [ "$buildkite_status" != "passed" ]; then

- # echo "BuildKite build failed. Cannot merge the PR."

- # exit 1

- # fi

+ check-buildkite-status:

+ runs-on: ubuntu-latest

+ steps:

+ - name: Checkout code

+ uses: actions/checkout@v4

+ - name: Check BuildKite status

+ env:

+ BUILDKITE_TOKEN: ${{ secrets.BUILDKITE_TOKEN }}

+ GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

+ GITHUB_REPOSITORY: ${{ github.repository }}

+ CURRENT_ACTOR: ${{ github.actor }}

+ EVENT_NAME: ${{ github.event_name }}

+ CURRENT_BRANCH_NAME: ${{ github.ref }}

+ PULL_REQUEST_TITLE: ${{ github.event.pull_request.title }}

+ run: |

+ ./ops/check.sh buildkite-status

+

images-check:

runs-on: ubuntu-latest

steps:

@@ -289,6 +291,7 @@ jobs:

- credential-check

- frontend-license-check

- backend-license-check

+ - check-buildkite-status

runs-on: ubuntu-latest

permissions:

id-token: write

@@ -459,13 +462,22 @@ jobs:

npm install -g pnpm

- name: Set env

run: echo "HOME=/root" >> $GITHUB_ENV

+ - name: Install shell deps

+ run: |

+ apt-get update && apt-get install -y jq

+ jq --version

+ - name: Check e2e deployment

+ env:

+ BUILDKITE_TOKEN: ${{ secrets.BUILDKITE_TOKEN }}

+ COMMIT_SHA: ${{ github.sha }}

+ run: ./ops/check.sh buildkite-e2e-deployed

- name: Run E2E

env:

APP_ORIGIN: ${{ vars.APP_HTTP_SCHEDULE }}://${{ secrets.AWS_EC2_IP_E2E }}:${{ secrets.AWS_EC2_IP_E2E_FRONTEND_PORT }}

E2E_TOKEN_JIRA: ${{ secrets.E2E_TOKEN_JIRA }}

E2E_TOKEN_BUILD_KITE: ${{ secrets.E2E_TOKEN_BUILD_KITE }}

E2E_TOKEN_GITHUB: ${{ secrets.E2E_TOKEN_GITHUB }}

- E2E_TOKEN_FLAG_AS_BLOCK_JIRA: ${{ secrets.E2E_TOKEN_FLAG_AS_BLOCK_JIRA }}

+ E2E_TOKEN_PIPELINE_NO_ORG_CONFIG_BUILDKITE: ${{ secrets.E2E_TOKEN_PIPELINE_NO_ORG_CONFIG_BUILDKITE }}

shell: bash {0}

run: ./ops/check.sh e2e

- uses: actions/upload-artifact@v4

@@ -474,6 +486,14 @@ jobs:

name: playwright-report

path: frontend/e2e/reports/

retention-days: 30

+ - name: Slack Notification

+ uses: rtCamp/action-slack-notify@v2

+ if: always()

+ env:

+ SLACK_WEBHOOK: ${{ secrets.SLACK_WEBHOOK }}

+ SLACK_ICON_EMOJI: ":heart-beat:"

+ SLACK_COLOR: ${{ job.status }}

+ SLACK_USERNAME: "Heartbeat E2E Status"

deploy:

runs-on: ubuntu-latest

diff --git a/.gitignore b/.gitignore

index 09186b0ace..d0e1e07fa9 100644

--- a/.gitignore

+++ b/.gitignore

@@ -7,6 +7,7 @@

/out-tsc

/logs

/app

+/stubs/logs/*

frontend/cypress/

# Only exists if Bazel was run

/bazel-out

@@ -51,3 +52,4 @@ volume

csv

gitleaks-report.json

+*.sbom.spdx.json

diff --git a/.gitleaksignore b/.gitleaksignore

index 27eafd5816..7307666c36 100644

--- a/.gitleaksignore

+++ b/.gitleaksignore

@@ -19,3 +19,4 @@ e001f3e4dc70deb4638d106d2ebfab520b9a2745:docs/src/components/Header/DocSearch.ts

6cff3275f5fcff29462e33b0508359b5d619ffec:docs/src/components/Header/DocSearch.tsx:generic-api-key:54

9102192bbe6790a348e5558cefbb051caa092411:_astro/DocSearch.d9740404.js:generic-api-key:13

a3fe6c206ca324e9e5e9a0e1422fd8c72845d855:_astro/DocSearch.d5fd0ff0.js:generic-api-key:13

+cb693e0c6117cb8f383b72e4bb1c8f2635b7b041:_astro/DocSearch.E1RdsI6d.js:generic-api-key:13

diff --git a/.trivyignore b/.trivyignore

index 048fbfd363..b693001686 100644

--- a/.trivyignore

+++ b/.trivyignore

@@ -12,3 +12,5 @@ CVE-2023-49468

CVE-2024-0553

CVE-2024-0567

CVE-2024-22201

+CVE-2024-22259

+CVE-2024-28085

diff --git a/README.md b/README.md

index 2106ab99b9..4dc2aa139a 100644

--- a/README.md

+++ b/README.md

@@ -1,45 +1,57 @@

-# Heartbeat Project(2023/07)

+# Heartbeat Project

[](https://buildkite.com/heartbeat-backup/heartbeat)[](https://www.codacy.com/gh/au-heartbeat/HeartBeat/dashboard?utm_source=github.com&utm_medium=referral&utm_content=au-heartbeat/HeartBeat&utm_campaign=Badge_Grade)[](https://www.codacy.com/gh/au-heartbeat/HeartBeat/dashboard?utm_source=github.com&utm_medium=referral&utm_content=au-heartbeat/HeartBeat&utm_campaign=Badge_Coverage)

-[](https://github.com/au-heartbeat/HeartBeat/actions/workflows/Docs.yaml) [](https://github.com/au-heartbeat/HeartBeat/actions/workflows/frontend.yml) [](https://github.com/au-heartbeat/HeartBeat/actions/workflows/backend.yml) [](https://github.com/au-heartbeat/HeartBeat/actions/workflows/Security.yml) [](https://github.com/au-heartbeat/Heartbeat/actions/workflows/build-and-deploy.yml)

+[](https://sonarcloud.io/summary/new_code?id=au-heartbeat-heartbeat-frontend)

+[](https://sonarcloud.io/summary/new_code?id=au-heartbeat-heartbeat-frontend)

+[](https://sonarcloud.io/summary/new_code?id=au-heartbeat-heartbeat-backend)

+

+[](https://github.com/au-heartbeat/HeartBeat/actions/workflows/Docs.yaml) [](https://github.com/au-heartbeat/Heartbeat/actions/workflows/build-and-deploy.yml)

[](https://opensource.org/licenses/MIT)

[](https://app.fossa.com/projects/custom%2B23211%2Fgithub.com%2Fau-heartbeat%2FHeartbeat?ref=badge_large)

-- [Heartbeat Project(2023/07)](#heartbeat-project202307)

+- [Heartbeat Project](#heartbeat-project)

- [News](#news)

- [1 About Heartbeat](#1-about-heartbeat)

- [2 Support tools](#2-support-tools)

- [3 Product Features](#3-product-features)

-

- [3.1 Config project info](#31-config-project-info)

- [3.1.1 Config Board/Pipeline/Source data](#311-config-boardpipelinesource-data)

- [3.1.2 Config search data](#312-config-search-data)

+ - [3.1.2.1 Date picker validation rules](#3121-date-picker-validation-rules)

- [3.1.3 Config project account](#313-config-project-account)

+ - [3.1.3.1 Guideline for generating Jira token](#3131-guideline-for-generating-jira-token)

+ - [3.1.3.2 Guideline for generating Buildkite token](#3132-guideline-for-generating-buildkite-token)

+ - [3.1.3.3 Guideline for generating GitHub token](#3133-guideline-for-generating-github-token)

+ - [3.1.3.4 Authorize GitHub token with correct organization](#3134-authorize-github-token-with-correct-organization)

- [3.2 Config Metrics data](#32-config-metrics-data)

- - [3.2.1 Config Crews/Cycle Time](#321-config-crewscycle-time)

+ - [3.2.1 Config Crews/Board Mappings](#321-config-crewsboard-mappings)

- [3.2.2 Setting Classification](#322-setting-classification)

- - [3.2.3 Setting advanced settings](#323-setting-advanced-setting)

- - [3.2.4 Pipeline configuration](#324-pipeline-configuration)

+ - [3.2.3 Rework times Setting](#323-rework-times-setting)

+ - [3.2.4 Setting advanced Setting](#324-setting-advanced-setting)

+ - [3.2.5 Pipeline configuration](#325-pipeline-configuration)

- [3.3 Export and import config info](#33-export-and-import-config-info)

- [3.3.1 Export Config Json File](#331-export-config-json-file)

- [3.3.2 Import Config Json File](#332-import-config-json-file)

- - [3.4 Generate Metrics Data](#34-generate-metrics-data)

+ - [3.4 Generate Metrics report](#34-generate-metrics-report)

- [3.4.1 Velocity](#341-velocity)

- [3.4.2 Cycle Time](#342-cycle-time)

- [3.4.3 Classification](#343-classification)

- - [3.4.4 Deployment Frequency](#344-deployment-frequency)

- - [3.4.5 Lead time for changes Data](#345-lead-time-for-changes-data)

- - [3.4.6 Change Failure Rate](#346-change-failure-rate)

- - [3.4.7 Mean time to recovery](#347-mean-time-to-recovery)

+ - [3.4.4 Rework](#344-rework)

+ - [3.4.5 Deployment Frequency](#345-deployment-frequency)

+ - [3.4.6 Lead time for changes Data](#346-lead-time-for-changes-data)

+ - [3.4.7 Dev Change Failure Rate](#347-dev-change-failure-rate)

+ - [3.4.8 Dev Mean time to recovery](#348-dev-mean-time-to-recovery)

- [3.5 Export original data](#35-export-original-data)

- [3.5.1 Export board data](#351-export-board-data)

+ - [3.5.1.1 Done card exporting](#3511-done-card-exporting)

+ - [3.5.1.1 Undone card exporting](#3511-undone-card-exporting)

- [3.5.2 Export pipeline data](#352-export-pipeline-data)

- [3.6 Caching data](#36-caching-data)

- [4 Known issues](#4-known-issues)

- - [4.1 Change status name in Jira board](#41-change-status-name-in-jira-board-setting-when-there-are-cards-in-this-status)

+ - [4.1 Change status name in Jira board setting when there are cards in this status](#41--change-status-name-in-jira-board-setting-when-there-are-cards-in-this-status)

- [5 Instructions](#5-instructions)

- [5.1 Prepare for Jira Project](#51-prepare-for-jira-project)

- [5.2 Prepare env to use Heartbeat tool](#52-prepare-env-to-use-heartbeat-tool)

@@ -48,16 +60,19 @@

- [6.1.1 How to build and local preview](#611-how-to-build-and-local-preview)

- [6.1.2 How to run unit tests](#612-how-to-run-unit-tests)

- [6.1.3 How to generate a test report](#613-how-to-generate-a-test-report)

- - [6.1.4 How to run e2e tests locally](#614-how-to-run-e2e-tests-locally)

+ - [6.1.4 How to run E2E tests locally](#614-how-to-run-e2e-tests-locally)

+ - [6.2 How to run backend](#62-how-to-run-backend)

- [7 How to trigger BuildKite Pipeline](#7-how-to-trigger-buildkite-pipeline)

- [Release](#release)

- [Release command in main branch](#release-command-in-main-branch)

-- [7 How to use](#7-how-to-use)

- - [7.1 Docker-compose](#71-docker-compose)

- - [7.1.1 Customize story point field in Jira](#711-customize-story-point-field-in-jira)

- - [7.1.2 Multiple instance deployment](#712-multiple-instance-deployment)

- - [7.2 K8S](#72-k8s)

- - [7.2.1 Multiple instance deployment](#721-multiple-instance-deployment)

+- [8 How to use](#8-how-to-use)

+ - [8.1 Docker-compose](#81-docker-compose)

+ - [8.1.1 Customize story point field in Jira](#811-customize-story-point-field-in-jira)

+ - [8.1.2 Multiple instance deployment](#812-multiple-instance-deployment)

+ - [8.2 K8S](#82-k8s)

+ - [8.2.1 Multiple instance deployment](#821-multiple-instance-deployment)

+- [9 Contribution](#9-contribution)

+- [10 Pipeline Strategy](#10-pipeline-strategy)

# News

@@ -67,24 +82,27 @@

- [Nov 6 2023 - Release Heartbeat - 1.1.2](release-notes/20231106.md)

- [Nov 21 2023 - Release Heartbeat - 1.1.3](release-notes/20231121.md)

- [Dev 4 2023 - Release Heartbeat - 1.1.4](release-notes/20231204.md)

- - [Feb 29 2024 - Release Heartbeat - 1.1.5](release-notes/20240229.md)

+- [Feb 29 2024 - Release Heartbeat - 1.1.5](release-notes/20240229.md)

+- [Apr 2 2024 - Release Heartbeat - 1.1.6](release-notes/20240402.md)

# 1 About Heartbeat

Heartbeat is a tool for tracking project delivery metrics that can help you get a better understanding of delivery performance. This product allows you easily get all aspects of source data faster and more accurate to analyze team delivery performance which enables delivery teams and team leaders focusing on driving continuous improvement and enhancing team productivity and efficiency.

-State of DevOps Report is launching in 2019. In this webinar, The 4 key metrics research team and Google Cloud share key metrics to measure DevOps performance, measure the effectiveness of development and delivery practices. They searching about six years, developed four metrics that provide a high-level systems view of software delivery and performance.

+The State of DevOps Report launched in 2019. In this webinar, the 4 key metrics research team and Google Cloud share key metrics for measuring DevOps performance and the effectiveness of development and delivery practices. After about six years of research, they developed four metrics that provide a high-level systems view of software delivery and performance. Based on that, Heartbeat introduces the metrics below.

-**Here are the four Key meterics:**

+**8 metrics supported by Heartbeat:**

-1. Deployment Frequency (DF)

-2. Lead Time for changes (LTC)

-3. Mean Time To Recover (MTTR)

-4. Change Failure Rate (CFR)

-In Heartbeat tool, we also have some other metrics, like: Velocity, Cycle Time and Classification. So we can collect DF, LTC, CFR, Velocity, Cycle Time and Classification.

+1. [Velocity](#341-velocity)

+2. [Cycle time](#342-cycle-time)

+3. [Classification](#343-classification)

+4. [Rework](#344-rework)

+5. [Deployment Frequency](#345-deployment-frequency)

+6. [Lead Time for changes](#346-lead-time-for-changes-data)

+7. [Dev Change Failure Rate](#347-dev-change-failure-rate)

+8. [Dev Mean Time To Recovery](#348-dev-mean-time-to-recovery)

-For MTTR meter, specifically, if the pipeline stay in failed status during the selected period, the unfixed part will not be included for MTTR calculation.

# 2 Support tools

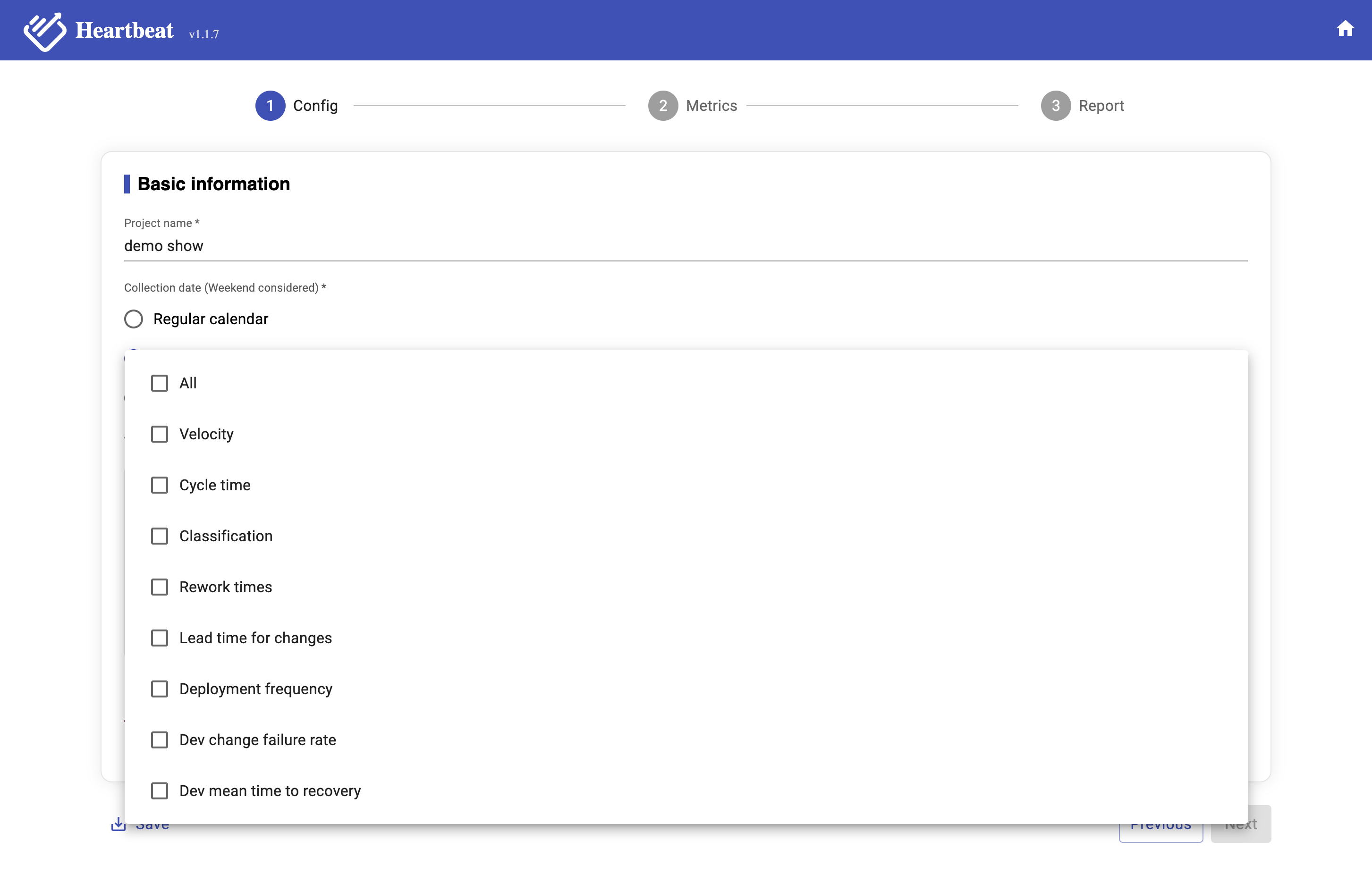

@@ -129,21 +147,30 @@ All need to select which data you want to get, for now, we support seven metrics

\

_Image 3-3,Metrics Data_

+##### 3.1.2.1 Date picker validation rules

+

+Users cannot select a future date in the calendar (for either start time or end time). The maximum interval between start time and end time is 31 days (e.g. 01/01/2024 - 01/31/2024).

+

+Invalid dates include future dates, an interval of more than 31 days between start time and end time, an end time before the start time, etc.

+

+If the user selects an invalid date, a warning may be shown.

+

#### 3.1.3 Config project account

Because all metrics data from different tools that your projects use. Need to have the access to these tools then you can get the data. So after select time period and metrics data, then you need to input the config for different tools(Image 3-4).

According to your selected required data, you need to input account settings for the respective data source. Below is the mapping between your selected data to data source.

-| Required Data | Datasource |

-| --------------------- | -------------- |

-| Velocity | Board |

-| Cycle time | Board |

-| Classification | Board |

-| Lead time for changes | Repo,Pipeline |

-| Deployment frequency | Pipeline |

-| Change failure rate | Pipeline |

-| Mean time to recovery | Pipeline |

+| Required Data | Datasource |

+|---------------------------| -------------- |

+| Velocity | Board |

+| Cycle time | Board |

+| Classification | Board |

+| Rework times | Board |

+| Lead time for changes | Repo,Pipeline |

+| Deployment frequency | Pipeline |

+| Dev change failure rate | Pipeline |

+| Dev mean time to recovery | Pipeline |

\

Image 3-4,Project config

@@ -157,27 +184,44 @@ Image 3-4,Project config

|Site|Site is the domain for your jira board, like below URL, `dorametrics` is the site

https://dorametrics.atlassian.net/jira/software/projects/ADM/boards/2 |

|Email|The email can access to the Jira board |

|Token|Generate a new token with below link, https://id.atlassian.com/manage-profile/security/api-tokens |

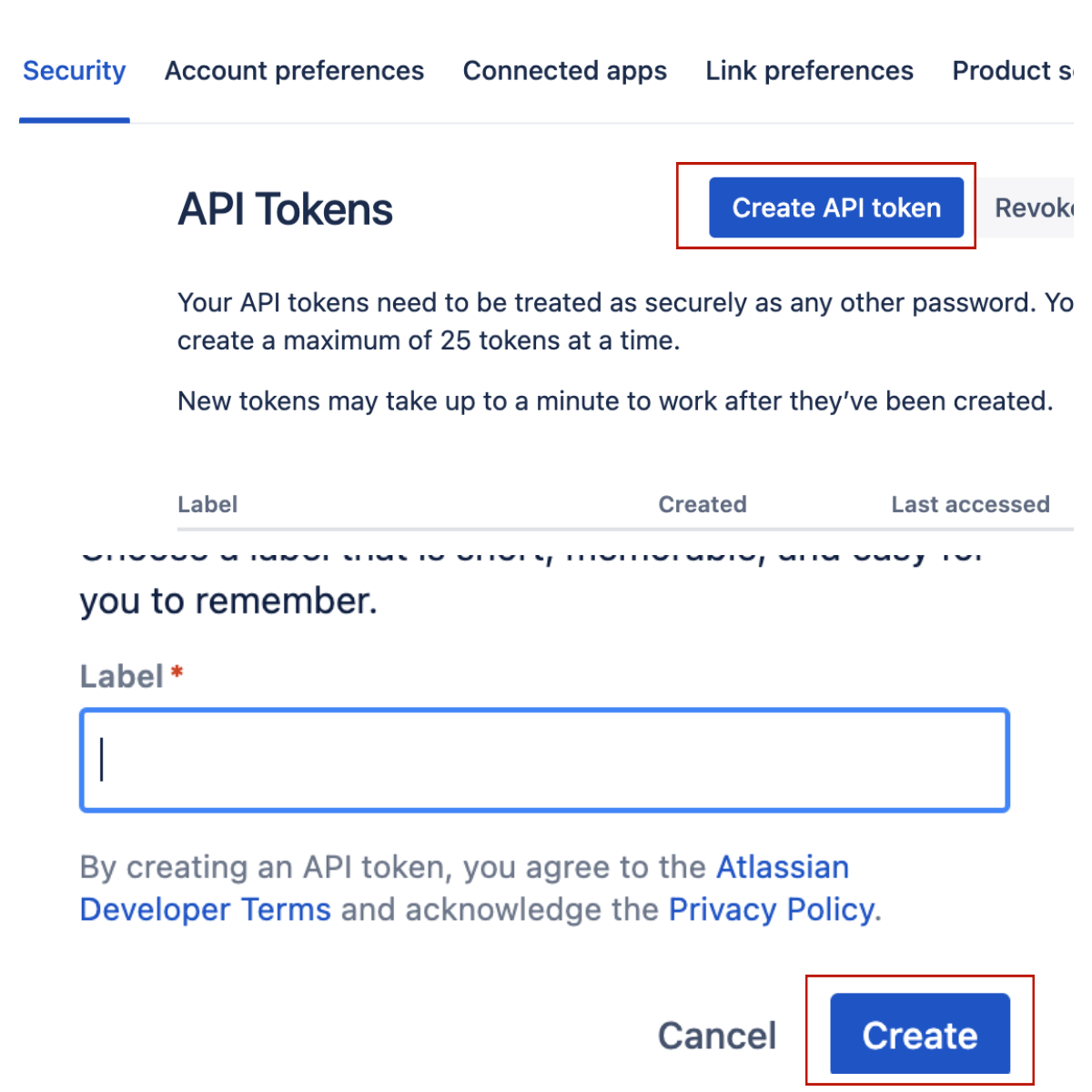

+##### 3.1.3.1 Guideline for generating Jira token

+

+_Image 3-5, create Jira token_

**The details for Pipeline:**

|Items|Description|

|---|---|

|PipelineTool| The pipeline tool you team use, currently heartbeat only support buildkite|

|Token|Generate buildkite token with below link, https://buildkite.com/user/api-access-tokens|

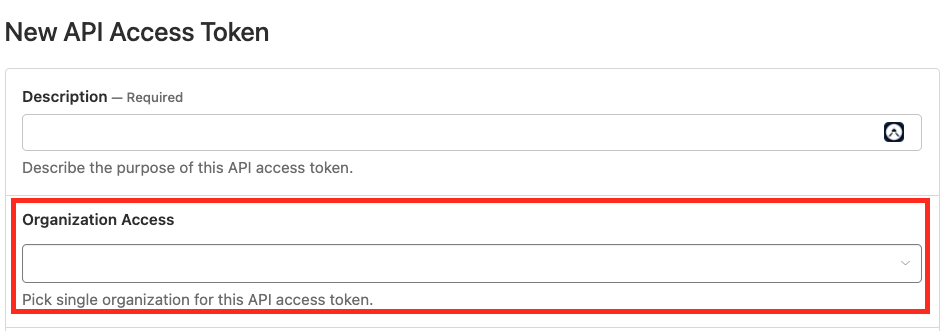

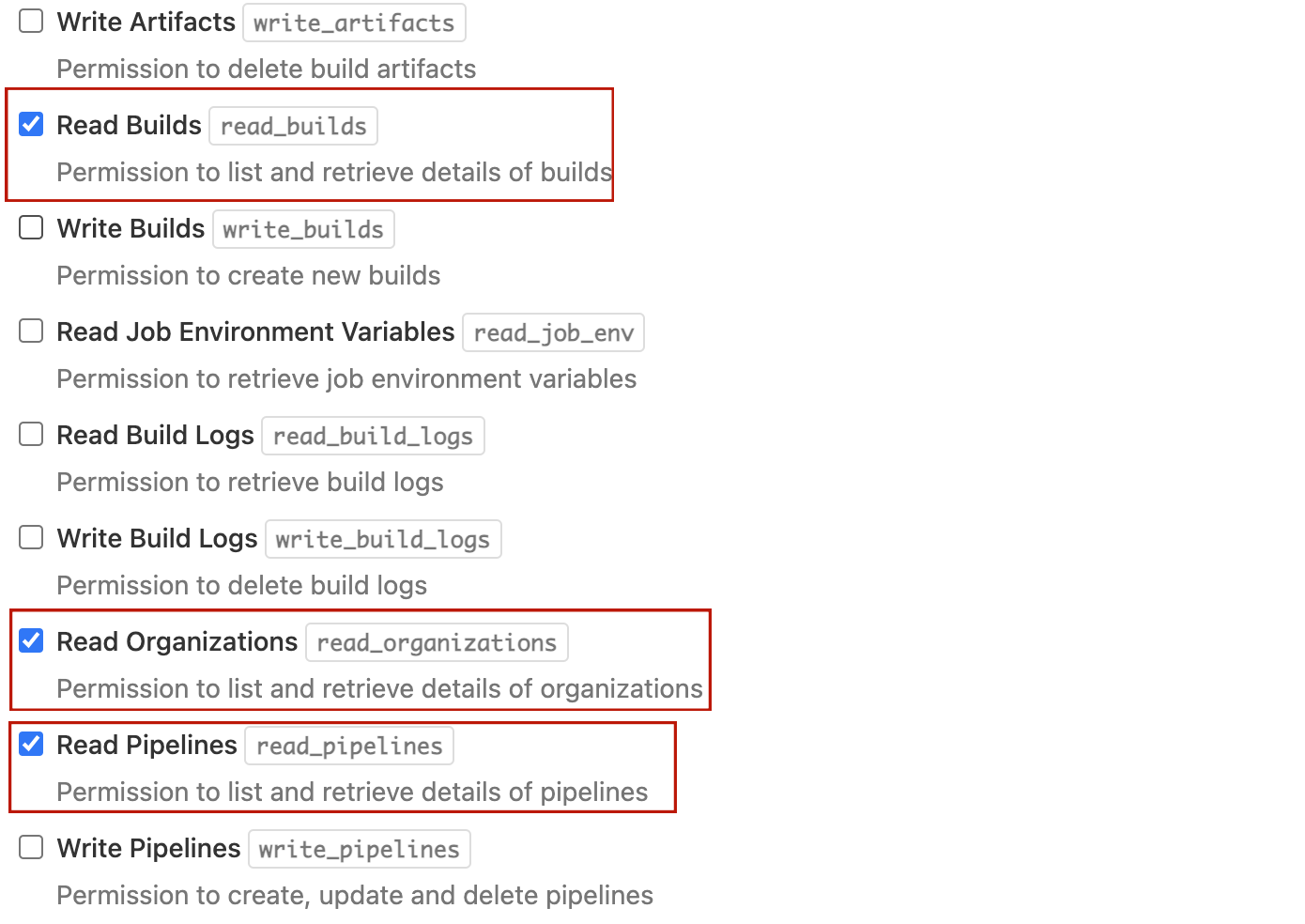

+##### 3.1.3.2 Guideline for generating Buildkite token

+Select the organization for your pipeline

+

+Choose "Read Builds","Read Organizations" and "Read Pipelines".

+

+_Image 3-6, generate Buildkite token_

**The details for SourceControl:**

|Items|Description|

|---|---|

|SourceControl|The source control tool you team use, currently heartbeat only support Github|

|Token|Generate Github token with below link(classic one), https://github.com/settings/tokens|

-

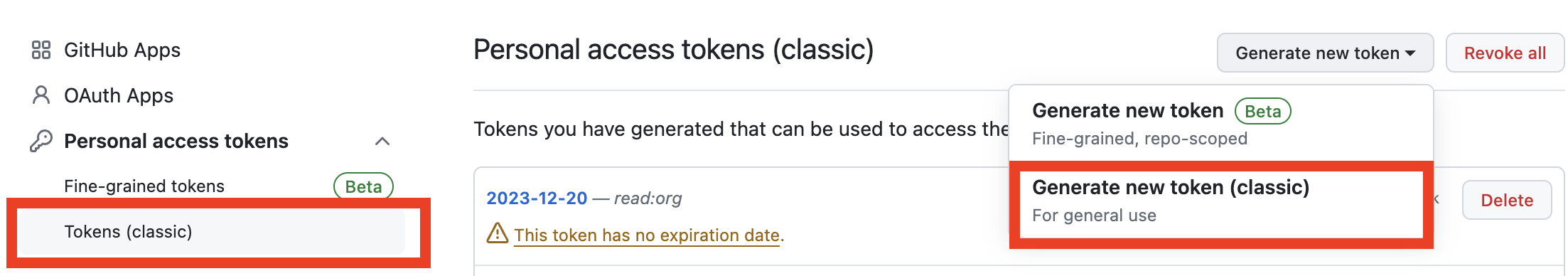

+##### 3.1.3.3 Guideline for generating GitHub token

+Generate new token (classic)

+

+Select repo from scopes

+

+_Image 3-7, generate classic GitHub token_

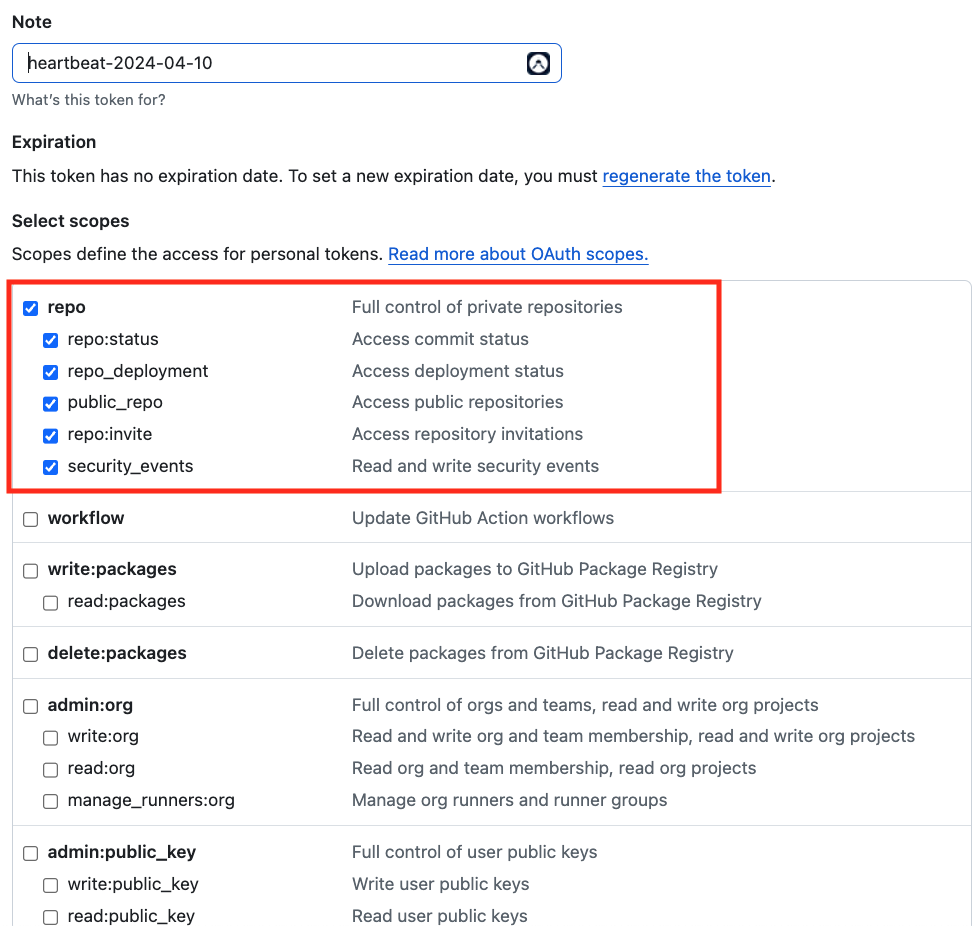

+##### 3.1.3.4 Authorize GitHub token with correct organization

+

+_Image 3-8, authorize GitHub token with correct organization_

### 3.2 Config Metrics data

After inputting the details info, users need to click the `Verify` button to verify if can access to these tool. Once verified, they could click the `Next` button go to next page -- Config Metrics page(Image 3-5,Image 3-6,Image 3-7)

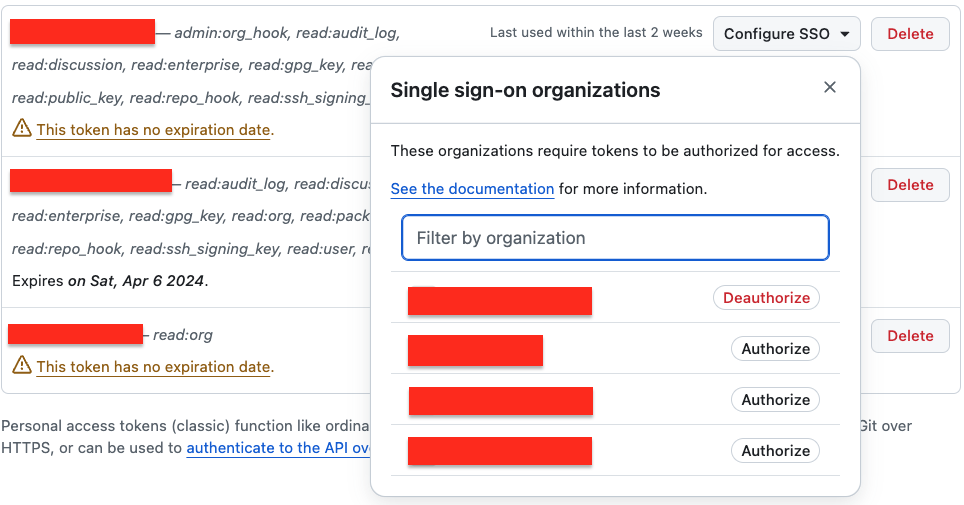

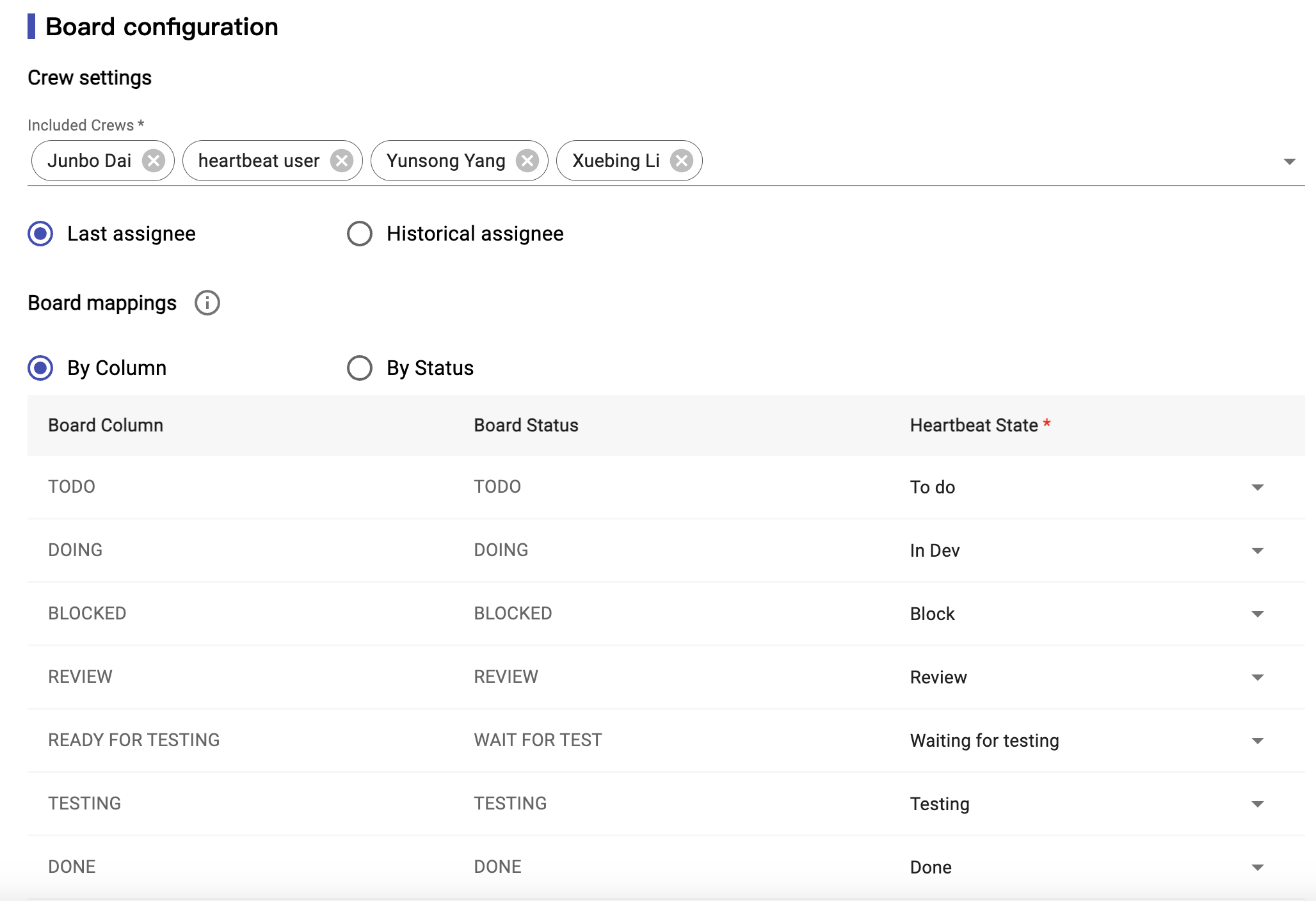

-#### 3.2.1 Config Crews/Cycle Time

+#### 3.2.1 Config Crews/Board Mappings

-\

-_Image 3-5, Crews/Cycle Time config_

+\

+_Image 3-9, Crews/Board Mappings config_

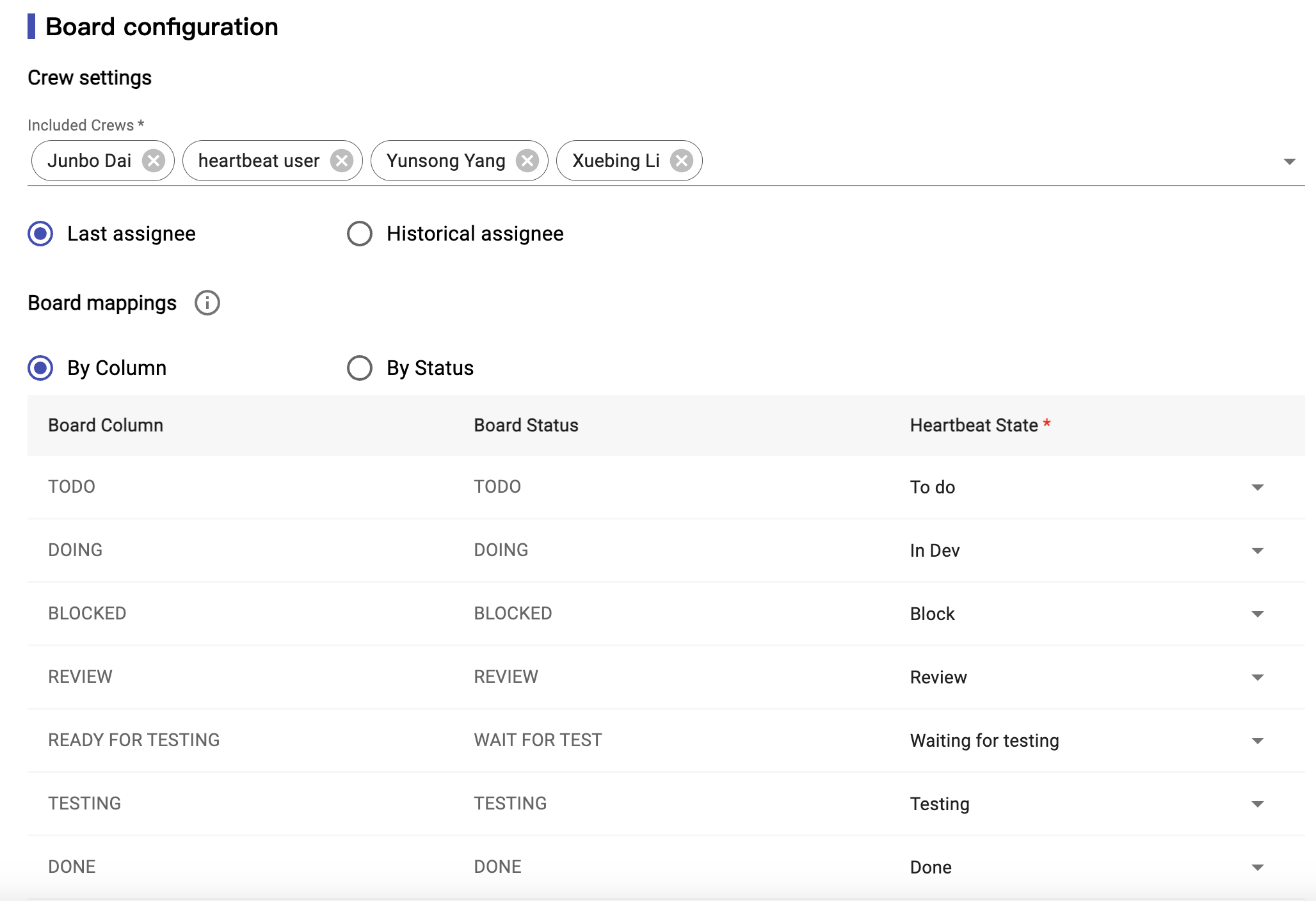

**Crew Settings:** You could select your team members from a list get from board source. The list will include the assignees for those tickets that finished in the time period selected in the last step.

@@ -195,46 +239,57 @@ _Image 3-5, Crews/Cycle Time config_

| Done | It means the tickets are already done. Cycle time doesn't include this time. |

| -- | If you don't need to map, you can select -- |

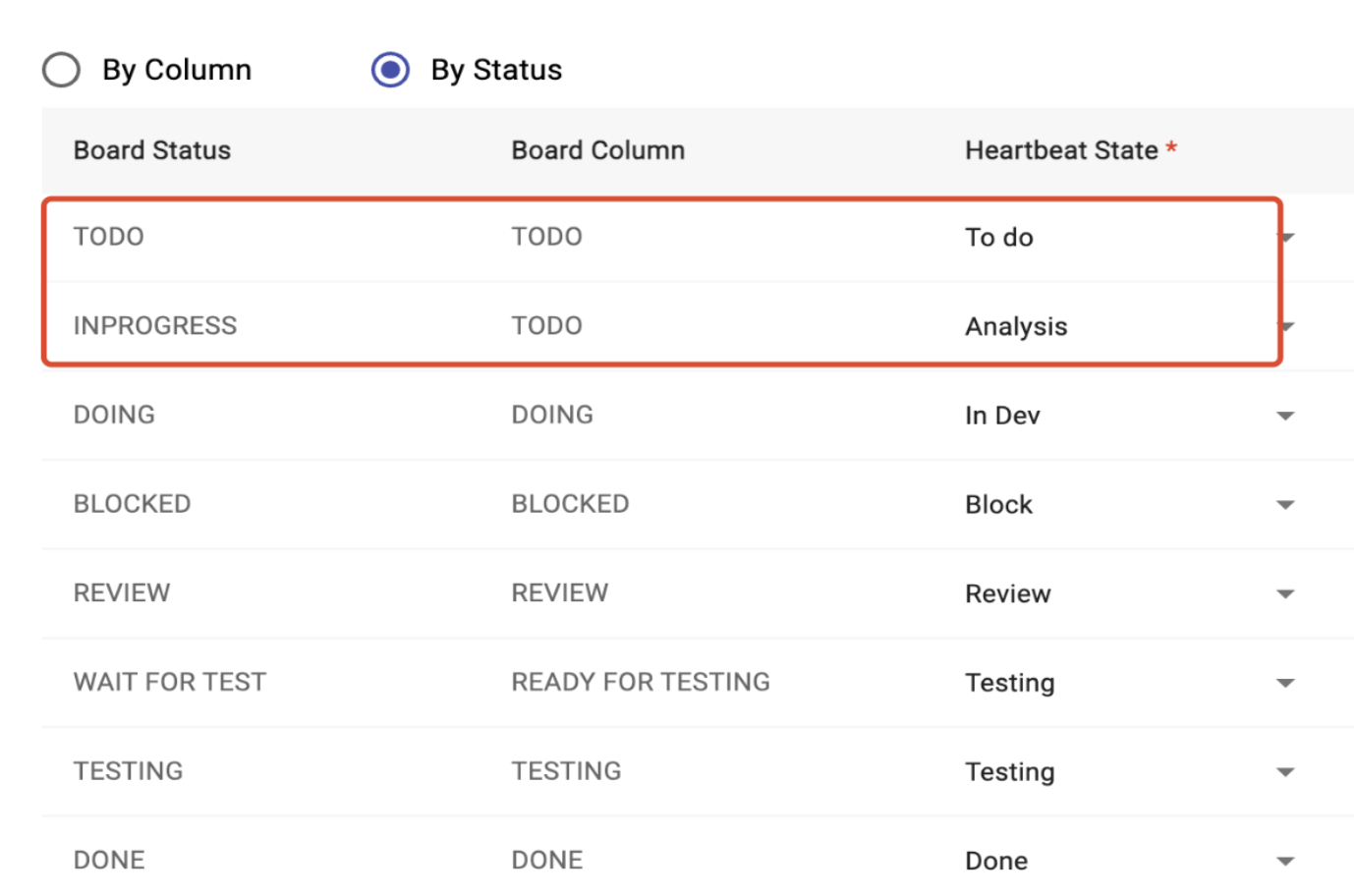

+**By Status**: users can click the toggle button to choose whether to map by column or by status. Multiple statuses can be mapped into one column; as the picture shows, the TODO and INPROGRESS board statuses can be mapped to different Heartbeat states.

+

+\

+_Image 3-10,By Status_

+

#### 3.2.2 Setting Classification

-\

-_Image 3-6,Classification Settings_

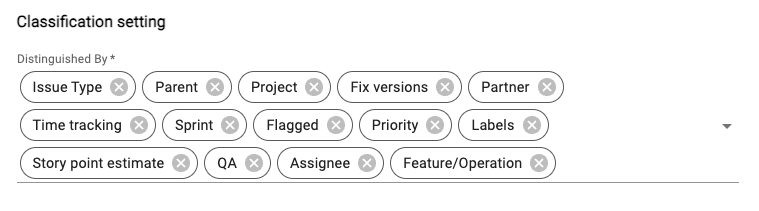

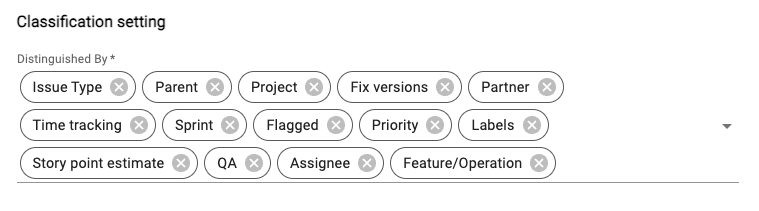

+\

+_Image 3-11,Classification Settings_

In classification settings, it will list all Context fields for your jira board. Users can select anyone to get the data for them. And according to your selection, in the export page, you will see the classification report to provide more insight with your board data.

-#### 3.2.3 Setting advanced Setting

+#### 3.2.3 Rework times Setting

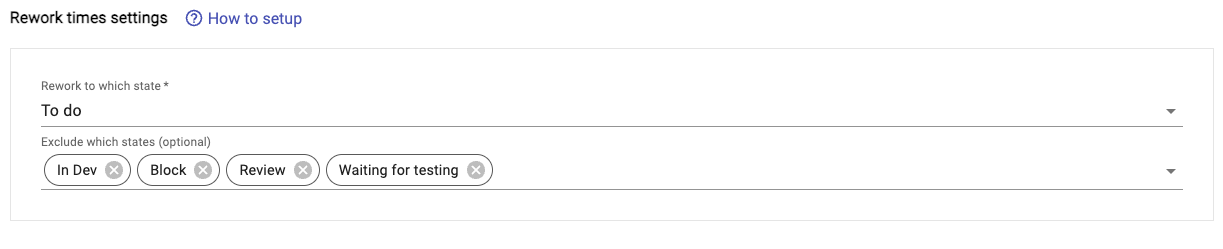

+\

+_Image 3-12,Rework times Settings_

+

+Rework times settings contain a 'Rework to which state' input and an 'Exclude which states (optional)' input. The options in the 'Rework to which state' input all come from the Board mappings and are ordered: when an option is selected, the rework information for that option and all subsequent options is counted in the report page and export file. The 'Exclude which states (optional)' input lets you exclude certain subsequent options (Image 3-12).

-\

-_Image 3-7,advanced Settings_

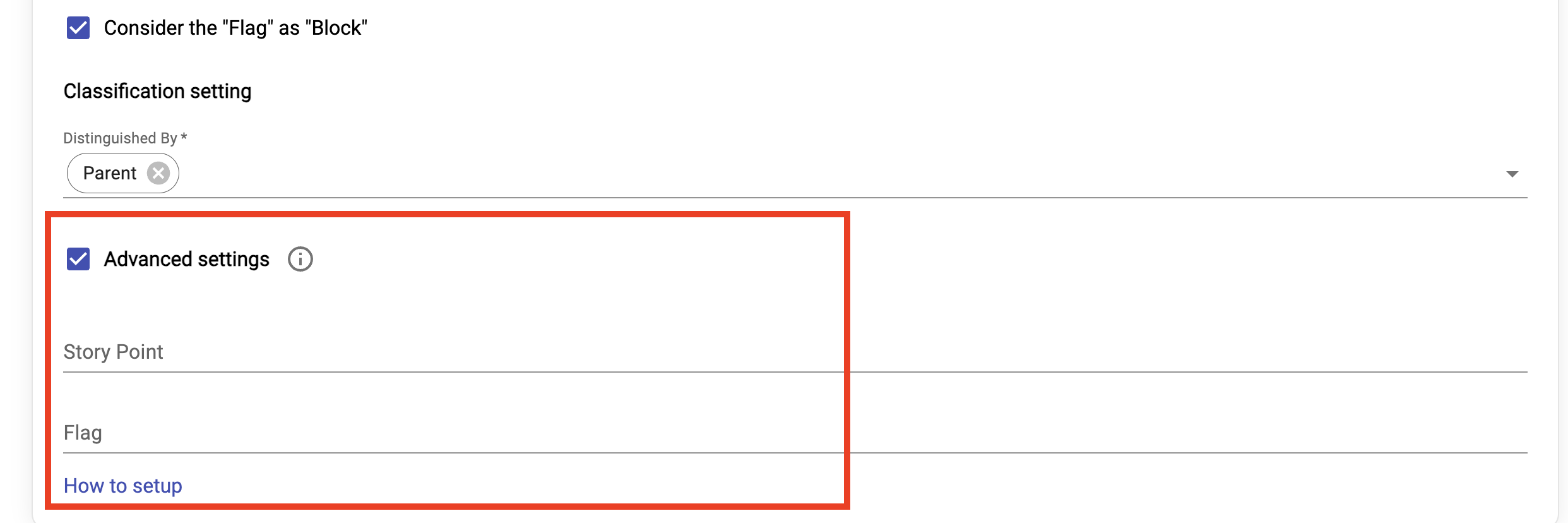

+#### 3.2.4 Setting advanced Setting

+

+\

+_Image 3-13,advanced Settings_

In advanced settings, it contains story points Input and Flagged Input. Users can input story points and Flagged custom-field on their own when the jira board has permission restriction . And according to these input, in the export page, user can get correct story points and block days

how to find the story points and Flagged custom-field?

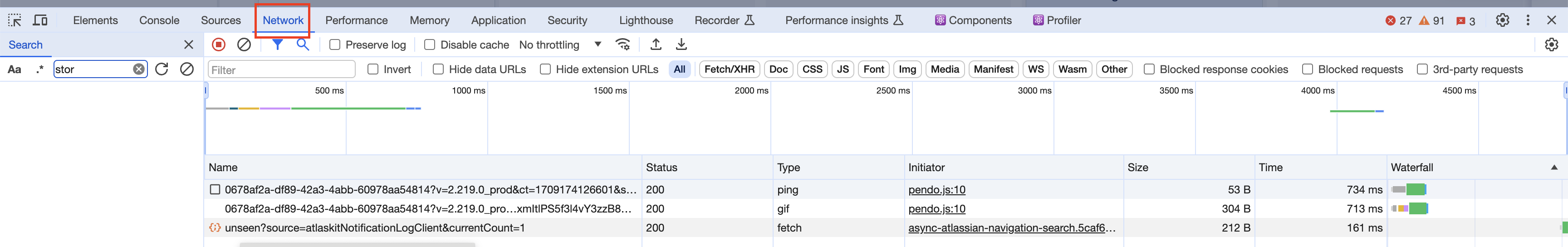

-\

-_Image 3-8,devTool-network-part_

+\

+_Image 3-14,devTool-network-part_

-\

-_Image 3-9,card-history_

+\

+_Image 3-15,card-history_

-\

-_Image 3-10,find-custom-field-api_

+\

+_Image 3-16,find-custom-field-api_

-\

-_Image 3-11,story-point-custom-field_

+\

+_Image 3-17,story-point-custom-field_

-\

-_Image 3-12,flagged-custom-field_

+\

+_Image 3-18,flagged-custom-field_

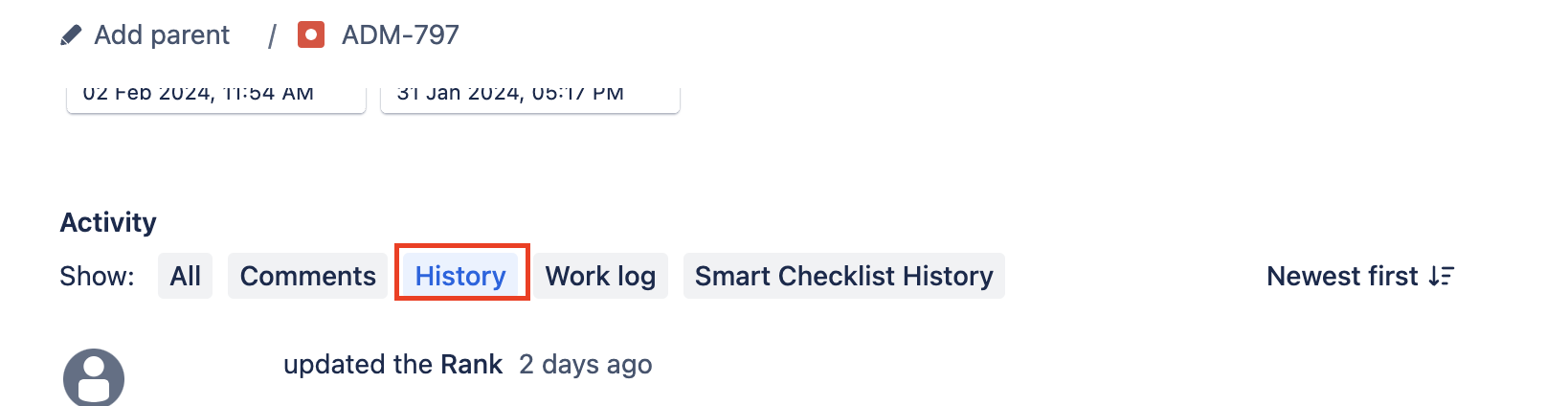

1. user need to go to the jira board and click one card , then open dev tool switch to network part.

2. then click card's history part.

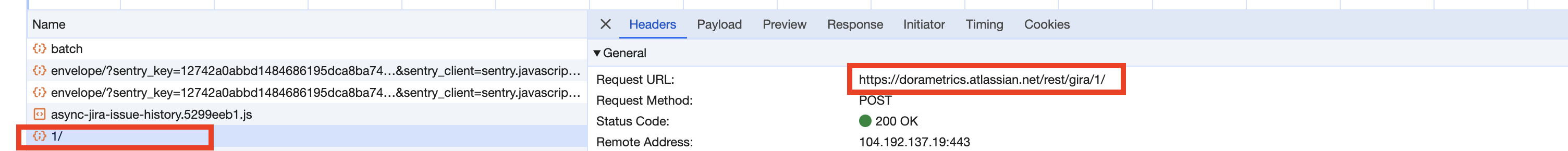

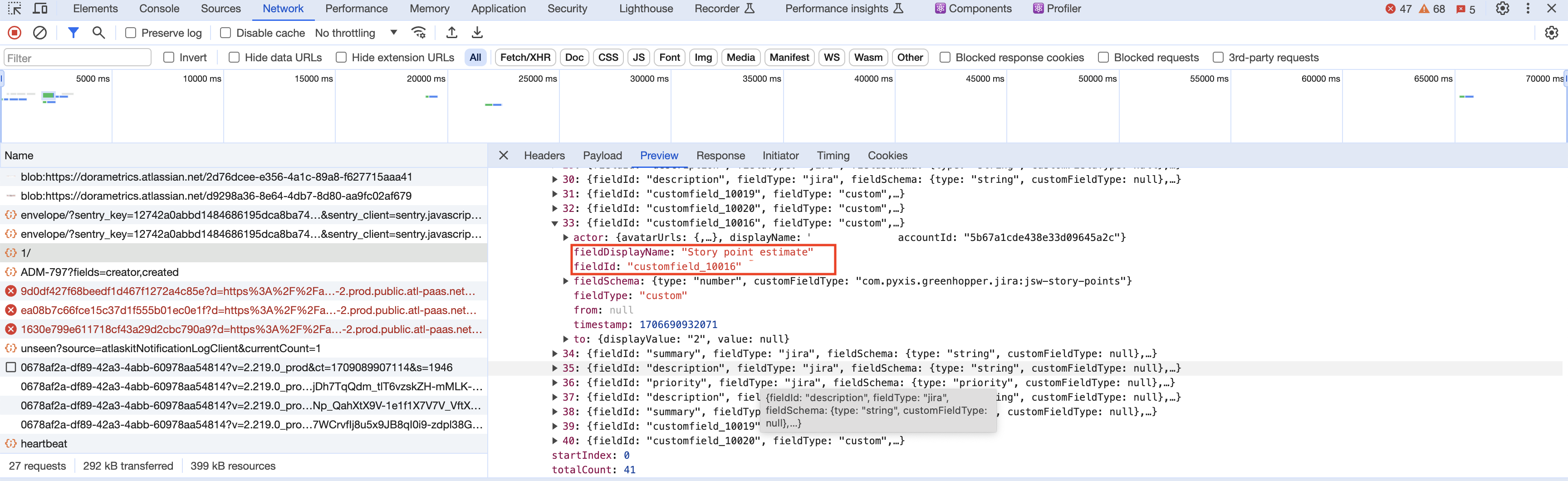

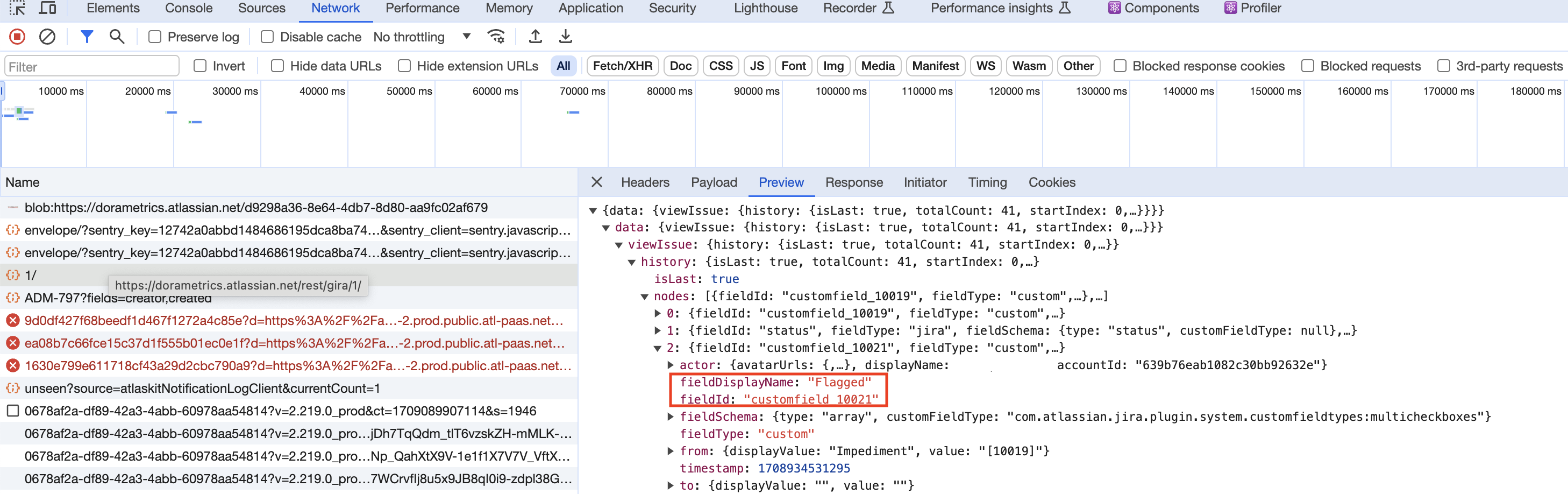

3. at that time, user can see one api call which headers request URL is https://xxx.atlassian.net/rest/gira/1/ .

-4. then go to review part, find fieldDisplayName which show Flagged and story point estimate and get the fieldId as the custom-field that user need to input in advanced settings. from image 3-11 and 3-12 we can find that flagged custom field is customfield_10021, story points custom field is customfield_10016.

+4. then go to review part, find the fieldDisplayName entries that show Flagged and story point estimate, and take their fieldId as the custom field to input in advanced settings. From Image 3-17 and 3-18 we can see that the flagged custom field is customfield_10021 and the story points custom field is customfield_10016.

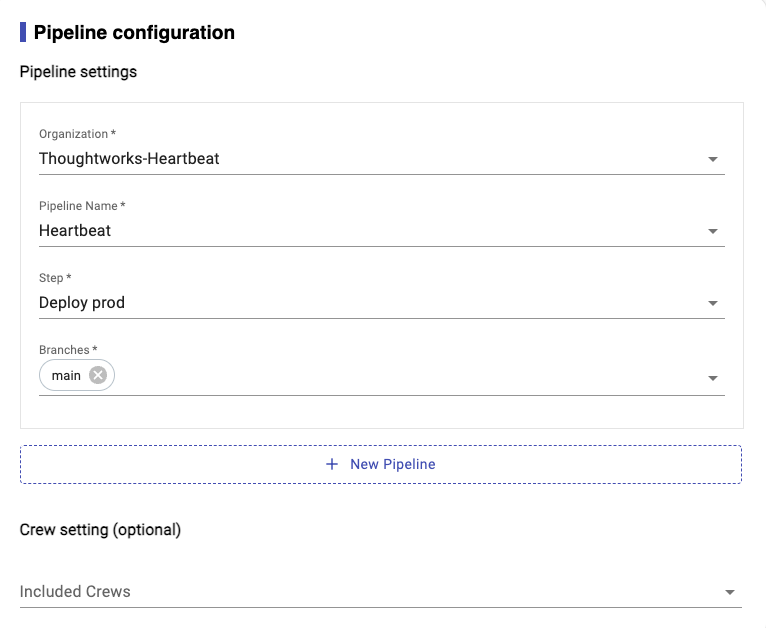

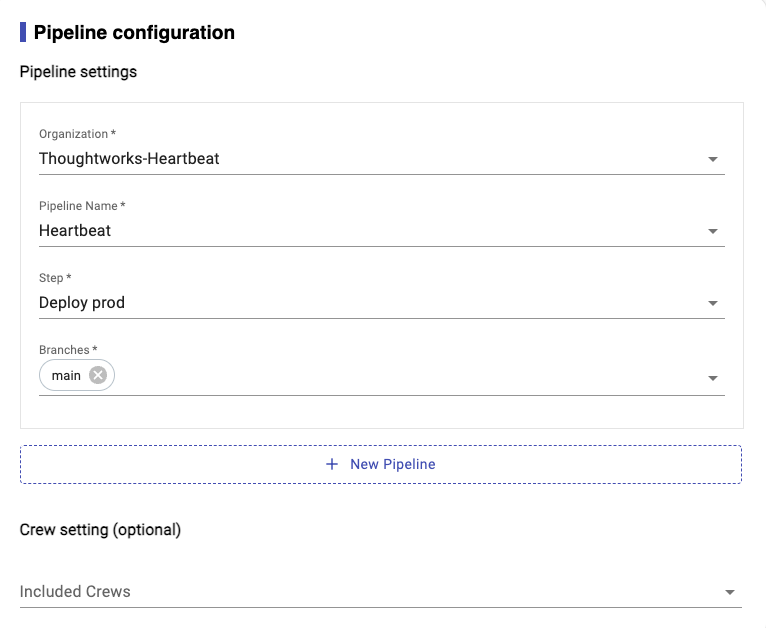

-#### 3.2.4 Pipeline configuration

+#### 3.2.5 Pipeline configuration

-\

-_Image 3-13,Settings for Pipeline_

+\

+_Image 3-19,Settings for Pipeline_

They are sharing the similar settings which you need to specify the pipeline step so that Heartbeat will know in which pipeline and step, team consider it as deploy to PROD. So that we could use it to calculate metrics.

@@ -248,69 +303,116 @@ They are sharing the similar settings which you need to specify the pipeline ste

### 3.3.1 Export Config Json File

-When user first use this tool, need to create a project, and do some config. To avoid the user entering configuration information repeatedly every time, we provide a “Save” button in the config and metrics pages. In config page, click the save button, it will save all items in config page in a Json file. If you click the save button in the metrics page, it will save all items in config and metrics settings in a Json file. Here is the json file (Image 3-8)。Note: Below screenshot just contains a part of data.

+When users first use this tool, they need to create a project and do some configuration. To avoid entering configuration information repeatedly, we provide a “Save” button in the config and metrics pages. In the config page, clicking the save button saves all items in the config page to a JSON file. If you click the save button in the metrics page, it saves all items in the config and metrics settings to a JSON file. Here is the JSON file (Image 3-20). Note: the screenshot below contains only part of the data.

-\

-_Image 3-14, Config Json file_

+\

+_Image 3-20, Config Json file_

### 3.3.2 Import Config Json File

-When user already saved config file before, then you don’t need to create a new project. In the home page, can click Import Project from File button(Image 3-1) to select the config file. If your config file is too old, and the tool already have some new feature change, then if you import the config file, it will get some warning info(Image 3-9). You need to re-select some info, then go to the next page.

+If you have already saved a config file, you don’t need to create a new project. On the home page, click the Import Project from File button (Image 3-1) to select the config file. If your config file is too old and the tool has since changed, importing it will show a warning (Image 3-21). You need to re-select some info, then go to the next page.

-\

-_Image 3-15, Warning message_

+\

+_Image 3-21, Warning message_

## 3.4 Generate Metrics report

After setup and configuration, then it will generate the heartbeat dashboard.

-

+

+_Image 3-22, Report page_

You could find the drill down from `show more >` link from dashboard.

### 3.4.1 Velocity

-In Velocity Report, it will list the corresponding data by Story Point and the number of story tickets. (image 3-10)

-\

-_Image 3-16,Velocity Report_

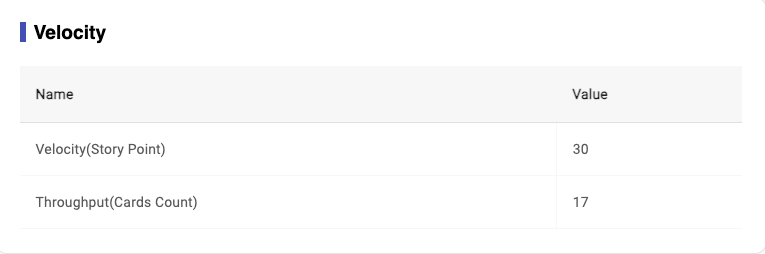

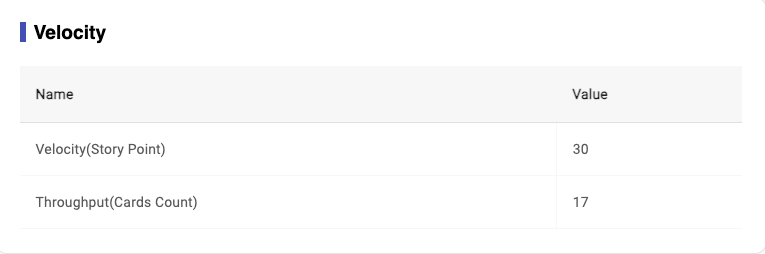

+The Velocity Report lists the corresponding data by story points and by the number of story tickets. (Image 3-23)

+- `Velocity`: how many story points and cards we have completed within the selected time period.

+- Definition for 'Velocity(Story Point)': how many story points we have completed within the selected time period.

+- Formula for 'Velocity(Story Point)': sum of story points for done cards in the selected time period

+- Definition for 'Throughput(Cards Count)': how many story cards we have completed within the selected time period.

+- Formula for 'Throughput(Cards Count)': sum of cards count for done cards in the selected time period

+

+

+\

+_Image 3-23,Velocity Report_

### 3.4.2 Cycle Time

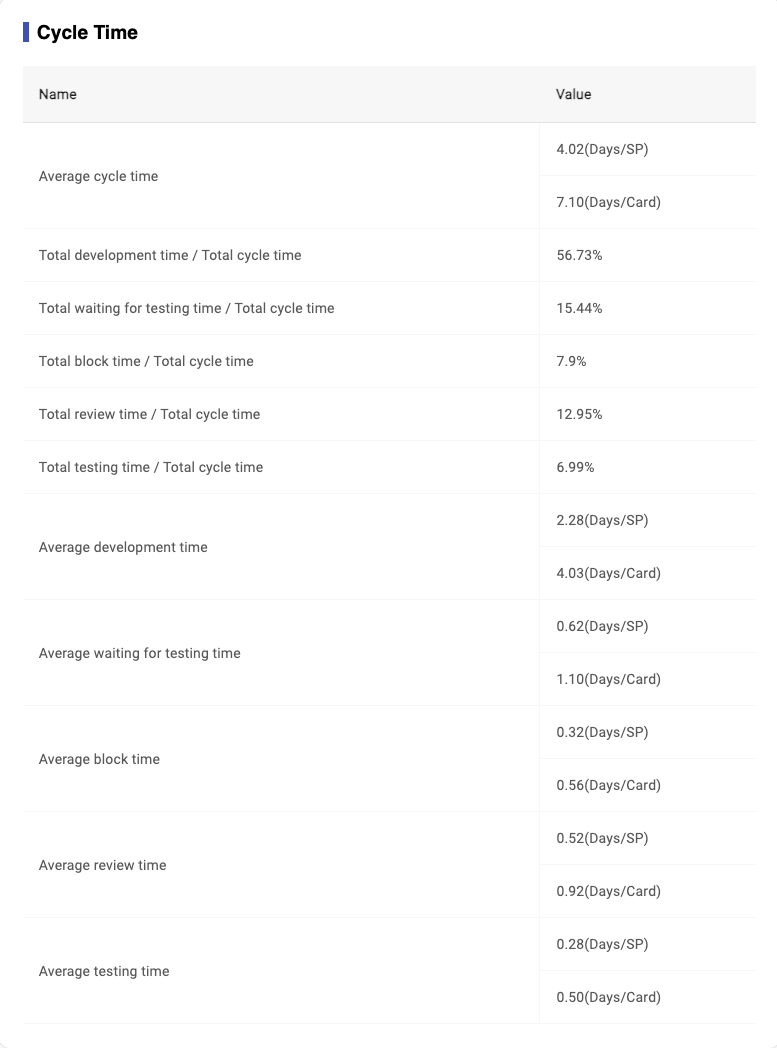

The calculation process data and final result of Cycle Time are calculated by rounding method, and two digits are kept after the decimal point. Such as: 3.567... Is 3.56; 3.564... Is 3.56.

+- `Cycle time`: the time it takes for a card to move from ‘to do’ to ‘done’.

+- Definition for ‘Average Cycle Time(Days/SP)’: how many days it takes on average to complete one story point.

+- Formula for ‘Average Cycle Time(Days/SP)’: sum of cycle time for done cards / story points of done cards

+- Definition for ‘Average Cycle Time(Days/Card)’: how many days it takes on average to complete a card.

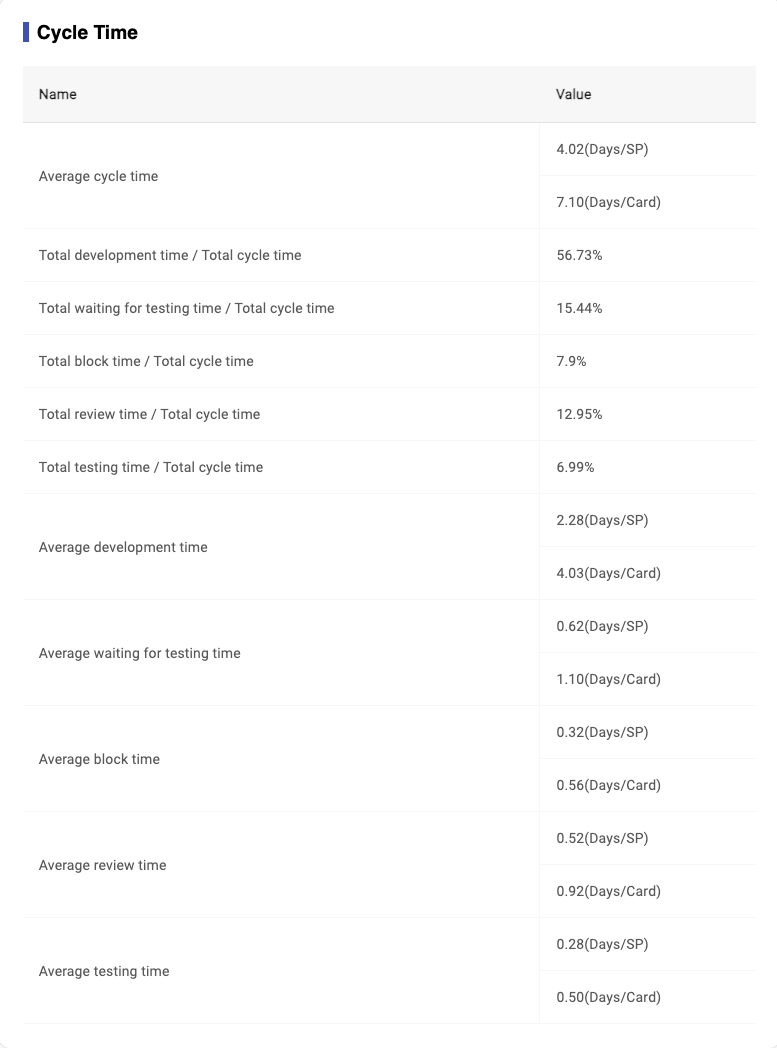

+- Formula for ‘Average Cycle Time(Days/Card)’: sum of cycle time for done cards / number of done cards
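
As a rough sketch of the two averages, assuming the total cycle time is already available in days. The class and method names are hypothetical, and returning 0 for an empty denominator is an assumption of this example, not documented Heartbeat behaviour.

```java
final class CycleTimeSketch {

    // Average Cycle Time(Days/SP): total cycle time / story points of done cards.
    static double averageDaysPerStoryPoint(double totalCycleTimeDays, double doneStoryPoints) {
        // Assumption: report 0 when no story points were completed.
        return doneStoryPoints == 0 ? 0 : totalCycleTimeDays / doneStoryPoints;
    }

    // Average Cycle Time(Days/Card): total cycle time / number of done cards.
    static double averageDaysPerCard(double totalCycleTimeDays, int doneCardsCount) {
        // Assumption: report 0 when no cards were completed.
        return doneCardsCount == 0 ? 0 : totalCycleTimeDays / doneCardsCount;
    }
}
```

For example, 12 days of total cycle time over 6 story points and 4 cards gives 2 days per story point and 3 days per card.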

-\

-_Image 3-17,Cycle Time Report_

+\

+_Image 3-24,Cycle Time Report_

### 3.4.3 Classification

It will show the classification data of Board based on your selection on `Classification Settings` in metrics page.

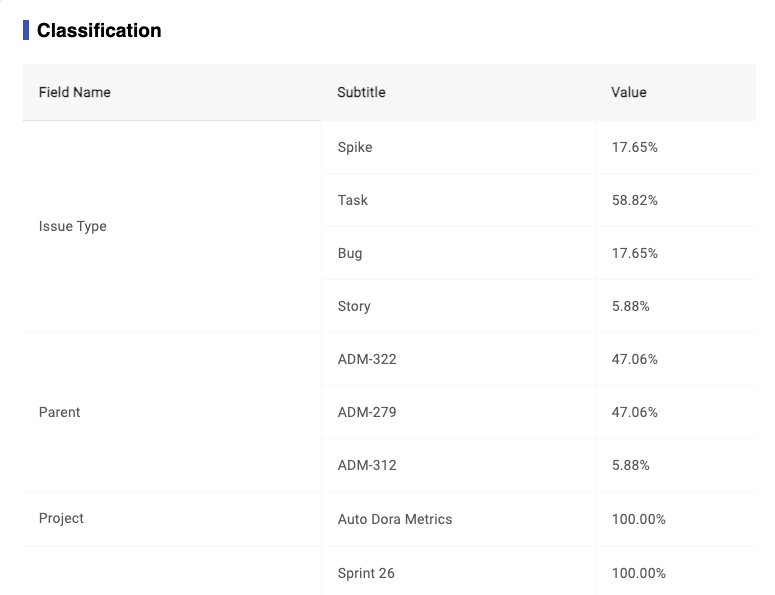

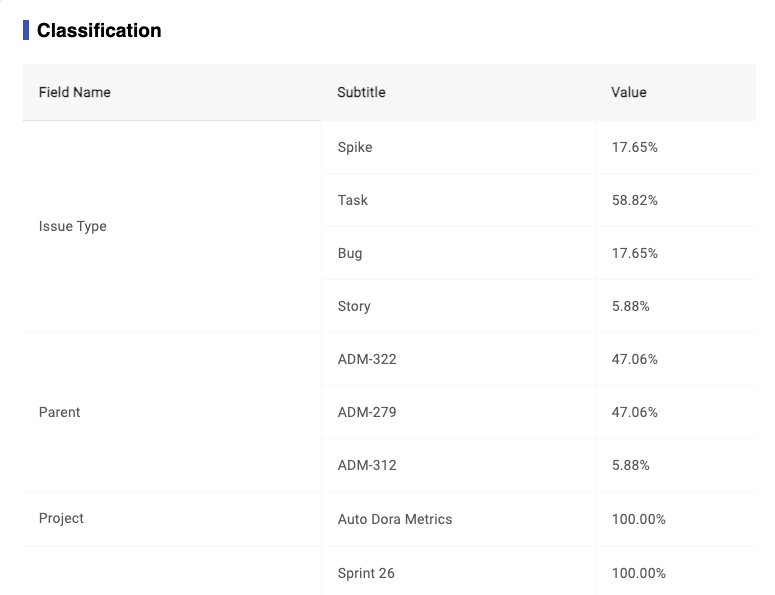

The percentage value represent the count of that type tickets vs total count of tickets.

+- `Classification`: provides different dimensions for viewing how much effort the team spent within the selected time period.

+- For example: spike cards account for 17.65% of the total completed cards

+

+\

+_Image 3-25,Classification Report_

+

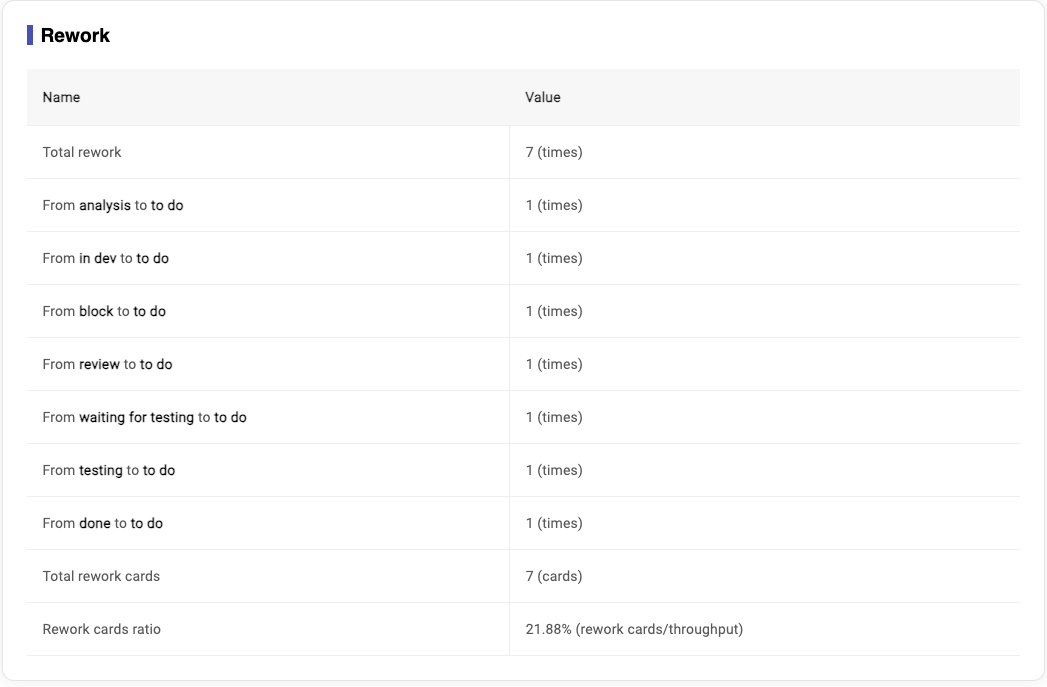

+### 3.4.4 Rework

+

+- Definition for 'Rework': a card rolls back from a later state to a previous state. For example, a card that moves from the 'testing' state to the 'in dev' state has been reworked.

+- Formula for 'Total rework times': the total number of times done cards were reworked

+- Formula for 'Total rework cards': the total number of done cards that were reworked

+- Formula for 'Rework cards ratio': total rework cards / throughput
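
The three rework formulas can be illustrated as follows. This is a minimal sketch: the input is assumed to be one rework count per done card, and the names are hypothetical.

```java
final class ReworkSketch {

    // Total rework times: sum of rework counts over all done cards.
    static int totalReworkTimes(int[] reworkTimesPerDoneCard) {
        int total = 0;
        for (int times : reworkTimesPerDoneCard) {
            total += times;
        }
        return total;
    }

    // Total rework cards: done cards that were reworked at least once.
    static int totalReworkCards(int[] reworkTimesPerDoneCard) {
        int cards = 0;
        for (int times : reworkTimesPerDoneCard) {
            if (times > 0) {
                cards++;
            }
        }
        return cards;
    }

    // Rework cards ratio: total rework cards / throughput (number of done cards).
    static double reworkCardsRatio(int[] reworkTimesPerDoneCard) {
        int throughput = reworkTimesPerDoneCard.length;
        // Assumption: report 0 when there are no done cards.
        return throughput == 0 ? 0 : (double) totalReworkCards(reworkTimesPerDoneCard) / throughput;
    }
}
```

With four done cards reworked 0, 2, 1 and 0 times, the totals are 3 rework times, 2 rework cards, and a ratio of 0.5.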

+

+It shows the rework data of the board based on your selection in `Rework times settings` on the metrics page (image 3-21).

+

+If "to do" is selected in "Rework to which column", we count the number of times cards are moved back to the "to do" state from any of the subsequent states in the options.

+

-\

-_Image 3-18,Classification Report_

+

+\

+_Image 3-26,Rework Report_

-### 3.4.4 Deployment Frequency

+### 3.4.5 Deployment Frequency

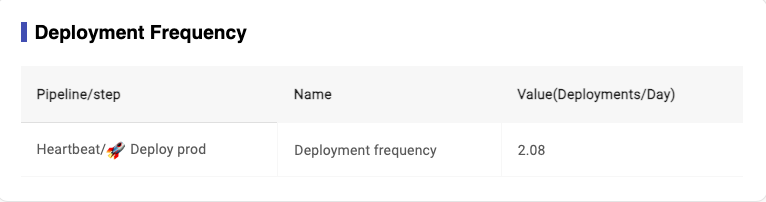

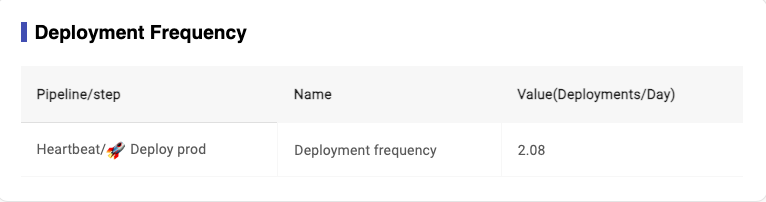

+- Definition for 'Deployment Frequency': this metric records how often you deploy code to production per day.

+- Formula for 'Deployment Frequency': the number of builds with (Status = passed & Valid = true) / working days
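
As a small worked example of the formula, under the assumption that the builds have already been filtered to those with Status = passed and Valid = true. The names are hypothetical.

```java
final class DeploymentFrequencySketch {

    // Deployment Frequency: passed and valid builds / working days in the period.
    static double deploymentFrequency(int passedValidBuilds, int workingDays) {
        // Assumption: report 0 when the period contains no working days.
        return workingDays == 0 ? 0 : (double) passedValidBuilds / workingDays;
    }
}
```

Ten passed, valid builds over five working days gives a deployment frequency of 2 per day.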

+\

+_Image 3-27,export pipeline data_

+\

+_Image 3-28,Deployment Frequency Report_

-\

-_Image 3-19,Deployment Frequency Report_

+### 3.4.6 Lead time for changes Data

+- Formula for 'PR lead time':

+ - if a PR exists: PR lead time = PR merged time - first code committed time

+ - if there is no PR or it is a revert PR: PR lead time = 0

-### 3.4.5 Lead time for changes Data

+- Formula for 'Pipeline lead time':

+ - if a PR exists: Pipeline lead time = Job Complete Time - PR merged time

+ - if there is no PR: Pipeline lead time = Job Complete Time - Job Start Time
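
The branching in the two lead time formulas can be sketched with `java.time`. The class, method, and parameter names below are hypothetical; a missing PR is modelled as a `null` merge time, which is an assumption of this example. The formulas above do not say how a revert PR affects pipeline lead time, so the sketch treats it like any other PR.

```java
import java.time.Duration;
import java.time.Instant;

final class LeadTimeSketch {

    // PR lead time: merged time minus first commit time, or 0 for no PR / revert PR.
    static Duration prLeadTime(Instant firstCommitTime, Instant prMergedTime, boolean isRevert) {
        if (firstCommitTime == null || prMergedTime == null || isRevert) {
            return Duration.ZERO;
        }
        return Duration.between(firstCommitTime, prMergedTime);
    }

    // Pipeline lead time: measured from PR merge if a PR exists, else from job start.
    static Duration pipelineLeadTime(Instant prMergedTime, Instant jobStartTime, Instant jobCompleteTime) {
        Instant from = prMergedTime != null ? prMergedTime : jobStartTime;
        return Duration.between(from, jobCompleteTime);
    }
}
```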

-\

-_Image 3-20,Lead time for changes Report_

-### 3.4.6 Change Failure Rate

-\

-_Image 3-21,Change Failure Rate Report_

+\

+_Image 3-29,Lead time for changes Report_

-### 3.4.7 Mean time to recovery

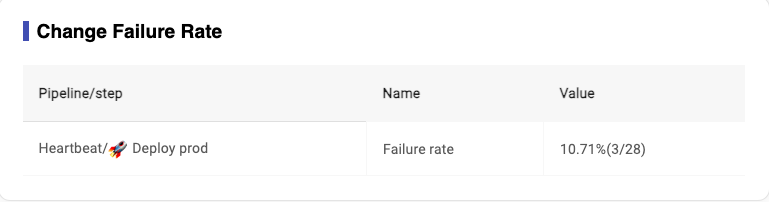

+### 3.4.7 Dev Change Failure Rate

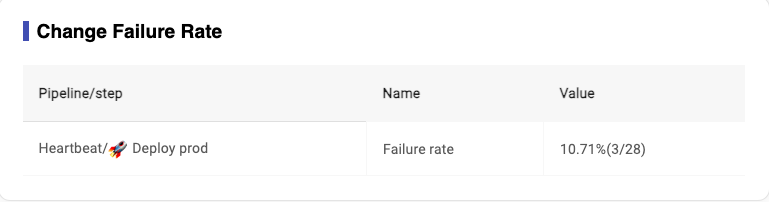

+- Definition for 'Dev Change Failure Rate': this metric differs from the official definition of change failure rate. In Heartbeat, we define it based on development: it is the percentage of failed pipelines among all pipelines, and you can select a different pipeline as your final step. A lower value means fewer failed pipelines.

+- Formula for 'Dev Change Failure Rate': the number of builds with (Status = failed) / [the number of builds with (Status = passed & Valid = true) + the number of builds with (Status = failed)]
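
A minimal sketch of the ratio, assuming the two build counts have already been collected. The names are hypothetical.

```java
final class DevChangeFailureRateSketch {

    // Dev Change Failure Rate: failed builds / (passed and valid builds + failed builds).
    static double devChangeFailureRate(int failedBuilds, int passedValidBuilds) {
        int total = failedBuilds + passedValidBuilds;
        // Assumption: report 0 when there are no qualifying builds.
        return total == 0 ? 0 : (double) failedBuilds / total;
    }
}
```

One failed build against three passed, valid builds gives 1 / (3 + 1) = 0.25.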

-\

-_Image 3-22,mean time to recovery

+\

+_Image 3-30,Dev Change Failure Rate Report_

+

+### 3.4.8 Dev Mean time to recovery

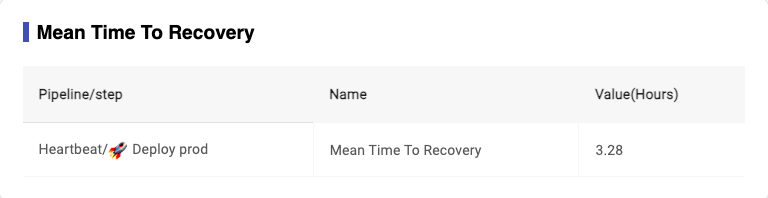

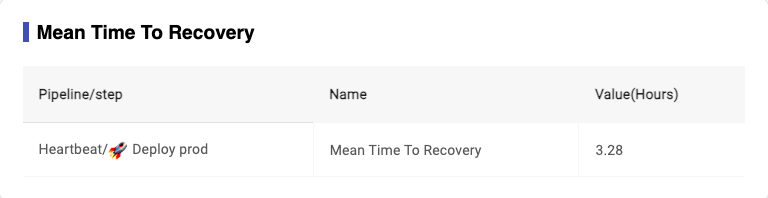

+- Definition for 'Dev Mean time to recovery': this metric also differs from the official definition of mean time to recovery. It comes from the pipeline and records how long it generally takes to recover when a pipeline fails. If this value is less than 8 hours, it means ‘red does not last overnight’, which means our repair speed is relatively good.

+- Formula for 'Dev Mean time to recovery': sum[the time difference from the first fail to the first pass, by deployment completed time] / the number of repairs
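
One way to read the formula is sketched below. It assumes the builds are sorted by deployment completed time and that a "repair" is the first passing build after one or more consecutive failures. The `Build` class and method names are hypothetical.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.List;

final class MeanTimeToRecoverySketch {

    // Hypothetical build model: pass/fail status and deployment completed time.
    static final class Build {
        final boolean passed;
        final Instant completedAt;

        Build(boolean passed, Instant completedAt) {
            this.passed = passed;
            this.completedAt = completedAt;
        }
    }

    // Sum of (first pass - first fail) over every failure streak, divided by the number of repairs.
    static Duration meanTimeToRecovery(List<Build> buildsInOrder) {
        Duration total = Duration.ZERO;
        int repairs = 0;
        Instant firstFail = null;
        for (Build build : buildsInOrder) {
            if (!build.passed && firstFail == null) {
                firstFail = build.completedAt; // start of a failure streak
            } else if (build.passed && firstFail != null) {
                total = total.plus(Duration.between(firstFail, build.completedAt));
                repairs++;
                firstFail = null; // streak repaired
            }
        }
        // Assumption: report zero when nothing was repaired in the period.
        return repairs == 0 ? Duration.ZERO : total.dividedBy(repairs);
    }
}
```

A failure streak that is still red at the end of the period is not counted as a repair in this sketch.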

+

+\

+_Image 3-31,mean time to recovery_

## 3.5 Export original data

-After generating the report, you can export the original data for your board and pipeline (Image 3-15). Users can click the “Export board data” or “Export pipeline data” button to export the original data.

+After generating the report, you can export the original data for your board and pipeline (Image 3-18). Users can click the “Export board data” or “Export pipeline data” button to export the original data.

### 3.5.1 Export board data

@@ -318,14 +420,14 @@ It will export a csv file for board data

#### 3.5.1.1 Done card exporting

-Export the all done tickets during the time period(Image 1)

+Export all done tickets during the time period (Image 3-18)

#### 3.5.1.2 Undone card exporting

-Export the latest updated 50 non-done tickets in your current active board. And it will order by heartbeat state and then last status change date(Image 3-16)

+Export the 50 most recently updated non-done tickets on your current active board, ordered by Heartbeat state and then by last status change date (Image 3-28)

-\

-_Image 3-22,Exported Board Data_

+\

+_Image 3-32,Exported Board Data_

**All columns for Jira board:**

|Column name |Description|

@@ -352,13 +454,14 @@ _Image 3-22,Exported Board Data_

|Block Days|Blocked days for each ticket|

|Review Days|--|

|Original Cycle Time: {Column Name}|The data for Jira board original data |

-

+|Rework: total - {rework state} | The total number of rework times |

+|Rework: from {subsequent status} | The number of rework times |

### 3.5.2 Export pipeline data

-It will export a csv file for pipeline data (image 3-17).

+It will export a csv file for pipeline data (image 3-29).

-\

-_Image 3-23,Exported Pipeline Data_

+\

+_Image 3-33,Exported Pipeline Data_

**All columns for pipeline data:**

|Column name |Description|

@@ -443,7 +546,7 @@ pnpm test

pnpm coverage

```

-## 6.1.4 How to run e2e tests locally

+## 6.1.4 How to run E2E tests locally

2. Start the backend service

@@ -459,12 +562,14 @@ cd HearBeat/frontend

pnpm start

```

-4. Run the e2e tests

+4. Run the E2E tests

```

cd HearBeat/frontend

-pnpm e2e

+pnpm run e2e:headed

```

+## 6.2 How to run backend

+Refer to [run backend](backend/README.md#1-how-to-start-backend-application)

# 7 How to trigger BuildKite Pipeline

@@ -491,9 +596,9 @@ git tag -d {tag name}

git push origin :refs/tags/{tag name}

```

-# 7 How to use

+# 8 How to use

-## 7.1 Docker-compose

+## 8.1 Docker-compose

First, create a `docker-compose.yml` file, and copy below code into the file.

@@ -523,7 +628,7 @@ Then, execute this command

docker-compose up -d frontend

```

-### 7.1.1 Customize story point field in Jira

+### 8.1.1 Customize story point field in Jira

Specifically, story point field can be indicated in `docker-compose.yml`. You can do it as below.

@@ -548,7 +653,7 @@ services:

restart: always

```

-### 7.1.2 Multiple instance deployment

+### 8.1.2 Multiple instance deployment

Specifically, if you want to run with multiple instances. You can do it with below docker compose file.

@@ -579,7 +684,7 @@ volumes:

file_volume:

```

-## 7.2 K8S

+## 8.2 K8S

First, create a `k8s-heartbeat.yml` file, and copy below code into the file.

@@ -634,7 +739,7 @@ spec:

apiVersion: v1

kind: Service

metadata:

- name: frontend

+ name: **frontend**

spec:

selector:

app: frontend

@@ -651,6 +756,50 @@ Then, execute this command

kubectl apply -f k8s-heartbeat.yml

```

-### 7.2.1 Multiple instance deployment

+### 8.2.1 Multiple instance deployment

You also can deploy Heartbeats in multiple instances using K8S through the following [documentation](https://au-heartbeat.github.io/Heartbeat/en/devops/how-to-deploy-heartbeat-in-multiple-instances-by-k8s/).

+

+# 9 Contribution

+

+We love your input! Please see our [contributing guide](contribution.md) to get started. Thank you 🙏 to all our contributors!

+

+# 10 Pipeline Strategy

+

+Heartbeat currently uses `GitHub Actions` and `BuildKite` to build and deploy the Heartbeat application.

+

+However, there are some constraints, such as dependencies between the pipelines.

+

+Committers should therefore keep the following flow in mind when a pipeline issue occurs.

+

+```mermaid

+ sequenceDiagram

+ actor Committer

+ participant GitHub_Actions as GitHub Actions

+ participant BuildKite

+

+ Committer ->> GitHub_Actions : Push code

+ Committer ->> BuildKite : Push code

+ loop 30s/40 times

+ BuildKite->> GitHub_Actions: Check the basic check(all check before 'deploy-infra' job) has been passed

+ GitHub_Actions -->> BuildKite: Basic check has passed?

+ alt Yes

+ BuildKite ->> BuildKite: Build and deploy e2e env

+ Note over BuildKite, GitHub_Actions: Some time passes

+ loop 30s/60 times

+ GitHub_Actions ->> BuildKite: Request to check if the e2e has been deployed

+ BuildKite -->> GitHub_Actions: e2e deployment status, if the e2e has been deployed?

+ alt Yes

+ GitHub_Actions ->> GitHub_Actions: Run e2e check on GitHub actions

+ Note over BuildKite, GitHub_Actions: Some time passes

+ GitHub_Actions -->> Committer: Response the pipeline result to committer

+ BuildKite -->> Committer: Response the pipeline result to committer

+ else No

+ GitHub_Actions -->> Committer: Break the pipeline

+ end

+ end

+ else No

+ BuildKite -->> Committer: Break the pipeline

+ end

+ end

+```
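
Both loops in the diagram follow the same bounded polling pattern: check a condition at a fixed interval and give up after a maximum number of attempts. A minimal sketch of that pattern is shown below. It is not the actual pipeline script; the class and method names are hypothetical.

```java
import java.util.function.BooleanSupplier;

final class PollingSketch {

    // Polls `check` up to maxAttempts times, sleeping intervalMillis between attempts.
    // Returns true as soon as the check passes, false if every attempt fails.
    static boolean pollUntil(BooleanSupplier check, int maxAttempts, long intervalMillis) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (check.getAsBoolean()) {
                return true;
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(intervalMillis);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // preserve the interrupt flag
                    return false;
                }
            }
        }
        return false;
    }
}
```

Under this sketch, the outer loop in the diagram would correspond to `pollUntil(check, 40, 30_000)` and the inner loop to `pollUntil(check, 60, 30_000)`; a `false` result maps to "Break the pipeline".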

diff --git a/backend/build.gradle b/backend/build.gradle

index 46692478e5..25fe3a9b70 100644

--- a/backend/build.gradle

+++ b/backend/build.gradle

@@ -3,9 +3,9 @@ plugins {

id 'jacoco'

id 'pmd'

id 'org.springframework.boot' version '3.1.9'

- id 'io.spring.dependency-management' version '1.1.0'

- id "io.spring.javaformat" version "0.0.38"

- id 'com.github.jk1.dependency-license-report' version '2.1'

+ id 'io.spring.dependency-management' version '1.1.4'

+ id "io.spring.javaformat" version "0.0.41"

+ id 'com.github.jk1.dependency-license-report' version '2.6'

id "org.sonarqube" version "4.4.1.3373"

}

@@ -29,7 +29,7 @@ dependencies {

implementation 'org.springframework.boot:spring-boot-starter-actuator'

implementation 'org.springframework.boot:spring-boot-starter-log4j2'

implementation 'org.springframework.boot:spring-boot-starter-validation'

- implementation 'org.springframework:spring-core:6.1.3'

+ implementation 'org.springframework:spring-core:6.1.5'

implementation("org.springframework.cloud:spring-cloud-starter-openfeign:4.0.2") {

exclude group: 'commons-fileupload', module: 'commons-fileupload'

}

@@ -41,22 +41,23 @@ dependencies {

implementation 'org.springframework.boot:spring-boot-starter-cache'

implementation 'org.ehcache:ehcache:3.10.8'

implementation 'javax.annotation:javax.annotation-api:1.3.2'

- implementation 'com.google.code.gson:gson:2.8.9'

+ implementation 'com.google.code.gson:gson:2.10.1'

testImplementation 'junit:junit:4.13.2'

- compileOnly 'org.projectlombok:lombok:1.18.26'

- annotationProcessor 'org.projectlombok:lombok:1.18.26'

+ compileOnly 'org.projectlombok:lombok:1.18.32'

+ annotationProcessor 'org.projectlombok:lombok:1.18.32'

testImplementation 'org.springframework.boot:spring-boot-starter-test'

- testImplementation 'org.junit.jupiter:junit-jupiter:5.9.2'

- testCompileOnly 'org.projectlombok:lombok:1.18.26'

- testAnnotationProcessor 'org.projectlombok:lombok:1.18.26'

- implementation 'com.opencsv:opencsv:5.5.2'

- implementation 'org.apache.commons:commons-text:1.10.0'

+ testImplementation 'org.junit.jupiter:junit-jupiter:5.10.2'

+ testCompileOnly 'org.projectlombok:lombok:1.18.32'

+ testAnnotationProcessor 'org.projectlombok:lombok:1.18.32'

+ implementation 'com.opencsv:opencsv:5.9'

+ implementation 'org.apache.commons:commons-text:1.11.0'

+ implementation 'org.awaitility:awaitility:3.1.6'

}

tasks.named('test') {

useJUnitPlatform()

testLogging {

- events "passed", "skipped", "failed"

+ events "skipped", "failed"

}

finalizedBy jacocoTestReport

}

@@ -72,6 +73,7 @@ sonar {

property "sonar.projectKey", "au-heartbeat-heartbeat-backend"

property "sonar.organization", "au-heartbeat"

property "sonar.host.url", "https://sonarcloud.io"

+ property "sonar.exclusions", "src/main/java/heartbeat/HeartbeatApplication.java,src/main/java/heartbeat/config/**,src/main/java/heartbeat/util/SystemUtil.java"

}

}

@@ -100,6 +102,36 @@ jacocoTestCoverageVerification {

violationRules {

rule {

limit {

+ counter = 'INSTRUCTION'

+ value = 'COVEREDRATIO'

+ minimum = 1.0

+ }

+ }

+ rule {

+ limit {

+ counter = 'LINE'

+ value = 'COVEREDRATIO'

+ minimum = 1.0

+ }

+ }

+ rule {

+ limit {

+ counter = 'METHOD'

+ value = 'COVEREDRATIO'

+ minimum = 1.0

+ }

+ }

+ rule {

+ limit {

+ counter = 'BRANCH'

+ value = 'COVEREDRATIO'

+ minimum = 0.90

+ }

+ }

+ rule {

+ limit {

+ counter = 'CLASS'

+ value = 'COVEREDRATIO'

minimum = 1.0

}

}

diff --git a/backend/gradle/wrapper/gradle-wrapper.jar b/backend/gradle/wrapper/gradle-wrapper.jar

index c1962a79e2..e6441136f3 100644

Binary files a/backend/gradle/wrapper/gradle-wrapper.jar and b/backend/gradle/wrapper/gradle-wrapper.jar differ

diff --git a/backend/gradle/wrapper/gradle-wrapper.properties b/backend/gradle/wrapper/gradle-wrapper.properties

index 37aef8d3f0..b82aa23a4f 100644

--- a/backend/gradle/wrapper/gradle-wrapper.properties

+++ b/backend/gradle/wrapper/gradle-wrapper.properties

@@ -1,6 +1,7 @@

distributionBase=GRADLE_USER_HOME

distributionPath=wrapper/dists

-distributionUrl=https\://services.gradle.org/distributions/gradle-8.1.1-bin.zip

+distributionUrl=https\://services.gradle.org/distributions/gradle-8.7-bin.zip

networkTimeout=10000

+validateDistributionUrl=true

zipStoreBase=GRADLE_USER_HOME

zipStorePath=wrapper/dists

diff --git a/backend/gradlew b/backend/gradlew

index aeb74cbb43..1aa94a4269 100755

--- a/backend/gradlew

+++ b/backend/gradlew

@@ -83,7 +83,8 @@ done

# This is normally unused

# shellcheck disable=SC2034

APP_BASE_NAME=${0##*/}

-APP_HOME=$( cd "${APP_HOME:-./}" && pwd -P ) || exit

+# Discard cd standard output in case $CDPATH is set (https://github.com/gradle/gradle/issues/25036)

+APP_HOME=$( cd "${APP_HOME:-./}" > /dev/null && pwd -P ) || exit

# Use the maximum available, or set MAX_FD != -1 to use that value.

MAX_FD=maximum

@@ -130,10 +131,13 @@ location of your Java installation."

fi

else

JAVACMD=java

- which java >/dev/null 2>&1 || die "ERROR: JAVA_HOME is not set and no 'java' command could be found in your PATH.

+ if ! command -v java >/dev/null 2>&1

+ then

+ die "ERROR: JAVA_HOME is not set and no 'java' command could be found in your PATH.

Please set the JAVA_HOME variable in your environment to match the

location of your Java installation."

+ fi

fi

# Increase the maximum file descriptors if we can.

@@ -141,7 +145,7 @@ if ! "$cygwin" && ! "$darwin" && ! "$nonstop" ; then

case $MAX_FD in #(

max*)

# In POSIX sh, ulimit -H is undefined. That's why the result is checked to see if it worked.

- # shellcheck disable=SC3045

+ # shellcheck disable=SC2039,SC3045

MAX_FD=$( ulimit -H -n ) ||

warn "Could not query maximum file descriptor limit"

esac

@@ -149,7 +153,7 @@ if ! "$cygwin" && ! "$darwin" && ! "$nonstop" ; then

'' | soft) :;; #(

*)

# In POSIX sh, ulimit -n is undefined. That's why the result is checked to see if it worked.

- # shellcheck disable=SC3045

+ # shellcheck disable=SC2039,SC3045

ulimit -n "$MAX_FD" ||

warn "Could not set maximum file descriptor limit to $MAX_FD"

esac

@@ -198,11 +202,11 @@ fi

# Add default JVM options here. You can also use JAVA_OPTS and GRADLE_OPTS to pass JVM options to this script.

DEFAULT_JVM_OPTS='"-Xmx64m" "-Xms64m"'

-# Collect all arguments for the java command;

-# * $DEFAULT_JVM_OPTS, $JAVA_OPTS, and $GRADLE_OPTS can contain fragments of

-# shell script including quotes and variable substitutions, so put them in

-# double quotes to make sure that they get re-expanded; and

-# * put everything else in single quotes, so that it's not re-expanded.

+# Collect all arguments for the java command:

+# * DEFAULT_JVM_OPTS, JAVA_OPTS, JAVA_OPTS, and optsEnvironmentVar are not allowed to contain shell fragments,

+# and any embedded shellness will be escaped.

+# * For example: A user cannot expect ${Hostname} to be expanded, as it is an environment variable and will be

+# treated as '${Hostname}' itself on the command line.

set -- \

"-Dorg.gradle.appname=$APP_BASE_NAME" \

diff --git a/backend/gradlew.bat b/backend/gradlew.bat

index 6689b85bee..7101f8e467 100644

--- a/backend/gradlew.bat

+++ b/backend/gradlew.bat

@@ -43,11 +43,11 @@ set JAVA_EXE=java.exe

%JAVA_EXE% -version >NUL 2>&1

if %ERRORLEVEL% equ 0 goto execute

-echo.

-echo ERROR: JAVA_HOME is not set and no 'java' command could be found in your PATH.

-echo.

-echo Please set the JAVA_HOME variable in your environment to match the

-echo location of your Java installation.

+echo. 1>&2

+echo ERROR: JAVA_HOME is not set and no 'java' command could be found in your PATH. 1>&2

+echo. 1>&2

+echo Please set the JAVA_HOME variable in your environment to match the 1>&2

+echo location of your Java installation. 1>&2

goto fail

@@ -57,11 +57,11 @@ set JAVA_EXE=%JAVA_HOME%/bin/java.exe

if exist "%JAVA_EXE%" goto execute

-echo.

-echo ERROR: JAVA_HOME is set to an invalid directory: %JAVA_HOME%

-echo.

-echo Please set the JAVA_HOME variable in your environment to match the

-echo location of your Java installation.

+echo. 1>&2

+echo ERROR: JAVA_HOME is set to an invalid directory: %JAVA_HOME% 1>&2

+echo. 1>&2

+echo Please set the JAVA_HOME variable in your environment to match the 1>&2

+echo location of your Java installation. 1>&2

goto fail

diff --git a/backend/src/main/java/heartbeat/client/BuildKiteFeignClient.java b/backend/src/main/java/heartbeat/client/BuildKiteFeignClient.java

index c129c531be..1d1a8fdd2f 100644

--- a/backend/src/main/java/heartbeat/client/BuildKiteFeignClient.java

+++ b/backend/src/main/java/heartbeat/client/BuildKiteFeignClient.java

@@ -3,11 +3,8 @@

import heartbeat.client.decoder.BuildKiteFeignClientDecoder;

import heartbeat.client.dto.pipeline.buildkite.BuildKiteBuildInfo;

import heartbeat.client.dto.pipeline.buildkite.BuildKiteOrganizationsInfo;

-import heartbeat.client.dto.pipeline.buildkite.BuildKiteTokenInfo;

import heartbeat.client.dto.pipeline.buildkite.BuildKitePipelineDTO;

-

-import java.util.List;

-

+import heartbeat.client.dto.pipeline.buildkite.BuildKiteTokenInfo;

import org.springframework.cache.annotation.Cacheable;

import org.springframework.cloud.openfeign.FeignClient;

import org.springframework.http.HttpStatus;

@@ -19,6 +16,8 @@

import org.springframework.web.bind.annotation.RequestParam;

import org.springframework.web.bind.annotation.ResponseStatus;

+import java.util.List;

+

@FeignClient(name = "buildKiteFeignClient", url = "${buildKite.url}", configuration = BuildKiteFeignClientDecoder.class)

public interface BuildKiteFeignClient {

@@ -32,10 +31,9 @@ public interface BuildKiteFeignClient {

@ResponseStatus(HttpStatus.OK)

List<BuildKiteOrganizationsInfo> getBuildKiteOrganizationsInfo(@RequestHeader("Authorization") String token);

- @Cacheable(cacheNames = "pipelineInfo", key = "#token+'-'+#organizationId+'-'+#page+'-'+#perPage")

@GetMapping(path = "v2/organizations/{organizationId}/pipelines?page={page}&per_page={perPage}")

@ResponseStatus(HttpStatus.OK)

- List<BuildKitePipelineDTO> getPipelineInfo(@RequestHeader("Authorization") String token,

+ ResponseEntity<List<BuildKitePipelineDTO>> getPipelineInfo(@RequestHeader("Authorization") String token,

@PathVariable String organizationId, @PathVariable String page, @PathVariable String perPage);

@GetMapping(path = "v2/organizations/{organizationId}/pipelines/{pipelineId}/builds",

diff --git a/backend/src/main/java/heartbeat/client/JiraFeignClient.java b/backend/src/main/java/heartbeat/client/JiraFeignClient.java

index 402f47008b..c9c8c24e64 100644

--- a/backend/src/main/java/heartbeat/client/JiraFeignClient.java

+++ b/backend/src/main/java/heartbeat/client/JiraFeignClient.java

@@ -51,4 +51,8 @@ CardHistoryResponseDTO getJiraCardHistoryByCount(URI baseUrl, @PathVariable Stri

@GetMapping(path = "rest/api/2/project/{projectIdOrKey}")

JiraBoardProject getProject(URI baseUrl, @PathVariable String projectIdOrKey, @RequestHeader String authorization);

+ // This api is solely used for site url checking

+ @GetMapping(path = "/rest/api/3/dashboard")

+ String getDashboard(URI baseUrl, @RequestHeader String authorization);

+

}

diff --git a/backend/src/main/java/heartbeat/client/decoder/BuildKiteFeignClientDecoder.java b/backend/src/main/java/heartbeat/client/decoder/BuildKiteFeignClientDecoder.java

index 8ec1c38811..c7706925ae 100644

--- a/backend/src/main/java/heartbeat/client/decoder/BuildKiteFeignClientDecoder.java

+++ b/backend/src/main/java/heartbeat/client/decoder/BuildKiteFeignClientDecoder.java

@@ -1,6 +1,5 @@

package heartbeat.client.decoder;

-import feign.FeignException;

import feign.Response;

import feign.codec.ErrorDecoder;

import heartbeat.util.ExceptionUtil;

@@ -12,12 +11,17 @@ public class BuildKiteFeignClientDecoder implements ErrorDecoder {

@Override

public Exception decode(String methodKey, Response response) {

+ String errorMessage = switch (methodKey) {

+ case "getTokenInfo" -> "Failed to get token info";

+ case "getBuildKiteOrganizationsInfo" -> "Failed to get BuildKite OrganizationsInfo info";

+ case "getPipelineInfo" -> "Failed to get pipeline info";

+ case "getPipelineSteps" -> "Failed to get pipeline steps";

+ case "getPipelineStepsInfo" -> "Failed to get pipeline steps info";

+ default -> "Failed to get buildkite info";

+ };

+

log.error("Failed to get BuildKite info_response status: {}, method key: {}", response.status(), methodKey);

HttpStatus statusCode = HttpStatus.valueOf(response.status());

- FeignException exception = FeignException.errorStatus(methodKey, response);

- String errorMessage = String.format("Failed to get BuildKite info_status: %s, reason: %s", statusCode,

- exception.getMessage());

-

return ExceptionUtil.handleCommonFeignClientException(statusCode, errorMessage);

}

diff --git a/backend/src/main/java/heartbeat/client/decoder/GitHubFeignClientDecoder.java b/backend/src/main/java/heartbeat/client/decoder/GitHubFeignClientDecoder.java

index cd0dbb235d..a71b1c8b57 100644

--- a/backend/src/main/java/heartbeat/client/decoder/GitHubFeignClientDecoder.java

+++ b/backend/src/main/java/heartbeat/client/decoder/GitHubFeignClientDecoder.java

@@ -1,6 +1,5 @@

package heartbeat.client.decoder;

-import feign.FeignException;

import feign.Response;

import feign.codec.ErrorDecoder;

import heartbeat.util.ExceptionUtil;

@@ -12,13 +11,18 @@ public class GitHubFeignClientDecoder implements ErrorDecoder {

@Override

public Exception decode(String methodKey, Response response) {

+ String errorMessage = switch (methodKey) {

+ case "verifyToken" -> "Failed to verify token";

+ case "verifyCanReadTargetBranch" -> "Failed to verify canRead target branch";

+ case "getCommitInfo" -> "Failed to get commit info";

+ case "getPullRequestCommitInfo" -> "Failed to get pull request commit info";

+ case "getPullRequestListInfo" -> "Failed to get pull request list info";

+ default -> "Failed to get github info";

+ };

+

log.error("Failed to get GitHub info_response status: {}, method key: {}", response.status(), methodKey);

HttpStatus statusCode = HttpStatus.valueOf(response.status());

- FeignException exception = FeignException.errorStatus(methodKey, response);

- String errorMessage = String.format("Failed to get GitHub info_status: %s, reason: %s", statusCode,

- exception.getMessage());

return ExceptionUtil.handleCommonFeignClientException(statusCode, errorMessage);

-

}

}

diff --git a/backend/src/main/java/heartbeat/client/decoder/JiraFeignClientDecoder.java b/backend/src/main/java/heartbeat/client/decoder/JiraFeignClientDecoder.java

index a858ad4b97..8af55eed41 100644

--- a/backend/src/main/java/heartbeat/client/decoder/JiraFeignClientDecoder.java

+++ b/backend/src/main/java/heartbeat/client/decoder/JiraFeignClientDecoder.java

@@ -1,6 +1,5 @@

package heartbeat.client.decoder;

-import feign.FeignException;

import feign.Response;

import feign.codec.ErrorDecoder;

import heartbeat.util.ExceptionUtil;

@@ -12,11 +11,19 @@ public class JiraFeignClientDecoder implements ErrorDecoder {

@Override

public Exception decode(String methodKey, Response response) {

+ String errorMessage = switch (methodKey) {

+ case "getJiraBoardConfiguration" -> "Failed to get jira board configuration";

+ case "getColumnStatusCategory" -> "Failed to get column status category";

+ case "getJiraCards" -> "Failed to get jira cards";

+ case "getJiraCardHistoryByCount" -> "Failed to get jira card history by count";

+ case "getTargetField" -> "Failed to get target field";

+ case "getBoard" -> "Failed to get board";

+ case "getProject" -> "Failed to get project";

+ default -> "Failed to get jira info";

+ };

+

log.error("Failed to get Jira info_response status: {}, method key: {}", response.status(), methodKey);

HttpStatus statusCode = HttpStatus.valueOf(response.status());

- FeignException exception = FeignException.errorStatus(methodKey, response);

- String errorMessage = String.format("Failed to get Jira info_status: %s, reason: %s", statusCode,

- exception.getMessage());

return ExceptionUtil.handleCommonFeignClientException(statusCode, errorMessage);

}

diff --git a/backend/src/main/java/heartbeat/client/dto/board/jira/HistoryDetail.java b/backend/src/main/java/heartbeat/client/dto/board/jira/HistoryDetail.java

index 2ce8c78b28..bed9f960b1 100644

--- a/backend/src/main/java/heartbeat/client/dto/board/jira/HistoryDetail.java

+++ b/backend/src/main/java/heartbeat/client/dto/board/jira/HistoryDetail.java

@@ -22,6 +22,8 @@ public class HistoryDetail implements Serializable {

private Actor actor;

+ private String fieldDisplayName;

+

@Getter

@Setter

@Builder

diff --git a/backend/src/main/java/heartbeat/client/dto/codebase/github/AuthorOuter.java b/backend/src/main/java/heartbeat/client/dto/codebase/github/AuthorOuter.java

deleted file mode 100644

index 566a81519c..0000000000

--- a/backend/src/main/java/heartbeat/client/dto/codebase/github/AuthorOuter.java

+++ /dev/null

@@ -1,66 +0,0 @@

-package heartbeat.client.dto.codebase.github;

-

-import com.fasterxml.jackson.annotation.JsonProperty;

-import lombok.AllArgsConstructor;

-import lombok.Builder;

-import lombok.Data;

-import lombok.NoArgsConstructor;

-

-import java.io.Serializable;

-

-@Data

-@Builder

-@NoArgsConstructor

-@AllArgsConstructor

-public class AuthorOuter implements Serializable {

-

- private String login;

-

- private String id;

-

- @JsonProperty("node_id")

- private String nodeId;

-

- @JsonProperty("avatar_url")

- private String avatarUrl;

-

- @JsonProperty("gravatar_id")

- private String gravatarId;

-

- private String url;

-

- @JsonProperty("html_url")

- private String htmlUrl;

-

- @JsonProperty("followers_url")

- private String followersUrl;

-

- @JsonProperty("following_url")

- private String followingUrl;

-

- @JsonProperty("gists_url")

- private String gistsUrl;

-

- @JsonProperty("starred_url")

- private String starredUrl;

-

- @JsonProperty("subscriptions_url")

- private String subscriptionsUrl;

-

- @JsonProperty("organizations_url")

- private String organizationsUrl;

-

- @JsonProperty("repos_url")

- private String reposUrl;

-

- @JsonProperty("events_url")

- private String eventsUrl;

-

- @JsonProperty("received_events_url")

- private String receivedEventsUrl;

-

- private String type;

-

- private Boolean siteAdmin;

-

-}

diff --git a/backend/src/main/java/heartbeat/client/dto/codebase/github/Base.java b/backend/src/main/java/heartbeat/client/dto/codebase/github/Base.java

deleted file mode 100644

index e2bc62608b..0000000000

--- a/backend/src/main/java/heartbeat/client/dto/codebase/github/Base.java

+++ /dev/null

@@ -1,26 +0,0 @@

-package heartbeat.client.dto.codebase.github;

-

-import lombok.AllArgsConstructor;

-import lombok.Builder;

-import lombok.Data;

-import lombok.NoArgsConstructor;

-

-import java.io.Serializable;

-

-@Data

-@Builder

-@NoArgsConstructor

-@AllArgsConstructor

-public class Base implements Serializable {

-

- private String label;

-

- private String ref;

-

- private String sha;

-

- private User user;

-

- private Repo repo;

-

-}

diff --git a/backend/src/main/java/heartbeat/client/dto/codebase/github/Comment.java b/backend/src/main/java/heartbeat/client/dto/codebase/github/Comment.java

deleted file mode 100644

index 84d4dfbfcb..0000000000

--- a/backend/src/main/java/heartbeat/client/dto/codebase/github/Comment.java

+++ /dev/null

@@ -1,18 +0,0 @@

-package heartbeat.client.dto.codebase.github;

-

-import lombok.AllArgsConstructor;

-import lombok.Builder;

-import lombok.Data;

-import lombok.NoArgsConstructor;

-

-import java.io.Serializable;

-

-@Data

-@Builder

-@NoArgsConstructor

-@AllArgsConstructor

-public class Comment implements Serializable {

-

- private String href;

-

-}

diff --git a/backend/src/main/java/heartbeat/client/dto/codebase/github/Commits.java b/backend/src/main/java/heartbeat/client/dto/codebase/github/Commits.java

deleted file mode 100644

index 6a6087d8fc..0000000000

--- a/backend/src/main/java/heartbeat/client/dto/codebase/github/Commits.java

+++ /dev/null

@@ -1,18 +0,0 @@

-package heartbeat.client.dto.codebase.github;

-

-import lombok.AllArgsConstructor;

-import lombok.Builder;

-import lombok.Data;

-import lombok.NoArgsConstructor;

-

-import java.io.Serializable;

-

-@Data

-@Builder

-@NoArgsConstructor

-@AllArgsConstructor

-public class Commits implements Serializable {

-

- private String href;

-

-}

diff --git a/backend/src/main/java/heartbeat/client/dto/codebase/github/CommitterOuter.java b/backend/src/main/java/heartbeat/client/dto/codebase/github/CommitterOuter.java

deleted file mode 100644

index 23eb7d5cef..0000000000

--- a/backend/src/main/java/heartbeat/client/dto/codebase/github/CommitterOuter.java

+++ /dev/null

@@ -1,67 +0,0 @@

-package heartbeat.client.dto.codebase.github;

-

-import com.fasterxml.jackson.annotation.JsonProperty;

-import lombok.AllArgsConstructor;

-import lombok.Builder;

-import lombok.Data;

-import lombok.NoArgsConstructor;

-

-import java.io.Serializable;

-

-@Data

-@Builder

-@NoArgsConstructor

-@AllArgsConstructor

-public class CommitterOuter implements Serializable {

-

- private String login;

-

- private String id;

-

- @JsonProperty("node_id")

- private String nodeId;

-

- @JsonProperty("avatar_url")

- private String avatarUrl;

-

- @JsonProperty("gravatar_id")

- private String gravatarId;

-

- private String url;

-

- @JsonProperty("html_url")

- private String htmlUrl;

-

- @JsonProperty("followers_url")

- private String followersUrl;

-

- @JsonProperty("following_url")

- private String followingUrl;

-

- @JsonProperty("gists_url")

- private String gistsUrl;

-

- @JsonProperty("starred_url")

- private String starredUrl;

-

- @JsonProperty("subscriptions_url")

- private String subscriptionsUrl;

-

- @JsonProperty("organizations_url")

- private String organizationsUrl;

-

- @JsonProperty("repos_url")

- private String reposUrl;

-

- @JsonProperty("events_url")

- private String eventsUrl;

-

- @JsonProperty("received_events_url")

- private String receivedEventsUrl;

-

- private String type;

-

- @JsonProperty("site_admin")

- private Boolean siteAdmin;

-

-}

diff --git a/backend/src/main/java/heartbeat/client/dto/codebase/github/File.java b/backend/src/main/java/heartbeat/client/dto/codebase/github/File.java

deleted file mode 100644

index 5279069781..0000000000

--- a/backend/src/main/java/heartbeat/client/dto/codebase/github/File.java

+++ /dev/null

@@ -1,40 +0,0 @@

-package heartbeat.client.dto.codebase.github;

-

-import com.fasterxml.jackson.annotation.JsonProperty;

-import lombok.AllArgsConstructor;

-import lombok.Builder;

-import lombok.Data;

-import lombok.NoArgsConstructor;

-

-import java.io.Serializable;

-

-@Data

-@Builder

-@NoArgsConstructor

-@AllArgsConstructor

-public class File implements Serializable {

-

- private String sha;

-

- private String filename;

-

- private String status;

-

- private Integer additions;

-

- private Integer deletions;

-

- private Integer changes;

-

- @JsonProperty("blob_url")

- private String blobUrl;

-

- @JsonProperty("raw_url")

- private String rawUrl;

-

- @JsonProperty("contents_url")

- private String contentsUrl;

-

- private String patch;

-

-}

diff --git a/backend/src/main/java/heartbeat/client/dto/codebase/github/GitHubPull.java b/backend/src/main/java/heartbeat/client/dto/codebase/github/GitHubPull.java

deleted file mode 100644

index 5ff0372e2b..0000000000

--- a/backend/src/main/java/heartbeat/client/dto/codebase/github/GitHubPull.java

+++ /dev/null

@@ -1,20 +0,0 @@

-package heartbeat.client.dto.codebase.github;

-

-import lombok.AllArgsConstructor;

-import lombok.Builder;

-import lombok.Data;

-import lombok.NoArgsConstructor;

-

-@Data

-@Builder

-@NoArgsConstructor

-@AllArgsConstructor

-public class GitHubPull {

-

- private String createdAt;

-

- private String mergedAt;

-

- private Integer number;

-

-}

diff --git a/backend/src/main/java/heartbeat/client/dto/codebase/github/Head.java b/backend/src/main/java/heartbeat/client/dto/codebase/github/Head.java

deleted file mode 100644

index dab44a8da7..0000000000

--- a/backend/src/main/java/heartbeat/client/dto/codebase/github/Head.java

+++ /dev/null

@@ -1,26 +0,0 @@

-package heartbeat.client.dto.codebase.github;

-

-import lombok.AllArgsConstructor;

-import lombok.Builder;

-import lombok.Data;

-import lombok.NoArgsConstructor;

-

-import java.io.Serializable;

-

-@Data

-@Builder

-@NoArgsConstructor

-@AllArgsConstructor

-public class Head implements Serializable {

-

- private String label;

-

- private String ref;

-

- private String sha;

-

- private User user;

-

- private Repo repo;

-

-}

diff --git a/backend/src/main/java/heartbeat/client/dto/codebase/github/Html.java b/backend/src/main/java/heartbeat/client/dto/codebase/github/Html.java

deleted file mode 100644

index c9f7b0d42c..0000000000

--- a/backend/src/main/java/heartbeat/client/dto/codebase/github/Html.java

+++ /dev/null

@@ -1,18 +0,0 @@

-package heartbeat.client.dto.codebase.github;

-

-import lombok.AllArgsConstructor;

-import lombok.Builder;

-import lombok.Data;

-import lombok.NoArgsConstructor;

-

-import java.io.Serializable;

-

-@Data

-@Builder

-@NoArgsConstructor

-@AllArgsConstructor

-public class Html implements Serializable {

-

- private String href;

-

-}

diff --git a/backend/src/main/java/heartbeat/client/dto/codebase/github/Issue.java b/backend/src/main/java/heartbeat/client/dto/codebase/github/Issue.java

deleted file mode 100644

index b935061bb3..0000000000

--- a/backend/src/main/java/heartbeat/client/dto/codebase/github/Issue.java

+++ /dev/null

@@ -1,18 +0,0 @@

-package heartbeat.client.dto.codebase.github;

-

-import lombok.AllArgsConstructor;

-import lombok.Builder;

-import lombok.Data;

-import lombok.NoArgsConstructor;

-

-import java.io.Serializable;

-

-@Data

-@Builder

-@NoArgsConstructor

-@AllArgsConstructor

-public class Issue implements Serializable {

-

- private String href;

-

-}

diff --git a/backend/src/main/java/heartbeat/client/dto/codebase/github/License.java b/backend/src/main/java/heartbeat/client/dto/codebase/github/License.java

deleted file mode 100644

index 46ce69b932..0000000000

--- a/backend/src/main/java/heartbeat/client/dto/codebase/github/License.java

+++ /dev/null

@@ -1,29 +0,0 @@

-package heartbeat.client.dto.codebase.github;

-

-import com.fasterxml.jackson.annotation.JsonProperty;

-import lombok.AllArgsConstructor;

-import lombok.Builder;

-import lombok.Data;

-import lombok.NoArgsConstructor;

-

-import java.io.Serializable;

-

-@Data

-@Builder

-@NoArgsConstructor

-@AllArgsConstructor

-public class License implements Serializable {

-

- private String key;

-

- private String name;

-

- @JsonProperty("spdx_id")

- private String spdxId;

-

- private String url;

-

- @JsonProperty("node_id")

- private String nodeId;

-

-}

diff --git a/backend/src/main/java/heartbeat/client/dto/codebase/github/LinkCollection.java b/backend/src/main/java/heartbeat/client/dto/codebase/github/LinkCollection.java

deleted file mode 100644

index 5caf031822..0000000000

--- a/backend/src/main/java/heartbeat/client/dto/codebase/github/LinkCollection.java

+++ /dev/null

@@ -1,35 +0,0 @@

-package heartbeat.client.dto.codebase.github;

-

-import com.fasterxml.jackson.annotation.JsonProperty;

-import lombok.AllArgsConstructor;

-import lombok.Builder;

-import lombok.Data;

-import lombok.NoArgsConstructor;

-

-import java.io.Serializable;

-

-@Data

-@Builder

-@NoArgsConstructor

-@AllArgsConstructor

-public class LinkCollection implements Serializable {

-

- private Self self;

-

- private Html html;

-

- private Issue issue;

-

- private Comment comment;

-

- @JsonProperty("review_comments")

- private ReviewComments reviewComments;

-

- @JsonProperty("review_comment")

- private ReviewComment reviewComment;

-

- private Commits commits;

-

- private Status status;

-

-}

diff --git a/backend/src/main/java/heartbeat/client/dto/codebase/github/Owner.java b/backend/src/main/java/heartbeat/client/dto/codebase/github/Owner.java

deleted file mode 100644

index 24fd8a1a92..0000000000

--- a/backend/src/main/java/heartbeat/client/dto/codebase/github/Owner.java

+++ /dev/null

@@ -1,67 +0,0 @@

-package heartbeat.client.dto.codebase.github;

-

-import com.fasterxml.jackson.annotation.JsonProperty;

-import lombok.AllArgsConstructor;

-import lombok.Builder;

-import lombok.Data;

-import lombok.NoArgsConstructor;

-

-import java.io.Serializable;

-

-@Data

-@Builder

-@NoArgsConstructor

-@AllArgsConstructor

-public class Owner implements Serializable {

-

- private String login;

-

- private Integer id;

-

- @JsonProperty("node_id")

- private String nodeId;

-

- @JsonProperty("avatar_url")

- private String avatarUrl;

-

- @JsonProperty("gravatar_id")

- private String gravatarId;

-

- private String url;

-

- @JsonProperty("html_url")

- private String htmlUrl;

-

- @JsonProperty("followers_url")

- private String followersUrl;

-

- @JsonProperty("following_url")

- private String followingUrl;