diff --git a/README.md b/README.md

index d409b3fdeadf..02908db0fd18 100755

--- a/README.md

+++ b/README.md

@@ -6,36 +6,43 @@

This repository represents Ultralytics open-source research into future object detection methods, and incorporates lessons learned and best practices evolved over thousands of hours of training and evolution on anonymized client datasets. **All code and models are under active development, and are subject to modification or deletion without notice.** Use at your own risk.

-

+

+

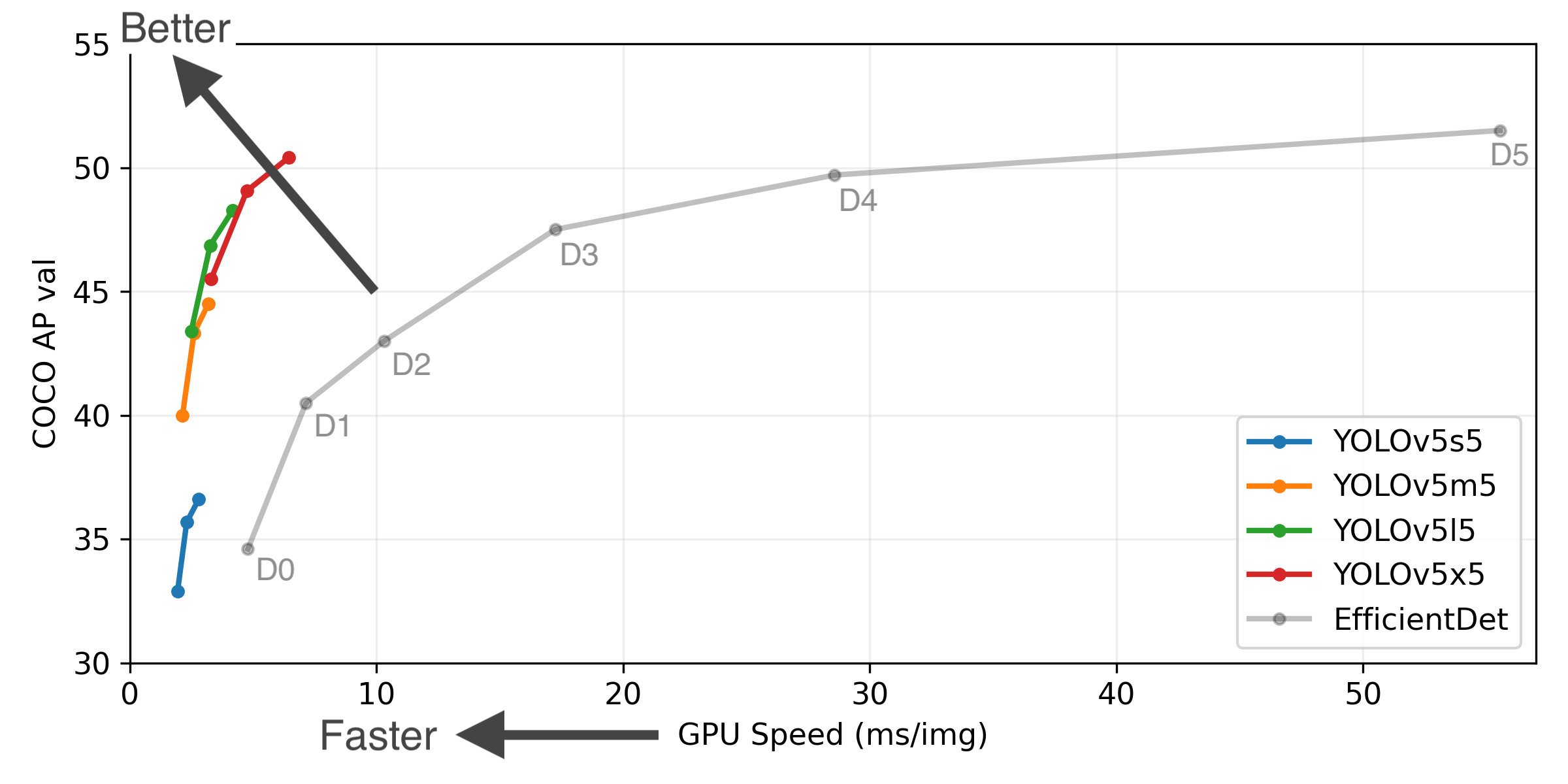

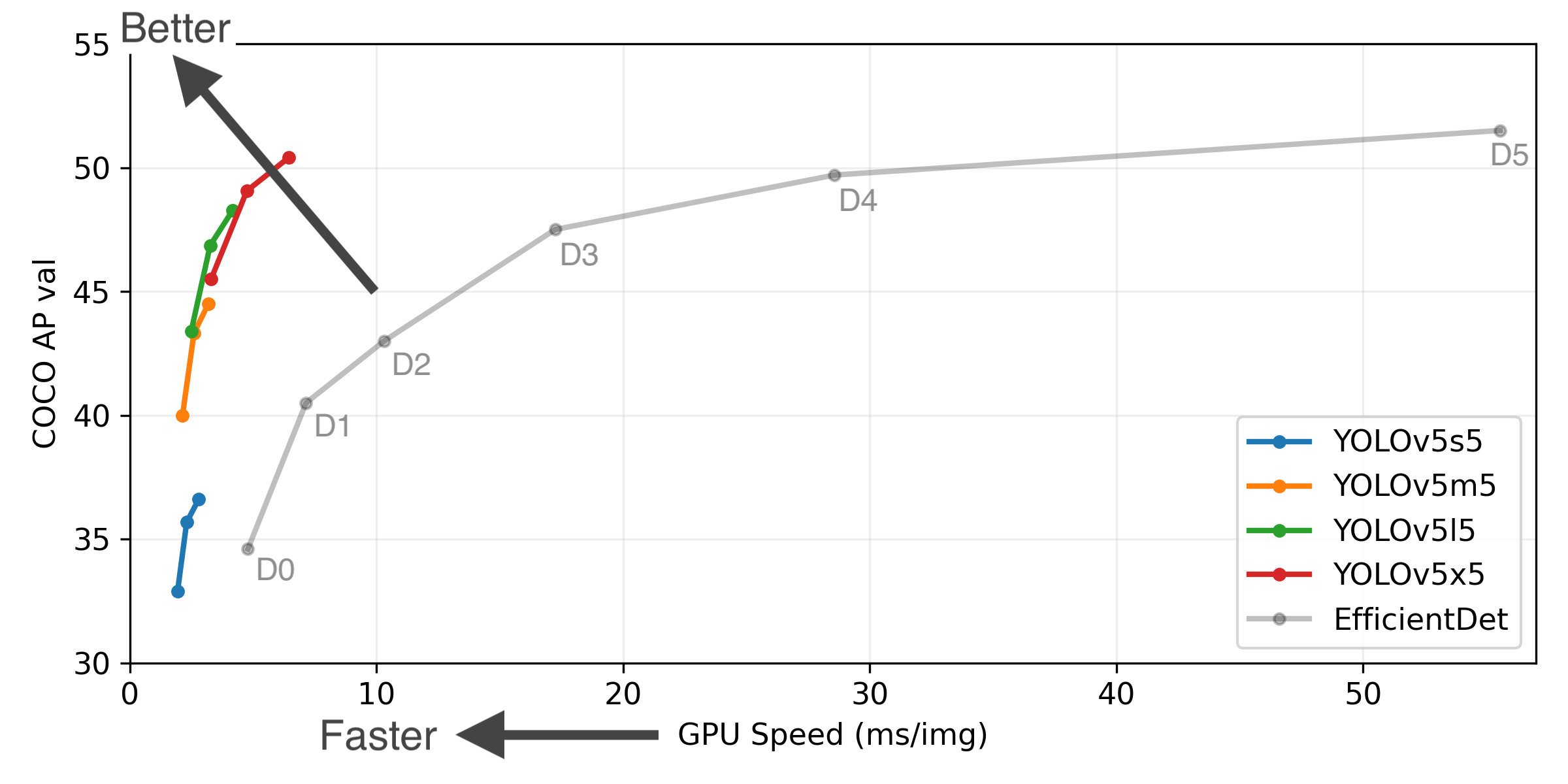

+ YOLOv5-P5 640 Figure (click to expand)

+

+

+

Figure Notes (click to expand)

* GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS.

* EfficientDet data from [google/automl](https://github.com/google/automl) at batch size 8.

+ * **Reproduce** by `python test.py --task study --data coco.yaml --iou 0.7 --weights yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt`

+- **April 11, 2021**: [v5.0 release](https://github.com/ultralytics/yolov5/releases/tag/v5.0): YOLOv5-P6 1280 models, [AWS](https://github.com/ultralytics/yolov5/wiki/AWS-Quickstart), [Supervise.ly](https://github.com/ultralytics/yolov5/issues/2518) and [YouTube](https://github.com/ultralytics/yolov5/pull/2752) integrations.

- **January 5, 2021**: [v4.0 release](https://github.com/ultralytics/yolov5/releases/tag/v4.0): nn.SiLU() activations, [Weights & Biases](https://wandb.ai/site?utm_campaign=repo_yolo_readme) logging, [PyTorch Hub](https://pytorch.org/hub/ultralytics_yolov5/) integration.

- **August 13, 2020**: [v3.0 release](https://github.com/ultralytics/yolov5/releases/tag/v3.0): nn.Hardswish() activations, data autodownload, native AMP.

- **July 23, 2020**: [v2.0 release](https://github.com/ultralytics/yolov5/releases/tag/v2.0): improved model definition, training and mAP.

-- **June 22, 2020**: [PANet](https://arxiv.org/abs/1803.01534) updates: new heads, reduced parameters, improved speed and mAP [364fcfd](https://github.com/ultralytics/yolov5/commit/364fcfd7dba53f46edd4f04c037a039c0a287972).

-- **June 19, 2020**: [FP16](https://pytorch.org/docs/stable/nn.html#torch.nn.Module.half) as new default for smaller checkpoints and faster inference [d4c6674](https://github.com/ultralytics/yolov5/commit/d4c6674c98e19df4c40e33a777610a18d1961145).

## Pretrained Checkpoints

-| Model | size | AP<sup>val</sup> | AP<sup>test</sup> | AP<sub>50</sub> | Speed<sub>V100</sub> | FPS<sub>V100</sub> || params | GFLOPS |

-|---------- |------ |------ |------ |------ | -------- | ------| ------ |------ | :------: |

-| [YOLOv5s](https://github.com/ultralytics/yolov5/releases) |640 |36.8 |36.8 |55.6 |**2.2ms** |**455** ||7.3M |17.0

-| [YOLOv5m](https://github.com/ultralytics/yolov5/releases) |640 |44.5 |44.5 |63.1 |2.9ms |345 ||21.4M |51.3

-| [YOLOv5l](https://github.com/ultralytics/yolov5/releases) |640 |48.1 |48.1 |66.4 |3.8ms |264 ||47.0M |115.4

-| [YOLOv5x](https://github.com/ultralytics/yolov5/releases) |640 |**50.1** |**50.1** |**68.7** |6.0ms |167 ||87.7M |218.8

-| | | | | | | || |

-| [YOLOv5x](https://github.com/ultralytics/yolov5/releases) + TTA |832 |**51.9** |**51.9** |**69.6** |24.9ms |40 ||87.7M |1005.3

-

-

+[assets]: https://github.com/ultralytics/yolov5/releases

+

+Model |size<br>(pixels) |mAP<sup>val</sup><br>0.5:0.95 |mAP<sup>test</sup><br>0.5:0.95 |mAP<sup>val</sup><br>0.5 |Speed<br>V100 (ms) | |params<br>(M) |FLOPS<br>640 (B)

+--- |--- |--- |--- |--- |--- |---|--- |---

+[YOLOv5s][assets] |640 |36.7 |36.7 |55.4 |**2.0** | |7.3 |17.0

+[YOLOv5m][assets] |640 |44.5 |44.5 |63.3 |2.7 | |21.4 |51.3

+[YOLOv5l][assets] |640 |48.2 |48.2 |66.9 |3.8 | |47.0 |115.4

+[YOLOv5x][assets] |640 |**50.4** |**50.4** |**68.8** |6.1 | |87.7 |218.8

+| | | | | | || |

+[YOLOv5s6][assets] |1280 |43.3 |43.3 |61.9 |**4.3** | |12.7 |17.4

+[YOLOv5m6][assets] |1280 |50.5 |50.5 |68.7 |8.4 | |35.9 |52.4

+[YOLOv5l6][assets] |1280 |53.4 |53.4 |71.1 |12.3 | |77.2 |117.7

+[YOLOv5x6][assets] |1280 |**54.4** |**54.4** |**72.0** |22.4 | |141.8 |222.9

+| | | | | | || |

+[YOLOv5x6][assets] TTA |1280 |**55.0** |**55.0** |**72.0** |70.8 | |- |-

Table Notes (click to expand)

@@ -44,7 +51,7 @@ This repository represents Ultralytics open-source research into future object d

* AP values are for single-model single-scale unless otherwise noted. **Reproduce mAP** by `python test.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65`

* Speed<sub>GPU</sub> averaged over 5000 COCO val2017 images using a GCP [n1-standard-16](https://cloud.google.com/compute/docs/machine-types#n1_standard_machine_types) V100 instance, and includes FP16 inference, postprocessing and NMS. **Reproduce speed** by `python test.py --data coco.yaml --img 640 --conf 0.25 --iou 0.45`

* All checkpoints are trained to 300 epochs with default settings and hyperparameters (no autoaugmentation).

- * Test Time Augmentation ([TTA](https://github.com/ultralytics/yolov5/issues/303)) includes reflection and scale augmentation. **Reproduce TTA** by `python test.py --data coco.yaml --img 832 --iou 0.65 --augment`

+ * Test Time Augmentation ([TTA](https://github.com/ultralytics/yolov5/issues/303)) includes reflection and scale augmentation. **Reproduce TTA** by `python test.py --data coco.yaml --img 1536 --iou 0.7 --augment`

@@ -85,7 +92,7 @@ YOLOv5 may be run in any of the following up-to-date verified environments (with

## Inference

-detect.py runs inference on a variety of sources, downloading models automatically from the [latest YOLOv5 release](https://github.com/ultralytics/yolov5/releases) and saving results to `runs/detect`.

+`detect.py` runs inference on a variety of sources, downloading models automatically from the [latest YOLOv5 release](https://github.com/ultralytics/yolov5/releases) and saving results to `runs/detect`.

```bash

$ python detect.py --source 0 # webcam

file.jpg # image

diff --git a/hubconf.py b/hubconf.py

index 0f9aa150a34e..d26db45695de 100644

--- a/hubconf.py

+++ b/hubconf.py

@@ -55,84 +55,68 @@ def create(name, pretrained, channels, classes, autoshape):

         raise Exception(s) from e

-def yolov5s(pretrained=True, channels=3, classes=80, autoshape=True):
-    """YOLOv5-small model from https://github.com/ultralytics/yolov5
+def custom(path_or_model='path/to/model.pt', autoshape=True):
+    """YOLOv5-custom model https://github.com/ultralytics/yolov5
-    Arguments:
-        pretrained (bool): load pretrained weights into the model, default=False
-        channels (int): number of input channels, default=3
-        classes (int): number of model classes, default=80
+    Arguments (3 options):
+        path_or_model (str): 'path/to/model.pt'
+        path_or_model (dict): torch.load('path/to/model.pt')
+        path_or_model (nn.Module): torch.load('path/to/model.pt')['model']
     Returns:
         pytorch model
     """
-    return create('yolov5s', pretrained, channels, classes, autoshape)
+    model = torch.load(path_or_model) if isinstance(path_or_model, str) else path_or_model  # load checkpoint
+    if isinstance(model, dict):
+        model = model['ema' if model.get('ema') else 'model']  # load model
+    hub_model = Model(model.yaml).to(next(model.parameters()).device)  # create
+    hub_model.load_state_dict(model.float().state_dict())  # load state_dict
+    hub_model.names = model.names  # class names
+    if autoshape:
+        hub_model = hub_model.autoshape()  # for file/URI/PIL/cv2/np inputs and NMS
+    device = select_device('0' if torch.cuda.is_available() else 'cpu')  # default to GPU if available
+    return hub_model.to(device)

-def yolov5m(pretrained=True, channels=3, classes=80, autoshape=True):
-    """YOLOv5-medium model from https://github.com/ultralytics/yolov5
-    Arguments:
-        pretrained (bool): load pretrained weights into the model, default=False
-        channels (int): number of input channels, default=3
-        classes (int): number of model classes, default=80
+def yolov5s(pretrained=True, channels=3, classes=80, autoshape=True):
+    # YOLOv5-small model https://github.com/ultralytics/yolov5
+    return create('yolov5s', pretrained, channels, classes, autoshape)
-    Returns:
-        pytorch model
-    """
+
+def yolov5m(pretrained=True, channels=3, classes=80, autoshape=True):
+    # YOLOv5-medium model https://github.com/ultralytics/yolov5
     return create('yolov5m', pretrained, channels, classes, autoshape)

 def yolov5l(pretrained=True, channels=3, classes=80, autoshape=True):
-    """YOLOv5-large model from https://github.com/ultralytics/yolov5
-
-    Arguments:
-        pretrained (bool): load pretrained weights into the model, default=False
-        channels (int): number of input channels, default=3
-        classes (int): number of model classes, default=80
-
-    Returns:
-        pytorch model
-    """
+    # YOLOv5-large model https://github.com/ultralytics/yolov5
     return create('yolov5l', pretrained, channels, classes, autoshape)

 def yolov5x(pretrained=True, channels=3, classes=80, autoshape=True):
-    """YOLOv5-xlarge model from https://github.com/ultralytics/yolov5
+    # YOLOv5-xlarge model https://github.com/ultralytics/yolov5
+    return create('yolov5x', pretrained, channels, classes, autoshape)
-    Arguments:
-        pretrained (bool): load pretrained weights into the model, default=False
-        channels (int): number of input channels, default=3
-        classes (int): number of model classes, default=80
-    Returns:
-        pytorch model
-    """
-    return create('yolov5x', pretrained, channels, classes, autoshape)
+def yolov5s6(pretrained=True, channels=3, classes=80, autoshape=True):
+    # YOLOv5-small model https://github.com/ultralytics/yolov5
+    return create('yolov5s6', pretrained, channels, classes, autoshape)
-def custom(path_or_model='path/to/model.pt', autoshape=True):
-    """YOLOv5-custom model from https://github.com/ultralytics/yolov5
+def yolov5m6(pretrained=True, channels=3, classes=80, autoshape=True):
+    # YOLOv5-medium model https://github.com/ultralytics/yolov5
+    return create('yolov5m6', pretrained, channels, classes, autoshape)
-    Arguments (3 options):
-        path_or_model (str): 'path/to/model.pt'
-        path_or_model (dict): torch.load('path/to/model.pt')
-        path_or_model (nn.Module): torch.load('path/to/model.pt')['model']
-    Returns:
-        pytorch model
-    """
-    model = torch.load(path_or_model) if isinstance(path_or_model, str) else path_or_model  # load checkpoint
-    if isinstance(model, dict):
-        model = model['ema' if model.get('ema') else 'model']  # load model
+def yolov5l6(pretrained=True, channels=3, classes=80, autoshape=True):
+    # YOLOv5-large model https://github.com/ultralytics/yolov5
+    return create('yolov5l6', pretrained, channels, classes, autoshape)
-    hub_model = Model(model.yaml).to(next(model.parameters()).device)  # create
-    hub_model.load_state_dict(model.float().state_dict())  # load state_dict
-    hub_model.names = model.names  # class names
-    if autoshape:
-        hub_model = hub_model.autoshape()  # for file/URI/PIL/cv2/np inputs and NMS
-    device = select_device('0' if torch.cuda.is_available() else 'cpu')  # default to GPU if available
-    return hub_model.to(device)
+
+def yolov5x6(pretrained=True, channels=3, classes=80, autoshape=True):
+    # YOLOv5-xlarge model https://github.com/ultralytics/yolov5
+    return create('yolov5x6', pretrained, channels, classes, autoshape)

if __name__ == '__main__':
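The `custom()` entry point in this `hubconf.py` accepts three input forms and, for a checkpoint dict, prefers the `'ema'` weights over `'model'` whenever the `'ema'` entry is present and truthy. A minimal sketch of just that resolution step, written so it runs without PyTorch: `resolve_checkpoint` and its `load` parameter are hypothetical stand-ins (for the selection logic and for `torch.load`, respectively), and the model rebuild, `autoshape()` and device selection are deliberately left out.

```python
from typing import Any, Callable


def resolve_checkpoint(path_or_model: Any, load: Callable[[str], Any]) -> Any:
    """Hypothetical sketch of how custom() picks the model object it rebuilds.

    `load` is an assumed stand-in for torch.load so the example runs without PyTorch.
    """
    # Form 1: a str is treated as a checkpoint path and loaded
    ckpt = load(path_or_model) if isinstance(path_or_model, str) else path_or_model
    # Form 2: a checkpoint dict yields its 'ema' entry if truthy, otherwise its 'model' entry
    if isinstance(ckpt, dict):
        ckpt = ckpt['ema' if ckpt.get('ema') else 'model']
    # Form 3: anything else (an nn.Module in the real code) is returned unchanged
    return ckpt


# Plain string placeholders stand in for real models here
print(resolve_checkpoint({'ema': None, 'model': 'raw-model'}, load=lambda p: None))  # raw-model
```

Because PyTorch Hub forwards keyword arguments to the entry point, a call such as `torch.hub.load('ultralytics/yolov5', 'custom', path_or_model='path/to/model.pt')` against this version of `hubconf.py` would reach `custom()` with the str form.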

diff --git a/utils/plots.py b/utils/plots.py

index 47e7b7b74f1c..5b23a34f5141 100644

--- a/utils/plots.py

+++ b/utils/plots.py

@@ -243,7 +243,7 @@ def plot_study_txt(path='', x=None): # from utils.plots import *; plot_study_tx

     # ax = ax.ravel()

     fig2, ax2 = plt.subplots(1, 1, figsize=(8, 4), tight_layout=True)
-    # for f in [Path(path) / f'study_coco_{x}.txt' for x in ['yolov5s', 'yolov5m', 'yolov5l', 'yolov5x']]:
+    # for f in [Path(path) / f'study_coco_{x}.txt' for x in ['yolov5s6', 'yolov5m6', 'yolov5l6', 'yolov5x6']]:
     for f in sorted(Path(path).glob('study*.txt')):
         y = np.loadtxt(f, dtype=np.float32, usecols=[0, 1, 2, 3, 7, 8, 9], ndmin=2).T
         x = np.arange(y.shape[1]) if x is None else np.array(x)

@@ -253,7 +253,7 @@ def plot_study_txt(path='', x=None): # from utils.plots import *; plot_study_tx

         # ax[i].set_title(s[i])

         j = y[3].argmax() + 1
-        ax2.plot(y[6, :j], y[3, :j] * 1E2, '.-', linewidth=2, markersize=8,
+        ax2.plot(y[6, 1:j], y[3, 1:j] * 1E2, '.-', linewidth=2, markersize=8,
                  label=f.stem.replace('study_coco_', '').replace('yolo', 'YOLO'))

     ax2.plot(1E3 / np.array([209, 140, 97, 58, 35, 18]), [34.6, 40.5, 43.0, 47.5, 49.7, 51.5],

@@ -261,7 +261,7 @@ def plot_study_txt(path='', x=None): # from utils.plots import *; plot_study_tx

     ax2.grid(alpha=0.2)
     ax2.set_yticks(np.arange(20, 60, 5))
-    ax2.set_xlim(0, 30)
+    ax2.set_xlim(0, 57)
     ax2.set_ylim(30, 55)
     ax2.set_xlabel('GPU Speed (ms/img)')
     ax2.set_ylabel('COCO AP val')
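The `plot_study_txt()` hunks change how each study curve is drawn. The curve is still cut at `j = y[3].argmax() + 1`, i.e. at the point with the highest value in row 3 (plotted ×100 as `COCO AP val`), but the slice now starts at index 1 instead of 0, so the first point of every curve is no longer drawn. The EfficientDet reference line converts throughput to latency with `1E3 / fps`. A minimal NumPy sketch of both steps follows; `study_curve` and `fps_to_ms` are hypothetical helper names, and the inputs are toy values rather than real study results.

```python
from typing import Tuple

import numpy as np


def study_curve(speed_ms: np.ndarray, ap: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Hypothetical helper: the (x, y) points drawn for one study file after this change."""
    j = ap.argmax() + 1  # stop at (and include) the best-AP point
    # 1:j skips the first point; the previous :j slice kept it
    return speed_ms[1:j], ap[1:j] * 1E2  # AP rescaled to percent for the y-axis


def fps_to_ms(fps) -> np.ndarray:
    """Convert frames per second to milliseconds per image, as done for the EfficientDet line."""
    return 1E3 / np.asarray(fps, dtype=float)


# Toy study: four points, best AP at index 2, so indices 1 and 2 are drawn
x, y = study_curve(np.array([1.0, 2.0, 3.0, 4.0]), np.array([0.30, 0.40, 0.50, 0.45]))
print(x, y)
```

With the old `:j` slice the same toy input would also have drawn the point at 1.0 ms.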