
Configuration with Minigraph (~Sep 2017)

SONiC uses the device minigraph as the single entry point for configuring the box. The minigraph file is at /etc/sonic/minigraph.xml. The actual configuration for each Docker container is automatically generated from this file.

The device minigraph contains all the information about a given device. In particular, it has all the information needed to generate the device configuration, as well as other data plane and control plane related information.

Sample minigraphs can be found in the sonic-mgmt repo.

In detail, the device minigraph contains the following information.

Hostname and SKU information is defined as child elements of the root element <DeviceMiniGraph>.

```xml
<DeviceMiniGraph>
  ...
  <Hostname>switch1</Hostname>
  <HwSku>AS7512</HwSku>
</DeviceMiniGraph>
```

The same information can also be found in the <Devices> element of the <PngDec> section, which also defines the type/role of the device (the i:type attribute).

```xml
<PngDec>
  ...
  <Devices>
    <Device i:type="LeafRouter">
      <Hostname>switch1</Hostname>
      <HwSku>AS7512</HwSku>
    </Device>
  </Devices>
</PngDec>
```
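To make the layout concrete, here is a minimal Python sketch (standard library only, not the sonic-cfggen implementation) that reads the hostname, HwSku and device type from fragments like the ones above. It compares local tag names, so it works whether or not the elements carry an XML namespace. The helper names are hypothetical, and the sample declares the xmlns:i prefix so that the i:type attribute parses on its own.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the fragments above; xmlns:i is declared so i:type parses.
SAMPLE = """<DeviceMiniGraph xmlns:i="http://www.w3.org/2001/XMLSchema-instance">
  <PngDec>
    <Devices>
      <Device i:type="LeafRouter">
        <Hostname>switch1</Hostname>
        <HwSku>AS7512</HwSku>
      </Device>
    </Devices>
  </PngDec>
  <Hostname>switch1</Hostname>
  <HwSku>AS7512</HwSku>
</DeviceMiniGraph>"""


def local_name(tag):
    """Strip a '{namespace}' prefix from an ElementTree tag or attribute name."""
    return tag.rsplit("}", 1)[-1]


def child_text(elem, name):
    """Text of the first direct child with the given local name, or None."""
    for child in elem:
        if local_name(child.tag) == name:
            return (child.text or "").strip()
    return None


def read_device_info(xml_text):
    """Hypothetical helper: hostname, HwSku and device type of this switch."""
    root = ET.fromstring(xml_text)
    info = {"hostname": child_text(root, "Hostname"),
            "hwsku": child_text(root, "HwSku"),
            "type": None}
    # The type/role lives on the matching <Device> entry under <PngDec>/<Devices>.
    for device in root.iter():
        if local_name(device.tag) == "Device" and child_text(device, "Hostname") == info["hostname"]:
            for attr, value in device.attrib.items():
                if local_name(attr) == "type":
                    info["type"] = value
    return info


print(read_device_info(SAMPLE))
# {'hostname': 'switch1', 'hwsku': 'AS7512', 'type': 'LeafRouter'}
```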

Loopback addresses (IPv4 and IPv6) and management addresses (IPv4 and IPv6) are defined in the <DpgDec> section.

```xml
<DpgDec>
  <DeviceDataPlaneInfo>
    <IPSecTunnels/>
    <LoopbackIPInterfaces xmlns:a="http://schemas.datacontract.org/2004/07/Microsoft.Search.Autopilot.Evolution">
      <a:LoopbackIPInterface>
        <Name>HostIP</Name>
        <AttachTo>Loopback0</AttachTo>
        <a:Prefix xmlns:b="Microsoft.Search.Autopilot.NetMux">
          <b:IPPrefix>100.0.0.10/32</b:IPPrefix>
        </a:Prefix>
        <a:PrefixStr>100.0.0.10/32</a:PrefixStr>
      </a:LoopbackIPInterface>
    </LoopbackIPInterfaces>
    <ManagementIPInterfaces xmlns:a="http://schemas.datacontract.org/2004/07/Microsoft.Search.Autopilot.Evolution">
      <a:ManagementIPInterface>
        <Name>ManagementIP1</Name>
        <AttachTo>Management0</AttachTo>
        <a:Prefix xmlns:b="Microsoft.Search.Autopilot.NetMux">
          <b:IPPrefix>192.168.200.19/24</b:IPPrefix>
        </a:Prefix>
        <a:PrefixStr>192.168.200.19/24</a:PrefixStr>
      </a:ManagementIPInterface>
    </ManagementIPInterfaces>
    ...
  </DeviceDataPlaneInfo>
</DpgDec>
```
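As a sketch of how these entries can be consumed, the helper below (hypothetical name, standard library only) collects the prefixes under a given container element and turns them into ipaddress.ip_interface objects, which keep both the host address and its subnet. It assumes, as in the sample above, that a:PrefixStr carries the same value as the nested b:IPPrefix, and reads only the former.

```python
import ipaddress
import xml.etree.ElementTree as ET

A_NS = "http://schemas.datacontract.org/2004/07/Microsoft.Search.Autopilot.Evolution"

# Trimmed copy of the <DpgDec> fragment above (nested a:Prefix omitted).
SAMPLE = f"""<DpgDec><DeviceDataPlaneInfo>
  <LoopbackIPInterfaces xmlns:a="{A_NS}">
    <a:LoopbackIPInterface>
      <Name>HostIP</Name>
      <AttachTo>Loopback0</AttachTo>
      <a:PrefixStr>100.0.0.10/32</a:PrefixStr>
    </a:LoopbackIPInterface>
  </LoopbackIPInterfaces>
  <ManagementIPInterfaces xmlns:a="{A_NS}">
    <a:ManagementIPInterface>
      <Name>ManagementIP1</Name>
      <AttachTo>Management0</AttachTo>
      <a:PrefixStr>192.168.200.19/24</a:PrefixStr>
    </a:ManagementIPInterface>
  </ManagementIPInterfaces>
</DeviceDataPlaneInfo></DpgDec>"""


def local_name(tag):
    """Strip a '{namespace}' prefix from an ElementTree tag name."""
    return tag.rsplit("}", 1)[-1]


def read_ip_interfaces(xml_text, container):
    """Hypothetical helper: {AttachTo: [ip_interface, ...]} for every entry under
    the named container, e.g. 'LoopbackIPInterfaces' or 'ManagementIPInterfaces'."""
    result = {}
    for parent in ET.fromstring(xml_text).iter():
        if local_name(parent.tag) != container:
            continue
        for entry in parent:
            fields = {local_name(c.tag): (c.text or "").strip() for c in entry}
            if fields.get("PrefixStr"):
                # ip_interface keeps both the host address and its subnet.
                iface = ipaddress.ip_interface(fields["PrefixStr"])
                result.setdefault(fields.get("AttachTo"), []).append(iface)
    return result


for iface in read_ip_interfaces(SAMPLE, "ManagementIPInterfaces")["Management0"]:
    print(iface.ip, iface.network, f"IPv{iface.version}")
# 192.168.200.19 192.168.200.0/24 IPv4
```

The same call with "LoopbackIPInterfaces" returns the Loopback0 address; an IPv6 loopback entry, if present, would simply appear as an IPv6Interface in the same list.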

DHCP servers, NTP servers and syslog servers should be defined in the device's minigraph file as properties of the device's metadata, each as a semicolon-delimited list.

```xml
<MetadataDeclaration>
  <Devices xmlns:a="http://schemas.datacontract.org/2004/07/Microsoft.Search.Autopilot.Evolution">
    <a:DeviceMetadata>
      <a:Name>switch1</a:Name>
      <a:Properties>
        <a:DeviceProperty>
          <a:Name>DhcpResources</a:Name>
          <a:Reference i:nil="true"/>
          <a:Value>192.0.0.1;192.0.0.2;192.0.0.3;192.0.0.4</a:Value>
        </a:DeviceProperty>
        <a:DeviceProperty>
          <a:Name>NtpResources</a:Name>
          <a:Reference i:nil="true"/>
          <a:Value>0.debian.pool.ntp.org;1.debian.pool.ntp.org;2.debian.pool.ntp.org;3.debian.pool.ntp.org</a:Value>
        </a:DeviceProperty>
        <a:DeviceProperty>
          <a:Name>SyslogResources</a:Name>
          <a:Reference i:nil="true"/>
          <a:Value>192.0.0.1</a:Value>
        </a:DeviceProperty>
      </a:Properties>
    </a:DeviceMetadata>
  </Devices>
</MetadataDeclaration>
```
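The semicolon-delimited values can be turned into Python lists with a few lines. The sketch below is an illustration under the assumption that every property follows the Name/Reference/Value layout shown above; the helper name is hypothetical and the sample is trimmed to two properties.

```python
import xml.etree.ElementTree as ET

A_NS = "http://schemas.datacontract.org/2004/07/Microsoft.Search.Autopilot.Evolution"
I_NS = "http://www.w3.org/2001/XMLSchema-instance"

SAMPLE = f"""<MetadataDeclaration xmlns:i="{I_NS}">
  <Devices xmlns:a="{A_NS}">
    <a:DeviceMetadata>
      <a:Name>switch1</a:Name>
      <a:Properties>
        <a:DeviceProperty>
          <a:Name>NtpResources</a:Name>
          <a:Reference i:nil="true"/>
          <a:Value>0.debian.pool.ntp.org;1.debian.pool.ntp.org</a:Value>
        </a:DeviceProperty>
        <a:DeviceProperty>
          <a:Name>SyslogResources</a:Name>
          <a:Reference i:nil="true"/>
          <a:Value>192.0.0.1</a:Value>
        </a:DeviceProperty>
      </a:Properties>
    </a:DeviceMetadata>
  </Devices>
</MetadataDeclaration>"""


def local_name(tag):
    """Strip a '{namespace}' prefix from an ElementTree tag name."""
    return tag.rsplit("}", 1)[-1]


def read_device_properties(xml_text, hostname):
    """Hypothetical helper: {property name: [values]} for the DeviceMetadata entry
    named hostname; each Value is split on ';' and empty items are dropped."""
    for meta in ET.fromstring(xml_text).iter():
        if local_name(meta.tag) != "DeviceMetadata":
            continue
        name = next((c.text for c in meta if local_name(c.tag) == "Name"), None)
        if name != hostname:
            continue
        props = {}
        for prop in meta.iter():
            if local_name(prop.tag) == "DeviceProperty":
                fields = {local_name(c.tag): c.text or "" for c in prop}
                props[fields["Name"]] = [v.strip() for v in fields.get("Value", "").split(";") if v.strip()]
        return props
    return {}


print(read_device_properties(SAMPLE, "switch1")["NtpResources"])
# ['0.debian.pool.ntp.org', '1.debian.pool.ntp.org']
```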

All links that start or end at the device, along with the metadata associated with those links (name/value pairs), are defined in the <PngDec> section.

```xml
<PngDec>
  <DeviceInterfaceLinks>
    <DeviceLinkBase i:type="DeviceInterfaceLink">
      <Bandwidth>40000</Bandwidth>
      <ElementType>DeviceInterfaceLink</ElementType>
      <EndDevice>OCPSCH0104001MS</EndDevice>
      <EndPort>Ethernet0</EndPort>
      <StartDevice>OCPSCH01040HHLF</StartDevice>
      <StartPort>Ethernet0</StartPort>
    </DeviceLinkBase>
    <DeviceLinkBase i:type="DeviceInterfaceLink">
      <Bandwidth>40000</Bandwidth>
      <ElementType>DeviceInterfaceLink</ElementType>
      <EndDevice>OCPSCH0104002MS</EndDevice>
      <EndPort>Ethernet0</EndPort>
      <StartDevice>OCPSCH01040HHLF</StartDevice>
      <StartPort>Ethernet4</StartPort>
    </DeviceLinkBase>
  </DeviceInterfaceLinks>
  ...
</PngDec>
```
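Because the device can sit on either end of a link, a neighbor table for one device has to check both StartDevice and EndDevice. The sketch below builds such a table from the link list above; the helper name and the tuple layout of the result are hypothetical choices for illustration.

```python
import xml.etree.ElementTree as ET

I_NS = "http://www.w3.org/2001/XMLSchema-instance"

SAMPLE = f"""<PngDec xmlns:i="{I_NS}"><DeviceInterfaceLinks>
  <DeviceLinkBase i:type="DeviceInterfaceLink">
    <Bandwidth>40000</Bandwidth>
    <EndDevice>OCPSCH0104001MS</EndDevice><EndPort>Ethernet0</EndPort>
    <StartDevice>OCPSCH01040HHLF</StartDevice><StartPort>Ethernet0</StartPort>
  </DeviceLinkBase>
  <DeviceLinkBase i:type="DeviceInterfaceLink">
    <Bandwidth>40000</Bandwidth>
    <EndDevice>OCPSCH0104002MS</EndDevice><EndPort>Ethernet0</EndPort>
    <StartDevice>OCPSCH01040HHLF</StartDevice><StartPort>Ethernet4</StartPort>
  </DeviceLinkBase>
</DeviceInterfaceLinks></PngDec>"""


def local_name(tag):
    """Strip a '{namespace}' prefix from an ElementTree tag name."""
    return tag.rsplit("}", 1)[-1]


def read_neighbors(xml_text, hostname):
    """Hypothetical helper: {local port: (neighbor device, neighbor port, bandwidth)}
    for every link that starts or ends at hostname."""
    neighbors = {}
    for link in ET.fromstring(xml_text).iter():
        if local_name(link.tag) != "DeviceLinkBase":
            continue
        f = {local_name(c.tag): (c.text or "").strip() for c in link}
        # The device may appear on either end, so check both directions.
        if f["StartDevice"] == hostname:
            neighbors[f["StartPort"]] = (f["EndDevice"], f["EndPort"], int(f["Bandwidth"]))
        elif f["EndDevice"] == hostname:
            neighbors[f["EndPort"]] = (f["StartDevice"], f["StartPort"], int(f["Bandwidth"]))
    return neighbors


print(read_neighbors(SAMPLE, "OCPSCH01040HHLF"))
print(read_neighbors(SAMPLE, "OCPSCH0104002MS"))
```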

All data plane information for the device is defined in the <DpgDec> section, including IP addresses, port channel settings, VLAN definitions, and ACL attachment information.

Note that the AclInterfaces element in the minigraph only contains the mapping from ACL table names to interfaces. The detailed ACL tables are defined, under the same table names, in a separate file, /etc/sonic/acl.json, following the OpenConfig YANG model.

```xml
<DpgDec>
  <DeviceDataPlaneInfo>
    ...
    <PortChannelInterfaces>
      <PortChannel>
        <Name>PortChannel01</Name>
        <AttachTo>Ethernet112</AttachTo>
        <SubInterface/>
      </PortChannel>
      <PortChannel>
        <Name>PortChannel02</Name>
        <AttachTo>Ethernet116</AttachTo>
        <SubInterface/>
      </PortChannel>
    </PortChannelInterfaces>
    <VlanInterfaces>
      <VlanInterface>
        <Name>Vlan1000</Name>
        <AttachTo>Ethernet8;Ethernet12;Ethernet16;Ethernet20</AttachTo>
        <Type i:nil="true"/>
        <VlanID>1000</VlanID>
        <Tag>1000</Tag>
        <Subnets>192.168.0.0/27</Subnets>
      </VlanInterface>
    </VlanInterfaces>
    <IPInterfaces>
      <IPInterface>
        <Name i:nil="true"/>
        <AttachTo>PortChannel01</AttachTo>
        <Prefix>10.0.0.56/31</Prefix>
      </IPInterface>
      <IPInterface>
        <Name i:nil="true"/>
        <AttachTo>PortChannel01</AttachTo>
        <Prefix>FC00::71/126</Prefix>
      </IPInterface>
      <IPInterface>
        <Name i:nil="true"/>
        <AttachTo>PortChannel02</AttachTo>
        <Prefix>10.0.0.58/31</Prefix>
      </IPInterface>
      <IPInterface>
        <Name i:nil="true"/>
        <AttachTo>PortChannel02</AttachTo>
        <Prefix>FC00::75/126</Prefix>
      </IPInterface>
      <IPInterface>
        <Name i:nil="true"/>
        <AttachTo>Vlan1000</AttachTo>
        <Prefix>192.168.0.1/27</Prefix>
      </IPInterface>
      <IPInterface>
        <Name i:nil="true"/>
        <AttachTo>Ethernet0</AttachTo>
        <Prefix>10.10.1.1/30</Prefix>
      </IPInterface>
      <IPInterface>
        <Name i:nil="true"/>
        <AttachTo>Ethernet4</AttachTo>
        <Prefix>10.10.2.1/30</Prefix>
      </IPInterface>
    </IPInterfaces>
    <AclInterfaces>
      <AclInterface>
        <AttachTo>ERSPAN</AttachTo>
        <InAcl>everflow</InAcl>
      </AclInterface>
      <AclInterface>
        <AttachTo>PortChannel01;PortChannel02</AttachTo>
        <InAcl>data_acl</InAcl>
      </AclInterface>
    </AclInterfaces>
    ...
  </DeviceDataPlaneInfo>
</DpgDec>
```
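The sketch below summarizes the four kinds of data plane entries shown above: port channel members, VLAN members, IP prefixes per interface, and the ACL table to interface mapping. Splitting AttachTo tolerates whitespace around the list, since hand-edited values may be wrapped across lines. The helper names and the shape of the returned dict are hypothetical; this is an illustration, not how sonic-cfggen stores the data.

```python
import xml.etree.ElementTree as ET

I_NS = "http://www.w3.org/2001/XMLSchema-instance"

SAMPLE = f"""<DpgDec xmlns:i="{I_NS}"><DeviceDataPlaneInfo>
  <PortChannelInterfaces>
    <PortChannel><Name>PortChannel01</Name><AttachTo>Ethernet112</AttachTo><SubInterface/></PortChannel>
  </PortChannelInterfaces>
  <VlanInterfaces>
    <VlanInterface>
      <Name>Vlan1000</Name>
      <AttachTo>Ethernet8;Ethernet12;Ethernet16;Ethernet20</AttachTo>
      <VlanID>1000</VlanID>
    </VlanInterface>
  </VlanInterfaces>
  <IPInterfaces>
    <IPInterface><Name i:nil="true"/><AttachTo>PortChannel01</AttachTo><Prefix>10.0.0.56/31</Prefix></IPInterface>
    <IPInterface><Name i:nil="true"/><AttachTo>PortChannel01</AttachTo><Prefix>FC00::71/126</Prefix></IPInterface>
  </IPInterfaces>
  <AclInterfaces>
    <AclInterface>
      <AttachTo>
        PortChannel01;PortChannel02
      </AttachTo>
      <InAcl>data_acl</InAcl>
    </AclInterface>
  </AclInterfaces>
</DeviceDataPlaneInfo></DpgDec>"""


def local_name(tag):
    """Strip a '{namespace}' prefix from an ElementTree tag name."""
    return tag.rsplit("}", 1)[-1]


def split_list(text):
    """Split a semicolon-delimited AttachTo value, ignoring surrounding whitespace."""
    return [item.strip() for item in (text or "").split(";") if item.strip()]


def read_data_plane(xml_text):
    """Hypothetical helper: port channel members, VLAN members, IP prefixes per
    interface, and ACL table name -> interfaces it is attached to."""
    dp = {"portchannels": {}, "vlans": {}, "ips": {}, "acls": {}}
    for elem in ET.fromstring(xml_text).iter():
        kind = local_name(elem.tag)
        f = {local_name(c.tag): (c.text or "").strip() for c in elem}
        if kind == "PortChannel":
            dp["portchannels"][f["Name"]] = split_list(f["AttachTo"])
        elif kind == "VlanInterface":
            dp["vlans"][f["Name"]] = {"vlan_id": int(f["VlanID"]),
                                      "members": split_list(f["AttachTo"])}
        elif kind == "IPInterface":
            dp["ips"].setdefault(f["AttachTo"], []).append(f["Prefix"])
        elif kind == "AclInterface" and f.get("InAcl"):
            dp["acls"].setdefault(f["InAcl"], []).extend(split_list(f["AttachTo"]))
    return dp


print(read_data_plane(SAMPLE)["vlans"])
```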

The BGP AS number, peers, and peering sessions are defined in the <CpgDec> section.

```xml
<CpgDec>
  ...
  <PeeringSessions>
    <BGPSession>
      <StartRouter>OCPSCH0104001MS</StartRouter>
      <StartPeer>10.10.1.18</StartPeer>
      <EndRouter>OCPSCH01040EELF</EndRouter>
      <EndPeer>10.10.1.17</EndPeer>
      <Multihop>1</Multihop>
      <HoldTime>10</HoldTime>
      <KeepAliveTime>3</KeepAliveTime>
    </BGPSession>
    <BGPSession>
      <StartRouter>OCPSCH0104002MS</StartRouter>
      <StartPeer>10.10.2.18</StartPeer>
      <EndRouter>OCPSCH01040EELF</EndRouter>
      <EndPeer>10.10.2.17</EndPeer>
      <Multihop>1</Multihop>
      <HoldTime>10</HoldTime>
      <KeepAliveTime>3</KeepAliveTime>
    </BGPSession>
  </PeeringSessions>
  <Routers xmlns:a="http://schemas.datacontract.org/2004/07/Microsoft.Search.Autopilot.Evolution">
    <a:BGPRouterDeclaration>
      <a:ASN>64536</a:ASN>
      <a:Hostname>OCPSCH01040EELF</a:Hostname>
      <a:Peers>
        <BGPPeer>
          <Address>10.10.1.18</Address>
          <RouteMapIn i:nil="true"/>
          <RouteMapOut i:nil="true"/>
        </BGPPeer>
        <BGPPeer>
          <Address>10.10.2.18</Address>
          <RouteMapIn i:nil="true"/>
          <RouteMapOut i:nil="true"/>
        </BGPPeer>
      </a:Peers>
      <a:RouteMaps/>
    </a:BGPRouterDeclaration>
    <a:BGPRouterDeclaration>
      <a:ASN>64542</a:ASN>
      <a:Hostname>OCPSCH0104001MS</a:Hostname>
      <a:RouteMaps/>
    </a:BGPRouterDeclaration>
    <a:BGPRouterDeclaration>
      <a:ASN>64543</a:ASN>
      <a:Hostname>OCPSCH0104002MS</a:Hostname>
      <a:RouteMaps/>
    </a:BGPRouterDeclaration>
  </Routers>
</CpgDec>
```
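Putting the two parts of <CpgDec> together: each BGPSession names both routers and their peer addresses, and each BGPRouterDeclaration maps a router hostname to its ASN. The sketch below joins them to list one device's BGP neighbors with their remote ASNs. The helper names and result layout are hypothetical, and the sample omits the Peers/RouteMaps children for brevity.

```python
import xml.etree.ElementTree as ET

A_NS = "http://schemas.datacontract.org/2004/07/Microsoft.Search.Autopilot.Evolution"

SAMPLE = f"""<CpgDec>
  <PeeringSessions>
    <BGPSession>
      <StartRouter>OCPSCH0104001MS</StartRouter><StartPeer>10.10.1.18</StartPeer>
      <EndRouter>OCPSCH01040EELF</EndRouter><EndPeer>10.10.1.17</EndPeer>
      <Multihop>1</Multihop><HoldTime>10</HoldTime><KeepAliveTime>3</KeepAliveTime>
    </BGPSession>
    <BGPSession>
      <StartRouter>OCPSCH0104002MS</StartRouter><StartPeer>10.10.2.18</StartPeer>
      <EndRouter>OCPSCH01040EELF</EndRouter><EndPeer>10.10.2.17</EndPeer>
      <Multihop>1</Multihop><HoldTime>10</HoldTime><KeepAliveTime>3</KeepAliveTime>
    </BGPSession>
  </PeeringSessions>
  <Routers xmlns:a="{A_NS}">
    <a:BGPRouterDeclaration><a:ASN>64536</a:ASN><a:Hostname>OCPSCH01040EELF</a:Hostname></a:BGPRouterDeclaration>
    <a:BGPRouterDeclaration><a:ASN>64542</a:ASN><a:Hostname>OCPSCH0104001MS</a:Hostname></a:BGPRouterDeclaration>
    <a:BGPRouterDeclaration><a:ASN>64543</a:ASN><a:Hostname>OCPSCH0104002MS</a:Hostname></a:BGPRouterDeclaration>
  </Routers>
</CpgDec>"""


def local_name(tag):
    """Strip a '{namespace}' prefix from an ElementTree tag name."""
    return tag.rsplit("}", 1)[-1]


def fields(elem):
    """Direct children of elem as {local name: stripped text}."""
    return {local_name(c.tag): (c.text or "").strip() for c in elem}


def read_bgp(xml_text, hostname):
    """Hypothetical helper: (local ASN, {peer IP: neighbor info}) for hostname."""
    root = ET.fromstring(xml_text)
    asn_by_router = {}
    for decl in root.iter():
        if local_name(decl.tag) == "BGPRouterDeclaration":
            f = fields(decl)
            asn_by_router[f["Hostname"]] = int(f["ASN"])
    neighbors = {}
    for session in root.iter():
        if local_name(session.tag) != "BGPSession":
            continue
        f = fields(session)
        # The local device may be either end of the session.
        if f["StartRouter"] == hostname:
            peer, peer_ip, local_ip = f["EndRouter"], f["EndPeer"], f["StartPeer"]
        elif f["EndRouter"] == hostname:
            peer, peer_ip, local_ip = f["StartRouter"], f["StartPeer"], f["EndPeer"]
        else:
            continue
        neighbors[peer_ip] = {"name": peer, "asn": asn_by_router.get(peer),
                              "local_addr": local_ip,
                              "holdtime": int(f["HoldTime"]),
                              "keepalive": int(f["KeepAliveTime"])}
    return asn_by_router.get(hostname), neighbors


local_asn, peers = read_bgp(SAMPLE, "OCPSCH01040EELF")
print(local_asn, sorted(peers))
# 64536 ['10.10.1.18', '10.10.2.18']
```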

Since the minigraph is the single entry point for configuration, a broken minigraph could cause multiple or even all SONiC features to malfunction. After manually editing the minigraph, the following command-line tool can be used to verify its integrity:

```bash
sonic-cfggen -m /etc/sonic/minigraph.xml --print-data
```
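sonic-cfggen performs the full check. As a quicker first step, a pure well-formedness test catches the most common manual-editing mistakes, such as an unclosed or mismatched tag, and reports where the problem is. The sketch below uses Python's xml.etree.ElementTree; the function names are hypothetical, and passing this check says nothing about whether the content is semantically valid for SONiC.

```python
import xml.etree.ElementTree as ET


def xml_error(data):
    """Return None if data (bytes or str) is well-formed XML, else the parser's
    error message, which includes the line and column of the first problem."""
    try:
        ET.fromstring(data)
    except ET.ParseError as err:
        return str(err)
    return None


def check_minigraph(path="/etc/sonic/minigraph.xml"):
    """Hypothetical helper: well-formedness check of a minigraph file.
    The file is read as bytes so an encoding declaration in the header is honored."""
    with open(path, "rb") as fh:
        return xml_error(fh.read())
```

If check_minigraph() returns None, run the sonic-cfggen command above for the complete check; otherwise fix the reported line first.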

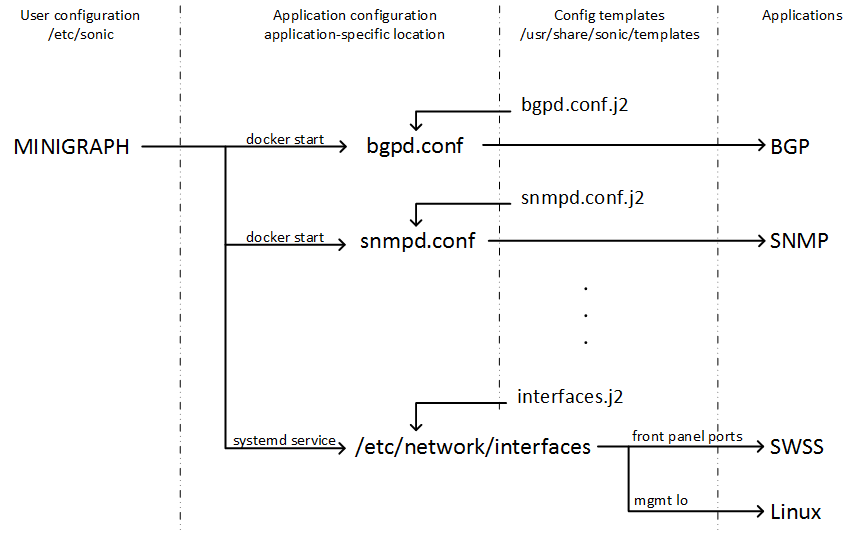

The application configuration file for each docker / SONiC feature are automatically generated base on minigraph data, as illustrated in the following figure.

- User configurations are under `/etc/sonic`. This is where all user input should go, and the directory is mounted into every Docker container. The files here are pure data and are not bound to any application-specific format. The most important file in this folder is `minigraph.xml`, but there are also other files, such as `sonic_version.yml`, which contains SONiC build version information.

- Configuration templates are used to generate application-specific configs. Each application has its own templates, and those templates handle the specific config file formats required by that application. SONiC uses Jinja2 as its configuration templating language. Configuration templates can be found at `/usr/share/sonic/templates`, either within each container for Docker-contained applications, or in the base Linux file system for applications in the base OS.

- Running configurations are application-specific and may be located in different places, as required by each application (such as `/etc/quagga/` for BGP or `/etc/teamd/` for teamd). These config files are only visible inside the container.

- The config generation scripts for base OS applications (such as NTP) and Docker-contained applications (such as BGP) are slightly different.
  - Config for base OS applications is managed by `systemctl`. The scripts and service definitions can be found at `/etc/systemd/system/*-config.service`.
  - The config script for a Docker-contained application is at `/usr/bin/config.sh`. This script is called every time the container is started or restarted.

- If you update the minigraph or other user configuration and want to regenerate the config:
  - For a base image application, rerun the configuration service, e.g. `systemctl start interfaces-config`, then restart the container if needed, e.g. `systemctl restart swss`.
  - For an application container, restart the container, e.g. `systemctl restart bgp`.

- If you manually edited the application config and don't want it to be overwritten:
  - For the base image, disable the configuration service, e.g. `systemctl disable interfaces-config`.
  - For application containers, this will be supported later. (A workaround: restart the application within the container without restarting the container itself, e.g. `service quagga restart` in the BGP container.)

- Config debugging:
  - Error logs usually go either to `/var/log/syslog` or to the Docker logs, e.g. `docker logs bgp`.
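To illustrate the data flow described in the list above (user data in /etc/sonic, rendered through a template into an application-specific running config), here is a small sketch. SONiC itself uses Jinja2 templates; to keep the example dependency-free, plain Python string formatting is swapped in for Jinja2 here. The function name and the ntp.conf-style output layout are hypothetical and are not the template SONiC ships.

```python
def render_ntp_conf(ntp_servers):
    """Stand-in for a Jinja2 template: one 'server' line per NTP server taken
    from the NtpResources property (illustrative output format only)."""
    lines = ["# Auto-generated from minigraph data; manual edits may be overwritten"]
    lines += [f"server {server} iburst" for server in ntp_servers]
    return "\n".join(lines) + "\n"


# The NtpResources value is a semicolon-delimited list in the minigraph metadata.
ntp_servers = "0.debian.pool.ntp.org;1.debian.pool.ntp.org".split(";")
print(render_ntp_conf(ntp_servers), end="")
```

Keeping the data (server list) separate from the format (the template) is what lets one minigraph drive many applications with different config syntaxes.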

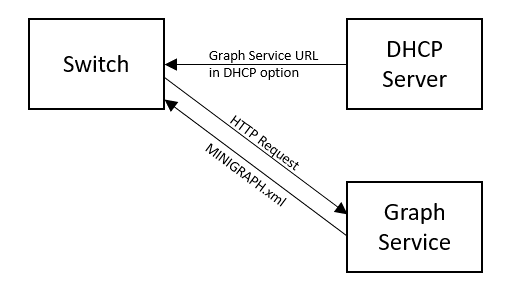

SONiC has implemented a feature to let the box automatically download the minigraph from a graph service, for the purpose of support pure-automatic deployment.

The workflow for the minigraph download is simple, as illustrated in the following figure. The switch gets the template of graph service URL from DHCP server as a custom option (option 225), combine the template with its hostname to become the full graph service URL, then download the minigraph and put it at /etc/sonic/minigraph.xml.

The same workflow is also used to download the ACL definition JSON (if configured), using DHCP custom option 226.
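The URL step of this workflow can be sketched as a simple substitution. This page does not say how the hostname is marked inside the option 225 template, so the sketch assumes a Jinja-style `{{ hostname }}` placeholder; check the updategraph script on your image for the actual syntax. The helper name and the example URL are hypothetical. The same function would apply to the ACL URL from option 226.

```python
import re
from urllib.parse import quote

# Assumed placeholder syntax for the hostname inside the option-225 URL template.
HOSTNAME_PLACEHOLDER = re.compile(r"\{\{\s*hostname\s*\}\}")


def build_graph_url(url_template, hostname):
    """Return the full graph service URL for this switch (hypothetical helper)."""
    if not HOSTNAME_PLACEHOLDER.search(url_template):
        raise ValueError(f"no hostname placeholder in {url_template!r}")
    # Percent-encode the hostname so unusual characters cannot break the URL;
    # a function replacement avoids re.sub interpreting backslashes.
    return HOSTNAME_PLACEHOLDER.sub(lambda _m: quote(hostname, safe=""), url_template)


print(build_graph_url("http://10.2.10.2/graph/{{ hostname }}/minigraph.xml", "switch1"))
# http://10.2.10.2/graph/switch1/minigraph.xml
```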

This feature is disabled by default. To enable it, set ENABLE_GRAPH_SERVICE=y when building the image. The service can also be enabled at run time by setting /etc/updategraph.conf to one of the following:

- For the static service URL scenario (no DHCP):

  ```
  enabled=true
  src="http://10.2.10.2/minigraph.xml"
  ```

- For the DHCP scenario (the service URL is updated every time the DHCP option is received):

  ```
  enabled=true
  src=dhcp
  ```

- For the first-time-only DHCP scenario (after the URL is obtained from DHCP once, it is written into the config as a static URL):

  ```
  enabled=true
  src=dhcp
  dhcp_as_static=true
  ```
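A minimal sketch of how a script could read /etc/updategraph.conf and tell the three modes apart. It assumes the file holds only shell-style key=value assignments as in the examples above (shlex removes the quotes and `#` comments). The function names and mode labels are hypothetical, and this is not the parser the updategraph service itself uses.

```python
import shlex


def parse_updategraph_conf(text):
    """Parse shell-style key=value assignments into a dict; shlex removes the
    quotes and drops '#' comments. Tokens without '=' are ignored."""
    conf = {}
    for token in shlex.split(text, comments=True):
        key, sep, value = token.partition("=")
        if sep:
            conf[key] = value
    return conf


def graph_source_mode(conf):
    """Hypothetical labels: 'disabled', 'static', 'dhcp' or 'dhcp-once'."""
    if conf.get("enabled") != "true":
        return "disabled"
    if conf.get("src") == "dhcp":
        return "dhcp-once" if conf.get("dhcp_as_static") == "true" else "dhcp"
    return "static"


for sample in ('enabled=true\nsrc="http://10.2.10.2/minigraph.xml"',
               "enabled=true\nsrc=dhcp",
               "enabled=true\nsrc=dhcp\ndhcp_as_static=true",
               "enabled=false"):
    print(graph_source_mode(parse_updategraph_conf(sample)))
# static, dhcp, dhcp-once, disabled (one per line)
```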
