-

Notifications

You must be signed in to change notification settings - Fork 1.8k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

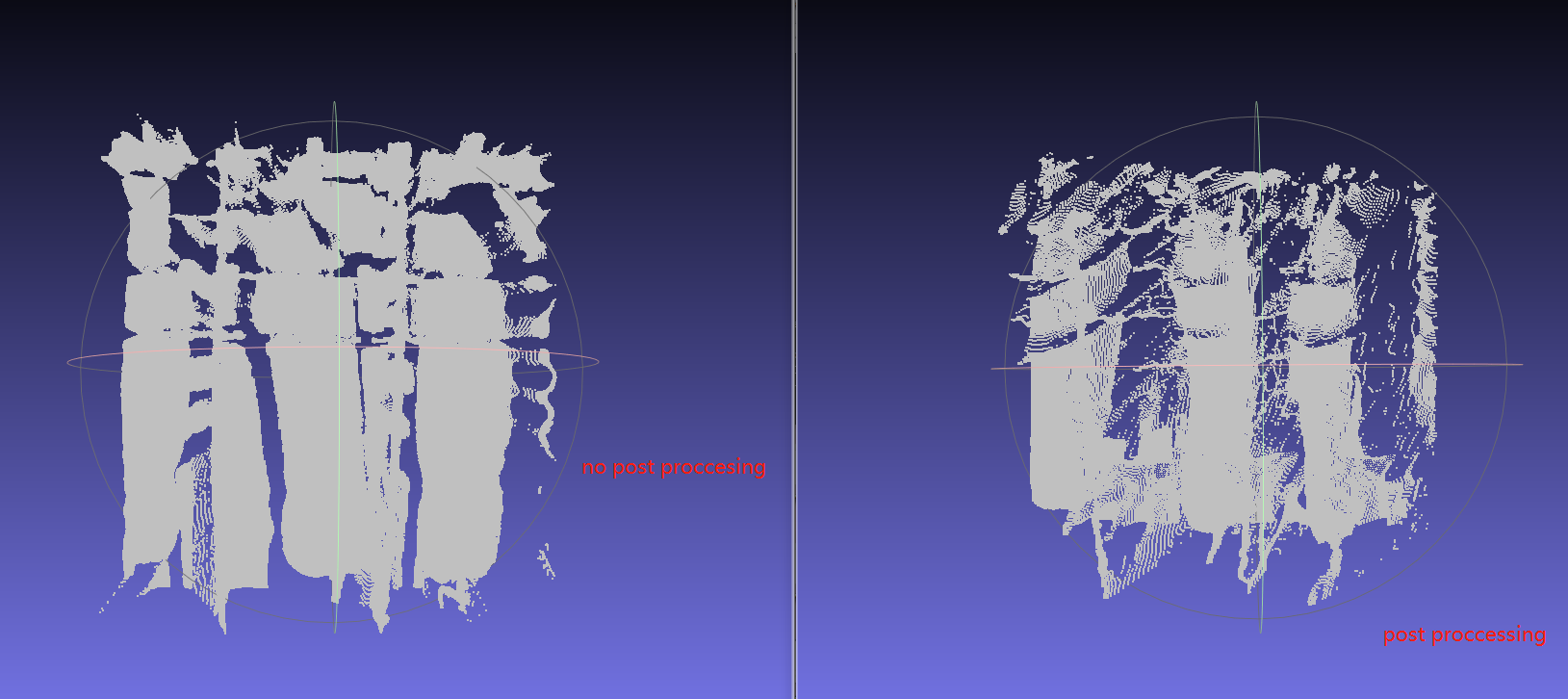

The point cloud image is abnormal after adding the post proccessing function #1333

Comments

|

Hi @dingbaiyin The decimation filter scales the resolution down by a defined scaling factor (the Magnitude). https://dev.intelrealsense.com/docs/post-processing-filters#section-decimation-filter In your script you have set the magnitude to '4' for the decimation filter. So if the original resolution was 640x480, the decimation filter's magnitude would scale it down by x4 to 160x120 (640 / 4 and 480 / 4). If the resolution was originally set to 1280x720 and the magnitude was again 4, I would expect it to be scaled down to 320x180 Do you get better results if you set the decimation filter magnitude to '2' (its default value) insted of '4', please? |

|

If my target image size is 640480, set the magnitude to '2' for the decimation filter. Can the 320240 image be restored to 640480 size? If not, can I only set the image size to 1280720? |

|

What does the image look like if you use the 3D point cloud function of the RealSense Viewer instead of your own script, please? |

|

I have found the cause of the problem. The problem lies in the decimation filter. I blocked the code and the point cloud graph in the result became normal. But I don't know why this happens. |

|

The decimation filter should apply the resolution scale-down to both the width and height of the original resolution in order to maintain the aspect ratio. It reminds me of a point cloud effect where the points can get "dragged out" so that the point cloud appears to be stretched in a particular direction. IntelRealSense/librealsense#3741 I'm pleased that you found a solution that may work for you. Do you still get the distortion if you have decimation enabled and disable the spatial filter instead? |

|

The test found that the final point cloud image generated by decimation enabled and disable the other filters has been deformed. |

|

There will be limits to what can be achieved with RealSense on a Pi 3B+ with a USB 2 port, compared to a more powerful Pi 4 with more memory and a USB 3 port. You can increase the size of the swap file on Pi to create virtual memory from the SD card space to handle applications with high memory demands. As the SD card access speed is slower than that of the real RAM though, you may experience slowdown once the Pi starts using the virtual memory. This is why the higher RAM of the Pi 4 is useful, as there is less need to use the slow virtual memory of the swap file. Dorodnic the RealSense SDK Manager has said in a past case that the spatial filter is one that, in his experience, takes the most time and does not add much benefit, so it may be worth taking out the spatial filter if that is possible for your project. IntelRealSense/librealsense#4468 (comment) In that link, Dorodnic also mentions rs-gl. https://github.com/IntelRealSense/librealsense/tree/master/examples/gl This is a processing block function of the SDK that can offload processing from the CPU to the GPU to improve performance (similar to CUDA support on devices with an Nvidia GPU such as Jetson). It may not have a noticeable effect when used on low-power devices such as Pi though. |

|

I don’t know the return value data type of get_depth_frame() so that I can’t store the frames data in the memory correctly. |

|

Intel's post-processing guide states that the temporal filter can reduce depth noise by more than 2x so you should keep that filter. https://dev.intelrealsense.com/docs/depth-post-processing#section-conclusion Turning the projector (IR Emitter) off to remove the IR dot pattern can also reduce the RMS error (depth error over distance) by around 30% if your project is not dependent on the dot pattern for analyzing the depth of surfaces. for example, if the scene that the camera is in is well lit then the camera can use ambient light to analyze depth detail instead of the dot pattern. |

|

Thank you very much for your technical support all the time. The solution of closing the projector does not meet my application scenario. thank you very much |

|

Today I encountered this problem while refactoring the post processing function code, so I reopened this closed problem. } |

|

I do not have the ability to test your code, unfortunately and I cannot see an obvious error amongst the code (ignoring all the parts that are commented out). If the code worked before but did not in more recent tests though, I would suggest making sure that your camera is in USB 3 mode and not USB 2 mode. This will ensure that the script is not calling a stream configuration that is not supported in the more limited USB 2 mode. |

|

I tested it with usb3.0 cable, but it didn't solved. This should not be the problem. |

|

I compared your use of depth_to_disparity.process() and disparity_to_depth.process() to other scripts that use this method. I could not see anything obviously mistaken. I did observe though that the scripts that I saw set up the true and false first and then set up depth_frame = depth_to_disparity.process(depth_frame) and depth_frame = disparity_to_depth.process(depth_frame) secondly. Your script seems to do the reverse of that, setting up true and false secondly. So you could try the instructions in this order instead: rs2::disparity_transform depth_to_disparity(true); |

|

depth_frame = depth_to_disparity.process(depth_frame); |

|

Do you have the two halves of the process in separate programs? (i.e the true / false statements in one program and the .process (depth frame) instructions in another program). If they are in separate programs (not in the same script file) then I would think that the .process(depth frame) statements need some linkage to the other program. Otherwise the second program is not going to know whether the disparity_to_depth and depth_to_disparity functions are set to true or false. One of them must be true and the other false in order for it to work. Unless the second script can read the true and false statuses from the first script, it will probably assume that both are false. |

|

Hi @dingbaiyin Do you still require assistance with this case, please? Thanks! |

|

@ MartyG-RealSense Hi,I have found the cause of the application problem of my program's post-proccessing function cv::Mat intelCameraControl::getDepthMa () New program I did a deep copy of the filtered image in the function, and the problem was solved. Thank you very much for your help and continue to pay attention to this issue. |

After adding the post proccessing filter function, the effect seen from the point cloud image quotient is that the image is distorted, and the quality of the point cloud image is worse than when the post proccessing filter function is not applied. I checked that the size of the depth map collected by the post proccessing function is 320240. In some cases, the size is 160120, but the true image size is set 640*480. Why does the collected pictures size change?

The original data depth map, point cloud map and filtered depth map have been packaged in the data.zip

data.zip

The post proccessing function uses decimation_filter, spatial_filter and temporal_filter.

this is the Point cloud map comparison effect picture:

The specific parameters are set as follows:

dec_filter.set_option(RS2_OPTION_FILTER_MAGNITUDE, 4);

spat_filter.set_option(RS2_OPTION_FILTER_MAGNITUDE, 2);

spat_filter.set_option(RS2_OPTION_FILTER_SMOOTH_ALPHA, 0.55f);

spat_filter.set_option(RS2_OPTION_FILTER_SMOOTH_DELTA, 8);

temp_filter.set_option(RS2_OPTION_FILTER_SMOOTH_ALPHA, 0.4f);

temp_filter.set_option(RS2_OPTION_FILTER_SMOOTH_DELTA, 20);

this is the code:

`#include <librealsense2/rs.hpp> // Include RealSense Cross Platform API

#include <opencv2/opencv.hpp>

#include

#include <stdio.h>

#include <librealsense2/rsutil.h>

#include

#include <unistd.h>

using namespace std;

using namespace cv;

cv::Mat frame_to_mat(const rs2::frame& f);

int main()

{

rs2::context ctx; //以下为读取设备型号和序列号

rs2::device_list devices = ctx.query_devices();

rs2::device selected_device;

if (devices.size() == 0)

{

std::cerr << "没有 Intel 摄像头连接,请连接摄像头!" << std::endl;

rs2::device_hub device_hub(ctx);

selected_device = device_hub.wait_for_device();

}

else

{

selected_device = devices[0];

}

rs2::pipeline pipe;

rs2::config cfg;

//std::cout << "0" << endl;

sleep(5);

std::string name = "";

if (selected_device.supports(RS2_CAMERA_INFO_NAME))

name = selected_device.get_info(RS2_CAMERA_INFO_NAME);

cfg.enable_stream(RS2_STREAM_DEPTH, 640, 480, RS2_FORMAT_Z16, 30); //RS2_FORMAT_ANY changed RS2_FORMAT_Z16

pipe.start(cfg); //启动设备

sleep(3);

/****** Post-processing filters ******/

// Decalre filters

rs2::decimation_filter dec_filter; // Decimation - reduces depth frame density

rs2::spatial_filter spat_filter; // Spatial - edge-preserving spatial smoothing

rs2::temporal_filter temp_filter; // Temporal - reduces temporal noise

rs2::disparity_transform depth_to_disparity(true);

rs2::disparity_transform disparity_to_depth(false);

//rs2::depth_to_disparity = rs2::disparity_transform(True)

//rs2::disparity_to_depth = rs2::disparity_transform(False)

//rs2::threshold_filter thr_filter; // Threshold - removes values outside recommended range // Threshold - removes values outside recommended range

// Declare disparity transform from depth to disparity and vice versa

}

cv::Mat frame_to_mat(const rs2::frame& f)

{

using namespace cv;

using namespace rs2;

}

`

The text was updated successfully, but these errors were encountered: