UAV unable to replan due to terminal point of trajectory in collision #52

Comments

Q1: Just try it.

#1 A major problem I've noticed is that the planner cannot find a path when a tall or wide structure is directly in front of the drone. It then gets stuck in an infinite loop of re-planning.

If you look closely at the point cloud, there is a tall obstacle in front of the drone. My theory is that the drone's limited FOV makes it impossible for the planner to find a way past it. This is what the replan looks like in rviz:

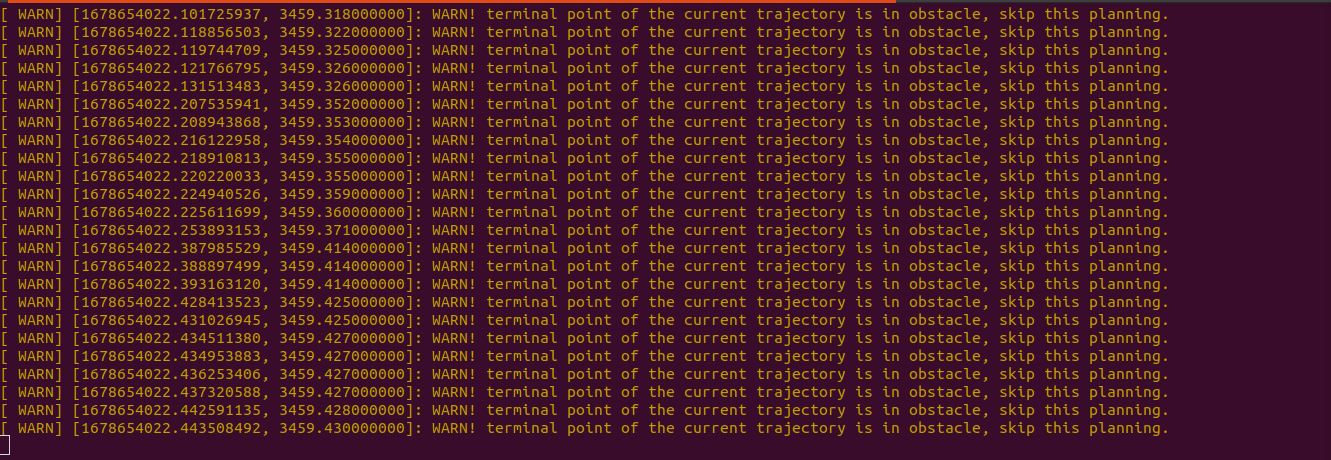

This is what the ego_planner node continuously prints:

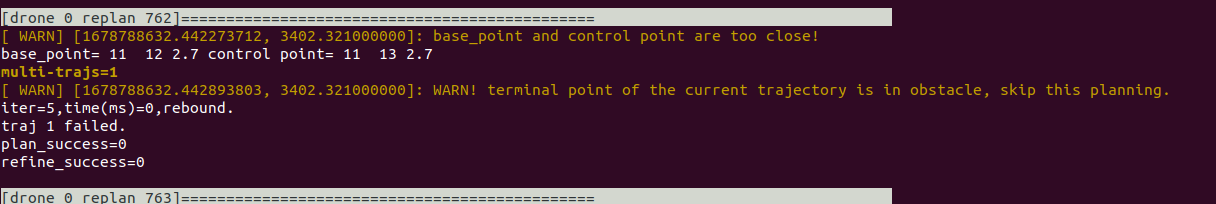

The node also prints this, in case it's of any help. What does "base_point and control_point too close" mean?

I wonder if we need Hamiltonian perturbation to solve this problem?
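Before going as far as a perturbation, one thing that might be worth trying, given that the terminal point of the trajectory ends up in collision, is to pull the local target back toward the drone until it is collision-free. Below is a minimal Python sketch of that idea only. `pull_back_target`, `is_occupied`, and the step size are hypothetical names and values for illustration, not part of ego_planner; a real version would query the planner's own occupancy map.

```python
import math


def pull_back_target(start, target, is_occupied, step=0.1):
    """Walk from `target` back toward `start` in `step`-metre increments.

    Returns the first collision-free point found (as a tuple), or None if
    every sampled point on the segment is occupied.
    `is_occupied` is a hypothetical callback: point -> bool.
    """
    delta = [t - s for s, t in zip(start, target)]
    length = math.sqrt(sum(d * d for d in delta))
    if length == 0.0:
        return None if is_occupied(tuple(start)) else tuple(start)

    n_steps = int(length / step)
    for i in range(n_steps + 1):
        # Fraction of the start->target segment still kept after i steps back.
        ratio = (length - i * step) / length
        point = tuple(s + ratio * d for s, d in zip(start, delta))
        if not is_occupied(point):
            return point
    return None


if __name__ == "__main__":
    # Toy map: a wall occupying 4.2 m < x < 6.0 m (assumed, for illustration).
    wall = lambda p: 4.2 < p[0] < 6.0
    print(pull_back_target((0.0, 0.0, 1.0), (5.0, 0.0, 1.0), wall, step=0.5))
```

If this returns None, the whole segment is blocked and pulling the target back would not help, which might be the case where something like a perturbation or a different goal is needed.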

#2 I also noticed that although $dist=0.5$, the drone usually refuses to come within about 2 meters of obstacles. Yet when an obstacle is slightly outside its range, the drone flies directly into it after a sudden yaw. I think this is because the map does not keep a history of past observations.

#3 Why is start_pos different from odom_pos in ego_replan_fsm? As I see it, the trajectory should be planned from the drone's current position; otherwise, if the controller lags slightly, the trajectory advances without accounting for where the drone actually is. This raises the question: shouldn't there be closed-loop feedback between the controller and the trajectory planner? Any idea how to merge the two?
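To make the question concrete, here is a minimal Python sketch of the kind of feedback I have in mind. It assumes the planner can compare the reference position on the current trajectory with the odometry position. `select_replan_start` and the 0.3 m threshold are hypothetical, chosen only for illustration, and are not taken from ego_replan_fsm.

```python
import math


def select_replan_start(ref_pos, odom_pos, max_tracking_error=0.3):
    """Pick the start position for the next replan.

    ref_pos:  position on the current trajectory at the current time.
    odom_pos: position reported by odometry.
    If the tracking error exceeds `max_tracking_error` (metres, assumed
    value), restart from odometry; otherwise keep the reference point so
    the new trajectory stays continuous with the old one.
    """
    error = math.dist(ref_pos, odom_pos)
    if error > max_tracking_error:
        return tuple(odom_pos), "odom"
    return tuple(ref_pos), "reference"


if __name__ == "__main__":
    # Small lag: keep planning from the reference trajectory.
    print(select_replan_start((1.0, 0.0, 1.0), (1.1, 0.0, 1.0)))
    # Large lag: restart from where the drone actually is.
    print(select_replan_start((1.0, 0.0, 1.0), (2.0, 0.0, 1.0)))
```

Restarting from odometry gives up continuity with the trajectory the controller is currently tracking, so the threshold trades smoothness against staying close to the drone's real position.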

@bigsuperZZZX Any help in modifying the codebase is appreciated. Thank you.
