[中文|English]

A Tight-fisted Optimizer, an optimizer that is extremely budget-conscious!

- Achieves comparable performance to AdamW and LAMB.

- Minimizes memory requirements when using gradient accumulation.

- Adaptive learning rates per parameter, similar to LAMB.

- Simple strategy to prevent the model from collapsing to NaN.

- Can simulate any lr schedule with piecewise linear learning rates.

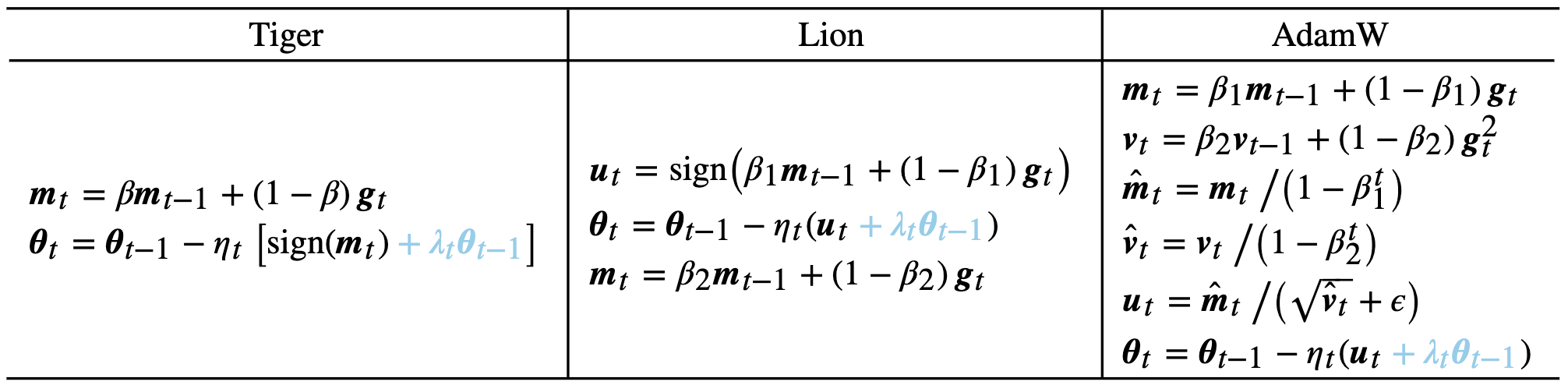

Tiger, Lion, and AdamW comparison:

From the perspective of Lion, Tiger is a simplified version of Lion (beta1=beta2). From the perspective of SignSGD, Tiger is a SignSGD with momentum and weight decay.

Tiger only uses momentum to build the update procedure. According to the conclusion of "Gradient Accumulation Hidden in Momentum," we don't need to add another group of parameters to "unobtrusively" implement gradient accumulation! This also means that when we have a gradient accumulation requirement, Tiger has already achieved the best solution for memory usage, which is why this optimizer is called Tiger (Tight-fisted Optimizer)!

- Blog 1: https://kexue.fm/archives/9512

- Blog 2: https://kexue.fm/archives/8634

The current implementation is developed under tensorflow 1.15, and is likely to work in the first few versions of tensorflow 2.x. Later versions have not been tested and their compatibility is unknown.

Reference code:

from tiger import Tiger

optimizer = Tiger(

learning_rate=1.76e-3, # Global relative learning rate lr

beta=0.965, # beta parameter

weight_decay=0.01, # Weight decay rate

grad_accum_steps=4, # Gradient accumulation steps

lr_schedule={

40000: 1, # For the first 40k steps, linearly increase the learning rate from 0 to 1*lr (i.e. warm-up steps are 40000/4 steps)

160000: 0.5, # For steps 40k-160k, linearly decrease the learning rate from 1*lr to 0.5*lr

640000: 0.1, # For steps 160k-640k, linearly decrease the learning rate from 0.5*lr to 0.1*lr

1280000: 0.01, # For steps 640k-1280k, linearly decrease the learning rate from 0.1*lr to 0.01*lr, and then keep it constant

}

)

model.compile(loss='categorical_crossentropy', optimizer=optimizer)Special thanks to Lion's thorough experiments and the friendly communication with Lion's authors.

@misc{tigeropt,

title={Tiger: A Tight-fisted Optimizer},

author={Jianlin Su},

year={2023},

howpublished={\url{https://github.com/bojone/tiger}},

}

QQ group: 808623966, WeChat group: add the robot WeChat account spaces_ac_cn.