
Although this PR mixes several features, it is not large, and the features are all related.

More than 70 of its lines are for a toy "test queue", so the PR is actually quite simple.

Major features:

1. Allow the site admin to clear a queue (remove all items in a queue)

* Because there is no transaction, the "unique queue" can become corrupted in rare cases, and that is unfixable.

* e.g. an item is in the "set" but not in the "list", so the item can never be pushed into the queue again.

* Now the site admin can simply clear the queue so that everything becomes consistent again; the lost items can be re-pushed into the queue by future operations.

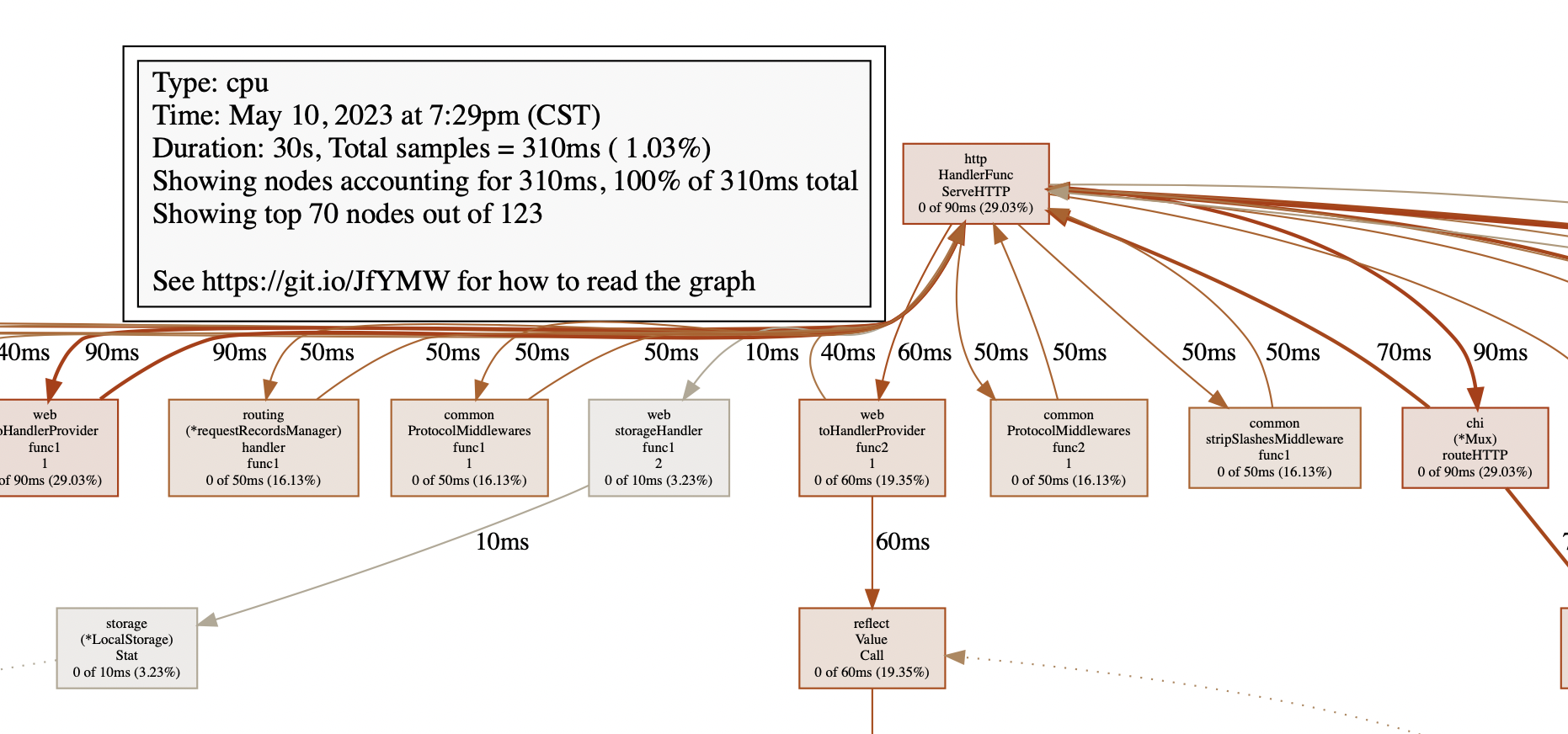

3. Split the "admin/monitor" page into separate pages

4. Allow downloading a diagnosis report

* In the past, many users reported that the Gitea queue gets stuck, or that Gitea's CPU usage is at 100%

* With the diagnosis report, maintainers can see clearly what is happening

A sample diagnosis report:

[gitea-diagnosis-20230510-192913.zip](https://github.com/go-gitea/gitea/files/11441346/gitea-diagnosis-20230510-192913.zip)

Use "go tool pprof profile.dat" to view the report.

Screenshots:

---------

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: Giteabot <teabot@gitea.io>

-monitor.queue.nopool.desc = This queue wraps other queues and does not itself have a worker pool.
-monitor.queue.wrapped.desc = A wrapped queue wraps a slow starting queue, buffering queued requests in a channel. It does not have a worker pool itself.
-monitor.queue.persistable-channel.desc = A persistable-channel wraps two queues, a channel queue that has its own worker pool and a level queue for persisted requests from previous shutdowns. It does not have a worker pool itself.
-monitor.queue.pool.addworkers.desc = Add Workers to this pool with or without a timeout. If you set a timeout these workers will be removed from the pool after the timeout has lapsed.
-monitor.queue.pool.addworkers.numberworkers.placeholder = Number of Workers
-monitor.queue.pool.addworkers.timeout.placeholder = Set to 0 for no timeout
-monitor.queue.pool.addworkers.mustnumbergreaterzero = Number of Workers to add must be greater than zero
-monitor.queue.pool.addworkers.musttimeoutduration = Timeout must be a golang duration eg. 5m or be 0
-monitor.queue.pool.flush.title = Flush Queue
-monitor.queue.pool.flush.desc = Flush will add a worker that will terminate once the queue is empty, or it times out.
-monitor.queue.pool.flush.added = Flush Worker added for %[1]s
-monitor.queue.pool.pause.title = Pause Queue
-monitor.queue.pool.pause.desc = Pausing a Queue will prevent it from processing data
-monitor.queue.pool.pause.submit = Pause Queue
-monitor.queue.pool.resume.title = Resume Queue
-monitor.queue.pool.resume.desc = Set this queue to resume work
-monitor.queue.pool.resume.submit = Resume Queue
-
 monitor.queue.settings.title = Pool Settings
-monitor.queue.settings.desc = Pools dynamically grow with a boost in response to their worker queue blocking. These changes will not affect current worker groups.
-monitor.queue.settings.timeout = Boost Timeout
-monitor.queue.settings.timeout.placeholder = Currently %[1]v
-monitor.queue.settings.timeout.error = Timeout must be a golang duration eg. 5m or be 0
-monitor.queue.settings.numberworkers = Boost Number of Workers
-monitor.queue.settings.numberworkers.placeholder = Currently %[1]d
-monitor.queue.settings.numberworkers.error = Number of Workers to add must be greater than or equal to zero
+monitor.queue.settings.desc = Pools dynamically grow in response to their worker queue blocking.
 monitor.queue.settings.maxnumberworkers = Max Number of workers
 monitor.queue.settings.maxnumberworkers.placeholder = Currently %[1]d
 monitor.queue.settings.maxnumberworkers.error = Max number of workers must be a number
 monitor.queue.settings.submit = Update Settings
 monitor.queue.settings.changed = Settings Updated
-monitor.queue.settings.blocktimeout = Current Block Timeout
-monitor.queue.settings.blocktimeout.value = %[1]v
-
-monitor.queue.pool.none = This queue does not have a Pool
-monitor.queue.pool.added = Worker Group Added
-monitor.queue.pool.max_changed = Maximum number of workers changed
-monitor.queue.pool.workers.title = Active Worker Groups
-monitor.queue.pool.workers.none = No worker groups.
-monitor.queue.pool.cancel = Shutdown Worker Group
-monitor.queue.pool.cancelling = Worker Group shutting down
-monitor.queue.pool.cancel_notices = Shutdown this group of %s workers?
-monitor.queue.pool.cancel_desc = Leaving a queue without any worker groups may cause requests to block indefinitely.
+monitor.queue.settings.remove_all_items = Remove all
+monitor.queue.settings.remove_all_items_done = All items in the queue have been removed.
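
The "Remove all" strings above belong to the queue-clearing feature from the description. To illustrate why clearing repairs a corrupted unique queue, here is a minimal in-memory sketch. It is a simplified model, not Gitea's queue code: the type `UniqueQueue`, its methods, and the `failAfterSet` flag (which simulates an interruption between the two non-transactional writes) are all hypothetical.

```go
package main

import "fmt"

// UniqueQueue is a hypothetical, simplified model of a "unique queue":
// the set records which items are queued, the list holds their order.
type UniqueQueue struct {
	set  map[string]struct{}
	list []string
}

func NewUniqueQueue() *UniqueQueue {
	return &UniqueQueue{set: make(map[string]struct{})}
}

// Push adds item unless the set already contains it. The set write and the
// list write are two separate steps with no transaction around them;
// failAfterSet simulates a crash between the two.
func (q *UniqueQueue) Push(item string, failAfterSet bool) bool {
	if _, exists := q.set[item]; exists {
		return false // treated as "already queued"
	}
	q.set[item] = struct{}{}
	if failAfterSet {
		return false // item is now in the set but not in the list
	}
	q.list = append(q.list, item)
	return true
}

// RemoveAll clears both structures, which also discards any stale set entry.
func (q *UniqueQueue) RemoveAll() {
	q.set = make(map[string]struct{})
	q.list = nil
}

// Len reports how many items are actually waiting in the list.
func (q *UniqueQueue) Len() int { return len(q.list) }

func main() {
	q := NewUniqueQueue()

	q.Push("repo-1", true) // interrupted push corrupts the queue
	fmt.Println("push after corruption:", q.Push("repo-1", false), "len:", q.Len())

	q.RemoveAll() // the admin clears the queue
	fmt.Println("push after clearing:", q.Push("repo-1", false), "len:", q.Len())
}
```

In this model the second push is rejected because the stale set entry makes the item look queued, while the push after `RemoveAll` succeeds, matching the description that lost items can be re-pushed by future operations.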
