This repository was archived by the owner on Aug 23, 2023. It is now read-only.

we have been seeing this for a little while now, possibly since sometime in July.

i just observed an instance doing it, running version 0.9.0-322-g5e667b3

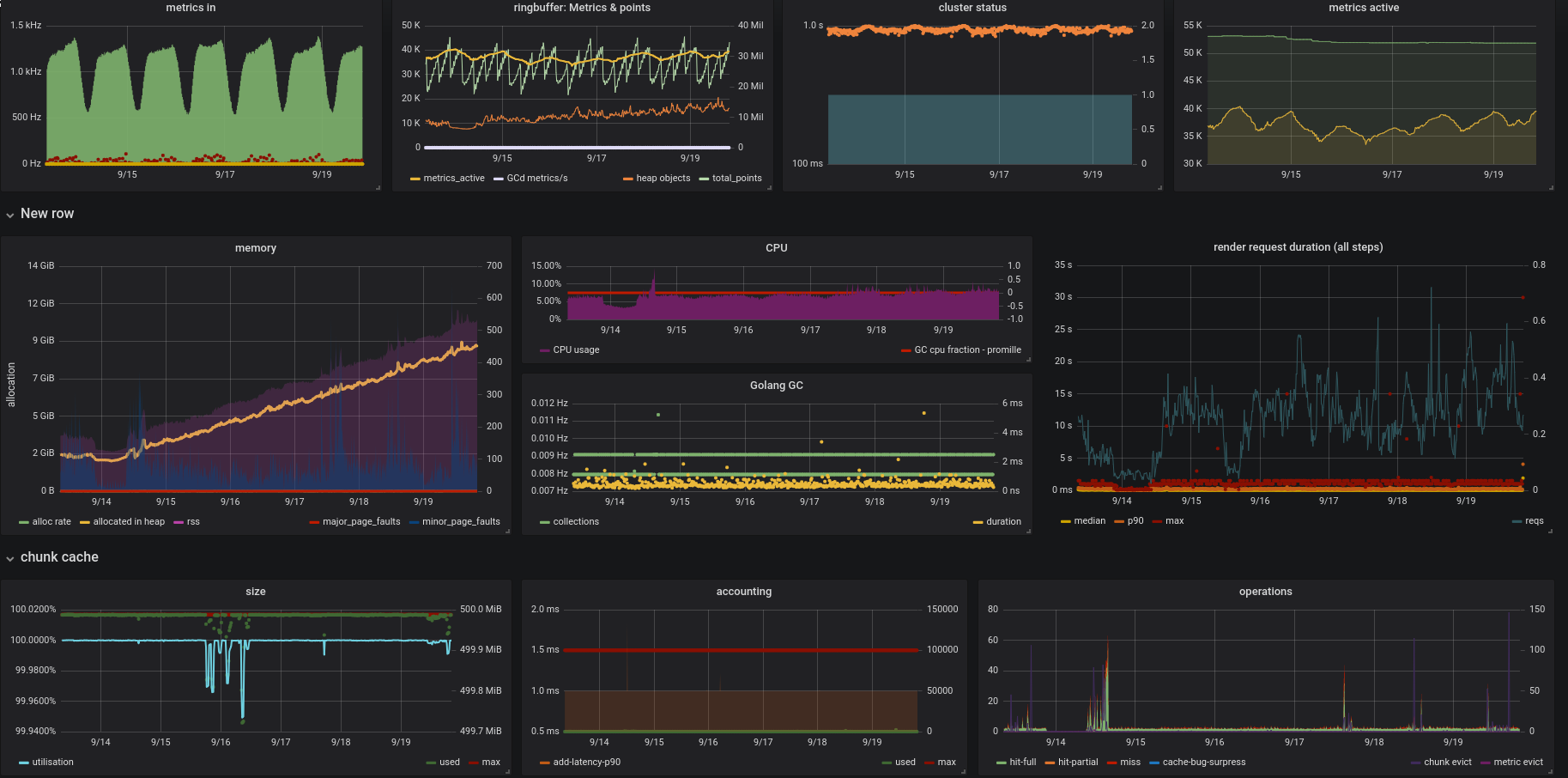

can't share a dashboard snapshot because it contains confidential info, but i'm attaching a screenshot of the relevant bits.

observations:

heap usage and RSS both keep growing gradually.

note how the cache had already been full for a while before memory started increasing. that time gap didn't have much cache activity, which led me to think the growth only kicks in once evictions happen, but memory also grows without any evictions happening.

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

#963 was also meant to troubleshoot this and may contain useful info as well.

full profile: mt-1057.zip
