This project aims to encourage the creation of DPO/ORPO datasets for more languages. By providing these tools, we aim to foster a community of people building DPO/ORPO datasets for different languages. Currently, many languages do not have DPO/ORPO datasets openly shared on the Hugging Face Hub. The DIBT/preference_data_by_language Space gives an overview of the language coverage. At the time of this commit, only 14 languages with DPO/ORPO datasets are available on the Hugging Face Hub. Following this recipe, you can easily generate a DPO/ORPO dataset for a new language.

This project has the following goals:

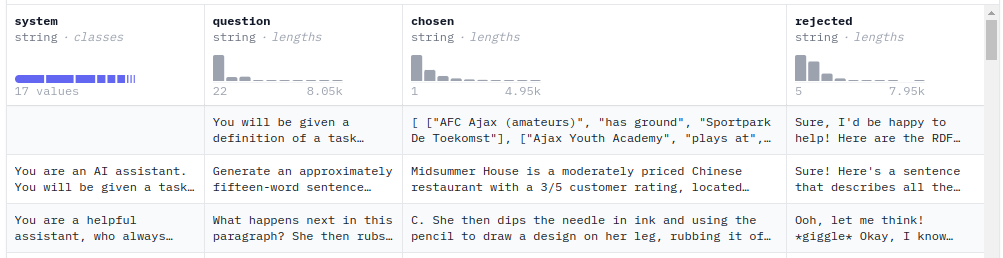

- An Argilla interface for ranking a response written by a human Aya annotator against a model-generated response. See the aya_dutch_dpo example.

- A "raw dataset" with LLM feedback for each prompt. See the DIBT/aya_dutch_dpo_raw for an example.

- A growing dataset with human-verified preferences for each response. See the DIBT/aya_dutch_dpo for an example dataset.

What is Direct Preference Optimization (DPO)?

Direct Preference Optimization (DPO) is a technique for training models to optimize for human preferences. It has emerged as a promising alternative for aligning Large Language Models (LLMs) to human or AI preferences. Unlike traditional alignment methods, which are based on reinforcement learning, DPO recasts the alignment formulation as a simple loss function that can be optimized directly on a dataset of preferences $\{(x, y_w, y_l)\}$, where $x$ is a prompt and $(y_w, y_l)$ are the preferred and dispreferred responses.

Or, in other words, to train a model using DPO you need a dataset of prompts and responses where one response is preferred over the other. This type of data is also used for ORPO, another alignment technique we'll describe in the next section.
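To make the data format and the loss concrete, here is a minimal sketch of one preference record and the per-example DPO loss. The field names (`prompt`, `chosen`, `rejected`), the function name `dpo_loss`, and the default `beta=0.1` are assumptions chosen for illustration, not something this repository prescribes; the inputs are sequence log-probabilities that a real training library would compute from the policy and a frozen reference model.

```python
import math

# One preference record: a prompt x, a preferred response y_w ("chosen"),
# and a dispreferred response y_l ("rejected"). Field names are an
# assumption for illustration; check what your training library expects.
example = {
    "prompt": "Wat is de hoofdstad van Nederland?",        # "What is the capital of the Netherlands?"
    "chosen": "De hoofdstad van Nederland is Amsterdam.",   # preferred response
    "rejected": "Nederland heeft geen hoofdstad.",          # dispreferred response
}


def dpo_loss(policy_logp_w, policy_logp_l, ref_logp_w, ref_logp_l, beta=0.1):
    """DPO loss for a single (x, y_w, y_l) triple.

    Each argument is log pi(y | x) summed over the tokens of the response,
    under either the trained policy or the frozen reference model.
    """
    # How much more the policy favors y_w over y_l than the reference does.
    margin = (policy_logp_w - ref_logp_w) - (policy_logp_l - ref_logp_l)
    z = beta * margin
    # -log(sigmoid(z)), computed in a numerically stable way.
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))


# If the policy equals the reference, the loss is log(2); it shrinks as the
# policy shifts probability toward the chosen response.
baseline = dpo_loss(-3.0, -3.0, -3.0, -3.0)
improved = dpo_loss(-1.0, -5.0, -3.0, -3.0)
```

The loss only needs pairs of responses to the same prompt, which is why a dataset of chosen/rejected pairs is all that is required.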

DPO datasets are a powerful tool for fine-tuning language models to generate responses that are more aligned with human preferences, so they are a valuable resource for improving the quality of chatbots and other generative models. However, DPO datasets are currently available for only a limited number of languages. By generating more DPO datasets for different languages, we can help to improve the quality of generative models in a wider range of languages.

Recently, Odds Ratio Preference Optimization (ORPO) has been proposed as an alternative to DPO. ORPO is a novel approach to fine-tuning language models that incorporates preference alignment directly into the supervised fine-tuning (SFT) process by using the odds ratio to contrast favored and disfavored generation styles. By applying a minor penalty to the disfavored style during SFT, ORPO effectively guides the model toward the desired behavior without the need for an additional alignment step.

tl;dr: if you have a DPO-style dataset and a strong base model, you can use ORPO to train a chat model. Recently Argilla, KAIST, and Hugging Face used this approach to train HuggingFaceH4/zephyr-orpo-141b-A35b-v0.1, a very strong chat model, using only 7k preference pairs!
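The odds-ratio penalty described above can be sketched for a single preference pair as follows. This is an illustrative reading of the ORPO objective (an SFT term on the favored response plus a weighted odds-ratio term), not the training code used for the model mentioned here; the function names and the weight `lam=0.1` are assumptions, and the inputs are assumed to be length-normalized log-probabilities (so strictly negative).

```python
import math


def log_odds(logp):
    """log(p / (1 - p)) for p = exp(logp); requires logp < 0."""
    return logp - math.log1p(-math.exp(logp))


def orpo_loss(logp_w, logp_l, lam=0.1):
    """ORPO loss for one pair, from length-normalized log-probabilities.

    logp_w: log-probability of the favored response under the model.
    logp_l: log-probability of the disfavored response under the model.
    lam:    weight of the odds-ratio penalty (assumed value).
    """
    # Supervised fine-tuning term: negative log-likelihood of the favored response.
    sft = -logp_w
    # Log of the odds ratio between favored and disfavored responses.
    ratio = log_odds(logp_w) - log_odds(logp_l)
    # -log(sigmoid(ratio)), computed in a numerically stable way.
    penalty = max(-ratio, 0.0) + math.log1p(math.exp(-abs(ratio)))
    return sft + lam * penalty


# The loss is lower when the model already favors the preferred response.
good = orpo_loss(math.log(0.8), math.log(0.2))
bad = orpo_loss(math.log(0.2), math.log(0.8))
```

Unlike DPO, no reference model appears in this computation, which is why ORPO can be folded into SFT without a separate alignment stage.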

As part of Data Is Better Together, we're supporting the community in generating more DPO/ORPO datasets for different languages. If you would like to help, you can follow the steps below to generate a DPO/ORPO dataset for a language that you are interested in. There are already many language communities working together on the Hugging Face Discord server, so you can also join the server to collaborate with others on this project 🤗.

Aya, an open science initiative to accelerate multilingual AI progress, has released a dataset of human-annotated prompt-completion pairs across 71 languages. We can use this dataset to generate DPO/ORPO datasets for languages for which they don't currently exist.

Here are the steps we'll take to generate a DPO/ORPO dataset for a new language:

- Start from the CohereForAI/aya_dataset.

- Filter the Aya dataset to the language you are focusing on.

- Use distilabel to generate a second response for each prompt in the filtered Aya dataset.

- (Optional) Send the generated dataset to Argilla for annotation where the community can choose which response is better.

- (Optional) Train a model using the generated DPO/ORPO dataset and push forward the state of the art in your language 🚀🚀🚀

You can find more detailed instructions on how to generate a DPO/ORPO dataset for a new language in the instructions.
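The first three steps can be sketched in plain Python on a couple of toy rows. This is only an outline of the data flow, not the repository's scripts: the column names (`inputs`, `targets`, `language_code`) are assumptions to check against the Aya dataset card, and `fake_generate` is a hypothetical stand-in for the LLM call that the project makes through distilabel.

```python
from typing import Callable, Dict, List

# Toy rows shaped like the Aya dataset. Column names are assumptions;
# verify them against the dataset card before relying on them.
rows = [
    {"inputs": "Wat is een fiets?",
     "targets": "Een fiets is een voertuig met twee wielen.",
     "language_code": "nld"},
    {"inputs": "What is a bicycle?",
     "targets": "A bicycle is a two-wheeled vehicle.",
     "language_code": "eng"},
]


def filter_language(rows: List[Dict[str, str]], language_code: str) -> List[Dict[str, str]]:
    """Keep only the rows written in the target language."""
    return [r for r in rows if r["language_code"] == language_code]


def add_generated_response(
    rows: List[Dict[str, str]], generate: Callable[[str], str]
) -> List[Dict[str, str]]:
    """Pair each human-written response with a second, model-generated one."""
    return [
        {
            "prompt": r["inputs"],
            "human_response": r["targets"],
            "generated_response": generate(r["inputs"]),
        }
        for r in rows
    ]


def fake_generate(prompt: str) -> str:
    # Hypothetical placeholder: a real pipeline would call an LLM here.
    return f"[model response to: {prompt}]"


dutch_rows = filter_language(rows, "nld")
candidates = add_generated_response(dutch_rows, fake_generate)
```

Each entry in `candidates` holds two responses to the same prompt but no preference yet; deciding which one is "chosen" and which is "rejected" is what the LLM feedback and the optional Argilla annotation step are for.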

You do not need your own GPU to contribute. The example scripts in this repository use Hugging Face Inference Endpoints for the inference component, so you can run them on your local machine. If you do need GPU compute to run the distilabel script, we can provide GPU grants; please reach out to us on the Hugging Face Discord server. Note: before providing a grant, we will want to see that you have a plan for how you will use it. In particular, you should already have set up an Argilla Space for your project and done some work to identify the language you want to work on and the models you want to use.

The notebooks and code currently show only how to generate the synthetic data and create a preference dataset annotation Space. The next steps would be to collect human feedback on the synthetic data and then use this to train a model. We will cover this in a future notebook.