
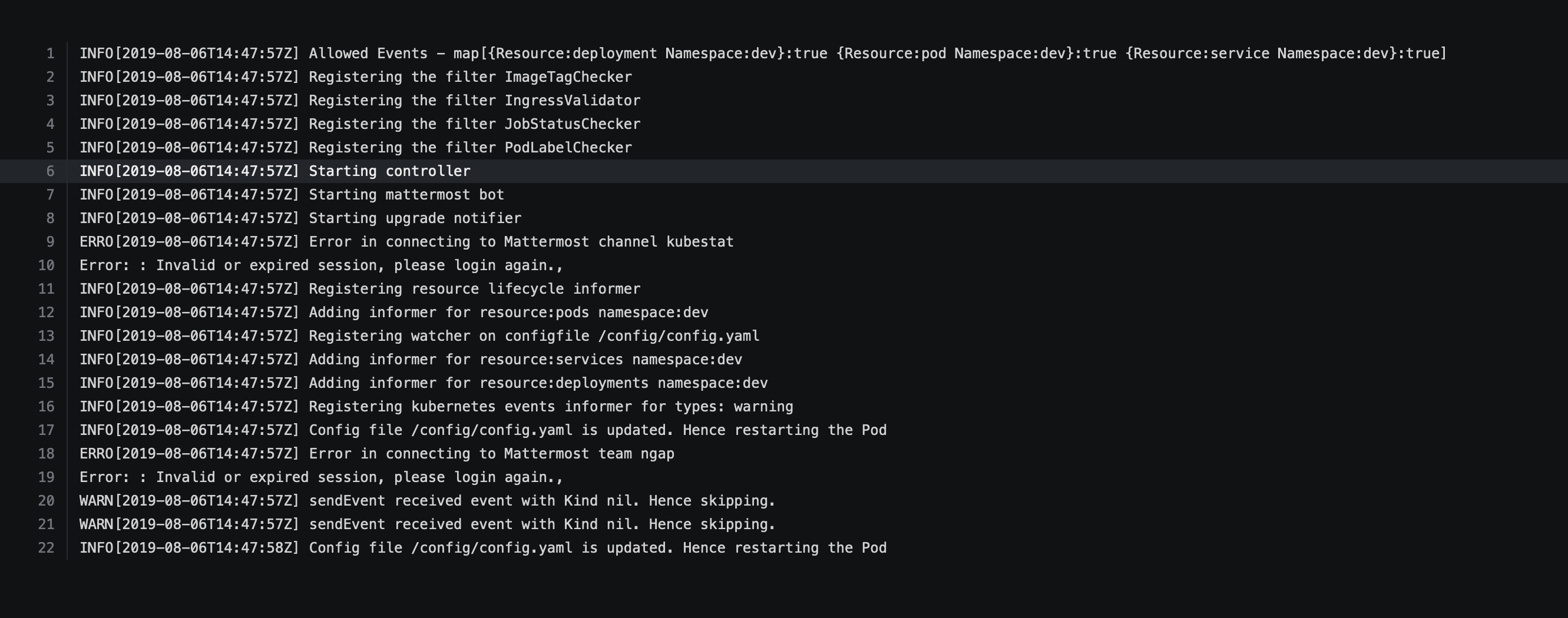

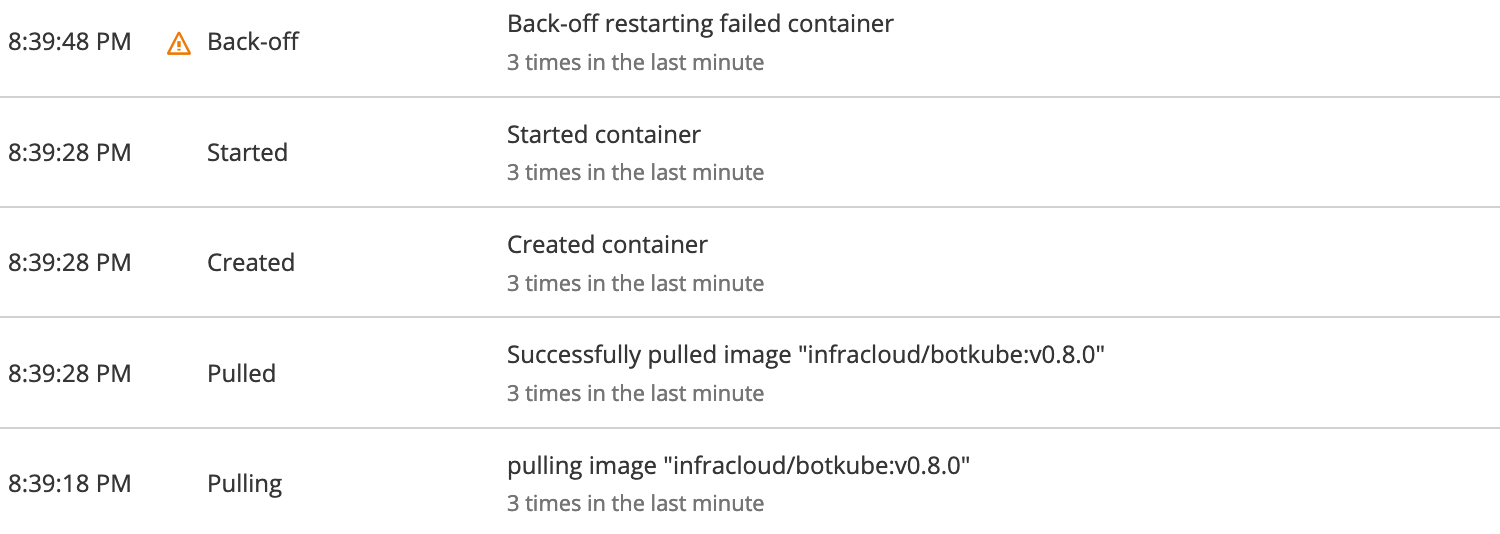

[Openshift] Pod keeps restarting as the registered watcher says "Config file /config/config.yaml is updated" #142

Comments

Hi @iamvvra,

Are you still getting config update logs after setting OCP

That's strange. I hope you are using the latest release for deployment. Which cluster are you using? Also, is there some process or job that might be updating the ConfigMap? Could you please watch and tell me the status of the ConfigMap

Could you please send the logs of the BotKube pod? Also, please make sure that you are using the latest Helm chart from

|

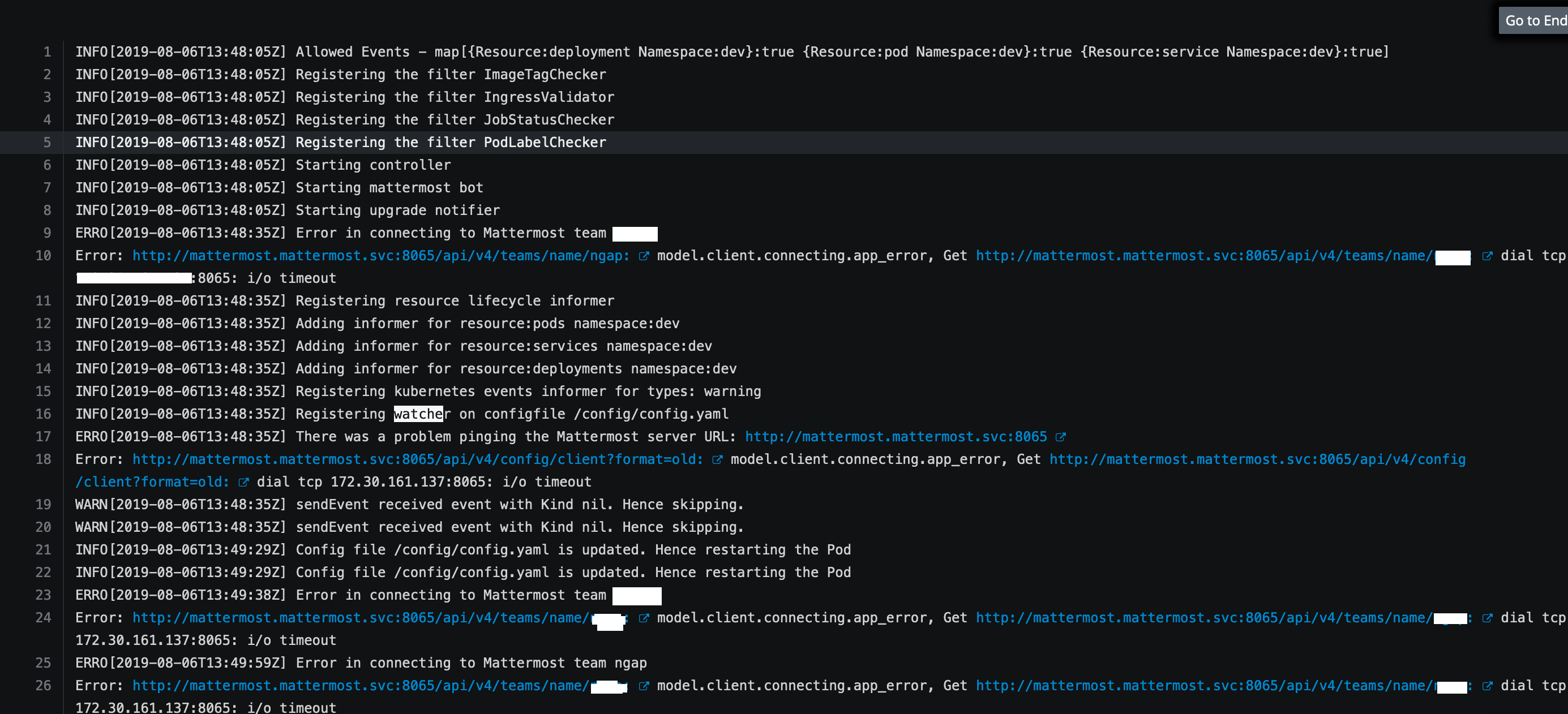

Log attached. I configured from your release branch source. |

Are you using correct token? Can you please try regenerating token? |

Hi,

So it would be good to check from this perspective too, by trying with a previous stable version of Mattermost.

@aananthraj I am running the bot with the log level set to debug, and I have provided one of the logs in this thread. Moreover, I got the same error having tried with previous. Having tried with a new token today, I still get the same error.

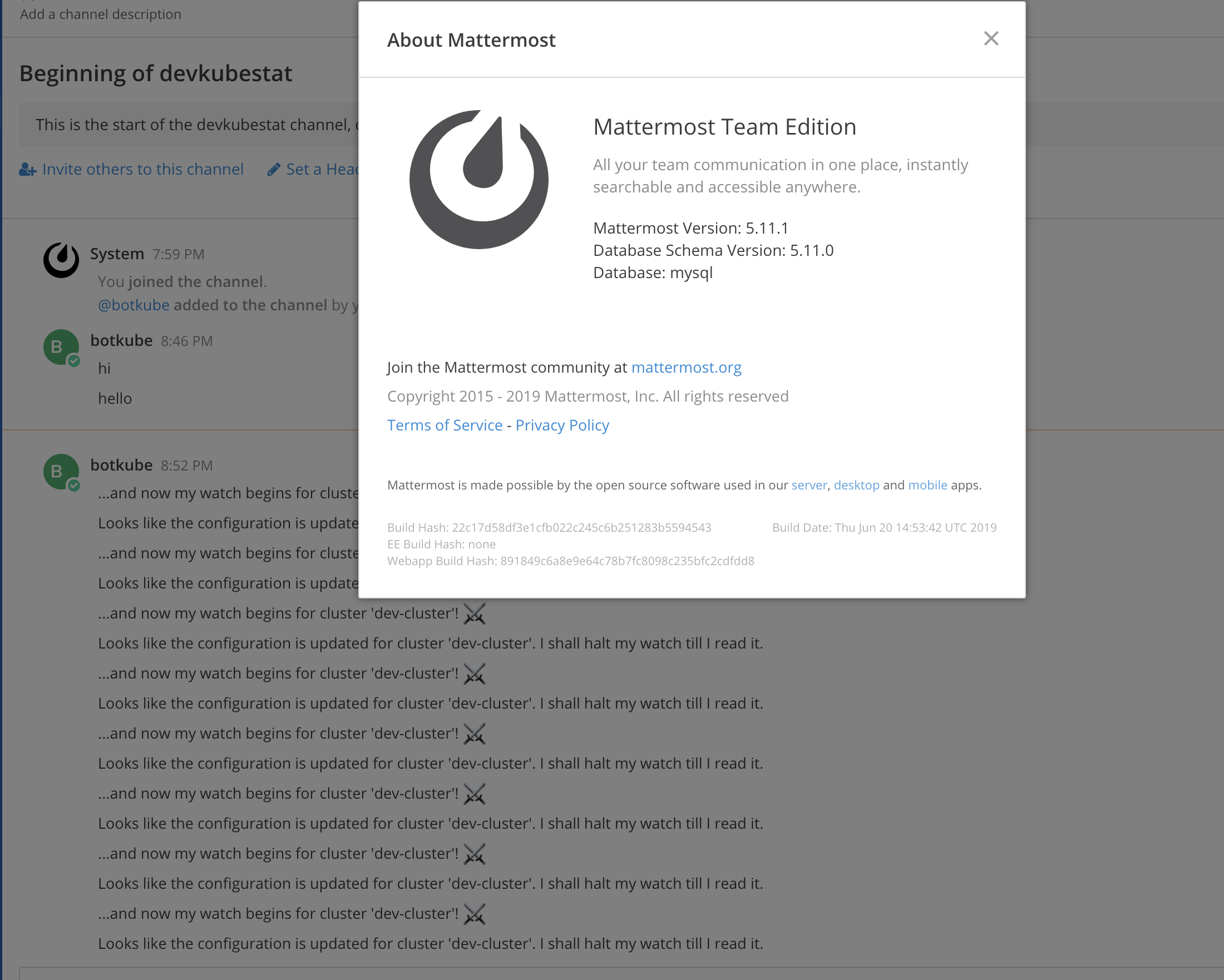

@iamvvra please don't use an older version of BotKube with newer Helm charts. Which version of Mattermost are you using? We have tested it with Mattermost v5.11.1. We are trying to understand whether the issue lies with the latest version of Mattermost or on the client side.

Could you please try with v5.11.1 or later?

Sure, I'll try that.

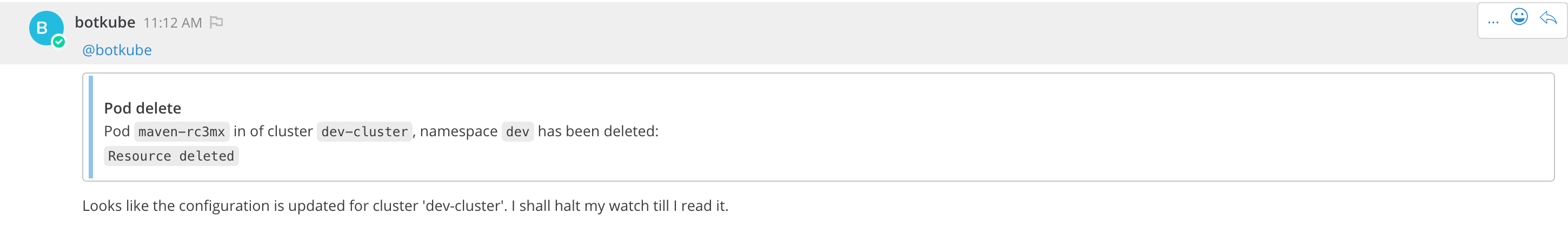

I have deployed the suggested Mattermost 5.11.1 app separately. BotKube reacts the same way: it assumes config.yaml was modified and keeps restarting the pod. However, the initial notification does reach Mattermost. I am not attaching logs because they are similar to the ones provided earlier. The screenshot shows the Mattermost version and the channel BotKube sends notifications to. You can see multiple notifications, sent every time the pod restarts.

Does this have anything to do with WebSockets?

@iamvvra I don't think it is a problem related to communication. Could you please verify that no other service is updating

I'm having the same issue trying to connect BotKube 0.8.0 to Slack on OpenShift.

I'm running OpenShift 3.11, based on Kubernetes 1.11.

I tried reproducing the issue on Kubernetes v1.11 (minikube, GKE, and EKS) but couldn't reproduce it. I think it has to do with how OpenShift manages resources; it seems to update the mounted files for some reason. For now, I am opening another issue to add the ability to disable the config file watcher and the automatic restart on config change.
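To illustrate the suspected mechanism: Kubernetes mounts ConfigMap volumes through an atomic writer, where each visible file (such as `config.yaml`) is a symlink into `..data`, and `..data` is itself a symlink to a timestamped directory that gets swapped on update. The Python sketch below mimics that layout with a hypothetical `publish` helper (directory names like `..gen_1` are made up). It assumes, as the comment above suspects but does not confirm, that OpenShift re-publishes the volume even when the content is identical. It shows that the watched path then resolves to a new underlying file while its SHA-256 digest stays the same, which a watcher reacting to any file event would report as "updated".

```python
import hashlib
import os
import tempfile
from typing import Tuple


def publish(mount_dir: str, payload: bytes, generation: int) -> None:
    """Hypothetical helper mimicking a ConfigMap volume layout:
    ..gen_N/config.yaml, ..data -> ..gen_N, config.yaml -> ..data/config.yaml."""
    gen_dir = os.path.join(mount_dir, f"..gen_{generation}")
    os.mkdir(gen_dir)
    with open(os.path.join(gen_dir, "config.yaml"), "wb") as f:
        f.write(payload)
    # Point a temporary symlink at the new directory, then rename it over ..data.
    # On POSIX, rename replaces the old symlink atomically.
    tmp_link = os.path.join(mount_dir, "..data_tmp")
    os.symlink(os.path.basename(gen_dir), tmp_link)
    os.replace(tmp_link, os.path.join(mount_dir, "..data"))
    # The user-visible file is created once and always resolves through ..data.
    user_link = os.path.join(mount_dir, "config.yaml")
    if not os.path.islink(user_link):
        os.symlink(os.path.join("..data", "config.yaml"), user_link)


def digest(path: str) -> str:
    """SHA-256 of the file contents (open() follows the symlinks)."""
    with open(path, "rb") as f:
        return hashlib.sha256(f.read()).hexdigest()


def simulate_resync(payload: bytes) -> Tuple[bool, bool]:
    """Publish the same payload twice and report
    (underlying_file_changed, content_changed) for config.yaml."""
    with tempfile.TemporaryDirectory() as mount_dir:
        path = os.path.join(mount_dir, "config.yaml")
        publish(mount_dir, payload, generation=1)
        before = (os.stat(path).st_ino, digest(path))
        publish(mount_dir, payload, generation=2)
        after = (os.stat(path).st_ino, digest(path))
    return before[0] != after[0], before[1] != after[1]


print(simulate_resync(b"settings:\n  clustername: development-cluster\n"))  # prints (True, False)
```

On a POSIX system this prints `(True, False)`: the file behind `config.yaml` changed, but its content did not.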

Yes: a flag passed to the watcher (settable via Helm too) to disable the automatic restart, with the watcher staying enabled (true) by default.
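A flag like the one proposed could gate the restart logic. The Python sketch below is hypothetical: the `ConfigWatcher` class, the `restart_on_change` flag, and the digest comparison are illustrative names and ideas, not BotKube's actual code or Helm values. It requests a restart only when the flag is enabled and the file's content digest actually changed, so spurious file events with unchanged content are ignored. Comparing digests goes beyond the on/off flag discussed in this thread and is an added assumption.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ConfigWatcher:
    """Hypothetical watcher deciding whether a config file event
    should trigger a restart. Not BotKube's actual implementation."""

    # Hypothetical flag; True keeps automatic restarts enabled by default.
    restart_on_change: bool = True
    _last_digest: Optional[str] = field(default=None, init=False, repr=False)

    def on_event(self, content: bytes) -> bool:
        """Return True only if the bot should restart for this file event."""
        current = hashlib.sha256(content).hexdigest()
        previous, self._last_digest = self._last_digest, current
        if previous is None:
            return False  # first read at startup: record a baseline only
        if not self.restart_on_change:
            return False  # restarts disabled by the flag
        return current != previous  # ignore events whose content is unchanged


watcher = ConfigWatcher()
print(watcher.on_event(b"a: 1\n"))  # False: baseline
print(watcher.on_event(b"a: 1\n"))  # False: spurious event, same content
print(watcher.on_event(b"a: 2\n"))  # True: content really changed
```

Recording a baseline on the first event avoids a restart at startup, and setting `restart_on_change=False` turns every event into a no-op.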

I have tested this fix, and it works!

First, this is a good project. I came across it by chance just as I was about to write my own cluster event listener (of sorts) to publish events directly to Mattermost.

I have deployed this bot in my on-premises OpenShift cluster, but I am having trouble running it.

The bug description

The deployed pod keeps restarting because the registered watcher of `/config/config.yaml` reports that the file was modified. There is no sign of any change, either from an external source or made manually.

To Reproduce

I use `helm` to create BotKube in the `botkube` namespace. My Mattermost is also deployed in OpenShift, but in a different namespace, `mattermost`.

```shell
helm install --name botkube --namespace botkube \
  --set config.communications.mattermost.enabled=true \
  --set config.communications.mattermost.url=http://mattermost.mattermost.svc:8065 \
  --set config.communications.mattermost.token=generatedtoken \
  --set config.communications.mattermost.team=my-team \
  --set config.communications.mattermost.channel=cluster-stat \
  --set config.settings.clustername=development-cluster \
  --set config.settings.allowkubectl=true \
  helm/botkube
```

(I have also used an OCP-specific `route` instead of `svc`.)

Expected behavior

The bot should start with the provided configuration loaded in the

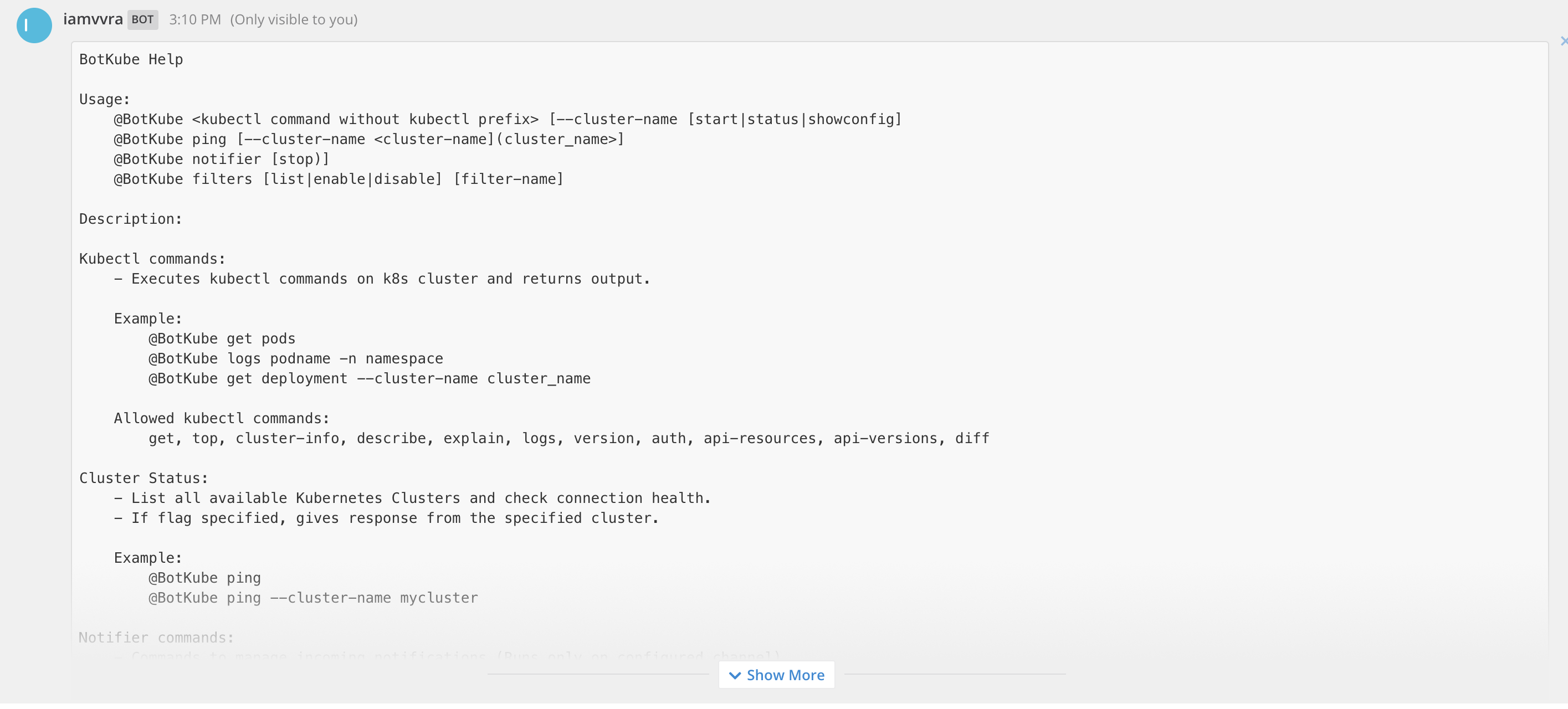

/config/config.yaml.Screenshots

Find a screenshot attached.

Let me know if you need any other details. Also, please tell me whether I am doing this correctly.
