There are a lot of options for configuring cnn-vis; this page will show some examples to get you started.

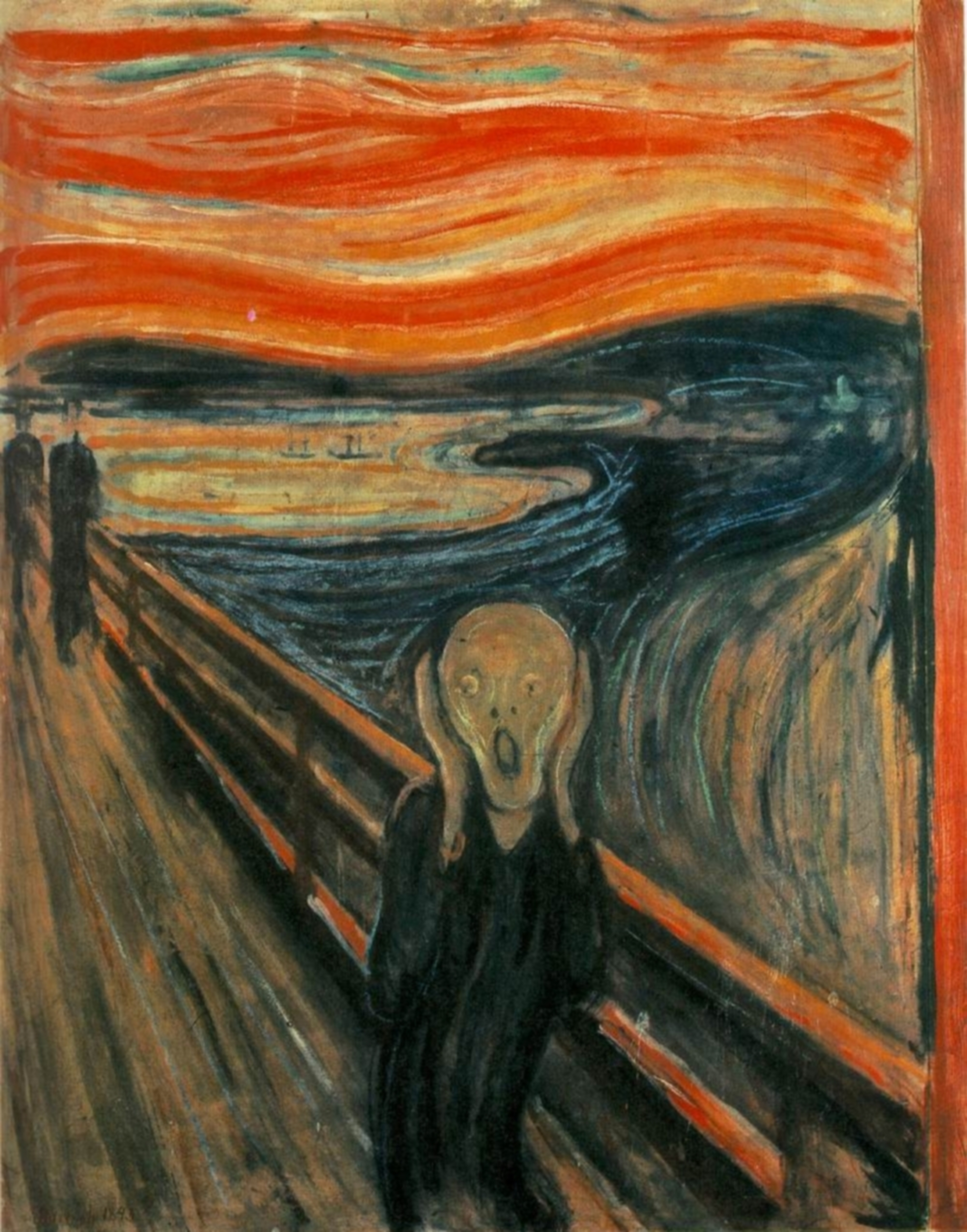

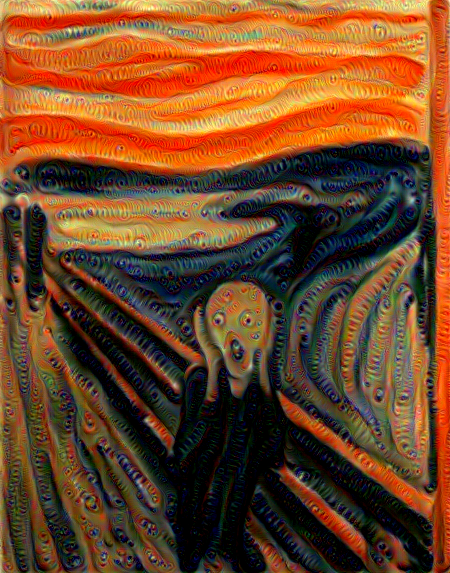

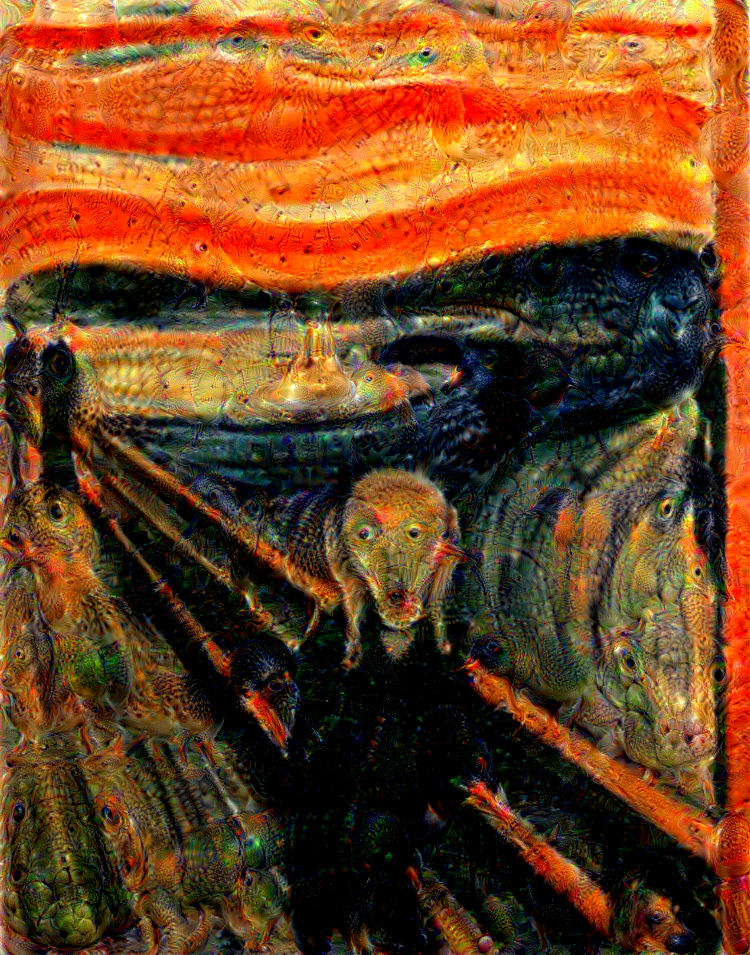

When making Inceptionism-style images, one of the most important parameters is the target layer. Using lower layers will tend to emphasize low-level image features, while higher layers will tend to emphasize object parts. As an example, we will start with Edvard Munch's classic painting "The Scream" and modify it using several different CNN layers:

- Upper left: The original image.

- Upper right: Produced using the script example7.sh, which amplifies the relatively early inception_3a/1x1 layer.

- Lower left: Produced using the script example8.sh, which amplifies the relatively early inception_3a/3x3_reduce layer.

- Lower right: Produced using the script example9.sh, which amplifies the later inception_4d/output layer. Amplifying this layer causes animal parts to appear.
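To make "amplifying a layer" a bit more concrete, here is a minimal sketch of the general idea behind Inceptionism-style images: gradient ascent on the input so that a chosen layer's activations grow. This is a toy stand-in, not cnn-vis code. The "layer" is a small hypothetical linear map, and the function names, step size, and objective (half the squared L2 norm of the activations) are all assumptions made for illustration; the objective and update rule that cnn-vis actually uses may differ.

```python
def layer(x, weights):
    """Toy linear 'layer': each activation is a weighted sum of the input pixels."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def objective(x, weights):
    """Half the squared L2 norm of the layer's activations (assumed objective)."""
    return 0.5 * sum(a * a for a in layer(x, weights))


def gradient(x, weights):
    """Gradient of the objective with respect to the input: W^T (W x)."""
    acts = layer(x, weights)
    return [
        sum(weights[i][j] * acts[i] for i in range(len(weights)))
        for j in range(len(x))
    ]


def amplify(x, weights, step=0.1, iters=10):
    """Run plain gradient ascent on the input to increase the activations."""
    for _ in range(iters):
        g = gradient(x, weights)
        x = [v + step * gv for v, gv in zip(x, g)]
    return x


# Hypothetical 2-pixel "image" and 2-unit "layer".
toy_weights = [[1.0, 0.5], [0.0, 2.0]]
toy_image = [0.1, 0.2]
amplified = amplify(toy_image, toy_weights)
```

In a real CNN the gradient would come from backpropagation through the network up to the target layer, which is why the choice of layer changes which kinds of features get exaggerated.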

Here are a few more examples, using some of the same starting images as the original Google blog post:

This one is generated by the script example10.sh.

This one is generated by the script example11.sh. Note that for this example we made use of the auxiliary p-norm regularization; without it the region near the clouds tends to become oversaturated.

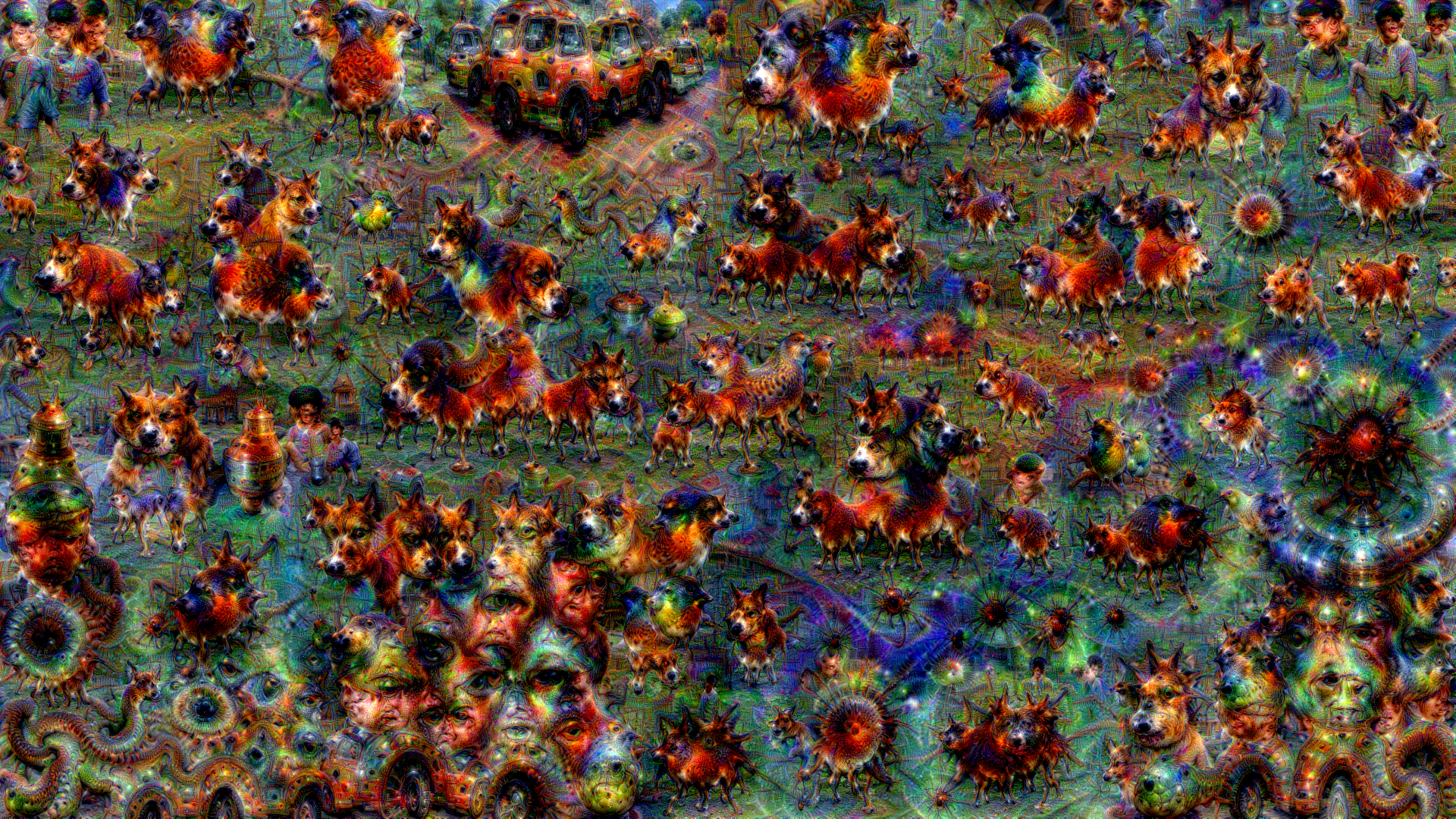

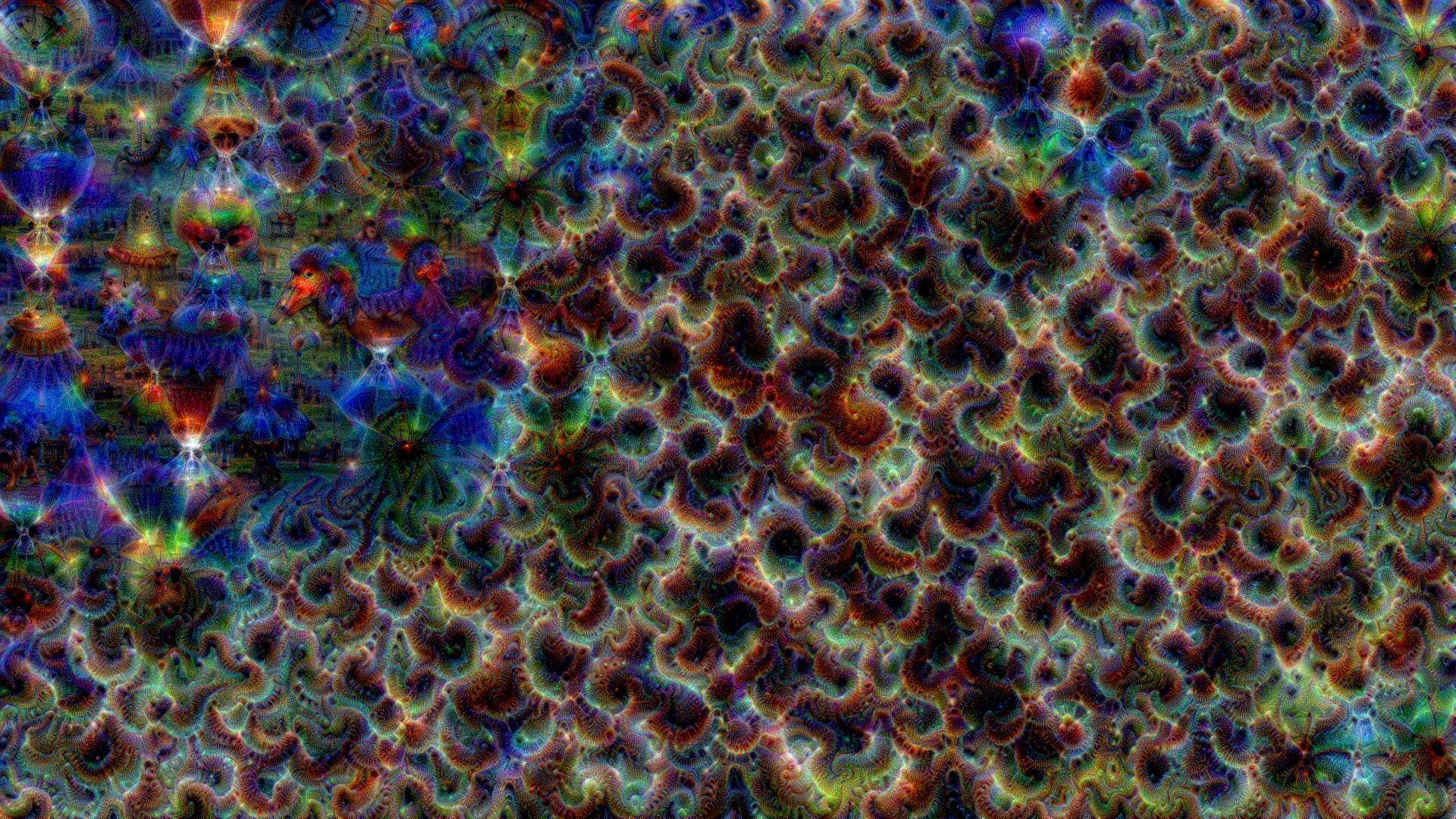
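As a rough illustration of why the p-norm regularization mentioned above can help with oversaturation, here is a minimal sketch of a penalty of the form `weight * sum(|v|^p)` over pixel values. The exact form, the default exponent, and the function names are assumptions for illustration and are not taken from the cnn-vis source. With a large exponent, pixels near zero are barely penalized, while pixels with large magnitude are penalized steeply.

```python
def p_norm_penalty(pixels, p=6.0, weight=1.0):
    """Assumed penalty: weight * sum(|v|^p) over all pixel values."""
    return weight * sum(abs(v) ** p for v in pixels)


def p_norm_gradient(pixels, p=6.0, weight=1.0):
    """Gradient of the penalty: weight * p * |v|^(p-1) * sign(v) per pixel."""
    def sign(v):
        return 1.0 if v > 0 else -1.0 if v < 0 else 0.0

    return [weight * p * abs(v) ** (p - 1) * sign(v) for v in pixels]


# Hypothetical pixel values (e.g. after mean subtraction and scaling).
mild = [0.5, -0.5]
saturated = [2.0, -2.0]

mild_penalty = p_norm_penalty(mild)            # small
saturated_penalty = p_norm_penalty(saturated)  # much larger
```

During optimization, the gradient of such a penalty would be subtracted from the image update, pulling extreme pixel values back toward zero while leaving moderate values almost unchanged.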

Instead of starting with an existing image, you can also initialize using a random noise image. Here's an example, generated by the script example2.sh which amplifies the inception_4c/output layer:

Keep in mind that the output depends on the random noise used to initialize the image, so you may get a slightly different result if you rerun the script. It should, however, contain similar types of objects.
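The dependence on the initial noise can be sketched with a seeded random number generator. The helper below is hypothetical and uses only the Python standard library; whether cnn-vis exposes a seed option is not stated on this page, so treat this purely as an illustration of why reruns differ and how fixing a seed would make the starting image repeatable.

```python
import random


def noise_image(height, width, seed=None, mean=0.0, std=1.0):
    """Return a height x width grid of Gaussian noise values.

    With seed=None the generator is seeded from system entropy,
    so every call produces a different image.
    """
    rng = random.Random(seed)
    return [[rng.gauss(mean, std) for _ in range(width)] for _ in range(height)]


# Same seed: identical starting images. Different seeds: different images.
first = noise_image(4, 4, seed=0)
second = noise_image(4, 4, seed=0)
other = noise_image(4, 4, seed=1)
```

Because the optimization starts from a different point each time, the exact placement of patterns changes between runs, while the kinds of patterns are determined by the amplified layer.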

As we saw above, choosing different layers to amplify gives rise to different sorts of patterns; here's another example, generated by the script example3.sh:

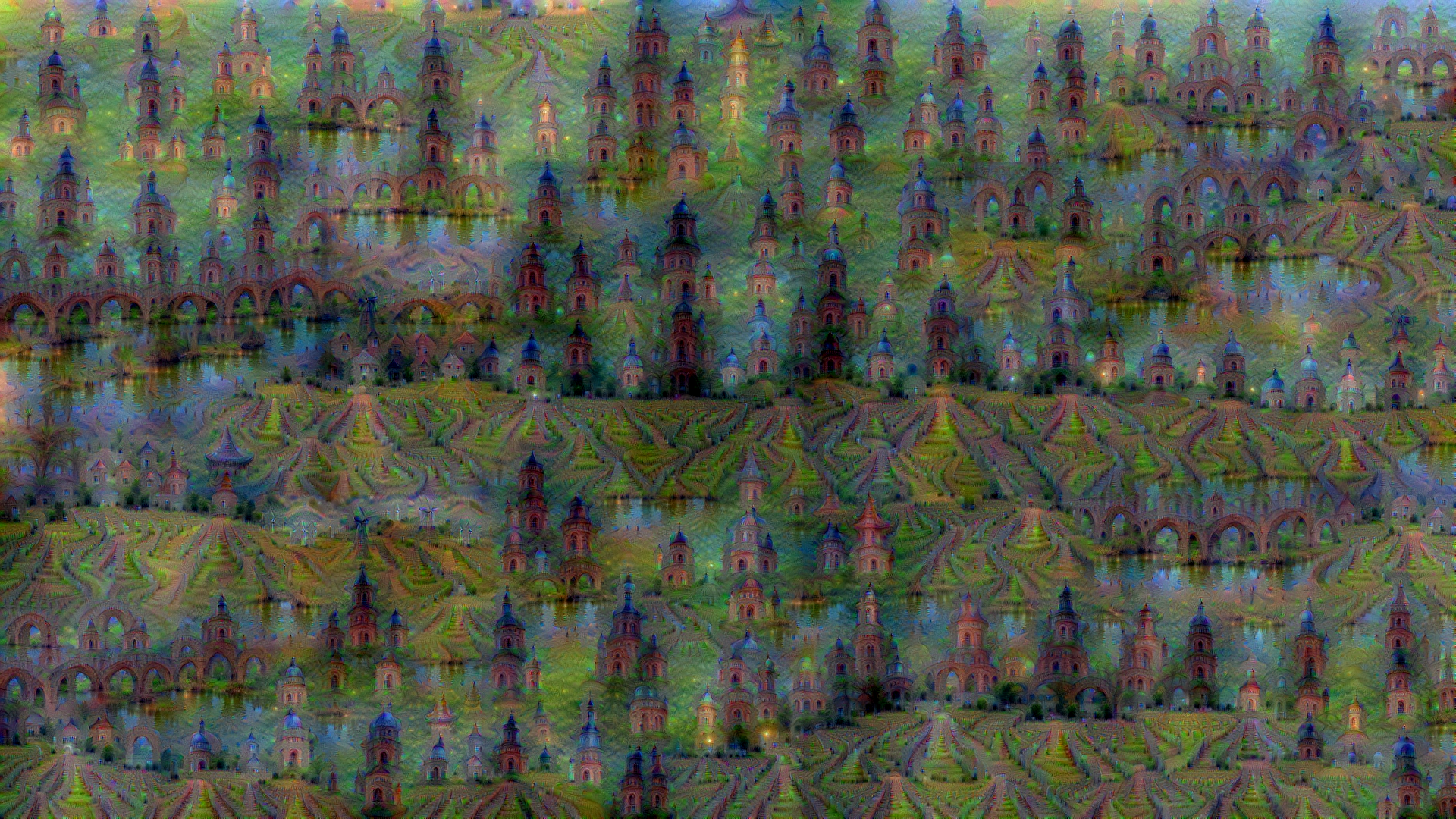

In all of the examples so far, the underlying CNN was trained on the ImageNet dataset. If we use a CNN that was instead trained on the MIT Places dataset, the generated images will tend to contain parts of buildings rather than parts of animals. Here's an example, generated with the script example5.sh, which amplifies the inception_4b/3x3_reduce layer: