
Quarkus Netty broken traces on Kubernetes #2493

Labels: bug (Something isn't working)

Comments


Same with agent version 1.0.0, FYI.


@previousdeveloper Do you have a small example application that demonstrates these problems?


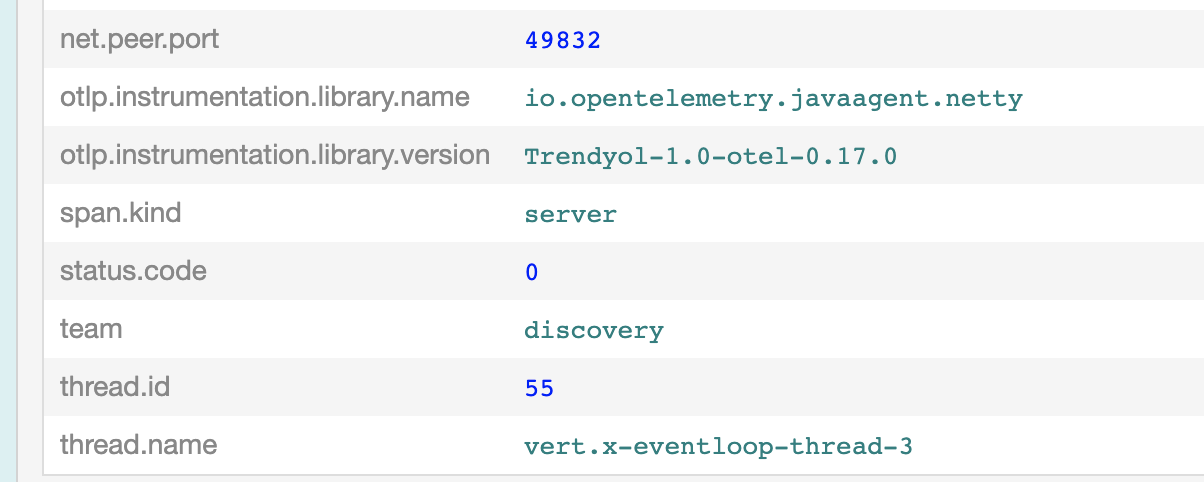

@iNikem I will try to put together an example application. The application span starts with the INTERNAL span kind, which I think is the problem. Example log:

[opentelemetry.auto.trace 2021-03-09 14:00:29:787 +0300] [executor-thread-30] DEBUG io.opentelemetry.javaagent.instrumentation.api.concurrent.RunnableWrapper - Wrapping runnable task io.vertx.core.net.impl.ConnectionBase$$Lambda$526/0000000000000000@8958f290

[opentelemetry.auto.trace 2021-03-09 14:00:29:788 +0300] [vert.x-eventloop-thread-2] INFO io.opentelemetry.exporter.logging.LoggingSpanExporter - 'HTTP GET' : 4a15043e00899721c8b6bba750e6092d b4862821a5c4f338 SERVER [tracer: io.opentelemetry.javaagent.netty-4.1:Trendyol-1.0.0-otel-1.0.0] AttributesMap{data={thread.id=54, http.flavor=1.1, http.url=http://stage-discovery-reco-favorite-service.earth.trendyol.com/favorite/products?culture=tr-TR&limit=1&page=0&userId=25040761, http.status_code=200, thread.name=vert.x-eventloop-thread-2, net.peer.ip=127.0.0.1, http.method=GET, net.peer.port=32940, http.client_ip=10.2.80.240}, capacity=128, totalAddedValues=9}

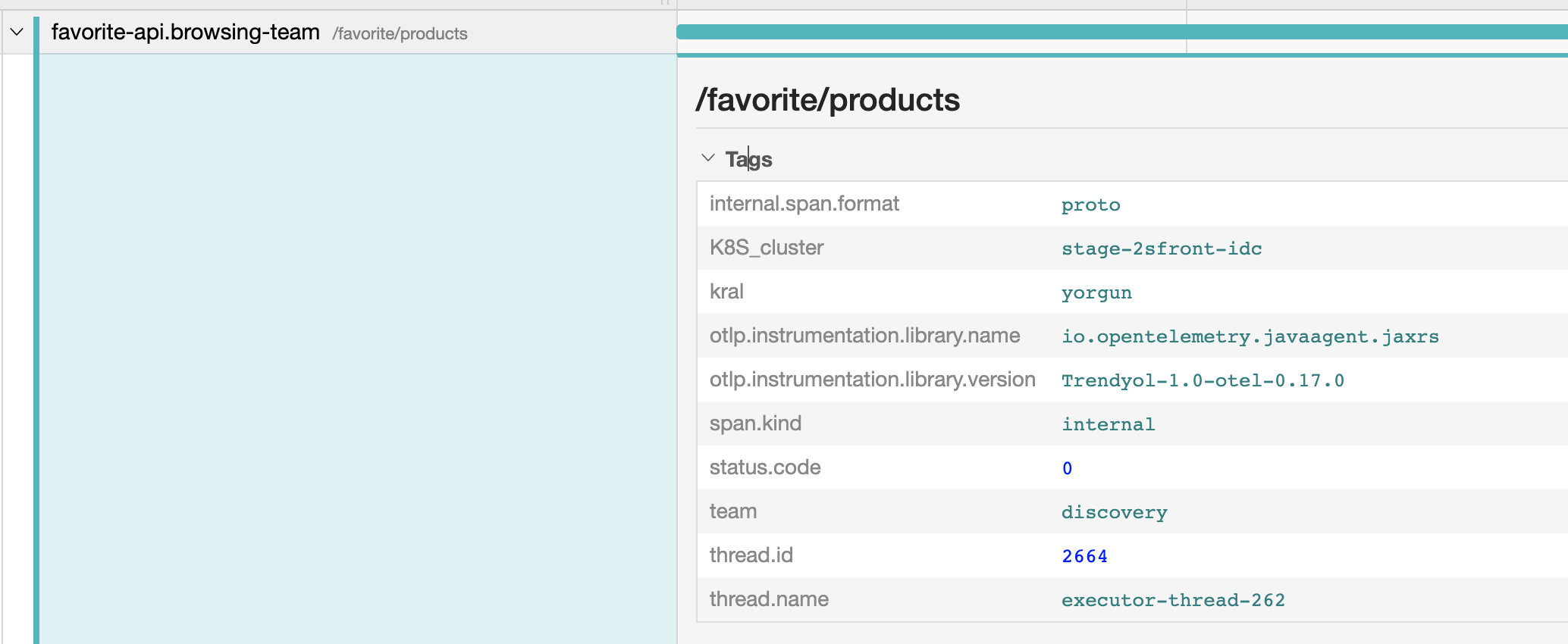

[opentelemetry.auto.trace 2021-03-09 14:00:29:795 +0300] [executor-thread-30] INFO io.opentelemetry.exporter.logging.LoggingSpanExporter - 'PaginatedFavoriteGetter.getUserFavorites' : ee4aec6e8205992cb3f71383124d5016 7f2562b19aafaf63 INTERNAL [tracer: io.opentelemetry.javaagent.external-annotations:Trendyol-1.0.0-otel-1.0.0] AttributesMap{data={thread.id=525, thread.name=executor-thread-30}, capacity=128, totalAddedValues=2}

[opentelemetry.auto.trace 2021-03-09 14:00:29:801 +0300] [executor-thread-35] INFO io.opentelemetry.exporter.logging.LoggingSpanExporter - 'PaginatedFavoriteGetter.getUserFavorites' : e5204c2c7eee5ce4db7d9c33d3c1c7e0 3c7abd68401c2821 INTERNAL [tracer: io.opentelemetry.javaagent.external-annotations:Trendyol-1.0.0-otel-1.0.0] AttributesMap{data={thread.id=530, thread.name=executor-thread-35}, capacity=128, totalAddedValues=2}

[opentelemetry.auto.trace 2021-03-09 14:00:29:802 +0300] [executor-thread-30] INFO io.opentelemetry.exporter.logging.LoggingSpanExporter - 'HTTP GET' : ee4aec6e8205992cb3f71383124d5016 6d0b7f2c30588239 CLIENT [tracer: io.opentelemetry.javaagent.jaxrs-client-2.0-resteasy-2.0:Trendyol-1.0.0-otel-1.0.0] AttributesMap{data={net.transport=IP.TCP, net.peer.name=stageproductdetailapi.trendyol.com, thread.id=525, http.flavor=1.1, http.url=http://stageproductdetailapi.trendyol.com/product-contents/bulk-pdp?filterOverPriceListings=true&culture=tr-TR&contentIds=10998177&isDeliveryDateRequired=false&filterStockOuts=false, http.status_code=200, thread.name=executor-thread-30, http.method=GET}, capacity=128, totalAddedValues=8}

These logs show that the SERVER span (trace ID 4a15043e00899721c8b6bba750e6092d) is in a different trace from the INTERNAL span and its downstream CLIENT span (trace ID ee4aec6e8205992cb3f71383124d5016).
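To check which spans ended up in which trace, a small sketch like the one below groups LoggingSpanExporter lines by trace ID. It assumes the `'<span name>' : <traceId> <spanId> <KIND>` layout seen in the logs above; the class and method names are hypothetical, and the sample lines are abbreviated copies (thread name and span summary only) of the span lines quoted in this issue.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: groups exported spans by trace ID to spot broken traces.
final class TraceGrouper {
    // Matches the "'<span name>' : <traceId> <spanId> <KIND>" part of a LoggingSpanExporter line.
    private static final Pattern SPAN = Pattern.compile(
            "'([^']+)' : ([0-9a-f]{32}) ([0-9a-f]{16}) (SERVER|CLIENT|INTERNAL|PRODUCER|CONSUMER)");

    // Abbreviated copies of the span lines from this issue.
    static final List<String> SAMPLE = Arrays.asList(
            "[vert.x-eventloop-thread-2] 'HTTP GET' : 4a15043e00899721c8b6bba750e6092d b4862821a5c4f338 SERVER",
            "[executor-thread-30] 'PaginatedFavoriteGetter.getUserFavorites' : ee4aec6e8205992cb3f71383124d5016 7f2562b19aafaf63 INTERNAL",
            "[executor-thread-35] 'PaginatedFavoriteGetter.getUserFavorites' : e5204c2c7eee5ce4db7d9c33d3c1c7e0 3c7abd68401c2821 INTERNAL",
            "[executor-thread-30] 'HTTP GET' : ee4aec6e8205992cb3f71383124d5016 6d0b7f2c30588239 CLIENT");

    // Returns trace ID -> list of "KIND name" entries, in first-seen order.
    static Map<String, List<String>> groupByTrace(List<String> lines) {
        Map<String, List<String>> traces = new LinkedHashMap<>();
        for (String line : lines) {
            Matcher m = SPAN.matcher(line);
            if (m.find()) {
                traces.computeIfAbsent(m.group(2), k -> new ArrayList<>())
                      .add(m.group(4) + " " + m.group(1));
            }
        }
        return traces;
    }

    public static void main(String[] args) {
        for (Map.Entry<String, List<String>> e : groupByTrace(SAMPLE).entrySet()) {
            System.out.println(e.getKey() + " -> " + e.getValue());
        }
    }
}
```

On these four lines it finds three traces: the Netty SERVER span alone in 4a15043e00899721c8b6bba750e6092d, the first INTERNAL span together with the RESTEasy CLIENT span in ee4aec6e8205992cb3f71383124d5016, and the second INTERNAL span (from executor-thread-35) in its own trace. For a correctly propagated request, all spans would share one trace ID.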


Describe the bug

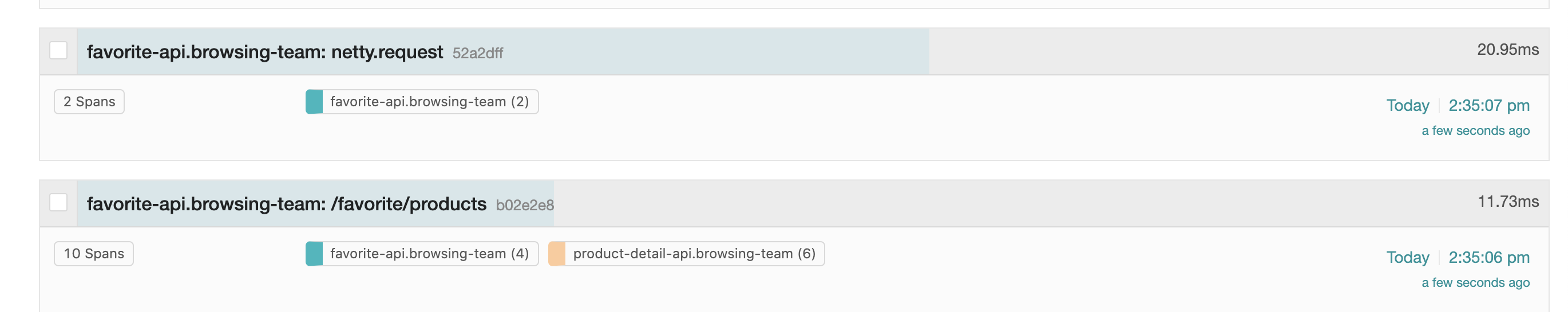

I am integrating OpenTelemetry into an existing microservices architecture that uses libraries such as Kafka, Couchbase, and Netty, and the tracing data has problems. For a single HTTP request, Netty creates two separate traces. I think this is a context propagation problem.
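The suspected context propagation problem can be illustrated without the agent. The sketch below is a stdlib-only simplification, not the agent's code: it uses a hypothetical `ThreadLocal` holder, `CURRENT_TRACE`, to show how a trace ID set on the calling (event-loop) thread is lost when work is submitted to an executor thread, and how capturing the value at submission time and restoring it in the worker keeps both sides in the same trace. This is roughly the idea behind task wrappers such as the `RunnableWrapper` in the debug log quoted above; in the OpenTelemetry API the equivalent is `Context.current().wrap(runnable)`.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical demo: per-thread context is lost across an executor unless the task is wrapped.
final class ContextPropagationDemo {
    // Hypothetical stand-in for the tracing context: the current trace ID per thread.
    static final ThreadLocal<String> CURRENT_TRACE = new ThreadLocal<>();

    // Captures the caller's trace ID at submission time and restores it on the worker thread.
    static Runnable wrap(Runnable task) {
        final String captured = CURRENT_TRACE.get();
        return () -> {
            String previous = CURRENT_TRACE.get();
            CURRENT_TRACE.set(captured);
            try {
                task.run();
            } finally {
                // Put back whatever the pooled worker had before, so the trace does not leak.
                if (previous == null) CURRENT_TRACE.remove(); else CURRENT_TRACE.set(previous);
            }
        };
    }

    // Runs a task on a separate executor thread and returns the trace ID the task observed.
    static String traceSeenByWorker(boolean propagate) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            CURRENT_TRACE.set("trace-from-server-span"); // set on the calling ("event loop") thread
            final String[] seen = new String[1];
            Runnable task = () -> seen[0] = CURRENT_TRACE.get();
            // Future.get() waits for the task and makes its write to seen[0] visible here.
            executor.submit(propagate ? wrap(task) : task).get();
            return seen[0];
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            CURRENT_TRACE.remove();
            executor.shutdown();
        }
    }

    public static void main(String[] args) {
        // Without propagation the worker sees null, so it would start a new, separate trace.
        System.out.println("without propagation: " + traceSeenByWorker(false));
        System.out.println("with propagation:    " + traceSeenByWorker(true));
    }
}
```

Restoring the worker's previous value in `finally` matters because executor threads are pooled: without it, a trace ID could leak into an unrelated task that later runs on the same thread.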

What did you expect to see?

One trace per request.

What did you see instead?

Two separate traces for a single request.

What version are you using?

OpenTelemetry agent version: v0.17.0

Environment

OS: Linux 4.4.0-197

Container platform: Kubernetes

Java version: 1.8.0_265-b01

Additional context
