TensorFlow V2 implementation (see the DMSP-tensorflow page for the V1 implementation, and the DMSP page for the Caffe and MatConvNet implementations). This repository includes a more general restoration formulation with sub-sampling (used, for example, in super-resolution).

Deep Mean-Shift Priors for Image Restoration (project page)

Siavash Arjomand Bigdeli, Meiguang Jin, Paolo Favaro, Matthias Zwicker

Advances in Neural Information Processing Systems (NIPS), 2017

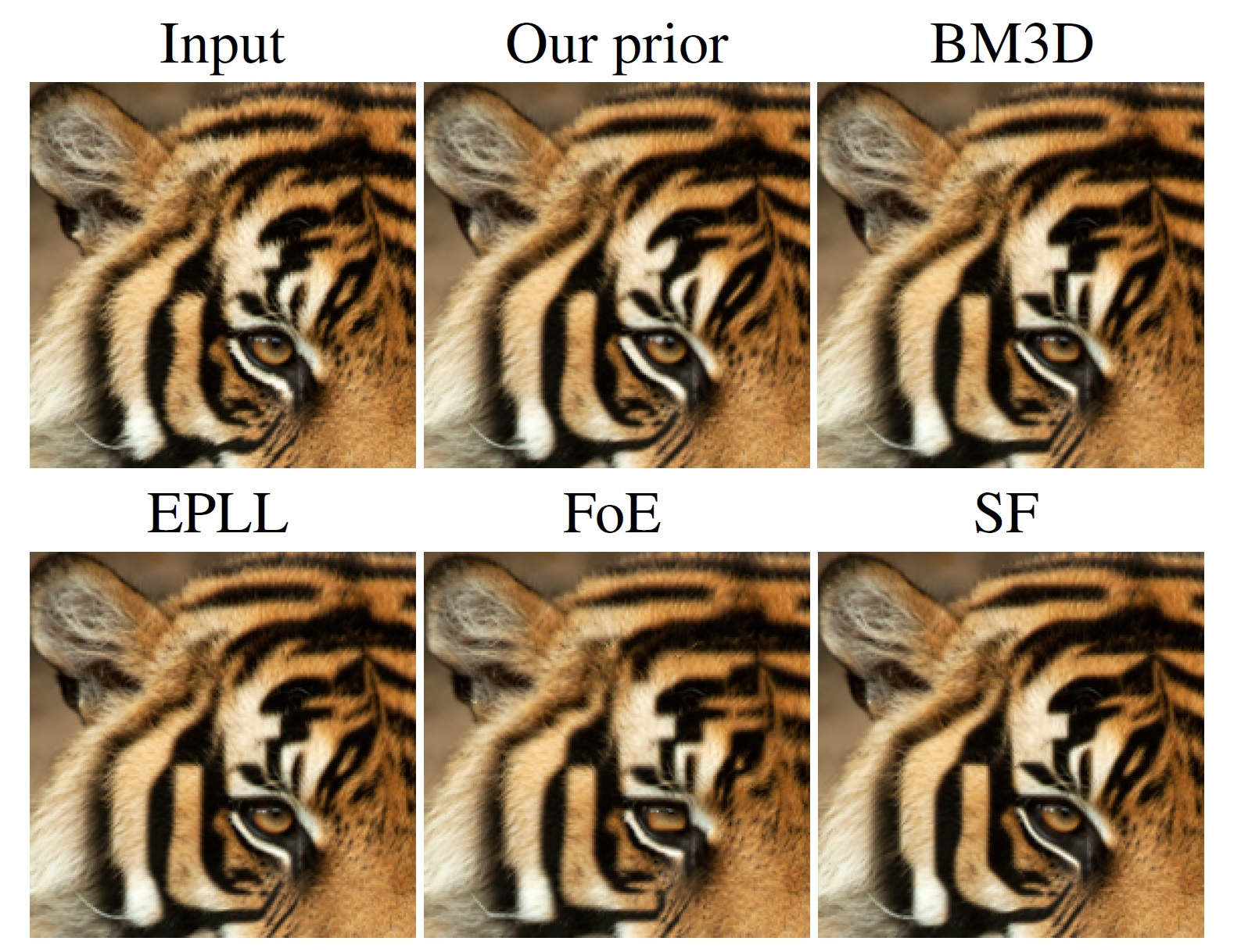

In this paper we introduce a natural image prior that directly represents a Gaussian-smoothed version of the natural image distribution. We include our prior in a formulation of image restoration as a Bayes estimator that also allows us to solve noise-blind image restoration problems. We show that the gradient of our prior corresponds to the mean-shift vector on the natural image distribution. In addition, we learn the mean-shift vector field using denoising autoencoders, and use it in a gradient descent approach to perform Bayes risk minimization. We demonstrate competitive results for noise-blind deblurring, super-resolution, and demosaicing.
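The relation between a denoising autoencoder and the mean-shift vector can be checked in closed form on a toy case. The sketch below is not code from this repository: it assumes a 1D zero-mean Gaussian "image" distribution with standard deviation `s`, for which the optimal denoiser is known analytically, and all function names are hypothetical. Under that assumption the denoiser residual `r(y) - y` equals `sigma**2` times the gradient of the log of the Gaussian-smoothed density, so repeatedly adding the residual is gradient ascent on the smoothed prior.

```python
def optimal_denoiser(y, s, sigma):
    """MMSE denoiser for x ~ N(0, s^2) observed as y = x + N(0, sigma^2)."""
    return y * s**2 / (s**2 + sigma**2)


def smoothed_log_density_grad(y, s, sigma):
    """d/dy log p_sigma(y), where p_sigma = N(0, s^2 + sigma^2) is the smoothed density."""
    return -y / (s**2 + sigma**2)


def mean_shift(y, s, sigma):
    """Denoiser residual r(y) - y, i.e. the mean-shift vector in this toy setting."""
    return optimal_denoiser(y, s, sigma) - y


s, sigma = 2.0, 0.5   # assumed toy values
y = 3.0

ms = mean_shift(y, s, sigma)
grad = sigma**2 * smoothed_log_density_grad(y, s, sigma)

# Gradient ascent on the smoothed log-prior, using the mean shift as the step.
x = y
for _ in range(200):
    x = x + mean_shift(x, s, sigma)
```

Here `ms` and `grad` agree up to floating-point error, and `x` moves toward the mode of the toy prior at zero. In the actual method the analytic denoiser is replaced by a trained DAE and the prior gradient is combined with a data term; see the manuscript for that formulation.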

See the manuscript for details of the method.

The code is written in Python and requires TensorFlow to be installed.

demo_DMSP.ipynb: An example Jupyter notebook for non-blind and noise-blind image restoration.

DMSPRestore.py: Implements the MAP function for non-blind image restoration. Use Python's help function to learn about the input and output arguments.

evaluation.ipynb: A Jupyter notebook for running the image super-resolution evaluations (see below).

DAE/: Contains the DAE model (tf.saved_model).

data: Contains sample image(s).

The super-resolution benchmark is taken from https://github.com/zsyOAOA/VDNet: average PSNR/SSIM results of the compared methods under different combinations of scale factors, blur kernels, and noise levels on Set14. The best results are highlighted in bold. Results highlighted in gray indicate an unfair comparison due to mismatched degradation assumptions. Code for reproducing the results can be found in evaluation.ipynb.
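To make the evaluation setting concrete, here is a minimal NumPy sketch of the kind of degradation the benchmark varies (blur kernel, scale factor, noise level), together with a PSNR function. It is an illustration under stated assumptions, not the code in evaluation.ipynb: the function names `degrade` and `psnr` are hypothetical, the blur uses circular boundary conditions, the image is a single-channel array in [0, 1], and the benchmark's exact kernels, color handling, and border cropping are not reproduced. SSIM is omitted.

```python
import numpy as np


def degrade(image, kernel, scale, noise_std, rng):
    """Blur with `kernel` (circular convolution), keep every `scale`-th pixel, add Gaussian noise."""
    kh, kw = kernel.shape
    padded = np.zeros_like(image, dtype=np.float64)
    padded[:kh, :kw] = kernel
    # Move the kernel center to the origin so the blur does not shift the image.
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    blurred = np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded)))
    low_res = blurred[::scale, ::scale]
    return low_res + rng.normal(0.0, noise_std, size=low_res.shape)


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB for images with maximum value `peak`."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(peak**2 / mse)


rng = np.random.default_rng(0)
image = rng.random((32, 32))            # stand-in for a grayscale test image
kernel = np.full((3, 3), 1.0 / 9.0)     # assumed 3x3 box blur
observed = degrade(image, kernel, scale=2, noise_std=0.01, rng=rng)
```

With `scale=2`, `observed` has half the resolution of `image` along each axis. A restoration method would take `observed`, the kernel, and the scale as input, and its output would be compared against `image` with `psnr`.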

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.