
Low Delivery Rate for Rabbit #644

Comments

You are not comparing apples with apples; there is no qos (prefetch limit) in your raw test. There is some overhead when using

@garyrussell thanks for reaching out. I tested with prefetch set to 1, 100, and 1000, and always got the same results.

That is with Spring; what about the "official client code" (

That said, it is odd that you got the "same" results; qos=1 (the default) is definitely much slower because it needs a round trip to the broker for each message. We are increasing the default in 2.0 (AMQP-748); it is currently 1. If you see no difference between 1 and 100, it sounds like the configuration is not being applied for some reason. Again, if you can share your project, I can take a look.

BTW, we use the same client under the covers.
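To illustrate why a prefetch of 1 caps throughput, here is a minimal sketch of a sliding-window bound: if at most `prefetch` unacknowledged messages can be in flight and each acknowledgement needs one round trip, the rate cannot exceed `prefetch / roundTrip`. The class name, method name, and the 1 ms round trip are hypothetical and chosen only for illustration; the model ignores consumer processing time and broker limits, so real rates will be lower.

```java
public class PrefetchBound {

    /**
     * Upper bound on deliveries per second for a single consumer under a
     * simplified model: at most `prefetch` unacked messages are in flight
     * and each ack takes one broker round trip. A prefetch of 0 (no limit
     * in AMQP) is not modelled here.
     */
    static double maxRatePerSecond(int prefetch, double roundTripMillis) {
        if (prefetch <= 0 || roundTripMillis <= 0) {
            throw new IllegalArgumentException("prefetch and roundTripMillis must be positive");
        }
        return prefetch * 1000.0 / roundTripMillis;
    }

    public static void main(String[] args) {
        double roundTripMillis = 1.0; // hypothetical value, not measured
        for (int prefetch : new int[] {1, 100, 1000}) {
            System.out.printf("prefetch=%d -> at most %.0f msgs/s%n",
                    prefetch, maxRatePerSecond(prefetch, roundTripMillis));
        }
    }
}
```

Under this model, identical measured rates at prefetch 1 and prefetch 100 would suggest that the limit is somewhere other than the prefetch window, or that the setting is not taking effect.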

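I get 22,900/second with this app...

```java
import java.util.concurrent.CountDownLatch;

import org.springframework.amqp.core.Message;
import org.springframework.amqp.core.Queue;
import org.springframework.amqp.rabbit.annotation.RabbitListener;
import org.springframework.amqp.rabbit.core.RabbitTemplate;
import org.springframework.amqp.rabbit.listener.RabbitListenerEndpointRegistry;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.CommandLineRunner;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.context.annotation.Bean;
import org.springframework.util.StopWatch;

@SpringBootApplication
public class Gh644Application implements CommandLineRunner {

    public static void main(String[] args) {
        SpringApplication.run(Gh644Application.class, args).close();
    }

    @Autowired
    private RabbitTemplate template;

    @Autowired
    private RabbitListenerEndpointRegistry registry;

    private final CountDownLatch latch = new CountDownLatch(1_000_000);

    @Override
    public void run(String... arg0) throws Exception {
        // Fill the queue first so that only consumption is timed.
        for (int i = 0; i < 1_000_000; i++) {
            template.convertAndSend("perf", "foo");
        }
        StopWatch watch = new StopWatch();
        watch.start();
        // Start the listener containers (presumably auto-startup is disabled in the configuration).
        this.registry.start();
        this.latch.await();
        watch.stop();
        // 1,000,000 messages * 1000 ms/s divided by elapsed ms = messages per second.
        System.out.println(watch.getTotalTimeMillis() + " rate: " + 1_000_000_000.0 / watch.getTotalTimeMillis());
    }

    // Non-durable, non-exclusive, auto-delete queue.
    @Bean
    public Queue perf() {
        return new Queue("perf", false, false, true);
    }

    @RabbitListener(queues = "perf")
    public void listen(Message message) {
        this.latch.countDown();
    }

}
```

and

When I remove the

When I used the native consumer, I got very similar results (17k/sec).

...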

```java
// this.registry.start();
// The types below come from the native client (com.rabbitmq.client).
ConnectionFactory cf = new ConnectionFactory();
Connection conn = cf.newConnection();
Channel channel = conn.createChannel();
channel.basicQos(100); // prefetch limit of 100 unacked messages
channel.basicConsume("perf", new DefaultConsumer(channel) {

    @Override
    public void handleDelivery(String consumerTag, Envelope envelope, BasicProperties properties, byte[] body)
            throws IOException {
        latch.countDown();
        // Acknowledge each message individually (multiple = false).
        channel.basicAck(envelope.getDeliveryTag(), false);
    }

});
this.latch.await();
watch.stop();
System.out.println(watch.getTotalTimeMillis() + " rate: " + 1_000_000_000.0 / watch.getTotalTimeMillis());
channel.close();
conn.close();
```

So there must be something else going on in your code.
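For readers checking the numbers, the `1_000_000_000.0 / watch.getTotalTimeMillis()` expression in both snippets is just the message count (1,000,000) multiplied by 1000 ms/s and divided by the elapsed milliseconds. A minimal standalone sketch of that arithmetic follows; the class and method names are hypothetical, and the elapsed time is an illustrative value rather than a measurement from this thread.

```java
public class DeliveryRate {

    /** Converts a message count and an elapsed time in milliseconds to messages per second. */
    static double messagesPerSecond(long messages, long elapsedMillis) {
        if (elapsedMillis <= 0) {
            throw new IllegalArgumentException("elapsedMillis must be positive");
        }
        return messages * 1000.0 / elapsedMillis;
    }

    public static void main(String[] args) {
        long messages = 1_000_000;   // same count as the latch in the snippets above
        long elapsedMillis = 50_000; // hypothetical elapsed time
        System.out.println(messagesPerSecond(messages, elapsedMillis) + " msgs/s");
    }
}
```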

@garyrussell thanks for the update! Let me check that in a couple of days, since I am away from the keyboard at the moment ;)

Closing for now due to no response; please reopen if you still have an issue.

I was testing the performance of RabbitMQ clients in Java. With Spring AMQP I got a delivery rate of 800 msgs/s, testing with one consumer on a single node.

At the same time, I got up to 6000 msgs/s with the official RabbitMQ client.

Spring AMQP code: https://gist.github.com/dmydlarz/635c961ceca42befba89161d3399a75d

Rabbit official client code: https://gist.github.com/dmydlarz/f2ea5f4fe7ea88cfafb3862353bdbd4e

Settings for Spring AMQP were:

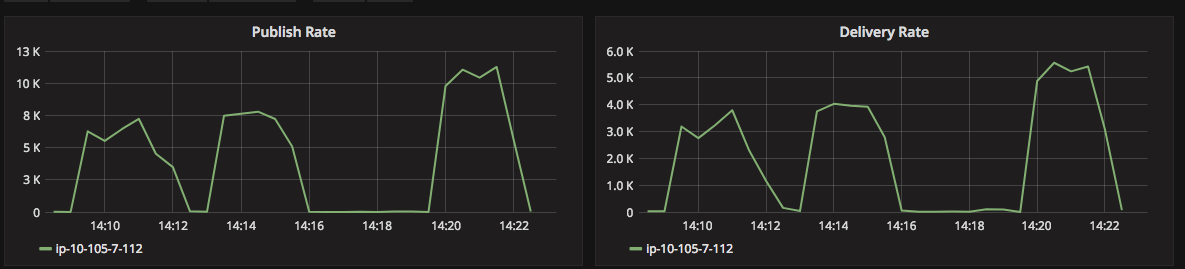

This is the delivery rate I got with Spring:

And this is what I got with the official client:

The @softwaremill team got numbers similar to my Spring AMQP results in the tests described in their blog post: https://softwaremill.com/mqperf/#rabbitmq. They got ~680 msgs/second.

The question is: why can't Spring AMQP reach the same numbers as the official client?

What is more, I got a rate of ~6k msgs/s with the official RabbitMQ Java perf tool, so it should be achievable.

It was run as:

(1024B payload, 1 producer, 1 consumer, 180 seconds, persistent queue)

More on that: https://www.rabbitmq.com/blog/2012/04/17/rabbitmq-performance-measurements-part-1/
