
computational cost of training per Epoch #23


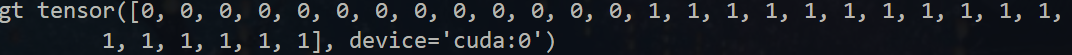

I trained on my own dataset (200 training + 30 validation slides). Each slide was cut into 500~1500 tiles, and each tile was then embedded into a 2048-dimensional vector. Training costs about 1 min per epoch. How many slides are in your dataset (train and val), and how long does an epoch take for you? By the way, my results were pretty poor and my training procedure was not stable; I don't know what might be wrong.

Because we set the batch size to one, the number of training steps in one epoch equals the number of training slides. And because we preprocess all the WSIs into features, training is very quick in our tests on an RTX 3090: roughly 0.5 min per epoch with 400 slides.
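To compare the two timings quoted in this thread on equal footing, it can help to convert them to seconds per training step. The snippet below is only a rough sketch: the function name `seconds_per_step` is hypothetical, and the comparison ignores differences in hardware, tiles per slide, and data loading.

```python
import math


def seconds_per_step(epoch_seconds: float, num_train_slides: int, batch_size: int = 1) -> float:
    """Average wall-clock time per optimizer step (hypothetical helper).

    With batch_size=1, the number of steps per epoch equals the number
    of training slides, as described in the reply above.
    """
    steps_per_epoch = math.ceil(num_train_slides / batch_size)
    return epoch_seconds / steps_per_epoch


# Figures quoted in this thread.
reference = seconds_per_step(epoch_seconds=30.0, num_train_slides=400)  # ~0.5 min, 400 slides
reported = seconds_per_step(epoch_seconds=60.0, num_train_slides=200)   # ~1 min, 200 slides

print(f"reference: {reference:.3f} s/step")
print(f"reported:  {reported:.3f} s/step")
print(f"slowdown:  {reported / reference:.1f}x per step")
```

A per-step gap like this usually points at per-slide costs (number of tiles, feature dimension, disk reads) rather than at the number of slides.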

1. Each slide has 500~1500 tiles; did you process the WSIs at 20x magnification or higher?
2. You can also test the performance of other MIL methods on your dataset, such as ABMIL or CLAM, in case the task is challenging or the dataset is limited.

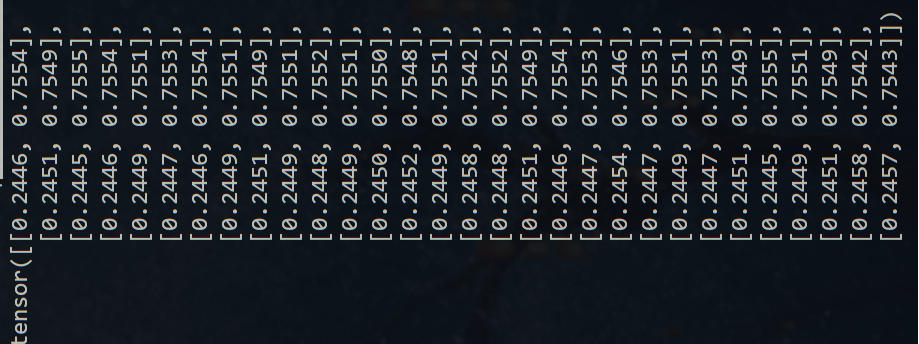

This result seems strange; it appears to show that the model is overfitting. Because the DTFD model is built on a smaller model, ABMIL, you might experiment with running the Transformer aggregation at a lower dimension, for example reducing the features from 2048 to 128 or 256. Also, have you used our PyTorch Lightning framework and the Ranger optimizer?
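As a rough illustration of why a lower aggregation dimension shrinks the model, the sketch below counts weights for one self-attention layer. It assumes a standard attention layer with four `dim x dim` projections (query, key, value, output) and a single linear layer that projects the 2048-dimensional features down first; the actual model in this repository may be structured differently, and both helper names are hypothetical.

```python
def attention_weights(dim: int) -> int:
    """Weights in one standard self-attention layer (assumption):
    query, key, value and output projections, each dim x dim, biases ignored."""
    return 4 * dim * dim


def projection_weights(in_dim: int, out_dim: int) -> int:
    """Weights in a single linear layer that reduces the feature dimension."""
    return in_dim * out_dim


FEATURE_DIM = 2048  # tile embedding size mentioned in this thread

full = attention_weights(FEATURE_DIM)
print(f"attention at {FEATURE_DIM} dims: {full:,} weights")

reduced_counts = {}
for dim in (128, 256):
    # Linear projection 2048 -> dim, followed by attention at the reduced size.
    reduced_counts[dim] = projection_weights(FEATURE_DIM, dim) + attention_weights(dim)
    print(f"project to {dim} + attention: {reduced_counts[dim]:,} weights")
```

With only 200 training slides, a layer that is an order of magnitude smaller has far less capacity to memorize the training set, which is the motivation behind the suggestion above.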

Nice work. Do you have any recommendations for preprocessing the WSIs into features (since the quality of the features may directly influence the classification)?

I would like to ask how long the training takes per epoch. I used your model and modified the PPGE module by adding an FFT to reduce the dimension of the convolution operation. The only issue I noticed is that the Trainer takes a long time to finish a single epoch. Is that related to the shape of the image (2154, 1024), or did I miss something?
