-

-

Notifications

You must be signed in to change notification settings - Fork 3.5k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Yolo v3 take a lot of time to train on custom data #1458

Comments

|

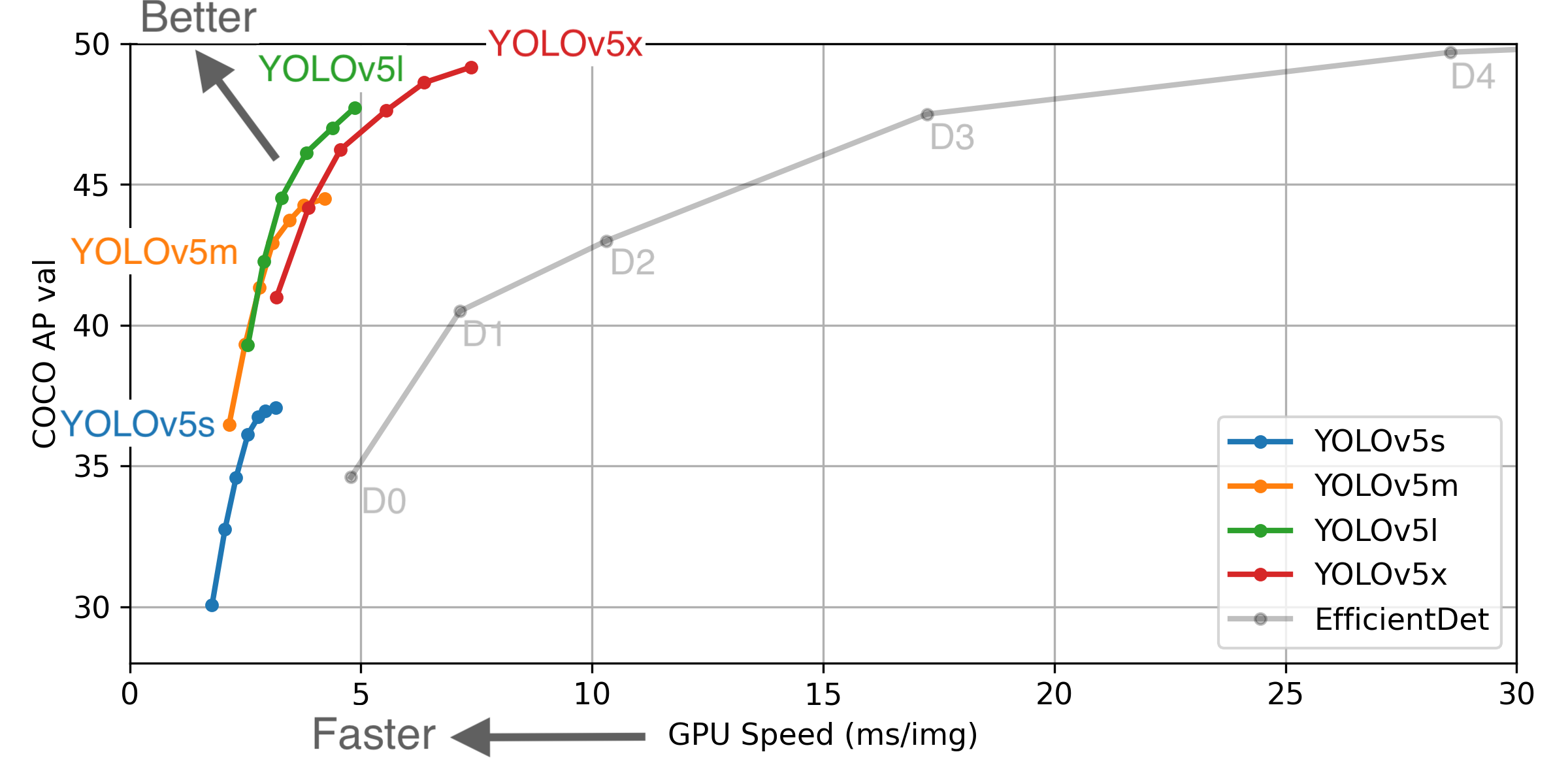

@FlorianRuen Ultralytics has open-sourced YOLOv5 at https://github.com/ultralytics/yolov5, featuring faster, lighter and more accurate object detection. YOLOv5 is recommended for all new projects.

** GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS. EfficientDet data from [google/automl](https://github.com/google/automl) at batch size 8.

Pretrained Checkpoints

** APtest denotes COCO test-dev2017 server results, all other AP results in the table denote val2017 accuracy. For more information and to get started with YOLOv5 please visit https://github.com/ultralytics/yolov5. Thank you! |

|

Thanks for the link @glenn-jocher, I'm currently running a trainning using Yolo v5 for the same dataset Thanks for your help |

|

@glenn-jocher To make a quick update on this topic, the training make around 10 epochs in 1h and 10 minutes |

|

@FlorianRuen sure, sounds fine. |

|

@glenn-jocher Do you think the time taked is normal on this kind of machine ? For now, it reach epoch 78 in 9 hours and 48 minutes, so if the time for an epoch is stable, it should take around 40 hours for 300 epochs Here is the charts from tensorboard (epoch 78 in 9h 48 min) => https://ibb.co/rw43zm1 Maybe I need to take a bigger machine (maybe with 16go video memory) to get it done faster (2x faster if the performances is x2 ?) Thanks for your help |

|

@FlorianRuen this is not a question for me, just compare to publicly available environments like google colab. |

|

@glenn-jocher I will try to search again, but any results I found run on only 3 epochs for COCO dataset on only 8 or 128 images, so the epochs is very fast in this case (I have around 700 images per epochs on my side, so if we make a comparation with this, on the public results 8 images in 9 seconds should be around 10 minutes for an epoch) But if we use the results on you page, that said training on full COCO dataset:

As COCO as 118k images for training and 5K for validation, my training is very low on just 12k images (even if I use 8 gio GPU instead of 16 gio) |

|

Hey @FlorianRuen , I am facing the same problem with YOLOV3. Did you find any solutions yet? Thanks |

|

👋 Hello! Thanks for asking about training speed issues. YOLOv5 🚀 can be trained on CPU (slowest), single-GPU, or multi-GPU (fastest). If you would like to increase your training speed some options are:

Good luck 🍀 and let us know if you have any other questions! |

❔Question

Hello everyone,

I'm using the code from this repo to train my model on images (around 12k images, labelled using labelbox in correct format), and there is around 17 classes.

I'm training my model on AWS EC2 instance (instance type is g3s.xlarge with Tesla M60 GPU and almost 8 gio video memory), but the training take a lot of time, and it's very hard to find why.

I'm explaining: I'm trying to make 500 epochs, and one epochs take around 25-30 minuts on this kind of instance. On my side, I think it's very long (my model isn't very big to take this time to train)

Hyperparameter was default one, I'm using batch size = 4 (> 4 look like to cause CUDA Out of Memore error), my test size is 20% of my 12k images.

What do you think about this ? Is it normal or to long ? If it's very long, any way to find why ?

Don't hesitate if I miss some data that can help

Kind regards,

Florian

The text was updated successfully, but these errors were encountered: