
feat: improved cold start using deps scanner #8869


Our conclusions from the last team meeting:

After this PR: Same findings:


It took me a while to find the race condition that was causing the errors. I think some of the errors we've seen lately may be related to the SSR dev optimizer running before the scanner (or when the scanner was not run at all). Later, we need to find a way to implement the preAlias plugin without the scanner: right now, the plugin only works correctly if the scanner finds an aliased and optimized dependency. I think it is the only place left where the scanner needs to be awaited. For the moment, the PR implements guards that start the optimizers only after the scanner is done. I'll try to modify the preAlias plugin after v3 is out.
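A minimal sketch of the kind of guard described above, assuming the scanner exposes a single promise that settles with the list of scanned deps. `createScanGuard`, `startOptimizer` and the `ScanResult` shape are hypothetical names for illustration, not Vite's internal API; the point is only that an optimizer requested early (for example the SSR one) waits for the scan result instead of racing it.

```ts
// Hypothetical shape of what the scanner produces.
interface ScanResult {
  deps: string[];
}

// Creates a promise that every optimizer awaits, plus the function the
// scanner calls once it is done. Names are illustrative only.
function createScanGuard() {
  let resolveScan!: (result: ScanResult) => void;
  const scanDone = new Promise<ScanResult>((resolve) => {
    resolveScan = resolve;
  });
  return { scanDone, resolveScan };
}

// An optimizer (client or SSR) that does not start before the scan finishes.
async function startOptimizer(
  name: string,
  scanDone: Promise<ScanResult>,
  log: string[],
): Promise<void> {
  const { deps } = await scanDone;
  log.push(`${name} optimizer started with ${deps.length} scanned deps`);
}

const log: string[] = [];
const guard = createScanGuard();

// The SSR optimizer is requested before the scanner has finished...
const ssrStarted = startOptimizer("ssr", guard.scanDone, log);

// ...so its log entry can only appear after the scanner publishes its result.
log.push("scanner finished");
guard.resolveScan({ deps: ["vue", "lodash-es"] });
```

Using one shared promise means every caller, however early, observes the same scan result.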


@sapphi-red @bluwy I'm using this PR to check the CI flakiness, since it becomes more evident after enabling the scanner again.

This explains why the CSS tests were among the most frequent to fail, and why the sourcemap tests also failed regularly. I wasn't checking this myself, so it is something to be aware of when we review PRs in the future. This commit in the PR removes these: 6c72736. I'm still fixing other things, so failures are expected.

@sapphi-red I see why you did the

@patak-dev I don't completely grasp the situation, but maybe using inline snapshots would help? (It makes the test file long and messy, so it is not a great choice though...)

@sapphi-red the problem is that vitest is deleting the snapshots if we call

@bluwy I tried to implement auto-exclude of explicit external deps here: You can see the tests in that commit working well. But VitePress failed to build with it. Vue is setting itself as

@bholmesdev this may also affect Astro, as I think you were counting on having

So if someone has an issue with a

```ts
optimizeDeps: ssr => {
  if (ssr) {
    return {
      include: [ /* ... */ ],
      exclude: [ /* ... */ ]
    }
  }
}
```

Or we could have a function like

What do you think? I would implement this in another PR; it is also a feature we could add later.

Did another run of vite-ecosystem-ci and the svelte tests are still failing with the same error: https://github.com/vitejs/vite-ecosystem-ci/runs/7171919769?check_suite_focus=true

Maybe optimizing during SSR shouldn't be shared at all? And if we don't get the functional form in `optimizeDeps`, does that mean it only applies to the browser?
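The function form proposed above can be sketched as a small resolver. This is an illustration under stated assumptions: Vite's `optimizeDeps` option is an object, and `OptimizeDepsFn` and `resolveOptimizeDeps` are hypothetical names for the proposal, not an existing API. Dependency names are placeholders.

```ts
// Hypothetical types for the proposal; Vite's actual option is an object.
interface DepOptimizationOptions {
  include?: string[];
  exclude?: string[];
}

type OptimizeDepsFn = (ssr: boolean) => DepOptimizationOptions | undefined;

// Resolves either form to concrete lists for one target.
function resolveOptimizeDeps(
  option: DepOptimizationOptions | OptimizeDepsFn | undefined,
  ssr: boolean,
): { include: string[]; exclude: string[] } {
  // A function can return different lists per target; an object applies to both.
  const resolved = typeof option === "function" ? option(ssr) : option;
  return {
    include: resolved?.include ?? [],
    exclude: resolved?.exclude ?? [],
  };
}

// Mirrors the proposal: only customize the SSR optimizer (placeholder names).
const optimizeDeps: OptimizeDepsFn = (ssr) =>
  ssr ? { include: ["some-ssr-dep"], exclude: ["some-linked-dep"] } : undefined;
```

The sketch also makes the concern in the reply concrete: any code that reads `config.optimizeDeps.include` directly would receive a function instead of an object.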


The function form could cause issues in the ecosystem, as a lot of plugins expect an object there. I think it would be better to keep things as objects. We could do:

```ts
optimizeDeps: {
  // ...
},
ssr: {
  optimizeDeps: {
    // ...
  },
  // ...
}
```

That I think is better compared to

Since we are discussing this API, @DylanPiercey proposed a few days ago having something similar using

```ts
optimizeDeps: {
  include: ['stuff included in all targets'],
  exclude: ['stuff excluded in all targets'],
  overrides: {
    node: {
      include: ['only included for node']
    },
    webworker: {},
    browser: {}
  }
}
```

I think that if we want something like this, it is already better to use multiple config files. We need the entry in
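One way the per-target `overrides` idea could be resolved, sketched under the assumption that target-specific lists are appended to the shared ones. `OptimizeDepsWithOverrides` and `resolveForTarget` are hypothetical; `overrides` was only a proposal in this discussion, not a Vite option.

```ts
type Target = "browser" | "node" | "webworker";

interface DepsLists {
  include?: string[];
  exclude?: string[];
}

// Hypothetical option shape from the proposal.
interface OptimizeDepsWithOverrides extends DepsLists {
  overrides?: Partial<Record<Target, DepsLists>>;
}

// Appends target-specific entries to the shared lists, dropping duplicates.
function resolveForTarget(
  options: OptimizeDepsWithOverrides,
  target: Target,
): { include: string[]; exclude: string[] } {
  const override: DepsLists = options.overrides?.[target] ?? {};
  const merge = (base: string[] = [], extra: string[] = []): string[] =>
    Array.from(new Set([...base, ...extra]));
  return {
    include: merge(options.include, override.include),
    exclude: merge(options.exclude, override.exclude),
  };
}

const optimizeDeps: OptimizeDepsWithOverrides = {
  include: ["stuff included in all targets"],
  exclude: ["stuff excluded in all targets"],
  overrides: {
    node: { include: ["only included for node"] },
    webworker: {},
    browser: {},
  },
};
```

Appending rather than replacing keeps the shared entries in every target; a real design would also have to decide what happens when a dep is included for one target but excluded globally.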


We discussed with @bluwy that we should merge this PR so we can continue building on top of it. There is an issue in vite-plugin-svelte with this, but it looks like it is on the plugin side, and it may be resolved once we correct the noExternal/optimizeDeps logic in a PR I'll send next.


```ts
// Add deps found by the scanner to the discovered deps while crawling
for (const dep of scanDeps) {
  if (!crawlDeps.includes(dep)) {
    // ...
  }
}
```


Excuse me, why would a dependency found by the scanner be missing from the dependencies discovered while crawling?


This line checks that, when we crawl for dependencies, those that are in `scanDeps` (already optimized) aren't added as missing imports, since they're already optimized.


There is one point that I don't quite understand.

Dependencies found during the scan phase are stored in `metadata.discovered`, and then `runOptimizeDeps` generates the resulting `metadata.optimized` (scanDeps). In the crawl phase, `crawlDeps` should contain all the dependencies from the scan phase (`scanDeps`). So I think this conditional will never be hit, right?


Sorry for the late reply. I think it's a bit out of my territory now, since I haven't been following this part of the code. Maybe @patak-dev knows more about this. Also, are you suggesting a perf improvement, or reporting a bug?


With the current code, it could still be hit, but I think you have a point, @Veath. We should move

```ts
const crawlDeps = Object.keys(metadata.discovered)
```

after the await of the scanner

```ts
const result = await postScanOptimizationResult
```

and then it should never be hit. PR welcome if you would like to tackle that.
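The effect of moving the `crawlDeps` read can be reproduced with simplified stand-ins, assuming (as described earlier in this thread) that the scanner's deps end up in `metadata.discovered` when it completes. `Metadata`, `startScan` and `missingAfterCrawl` are hypothetical; only the ordering mirrors the snippets, not Vite's actual optimizer code.

```ts
// Simplified stand-in for the optimizer metadata.
interface Metadata {
  discovered: Record<string, { src: string }>;
}

// Stand-in scanner: resolves asynchronously and registers what it found.
function startScan(metadata: Metadata, found: string[]): Promise<string[]> {
  return Promise.resolve().then(() => {
    for (const dep of found) {
      metadata.discovered[dep] = { src: `/node_modules/${dep}/index.js` };
    }
    return found;
  });
}

// Returns the scanned deps the loop would treat as missing, depending on
// whether crawlDeps is read before or after awaiting the scanner.
async function missingAfterCrawl(readBeforeAwait: boolean): Promise<string[]> {
  const metadata: Metadata = {
    discovered: { "crawled-dep": { src: "/node_modules/crawled-dep/index.js" } },
  };
  const scan = startScan(metadata, ["foo", "bar"]);

  // Current order: snapshot taken while the scanner may still be running.
  let crawlDeps: string[] = readBeforeAwait ? Object.keys(metadata.discovered) : [];
  const scanDeps = await scan;
  // Proposed order: snapshot taken after the scanner has finished.
  if (!readBeforeAwait) crawlDeps = Object.keys(metadata.discovered);

  return scanDeps.filter((dep) => !crawlDeps.includes(dep));
}
```

With `readBeforeAwait` set to `true`, `foo` and `bar` come back as missing; with `false`, the list is empty, which is why the loop should never be hit after the move.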


I think this part of the code can be deleted.

`vite/packages/vite/src/node/optimizer/optimizer.ts`, lines 608 to 612 in 881050d:

```ts
for (const dep of scanDeps) {
  if (!crawlDeps.includes(dep)) {
    addMissingDep(dep, result.metadata.optimized[dep].src!)
  }
}
```

I understand it this way: the scan phase found the dependencies foo and bar. A new dependency C is found during the crawling phase, so at the end of crawling we should have scanDeps = [foo, bar] and crawlDeps = [foo, bar, C].

The precondition for executing this part of the code is scanDeps = [foo, bar] and crawlDeps = [C]. Can that situation ever occur? @patak-dev

Fix #8867

Description

See comment #8869 (comment)

Initial exploration of diff strategies

Original intent of the PR:

Adds a new `optimizeDeps.devStrategy` option to allow users to define the cold start strategy.

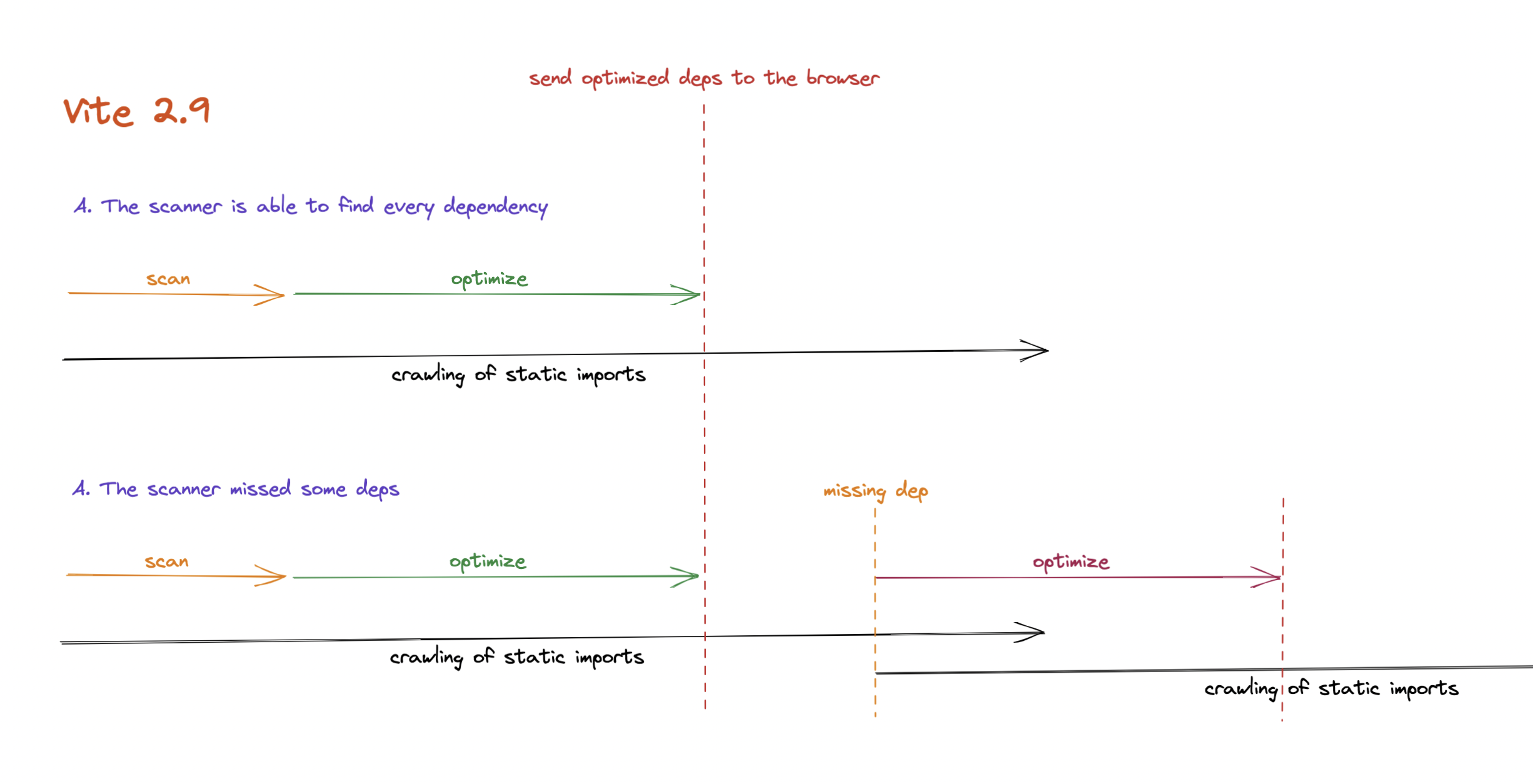

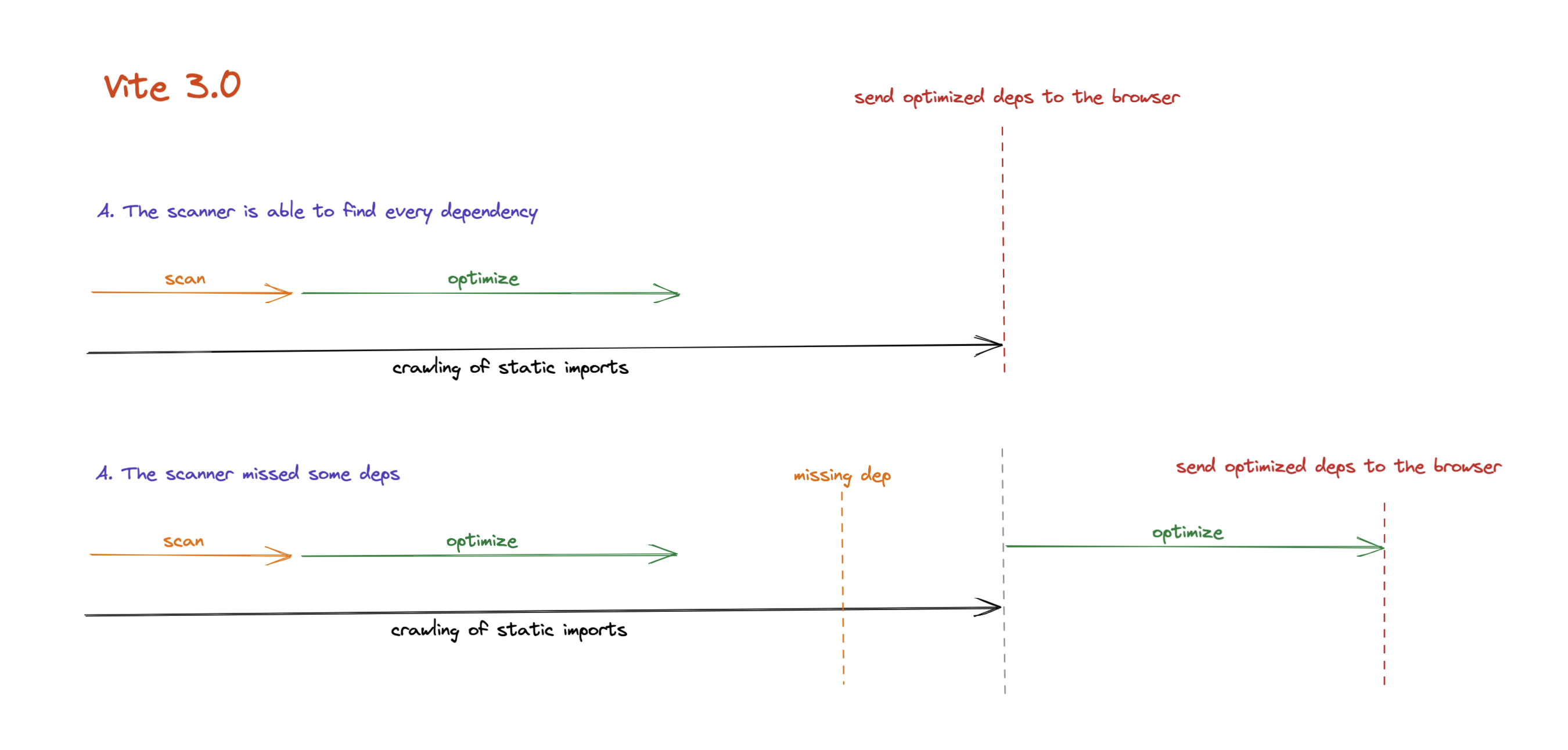

- `'pre-scan'`: pre-scan user code with esbuild to find the first batch of dependencies to optimize. This is the v2.9 scheme (this option replaces `legacy.devDepsScanner`, which is removed in this PR).
- `'lazy'`: only static imports are crawled, leading to the fastest cold start experience, with the tradeoff of a possible full page reload when navigating to dynamic routes. This is the current default.
- `'eager'`: both static and dynamic imports are processed on cold start, completely removing the need for full page reloads at the expense of a slower cold start.

And there is a new strategy that I think should be the default for non-MPAs:

- `'dynamic-scan'`: delay optimization until static imports are crawled, then use esbuild to scan the dynamic import entries found in the source code.

Edit: after some discussions, I think that if we have to use the scanner, the best option would be a new mode (to be implemented):

- `'scan'`: perform scanning in the background, then, once the server is idle, use the scanned deps list to complete what was found normally. It would be like `'lazy'`, completed with the scanned deps.

Data point for a SPA (57 dynamic routes)
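The strategies listed above differ mainly in which deps are known before the first optimization run. The sketch below contrasts them under simplified assumptions: each app is reduced to three dep lists, and `firstBatch`, `ColdStartDeps` and `union` are hypothetical names (the strategy strings come from the list). `'scan'` is modeled only by its first batch; the scanned deps it adds once the server is idle are not shown.

```ts
type DevStrategy = "pre-scan" | "lazy" | "eager" | "dynamic-scan" | "scan";

interface ColdStartDeps {
  staticDeps: string[]; // reached through static imports
  dynamicDeps: string[]; // reached only through dynamic import()
  scannedDeps: string[]; // reported by an up-front esbuild scan of user code
}

// Deduplicated concatenation, preserving first-seen order.
const union = (...lists: string[][]): string[] =>
  Array.from(new Set(([] as string[]).concat(...lists)));

// Deps in the first optimization batch for each strategy.
function firstBatch(strategy: DevStrategy, deps: ColdStartDeps): string[] {
  switch (strategy) {
    case "pre-scan":
      // v2.9 scheme: the esbuild scan result is the first batch.
      return union(deps.scannedDeps);
    case "lazy":
    case "scan":
      // Only static imports are crawled before optimizing. 'lazy' may find
      // deps behind dynamic imports later (possible full page reload);
      // 'scan' later completes the list with scanned deps once idle.
      return union(deps.staticDeps);
    case "eager":
    case "dynamic-scan":
      // Dynamic imports are covered too, either by processing them directly
      // ('eager') or by scanning the dynamic import entries ('dynamic-scan').
      return union(deps.staticDeps, deps.dynamicDeps);
  }
}
```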

What is the purpose of this pull request?