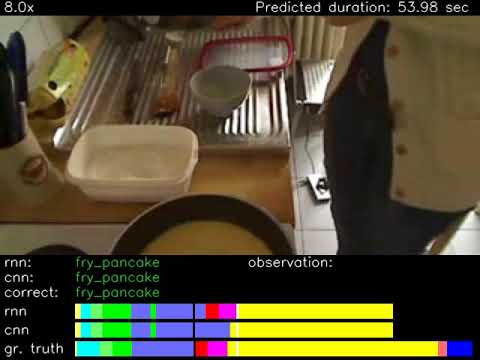

This repository provides a TensorFlow implementation of the paper *When will you do what? - Anticipating Temporal Occurrences of Activities*.


- Download the data from https://uni-bonn.sciebo.de/s/3Wyqu3cxYSm47Kg.
- Extract it so that the `data` folder is in the same directory as `main.py`.
- To train the model on split1 of the Breakfast dataset, run `python main.py --model=MODEL --action=train --vid_list_file=./data/train.split1.bundle`, where `MODEL` is `cnn` or `rnn`.
- To change the default saving directory or the model parameters, check the list of options by running `python main.py -h`.
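The training steps above can be scripted. The following is a minimal Python sketch, assuming the repository root is the current working directory: `check_layout` verifies that the `data` folder sits next to `main.py`, and `train_command` assembles the training command for a chosen model. Both helper names are hypothetical and not part of the repository; only the flags and paths are taken from the instructions above. It uses `sys.executable` in place of a bare `python` so that the same interpreter runs `main.py`.

```python
import sys
from pathlib import Path

# Models accepted by --model, as listed in this README.
VALID_MODELS = ("cnn", "rnn")


def check_layout(root="."):
    """Hypothetical helper: True if main.py and the data folder are siblings under root."""
    root = Path(root)
    return (root / "main.py").is_file() and (root / "data").is_dir()


def train_command(model, vid_list_file="./data/train.split1.bundle"):
    """Hypothetical helper: build the training command described above."""
    if model not in VALID_MODELS:
        raise ValueError(f"MODEL must be one of {VALID_MODELS}, got {model!r}")
    return [
        sys.executable,
        "main.py",
        f"--model={model}",
        "--action=train",
        f"--vid_list_file={vid_list_file}",
    ]
```

For example, `subprocess.run(train_command("rnn"), check=True)` (after `import subprocess`) would train the RNN model on split1 once `check_layout()` returns `True`.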

- Run `python main.py --model=MODEL --action=predict --vid_list_file=./data/test.split1.bundle` to evaluate the model on split1 of Breakfast.
- To predict from the ground-truth observation, set the `--input_type` option to `gt`.
- To check the list of options, run `python main.py -h`.
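The prediction options above can be combined in the same way. This is a sketch only: `predict_command` is a hypothetical helper, and it assumes `main.py` accepts `--input_type=gt` in the same `--option=value` form as the other flags shown in this README. When `input_type` is `None`, the flag is omitted and whatever default `main.py` uses applies.

```python
import sys


def predict_command(model, vid_list_file="./data/test.split1.bundle", input_type=None):
    """Hypothetical helper: build the prediction command described above.

    input_type="gt" predicts from the ground-truth observation; None leaves
    the --input_type flag out so that main.py's default is used.
    """
    if model not in ("cnn", "rnn"):
        raise ValueError(f"MODEL must be 'cnn' or 'rnn', got {model!r}")
    cmd = [
        sys.executable,
        "main.py",
        f"--model={model}",
        "--action=predict",
        f"--vid_list_file={vid_list_file}",
    ]
    if input_type is not None:
        cmd.append(f"--input_type={input_type}")
    return cmd
```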

Run `python eval.py --obs_perc=OBS-PERC --recog_dir=RESULTS-DIR`, where `RESULTS-DIR` contains the output predictions for a specific observation and prediction percentage, and `OBS-PERC` is the corresponding observation percentage. For example, `python eval.py --obs_perc=.3 --recog_dir=./save_dir/results/rnn/obs0.3-pred0.5` evaluates the output for an observation percentage of 0.3 and a prediction percentage of 0.5.
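Since `eval.py` takes only the observation percentage and reads the prediction percentage implicitly from the results folder, evaluating several settings means pairing each folder with its matching `--obs_perc`. The sketch below assumes every results folder follows the naming of the single example above (`<save_dir>/results/<model>/obs<obs>-pred<pred>`); that layout, the default `./save_dir`, and the helper names `results_dir` and `eval_commands` are assumptions for illustration, not documented behavior of the repository.

```python
import sys
from itertools import product


def results_dir(model, obs, pred, save_dir="./save_dir"):
    """Assumed layout, inferred from the example: <save_dir>/results/<model>/obs<obs>-pred<pred>."""
    return f"{save_dir}/results/{model}/obs{obs:g}-pred{pred:g}"


def eval_commands(model, obs_percs, pred_percs, save_dir="./save_dir"):
    """Hypothetical helper: one eval.py command per (observation, prediction) pair."""
    return [
        [
            sys.executable,
            "eval.py",
            f"--obs_perc={obs:g}",
            f"--recog_dir={results_dir(model, obs, pred, save_dir)}",
        ]
        for obs, pred in product(obs_percs, pred_percs)
    ]
```

For instance, `eval_commands("rnn", [0.3], [0.5])` yields a single command equivalent to the example above; each command can then be passed to `subprocess.run(..., check=True)`.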

If you use the code, please cite

Y. Abu Farha, A. Richard, J. Gall:

When will you do what? - Anticipating Temporal Occurrences of Activities

in IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018

To download the features used, please visit: *An end-to-end generative framework for video segmentation and recognition*.