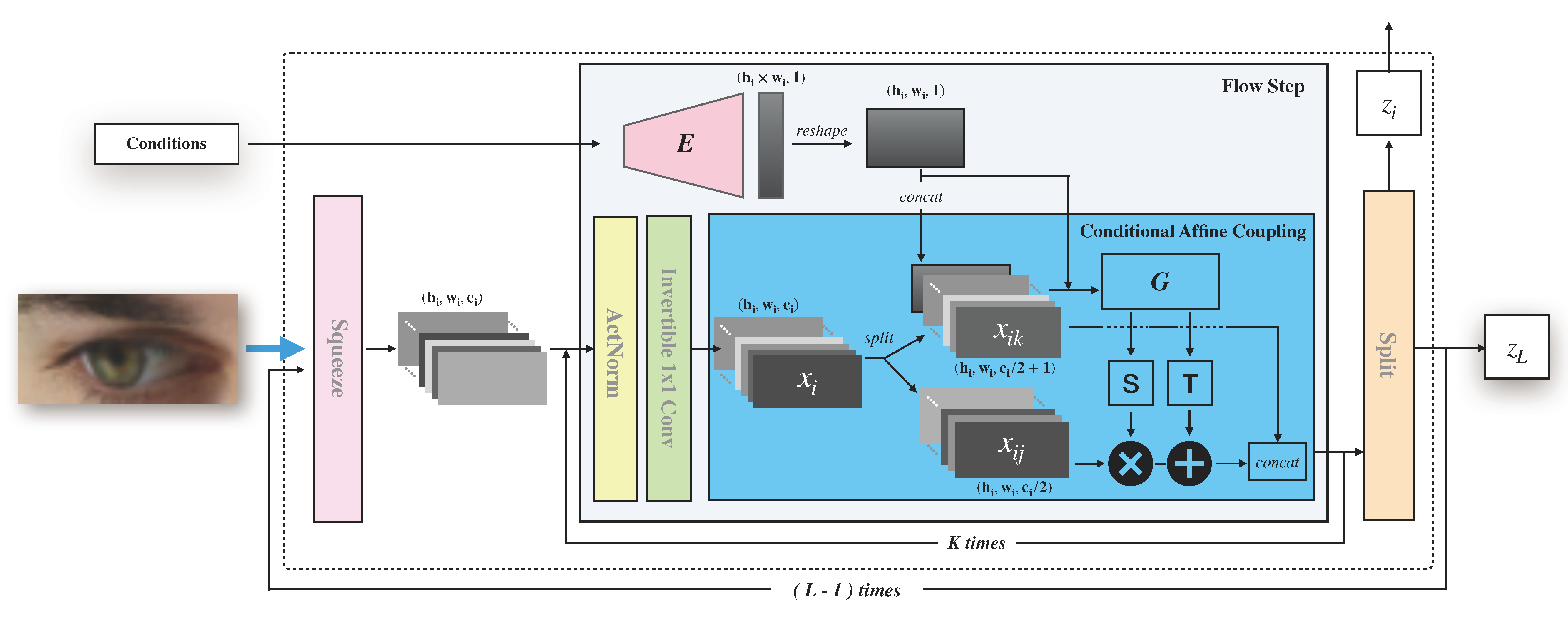

The official implementation of our paper "GazeFlow: Gaze Redirection with Normalizing Flows" (pdf).

- `model.py`: GazeFlow model.

- `train.py`: Trainer for GazeFlow.

- `evaluation.py`: Contains the methods `encode` and `decode` for gaze redirection.

- `imgs`: Images for testing. More test images can be accessed from `GazeFlowDemo.ipynb`.

- `GazeFlowDemo.ipynb`: A pre-trained GazeFlow demo for testing. The demo is now available.
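To illustrate how an `encode`/`decode` pair can be used for redirection, here is a toy sketch of a conditional invertible map in NumPy. It is not the GazeFlow model: the class `ToyConditionalFlow`, its weights, the method signatures, and the 4-element condition vector are all assumptions made for illustration. The idea shown is only that an input is encoded under its source condition and decoded under a different target condition.

```python
import numpy as np


class ToyConditionalFlow:
    """Hypothetical element-wise affine flow conditioned on a vector.

    Stands in for a real normalizing flow; only the encode/decode
    pattern is meant to carry over.
    """

    def __init__(self, dim, cond_dim, seed=0):
        rng = np.random.default_rng(seed)
        # Linear maps from the condition to a per-element shift and log-scale.
        self.w_shift = rng.normal(size=(cond_dim, dim))
        self.w_log_scale = 0.1 * rng.normal(size=(cond_dim, dim))

    def encode(self, x, cond):
        """Map data x to a latent z, given the condition it was observed under."""
        shift = cond @ self.w_shift
        log_scale = cond @ self.w_log_scale
        return (x - shift) * np.exp(-log_scale)

    def decode(self, z, cond):
        """Exact inverse of encode for the same condition."""
        shift = cond @ self.w_shift
        log_scale = cond @ self.w_log_scale
        return z * np.exp(log_scale) + shift


flow = ToyConditionalFlow(dim=6, cond_dim=4)
x = np.linspace(0.0, 1.0, 6)                     # stand-in for a flattened eye image
source_cond = np.array([0.1, -0.2, 0.0, 0.3])    # illustrative source condition
target_cond = np.array([-0.3, 0.4, 0.0, 0.3])    # illustrative target condition

z = flow.encode(x, source_cond)
reconstructed = flow.decode(z, source_cond)      # same condition: recovers x
redirected = flow.decode(z, target_cond)         # new condition: a different output
```

Decoding with the source condition reproduces the input exactly, which is the invertibility property a normalizing flow relies on; swapping in the target condition is what changes the output.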

Our code is based on TensorFlow 2.3 and the open-source normalizing flows package TFGENZOO. It should work with TensorFlow ≥ 2.3.

You can build your environment with `pip install -r requirements.txt`.

- Download MPIIGaze dataset from official website.

- Download ETH-XGaze dataset from official website.

- Download UT-MultiView dataset from official website.

Please check `data/data_preprocess.py`; it provides a simple script for processing the MPIIGaze dataset.

If you want to train on your own dataset, keep the input format like this:

    {
        'right_eye':
            {'img': right_eye_img, 'headpose': right_headpose, 'gaze': right_gaze},
        'left_eye':
            {'img': left_eye_img, 'headpose': left_headpose, 'gaze': left_gaze},
    }

- The processed MPIIGaze dataset is uploaded to Google Drive.

- The other processed datasets (UT, ETH-XGaze) are lost. For those full-face images, our method only needs the eye images cropped and normalized from the face image. You can follow the steps in `data/data_preprocess.py` to process your own dataset.
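As a minimal sketch of the input format described above, the snippet below builds one sample with placeholder NumPy arrays and checks that it has the expected keys. The helper names `make_sample` and `check_sample`, the 32x64x3 image shape, and the 2-element head pose and gaze vectors are assumptions for illustration; the shapes your data needs depend on how you preprocess it.

```python
import numpy as np

EYE_KEYS = ('right_eye', 'left_eye')
FIELD_KEYS = ('img', 'headpose', 'gaze')


def make_sample(right_eye_img, right_headpose, right_gaze,
                left_eye_img, left_headpose, left_gaze):
    """Assemble one sample in the nested-dict layout shown in this README."""
    return {
        'right_eye': {'img': right_eye_img, 'headpose': right_headpose, 'gaze': right_gaze},
        'left_eye': {'img': left_eye_img, 'headpose': left_headpose, 'gaze': left_gaze},
    }


def check_sample(sample):
    """Raise KeyError if an eye entry or one of its fields is missing."""
    for eye in EYE_KEYS:
        for field in FIELD_KEYS:
            if field not in sample[eye]:
                raise KeyError(f"missing '{field}' in '{eye}'")
    return True


# Placeholder data; shapes and dtypes are assumptions, not requirements.
eye_img = np.zeros((32, 64, 3), dtype=np.uint8)
headpose = np.zeros(2, dtype=np.float32)
gaze = np.zeros(2, dtype=np.float32)

sample = make_sample(eye_img, headpose, gaze, eye_img.copy(), headpose.copy(), gaze.copy())
sample_ok = check_sample(sample)
```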

    CUDA_VISIBLE_DEVICES=0 python train.py --images-width=64 --images-height=32 --K=18 --L=3 --datapath=/your_path_to_preprocess_data/xgaze_64x32.tfrecords

We provide an ETH-XGaze pre-trained GazeFlow demo for gaze redirection. Check out the GazeFlow Colab Demo. The pre-trained model will be downloaded by running the notebook. For convenience, you can upload it to Colab and run it.
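When choosing `--images-width`, `--images-height`, and `--L` for `train.py`, it can help to check that the image size fits the number of levels. The sketch below assumes `--L` follows the Glow convention of one 2x spatial squeeze per level, which this README does not state explicitly; `latent_spatial_size` is a hypothetical helper, not part of this repository.

```python
def latent_spatial_size(width, height, num_levels):
    """Spatial size after num_levels squeeze steps, each halving width and height.

    Assumes a Glow-style multi-scale layout (one 2x squeeze per level).
    """
    factor = 2 ** num_levels
    if width % factor != 0 or height % factor != 0:
        raise ValueError(
            f"{width}x{height} is not divisible by 2**{num_levels} = {factor}"
        )
    return width // factor, height // factor


# The two configurations used in this README's training commands.
eye_latent = latent_spatial_size(width=64, height=32, num_levels=3)
face_latent = latent_spatial_size(width=128, height=128, num_levels=5)
print(eye_latent)   # (8, 4)
print(face_latent)  # (4, 4)
```

Under that assumption, an image size that is not divisible by `2**L` would fail at a squeeze step, so the check is worth running before preparing a new dataset.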

    CUDA_VISIBLE_DEVICES=0 python train.py --BATCH-SIZE=32 --images-width=128 --images-height=128 --K=18 --L=5 --condition-shape=4 --total-take=34000 --datapath=/path_to_your_preprocessed_data/mpiiface.tfrecords

- ETH-XGaze Samples

- MPII-Gaze Samples

- UT-MultiView Samples

- Gaze correction: crop the eye images and paste them back.

- Full-face gaze redirection: crop the eyes and paste them back with Poisson blending.

- Google Drive: ETH-XGaze
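The crop-and-paste step mentioned for the gaze correction samples can be sketched with plain NumPy slicing. The helpers `crop_eye` and `paste_eye` and the `(top, left, height, width)` box layout are assumptions for illustration, not functions from this repository. For the full-face case, the direct copy in `paste_eye` would be replaced by Poisson blending (for example OpenCV's `cv2.seamlessClone`), which is not shown here.

```python
import numpy as np


def crop_eye(face, box):
    """Return a copy of the eye region given box = (top, left, height, width)."""
    top, left, height, width = box
    return face[top:top + height, left:left + width].copy()


def paste_eye(face, eye, box):
    """Return a copy of face with the eye patch written back into box."""
    top, left, height, width = box
    if eye.shape[:2] != (height, width):
        raise ValueError("eye patch does not match the box size")
    out = face.copy()
    out[top:top + height, left:left + width] = eye
    return out


# Placeholder face image and eye box; values are illustrative only.
face = np.arange(128 * 128 * 3, dtype=np.uint8).reshape(128, 128, 3)
box = (40, 20, 32, 64)

eye = crop_eye(face, box)
edited_eye = np.full_like(eye, 255)   # stand-in for a redirected eye image
result = paste_eye(face, edited_eye, box)
```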
