-

Notifications

You must be signed in to change notification settings - Fork 61

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

refactor and fix normalmap, parallax, reflectionmap, glowmap, GL/DX normal switch #180

Conversation

4df4061 to

47101ac

Compare

|

I also refactored parallax code and fixed orientation the way it seems right to do (transforming |

c584b6e to

06a71aa

Compare

|

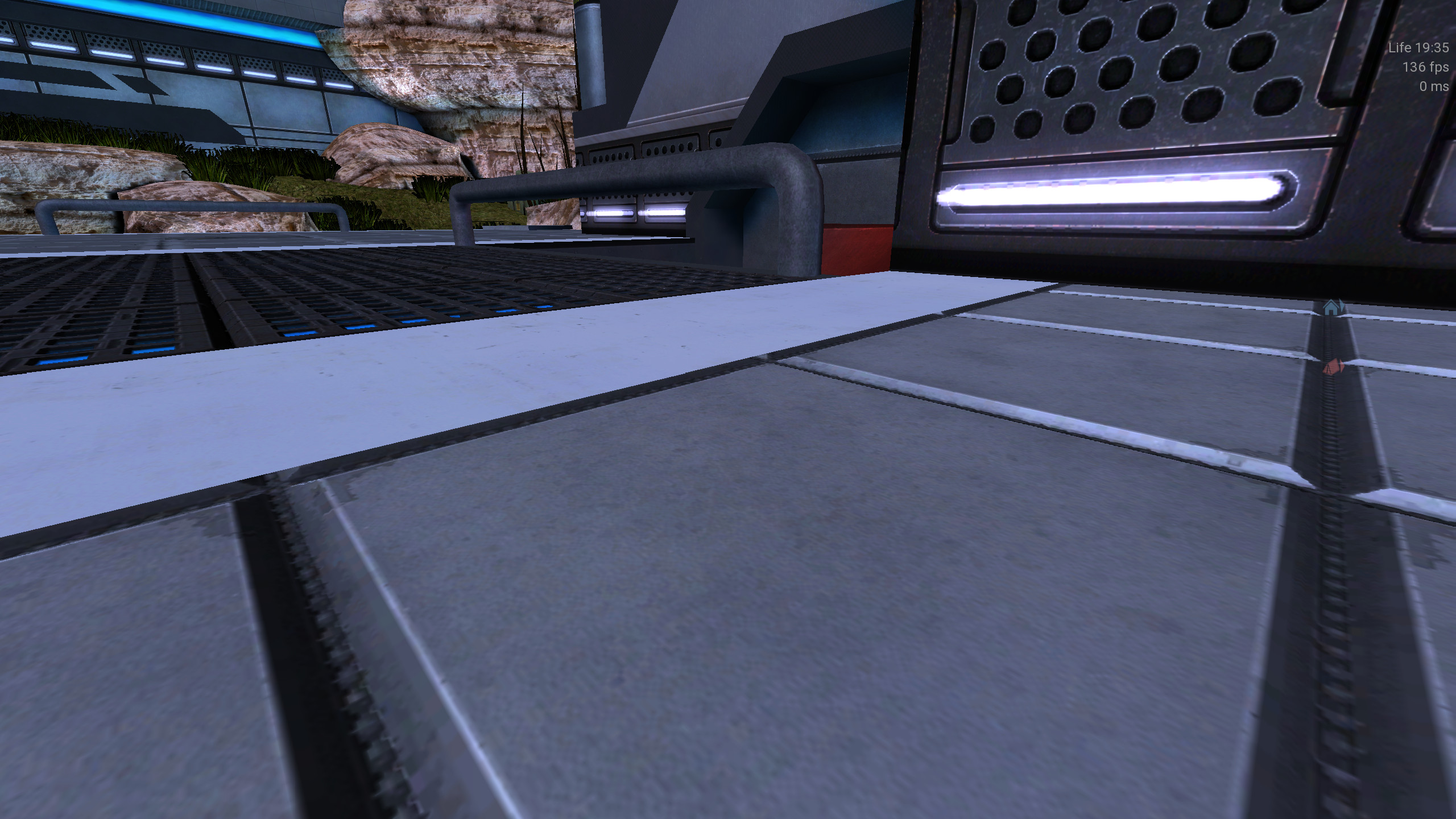

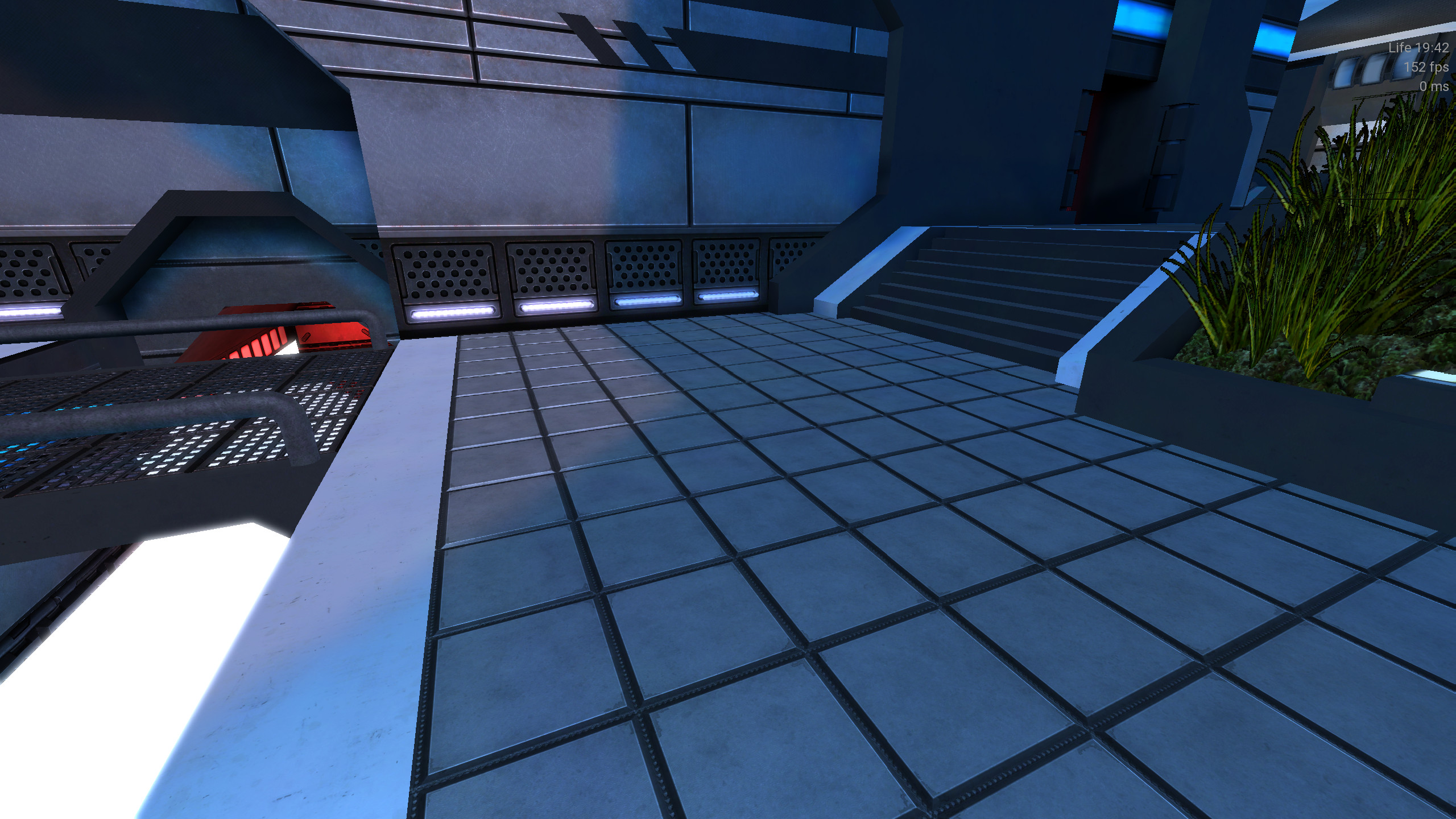

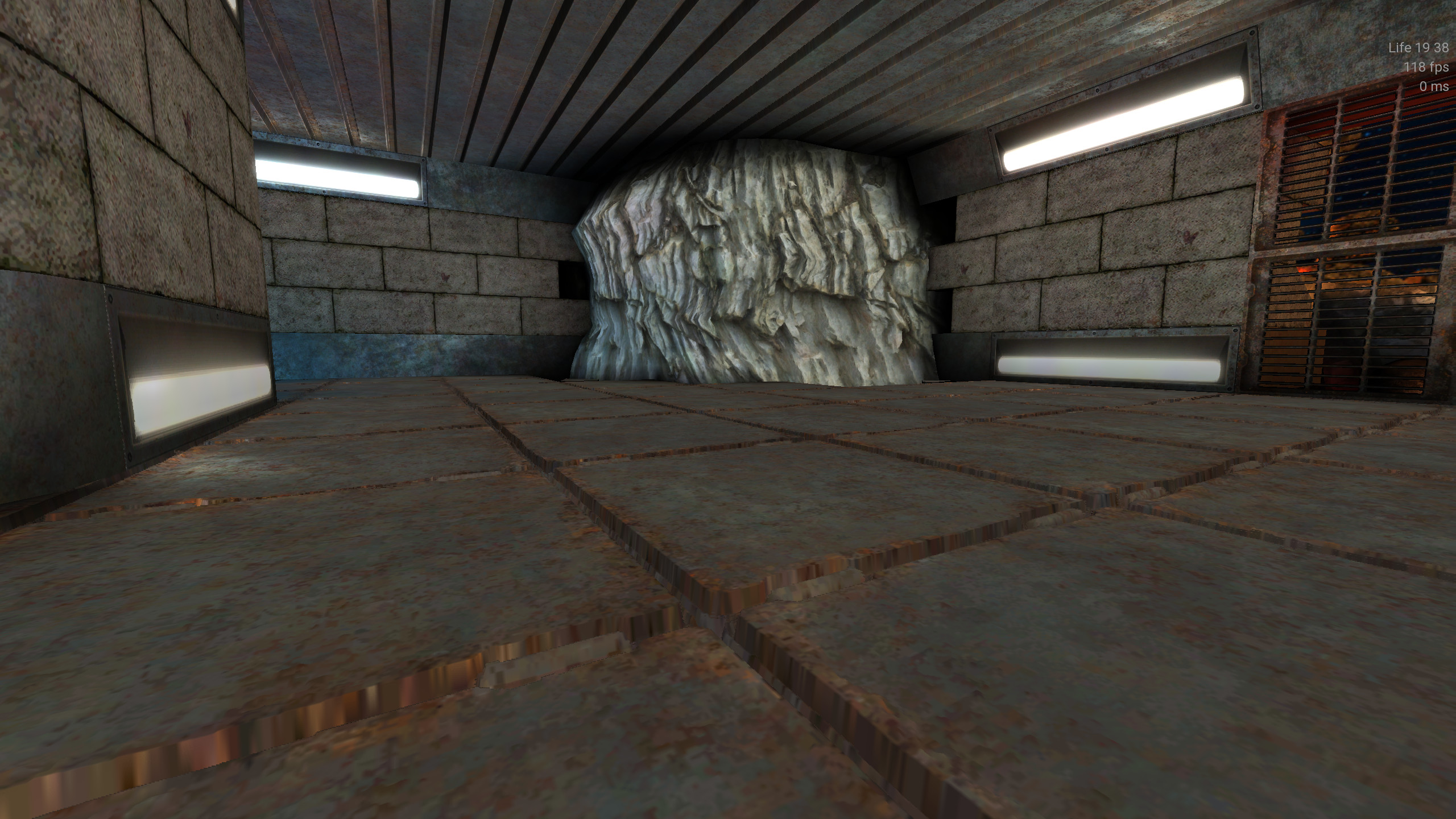

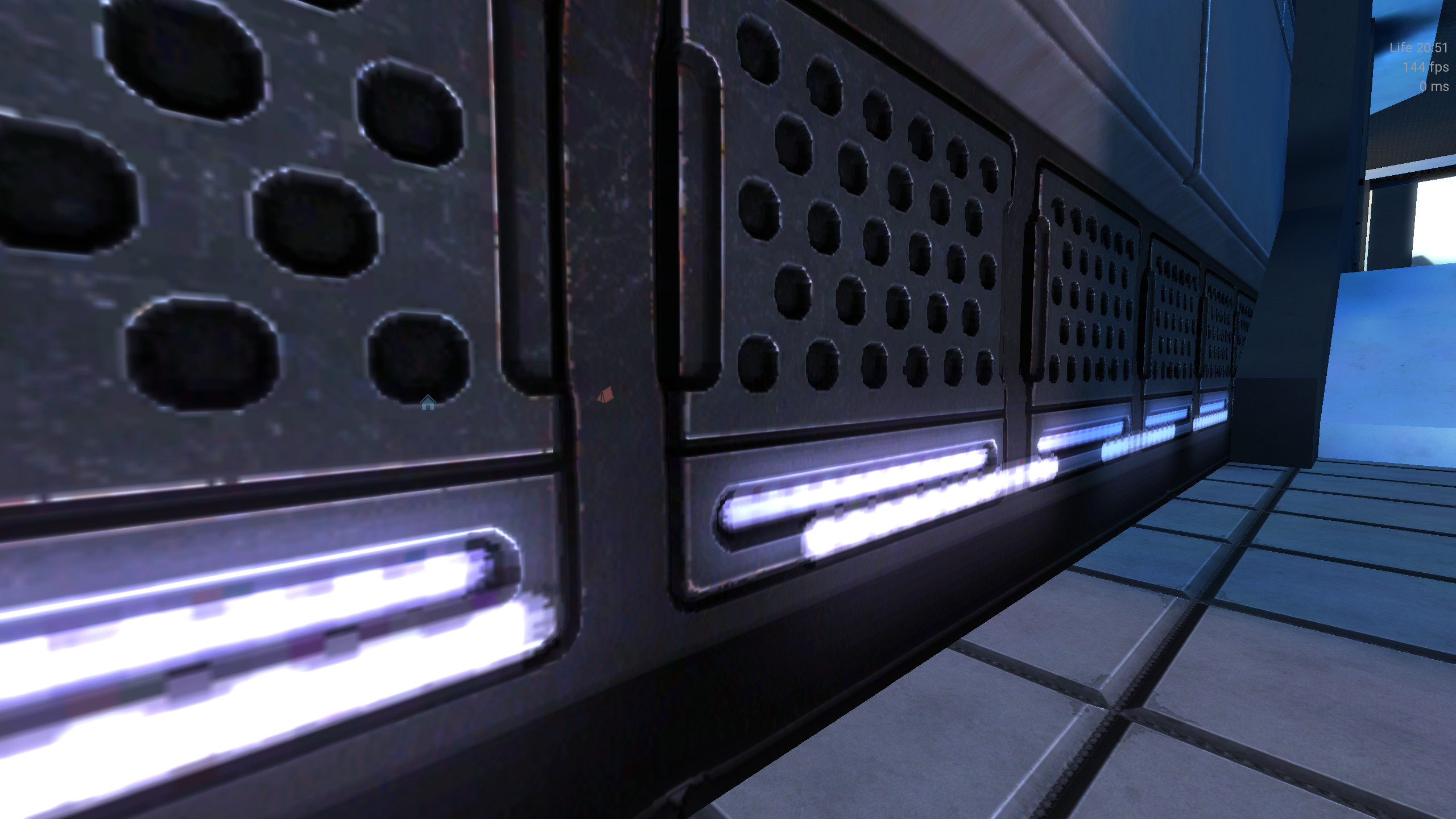

I added a commit that implements See the texture in foreground, the edge is not correct because the heightmap provides height from the bottom instead of depth from the top, hence the whole texture is displayed lower than the surface itself: The same texture once Some more screenshots, light mapping on edges: Realtime light mapping on edges: OK from the dretch point of view floor with Note that the bricks on the wall are flats because those textures use loose heightmap (and darkplaces computes normalmap from heightmap), something we don't do yet, otherwise everything else is right, notice there is no dark blotches in grooves anymore: Some more coolness: Note that I'm adding engine code to GPL files or sometime rewriting code but I prefer to contribute it under BSD , perhaps I will add some comments to identify what is more permissive. The normal map code is brand new, the dpoffsetmapping code does not copypaste DarkPlaces (and the way our engine is designed is not very compatible with the way it is implemented in Darkplaces). |

|

Very cool! @gimhael if you get a chance, could you review? |

|

So, more awesome stuff: Reflection maps are now loaded as cubemap (that was a bug), a new kind of cubemap naming scheme is added (for compatibility with Darkplaces). The Parallax reflection cubemap is now implemented. Shader stage collapse was revamped with a lot more simpler code, and less buggy one. It allowed to merge the implicit lightmap that comes with diffusemap and the legacy explicit ones, to avoid double shadowing and shadows being painted over glow maps. A bug is also fixed when |

8b0d2e7 to

d199763

Compare

|

OK, people must know about the fact that ALL Unvanquished maps are broken with this branch because I fixed a bug that was hiding another. Basically, all our normal maps are compressed with The current tree is meant to be correct. I created a test map with assets I created to be sure they are the way they must be, then fixed the engine to render the test map. The correct engine in this tree is able to render correctly xonotic maps and unvanquished maps if their normal maps were not compressed with The symptom is a map being almost entirely dark. No need for special map to reproduce the bug, just load released |

9601fb7 to

9ce3547

Compare

material does not define what to do relative to what engine expects material defines what format the file is using relative to OpenGL format this way the material file is engine independant enable DX format for DarkPlaces normal maps

it remains disabled though

30a65ac to

1b06c22

Compare

|

Just to say that I have some other changes in local branches that become really hard to rebase each one I edit this PR and/or #184, so I hope this is getting merged soon. 😁 |

| @@ -199,59 +202,133 @@ void computeDLights( vec3 P, vec3 N, vec3 I, vec4 diffuse, vec4 specular, | |||

| #endif | |||

| } | |||

|

|

|||

| vec3 normalFlip(vec3 normal) | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

IMHO this function should not use if()s, just compute normal * u_NormalFormat and make sure that the u_NormalFormat components are either 1.0 or -1.0 when the uniform is written. Even better would be to rename it to vec3 u_NormalScale and premultiply r_NormalScale into this uniform and delete r_NormalScale in the shaders. Then changing the r_NormalScale cVar wouldn't require a shader recompile.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I plan to revamp the u_NormalScale thing in a future PR because yes that feature is currently broken. There is both r_NormalScale (which is global and may be useful for testing purposes) and normalScale which is per-shader but lost in translation. My idea is to remove r_normalScale define in glsl and just pass u_NormalScale which would be a r_normalScale × shader.normalScale.

I'm not very happy with the idea of multiplying u_normalScale with u_normalFormat, I was thinking about a define when normalFormat's x × y × z < 0 so the code is even not compiled in glsl at all if there is no flip to do.

In any way, is this blocking? I have a lot of work to push in other PRs that relies on this code, and code that may even rewrite some part of this. This is just iterating process, I need something getting done before going to the next step, and at one point it's hard to put everything doable (or to reach perfectibility) in one PR. This is a stable state on which I can base other work. Can I really postpone the the u_normalScale refactor? for sure I will do it, I already planned it, but there is a lot of clean-up in tr_shade.cpp to do first and that will be my next PR.

@gimhael I have a special question for you. I've read it may be better to do operations for nothing than branching in glsl (like multiplying by 1.0 instead of doing an if) because of the way GPU works. But what is the performance hit of #ifdef in glsl? People with a C heritage would think nothing, but that's probably wrong as you just said changing r_normalScale requires a shader recompile, and I noticed that behaviour.

Basically there is a lot of things that is done every pixel every frame for nothing. We have options to disable normalMapping for example, but it just has no influence on performance since that option is just mapping a flat image and do the computation on it instead (which is wrong but that's out of topic). I have not-yet pushed branches with added r_deluxeMapping, r_specularMapping and ifdef that just disable the related code in glsl not only on global variable state, but on per-shader state. Is it a problem regarding to GPU architecture if a given texture is using lightMapping_fp.glsl with all features enabled (normal, parallax, specular, glow), and another texture is using lightMapping_fp.glsl with all features disabled but diffuse and lightmap? There is basically way to have many versions of the same shader in memory, one with disabled lightmap and one with disabled specularmap, is that an issue as long as those shaders don't do themselves if branching in their code?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Passing just one uniform variable that contains the product of all scale factors is definitely the right way. It's just a matter of scale, the multiplication in the pixel shader runs millions of times per frame while the premultiplication runs only once per shader binding. But if you want to use such a scale factor anyway, you can use it for normal flipping too.

#ifdef works like in C and C++, GLSL has a precompiler that removes all conditional code before it is passed to the real compiler, so the removed code has no runtime cost at all. The problem with #ifdef or similar is that you end up with a much larger number of shaders, which means a) long compile times at startup and b) that you have to switch shaders more often within a frame.

The cost of switching shaders is higher than the cost of switching texture bindings and much higher than the cost of switching uniforms (see e.g. page 48 of http://media.steampowered.com/apps/steamdevdays/slides/beyondporting.pdf), so most engines try to minimize the number of shader changes by using very generic "supershaders". In more modern APIs like DX12 and Vulkan the cost of shader switches is much lower, but for GLSL it is usually not advisable to double the number of shader variants just to save a few multiplications in one of them.

The cost of texture fetches in a shader is more significant, so removing some unneeded texture fetches may be profitable, however the flat images we bind are very small, so that they should be completely in the texture cache and the additional cost is hopefully also low.

Regarding regular if statements, you have to know that GPUs are not really good at branching, in fact the ability to jump over unused instructions was only introduced in DX10-era GPUs. The problem is that GPUs are based on SIMD, when a GPU manufacturer claims that his GPU supports e.g. 2048 threads in parallel, then means likely that the GPU has say 32 compute units with 64xSIMD each.

A compute unit is like a CPU core, it can run code independently from all the other compute units, and it runs the vertex or pixel shader for up to 64 vertices or pixels in parallel by using the 64 components of the SIMD registers it has. Ergo there is only one program counter for all 64 pixels and if there is a conditional jump instruction, the compute unit can only jump over the code, if all pixels have the same condition.

For non-uniform jumps the compute unit will still step through the code, but the results of the computation is discarded at the end. And pre-DX10 GPUs regard all jumps as non-uniform, so it usually is not a big gain to introduce ifs for performance reasons.

I don't think that this particular issue should block the PR, but I'm pretty sure that performance-wise the ifs in this function don't help.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank you for all those explanations, are you ok to put those words under CC By SA 3.0 so we may one day copy paste them in the wiki? knowledge is precious.

Regarding ifdef, I noticed that our code writing them looks to be broken. I tried to replace the if (u_HeightMapInNormalMap) code by some ifdef USE_HEIGHTMAP_IN_NORMALMAP and the normalmaps are now flipping on/off while I move. The macro code is really asking for a revamp in any way but it's really not the time to do that revamp. Especially because I have a lot of commits in the pipe that rely on the current code. Also, some of those commits are cleaning-up things, and it's always easier to revamp after a clean-up/refactor.

So I let the current code this way since it works with code we know to not be buggy (uniforms). We will be able to switch to more efficient code (macro) when the buggy code will not be buggy anymore.

This PR first refactors some normalmap code to avoid it to be copypasted in 6 files, and to be sure the same features are supported anywhere, and that was not true since r_NormalScale was not used anytime.

Then, the PR adds a keyword shader to allow people to revert a normalmap that is stored the wrong way, without changing the file itself.

Then the PR auto enables the DirectX format for DarkPlaces detected normalmaps.

So, this code not only fixed bugs and is not only more readable, it also enables engine to load a xonotic map after an unvanquished map in a totally transparent manner.

The PR also refactors parallax code and fixes the orientation.

Reflection maps are now loaded as cubemap (that was a bug), a new kind of cubemap naming scheme is added (for compatibility with Darkplaces).

The

parallaxkeyword can now receive the same argumentsdpoffsetmappingexpects.Parallax reflection cubemap is now implemented.