
Architecture: differences between Helm 2 and Helm 3

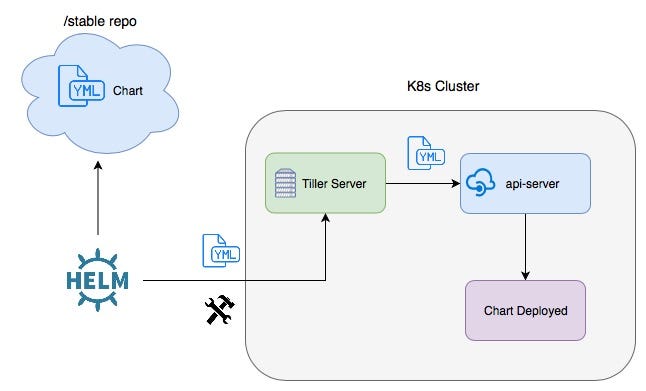

In Helm 2 there were two components: the Helm client and the Tiller server pod.

Helm client

- Manages charts

- Communicates with the Tiller server

Tiller

- Renders charts

- Deploys the rendered manifests to the Kubernetes cluster by communicating with the kube-apiserver

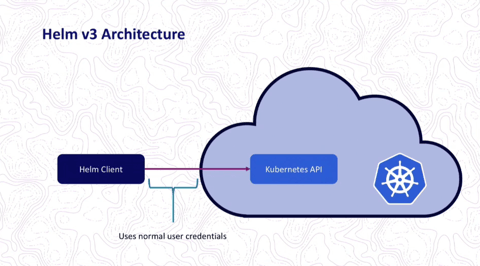

In Helm 3 the Tiller component was removed, and the Helm client interacts directly with the kube-apiserver. This became possible because Kubernetes 1.6 enabled role-based access control (RBAC) by default. The API server itself can therefore decide what each Helm user is allowed to do.

In Helm 3 the model is simple: every activity, from managing and rendering charts to deploying them, is done by the Helm client.

Yes, Tiller has been removed, and that’s a good thing, but to understand why, we need a bit of background. Tiller was the server-side component of Helm (running inside the Kubernetes cluster). Its main purpose was to make it possible for multiple operators to work with the same set of releases. When Helm 2 was developed, Kubernetes did not yet have RBAC, so Helm had to take care of this itself: it had to keep track of who was allowed to install what, and where. This complexity is no longer needed, because RBAC is enabled by default from Kubernetes 1.6 onwards. There is no reason for Helm to duplicate a job that Kubernetes does natively, and that’s why Tiller was removed completely in Helm 3.

Tiller was also the central hub for Helm release information and for maintaining Helm state. In Helm 3 the same information is read directly from the Kubernetes API server, and charts are rendered client-side, which makes Helm 3 simpler and more “native” to Kubernetes.

With Tiller gone, the security model for Helm has also been simplified (with RBAC enabled, locking down Tiller for use in a production scenario was quite difficult to manage). Helm permissions are now evaluated using your kubeconfig file, that is, with the same credentials and RBAC rules that apply to kubectl. Cluster administrators can therefore restrict user permissions at whatever level they want. Releases are still recorded in-cluster, and the rest of Helm’s functionality remains the same.

Helm 2 used a two-way strategic merge patch. During an upgrade or rollback, it compared the most recently applied chart manifest against the proposed chart manifest. It then used the differences between the two to decide which changes to apply to the resources in Kubernetes. Sounds pretty smart, right? The problem is that changes applied to the cluster “manually” (for example via kubectl edit) were not considered. As a result, resources could fail to roll back to their previous state. Helm 2 treated the last applied chart manifest as the current state, and since the chart’s state had not changed (only the live state in the cluster had), Helm saw no need to perform a rollback.

And that’s where the three-way strategic merge patch comes to the rescue. How does Helm 3 do it? It simply takes the live state into consideration too. That makes it three-way instead of two-way: we now have the old manifest, its live state, and the new manifest. For example, let’s say you deployed an application with:

```shell
helm install very-important-app ./very-important-app
```

and this application’s chart was set to run 3 replicas. Now suppose someone mistakenly runs kubectl edit or:

```shell
kubectl scale --replicas=0 deployment/very-important-app
```

and then someone from your team realises that, for some mysterious reason, very-important-app is down, and tries to run:

```shell
helm rollback very-important-app
```

In Helm 2, the patch would be generated by comparing the old manifest against the new manifest. This is a rollback, and someone only changed the live state (so the manifest did not change). Helm therefore concludes there is nothing to roll back, because the old and new manifests do not differ (both expect 3 replicas). The rollback changes nothing, and the replica count stays at zero. You start panicking now…

In Helm 3, on the other hand, the patch is generated using the old manifest, the live state, and the new manifest. Helm recognizes that the old state was three, the live state is zero, and the new manifest wants it back at three, so it generates a patch to fix that. You stop panicking now…
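The difference between the two strategies can be sketched with flat dictionaries standing in for manifests. This is a simplified model, not Helm’s code: real patches are computed over full Kubernetes objects using strategic merge patch rules. The function names below are hypothetical. A None value in a patch marks a field to delete, loosely in the spirit of JSON merge patch.

```python
from typing import Any, Dict, Optional

Manifest = Dict[str, Any]
Patch = Dict[str, Optional[Any]]


def two_way_patch(old: Manifest, new: Manifest) -> Patch:
    """Helm 2 style: diff the old manifest against the new one; live state is ignored."""
    patch: Patch = {k: v for k, v in new.items() if old.get(k) != v}
    for key in old:
        if key not in new:
            patch[key] = None  # field dropped from the chart: delete it
    return patch


def three_way_patch(old: Manifest, live: Manifest, new: Manifest) -> Patch:
    """Helm 3 style: compare the new manifest with the live object, use old for deletions."""
    patch: Patch = {k: v for k, v in new.items() if live.get(k) != v}
    for key in old:
        if key not in new and key in live:
            patch[key] = None  # Helm managed this field before and the chart dropped it
    # Fields present only in `live` (set by someone else) are left untouched.
    return patch


def apply_patch(live: Manifest, patch: Patch) -> Manifest:
    """Return a copy of the live object with the patch applied."""
    result = dict(live)
    for key, value in patch.items():
        if value is None:
            result.pop(key, None)
        else:
            result[key] = value
    return result


if __name__ == "__main__":
    old = {"replicas": 3}   # manifest of the current release
    new = {"replicas": 3}   # manifest of the revision we roll back to
    live = {"replicas": 0}  # after kubectl scale --replicas=0

    print(apply_patch(live, two_way_patch(old, new)))          # {'replicas': 0}
    print(apply_patch(live, three_way_patch(old, live, new)))  # {'replicas': 3}
```

The two-way patch is empty, so the live object keeps 0 replicas, while the three-way patch restores 3. Because three_way_patch ignores fields that exist only in the live object, the same idea also lets Helm 3 keep fields injected by other controllers during upgrades.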

A similar process happens with Helm 3 when performing upgrades. Helm 3 takes the live state into account. So anything a controller-based application (a service mesh, for example) injects into a Kubernetes object deployed via Helm is preserved. With Helm 2, the same injected content would be removed during helm upgrade. Let’s assume, for example, that we install Istio on our cluster. Istio starts injecting sidecar containers into deployments. Suppose you deployed something with Helm and your deployment consists of:

```yaml
containers:
  - name: server
    image: my_app:2.0.0
```

Then you install Istio, so your container definition now looks like this instead:

```yaml
containers:
  - name: server
    image: my_app:2.0.0
  - name: istio-sidecar
    image: istio-sidecar-proxy:1.0.0
```

If you now run an upgrade with Helm 2, using a new chart version that bumps the image tag to 2.1.0, you end up with this:

```yaml
containers:
  - name: server
    image: my_app:2.1.0
```

The Istio sidecar gets removed because it was not in the chart. Helm 3, however, generates a patch of the containers object from the old manifest, the live state, and the new manifest. It notices that the new manifest changes the image tag to 2.1.0. It also sees that the live state contains an extra container that Helm never managed. So an upgrade with Helm 3 works as you would expect:

```yaml
containers:
  - name: server
    image: my_app:2.1.0
  - name: istio-sidecar
    image: istio-sidecar-proxy:1.0.0
```
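For lists such as containers, strategic merge patch matches entries by their name key instead of comparing the lists position by position. The sketch below models the Istio example with plain Python lists. The function name is hypothetical, and the code only illustrates the three-way outcome described above; it is not Helm’s or Kubernetes’ actual patch logic.

```python
from typing import Dict, List

Container = Dict[str, str]


def three_way_upgrade(old: List[Container], live: List[Container],
                      new: List[Container]) -> List[Container]:
    """Merge container lists by name, using old to tell Helm-managed entries apart."""
    old_names = {c["name"] for c in old}
    new_names = {c["name"] for c in new}
    live_by_name = {c["name"]: c for c in live}

    merged: List[Container] = []
    for container in new:
        # Start from the live entry (keeps fields others added), then apply the chart's fields.
        entry = dict(live_by_name.get(container["name"], {}))
        entry.update(container)
        merged.append(entry)
    for container in live:
        # Keep containers Helm never managed; drop ones the chart had before but removed.
        if container["name"] not in old_names and container["name"] not in new_names:
            merged.append(dict(container))
    return merged


if __name__ == "__main__":
    old = [{"name": "server", "image": "my_app:2.0.0"}]
    live = old + [{"name": "istio-sidecar", "image": "istio-sidecar-proxy:1.0.0"}]
    new = [{"name": "server", "image": "my_app:2.1.0"}]  # Helm 2 would leave only this
    for c in three_way_upgrade(old, live, new):
        print(c["name"], c["image"])
    # server my_app:2.1.0
    # istio-sidecar istio-sidecar-proxy:1.0.0
```

Because entries are matched by name, the image bump lands on the server container and the sidecar that Helm never managed survives. A container the chart deliberately removed (present in old but not in new) is still dropped.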

Secrets as the default storage driver! Helm 2 used ConfigMaps by default to store release information. In Helm 3, Secrets (with the Secret type helm.sh/release.v1) are the default storage driver instead. The main advantage is security. Release records include the chart’s values, which can contain credentials. Secrets can be protected with Kubernetes’ encryption of Secrets at rest and with RBAC rules separate from those for ConfigMaps. Note that Helm does not encrypt the record itself. In both versions it is serialized, gzip-compressed and base64-encoded before being stored, so anyone who can read the Secret can decode it.
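To show that the stored release is encoded rather than encrypted, here is a sketch that builds and then decodes a value shaped like the data.release field of a Helm 3 release Secret, as returned by kubectl get secret -o json. It assumes the layering described above (JSON, gzip, base64), plus the extra base64 layer the Kubernetes API applies to all Secret data. The release record is a hypothetical, heavily trimmed example, and the function names are illustrative.

```python
import base64
import gzip
import json


def encode_release(release: dict) -> str:
    """Mimic how a Helm 3 release looks when read back through the Kubernetes API."""
    raw = json.dumps(release).encode("utf-8")
    # Helm's own step: gzip the JSON, then base64-encode it.
    helm_payload = base64.b64encode(gzip.compress(raw))
    # The Kubernetes API returns Secret data base64-encoded once more.
    return base64.b64encode(helm_payload).decode("ascii")


def decode_release(data_release: str) -> dict:
    """Undo the layers: API base64, Helm base64, gzip (if present), JSON."""
    helm_payload = base64.b64decode(data_release)
    blob = base64.b64decode(helm_payload)
    if blob[:2] == b"\x1f\x8b":  # gzip magic bytes
        blob = gzip.decompress(blob)
    return json.loads(blob)


if __name__ == "__main__":
    # Hypothetical, heavily trimmed release record.
    record = {"name": "very-important-app", "namespace": "default",
              "version": 1, "info": {"status": "deployed"}}
    stored = encode_release(record)
    print(decode_release(stored) == record)  # True: no key was needed
```

Against a real cluster, you would pass decode_release the .data.release string of a Secret named like sh.helm.release.v1.<release>.v<revision>.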

Release names no longer have to be unique across the cluster. In Helm 2 all release records were stored in Tiller’s namespace. In Helm 3 the Secrets containing a release are stored in the namespace where the release was installed. So you can have multiple releases with the same name as long as they are in different namespaces.
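Helm 3 names each release Secret sh.helm.release.v1.<release>.v<revision> and creates it in the release’s namespace, so the storage key is effectively the pair (namespace, name). The toy in-memory store below shows why the same release name can be reused across namespaces but not within one. ReleaseStore is a hypothetical class, not Helm’s storage driver.

```python
from typing import Dict, List, Tuple


def secret_name(release: str, revision: int) -> str:
    """Name of the Secret holding one revision of a Helm 3 release."""
    return f"sh.helm.release.v1.{release}.v{revision}"


class ReleaseStore:
    """Toy model: release records are keyed by (namespace, release name)."""

    def __init__(self) -> None:
        # (namespace, release) -> Secret names, one per revision
        self._releases: Dict[Tuple[str, str], List[str]] = {}

    def install(self, namespace: str, release: str) -> str:
        key = (namespace, release)
        if key in self._releases:
            raise ValueError(f"release {release!r} already exists in namespace {namespace!r}")
        name = secret_name(release, 1)
        self._releases[key] = [name]
        return name

    def upgrade(self, namespace: str, release: str) -> str:
        # Raises KeyError if the release was never installed in this namespace.
        revisions = self._releases[(namespace, release)]
        name = secret_name(release, len(revisions) + 1)
        revisions.append(name)
        return name


if __name__ == "__main__":
    store = ReleaseStore()
    print(store.install("team-a", "web"))  # sh.helm.release.v1.web.v1
    print(store.install("team-b", "web"))  # same name, different namespace: allowed
    print(store.upgrade("team-a", "web"))  # sh.helm.release.v1.web.v2
    try:
        store.install("team-a", "web")     # same name, same namespace: rejected
    except ValueError as err:
        print(err)
```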

Chart values can now be validated against a JSON Schema. A chart maintainer can ship a values.schema.json file in the chart’s root directory. Helm then checks the user-supplied values against it on helm install, helm upgrade, helm lint and helm template. This ensures that the values provided by the user follow the schema created by the chart maintainer. It also gives better error reporting when a user passes an incorrect set of values. Finally, it opens up more possibilities for Ops/Dev cooperation (the Ops team can give Devs more freedom).
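As a rough illustration, the sketch below defines a schema for a hypothetical chart whose values contain replicaCount and image.tag, and prints it as JSON for saving as values.schema.json. It also includes a tiny checker that supports only type, required, properties and minimum. The checker is a toy for showing the kind of errors a user gets. Helm itself uses a full JSON Schema validator, and its messages differ.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical schema for a chart with replicaCount and image.tag values.
VALUES_SCHEMA: Dict[str, Any] = {
    "type": "object",
    "required": ["replicaCount", "image"],
    "properties": {
        "replicaCount": {"type": "integer", "minimum": 1},
        "image": {
            "type": "object",
            "required": ["tag"],
            "properties": {"tag": {"type": "string"}},
        },
    },
}

TYPE_CHECKS: Dict[str, Callable[[Any], bool]] = {
    "object": lambda v: isinstance(v, dict),
    "string": lambda v: isinstance(v, str),
    "integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
}


def check(values: Any, schema: Dict[str, Any], path: str = "values") -> List[str]:
    """Return error strings; supports only a tiny subset of JSON Schema."""
    expected = schema.get("type")
    if expected and not TYPE_CHECKS[expected](values):
        return [f"{path}: expected {expected}"]
    errors: List[str] = []
    if "minimum" in schema and values < schema["minimum"]:
        errors.append(f"{path}: must be >= {schema['minimum']}")
    for key in schema.get("required", []):
        if key not in values:
            errors.append(f"{path}: missing required key {key!r}")
    for key, sub_schema in schema.get("properties", {}).items():
        if key in values:
            errors.extend(check(values[key], sub_schema, f"{path}.{key}"))
    return errors


if __name__ == "__main__":
    # Save this output as values.schema.json in the chart's root directory.
    print(json.dumps(VALUES_SCHEMA, indent=2))
    print(check({"replicaCount": 0, "image": {"tag": "2.1.0"}}, VALUES_SCHEMA))
    # ['values.replicaCount: must be >= 1']
```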

In Helm 2, if no release name was provided, a random name was generated. Helm 3 throws an error if no name is provided to helm install. You can pass the --generate-name flag if you still want a random name.

When creating a release in a namespace that does not exist, Helm 2 would create it automatically. Helm 3 follows the behaviour of other Kubernetes tooling and returns an error if the namespace does not exist.

Several commands were renamed in Helm 3:

- helm delete -> helm uninstall

- helm inspect -> helm show

- helm fetch -> helm pull