-

Notifications

You must be signed in to change notification settings - Fork 233

Tactic meeting minutes

-

2016-08-03Victor, Clément: Fix native tactics bootstraping issues (see Native tactics) -

2016-07-27Nik Propagate ranges through the normalizer and the tactic interpreter -

2016-07-27Victor Document the newproof-statemessage of the IDE protocol -

2016-07-27Victor Look at Nick's slow native tactics example

Main topics: Status reports ∙ Reflective proofs ∙ Native computation ∙ Native tactics ∙ repeat-match-goal demo

Nik is having trouble with the unifier, but it could also be an issue with a specific use a squash.

Clément is working on pattern-matching.

Clément wondered whether reflective proofs will be as enticing in F* as in Coq.

-

In Coq, tactics have to build proof terms. Reflective proofs are essentially a way to compress proof terms: concretely, to prove that 12 is even, one constructs this term:

Even_is_even 12 eq_refl

instead of this term:

Even2 (Even2 (Even2 (Even2 (Even2 (Even2 Even0)))))

where

Even2: ∀ n, Even n → Even (S (S n))andEven0: Even 0are the constructors of the inductiveEven, andEven_is_even: ∀ n, evenp n = true → Even nis a proof of proof of correctness for the decision procedureevenp. Theeq_reflthere has typeevenp 12 = true, which takes linear time (but constant memory) to typecheck, unlike the explicit proof withEven2, which takes linear time and linear memory. Of course, fully reducing the first proof term would in fact yield the second one, but that reduction doesn't need to take place.Reflective proofs in Coq are thus faster because they build smaller proof terms, and because they leverage Coq's efficient reduction mechanisms.

-

In F*, we don't produce proofs, and our tactics run in the same normalizer that's used to run computations. Do we gain anything from doing reflective proofs? We might save some overhead that comes from state-tracking in tactics, but I (Clément) would be on this not being that large.

Post-meeting notes: As a corollary, shouldn't F* tactics be much faster than Coq/Lean, since they don't need to produce proof terms? We could potentially get a similar trust story by proving our primitives correct, possibly even by giving a constructive model of what they do (it goes like this: our core tactics could produce proof terms (we prove this somehow) ∧ the tactic interpreter is correct ⇒ all our tactics could produce proof terms (they are combinations of core tactics) ⇒ all our tactic-based proofs are correct — and we don't need to pay the cost of building proof terms)

Coq has a way to reduce terms using compilation to OCaml and native execution. This is distinct from extraction, which loses information. [CoqReduction]. Could the same be used in F*? That'd speed up the normalization parts of our tactics.

| [CoqReduction] | Mathieu Boespflug, Maxime Dénès, and Benjamin Grégoire. Full reduction at full throttle. In Jean-Pierre Jouannaud and Zhong Shao, editors, Certified Programs and Proofs - First International Conference, CPP 2011, Kenting, Taiwan, December 7-9, 2011. Proceedings, volume 7086 of Lecture Notes in Computer Science, pages 362–377. Springer, 2011. https://hal.inria.fr/file/index/docid/650940/filename/full_throttle.pdf |

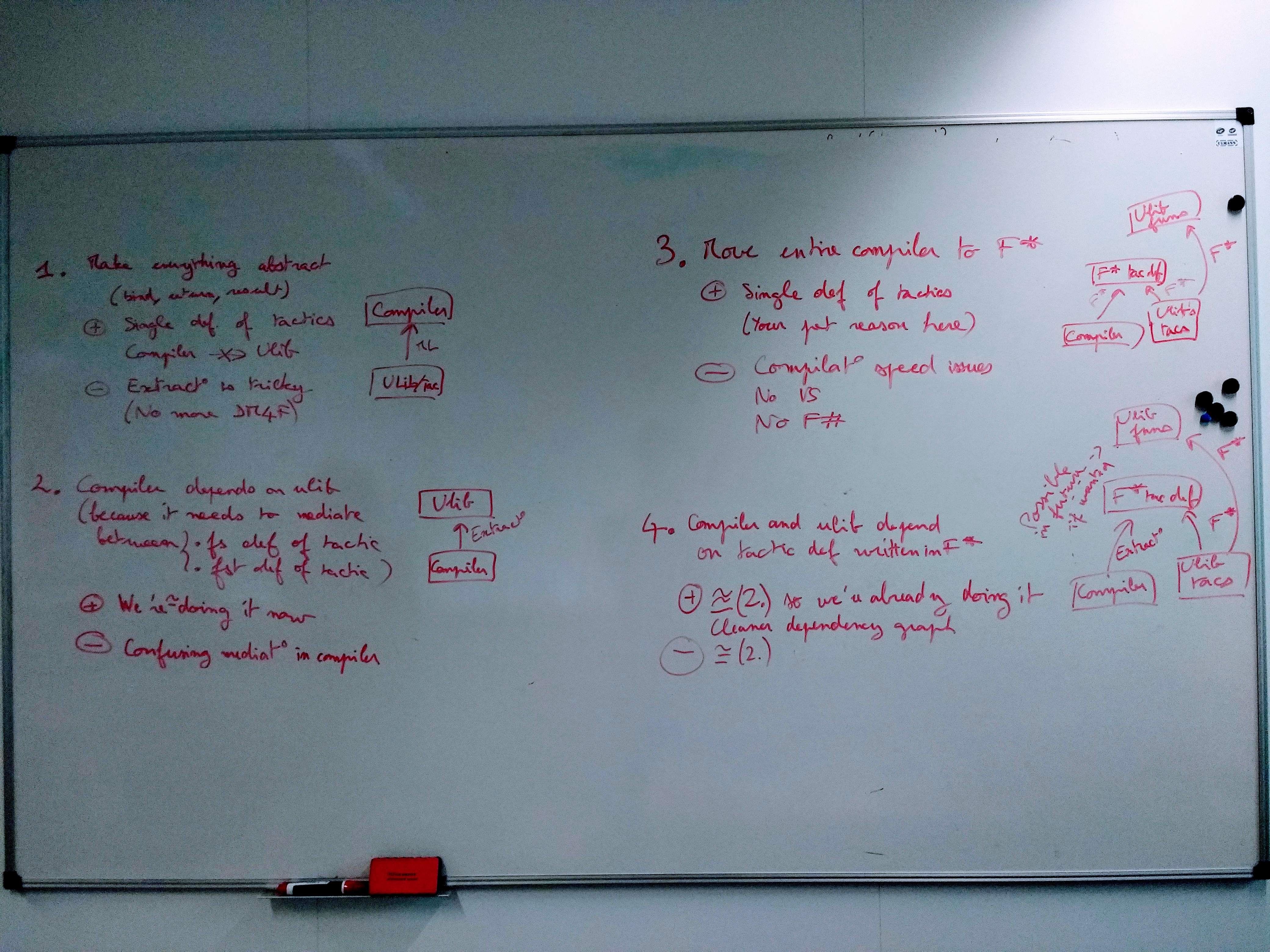

A summary of the preceding day's debate on how to best handle bootstrapping given dependencies from the compiler onto bits of ulib and of ulib onto bits of the compiler included a recap of 4 options, and the emergence of a fifth one:

Option 1 and 4 were most debated. Option 1 would have added extra work in the compiler for extraction, and turned tactics into a native effect instead of a DM4F. Option 3 created a dependency from the compiler to ulib, preventing dependencies in the other direction and forcing us to move (in the long run) any bit of the compiler that we wanted to use from ulib out of the compiler and into ulib. Option 1 was rejected due to the DM4F part (we'd like tactics to benefit from future DM4F improvements).

Option 0, similar to option 1 and rejected on the previous day, was to have the tactics parts of ulib depend on the compiler's definitions through opaque interfaces. Unfortunately, this meant that DM4F couldn't be used.

Option 5 is a clever twist from Jonathan on option 0: write the all the bits of the compiler that ulib currently depends on for native tactics in F*∩F# instead of OCaml, and let the tactics in ulib depend on that (not on an interface — on the definitions themselves) to enable DM4F. Then, at extraction time, do not extract the actual definitions: they are already in the compiler.

Final decision: option 5. Clément and Victor will do the required engineering mid-august.

Clément has been working on pattern matching and demoed a tiny crush implementation. We discussed two aspects, and future perspectives:

-

Current implementation: the tactic quotes a lambda term, considering each binder as unification variable and converting terms into a simple pattern type. An interpreter then runs the query, doing the required amount of inspect-ing.

Before:

g <-- cur_goal; match inspect g with | Tv_App squash g -> (match inspect squash with | Tv_FVar squash -> if inspect_fv squash = squash_qn then (match inspect g with | Tv_App eq_type_x y -> (match inspect eq_type_x with | Tv_App eq_type x -> (match inspect eq_type with | Tv_App eq typ -> (match inspect eq with | Tv_FVar eq -> if inspect_fv eq = eq2_qn then <some tactic here> else fail "not an equality" | _ -> fail "not an app2 of fvar: ") | _ -> fail "not an app3") | _ -> fail "not an app2") | _ -> fail "not an app under squash") else fail "not a squash" | _ -> fail "not an app of fvar at top level") | _ -> fail "not an app at top level"After:

(fun x y -> squash (x == y)) --> <some tactic here>

-

There's a lot of overlap here with unification here, and some tings like

contextin Coq (matching subterms) imply solving tricky unification problems. We could add hooks to use the unifier instead of reimplementing things here.Pros: Lots of existing code can be reused; the unifier is pretty good; this would give it extra testing; Nik says it does higher-order unification like a pro.

Cons: This would be using the unifier for two relatively different purposes — optimizations suitable for one might not work well for the other; the unifier is trusted, but the pattern matcher doesn't need to be, so extensions and optimizations would have to clear a higher threshold; the unifier is implemented in F*∩F#, not in full F*, so that's less dogfooding; this prevents some of the optimizations suggested in “perspectives” below.

Perspectives:

- Introducing better syntax for quoting and unquoting and for patterns would be nice. This is fuzzy: it could mean making the existing notion of patterns accessible outside the compiler. Or it could mean adding syntax to more easily define lambdas (like e.g.

@($x + $y)to mean(fun x y -> x + y); these would be named arguments, so no issues with argument ordering). - There's plenty of fun optimization to do for patterns. We can use

synth_by_tacticto synthesize efficient pattern-matching tactics, for example (think partial-evaluation-style specializations of a unification engine to specific patterns, with additional side-tables for faster-matching). This is possible if we do all pattern-matching on the tactics side.

Main topics: Tactic debugging/tracing ∙ tactic edition in fstar-mode ∙ native compilation ∙ Separation logic

Victor and Clément demoed preliminary goal visualization in F* mode. Goals are sent to Emacs using a new kind of asynchronous message (proof-state), sent every time the dump tactic is invoked. Goals look like this:

let a (x: int{x == 1}) (y: int{y == 1}) : unit =

assert_by_tactic (x + y == 2)

(dump "arith")

arith Fri Aug 4 18:11:54 2017

Goal 1/1

x#603892: (x:Prims.int{ x == 1 })

y#603893: (y:Prims.int{ y == 1 })

y#604068: Prims.int

uu___#604069: Prims.squash (y == x + y)

y#604070: Prims.int

uu___#604071: Prims.squash (y == 2)

y#604072: Type0

uu___#604073: Prims.squash (y == (y == y))

--------------------------------------------------------------------------------

Prims.squash (x + y == 2)

(*?u33*) _ x y y uu___604069 y uu___604071 y uu___604073

The corresponding message in the F* IDE protocol is like this:

{ "contents": {

"smt-goals": [],

"goals": [

{ "goal": { "type": "Prims.squash (x + y == 2)",

"witness": "(*?u33*) _ x y y uu___604069 y uu___604071 y uu___604073" },

"hyps": [

{ "type": "(x:Prims.int{ x == 1 })",

"name": "x#603892" },

{ "type": "(y:Prims.int{ y == 1 })",

"name": "y#603893" },

{ "type": "Prims.int",

"name": "y#604068" },

{ "type": "Prims.squash (y == x + y)",

"name": "uu___#604069" },

{ "type": "Prims.int",

"name": "y#604070" },

{ "type": "Prims.squash (y == 2)",

"name": "uu___#604071" },

{ "type": "Type0",

"name": "y#604072" },

{ "type": "Prims.squash (y == (y == y))",

"name": "uu___#604073" } ] } ],

"label": "arith" },

"level": "proof-state",

"kind": "message" }

- TODO: Victor agreed document this protocol extension on the wiki.

We identified four and discussed three approaches for tracing through tactics.

- TODO: All three require propagating range information through the normalizer. Nik agreed to look into it.

-

Pretend that each

bindincludes a call todump.Pros: Makes it trivial to step through tactics and implement advanced debugging (everything is done on the IDE side); doesn't require invasive changes in the compiler; leverages the existing protocol.

Cons: Can be costly for complex tactics (large proof states, large numbers of states).

Post-meeting thoughts: The cons can be mitigated by bounding the debugging depth, or passing certain options to F* beforehand (breakpoints, conditions, …). We could also send state ids instead of complete states, and the UI would retrieve full goals from goal IDs by querying F* (but that last point would require asynchronicity in the IDE implementation: the protocol already is asynchronous, but not the F*-side implementation)

-

Make the normalizer and the tactics interpreter suspendable and resumable: tactics execute partially until the first bind, then suspend their execution and return to the IDE loop, which gets a new kind of result (

partial). Then the IDE sends special commands to step through the code.Pros: Makes it easy to get fine-grained control on which goals get inspected; makes it possible to run tracing faster. Also allows for more advanced debugging, like hot-swapping code or changing values while the code is paused and resuming execution.

Cons: Hard to implement (no call-cc in F*); requires additional support from the Emacs mode; requires complex new extensions of the protocol (see next solution for more on this topic)

-

Like (2), but add a secondary REPL inside the normalizer.

Pros: Same as (2); easier to implement.

Cons: Requires additional support in fstar-mode (two kinds of REPL imply additional developments of the async protocol, including to prevent fstar-mode from sending extra asynchronous messages in the middle of a debugging session); Less aesthetic?

-

(Not discussed) Use

ocamldebug(for bytecode) orgdb(for native code) to step through extracted tactics.Pros: Well-supported, powerful tools; little implementation effort on the F* and fstar-mode sides.

Cons: High overhead (need to extract, run in debugger); need to learn extra tools; no F*-specific support; ; OCaml doesn't have good support for sourcemaps, so one has to step through the OCaml code, not through the F* code (Clément has started lobbying efforts on that).

Final decision: go with (1) for now; revisit once issues pop up.

Note: An implementation of (3) would also allow for debugging arbitrary F* programs.

Problem: Editing tactics (in large terms) is slow.

Causes: F* needs to re-parse, re-desugar, and re-type-infer-and-type-check every time a partial tactic is re-executed. Tactic execution time

We discussed 3 solutions for this:

-

Add aggressive caching everywhere: the details are fuzzy, but basically we want to be able to detect when a term hasn't changed except for tactics, and then get right back to the first changed point in each edited tactic (without re-typechecking the surrounding code and re-computing VCs). This involves three layers of caching: in type-checking, in VC generation, and in tactic execution. And additional layer of caching can be used for individual queries to the SMT solver.

Pros: Transparent to users; simplest execution model on the UI side (repeatedly re-run a failed tactic). Used by Lean and Dafny (for TC and VC gen; there's no tactics there), so feasible.

Cons: Tricky to get right; high implementation complexity; potentially high memory footprint.

-

Make the normalizer and the tactics interpreter suspendable and resumable: tactics execute until completion, then suspend their execution and return to the IDE loop, which gets a new kind of result (

partial). Then the IDE sends special commands to add new code or revert already-run code. Potentially lock code/proofs parts and keep only tactic parts unlocked for edition.Pros: This is the Coq async model, roughly: it works there, so it might work for us; unlike 2 above, we can also keep the regular protocol and extend it to send a list of holes to the UI; then the UI can send edit command related to these holes.

Cons: See (2) of previous topic; hot code swapping; more complex notion of edited region.

-

Add a REPL inside the normalizer and an automatic breakpoint at the end of each tactic; implement hot code-swapping; do like 2 for the rest.

Pros: Useful for other purposes beyond tactic edition; smaller change?

Cons: See (3) above, plus code swapping; unclear story about stepping back in a tactic (canceling already-executed parts); cannot piggyback on the existing protocol.

-

Devise new modes of edition:

- Add simple ways to extract lemmas from a large proof: the IDE can create one lemma from each

assert_by_tactic, and insert the lemma and its partial proof in the buffer before the main theorem. Then the main theorem doesn't need to be repeatedly parsed and typechecked. Pros: Easy, cheap. Cons: Partial solution. - Add new where-style syntax to defer some goals. Terms are fully typechecked and verified with all lemmas assumed, then F* expects the next

npushes (one per assert_by_tactic) to be tactic definitions solving each of the asserts. Pros: doesn't require re-executing/typechecking terms; simple implementation (add a list of pending assertions, aka obligations in Coq land). Cons: tactics are decoupled from their goal (no inline tactics); some people dislike obligations. Post-meeting note: Such an approach naturally fits other interesting applications, like supplying extrinsic proofs of termination or observational purity after defining terms, or proving exhaustivity of pattern matches after defining them.

- Add simple ways to extract lemmas from a large proof: the IDE can create one lemma from each

No clear consensus on final decision.

Victor ran into issues (edit 2017-08-01: fixed) with not an embedded result-style error messages. Issue https://github.com/FStarLang/FStar/issues/1154 was opened to document this.

Nick had performance issues where native compilation only seemed to add overhead. Victor agreed to look into the example program. Clément asked how much of the program was spent in SMT vs. tactics to gauge the maximum expected speedup, and Nick offered to measure it.

Aseem and Monal are working on a separation logic framework using tactics. The WIP is in branch monal_plugins. This uses deep-embedded terms with shallow-embedded pre-post conditions in Hoare triples. Suggest to look into the framework developed in Coq for the FSCQ verified file system.

-

2016-07-27Nik Look at Victor's reification issue.