./download_models.sh provided by [9].

./download_images.sh

python3 neural_image_style_transfer.py built upon [9].

Since Gatys et al. proposed an image style transfer algorithm using a convolutional neural network (CNN) in 2015 ([1.1], [1.2]), many subsequent works have extended their method and produced various results. Jing et al. ([2]) suggest dividing these methods into 3 categories: Maximum Mean Discrepancy (MMD)-based descriptive methods, Markov Random Field (MRF)-based descriptive methods, and generative methods. In this project we focus on MMD-based methods, which achieve style transfer by gradient descent on an output image. We reproduce the result of [1.1], implement the "activation shift" idea of [3] to improve transfer quality, and then give further insight into the arguments of [4] through both mathematical proofs and experiments.

We briefly review the algorithm of [1.1] to set up the mathematical notation used throughout this project. The notation is mainly adapted from [4] and [5].

Given a content image $x_c$ and a style image $x_s$, we would like to generate an output image $x_o$ that combines the content of $x_c$ with the style of $x_s$. $x_o$ has the same height and width as $x_c$ and is initialized with the pixel values of $x_c$. Let $x_c$, $x_s$, and $x_o$ be passed through a pretrained VGG19 CNN ([6]). We denote the heights of the feature maps of $x_c$, $x_s$, and $x_o$ in layer $l$ of the CNN by $h_c^l$, $h_s^l$, and $h_o^l$, respectively, and their widths by $w_c^l$, $w_s^l$, and $w_o^l$. Let $m_z^l = h_z^l \times w_z^l$ for all $z \in \{c, s, o\}$, and let $n^l$ be the number of filters in layer $l$. We then denote the rearranged feature maps of $x_c$, $x_s$, and $x_o$ in layer $l$ by the matrices $P^l \in M_{n^l \times m_c^l}$, $S^l \in M_{n^l \times m_s^l}$, and $F^l \in M_{n^l \times m_o^l}$, respectively: for $0 \le k < h_c^l$ and $0 \le q < w_c^l$, $P^l_{i,(w_c^l k + q + 1)}$ is the value in the $(k+1)$-th row and $(q+1)$-th column of the $i$-th feature map of $x_c$ in layer $l$. $S^l$ and $F^l$ are defined analogously. We achieve style transfer by minimizing the loss function $L = L_c + L_s$: we backpropagate through the CNN and perform gradient descent on $x_o$ for hundreds of iterations, where

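$L_c = \sum_l \frac{a^l}{n^l m_c^l} \sum_{i=1}^{n^l} \sum_{k=1}^{m_c^l} (F^l_{ik} - P^l_{ik})^2$ is the content loss, and

$L_s = \sum_l \frac{b^l}{(n^l)^2} \sum_{i=1}^{n^l} \sum_{j=1}^{n^l} (G^l_{ij} - A^l_{ij})^2$ is the style loss, where

$A^l = \frac{1}{m_s^l} S^l (S^l)^T$ and $G^l = \frac{1}{m_o^l} F^l (F^l)^T$ are averaged Gramian matrices, and

$a^l$ and $b^l$ are user-specified weights.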
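The rearrangement of a layer's feature maps into $P^l$, $S^l$, or $F^l$ is a row-major flatten. The sketch below assumes an array of shape (n, h, w) standing in for one layer's activations; it uses random values rather than real VGG19 outputs, and `rearrange` is a hypothetical helper name. It checks that pixel $(k, q)$ of the $i$-th map lands in column $w k + q$ with 0-based indexing, which is column $w k + q + 1$ in the 1-based convention above.

```python
import numpy as np


def rearrange(feature_maps: np.ndarray) -> np.ndarray:
    """Flatten an (n, h, w) stack of feature maps into an n x (h*w) matrix.

    Row i is the i-th feature map read row by row, so pixel (k, q)
    (0-based) of map i ends up in column w*k + q.
    """
    n, h, w = feature_maps.shape
    return feature_maps.reshape(n, h * w)


# Random activations standing in for one CNN layer: n = 4 filters, 3 x 5 maps.
rng = np.random.default_rng(0)
fmaps = rng.standard_normal((4, 3, 5))
P = rearrange(fmaps)

n, h, w = fmaps.shape
for i in range(n):
    for k in range(h):
        for q in range(w):
            # 0-based column w*k + q is the 1-based column w*k + q + 1.
            assert P[i, w * k + q] == fmaps[i, k, q]
print(P.shape)  # (4, 15)
```

For a contiguous PyTorch tensor, `reshape` (or `view`) to `(n, h * w)` flattens in the same row-major order.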

In what follows, we omit the superscript $l$ when it is not needed.

The meaning of Gramian matrices is explained clearly in [7], section 1: $A_{ij} = \frac{1}{m_s} \sum_{k=1}^{m_s} S_{ik} S_{jk}$ indicates how often the $i$-th and $j$-th features appear together. $G$ is interpreted similarly.

The content loss takes care of "where" a feature appears in a feature map. On the other hand, the style loss emphasizes "how often" distinct features appear together but does not care "where" they co-occur.
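Here is a minimal NumPy sketch of $L = L_c + L_s$. It assumes that each layer's rearranged feature matrices are already available as arrays, stored in dictionaries keyed by hypothetical layer names. The data are random stand-ins, not VGG19 activations. The usage lines illustrate the "where" versus "how often" distinction. Shuffling the columns (positions) of a feature matrix leaves its averaged Gramian matrix, and hence the style loss, unchanged. The same shuffle does change the content loss.

```python
import numpy as np


def averaged_gram(X: np.ndarray) -> np.ndarray:
    """Averaged Gramian matrix (1/m) X X^T of an n x m feature matrix."""
    return X @ X.T / X.shape[1]


def content_loss(F, P, a=1.0):
    """a / (n m_c) * sum_{i,k} (F_ik - P_ik)^2 for one layer (F and P are n x m_c)."""
    n, m_c = P.shape
    return a / (n * m_c) * np.sum((F - P) ** 2)


def style_loss(F, S, b=1.0):
    """b / n^2 * sum_{i,j} (G_ij - A_ij)^2 for one layer; m_o and m_s may differ."""
    n = S.shape[0]
    return b / n**2 * np.sum((averaged_gram(F) - averaged_gram(S)) ** 2)


def total_loss(F, P, S, a, b):
    """L = L_c + L_s summed over layers; every argument is a dict keyed by layer name."""
    L_c = sum(content_loss(F[layer], P[layer], a[layer]) for layer in a)
    L_s = sum(style_loss(F[layer], S[layer], b[layer]) for layer in b)
    return L_c + L_s


rng = np.random.default_rng(1)
P1 = rng.standard_normal((8, 20))    # content features: n = 8, m_c = 20
S1 = rng.standard_normal((8, 35))    # style features: m_s = 35 (sizes may differ)
perm = np.roll(np.arange(20), 1)     # a fixed, non-identity shuffle of positions

# Moving features around ("where") keeps co-occurrence statistics ("how often").
print(np.isclose(style_loss(P1, P1[:, perm]), 0.0))   # True
print(content_loss(P1[:, perm], P1) > 0)              # True
```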

- Though [1.1] assumes that $x_c$ and $x_s$ have identical heights and widths, they are allowed to differ; [5] has no such requirement.

- In the literature, the Gramian matrix comes in two forms: $A = SS^T$ (others) and $A = S^TS$ ([5]). The former is more consistent with the APIs of deep learning libraries, while the latter is the standard form ([10]). We use the former.
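The two forms differ in size. For an $n \times m$ feature matrix, $SS^T$ is $n \times n$, with one entry per pair of filters. $S^TS$ is $m \times m$, with one entry per pair of spatial positions. The small NumPy check below uses random stand-in data. It also shows that both forms share the same trace, namely the sum of squared activations.

```python
import numpy as np

rng = np.random.default_rng(2)
S = rng.standard_normal((3, 10))   # n = 3 filters, m = 10 positions

filter_gram = S @ S.T      # n x n: the form used in this project
position_gram = S.T @ S    # m x m: grows quadratically with the image area

print(filter_gram.shape, position_gram.shape)   # (3, 3) (10, 10)
# Both traces equal the sum of squared entries of S.
assert np.isclose(np.trace(filter_gram), np.trace(position_gram))
assert np.isclose(np.trace(filter_gram), np.sum(S ** 2))
```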

In this project we use 3 content images and 3 style images as inputs and get 3 × 3 = 9 output images in each experiment.

Gatys kindly provides his implementation of [1.1] in [9]. All the extensions below are built upon Gatys' code.

In this part, we simply run Gatys' code to generate style-transferred images. Some results exhibit "ghosting", as reported in [8].

Risser et al. argue that ghosting occurs because multiple sets of pixel values can produce similar feature maps when passed through the CNN, and some of these sets look like ghosting ([8]). Novak et al. give a related argument in [3], section 3.3, and suggest that an "activation shift" can reduce the ambiguity among candidate sets of pixel values. Their modification is as follows: instead of letting

$A = \frac{1}{m_s} SS^T$ and $G = \frac{1}{m_o} FF^T$,

let

$A = \frac{1}{m_s} (S + sU)(S + sU)^T$ and $G = \frac{1}{m_o} (F + sU)(F + sU)^T$, where

$s$ is a user-specified scalar and $U$ is the all-ones matrix (whose size varies as needed).
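Below is a sketch of the shifted averaged Gramian matrix, assuming NumPy arrays and a hypothetical helper `shifted_gram`. Adding $sU$ to $S$ adds $s$ to every entry. Expanding the product then gives $\frac{1}{m_s}(S+sU)(S+sU)^T = A + s(\mu\mathbf{1}^T + \mathbf{1}\mu^T) + s^2\mathbf{1}\mathbf{1}^T$, where $\mu$ is the vector of row means of $S$. The code checks this identity numerically on random stand-in data. It makes visible that the shift mixes first-order (mean) information into the Gramian matrix.

```python
import numpy as np


def shifted_gram(X: np.ndarray, s: float) -> np.ndarray:
    """(1/m)(X + sU)(X + sU)^T, where U is the all-ones n x m matrix."""
    n, m = X.shape
    Xs = X + s * np.ones((n, m))
    return Xs @ Xs.T / m


rng = np.random.default_rng(3)
S = rng.standard_normal((4, 12))
s = -100.0
n, m = S.shape

A = S @ S.T / m                      # unshifted averaged Gramian matrix
mu = S.mean(axis=1, keepdims=True)   # n x 1 vector of row means
ones = np.ones((n, 1))
expanded = A + s * (mu @ ones.T + ones @ mu.T) + s**2 * (ones @ ones.T)

assert np.allclose(shifted_gram(S, s), expanded)
assert np.allclose(shifted_gram(S, 0.0), A)
```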

The explanation of this modification is given in [3], and we do not restate it here. We simply implement it and examine its performance as $s$ varies. The ghosting disappears as $|s|$ increases: activation shift removes ghosting.

Gramian matrix with activation shift. Value of s from top to bottom: -600, -500, -400, -300, -200, -100, 0, 100, 200, 300, 400, 500, 600.

Li et al. and Risser et al. regard each column $S_{\cdot k}$ of $S$ and $F_{\cdot k}$ of $F$ as a sample drawn from the "style" probability distributions $D_s$ and $D_o$, respectively ([4], [8]). Minimizing the Gramian-matrix-based style loss $L_s$ is one way to match $D_o$ to $D_s$.

Li et al. further argue that minimizing $L_s$ can be interpreted as minimizing the MMD with a quadratic kernel. We present a slightly modified version of their proof.

1⁄n2 Σi=1n Σj=1n (Gij - Aij)2

= 1⁄n2 Σi=1n Σj=1n ((1⁄moFFT)ij - (1⁄msSST)ij)2

= 1⁄n2 Σi=1n Σj=1n ((1⁄mo Σk=1mo FikFjk) - (1⁄ms Σk=1ms SikSjk))2

= 1⁄n2 Σi=1n Σj=1n ((1⁄mo Σk=1mo FikFjk)2 + (1⁄ms Σk=1ms SikSjk)2 - 2(1⁄mo Σk=1mo FikFjk)(1⁄ms Σk=1ms SikSjk))

= 1⁄n2 Σi=1n Σj=1n ((1⁄mo2 Σk1=1mo Σk2=1mo Fik1Fjk1Fik2Fjk2) + (1⁄ms2 Σk1=1ms Σk2=1ms Sik1Sjk1Sik2Sjk2) - (2⁄moms Σk1=1mo Σk2=1ms Fik1Fjk1Sik2Sjk2))

= (1⁄mo2 Σk1=1mo Σk2=1mo 1⁄n2 Σi=1n Σj=1n Fik1Fjk1Fik2Fjk2) + (1⁄ms2 Σk1=1ms Σk2=1ms 1⁄n2 Σi=1n Σj=1n Sik1Sjk1Sik2Sjk2) - (2⁄moms Σk1=1mo Σk2=1ms 1⁄n2 Σi=1n Σj=1n Fik1Fjk1Sik2Sjk2)

= (1⁄mo2 Σk1=1mo Σk2=1mo (1⁄n Σi=1n Fik1Fik2)2) + (1⁄ms2 Σk1=1ms Σk2=1ms (1⁄n Σi=1n Sik1Sik2)2) - (2⁄moms Σk1=1mo Σk2=1ms (1⁄n Σi=1n Fik1Sik2)2)

= (1⁄mo2 Σk1=1mo Σk2=1mo (1⁄n F.k1TF.k2)2) + (1⁄ms2 Σk1=1ms Σk2=1ms (1⁄n S.k1TS.k2)2) - (2⁄moms Σk1=1mo Σk2=1ms (1⁄n F.k1TS.k2)2)

= (1⁄mo2 Σk1=1mo Σk2=1mo K(F.k1, F.k2, 2)) + (1⁄ms2 Σk1=1ms Σk2=1ms K(S.k1, S.k2, 2)) - (2⁄moms Σk1=1mo Σk2=1ms K(F.k1, S.k2, 2)) -------- (1), where

K(v, u, p) = (1⁄nvTu)p is the averaged power kernel.
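Equation (1) can be checked numerically on small random matrices. The NumPy sketch below evaluates both sides directly; `gram_side`, `mmd_side`, and `power_kernel_matrix` are hypothetical names, and the features are random stand-ins. The MMD side builds the $m_o \times m_o$, $m_s \times m_s$, and $m_o \times m_s$ kernel matrices. That is only feasible here because $m_o$ and $m_s$ are tiny.

```python
import numpy as np


def power_kernel_matrix(X, Y, p):
    """Entry (k1, k2) is K(X[:, k1], Y[:, k2], p) = ((1/n) X[:, k1]^T Y[:, k2])^p."""
    n = X.shape[0]
    return (X.T @ Y / n) ** p


def mmd_side(F, S, p):
    """Right-hand side of equation (1) for p = 2 (and its analogue for other p)."""
    return (power_kernel_matrix(F, F, p).mean()
            + power_kernel_matrix(S, S, p).mean()
            - 2 * power_kernel_matrix(F, S, p).mean())


def gram_side(F, S):
    """Left-hand side of equation (1): (1/n^2) sum_{i,j} (G_ij - A_ij)^2."""
    n = F.shape[0]
    G = F @ F.T / F.shape[1]
    A = S @ S.T / S.shape[1]
    return np.sum((G - A) ** 2) / n**2


rng = np.random.default_rng(4)
F = rng.standard_normal((5, 7))    # n = 5, m_o = 7
S = rng.standard_normal((5, 9))    # n = 5, m_s = 9
assert np.isclose(gram_side(F, S), mmd_side(F, S, 2))
```

Taking `.mean()` of a kernel matrix implements the $\frac{1}{m_o^2}\sum\sum$, $\frac{1}{m_s^2}\sum\sum$, and $\frac{1}{m_o m_s}\sum\sum$ prefactors in one step.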

In theory, any positive number can serve as the power $p$ of the kernel. In practice, however, computing the MMD side directly requires $S^TS$ and $F^TF$, which have $m_s^2$ and $m_o^2$ entries, respectively, and this exhausts memory. Thus, to use a power kernel with a different $p$, we need to convert the MMD side into a Gramian side. We now show that MMD with an integer-power kernel is easy to convert. The following is the general version of equation (1).

Let p be a nonnegative integer.

(1⁄mo2 Σk1=1mo Σk2=1mo K(F.k1, F.k2, p)) + (1⁄ms2 Σk1=1ms Σk2=1ms K(S.k1, S.k2, p)) - (2⁄moms Σk1=1mo Σk2=1ms K(F.k1, S.k2, p))

= (1⁄mo2 Σk1=1mo Σk2=1mo (1⁄n F.k1TF.k2)p) + (1⁄ms2 Σk1=1ms Σk2=1ms (1⁄n S.k1TS.k2)p) - (2⁄moms Σk1=1mo Σk2=1ms (1⁄n F.k1TS.k2)p)

= (1⁄mo2 Σk1=1mo Σk2=1mo (1⁄n Σi=1n Fik1Fik2)p) + (1⁄ms2 Σk1=1ms Σk2=1ms (1⁄n Σi=1n Sik1Sik2)p) - (2⁄moms Σk1=1mo Σk2=1ms (1⁄n Σi=1n Fik1Sik2)p)

= (1⁄mo2 Σk1=1mo Σk2=1mo 1⁄np Σi1=1n Σi2=1n ... Σip=1n (Πq=1p Fiqk1)(Πq=1p Fiqk2)) + (1⁄ms2 Σk1=1ms Σk2=1ms 1⁄np Σi1=1n Σi2=1n ... Σip=1n (Πq=1p Siqk1)(Πq=1p Siqk2)) - (2⁄moms Σk1=1mo Σk2=1ms 1⁄np Σi1=1n Σi2=1n ... Σip=1n (Πq=1p Fiqk1)(Πq=1p Siqk2))

= 1⁄np Σi1=1n Σi2=1n ... Σip=1n ((1⁄mo2 Σk1=1mo Σk2=1mo (Πq=1p Fiqk1)(Πq=1p Fiqk2)) + (1⁄ms2 Σk1=1ms Σk2=1ms (Πq=1p Siqk1)(Πq=1p Siqk2)) - (2⁄moms Σk1=1mo Σk2=1ms (Πq=1p Fiqk1)(Πq=1p Siqk2)))

= 1⁄np Σi1=1n Σi2=1n ... Σip=1n ((1⁄mo Σk=1mo Πq=1p Fiqk)2 + (1⁄ms Σk=1ms Πq=1p Siqk)2 - 2(1⁄mo Σk=1mo Πq=1p Fiqk)(1⁄ms Σk=1ms Πq=1p Siqk))

= 1⁄np Σi1=1n Σi2=1n ... Σip=1n ((1⁄mo Σk=1mo Πq=1p Fiqk) - (1⁄ms Σk=1ms Πq=1p Siqk))2 -------- (2).

Equation (2) can be interpreted as follows. MMD with a power-$p$ kernel is equivalent to the mean squared error of the co-occurrence frequencies of all ordered $p$-tuples of features, with repetitions allowed. The case $p = 3$ is also mentioned in [3], section 4.5.
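Equation (2) can also be verified by brute force for small sizes. The sketch below uses NumPy plus `itertools.product`; the helper names are hypothetical and the features are random stand-ins. It evaluates the left-hand side as an MMD with the power kernel. It evaluates the right-hand side as a sum over all $n^p$ ordered tuples $(i_1, \dots, i_p)$, which makes the $n^p$ cost of the Gramian side explicit.

```python
import itertools

import numpy as np


def mmd_power(F, S, p):
    """MMD side of equation (2) with K(v, u, p) = ((1/n) v^T u)^p."""
    n = F.shape[0]

    def K(X, Y):
        return (X.T @ Y / n) ** p

    return K(F, F).mean() + K(S, S).mean() - 2 * K(F, S).mean()


def cooccurrence_side(F, S, p):
    """Right-hand side of equation (2): sum over all n^p ordered index tuples."""
    n = F.shape[0]
    total = 0.0
    for idx in itertools.product(range(n), repeat=p):
        rows = list(idx)  # repeated indices are allowed
        # Average over positions of the product of the p selected feature rows.
        f = np.prod(F[rows, :], axis=0).mean()
        s = np.prod(S[rows, :], axis=0).mean()
        total += (f - s) ** 2
    return total / n**p


rng = np.random.default_rng(5)
F = rng.standard_normal((4, 6))
S = rng.standard_normal((4, 8))
for p in (1, 2, 3):
    assert np.isclose(mmd_power(F, S, p), cooccurrence_side(F, S, p))
```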

Although the generalized Gramian side can be implemented for every nonnegative integer $p$, our implementation of it still runs out of memory for $p \ge 2$. Unfortunately, only $p = 1$ and $p = 2$ (the latter via ordinary Gramian matrices) are practical.
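A back-of-the-envelope estimate shows why the MMD side itself is out of reach. The sizes are assumptions chosen only for illustration, not settings taken from this project: a 512 × 512 input, float32 storage, and VGG19's first layer, which has 64 filters and keeps the full resolution. Under these assumptions, the $n \times n$ averaged Gramian matrix is tiny, while $S^TS$ alone needs $m^2$ floats.

```python
# Hypothetical sizes: a 512 x 512 image at a full-resolution layer with 64 filters.
h = w = 512
n = 64                      # conv1_1 of VGG19 has 64 filters
m = h * w                   # number of spatial positions
bytes_per_float = 4         # float32

gram_bytes = n * n * bytes_per_float      # n x n averaged Gramian matrix
kernel_bytes = m * m * bytes_per_float    # m x m matrix S^T S

print(f"Gramian matrix: {gram_bytes / 2**10:.0f} KiB")
print(f"S^T S:          {kernel_bytes / 2**30:.0f} GiB")
```

This prints 16 KiB for the Gramian matrix against 256 GiB for $S^TS$, before $F^TF$ and $F^TS$ are even counted.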

For $p = 1$, equation (2) simplifies further.

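$$
\begin{aligned}
&\frac{1}{n^p}\sum_{i_1=1}^{n}\cdots\sum_{i_p=1}^{n}\Big(\frac{1}{m_o}\sum_{k=1}^{m_o}\prod_{q=1}^{p}F_{i_qk}-\frac{1}{m_s}\sum_{k=1}^{m_s}\prod_{q=1}^{p}S_{i_qk}\Big)^2\\
&= \frac{1}{n}\sum_{i=1}^{n}\Big(\frac{1}{m_o}\sum_{k=1}^{m_o}F_{ik}-\frac{1}{m_s}\sum_{k=1}^{m_s}S_{ik}\Big)^2\\
&= \frac{1}{n}\sum_{i=1}^{n}\big(\operatorname{mean}(F_{i\cdot})-\operatorname{mean}(S_{i\cdot})\big)^2 \qquad (3)
\end{aligned}
$$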

MMD with a linear kernel is therefore equivalent to the mean squared error of the occurrence frequencies of the individual features.

This is much easier to compute than the general form of equation (2) suggests. We call this approach the "mean vector", as opposed to the original Gramian matrix.
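The mean-vector style loss of equation (3) is essentially a one-liner. The NumPy sketch below uses random stand-in features and hypothetical helper names. It confirms that this loss matches the MMD with the linear kernel $K(v, u, 1) = \frac{1}{n}v^Tu$, computed the expensive way through full kernel matrices.

```python
import numpy as np


def mean_vector_loss(F, S):
    """Equation (3): (1/n) sum_i (mean(F_i.) - mean(S_i.))^2."""
    return np.mean((F.mean(axis=1) - S.mean(axis=1)) ** 2)


def linear_mmd(F, S):
    """MMD with the linear kernel, via the m_o x m_o, m_s x m_s and m_o x m_s kernel matrices."""
    n = F.shape[0]
    return ((F.T @ F / n).mean() + (S.T @ S / n).mean()
            - 2 * (F.T @ S / n).mean())


rng = np.random.default_rng(6)
F = rng.standard_normal((6, 10))
S = rng.standard_normal((6, 14)) + 0.5
assert np.isclose(mean_vector_loss(F, S), linear_mmd(F, S))
```

The first function needs only $O(n(m_o + m_s))$ work and memory, while the second materializes three kernel matrices.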

In this part we show that the style loss using mean vectors does capture some aspects of the style.

Substituting $z + s$ for every entry $z$ of $F$ and $S$ in equation (2), that is, replacing $F$ by $F + sU$ and $S$ by $S + sU$, we have

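$$
\begin{aligned}
&\frac{1}{n^p}\sum_{i_1=1}^{n}\cdots\sum_{i_p=1}^{n}\Big(\frac{1}{m_o}\sum_{k=1}^{m_o}\prod_{q=1}^{p}(F_{i_qk}+s)-\frac{1}{m_s}\sum_{k=1}^{m_s}\prod_{q=1}^{p}(S_{i_qk}+s)\Big)^2\\
&= \frac{1}{m_o^2}\sum_{k_1=1}^{m_o}\sum_{k_2=1}^{m_o}K(F_{\cdot k_1}+sU,F_{\cdot k_2}+sU,p)+\frac{1}{m_s^2}\sum_{k_1=1}^{m_s}\sum_{k_2=1}^{m_s}K(S_{\cdot k_1}+sU,S_{\cdot k_2}+sU,p)-\frac{2}{m_om_s}\sum_{k_1=1}^{m_o}\sum_{k_2=1}^{m_s}K(F_{\cdot k_1}+sU,S_{\cdot k_2}+sU,p) \qquad (4)
\end{aligned}
$$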

Letting $p = 2$, we find that the left-hand side is the style loss of a single layer (without the weight $b^l$) using Gramian matrices with activation shift $s$. The right-hand side is the MMD with a "shifted" quadratic kernel. Thus the conclusion of part 1 can be restated: the style loss using MMD with a shifted quadratic kernel removes ghosting.
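Equation (4) with $p = 2$ can be checked the same way. The style term built from shifted Gramian matrices should equal the MMD with the shifted quadratic kernel $K(v + sU, u + sU, 2)$. The NumPy sketch below uses random stand-in features, a few arbitrary shifts, and hypothetical helper names.

```python
import numpy as np


def shifted_gram_loss(F, S, s):
    """(1/n^2) sum_{i,j} (G_ij - A_ij)^2 with activation shift s."""
    n = F.shape[0]
    G = (F + s) @ (F + s).T / F.shape[1]
    A = (S + s) @ (S + s).T / S.shape[1]
    return np.sum((G - A) ** 2) / n**2


def shifted_quadratic_mmd(F, S, s):
    """MMD with kernel K(v + sU, u + sU, 2) = ((1/n)(v + s)^T (u + s))^2."""
    n = F.shape[0]

    def K(X, Y):
        return ((X + s).T @ (Y + s) / n) ** 2

    return K(F, F).mean() + K(S, S).mean() - 2 * K(F, S).mean()


rng = np.random.default_rng(7)
F = rng.standard_normal((5, 9))
S = rng.standard_normal((5, 11))
for s in (-3.0, 0.0, 2.5):
    assert np.isclose(shifted_gram_loss(F, S, s), shifted_quadratic_mmd(F, S, s))
```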

In part 2, we mentioned that minimizing the Gramian-matrix-based style loss is one way to match $D_o$ to $D_s$. There are other ways to describe $D_o$ and $D_s$, and therefore other ways to match them. In this part, we try two descriptions, the variance vector and the covariance matrix, which give the following style losses:

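$L_s = \sum_l \frac{b^l}{n^l} \sum_{i=1}^{n^l} \big(\operatorname{var}(F^l_{i\cdot}) - \operatorname{var}(S^l_{i\cdot})\big)^2$, and

$L_s = \sum_l \frac{b^l}{(n^l)^2} \sum_{i=1}^{n^l} \sum_{j=1}^{n^l} \big(\operatorname{cov}(D_o)_{ij} - \operatorname{cov}(D_s)_{ij}\big)^2$, respectively.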
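Below is a sketch of both losses for a single layer. It assumes that $\operatorname{var}$ and $\operatorname{cov}$ are population statistics over the columns of $F$ and $S$, normalized by $m_o$ and $m_s$. This estimator is our assumption, since the text does not specify one. With that choice, the covariance matrix equals the averaged Gramian matrix minus the outer product of the mean vector, $\operatorname{cov} = G - \mu\mu^T$, and the variance vector is its diagonal. The code checks both facts on random stand-in data.

```python
import numpy as np


def variance_loss(F, S, b=1.0):
    """b / n * sum_i (var(F_i.) - var(S_i.))^2, population variance over positions."""
    n = F.shape[0]
    return b / n * np.sum((F.var(axis=1) - S.var(axis=1)) ** 2)


def covariance_loss(F, S, b=1.0):
    """b / n^2 * sum_{i,j} (cov_o_ij - cov_s_ij)^2; rows are features, columns are samples."""
    n = F.shape[0]
    cov_o = np.cov(F, bias=True)   # normalized by m_o
    cov_s = np.cov(S, bias=True)   # normalized by m_s
    return b / n**2 * np.sum((cov_o - cov_s) ** 2)


rng = np.random.default_rng(8)
F = rng.standard_normal((4, 30))
mu = F.mean(axis=1, keepdims=True)
G = F @ F.T / F.shape[1]
assert np.allclose(np.cov(F, bias=True), G - mu @ mu.T)
# The variance vector is the diagonal of the covariance matrix.
assert np.allclose(np.diag(np.cov(F, bias=True)), F.var(axis=1))
```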

We now examine their performance. The style loss using covariance matrices creates great results without ghosting, while the one using variance vectors easily results in ghosting. Some styles are also easier to transfer well (that is, with less ghosting) than others; for example, Vincent van Gogh's "The Starry Night" is a relatively easy one.

- We can also add activation shifts to mean vectors, variance vectors, and covariance matrices. However, writing down the corresponding style losses shows that the activation shifts cancel out. Shifting every entry of $F$ and $S$ by $s$ shifts both mean vectors by the same $s$ and leaves variances and covariances unchanged. Thus activation shifts make sense only for Gramian matrices.

- Style losses using linear combinations ([4], section 4.2) of mean vectors, Gramian matrices, variance vectors, and covariance matrices are possible, but the goal of this project is to examine the ability of each probability description to transfer the style, so we will not do such tests.
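The cancellation described in the first note above can be checked numerically. Adding the same $s$ to every entry of $F$ and $S$ leaves the mean-vector, variance-vector, and covariance losses unchanged, whereas the Gramian loss changes. The NumPy sketch below uses random stand-in features, and `losses` is a hypothetical helper that returns the four unweighted style terms of one layer.

```python
import numpy as np


def losses(F, S):
    """Mean-vector, variance-vector, covariance and Gramian style terms (unweighted)."""
    n = F.shape[0]
    mean = np.mean((F.mean(axis=1) - S.mean(axis=1)) ** 2)
    var = np.mean((F.var(axis=1) - S.var(axis=1)) ** 2)
    cov = np.sum((np.cov(F, bias=True) - np.cov(S, bias=True)) ** 2) / n**2
    gram = np.sum((F @ F.T / F.shape[1] - S @ S.T / S.shape[1]) ** 2) / n**2
    return np.array([mean, var, cov, gram])


rng = np.random.default_rng(9)
F = rng.standard_normal((5, 20))
S = rng.standard_normal((5, 25)) * 2.0 + 1.0
base = losses(F, S)
shifted = losses(F + 100.0, S + 100.0)

assert np.allclose(base[:3], shifted[:3])     # mean, variance, covariance: shift cancels
assert not np.isclose(base[3], shifted[3])    # Gramian: shift changes the loss
```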

The experiments above show that two style losses create great results: the one using shifted Gramian matrices and the one using covariance matrices. We regard the latter as more elegant, since the former requires choosing one more hyperparameter, namely the activation shift $s$. However, there are some cases in which the former outperforms the latter.

During this project, we found that striking a balance between the loss weights $a^l$ and $b^l$ is nontrivial. There is no solid theory to guide their tuning, and a good balance seems to be struck only occasionally. This may be why people seek other approaches, such as feed-forward methods, to achieve neural image style transfer.

[1.1] L. A. Gatys, A. S. Ecker, and M. Bethge. Image style transfer using convolutional neural networks. 2016.

[1.2] L. A. Gatys, A. S. Ecker, and M. Bethge. A neural algorithm of artistic style. 2015. Preprint version of [1.1].

[2] Y. Jing, Y. Yang, Z. Feng, J. Ye, and M. Song. Neural Style Transfer: A Review. 2017.

[3] R. Novak and Y. Nikulin. Improving the neural algorithm of artistic style. 2016.

[4] Y. Li, N. Wang, J. Liu, and X. Hou. Demystifying neural style transfer. 2017.

[5] L. A. Gatys, A. S. Ecker, M. Bethge, A. Hertzmann, and E. Shechtman. Controlling perceptual factors in neural style transfer. 2016.

[6] K. Simonyan and A. Zisserman. Very Deep Convolutional Networks for Large-Scale Image Recognition. 2014.

[7] Y. Nikulin and R. Novak. Exploring the neural algorithm of artistic style. 2016.

[8] E. Risser, P. Wilmot, and C. Barnes. Stable and controllable neural texture synthesis and style transfer using histogram losses. 2017.

[9] PyTorch implementation of [1.1] by Gatys. https://github.com/leongatys/PytorchNeuralStyleTransfer

[C.1] Ludwig van Beethoven. https://upload.wikimedia.org/wikipedia/commons/thumb/6/6f/Beethoven.jpg/1200px-Beethoven.jpg

[C.2] Notre-Dame de Paris. http://www.newlooktravel.tn/photo/3-80-NewLookTravel_Paris_Cathedrale-Notre-Dame-nuit.jpg

[C.3] Opening of symphony no. 5 by Ludwig van Beethoven. https://www.bostonglobe.com/arts/music/2012/11/17/beethoven-fifth-symphony-warhorse-for-all-times/UjG7gHBTD5z5qB843gYZZO/story.html

[S.1] The Starry Night by Vincent van Gogh. https://raw.githubusercontent.com/leongatys/PytorchNeuralStyleTransfer/master/Images/vangogh_starry_night.jpg

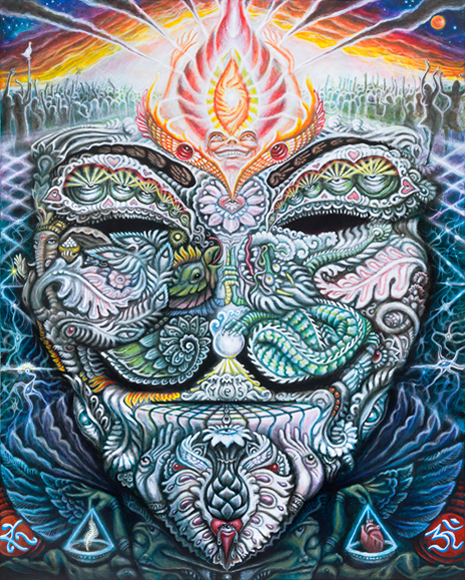

[S.2] Fawkes by Randal Roberts. https://www.allofthisisforyou.com/images/2012-fawkes-randal-roberts_580.jpg

[S.3] Ice. http://eskipaper.com/images/ice-5.jpg

[10] Gramian matrix. https://en.wikipedia.org/wiki/Gramian_matrix