Self-hosted backup upload target that manages versions, encryption, and retention, and supports cloud endpoints

- Self-hostable

- Handles backup versions by date, size and count

- File-based, no database needed

- Supports multiple local and cloud endpoints

- Supports multiple backup sources (machines you want to back up), distinguished by hostname

- No dependencies unless you want cloud endpoints

The idea is that you upload your backups to BackupDrop, and once uploaded they cannot be changed by the device that was backed up. Some ransomware (crypto lockers) actively looks for backup devices such as NAS boxes or backup drives and deletes or encrypts them too. With BackupDrop, the machine you backed up cannot delete, modify, or even access past backups. It's meant to be a push-only system, so you can upload backups via curl from any device you want to back up.

BackupDrop can also handle multiple external storage providers and save the backups on S3, FTP, or NFS.

Upload a file with curl:

    curl -s -F "file=@theFileYouWantToUpload" https://yourBackupDrop.server.url/[identifier]

For every identifier, a directory is created in the data/ folder. It makes sense to use the hostname of the server or the name of the specific application you're backing up.
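Since the identifier ends up as a directory name, it can help to normalize it before building the upload URL. The following is a minimal sketch, not part of BackupDrop: `BACKUPDROP_URL` and `backupdrop_upload_url` are hypothetical names, and the set of allowed characters is an assumption chosen to keep the identifier a single, safe path segment.

```shell
#!/bin/bash
# Hypothetical base URL variable (not a BackupDrop setting); override as needed.
BACKUPDROP_URL="${BACKUPDROP_URL:-https://yourBackupDrop.server.url}"

# Hypothetical helper: print the upload URL for the given identifier.
backupdrop_upload_url() {
    local identifier="$1"
    # Assumption: replace anything outside letters, digits, dot, dash,
    # and underscore with "_" so the identifier stays one path segment.
    identifier="$(printf '%s' "$identifier" | tr -c 'A-Za-z0-9._-' '_')"
    # Strip a trailing slash from the base URL before joining.
    printf '%s/%s\n' "${BACKUPDROP_URL%/}" "$identifier"
}

# Usage (not executed here):
#   curl -s -F "file=@backup.tar.gz" "$(backupdrop_upload_url "$(hostname)")"
```

Using one helper for every upload keeps all backups of a machine in the same data/ subdirectory, even if the script is copied between hosts.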

A complete backup script could look like this:

    #!/bin/bash
    set -e

    # Dump all MySQL databases so they end up in the archive
    mysqldump --all-databases > db.sql

    # Space-separated list of paths to back up
    paths="/etc/nginx/ /var/www/ /home/myuser db.sql"

    echo "[i] Starting compression"
    # $paths is intentionally unquoted so it splits into separate arguments
    tar --exclude-vcs --exclude="backup.tar.gz" -c -v -z -f backup.tar.gz $paths

    echo "[i] done! Uploading"
    curl -s -F "file=@backup.tar.gz" "https://yourBackupDrop.server.url/$(hostname)"

    echo "[i] cleanup"
    rm backup.tar.gz
    rm db.sql

You can and should encrypt files before uploading them (especially if you're using cloud endpoints), but if you can't, BackupDrop can handle it for you using public key or password encryption.
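As an illustration of encrypting on the client before upload, here is a minimal sketch using the OpenSSL command line with a passphrase file. This is one possible approach and an assumption of this example, not the mechanism BackupDrop itself uses; `encrypt_backup` and `decrypt_backup` are hypothetical helper names, and the `-pbkdf2` option requires OpenSSL 1.1.1 or newer.

```shell
#!/bin/bash
# Hypothetical helper: encrypt file $1 into $2 using the passphrase stored in file $3.
encrypt_backup() {
    local src="$1" dst="$2" passfile="$3"
    openssl enc -aes-256-cbc -pbkdf2 -salt -in "$src" -out "$dst" -pass "file:$passfile"
}

# Hypothetical helper: decrypt file $1 into $2 using the passphrase stored in file $3.
decrypt_backup() {
    local src="$1" dst="$2" passfile="$3"
    openssl enc -d -aes-256-cbc -pbkdf2 -in "$src" -out "$dst" -pass "file:$passfile"
}

# Usage (not executed here):
#   encrypt_backup backup.tar.gz backup.tar.gz.enc /root/.backup-pass
#   curl -s -F "file=@backup.tar.gz.enc" "https://yourBackupDrop.server.url/$(hostname)"
```

Keeping the passphrase in a file readable only by the backup user avoids exposing it in the process list.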

Run BackupDrop with Docker:

    # without persistence
    docker run --rm --name backupdrop -p 8080:80 -it hascheksolutions/backupdrop

    # with persistence
    docker run --restart unless-stopped --name backupdrop -v /tmp/:/var/www/backupdrop/data -p 8080:80 -it hascheksolutions/backupdrop

Without Docker, start the server with PHP's built-in web server (in a production environment you'll want to use a real web server like nginx):

    cd web
    php -S 0.0.0.0:8080 index.php

Then upload a file using curl:

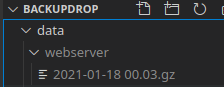

    curl -s -F "file=@webserver.tar.gz" http://localhost:8080/webserver

Response:

    {
        "status": "ok",
        "filename": "2021-01-18 00.03.gz",
        "cleanup": []
    }

This created the folder data/webserver (because we uploaded the file to localhost:8080/webserver) and renamed the file to the upload timestamp plus the original extension.
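The JSON response above includes a `status` field, so a backup script can check it before deleting its local archive. The sketch below shows one way to do that with plain grep; `upload_succeeded` is a hypothetical helper, and since the shape of an error response is not described here, it simply treats anything other than `"status": "ok"` as a failure.

```shell
#!/bin/bash
# Hypothetical helper: succeed only if the response body reports "status": "ok".
upload_succeeded() {
    local response="$1"
    printf '%s' "$response" | grep -Eq '"status"[[:space:]]*:[[:space:]]*"ok"'
}

# Usage (not executed here):
#   response="$(curl -s -F "file=@backup.tar.gz" "http://localhost:8080/webserver")"
#   if upload_succeeded "$response"; then
#       rm backup.tar.gz
#   else
#       echo "[!] upload failed, keeping backup.tar.gz" >&2
#   fi
```

A JSON parser such as jq would be more robust if it is available on the machine; grep is used here only to avoid an extra dependency.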

If you upload the exact same file twice (detected by comparing checksums), BackupDrop deletes the older upload and keeps only the new one. This applies only within the same backup source (hostname).
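The same kind of checksum comparison can be done locally, for example to skip an upload when nothing has changed since the last run. This is a minimal sketch: `same_checksum` is a hypothetical helper, and SHA-256 is an assumption made for illustration, since the algorithm BackupDrop uses is not stated here.

```shell
#!/bin/bash
# Hypothetical helper: succeed if both files exist and have the same SHA-256 checksum.
same_checksum() {
    local a b
    # Fail early if either file is missing, so two empty results never compare equal.
    [ -f "$1" ] && [ -f "$2" ] || return 1
    a="$(sha256sum < "$1" | cut -d' ' -f1)"
    b="$(sha256sum < "$2" | cut -d' ' -f1)"
    [ "$a" = "$b" ]
}

# Usage (not executed here):
#   if same_checksum backup.tar.gz last-upload.tar.gz; then
#       echo "[i] unchanged since last upload"
#   fi
```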

Uploading the same file a second time:

    curl -s -F "file=@webserver.tar.gz" http://localhost:8080/webserver

Result:

    {
        "status": "ok",
        "filename": "2021-01-18 00.24.gz",
        "cleanup": [
            "Deleted '2021-01-18 00.03.gz' because it's a duplicate"
        ]
    }

To change the default settings, copy or rename /config/example.config.inc.php to /config/config.inc.php and change the values as needed.

Check out the example config file to see how you can configure BackupDrop.
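Creating the config file can be scripted so that an existing configuration is never overwritten. This is a small sketch under the assumption that the /config/ paths are relative to the BackupDrop directory; `init_config` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: create config.inc.php from the example unless it already exists.
# $1 is the BackupDrop directory (assumed to contain config/); defaults to ".".
init_config() {
    local root="${1:-.}"
    local example="$root/config/example.config.inc.php"
    local target="$root/config/config.inc.php"
    if [ -e "$target" ]; then
        echo "[i] $target already exists, leaving it untouched"
        return 0
    fi
    cp "$example" "$target"
}

# Usage (not executed here):
#   init_config /var/www/backupdrop
```

Copying rather than renaming keeps the example file around as a reference for the available settings.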