
Home

Instructor: James Newton

Contact info: raise an issue

GitHub.com account required: If you don't have one, sign up, but do NOT use your real name as your username. No identifying data about students, or students themselves, can leave this building without express written consent of guardian and school.

This course is a serious and complex introduction to Robotics, automation, and the challenges of programming the real world. We will use Javascript as the primary language for all sections of the course because it happens to be the language of choice for the robot arm we will use, and because it has a wider application than probably any other language currently being used. The "Dexter" robotic arm from Haddington Dynamics will be available for demos and to test student programs. We will also provide a Sumo Robot Kit from Addicore.com for the students to build, expand into an autonomous robot, and take home. Students will learn to use Javascript to write programs that get data from the real world, describe motions, and design physical objects. By the end of the course, students will have completed a project of their own choosing, and demonstrated the ability to code reality.

This course will be 4 weeks long, 5 days per week (most weeks), 3 hours per day. Later classes will build very quickly on classes early in the course. Missing even one day will be devastating, but you can always make up the material by following the directions here.

We will work 3 hours each day, from 12:30 to 3:30 pm with a short break after 1.5 hours.

Session I: 6/24/19 to 6/28/19 1 week

Session II: 7/1/19 to 7/3/19 (*3 days)

Session III: 7/8/19 to 7/12/19 1 week

Session IV: 7/15/19 to 7/19/19 1 week

Parents / Guardians should arrive to pick up students 10 to 15 minutes before the end of class, at about 3:15 or 3:20 pm.

Students should probably be high school age and able to understand and speak English (unless translators are available), and prior programming experience is strongly recommended. This is an ADVANCED course, and it will be difficult for students who do not have at least some experience with some form of programming, even if that isn't Javascript.

An account with GitHub.com is required, and the name of the account should NOT include the student's name. No information connecting the real identity of a student to this class should be released in public without express written consent of BOTH guardian AND school. Open your browsers, go to GitHub.com, and sign up for an account.

Most of the class content will be available online, here. However, in class the instructor will add notes, answer questions, and guide student work. If students have questions or problems outside class hours, or even during class when the instructor is busy with other students or lecturing, they may raise an issue and it will be answered as soon as possible.

Because the class has only one instructor, it will be very important that you raise your hand if you have questions, and wait for the instructor. But also, collaboration is normal and critical in the world of programming, so if you notice that your neighbor has their hand up, and you see that they are having an issue that you understand how to solve, as long as you are doing ok on time, please DO offer to help them! If they don't want it, or you can't seem to help them, shrug and go back to your own work.

In general: Do as you please, but harm none.

Welcome! This class should be a challenge, a FUN challenge, but a challenge. There will be times when you will wonder if you are smart enough, or care enough, to continue. This is expected and normal, both in class and in professional life. One of the goals of this class is to erase or dim the fear of failure which is generally instilled by school and society.

- Fail

- First

- Fast

- Fix

Of course success is the goal, but success through luck is not reproducible. Failure along with success teaches us the reality of the world and allows us to better predict successful actions in the future. If you try something and luck out, you don't know WHY it worked. You can try to reproduce those exact conditions, but you don't know which conditions are important and which are not.

More than that, you have to CARE enough to TRY even though you may FAIL. The willingness to fail shows motivation.

What is your motivation for being in this class?

Take on the hardest, least-known parts of any job first. The last thing you want to do is complete all the easy stuff, only to find that the hard parts are undoable. This is a critical error which is common in business, design, and life. Can you see how a fear of failure would (mis)guide a person to make this error?

Can you share a time when you turned away from trying something that you felt was simply too difficult, scary, or likely to result in failure? If you aren't comfortable doing that, can you admit that your lack of comfort is informed by a fear of failure?

Think of a major goal you have in life. Share what you think is the most difficult part of that goal.

Make small, incremental steps towards the larger goal. Failing fast means you can fail a lot, and that means you will stop failing and succeed sooner. Rapid development cycles are the key to rapid progress.

This is the opposite of procrastination. Can you share your favorite activity to do instead of finishing your homework?

Making small attempts quickly is also a great way to avoid the fear of failure, and the need for perfection.

Dans ses écrits, un sage Italien Dit que le mieux est l'ennemi du bien. -- Voltaire. (In his writings, a wise Italian says that the best is the enemy of the good.)

"A man's reach should exceed his grasp." Words from a poem by Robert Browning.

Once you have failed enough, you know exactly how to fix new failures when you see them. Experience is the result of many, many failures.

Success without experience leads to "Impostor Syndrome" which is the feeling of being a fraud and an irrational fear of anyone finding out how unsure you are. People who have this issue are very easy to see. They can never admit what they don't know, or that they have made any mistake, no matter how small.

Please proudly document your failures, and what you've learned from them, as well as your successes.

- The word Robot comes from the Czech word "robota" meaning: Forced labor. Robots do jobs. But robots don't need to look like humans. A dishwasher is a robot because it does the job of washing the dishes. A car is a robot horse. A factory that makes lasagna is a robot cook. The process of using robots to do tasks previously done by humans is called "Automation".

Automation moves jobs from human to robot. We do that by programming, and connecting that program to the real world.

1804 The Jacquard loom by Joseph Marie Jacquard becomes the first widely used machine directed by punched cards. First "programming" job created.

1830's-1840's Charles Babbage conceives of the "Analytical Engine", a general purpose, "Turing complete" machine to be programmed by punch card, following his Difference Engine, which was designed for printing mathematical tables. Ada Lovelace documents it, publishes the first computer program, and shows that the machine could go beyond math to generate music and do other more interesting things.

1947 Kathleen Booth writes the first assembly language, to change human readable instructions into the numbers machines can execute.

1954 John Backus leads a team at IBM to develop the first commercially available high level language, FORTRAN: FORmula TRANslation. As the name implies, it focused on math. Unlike many earlier systems, which were "interpreted" (each command had associated machine code which was run when that command was recognized), FORTRAN was compiled: the whole high level program is converted to low level machine code before running.

1950's "Amazing" Grace Hopper writes A-0, often called the first compiler, which also worked as a linker (enabling programs to be written in sections). Her FLOW-MATIC language then becomes the basis of COBOL: COmmon Business Oriented Language. She is also known for popularizing the terms "bug" and "debugging" after her team found a literal bug (a moth) in an early electro-mechanical computer.

1958 John McCarthy invents LISP: ( LISt Processor, ( which heavily influenced languages ( including the underlying engine of Javascript ) ) ).

1960's Margaret Hamilton leads the software team for Apollo 11 (the first landing on the moon) and saves that landing by insisting on the inclusion of a process monitor to catch overloads caused by incorrect operation by the astronauts. She is one of the first to advocate for software development to be considered a profession.

1970's-1990's Three women solve many of the problems required to make the internet actually work: Elizabeth J. Feinler leads the ARPANET Network Information Center, which handles whois, host naming (a forerunner of DNS), RFC's, and other infrastructure which became the modern internet; Radia Perlman solves network routing problems; and Sally Floyd manages network congestion issues. In 1989, Tim Berners-Lee invents the World Wide Web, which runs on top of that internet.

1970's "Smalltalk", a message based, object oriented programming language, is developed at Xerox PARC by Alan Kay, Dan Ingalls, Adele Goldberg, Ted Kaehler, Scott Wallace, and others.

1995 "Javascript" (which is not Java) is added to Netscape Navigator (which later grows to become Firefox). It is based on Scheme (based on LISP), Self (based on Smalltalk), and only looks like Java. Sort of. Originally interpreted, it is now JIT (Just In Time) compiled.

Note: You may have noticed a surprising number of women making significant advances, especially in the very early days of programming: First program, first assembly language, first compiled language, saving the Apollo landing, fixing the net, etc... Women are very much ignored and written out of history. We throw away half our brain power by doing that. Read about Women in Computing and support girls / females in tech jobs if you want our country to excel. Sexism in any form will NOT be tolerated in this class!

Christopher Fry says: Let's re-define "Robot": An automated tool that can perform the instructions in its instruction set. Different robots have different instruction sets. E.g. a light switch has two instructions. A sewing machine or an airliner has many complex instructions.

We can think of people as "robots" under this definition as people too have "instruction sets" that they are capable of. BUT people have very large instruction sets, with quite complex instructions AND each person has at least slightly different things that they can and can't do. And human instruction sets are very fuzzy and hard to follow. Most importantly, humans are always running their own instructions, not only the ones we give them. Humans want rest, money, food, shelter, and they even want to stay alive. Machines don't want any of that unless they are programmed that way.

We may want to perform a complex task that needs several different kinds of robots. One or more of those "robots" could be human. The software tools we're using facilitate coordinating robots and humans.

"Humans Need Not Apply" (video 15 min) (discuss 15 min)

- Automation will replace human jobs at an increasing rate. At a low rate, humans compensate, moving into jobs that require more smarts than the machines can provide. At a faster rate, moving humans into higher IQ jobs becomes harder; education takes time and costs money. At some point, humans won't be smarter.

- But thinking that robots are taking our jobs is wrong. It's not robots; it's robot owners. The robots don't care; they aren't human and don't suffer human motivations. They don't want to rest, or have a weekend, or make money... they don't even "want" to stay alive(!) unless they are programmed to want those things.

"Rules for Rulers" (video 20 min) (discuss 15 min)

- "Basic Income" could theoretically redistribute wealth, but is politically (and even morally for some) unpopular.

- When the "means of production" (the robots) are held in the hands of a few, "democracy" or any form of government that is good for the governed, is improbable. If everyone owns a robot, people are freer to pursue their dreams and the pressure is off of government to redistribute wealth.

Dexter and Ending Scarcity (video 3 min) (discussion 15 min)

- Putting a robot in the hands of every person could resolve this mechanized malediction.

- Do you want to be a person with a robot making things for yourself, to sell or trade, or John Henry?

The Dexter Robot Arm. Demos Painting (video 8 min) (Live demo 10 min)

Making the things needed for a comfortable lifestyle is complex. To manage that complexity and the uncertainties of diverse manufacturing environments, we need to give our personal manufacturing robots the smarts to make small decisions. This is best done by writing programs to control the robots.


Let's start learning to program a robot using The Dexter Development Environment. (video ~35 min)

This is self-paced instruction. Follow along with the video, going as fast or slow as is comfortable for you. Make sure you understand everything. Replay parts that don't make sense. If you still have a question, raise your hand. Be sure to actually do what you are seeing in the video on your own computer.

How to think like a computer (video instruction, 1 hour). This is also self-paced, but it's complex and you may not finish it all in time today. If you can watch it on your own time, we very much recommend that. We will pick it up again later in the course.

The nice thing about Udacity is that it's set up just like a real college course, and you can actually get a "nanoDegree" for a LOT less than a full college degree would cost you. And a lot faster. We will just do one class, but if you like it, you can continue on your own. Sign up for an account using your gmail / google account, then start the Intro to Javascript course from the link below. Again, this is self-paced; go as fast as you can, stop and raise your hand if you have questions. If you get done with it, go on to the object oriented Javascript course listed under "See also".

- https://www.udacity.com/course/intro-to-javascript--ud803 This course is supposed to take 2 weeks, but I know from experience that you can complete it in a couple of days in a classroom environment.

- Today, we need to complete lessons 1, 2, and 3. HURRY!

- Lessons 4 and 5.

If you have time, watch more of The Dexter Development Environment (video ~35 min) and How to think like a computer (video instruction, 1 hour)

- Lessons 6 and 7.

If you have time, watch more of The Dexter Development Environment (video ~35 min) and How to think like a computer (video instruction, 1 hour)

The "think like a computer" video covers an article from the DDE help system. Start DDE, go to the upper right "Doc" panel and open "Articles", then "How to Think Like a Computer". You can follow along with the video there, but scroll down to the most advanced version of the eval0 program. Copy it out of the Doc panel and into the "Editor" panel, then add a call to it at the end, such as eval0("1 + 2"), and press the Eval button. You should get a 3 in the "Output" panel. Now change that to eval0("1 - 2") and notice that it fails to produce -1, instead just echoing back "1 - 2".

Homework: Modify the definition of eval0 to manage subtraction so that eval0("1 - 2") does return -1. Check it with other combinations of numbers. Post your code as an issue to this repo. Use 4 "back ticks" (the key to the left of the "1" key on the keyboard) at the start and end of the listing so it will be formatted as code.

If you have already started with DDE, please skip this section.


Let's start learning to program a robot using The Dexter Development Environment. (video ~35 min)

This is self-paced instruction. Follow along with the video, going as fast or slow as is comfortable for you. Make sure you understand everything. Replay parts that don't make sense. If you still have a question, raise your hand. Be sure to actually do what you are seeing in the video on your own computer.

How to think like a computer (video instruction, 1 hour). This is also self-paced, but it's complex and you may not finish it all in time today. If you can watch it on your own time, we very much recommend that. We will pick it up again later in the course.

The key point about Javascript as applied to robotics is that Javascript can't pause. There is no way to get a Javascript program to stop and wait for something to happen, then continue. All Javascript programs run straight through, then just end. Obviously, that's a problem when you need a program to send a message out to some other device (such as a web site or a motor on a robot arm) and wait for a response (data / a web page, or a signal that the motor has moved to the desired angle). But it's also maybe a good thing (this can be argued; every language makes choices). Javascript was designed to run in a browser, and if the program stops running because it's waiting for something, the browser is frozen. Humans don't like programs that freeze. The same applies to DDE: we don't want it to stop working just because it's waiting for the robot to finish doing something. We don't want input / output operations to block the operation of our programs. Javascript is a non-blocking language, and modern languages on modern computers are blazingly fast. But input / output operations DO take time; lots of time compared to the speed of a program. Physical movement (e.g. of a robot arm) takes GOBS of time.

So how do we get around that? With callbacks. Callbacks are named or unnamed functions that are passed into a request that might take time. The request doesn't stop and wait, it just makes the request, and passes in the function, saying "Hey, minion, go do this and when you get done, run this function." Then the main program just goes on, or maybe that is the end of the function.
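A minimal sketch of a callback in plain JavaScript (it runs in Node.js or a browser console, not only in DDE). slow_move is a hypothetical name invented for this example, not a DDE function; it uses the built-in setTimeout to stand in for a slow robot motion.

```javascript
// slow_move is HYPOTHETICAL: a stand-in for a slow request such as a robot move.
// It does not wait. It schedules the callback to run later and returns at once.
function slow_move(angle, callback) {
  setTimeout(function () {
    callback(angle)  // "Hey, I'm done": run the function we were handed
  }, 100)            // pretend the motion takes 100 milliseconds
}

var log = []

slow_move(90, function (angle) {
  log.push("arrived at " + angle)
  console.log(log)
})

// This line runs BEFORE the callback, because slow_move did not block.
log.push("request sent")
```

When it runs, log ends up as ["request sent", "arrived at 90"]: the line written last runs first, because the request did not block.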

This is a really different way of writing programs, and it can feel really strange if you aren't used to it. Most programs are "synchronous", meaning they execute instructions one after the other, in sequence. Javascript is "asynchronous", meaning that callbacks can fire whenever the IO operation is finished. This can also be confusing because there is no way to know in what order the callbacks will happen. You have to be a little smarter about how you code things to account for all this. So if you set x to 1, write a function to add 10 to x and another function to multiply x by 20, and then start both of them as slow operations with callbacks, you can't really know if the result will be 220 or 30: (1 + 10) * 20 or 1 * 20 + 10. To see how that might be useful, think about having two Dexters working together, with DDE controlling both of them. Sometimes Robot A can work on something at the same time as Robot B, to get more work done faster. Sometimes they can work separately, and sometimes they have to cooperate; Robot A may need to stop until Robot B catches up. But most of the time, you just want Robot A to do one thing after another, waiting to start the next movement until after the current movement is completed.
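Here is a sketch of that x example, assuming both operations are slow and report back through callbacks. add_ten, times_twenty, and report are hypothetical names, and the random delays stand in for hardware that finishes whenever it finishes.

```javascript
var x = 1

// Two HYPOTHETICAL slow operations. Each finishes after a random delay
// (under 50 ms), so we cannot know in advance which callback fires first.
function add_ten(done) {
  setTimeout(function () { x = x + 10; done() }, Math.random() * 50)
}

function times_twenty(done) {
  setTimeout(function () { x = x * 20; done() }, Math.random() * 50)
}

var finished = 0

function report() {
  finished = finished + 1
  if (finished === 2) {
    // 220 if add_ten ran first ((1 + 10) * 20), 30 if times_twenty did (1 * 20 + 10)
    console.log("x = " + x)
  }
}

add_ten(report)
times_twenty(report)
```

Run it several times and you may see either answer.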

So is there a way to just write a simple program in DDE that just tells the robot to carry out a sequence of operations, one after the other, while waiting for each to complete before going on to the next? Yes! It's called a Job. But to understand what a Job is, let's first think about how to do this list of blocking IO instructions, in sequence. You can separate each instruction into a separate function so they each operate without stopping and only dispatch one IO request. But you still need to stop and wait between instructions. We can build an array of instruction functions, where each entry in the array is a function with a single non-blocking procedure. And yes, arrays can hold functions just as easily as they hold numbers. We can dispatch one function from the array, and pass in the next function in the array as the callback to be executed when the first function finishes. Basically, we make a chain, and each link in the chain calls the next link when it's done. This is how we connect a really fast thing, to a really slow thing.
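The chain described above, sketched in plain JavaScript. run_chain and slow_step are hypothetical names for illustration; this shows the idea in miniature and is not DDE's actual Job implementation.

```javascript
var completed = []

// HYPOTHETICAL slow step: pretends to move, then calls "next" when done.
function slow_step(label) {
  return function (next) {
    setTimeout(function () {
      completed.push(label)
      next()
    }, 50)
  }
}

// An array can hold functions just as easily as it holds numbers.
var steps = [slow_step("move 1"), slow_step("move 2"), slow_step("move 3")]

// Start step i, handing it a callback that starts step i + 1.
// Each link in the chain calls the next link when it is done.
function run_chain(steps, i, when_done) {
  if (i >= steps.length) {
    when_done()
    return
  }
  steps[i](function () { run_chain(steps, i + 1, when_done) })
}

var chain_done = false

run_chain(steps, 0, function () {
  chain_done = true
  console.log("finished in order:", completed)
})
```

Even though each step is slow, they finish strictly in order, because step 2 is only started from inside step 1's callback.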

That's what a Job is in DDE. The do_list part of the Job is an array of instructions to command the robots.

You could think of this set of instructions as its own little sub-language that is built on top of Javascript. Mostly these instructions are just JavaScript functions that you call with similar syntax to other JS function calls. And you can just write a JS function as an entry in a do list if you want to do something that's not explicitly provided by an instruction. The instructions / functions are collected in a "do list" in the job, and are executed one at a time. A defined Job in DDE shows up as a button in the Output pane header. Pressing a Job's button starts it if it is stopped, and stops it if it is running. The color of the button indicates its status.

Note: This is a 3 day week.

- Making a Job for a Robot (video 35 min)

Please try to follow along with this video and do the same things. If you see any differences, document them in a comment on the video or via an issue.

Don't think of the do_list as a program. It technically is a program, but it doesn't include a lot of the things we expect:

- There is no way to return values from the instructions in the form var variable = functionCall(parameter) like regular Javascript. However, instructions on the do list CAN affect the state of the job, global variables, etc...

- The do_list is "self modifying". Instructions (like function calls) can add items to the do_list by returning them. The returned instructions are inserted into the do_list just after the function. Most languages don't allow self modification.

- The do_list can't really do conditional statements, except via function calls and self modification. There is a go_to and a loop, and the loop can accept a conditional statement, but that is just a fixed number of times to loop or a call to a function which returns true, false, or a number. If there are instructions you want the robot to execute only if some condition is true, you make a function, and in it you test the condition, then return the instructions it should execute. You can label a point in the queue and then go to that label.

- The "world" state as modified by the do_list is available after the job completes. Most programs destroy all internal state variables when they end. You can go back and inspect the Job, and see its final state, including the final do_list.

The do_list is really a queue. A list of instructions to do. Functions can be on the queue ("execute this function when its turn comes up") and they can return more instructions to put on the queue.

So the basic workflow is:

- Queue up some instructions for the robot to do in the do_list of a Job.

- Queue up a call to a function that evaluates the state of the Job and robot and perhaps return more instructions which are placed on the do_list queue. Those new instructions can be other functions, which will be executed when they come around on the queue. Those instructions can loop back or go to other points in the queue.
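A toy model of that workflow, assuming strings can stand in for robot instructions. run_do_list and sensor_reading are hypothetical; DDE's real Job runner handles many more instruction types, plus go_to, loop, and labels, which this sketch leaves out.

```javascript
// A toy, synchronous model of a do_list queue. This is NOT DDE's code.
// Strings stand in for robot instructions. A function on the list is called
// when its turn comes; whatever it returns is inserted right after it.
function run_do_list(do_list) {
  var list = do_list.slice()  // work on a copy
  var sent = []               // instructions "sent to the robot", in order
  for (var i = 0; i < list.length; i++) {  // list.length is re-read, so the list can grow
    var item = list[i]
    if (typeof item === "function") {
      var result = item()
      if (result !== undefined) {
        var new_items = Array.isArray(result) ? result : [result]
        list.splice(i + 1, 0, ...new_items)  // insert just after the function
      }
    } else {
      sent.push(item)
    }
  }
  return { final_do_list: list, sent: sent }  // final state stays inspectable
}

var sensor_reading = 7  // HYPOTHETICAL value that is only known at run time

var outcome = run_do_list([
  "move to A",
  function () {  // a "conditional": test, then return the instructions to do
    if (sensor_reading > 5) return ["move to B", "move to C"]
    return "move to D"
  },
  "move home"
])

console.log(outcome.sent)
```

Here outcome.sent is ["move to A", "move to B", "move to C", "move home"], and outcome.final_do_list still holds the function, followed by the two instructions it returned.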

Extra: If you have time, this is a really good video / article about the recent changes to JavaScript which make it easier to write programs that wait for things:

https://tylermcginnis.com/async-javascript-from-callbacks-to-promises-to-async-await/
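For comparison, a sketch of a one-after-another sequence written with Promises and async/await, the newer features the article covers. This is plain JavaScript, not a DDE Job, and slow_move is hypothetical. Note that await pauses only the async function it appears in; the rest of the program keeps running.

```javascript
// HYPOTHETICAL slow move that returns a Promise instead of taking a callback.
function slow_move(angle) {
  return new Promise(function (resolve) {
    setTimeout(function () { resolve(angle) }, 50)  // "arrives" after 50 ms
  })
}

var arrived = []

// "await" pauses only this async function, not the whole program,
// so everything else (like the browser's screen) stays responsive.
async function do_moves() {
  arrived.push(await slow_move(0))
  arrived.push(await slow_move(45))
  arrived.push(await slow_move(90))
  return arrived
}

do_moves().then(function (angles) {
  console.log("arrived at", angles)
})
```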

(self-paced study 30 min) Make sure you can write a job and run it on the simulator, and then on the real robot.

In DDE, select File / New, then go to Jobs / Insert example... / Dexter Moving. Notice there are two different ways to move the arm. You can copy those lines, and add new positions for the arm to move into. The Dexter.move_all_joints instruction takes 5 joint angles. The Dexter.move_to instruction takes an array of 3 numbers, x, y, z, indicating the position that Dexter should move to in meters.

Note that Dexter uses the metric system of measurement, so all units of distance are in meters. A meter is about 3 feet, which is about Dexter's full reach. A tenth of a meter (e.g. 0.1) is about 4 inches, or about the length of your finger. A hundredth of a meter (e.g. 0.01), called a "centimeter", is about a half inch (actually closer to 0.4 inches), or about the width of your little finger. And a thousandth of a meter (e.g. 0.001), called a "millimeter", is tiny, a bit less than half of a tenth of an inch, or about the thickness of a fingernail.

So if you change the first digit to the right of the decimal point, you are moving about a finger length. The next digit, 2nd to the right, moves you about a finger width, and the 3rd moves you about the thickness of a fingernail.
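A quick way to check those rules of thumb, using the exact definition 1 inch = 0.0254 meters. meters_to_inches is just a helper written for this example.

```javascript
// Dexter works in meters. This converts to inches to get a feel for each digit.
// 1 inch is defined as exactly 0.0254 meters.
var INCHES_PER_METER = 1 / 0.0254  // about 39.37

function meters_to_inches(m) {
  return m * INCHES_PER_METER
}

// full reach, finger length, finger width, fingernail thickness
var distances = [1, 0.1, 0.01, 0.001]

distances.forEach(function (m) {
  console.log(m + " m is about " + meters_to_inches(m).toFixed(2) + " inches")
})
// 1 m is about 39.37 inches
// 0.1 m is about 3.94 inches
// 0.01 m is about 0.39 inches
// 0.001 m is about 0.04 inches
```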

To fully understand how a job operates, you must understand the difference between Job Definition Time and Job Run Time.

Job Definition Time: When you select and evaluate the source code of a job, you are defining the Job. This simply uses JavaScript's eval function to evaluate the source code, just like evaling any source code. The result of evaling new Job({name: "my_job" ...}) is an instance of the class Job. Once done, you can access the Job instance via Job.my_job.

Many properties of the job are accessible via, for example, Job.my_job.name, which returns the string of the Job's name.

Of particular import is the do_list. In the case of:

    var ang1 = 90

    new Job({name: "my_job",
             do_list: [Dexter.move_all_joints(ang1)]
    })

Evaluation sets the global variable ang1 to 90. Then it sets Job.my_job to the new Job instance. Within this instance, the property Job.my_job.do_list will be effectively set to an array of instructions. We say "effectively" because the actual do_list isn't bound until the job is started. It is cached away so that it can be copied afresh for each starting of the job. But in any case, the do_list will now be an array containing just one instance of the move_all_joints instruction. This instance will have its joint 1 angle set to 90 because ang1 is evaled when the Job definition is evaluated, and ang1 evals to 90. If you simply eval Dexter.move_all_joints(90), you can inspect the returned object and see that it has an array_of_angles property storing where Dexter's angles will be commanded to go when the Job is actually run.
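To make the define-then-copy behavior concrete, here is a much simplified model in plain JavaScript. ToyJob is hypothetical and is NOT DDE's Job class; it only mimics two points from the text: a job is reachable by its name, and the do_list is cached at definition time and copied afresh when the job starts.

```javascript
// ToyJob is a HYPOTHETICAL, much simplified model of two behaviors described
// above. It is NOT DDE's Job class.
class ToyJob {
  constructor(options) {
    this.name = options.name
    this.orig_do_list = options.do_list  // cached away at definition time
    this.do_list = null                  // not bound until the job starts
    ToyJob[this.name] = this             // reachable as ToyJob.my_job
  }

  start() {
    this.do_list = this.orig_do_list.slice()  // a fresh copy of the list for each run
    return this.do_list
  }
}

var ang1 = 90

// Like Dexter.move_all_joints(ang1), the instruction object is built NOW,
// so it stores 90, not a reference to the variable ang1.
new ToyJob({ name: "my_job", do_list: [{ array_of_angles: [ang1] }] })

ang1 = 45  // too late: the job was already defined

console.log(ToyJob.my_job.start()[0].array_of_angles[0])  // 90
```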

Now let's walk through Job Run Time. You run a Job by clicking its job name button or by calling Job.my_job.start().

One way to think of starting a job is as a second evaluation of the Job. Only this time, it is not using JavaScript's eval; it is using Job's special evaluator. Instead of taking JavaScript source code as input, it takes the items on the do_list (created via JS eval when defining the Job), which can be of many different types. The Job "evaluates" each instruction differently, depending on its type (similar to JavaScript's eval!).

In the case of an instance of the move_all_joints instruction, that instance is transformed to a lower level instruction that is very similar to what you get when evaluating make_ins("a", 90). Then the 90 is converted from degrees into arcseconds and sent to the Job's default robot, which had better be a Dexter, because other robots probably won't understand the "a" oplet or be able to resolve a joint in units as fine as arcseconds (3600 arcseconds per degree).
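The degrees-to-arcseconds step is simple arithmetic. This sketch shows only that math (degrees_to_arcseconds is a made-up helper); it is not DDE's internal code and does not show whether DDE rounds the result.

```javascript
// 1 degree = 60 arcminutes = 3600 arcseconds
var ARCSECONDS_PER_DEGREE = 3600

function degrees_to_arcseconds(degrees) {
  return degrees * ARCSECONDS_PER_DEGREE
}

console.log(degrees_to_arcseconds(90))   // 324000
console.log(degrees_to_arcseconds(0.5))  // 1800
console.log(1 / ARCSECONDS_PER_DEGREE)   // one arcsecond, as a fraction of a degree
```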

After a job has finished, you can rerun it by clicking its job name button or calling its start method again. This does not redefine the job. So for instance, if between two runnings of a Job, we set our global variable of ang1 to 45, that will have no effect on the 2nd running of our job, because we are not re-evaluating ang1 or even Dexter.move_all_joints(ang1).

Nor are we re-defining my_job. The joint 1 angle inside the instance of move_all_joints is still 90, so our 2nd running of my_job should behave the same, even if we modify the value of ang1. Actually, if you do re-run my_job, it won't be exactly the same, because the Dexter robot will now be at a pose with joint 1 equal to 90, so in our 2nd running, the robot won't move. But besides this initial "set up move", following moves would be the same between the two runnings.

The most flexible of instruction types is a JavaScript function. This allows you to execute arbitrary JavaScript at Job Run Time. You might need to do this because you want to use the current environment to help determine what instruction to run next. That current environment may not be knowable at Job Definition Time. Perhaps you want to verify that Dexter really is where you told it to go, or you want to check on the status of some other Job before proceeding with this one.

Functions allow you to make changes to what happens while the job is running.

To run arbitrary JS code during the running of a Job, wrap that code in a function definition like so:

    new Job({name: "my_job",
             do_list: [function(){return Dexter.move_all_joints(ang1)}]
    })

We could also do:

    function move_it_buddy(){return Dexter.move_all_joints(ang1)}

    new Job({name: "my_job",
             do_list: [move_it_buddy]
    })

This is particularly helpful for long functions or functions we want to call multiple times.

We are not doing: do_list: [move_it_buddy()] because we don't want to CALL move_it_buddy, as that would put its result on the do_list at define time. We just want to eval the global variable move_it_buddy to put its value, which is a function definition, onto the do_list at define time.

When a JavaScript function is called, including when a running Job calls a function definition on its do_list, it sets local variables of the function's parameters to the values passed in, and evaluates the code in the body of the function.

In the cases above, ang1 will be eval'd at Job Run Time, as will the code that creates the instance of move_all_joints. Anything returned by a function definition on the do_list will be dynamically added to the do_list right below the function definition. Our do_list started out with just one item, but after running that one item, our do_list is extended by an instance of move_all_joints. That instance of move_all_joints is inserted into the do_list immediately after the function that generated it, making it run immediately after the function. So now, if we change the value of ang1 between two runnings of my_job, we won't have to redefine the whole job in order to get the effect of changing the value of ang1.
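A plain JavaScript sketch of the difference between putting move_it_buddy() and move_it_buddy on a list. make_move is a hypothetical stand-in for Dexter.move_all_joints, and run_first is a toy runner, not DDE's.

```javascript
// HYPOTHETICAL stand-in for Dexter.move_all_joints: it just records its angle.
function make_move(angle) {
  return { array_of_angles: [angle] }
}

var ang1 = 90

function move_it_buddy() {
  return make_move(ang1)  // ang1 is read each time this function is CALLED
}

var eager = [move_it_buddy()]  // called now: the 90 is fixed at definition time
var lazy = [move_it_buddy]     // the function itself: called later, at run time

ang1 = 45

// Toy "run": a function on the list gets called; anything else is used as-is.
function run_first(list) {
  var item = list[0]
  return typeof item === "function" ? item() : item
}

console.log(run_first(eager).array_of_angles[0])  // 90
console.log(run_first(lazy).array_of_angles[0])   // 45
```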

A function definition on a do_list doesn't have to return anything. It may just have side effects, like printing a message. But if it returns something that is not a valid instruction, the Job will error.

If you want to access the running Job Instance at run time, use a function definition on the do_list, and reference this in the body of that function definition. Example:

    new Job({name: "my_job",
             do_list: [function(){ this.user_data.foo = 2 },
                       function(){ out("foo: " + this.user_data.foo +
                                       ", robot: " + this.robot.name) }
             ]})

Note that using user_data is a convenient way to pass values from one instruction to another without cluttering the global variable space. Inside these functions, this evaluates to the current job object.
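How can this refer to the job inside a do_list function? In plain JavaScript, one way is Function.prototype.call, which sets this for a single call. The sketch below assumes a runner does something similar; the job object, the out stand-in, and the robot name "dexter0" are hypothetical, not DDE's actual internals.

```javascript
// HYPOTHETICAL stand-ins, NOT DDE: a plain object playing the role of a
// running Job, and an out() that collects text instead of printing it.
var printed = []
function out(text) { printed.push(text) }

var job = {
  robot: { name: "dexter0" },  // hypothetical robot name
  user_data: {},
  do_list: [
    function () { this.user_data.foo = 2 },
    function () { out("foo: " + this.user_data.foo + ", robot: " + this.robot.name) }
  ]
}

// Calling each function with .call(job) makes "this" refer to the job
// inside it, which is one way a runner can hand functions the job object.
job.do_list.forEach(function (fn) { fn.call(job) })

console.log(printed[0])  // foo: 2, robot: dexter0
```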

Jobs have a great deal of flexibility. We can use the full power of a general purpose programming language plus DDE's libraries to define them. During their running, Jobs can also use the full power of a general purpose programming language plus DDE's libraries, or any that you import or create.

Though powerful, this flexibility comes at the cost of complexity. Using JavaScript's function defining capability, you can hide (but not eliminate) many details. Also, DDE attempts to make writing JavaScript and Jobs as simple as possible, but it is still not simple, especially if you want to understand what's going on beneath the surface. The goal of Dexter is to enable you to make as many things as possible. This is why DDE needs to be so flexible.

```javascript
new Job({name: "my_job",                 //name for the button to start the job
    do_list: [                           //array of Instructions for robots or Control
        Dexter.move_to([0, 0.5, 0.075]), //Fixed at "Eval" button push
        Control.wait_until(2),           //controls the job
        Human.enter_number({task: "How far?"}),
                                         //Fixed at "Eval" but changes user_data at run time
        function() {                     //re-evaluated after "my_job" button push
            let a2 = Math.min(this.user_data.a_number, 30)
            return [                     //must "return" any instructions to do_list
                //five joint angles (degrees), so move_all_joints, not move_to
                Dexter.move_all_joints([0, a2, 0, 0, 0]),
                Dexter.move_all_joints([0, -a2, 0, 0, 0])
            ]                            //return single instruction or array
        },                               //could also call a named function
    ] // end of do_list
})    // end of job "my_job"
```

- Understand the code

- Bad documentation. Bad docs are a bug, and they cause bugs! Report or fix bad documentation, including your own comments in your code.

- Wrong algorithm.

- Sloppy additions (not fully understanding the existing code before you change it).

- Common bugs:

- `==` is not `=`. `if (a = 'one')` assigns 'one' to `a`, and the condition is always truthy. `if (a == 'one')` compares `a` to 'one', returning true or false. To avoid this mistake, always put the constant first in a comparison: `if ('one' = a)` will throw an error, catching your mistake.

- Mismatched parentheses. It's easy to forget a closing `)` or `}`, or to get them in the wrong order. And it can be VERY difficult to find once you have messed it up. Use the highlighting feature: click on the opening or closing character, and look for the highlight of the matching one. If it isn't where you expect, check inside that block.

- 0 index vs 1 index. Arrays in Javascript are indexed from ZERO, not ONE. The "first" item in an array is at `array[0]`.

- Things that look like each other: `.` vs `,`, `:` vs `;`, `rn` vs `m`. Lint helps. Careful, slow reading is also good.

Short tutorial, taken from Debugging Jobs in DDE (30 min)

- Lint: the little red marks and underlines in the editor window. It's a little overactive, but it can help when you can't see the error yourself.

- Eval selected part. (Don't select anything if you want to eval the entire thing.) This won't help if the selected code depends on other things that haven't been defined in the part you are evaluating.

- `out()`: You can wrap this around just about anything to see what it's returning. For example, to see what `i + 1` actually is in:

  ```javascript
  var sin = []
  for (var i = 0; i < joints; i++) { sin[i] = eval("Dexter.J" + ( i + 1 ) + "_A2D_SIN") }
  ```

  just change it to:

  ```javascript
  for (var i = 0; i < joints; i++) { sin[i] = eval("Dexter.J" + out( i + 1 ) + "_A2D_SIN") }
  ```

  and eval the line. Unlike `console.log`, `out` still returns the value it prints, so it doesn't get in the way!

- Comment out sections. Block comments (`/* */`) don't nest: the first `*/` ends the whole comment. So never use `/* */` for ordinary comments in your code, or a later `/* */` wrapped around that code will end early and un-comment the rest. Use `//` for comments in code, and save `/* */` for debugging, to temporarily remove parts and focus on problem areas. (Ask for an example if this doesn't make sense.)

- Debugging Jobs in DDE (video instruction, 50 min)

In DDE, select File / New, then go to Jobs / Insert example... / and then go through each of the sample jobs. Skip the serial port example, as you don't have an Arduino or other USB connected device to talk to. On each sample, make some small change and post your version of it to your github repo. If your change causes a problem, see if you can fix it. If your change doesn't cause a problem, make a note on how you could have caused a problem. (Self paced study, 45 min)

We tend to think of our world in 3 dimensions: X, Y, and Z, called Cartesian coordinates. Which way is Z? Which way is X? Or Y? Any way you want them to be. But a common convention is that Z is up / down, X is right / left, and Y is out / back. Dexter, and other robotic arms of the anthropomorphic type, have joints that can only move through angles, so we have to convert XYZ to joint angles: J1, J2, J3, J4, and J5.

For only one joint, in only 2 dimensions, it's not too hard; it's called rectangular to polar conversion, and you can try this function in DDE: `function r2p(x, y) { return [Math.hypot(x, y), Math.atan(x / y) * (180 / Math.PI)] }`. For example, `out(r2p(3, 4))` results in a desired length of 5 and an angle of 36.87 degrees. So if we could expand or contract the length of an arm segment, we could figure out how to get to any 2D point with one joint.
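A slightly more robust version of `r2p`, offered as a sketch: `Math.atan2(x, y)` takes the two values separately, so it doesn't divide by zero when y is 0, and it returns the correct angle in every quadrant. Like the original, it measures the angle from the Y axis (the "out" direction) toward X. The inverse, `p2r`, is an added helper for checking the round trip.

```javascript
// Rectangular to polar: returns [length, angle in degrees from the +Y axis toward +X].
function r2p(x, y) {
  const length = Math.hypot(x, y)
  const angle = Math.atan2(x, y) * (180 / Math.PI) // atan2 handles y = 0 and all quadrants
  return [length, angle]
}

// Polar back to rectangular, using the same angle convention.
function p2r(length, angleDeg) {
  const a = angleDeg * (Math.PI / 180)
  return [length * Math.sin(a), length * Math.cos(a)]
}

console.log(r2p(3, 4)) // length 5, angle about 36.87 degrees
console.log(r2p(1, 0)) // straight along X: angle 90 degrees, no divide by zero
```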

When you add more joints (instead of sliding segments) and work in 3D, it gets a LOT more complex. If you have time, you can watch this video about a 2-joint arm's kinematics. A 5-joint arm like Dexter uses rather complex code. If you want to write kinematics code, don't drop math classes!
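To give a taste of why more joints make this harder, here is a sketch of inverse kinematics for a flat arm with just two joints (a shoulder and an elbow), using the law of cosines. The function names `ik2` and `fk2`, the link lengths, and the `elbowSign` flag are assumptions for illustration; this is not Dexter's kinematics code. Notice that most reachable points have two mirror-image solutions, the same idea behind Dexter's `config`.

```javascript
// Inverse kinematics for a 2-link planar arm (hypothetical sketch, not Dexter's code).
// Shoulder at the origin, link lengths l1 and l2, target (x, y).
// elbowSign (+1 or -1) picks one of the two mirror-image solutions.
// Returns [shoulderDeg, elbowDeg], or null if the target can't be reached.
function ik2(x, y, l1, l2, elbowSign = 1) {
  const d2 = x * x + y * y
  // Law of cosines gives the cosine of the elbow angle.
  const c = (d2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)
  if (c < -1 || c > 1) return null // too far away (or too close) to reach
  const s = elbowSign * Math.sqrt(1 - c * c)
  const elbow = Math.atan2(s, c)
  const shoulder = Math.atan2(y, x) - Math.atan2(l2 * s, l1 + l2 * c)
  const toDeg = 180 / Math.PI
  return [shoulder * toDeg, elbow * toDeg]
}

// Forward kinematics, to check an answer: where does the hand end up?
function fk2(shoulderDeg, elbowDeg, l1, l2) {
  const a = shoulderDeg * Math.PI / 180
  const b = elbowDeg * Math.PI / 180
  return [l1 * Math.cos(a) + l2 * Math.cos(a + b),
          l1 * Math.sin(a) + l2 * Math.sin(a + b)]
}

console.log(ik2(1, 1, 1, 1, 1))  // one way to reach (1, 1)
console.log(ik2(1, 1, 1, 1, -1)) // the other way
```

Checking every answer with forward kinematics is a cheap way to catch mistakes in inverse kinematics code.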

Lucky for us, all that work has already been done. We can use `Dexter.move_to([x, y, z], direction, config)`. Wait a minute... what are "direction" and "config"?

config: For most points within Dexter's reach, there are multiple ways Dexter can get there. For example, this picture shows all the ways Dexter might get to the same point (on the sloped face of the grey cube).

There is also a (really big) version that shows them all separated. `config` lets you specify your preference for how Dexter configures its joints to get to the indicated x, y, z. There are 3 independent boolean values that determine this configuration. They are:

- Base rotation: LEFT means J1, the base joint, has a negative value, i.e. it is to the right if you are facing Dexter's front. Looking down at Dexter from the top, this would mean it's rotated counterclockwise. The opposite direction is RIGHT, or clockwise.

- J3 position: Joint 3 can be thought of as Dexter's elbow. The elbow can be either UP, i.e. away from the table, or DOWN, closer to the table.

- J4 position: Joint 4 can be thought of as Dexter's wrist. It can point either IN, towards the base, or OUT, away from the base.

Direction controls which way the last part of the arm is pointing. It will be at X, Y, Z, but should it be pointing straight down? `[0, 0, -1]` does that. Or should it be pointed out? `[0, 1, 0]` does that. Or maybe you want it pointed in? `[0, -1, 0]`. See how it works? It's an extra XYZ on top of the main one, limited to just 0, 1, or -1 to indicate direction, but not position. You can also use an array of 2 values, which will be used as pitch and roll angles, but be careful not to use 90 for both of the angles, because it will cause a "singularity".

Singularity: This word is used to mean exactly the opposite of what you would think. "Singular" means there is only one, that it is unique and special. Just like each of you. ("Always remember, you are unique... just like everyone else.") But "singularity" is defined as "a point at which a function takes an infinite value", and here it means that there is more than one way to get to the point. If you specify [90, 90], there is an infinite number of ways to get to that direction.

Write a job using move_to and run it in the simulator. Post that to your GitHub repo. Extra points if you use a variable (hint: array) to store a basic position and then add or subtract from that to move around that place. E.g. Imagine you are programming a Dexter inside a claw machine. Store the XYZ position of a "prize", then move_to a position above the prize, open the gripper (this can be just a comment), move down to the prize, close the gripper, then move up above it again to clear the other objects, and finally move over to the position of the "chute" where you open the claw to dump the prize for pickup by the user.
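One way to start the extra-points version is to keep the prize and chute positions in arrays and compute every other point from them. The sketch below is plain JavaScript so it can be tested on its own; the positions (in meters), the 0.1 m clearance, and the function names `offset` and `clawWaypoints` are made up for illustration. In DDE you would turn each position into an instruction such as `Dexter.move_to(point)` on your do_list, and leave the gripper steps as comments for now, as the assignment allows.

```javascript
// Hypothetical helper: add [dx, dy, dz] to a base [x, y, z] position (meters).
function offset(base, d) {
  return [base[0] + d[0], base[1] + d[1], base[2] + d[2]]
}

// Build the claw-machine sequence. Each step is either a position to move to
// or a note for the gripper.
function clawWaypoints(prize, chute, clearance = 0.1) {
  const above = offset(prize, [0, 0, clearance]) // hover above the prize
  return [
    above,
    "open gripper",
    prize,                            // move down to the prize
    "close gripper",
    above,                            // lift clear of the other objects
    offset(chute, [0, 0, clearance]), // travel over to the chute
    "open gripper"                    // drop the prize for pickup
  ]
}

const prize = [0.2, 0.4, 0.05]
const chute = [-0.3, 0.3, 0.05]
const steps = clawWaypoints(prize, chute)
console.log(steps)
```

Because every point is computed from `prize` and `chute`, moving the prize only means changing one array.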

- Advanced Jobs (video instruction, 55 min)

TODO: What can Dexter NOT do and what are the main limiting factors? Putting bounds on Dexter's current usefulness vs future usefulness. Post Scarcity Life may provide some ideas.

- Compared to a human, a robot arm typically doesn't have the same strength at the end effector ("fingers") for the same size and weight. A robot may be horrifically strong, but it will take up a large space with massive support systems. Or it might be impossibly delicate and small, but then it will have very little strength. The human arm is still a champion at getting into small spaces, manipulating objects, and having reasonable strength. For example, the Dexter arm isn't terribly strong (it's "light industrial"), with up to a 1 kg load (about 2 lbs) at 1 meter (about 3 feet). Humans can lift ~5 times as much. The record is 20 kg for 1:17 (using 2 arms).

- Manipulating many different types of objects in any orientation is easy for humans, but robots typically have to be re-trained / re-programmed for each type of object and will fail if the object isn't oriented as expected.

- As you learned about Kinematics, figuring out which pose to use to reach for an object can be challenging for a robot. Humans generally don't have any problems planning a path to reach around things and through things. Place a penny on a desk. The penny describes a point in 3D space. While sitting in your chair, you can reach that point either from ABOVE the desk or below. But if the desk is "in the way", then above the desk becomes the better option. Really simple (to us) concepts like that become very difficult to program into a robot.

Accuracy: how close the average of all your attempts is to the target.

Precision: how close your attempts come to the same point, even if that point is nowhere near the target. This video makes it very clear: What's the difference between accuracy and precision? - Matt Anticole
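The two ideas can be put into numbers. The sketch below uses one reasonable choice among several: the accuracy error is the distance from the average of the attempts to the target, and the spread (precision) is the average distance of each attempt from that average, so smaller is more precise. The function name and sample points are made up.

```javascript
// Summarize a set of 2D attempts [[x, y], ...] against a target [x, y].
function accuracyAndPrecision(attempts, target) {
  const n = attempts.length
  // Center (average) of all the attempts.
  const mean = [
    attempts.reduce((s, p) => s + p[0], 0) / n,
    attempts.reduce((s, p) => s + p[1], 0) / n
  ]
  // Accuracy error: how far the center of the attempts is from the target.
  const accuracyError = Math.hypot(mean[0] - target[0], mean[1] - target[1])
  // Spread: average distance of each attempt from the center (precision).
  const spread = attempts.reduce(
    (s, p) => s + Math.hypot(p[0] - mean[0], p[1] - mean[1]), 0) / n
  return { mean, accuracyError, spread }
}

// Tightly grouped but far from the target: precise, but not accurate.
const tight = [[5, 5], [5.1, 5], [5, 5.1], [4.9, 5], [5, 4.9]]
console.log(accuracyAndPrecision(tight, [0, 0])) // accuracyError about 7.07, spread about 0.08
```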

Dexter has fantastic precision, which is even "load adaptive". Dexter's accuracy is less impressive, but not bad.

Stiffness is how much an object resists deformation in response to an applied force.

Compliance is the opposite. When a force is applied, a compliant object will move to limit the force.

Dexter has very little inherent "stiffness", but can sense deformation and resist it with great precision.
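In the simplest, spring-like (linear) case, both ideas can be written as one ratio. This is the standard textbook idealization, not a model of Dexter itself: stiffness $k$ is the force $F$ needed per unit of deflection $\delta$, and compliance $C$ is its reciprocal.

$$
k = \frac{F}{\delta}, \qquad C = \frac{1}{k} = \frac{\delta}{F}
$$

For example, if a 10 N push deflects a part by 2 mm (0.002 m), its stiffness is 10 / 0.002 = 5000 N/m. A stiffer part has a larger $k$ and a smaller $C$.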

Can Dexter (for each, discuss why or why not):

- Make art? Can it make unique art? (hint: `Math.random()`) Can it make customized art? (hint: variables, dialog boxes, data files)

- Operate a camera?

- Collect / sort parts?

- Assemble parts?

- Make parts? e.g. 3D printing, CNC machining, laser cutting.

- Grow food?

- Build a shelter?

- Reproduce itself? Print a motor and wind its coils? Run cables? 3D print parts? Assemble a Dexter?

Homework: What problem can you solve with Dexter and an End Effector?

Second Half: Student projects leveraging what students learn about:

- moving the robot

- manipulating / end effectors

- machine vision (and perhaps other sensing techniques)

- conditional "decision making" by the robot

- User Interface/Human Instructions

Goals:

- To make a Job that accomplishes an automation goal.

- End up with a "demo" that they can show family and friends.

3D printing is changing the world. In the past, making a single custom plastic part was stupidly expensive. Now we can print almost anything we want in a few hours while we do something else. The most difficult part is envisioning the part and describing it to the computer. "Until you can teach a subject, you do not truly understand it."

There are many ways to get the design of a physical object into a computer. Most people use CAD programs which depend on mouse clicks and drags to draw the object on the screen. In this section, we will discover that programming can also describe physical objects. Javascript programming, in fact. And a 3D printer is nothing more than a robot.

- Avoid supports by: 1. branching out at 45 degrees, 2. bridging overhangs between two supports, 3. orienting the part for printing. FDM printers can't extrude in the air; the hot plastic has to have something under it for support. But they can overhang a little bit, so you can work out into the air at about 45 degrees (half the filament on the edge, the other half in the air). If you need more than that, the slicer software will have to print a breakaway support up from the bottom (or from whatever is under that point) to hold up your part. Supports waste plastic, leave a mark when you break them away, and slow down the print. It's often best to just build in your own 45 degree support. "All strength comes from triangles." Or you can set up the part so there are structures on either side, and the filament can be strung or "bridged" between them. Or turn the part so it can be printed from the (new) bottom up, e.g. turn the "T" on its side.

Another option is to break the part up into multiple parts that are printed flat, then joined together after printing.

- Account for the spread of the filament. Have a "fudge" variable that is added to make all interior spaces a little wider and subtracted from the exterior. Be sure to use it anywhere that you need precision. Then you can adjust your print by changing that fudge value until it exactly compensates for the amount the filament is being squished.

- Slow down narrow, tall objects. Anything that prints too quickly won't have time to cool and harden before the next layer is applied. Some slicers are smart enough to pause between layers, and some have a setting you can add for this ("minimum layer time"), but if that isn't possible, you can add another object, either a duplicate if you need two, or a simple "tower", so that the print head has to go back and forth between the two for each layer, which slows it down.

- Know your filament width: The nozzle in the print head extrudes plastic at some fixed width. If you design a part with sections that are narrower than that, they won't print. If a wall is thinner than 2 filament widths, you will only get one line of filament.
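The fudge and filament-width advice can be folded into a few small helper functions. This is a sketch: the 0.2 mm fudge and 0.4 mm line width are placeholder values, not recommendations, and the function names are made up, so measure a test print and tune the numbers for your own printer. The same code works inside an OpenJSCAD file, since that is also JavaScript.

```javascript
const FUDGE = 0.2  // mm; placeholder, tune it by test printing
const NOZZLE = 0.4 // mm; width of one extruded line (placeholder)

// Interior spaces (holes, slots) get a little wider...
function inside(size) { return size + FUDGE }
// ...and exterior features get a little smaller.
function outside(size) { return size - FUDGE }

// How many side-by-side lines of filament fit in a wall of this thickness?
// The tiny epsilon guards against floating-point results landing just below a whole number.
function linesInWall(thickness) { return Math.floor(thickness / NOZZLE + 1e-9) }

// Warn about walls that won't print as designed.
function checkWall(thickness) {
  const lines = linesInWall(thickness)
  if (lines < 1) return "too thin: will not print"
  if (lines < 2) return "only one line of filament"
  return "ok: " + lines + " lines"
}

console.log(inside(3), outside(3)) // a 3 mm hole and a 3 mm peg, adjusted
console.log(checkWall(0.6))        // only one line of filament
```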

https://openjscad.com/ Leveraging our understanding of Javascript to build new end effectors in the browser. Approved student designs can be 3D printed at the library or by instructor / staff.

- Primitives (`square()`, `cube()`, `circle()`, `cylinder()`, `sphere()`). Note: square and cube should really be rectangle and cuboid, as the sides don't have to be the same length.

- Transformations (`translate()`, `rotate()`, `scale()`). The parameter is typically `[X, Y, Z]`, e.g. `const ellipse = circle().scale([1, 2, 1])` or `const log = cylinder().rotate([0, 90, 0])`.

- Combinations (`union(shapes, ...)`, `difference(starting_shape, subtracted_shape, ...)`, `intersection(shapes, ...)`)

- Parameters: You can set up a "control panel" to easily change things via sliders, input fields, etc. by adding a function called `getParameterDefinitions` as follows:

```javascript
function getParameterDefinitions() {
  return [
    { name: 'length', type: 'int', initial: 150, caption: 'Length?' },
    { name: 'width', type: 'int', initial: 100, caption: 'Width?' },
  ];
}
```

The parameters are evaluated and their values are passed into the main function, so be sure to declare `main` with a parameter (here called `params`) to receive them.

```javascript
function main(params) {
  var l = params.length;
  var w = params.width;
  // ...
}
```
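OpenJSCAD builds the `params` object for you from these definitions and from the values in the control panel. To see that data flow outside the browser, the sketch below imitates just the default-value part of it. `defaultParams` is a hypothetical helper, not part of OpenJSCAD, and this `main` returns plain numbers instead of a shape so it can run anywhere.

```javascript
function getParameterDefinitions() {
  return [
    { name: 'length', type: 'int', initial: 150, caption: 'Length?' },
    { name: 'width', type: 'int', initial: 100, caption: 'Width?' },
  ];
}

// Hypothetical helper: build { length: 150, width: 100 } from the initial values,
// roughly what the control panel hands to main() before you change anything.
function defaultParams(defs) {
  const params = {};
  for (const d of defs) params[d.name] = d.initial;
  return params;
}

// Stand-in for a real main(): in OpenJSCAD this would build and return a shape;
// here it just reports the numbers it received.
function main(params) {
  var l = params.length;
  var w = params.width;
  return { l, w, area: l * w };
}

console.log(main(defaultParams(getParameterDefinitions()))); // { l: 150, w: 100, area: 15000 }
```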

All of this is at:

https://en.wikibooks.org/wiki/OpenJSCAD_User_Guide#OpenJSCAD_Programming_Guide

As we go through the examples, DO type them in and see what they do. Instead of just writing `const rect = square()`, also change the return statement to return that item, e.g. `return rect`.

In your github repo, create an object and link it in the ReadMe.md file like this:

`https://openjscad.com/#https://raw.githubusercontent.com/yourUserName/yourRepoName/master/yourFileName.jscad`

e.g.

https://openjscad.com/#https://raw.githubusercontent.com/JamesNewton/HybridDiskEncoder/master/encoderdisk.jscad

actually opens this file for editing

https://github.com/JamesNewton/HybridDiskEncoder/blob/master/encoderdisk.jscad

Make a car. Or an object of some kind with at least 5 parts, and with some parts of complex shape. Edit (fork) this file: car.jscad to improve the design, then submit a pull request. See the comments in the file.

The tool interface is the part at the end of the robot arm that holds an end effector. On the Dexter HD, the tool interface is called the "SpanMount", and you can download the STL file for it from Thingiverse.

End effectors slide onto the tool interface over the diamond-shaped rail in the center. The two small holes on the right hold a servo in place, and the shaft of the servo is centered over the large single hole on the right. A part called the "SpanDriver" (STL) is attached to the servo shaft and comes up through that hole to make contact with the end effector. It can be made to slide into a slot in a part of the end effector to make it move.

Design an end effector for Dexter that does something useful. e.g. hold a pen, hold a microscope, hold a camera and a laser line.

(we will also continue to debug and print end effectors)

Believe it or not, Javascript in the browser is more than fast enough to do machine vision with OpenCV.js. Your computers may not have webcams, but you can use still pictures as sources to do image processing.

FYI, the actual library of Javascript code can be included in a web page via:

```html
<script src="https://docs.opencv.org/master/opencv.js"></script>
```

It's "only" 8.8MB...

To be effective in developing methods for machine vision, we must understand the physics of light. Light travels in straight lines. If we want to find out how much light is coming to us from any point out in the room, we can use a light sensor. But the sensor will have light from many points hit it. We can put the sensor at the end of a tube, so that only the light from where we point the tube is recorded. Don't be confused... light is coming from that point and going off in many different directions, but only one ray comes from that point, and manages to go all the way down the tube to our sensor. All the other rays of light from that point are wasted; we don't sense them.

We could move the tube around to see light from all the different points and so build up an image. Each tube and sensor measuring one point of light from the world would generate a single picture element, aka pixel. ("Pickel" was taken, "Pictel" was hard to say.) We could have many sensors and many tubes, but it turns out we can simplify that by putting an array of sensors behind a barrier with a small hole (a pinhole) in the center. Then light from points at the top of the scene falls on sensors at the bottom, and light from points at the bottom falls on sensors at the top. Our sensors can be made smaller and smaller to increase resolution, but they must be very sensitive. Again, each point is the source of many rays of light, but only one makes it through the pinhole to the sensor.
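The pinhole geometry boils down to similar triangles. In the sketch below, the pinhole is at the origin looking along +Z, and the sensor sits a distance `f` behind it, so a point at (X, Y, Z) lands on the sensor at (-f * X / Z, -f * Y / Z). The minus signs are the "top lands on the bottom" flip described above. The function name and units are arbitrary choices for illustration.

```javascript
// Project a 3D point through a pinhole at the origin onto a sensor a distance f behind it.
// The camera looks along +Z. Returns [u, v] on the sensor, in the same units as f.
function pinholeProject([X, Y, Z], f) {
  if (Z <= 0) return null // the point is behind the pinhole; its light can't get through
  return [-f * X / Z, -f * Y / Z] // similar triangles; the minus signs flip the image
}

// A point 1 m up and 2 m away, with the sensor 0.05 m behind the pinhole:
console.log(pinholeProject([0, 1, 2], 0.05)) // lands below center: v is negative
```

Note that doubling Z halves the projected size, which is why far things look small.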

To improve the amount of light we collect from each point, we can use a lens. When a ray of light reaches a lens, like the one in your eye or in a camera, it is deflected slightly. The deflection depends on where the ray strikes the lens: rays near the edge are deflected more, those near the center less. So of all the rays of light from one point, the ones that hit the outside of the lens and the ones that hit the inside all end up on the same sensor. Of course, the disadvantage is that they only focus, i.e. hit the same sensor, when the lens is the right distance from the sensors.
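That "right distance" can be computed with the thin lens equation from introductory optics, 1/f = 1/dObject + 1/dImage, where f is the focal length, dObject is the distance to the object, and dImage is the distance from the lens to where the image is in focus. This sketch assumes an ideal thin lens; real camera lenses only approximate it, and the function name is made up.

```javascript
// Thin lens equation: 1/f = 1/dObject + 1/dImage (all distances in the same units).
// Returns the lens-to-sensor distance that puts an object at dObject in focus.
function focusDistance(f, dObject) {
  // At the focal length the image is at infinity; inside it, no real image forms.
  if (dObject <= f) return Infinity
  return 1 / (1 / f - 1 / dObject)
}

// A 50 mm lens: far objects focus just behind 50 mm, close ones need the sensor farther back.
console.log(focusDistance(50, 100000)) // just over 50
console.log(focusDistance(50, 100))    // 100: an object at 2f focuses at 2f
```

This is why a camera focuses by moving the lens: the sensor distance has to change with the object distance.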

Between all the pixels and colors, each image includes a stunning amount of data. Quite often, we don't need all that to get our work done, and having too much information slows us down. So we can modify the images to remove unneeded data. We can also modify the pictures to highlight important data.

Two key ideas here are contrast and simplification. To get value out of an image, SIMPLIFY: remove data until you get to the very minimum you need to get the job done. We often don't need color; heck, we often don't even need grayscale, just black and white. Then we can read a pixel value and get back true or false (white or black), and we've done our first "ANALYSIS", i.e. machine vision. I like getting just ONE BIT out of a picture: take the black or white value of the center pixel, OR average all the pixels in grayscale and check whether the result is more or less than 128. We could use this as a "lights on in the room" detector, the very simplest machine vision.
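The "lights on in the room" detector can be sketched in a few lines of plain JavaScript. Here the image is assumed to already be grayscale and stored as a flat array of 0-255 values, row by row; an 8-bit, single-channel OpenCV.js `Mat` should expose its pixels this way in its `data` array. The function names are made up, and the 128 cut-off comes from the text above.

```javascript
// Average brightness of a grayscale image stored as a flat array of 0..255 values.
function averageBrightness(gray) {
  let sum = 0
  for (const v of gray) sum += v
  return sum / gray.length
}

// The simplest machine vision: one bit out of a whole picture.
function lightsOn(gray, threshold = 128) {
  return averageBrightness(gray) > threshold
}

// Or just look at the center pixel of a width x height image (row-major order).
function centerIsWhite(gray, width, height, threshold = 128) {
  const center = Math.floor(height / 2) * width + Math.floor(width / 2)
  return gray[center] > threshold
}

const dark = [10, 20, 30, 40]       // a tiny 2x2 "image"
const bright = [200, 220, 180, 240]
console.log(lightsOn(dark), lightsOn(bright)) // false true
```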

In DDE, we can open a picture and convert it from color to grey. To see this in DDE, save your work, File / New, then Insert / Machine vision / Show Grey. Evaluate the program and choose a picture, then press "Show Image" to see both the original and the modified version. Close the dialog and look at the code.

Modify the program to convert the picture to black and white, instead of just grey. Hint: `cv.threshold(gray_mat, bw_mat, 128, 255, cv.THRESH_BINARY)`. Post your program in your GitHub repo.
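If you want to see what that `cv.threshold` call does to each pixel, here is the same rule written out in plain JavaScript: with `cv.THRESH_BINARY`, a pixel becomes the max value (255) if it is greater than the threshold (128), and 0 otherwise. This only mimics OpenCV's rule on a flat array for illustration; `thresholdBinary` is a made-up name, and in the assignment you should use `cv.threshold` itself.

```javascript
// Plain-JS version of the cv.THRESH_BINARY rule on a flat array of gray values.
function thresholdBinary(gray, thresh = 128, maxval = 255) {
  return gray.map(v => (v > thresh ? maxval : 0))
}

console.log(thresholdBinary([0, 127, 128, 129, 255])) // [ 0, 0, 0, 255, 255 ]
```

Note that a pixel exactly at the threshold (128) becomes black, because the test is "greater than".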

- Insert / Machine Vision / Blob Detection. Read through the program.

- Insert / Machine Vision / Face Recognition. Read through the program.

This web page explains how structured light from a laser line can be used to very quickly find the range to an object across a path.

http://techref.massmind.org/techref/com/cyberg8t/www/http/pendragn/actlite.htm

Basically, it uses a laser line to illuminate an object, but it turns the line on and off, so the line is easy to find by taking the difference between the two pictures.

Make a DDE job that takes two pictures (camera frames) and finds the difference of them.

Using two pictures of the same scene where one picture has a laser line, and the other doesn't, find the distance to the objects in the picture which the laser line strikes, given the height of the camera above the laser line.
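Here is one way to sketch both steps in plain JavaScript. Frames are flat grayscale arrays (an assumption, as above), and all function names are made up. For the distance, this assumes a simple setup that may differ from yours: the laser sheet is horizontal and parallel to the camera's viewing direction, a height `h` below the camera, and the camera's focal length in pixels, `fPx`, is known. By similar triangles, a laser hit at distance Z shows up `fPx * h / Z` pixels below the image center, so Z = fPx * h / (rows below center).

```javascript
// Absolute difference of two same-size grayscale frames (flat arrays of 0..255).
function frameDiff(a, b) {
  return a.map((v, i) => Math.abs(v - b[i]))
}

// For one image column, find the row where the difference (the laser) is brightest.
function laserRow(diff, width, height, col) {
  let bestRow = -1, best = 0
  for (let row = 0; row < height; row++) {
    const v = diff[row * width + col]
    if (v > best) { best = v; bestRow = row }
  }
  return bestRow // -1 means no laser seen in this column
}

// Distance to the laser hit, assuming the laser sheet is parallel to the camera axis,
// h below the camera, with focal length fPx in pixels (hypothetical setup).
function rangeFromRow(row, height, fPx, h) {
  const below = row - height / 2 // pixels below the image center
  if (below <= 0) return Infinity // at or above center (or no laser): too far to measure
  return (fPx * h) / below       // same units as h
}

// One-column, 480-row example: laser off vs. laser on at row 340.
const laserOff = new Array(480).fill(10)
const laserOn = laserOff.slice()
laserOn[340] = 250
const row = laserRow(frameDiff(laserOn, laserOff), 1, 480, 0)
console.log(row, rangeFromRow(row, 480, 500, 0.1)) // 340, about 0.5 (meters, if h is in meters)
```

Doing this for every column gives a distance profile across the whole path.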

Finish printing and test the pen holders, programming the arm to write a message or draw a picture.

Use the image processing to detect the laser line without subtracting. E.g. Detect red only in the picture and only above a threshold to filter out everything but the laser line.

Bots, asides, and challenges

With: 2 laser cut parts, one marble castor, 3 short white and 1 short black zip ties.

- Clean out the holes of the castor holder as needed with your small phillips screwdriver and a rotating / drilling motion.

- Clear any remaining cutouts from the cardboard parts. Press from the white side to the brown side along the edge of the cut with your fingernail until they are clear. For the smaller holes / slots, press them out with the end of the screwdriver.

- Bend the cardboard along the dotted lines, keep white surface on the outside.

- Align the nose (the part with two large circular cutouts) at an angle with the slanted edge of the other part.

- Insert a small white zip tie through the slot on each side and pull it tight. P.S. It looks better if the "head" of the zip tie is on the inside of the bot.

- Place one tab of the marble castor on the slot on the bottom, and insert a small white zip tie through that, and through the slot on the front, then back around through the notch on the front to tie all three together.

- Add a short white zip tie through one of the tabs on the back of the castor, through the matching hole in the bottom, over to the other hole, and back through the other tab. Make sure you close it around the outside of the castor, not over the marble.

- Snip off the ends of all the zip ties, HOLDING THEM so they don't go flying off! Keep the ends and use two of them to pin the top to the back of the sides.

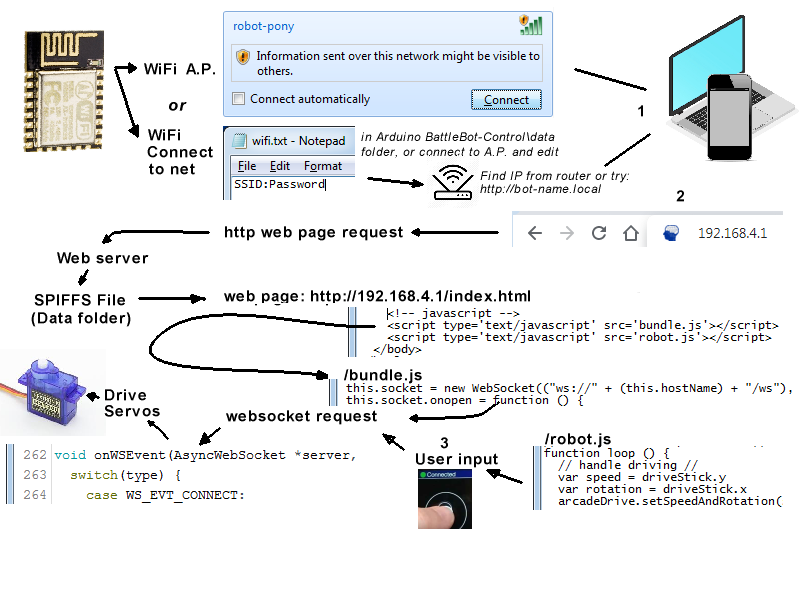

What is the Control board doing in the bot? It's got an ESP-8266 which has WiFi, and is programmable via the Arduino IDE. And it's on an Addicore.com RoboBoffin which provides easy access to plug in RC Servos. So how do we program an Arduino to move Servos?

https://www.youtube.com/watch?v=yyG0koj9nNY

- Go to https://www.tinkercad.com

- Sign in with Google account (or whatever)

- Click "Circuits" on the left

- Click "Create new circuit"

- Change "Components" to "Arduino" under "Starters"

- Scroll down and drag the "Servo" starter into the work area on the left.

- Click "Code" on the toolbar near the right

- Change the "Blocks" pull down to "Blocks + Text"

- Click "Start Simulation" Notice that if you click any of the line numbers, the program will pause and you can step through it and hover to see variable values.

Challenge 1: Change the program to move between 45 and 90 degrees. For credit: Hit "Share", "Invite people", "Copy" and then post it to an issue on the class Repo.

With: a Boffin PCB, Battery Pack, 4 AA batteries

Using: Small phillips screwdriver

- Unscrew both screws on the blue terminal block so there is space for the wires between the metal block and metal spring.

- Screw the BLACK wire to the "GND" terminal, and the RED wire to the "+5" terminal (note: It's not actually gonna get +5 volts, but 1.6 volts * 4 batteries is close enough).

- Make sure the switch on the battery case is OFF

- Insert the 4 AA batteries, with the spring against the flat end and the nub against the nub end.

- Show it to someone to make sure you have it right, place the PCB on a non-metallic surface, and turn the switch on. You should see a blinking green light after a brief flash of the blue light.

- Assuming that went well, put the sticker from your PCB bag on the bottom of your bot body, and see if you can connect to the WiFi name on the sticker. After connecting, it will error that you have no internet access; this is normal. Open your browser and go to 192.168.4.1

Servo motors take 3 wires, a ground, a power, and a "control". The control determines how the servo will move. It uses "pulse width modulation". For this servo, pulse widths vary between 1 ms wide and 2 ms wide.

- A 1.5 ms pulse moves it to the center position.

- A 1 ms pulse moves the servo all the way clockwise.

- A 2 ms pulse moves the servo all the way counterclockwise.

- A 1.75 ms pulse moves it half way counterclockwise, etc.
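The pulse widths above follow a straight line, so they are easy to compute. In this sketch, the position is a number from -1 (all the way clockwise) through 0 (center) to +1 (all the way counterclockwise); that scale and the function names are made up for illustration. Real servos vary a little, so the 1 ms and 2 ms end points are just the nominal values from the list above.

```javascript
// Map a position from -1 (full clockwise) to +1 (full counterclockwise) to a pulse width in ms.
function pulseWidthMs(position) {
  const p = Math.max(-1, Math.min(1, position)) // clamp to the servo's range
  return 1.5 + 0.5 * p
}

// And back again: pulse width in ms to position.
function positionFromPulse(ms) {
  return (ms - 1.5) / 0.5
}

console.log(pulseWidthMs(0))   // 1.5  (center)
console.log(pulseWidthMs(-1))  // 1    (all the way clockwise)
console.log(pulseWidthMs(0.5)) // 1.75 (half way counterclockwise)
```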

What's in a servo?

https://www.youtube.com/watch?v=ZZhuD78BLDk

To connect a servo, you need three pins:

- Ground (brown or black wire)

- Power (5 to 6 volts, on the red or center wire)

- Pulses from a GPIO pin on the Arduino. (usually a yellow or orange wire)

With: 2 Servo Motors, 2 Wheels, 2 large black strap ties

Using: Small phillips screwdriver

- Make sure the battery pack is turned OFF

- The servo wires are brown, red, orange. The brown is "GND", the orange is "I/O" (and the red is "5V" but it's in the middle so hard to get that'n on wrong, ay?). Make sure you have them the right way 'round and then connect one to "D1" and other to "D2" on the PCB.

- Turn the power on. The wheels should jerk, then settle down to not moving. There is a tiny adjustment screw inside the back of the servo to zero it out if it keeps moving. Connect and notice which way they turn when you move the onscreen "joystick" so you can decide which should be right and which left. Power off to save battery.

- Using a large black strap tie, secure each motor in place. Notice that you can make the axle be a bit closer to the front or the back depending on which way you lay the motor down.

- Screw a wheel onto each motor. Make sure the wheel is all the way on the shaft, and use the screw from the wheel package to hold it in place. Tighten it down while holding the wheel so the motor doesn't spin.

Power on the bot, connect, and drive it around. Have a sumo battle with anyone else whose bot is ready. "Chaos erupts"

Challenge 2: Since we have some extra motors, figure out where to connect one and how to control it. What weapon could you control with that? Post your ideas in an issue on the class repo.

Use the gear icon to open the EDIT function. (Note: It takes a bit to load, and works a LOT better on a laptop than it does on a cell).

Challenge 3: Change the name of your bot. For credit: On the class repo, raise an issue and document the steps required. Hint: This is a "digging through the docs" thing. Points deducted if you yell out "I got it!"

Challenge 4: Extra credit: Make your bot do a "dance" every time the web page loads.

A "PING" sensor works by sending out a burst of ultrasound and listening for the echo when it bounces off of an object. Because it's an electronic device, it can hear really quick echoes and detect objects far closer than "across the canyon". This is very close to what bats do to "see" at night; it's called "echolocation". Lazzaro Spallanzani figured out this was happening in 1794, Paul Langevin tried to use it to detect submarines in 1917, 'cause Das U-Boats was kill'n us, and Polaroid used them to autofocus their cameras in 1986. Those Polaroid sensors sucked! Today's PING sensors are much better, but:

- Very low resolution (centimeter depth, a beam 30 or 40 degrees wide)

- Unreliable (drop outs, noise)

- Slow (a few readings per second)

Still, it's hard to beat for a quick range sensor.
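The range comes from timing the echo: the sound travels out and back, so the distance is half of speed × time. This sketch assumes about 343 m/s for the speed of sound (air at roughly 20 °C) and an echo time measured in microseconds, which is how these sensors are commonly read; the function name is made up.

```javascript
const SPEED_OF_SOUND = 343 // m/s in air at about 20 °C (it changes with temperature)

// Convert an echo round-trip time in microseconds to a distance in centimeters.
function echoToCm(echoMicros) {
  const seconds = echoMicros / 1e6
  const meters = (SPEED_OF_SOUND * seconds) / 2 // divide by 2: out and back
  return meters * 100
}

console.log(echoToCm(5830)) // about 100 cm
```

Working backwards, each centimeter of range adds roughly 58 microseconds of round-trip time.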

https://www.youtube.com/watch?v=yyG0koj9nNY

- Go to https://www.tinkercad.com

- Sign in with Google account (or whatever)

- Click "Circuits" on the left

- Click "Create new circuit"

- Change "Components" to "Arduino" under "Starters"

- Scroll down and drag the "Ultrasonic Range Finder" starter into the work area on the left.

- Click "Code" on the toolbar near the right

- Change the "Blocks" pull down to "Blocks + Text"

- At the bottom, click "Serial Monitor"

- Click "Start Simulation". Notice that an object appears next to the PING sensor and you can move it around to see the result. Hint: Graph is cool.

Challenge: Change the circuit to add a servo, and make the servo turn when the object gets closer. For credit: Hit "Share", "Invite people", "Copy" and then post it to an issue on the class Repo.

Breadboards are a common way to quickly connect electronic components. They consist of rows and columns of metal strips with flexible "fingers" which will spread and clamp around the component leads when they are inserted into their hole. Each numbered row is connected together, but divided into two sides. e.g. row 1, pins a-e are connected, and row 1 pins f-j are connected but a-e is not connected to f-j. The columns on the sides are connected all the way down, with a column for power (red, "+") and a column for ground (blue, "-").
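The connection rules can be written as a tiny function. This is a toy model of the breadboard described above, with made-up names: a hole in the main area is named by row number and column letter (like "1a"), and a hole in the red or blue side column is named "+" or "-" followed by a number. It models just one red and one blue side column.

```javascript
// Toy model of breadboard connections (hypothetical naming):
//   "12c"       = row 12, column c in the main area (columns a-j)
//   "+7" / "-7" = a hole in the red (+) or blue (-) side column
function netOf(hole) {
  if (hole[0] === "+" || hole[0] === "-") return hole[0] // each side column is one long strip
  const row = parseInt(hole, 10)
  const col = hole[hole.length - 1]
  const half = col <= "e" ? "a-e" : "f-j" // each row is split into two halves
  return row + half
}

// Two holes are connected if they are on the same metal strip.
function connected(holeA, holeB) {
  return netOf(holeA) === netOf(holeB)
}

console.log(connected("1a", "1e")) // true: same row, same half
console.log(connected("1a", "1f")) // false: same row, other half
```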

Using a white board, 4 conductors torn from the female to male cables, and a PING sensor.

- Connect the "VCC" pin to "D7" - "5V" (or D5-5V)

- Connect "Trigger" to "D7" - "I/O"

- Connect "Echo" to "D5" - "I/O"

- Connect "GND" to "D7" - "GND" (or D5-GND)

Place the parts back in the bot, fire it up, connect and see if you have range to the nearest object showing up on the driving page. Troubleshoot.

Challenge 1: Just for fun: Attempt to navigate down a row in the classroom without looking at the bot. Use only the ping data.

Edit the robot.js file, find the loop, and add this at the start of the loop:

```javascript
var dist = infoBox.botStatus.cm
if (dist === undefined || dist < 3 || dist > 200) dist = 200
var avoid = (200 - dist) * 0.0008

// handle driving //
var speed = driveStick.y
var rotation = driveStick.x + avoid
arcadeDrive.setSpeedAndRotation(speed, rotation)
```

Refresh the control page and try to drive the robot into something. Note: This only works when driving.
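To see what that avoidance term does, here is the same arithmetic pulled out into a function you can test without the bot. `avoidTerm` is a hypothetical name; the 3 cm and 200 cm limits and the 0.0008 gain are the values from the loop code. A reading that is missing or out of range counts as "nothing there", and the closer the obstacle, the harder the bot is steered away.

```javascript
// Same math as the loop code, as a pure function of the distance in cm.
function avoidTerm(dist) {
  // Missing or implausible readings are treated as "nothing within 200 cm".
  if (dist === undefined || dist < 3 || dist > 200) dist = 200
  return (200 - dist) * 0.0008
}

console.log(avoidTerm(undefined)) // 0: no reading, no steering
console.log(avoidTerm(100))       // about 0.08
console.log(avoidTerm(10))        // about 0.152: close, so steer harder
```

Because `avoid` is added to `rotation`, the bot always turns the same way to avoid obstacles; try changing the gain to see how it affects driving.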

Code review of bundle.js and robot.js.

Challenge: Add a "self driving" button that sets an interval, uses the sensor data to navigate, and drives forward automatically.

https://jsfiddle.net/yomb27eu/5/

Add /embedded/#Result to the URL to view it on a smartphone.

Note: If that doesn't work, try this:

https://googlechrome.github.io/samples/image-capture/grab-frame-take-photo.html

It's another way of accessing the camera.

Note: Chrome (and soon other browsers) will not allow access to the Camera from any page that was not loaded over an https: connection. Since the bot can't support https, you need to tell Chrome to trust it anyway by:

- Navigate to chrome://flags/#unsafely-treat-insecure-origin-as-secure

- Enable it

- Add "http://192.168.4.1"

- Close Chrome and restart it.

https://jsfiddle.net/w3dgLpbx/4/

Add /embedded/#Result to the URL to view it on a smartphone.

Challenge: Edit robot.js to add a `setInterval` that calls the above code and uses the summaries to drive the car forward, left, and right.

- OpenJSCad end effector and car

- DDE / Dexter drawing DXF files

- Kinematics / other DDE samples

- Bots with sensors (if we get them working)

- Image processing, structured light with laser line

This course focuses on Robotics. But you could think of Robotics as the MOTIVATING force to learn many other topics, such as Math, Physics, Economics, User Interface and Programming. We will touch upon some of these directly, but others only as questions arise. We want to leave enough time to let student questions and interest drive how deeply we dive into topics that might seem, at first blush, NOT to be related to robotics. The interdisciplinary nature of robotics provides a great vehicle for more conventional subjects that tend NOT to get students excited. We hope to get students excited about some of those subjects.

4F: Fail, Fast, First, Fix!

- https://github.com/getify/You-Dont-Know-JS Complete set of very good books on Javascript, free online.

- https://www.udacity.com/course/object-oriented-javascript--ud711

- https://www.pluralsight.com/guides/introduction-to-asynchronous-javascript More about Javascript being non-blocking or asynchronous.

- https://skillsmatter.com/skillscasts/12157-introduction-to-openjscad Coding reality (for things that need to scale in complex ways)

- http://hdrobotic.com/open-source You can build a Dexter