
spmatmul sparse matrix multiplication - performance improvements #30372

This doesn't seem to work with

Can you test this with matrices that are 10^6 × 10^6 with 1 and 10 nonzeros per row? IIRC, we used to have this method in the very early days but changed it. There are definitely problem sizes for which this will be faster (but not asymptotically), and if we want these benefits, we need some reasonable heuristics to decide which variant to pick.
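
A back-of-the-envelope cost model shows why a dense-accumulator variant is not asymptotically safe for these sizes. The Python sketch below assumes square n × n operands with about k nonzeros per row, placed uniformly at random (so also about k per column on average), and assumes the dense variant scans all m rows of its workspace once per output column. The function names are hypothetical and the counts are rough operation estimates, not measurements.

```python
import math


def dense_scan_work(m: int, n: int) -> int:
    """Rows visited when a length-m dense accumulator is scanned once per
    output column, for n output columns. Independent of sparsity."""
    return m * n


def multiply_work(n: int, k: int) -> int:
    """Approximate multiply-adds for A*B with n-by-n operands that have about
    k nonzeros per column each (assumed uniformly random)."""
    return n * k * k


def sort_work(n: int, k: int) -> float:
    """Approximate cost of sorting at most k*k candidate rows per column."""
    per_col = k * k
    return n * per_col * max(1.0, math.log2(per_col))
```

For n = 10^6 the scan term is 10^12 for both k = 1 and k = 10, while the multiply-add count is only about 10^6 and 10^8 respectively, and even the sort term stays below 10^9 for k = 10. Under these assumptions the dense scan only pays off when result columns are relatively dense, which is where a heuristic would have to draw the line.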

I had some benchmarks in #29682 that could be used here as well.

Just a thought: we could consider having an optional parameter to choose between accumulating into a dense vector and sorting. The default would have to be the one that is asymptotically good, but the dense-accumulator approach could be picked optionally.
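
The option proposed above could look roughly like the following minimal Python sketch of the column-oriented form of Gustavson's algorithm for C = A*B. It assumes a hypothetical bare-bones CSC tuple `(m, n, colptr, rowval, nzval)` with 0-based indices rather than Julia's `SparseMatrixCSC`, and the name `spmatmul_sketch` and its `accumulator` keyword are illustrative only. The `"dense"` branch scatters into a length-m workspace and scans all m rows per column, which emits sorted rows but costs O(m) per column regardless of sparsity; the `"sort"` branch collects (row, product) pairs, sorts them, and merges duplicates, so its cost depends only on the number of partial products.

```python
from typing import List, Tuple

# Assumed bare-bones CSC layout: (m, n, colptr, rowval, nzval), 0-based.
CSC = Tuple[int, int, List[int], List[int], List[float]]


def spmatmul_sketch(A: CSC, B: CSC, accumulator: str = "sort") -> CSC:
    """Column-oriented Gustavson product C = A*B (hypothetical API)."""
    mA, nA, colA, rowA, valA = A
    mB, nB, colB, rowB, valB = B
    if nA != mB:
        raise ValueError("dimension mismatch")
    if accumulator not in ("dense", "sort"):
        raise ValueError("accumulator must be 'dense' or 'sort'")

    # Dense workspace for the "dense" variant: values plus a mask,
    # so entries that cancel to exactly 0.0 are still kept as stored entries.
    x = [0.0] * mA
    mark = [False] * mA
    colC, rowC, valC = [0], [], []

    for j in range(nB):
        if accumulator == "dense":
            # Scatter A[:, k] * B[k, j] into the dense workspace.
            for kp in range(colB[j], colB[j + 1]):
                k, b = rowB[kp], valB[kp]
                for ip in range(colA[k], colA[k + 1]):
                    i = rowA[ip]
                    x[i] += valA[ip] * b
                    mark[i] = True
            # O(mA) scan: rows come out sorted, whatever the sparsity.
            for i in range(mA):
                if mark[i]:
                    rowC.append(i)
                    valC.append(x[i])
                    x[i], mark[i] = 0.0, False
        else:
            # Collect (row, product) pairs, sort by row, merge duplicates.
            pairs = []
            for kp in range(colB[j], colB[j + 1]):
                k, b = rowB[kp], valB[kp]
                for ip in range(colA[k], colA[k + 1]):
                    pairs.append((rowA[ip], valA[ip] * b))
            pairs.sort(key=lambda p: p[0])  # stable, so summation order is kept
            for i, v in pairs:
                if len(rowC) > colC[-1] and rowC[-1] == i:
                    valC[-1] += v  # same row as the previous entry of column j
                else:
                    rowC.append(i)
                    valC.append(v)
        colC.append(len(rowC))
    return (mA, nB, colC, rowC, valC)
```

Keeping `"sort"` as the default follows the suggestion that the asymptotically safe variant should be the default, with `"dense"` available when result columns are known to be dense.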

Hi @ViralBShah, some additional changes are:

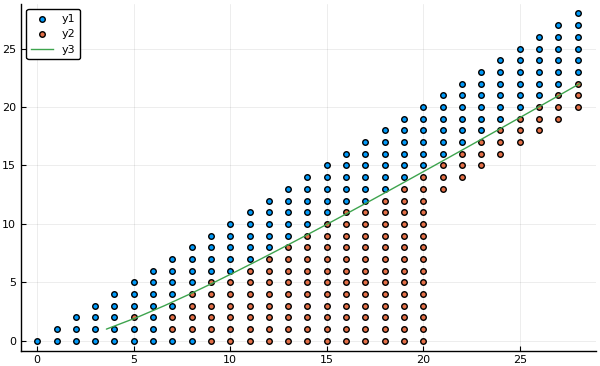

Benchmark results:

- My own original example
- The asymptotic case of @ViralBShah
- The benchmarks proposed by @KristofferC

This is some really nice work, @KlausC! Is it possible to for the It should hopefully be easy to do, and it will serve as a good record for this PR. Is there any benefit from inlining the

The same examples with

@KlausC The tests should be an easy fix. I also propose that we mention this performance enhancement in the NEWS file, and that we no longer have the two options for

Also, for future reference, here's a paper by @aydinbuluc on the state of the art in sparse matrix multiplication: https://people.eecs.berkeley.edu/~aydin/spgemm_p2s218.pdf with code at https://bitbucket.org/YusukeNagasaka/mtspgemmlib

I tried it and did not see a difference. The QuickSort algorithm itself branches to InsertionSort if numrows <= 20, so the best we could gain is one
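
The hybrid behaviour described above can be illustrated with a small Python quicksort that hands subranges of at most 20 elements to insertion sort. This is a generic textbook sketch (median-of-three pivot, Hoare-style partitioning, recursion on the smaller side), not Julia's `Base.Sort` implementation; `SMALL_THRESHOLD` and the function names are hypothetical and only mirror the cutoff quoted in the comment.

```python
SMALL_THRESHOLD = 20  # mirrors the "numrows <= 20" cutoff quoted above


def insertion_sort(a, lo, hi):
    """Sort a[lo..hi] (inclusive) in place."""
    for i in range(lo + 1, hi + 1):
        x = a[i]
        j = i - 1
        while j >= lo and a[j] > x:
            a[j + 1] = a[j]
            j -= 1
        a[j + 1] = x


def hybrid_quicksort(a, lo=0, hi=None):
    """Quicksort a[lo..hi] in place, falling back to insertion sort
    once a subrange has at most SMALL_THRESHOLD elements."""
    if hi is None:
        hi = len(a) - 1
    while hi - lo + 1 > SMALL_THRESHOLD:
        mid = (lo + hi) // 2
        pivot = sorted((a[lo], a[mid], a[hi]))[1]  # median of three
        i, j = lo, hi
        while i <= j:
            while a[i] < pivot:
                i += 1
            while a[j] > pivot:
                j -= 1
            if i <= j:
                a[i], a[j] = a[j], a[i]
                i += 1
                j -= 1
        # Recurse on the smaller part, loop on the larger one.
        if j - lo < hi - i:
            hybrid_quicksort(a, lo, j)
            lo = i
        else:
            hybrid_quicksort(a, i, hi)
            hi = j
    insertion_sort(a, lo, hi)
```

With this structure a column holding 20 or fewer row indices goes straight to insertion sort on the first check, so an extra caller-side branch for short columns would save very little.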

Thanks!

I am actually happy to merge this as is (the test changes are not that important).

I committed additional test cases and the NEWS.md update after you merged. How do I get these last changes saved?

…372)

* General performance improvements for sparse matmul

Details for the polyalgorithm are in: JuliaLang/julia#30372

The implementation of Gustavson's algorithm used to need a sorting step after the result matrix was constructed. The algorithm has been modified to produce the result vectors already in sorted order.
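
One way to emit already-ordered result columns, choosing a strategy per column, is sketched below in Python. For each column of C, the touched row indices are recorded in first-touch order next to a dense value workspace; the column is then emitted either by sorting just those indices (about nz·log nz work) or by scanning all m rows of the workspace (m work). The `prefer_sort` rule and its constant are hypothetical placeholders, not the heuristic used in the actual commit, and the CSC tuple `(m, n, colptr, rowval, nzval)` with 0-based indices is an assumed bare-bones layout.

```python
import math
from typing import List, Tuple

# Assumed bare-bones CSC layout: (m, n, colptr, rowval, nzval), 0-based.
CSC = Tuple[int, int, List[int], List[int], List[float]]


def prefer_sort(nz: int, m: int) -> bool:
    # Hypothetical rule: sort the touched rows when ~3*nz*log2(nz) is below
    # the cost m of scanning the whole workspace. Constant is illustrative.
    return 3 * nz * max(1.0, math.log2(max(nz, 1))) < m


def spmatmul_ordered(A: CSC, B: CSC) -> CSC:
    """C = A*B with each output column emitted in row order (sketch)."""
    mA, nA, colA, rowA, valA = A
    mB, nB, colB, rowB, valB = B
    if nA != mB:
        raise ValueError("dimension mismatch")
    x = [0.0] * mA          # dense value accumulator
    touched = [False] * mA  # rows already hit in the current column
    colC, rowC, valC = [0], [], []
    for j in range(nB):
        rows = []  # touched rows in first-touch (unsorted) order
        for kp in range(colB[j], colB[j + 1]):
            k, b = rowB[kp], valB[kp]
            for ip in range(colA[k], colA[k + 1]):
                i = rowA[ip]
                if not touched[i]:
                    touched[i] = True
                    rows.append(i)
                x[i] += valA[ip] * b
        if prefer_sort(len(rows), mA):
            rows.sort()  # O(nz log nz) on the touched rows only
        else:
            rows = [i for i in range(mA) if touched[i]]  # O(mA), already ordered
        for i in rows:
            rowC.append(i)
            valC.append(x[i])
            x[i], touched[i] = 0.0, False  # reset workspace for the next column
        colC.append(len(rowC))
    return (mA, nB, colC, rowC, valC)
```

Because each column is ordered as it is produced, no separate sorting pass over the finished result matrix is needed; only the per-column choice between sorting and scanning depends on the heuristic.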

The speedup is about 4× at comparable space usage.

old results:

new results: