
Closed

Labels

bug (Something isn't working), feature (Is an improvement or enhancement), progress bar: tqdm


Description

🐛 Bug

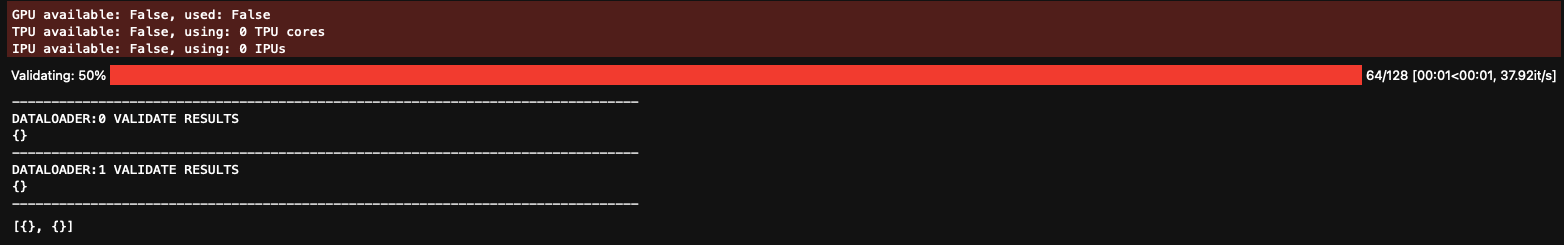

On master, the TQDM progress bar restarts from the beginning for each dataloader, whereas on the patch release it continues from where it left off for the previous dataloader. With multiple dataloaders, this causes the issue shown in the attached image.
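To make the difference concrete, here is a small pure-Python sketch of the two counting behaviours. It does not use TQDM or Lightning: `simulate_positions` is a hypothetical helper written for illustration, and it assumes the bar's total is the sum of all dataloader lengths, which is a guess rather than something confirmed from the Lightning source.

```python
from typing import List


def simulate_positions(lengths: List[int], restart_per_dataloader: bool) -> List[str]:
    """Return the 'n/total' text a bar would show when each dataloader finishes."""
    total = sum(lengths)  # assumption: one shared total across all dataloaders
    shown = []
    position = 0
    for length in lengths:
        if restart_per_dataloader:
            position = 0  # behaviour described for master
        position += length  # the bar advances once per batch
        shown.append(f"{position}/{total}")
    return shown


# Two dataloaders with 64 batches each, as in the reproduction script.
restarting = simulate_positions([64, 64], restart_per_dataloader=True)
continuing = simulate_positions([64, 64], restart_per_dataloader=False)
print(restarting)
print(continuing)
```

Under that assumption, a bar that restarts for each dataloader never reaches the shared total, while a bar that continues does.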

To Reproduce

    import torch
    from torch.utils.data import DataLoader, Dataset

    from pytorch_lightning import LightningDataModule, LightningModule, Trainer
    from pytorch_lightning.callbacks import RichProgressBar


    class RandomDataset(Dataset):
        """Map-style dataset of random vectors with a known length."""

        def __init__(self, size: int, length: int):
            self.len = length
            self.data = torch.randn(length, size)

        def __getitem__(self, index):
            return self.data[index]

        def __len__(self):
            return self.len


    class BoringModel(LightningModule):
        def __init__(self):
            super().__init__()
            self.layer = torch.nn.Linear(32, 2)

        def forward(self, x):
            return self.layer(x)

        def validation_step(self, batch, batch_idx, dataloader_idx=None):
            # .sum() must be called; the bare attribute is only a bound method
            loss = self(batch).sum()

        def val_dataloader(self):
            # Two validation dataloaders are needed to trigger the issue
            return [DataLoader(RandomDataset(32, 64)), DataLoader(RandomDataset(32, 64))]


    trainer = Trainer()
    trainer.validate(BoringModel())

The same issue occurs with validation under trainer.fit(), trainer.test() and trainer.predict().

Also if someone does this:

    def val_dataloader(self):
        return [DataLoader(RandomIterableDataset(32, 2000)), DataLoader(RandomDataset(32, 2000))]

then the total number of batches is shown as inf, even though the second dataloader has a length. That length doesn't show up, probably because the total is assigned as sum(all_dataloader_batches). I think it would look better if the total were set individually for each dataloader and the bar started from the beginning for every new iteration.
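The arithmetic behind the inf total can be sketched in plain Python. This is only an illustration of the suspected cause and the suggested alternative, not Lightning's actual implementation: `combined_total` and `per_dataloader_totals` are hypothetical names, and representing an unsized dataloader as `float("inf")` is an assumption.

```python
import math
from typing import List, Optional, Union

Number = Union[int, float]


def combined_total(lengths: List[Number]) -> Number:
    """Suspected current behaviour: a single total summed over all dataloaders."""
    return sum(lengths)


def per_dataloader_totals(lengths: List[Number]) -> List[Optional[int]]:
    """Suggested behaviour: one total per dataloader, None when the length is unknown."""
    return [None if math.isinf(n) else int(n) for n in lengths]


# Assumption: an iterable-style dataloader without __len__ is counted as inf.
lengths = [float("inf"), 2000]

summed = combined_total(lengths)
individual = per_dataloader_totals(lengths)
print(summed)
print(individual)
```

A single infinite entry makes the whole sum infinite, so the known length of the second dataloader is lost. Keeping one total per dataloader preserves it.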

Environment

- PyTorch Lightning Version (e.g., 1.5.0): master

- PyTorch Version (e.g., 1.10): 1.10

- Python version (e.g., 3.9): 3.9

- OS (e.g., Linux): mac

- CUDA/cuDNN version:

- GPU models and configuration:

- How you installed PyTorch (conda, pip, source):

- If compiling from source, the output of torch.__config__.show():

- Any other relevant information:

Additional context

This works fine on patch releases. RichProgressBar also works fine.
