This project is an entry into #AWSDeepLensChallenge.

Simon Says is a childhood game where the players act out the commands from Simon. When Simon says to do something you do the action,

however when Simon doesn't give the command you should not do the action. Our project is building a Simon Says Deep Learning platform where

everyone can join the same global game using Deeplens to verify the correct action of each player.

Fun Fact: The guinness world record for a game of Simon Says is 12,215 people set on June 14, 2007 at the Utah Summer Games.

- Have the device issue a

simon sayscommand. - Monitor the camera stream and classify the player's action.

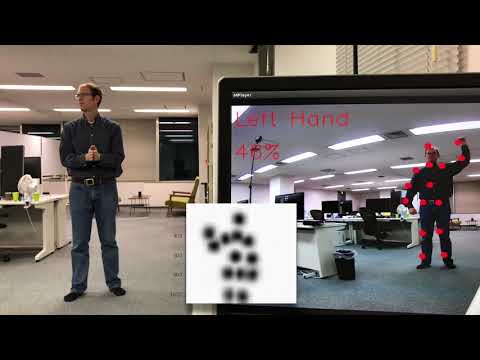

- Overlay a display of the player's pose and current action.

- Link the device to a global simon game network.

The Deeplens device is used to process the video stream of the player. The video stream is formatted to fit our network of 184x184 resolution. We crop

the left and right siding of the image to form a box and scale the image down keeping the aspect ratio. Our model is optimized to use the GPU which is required

or processing time for one frame takes ~30-60s, compared to about .6-1s using GPU. We use the output, a series of images, to calculate the position of the body, which we

refer to as the pose map. The pose map consists of ordered (x,y) positions of each body part, which we feed into a classification network to tell us the predicted action.

-

How we converted the realtimePose Model to run on Deeplens. Convert Model

-

Read More about how we classified the different poses in this notebook.

- Intel Optimized Realtime Pose - realtimePose-Intel.zip

- MXnet Pose Classification Model: poseclassification.zip

The Simon Says game network is built using AWS services. The process starts from cloudwatch where a chain of events is triggered every minute that will generate

and distribute a new game. The first step is a lambda event that queries an S3 bucket using Athena, this query is used so we can add new game actions by just uploaded a file to S3.

After the Athena query is finished the output activates a 2nd lambda which is used to generate the next game by randomly picking one action and deciding if simon says or not and it

will publish the game to an IoT channel. All Deeplens devices will register to this IoT channel and it will be notified when a new game starts.

Image Generated using https://cloudcraft.co/

Image Generated using https://cloudcraft.co/

- Read more about backend development here

- Before running one minor change will need to be addressed and that is audio output. In our case we were not able to hear audio until we

added the

aws_camuser and GreenGrass user to theaudiogroup. If you do not hear sound please verify these group settings.sudo adduser ggc_user audio

- We were not able to get audio playing using the deeplens deployment.

- Upload the model files to your S3 bucket for Deeplens.

- Create a new Lambda function using this packaged zip file simon_posetest.zip. Make sure to set the lambda handler to

greengrassSimonSays.function_handler - Create a new Deeplens Project with the model and lambda function.

- Deploy to Deeplens.

To get setup on the device all you need to do is SSH or open a Terminal, clone this repo, download the

optimized model and mxnet classification model, and install the python requirements.

- It is best to stop GreenGrass Service before you do this.

sudo systemctl stop greengrassd.service.

git clone https://github.com/MDBox/deeplens-simon-says

cd ./deeplens-simon-says/deeplens/simonsays

wget https://s3.amazonaws.com/mdbox-deeplen-simon/models/models.zip

unzip models.zip

sudo pip install -r requirments.txt

sudo python simon.pyThis project uses two open source projects as reference. The original model and development is credited to them.