Fix global mode print tensor bug (#10056)

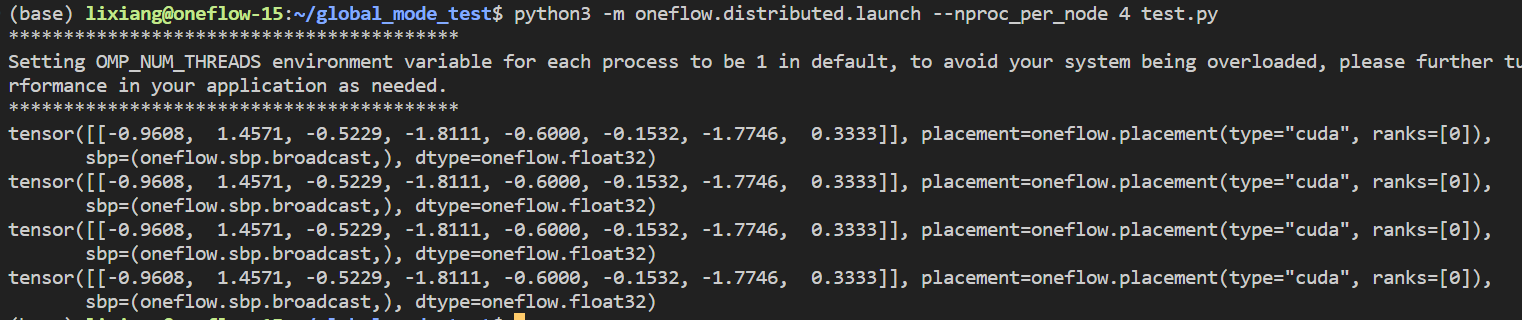

## This PR is done:

Fix: Oneflow-Inc/OneTeam#1942

test with:

```python
import oneflow as flow
from oneflow.utils.global_view import global_mode

a = flow.randn(
    (1, 8), sbp=flow.sbp.broadcast, placement=flow.placement("cuda", ranks=[0])
)
with global_mode(
    True,
    placement=flow.placement(type="cuda", ranks=[0, 1, 2, 3]),
    sbp=flow.sbp.broadcast,
):
    print(a)
```

output:

---------

Co-authored-by: Xiaoyu Xu <xiaoyulink@gmail.com>
Co-authored-by: oneflow-ci-bot <ci-bot@oneflow.org>
Co-authored-by: mergify[bot] <37929162+mergify[bot]@users.noreply.github.com>

1 parent b305117, commit 7e7fb20

Showing 8 changed files with 46 additions and 3 deletions.
