PaddleX 3.0 is a low-code development tool for AI models built on the PaddlePaddle framework. It integrates numerous ready-to-use pre-trained models, enabling full-process development from model training to inference, supporting a variety of mainstream hardware both domestic and international, and aiding AI developers in industrial practice.

🎨 Rich Models One-click Call: Integrate over 200 PaddlePaddle models covering multiple key areas such as OCR, object detection, and time series forecasting into 19 pipelines. Experience the model effects quickly through easy Python API calls. Also supports more than 20 modules for easy model combination use by developers.

🚀 High Efficiency and Low barrier of entry: Achieve model full-process development based on graphical interfaces and unified commands, creating 8 featured model pipelines that combine large and small models, semi-supervised learning of large models, and multi-model fusion, greatly reducing the cost of iterating models.

🌐 Flexible Deployment in Various Scenarios: Support various deployment methods such as high-performance inference, service deployment, and lite deployment to ensure efficient operation and rapid response of models in different application scenarios.

🔧 Efficient Support for Mainstream Hardware: Support seamless switching of various mainstream hardware such as NVIDIA GPUs, Kunlun XPU, Ascend NPU, and Cambricon MLU to ensure efficient operation.

🔥🔥 "PaddleX Document Information Personalized Extraction Upgrade", PP-ChatOCRv3 innovatively provides custom development functions for OCR models based on data fusion technology, offering stronger model fine-tuning capabilities. Millions of high-quality general OCR text recognition data are automatically integrated into vertical model training data at a specific ratio, solving the problem of weakened general text recognition capabilities caused by vertical model training in the industry. Suitable for practical scenarios in industries such as automated office, financial risk control, healthcare, education and publishing, and legal and government sectors. October 24th (Thursday) 19:00 Join our live session for an in-depth analysis of the open-source version of PP-ChatOCRv3 and the outstanding advantages of PaddleX 3.0 Beta1 in terms of accuracy and speed. Registration Link

🔥🔥 9.30, 2024, PaddleX 3.0 Beta1 open source version is officially released, providing more than 200 models that can be called with a simple Python API; achieve model full-process development based on unified commands, and open source the basic capabilities of the PP-ChatOCRv3 pipeline; support more than 100 models for high-performance inference and service-oriented deployment (iterating continuously), more than 7 key visual models for edge-deployment; more than 70 models have been adapted for the full development process of Ascend 910B, more than 15 models have been adapted for the full development process of Kunlun chips and Cambricon

🔥 6.27, 2024, PaddleX 3.0 Beta open source version is officially released, supporting the use of various mainstream hardware for pipeline and model development in a low-code manner on the local side.

🔥 3.25, 2024, PaddleX 3.0 cloud release, supporting the creation of pipelines in the AI Studio Galaxy Community in a zero-code manner.

PaddleX is dedicated to achieving pipeline-level model training, inference, and deployment. A pipeline refers to a series of predefined development processes for specific AI tasks, which includes a combination of single models (single-function modules) capable of independently completing a certain type of task.

All pipelines of PaddleX support online experience on AI Studio and local fast inference. You can quickly experience the effects of each pre-trained pipeline. If you are satisfied with the effects of the pre-trained pipeline, you can directly perform high-performance inference / serving deployment / edge deployment on the pipeline. If not satisfied, you can also Custom Development to improve the pipeline effect. For the complete pipeline development process, please refer to the PaddleX pipeline Development Tool Local Use Tutorial.

In addition, PaddleX provides developers with a full-process efficient model training and deployment tool based on a cloud-based GUI. Developers do not need code development, just need to prepare a dataset that meets the pipeline requirements to quickly start model training. For details, please refer to the tutorial "Developing Industrial-level AI Models with Zero Barrier".

| Pipeline | Online Experience | Local Inference | High-Performance Inference | Service-Oriented Deployment | Edge Deployment | Custom Development | Zero-Code Development On AI Studio |

|---|---|---|---|---|---|---|---|

| OCR | Link | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| PP-ChatOCRv3 | Link | ✅ | ✅ | ✅ | 🚧 | ✅ | ✅ |

| Table Recognition | Link | ✅ | ✅ | ✅ | 🚧 | ✅ | ✅ |

| Object Detection | Link | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| Instance Segmentation | Link | ✅ | ✅ | ✅ | 🚧 | ✅ | ✅ |

| Image Classification | Link | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| Semantic Segmentation | Link | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| Time Series Forecasting | Link | ✅ | 🚧 | ✅ | 🚧 | ✅ | ✅ |

| Time Series Anomaly Detection | Link | ✅ | 🚧 | ✅ | 🚧 | ✅ | ✅ |

| Time Series Classification | Link | ✅ | 🚧 | ✅ | 🚧 | ✅ | ✅ |

| Small Object Detection | 🚧 | ✅ | ✅ | ✅ | 🚧 | ✅ | 🚧 |

| Multi-label Image Classification | 🚧 | ✅ | ✅ | ✅ | 🚧 | ✅ | 🚧 |

| Image Anomaly Detection | 🚧 | ✅ | ✅ | ✅ | 🚧 | ✅ | 🚧 |

| Layout Parsing | 🚧 | ✅ | 🚧 | ✅ | 🚧 | ✅ | 🚧 |

| Formula Recognition | 🚧 | ✅ | 🚧 | ✅ | 🚧 | ✅ | 🚧 |

| Seal Recognition | 🚧 | ✅ | ✅ | ✅ | 🚧 | ✅ | 🚧 |

| Pedestrian Attribute Recognition | 🚧 | ✅ | 🚧 | ✅ | 🚧 | ✅ | 🚧 |

| Vehicle Attribute Recognition | 🚧 | ✅ | 🚧 | ✅ | 🚧 | ✅ | 🚧 |

| Face Recognition | 🚧 | ✅ | 🚧 | ✅ | 🚧 | ✅ | 🚧 |

❗Note: The above capabilities are implemented based on GPU/CPU. PaddleX can also perform local inference and custom development on mainstream hardware such as Kunlunxin, Ascend, Cambricon, and Haiguang. The table below details the support status of the pipelines. For specific supported model lists, please refer to the Model List (Kunlunxin XPU)/Model List (Ascend NPU)/Model List (Cambricon MLU)/Model List (Haiguang DCU). We are continuously adapting more models and promoting the implementation of high-performance and service-oriented deployment on mainstream hardware.

🔥🔥 Support for Domestic Hardware Capabilities

| Pipeline | Ascend 910B | Kunlunxin R200/R300 | Cambricon MLU370X8 | Haiguang Z100 |

|---|---|---|---|---|

| OCR | ✅ | ✅ | ✅ | 🚧 |

| Table Recognition | ✅ | 🚧 | 🚧 | 🚧 |

| Object Detection | ✅ | ✅ | ✅ | 🚧 |

| Instance Segmentation | ✅ | 🚧 | ✅ | 🚧 |

| Image Classification | ✅ | ✅ | ✅ | ✅ |

| Semantic Segmentation | ✅ | ✅ | ✅ | ✅ |

| Time Series Forecasting | ✅ | ✅ | ✅ | 🚧 |

| Time Series Anomaly Detection | ✅ | 🚧 | 🚧 | 🚧 |

| Time Series Classification | ✅ | 🚧 | 🚧 | 🚧 |

❗Before installing PaddleX, please ensure you have a basic Python environment (Note: Currently supports Python 3.8 to Python 3.10, with more Python versions being adapted). The PaddleX 3.0-beta2 version depends on PaddlePaddle version 3.0.0b2.

- Installing PaddlePaddle

# cpu

python -m pip install paddlepaddle==3.0.0b2 -i https://www.paddlepaddle.org.cn/packages/stable/cpu/

# gpu,该命令仅适用于 CUDA 版本为 11.8 的机器环境

python -m pip install paddlepaddle-gpu==3.0.0b2 -i https://www.paddlepaddle.org.cn/packages/stable/cu118/

# gpu,该命令仅适用于 CUDA 版本为 12.3 的机器环境

python -m pip install paddlepaddle-gpu==3.0.0b2 -i https://www.paddlepaddle.org.cn/packages/stable/cu123/❗For more PaddlePaddle versions, please refer to the PaddlePaddle official website.

- Installing PaddleX

pip install https://paddle-model-ecology.bj.bcebos.com/paddlex/whl/paddlex-3.0.0b1-py3-none-any.whl❗For more installation methods, refer to the PaddleX Installation Guide.

One command can quickly experience the pipeline effect, the unified CLI format is:

paddlex --pipeline [Pipeline Name] --input [Input Image] --device [Running Device]You only need to specify three parameters:

pipeline: The name of the pipelineinput: The local path or URL of the input image to be processeddevice: The GPU number used (for example,gpu:0means using the 0th GPU), you can also choose to use the CPU (cpu)

For example, using the OCR pipeline:

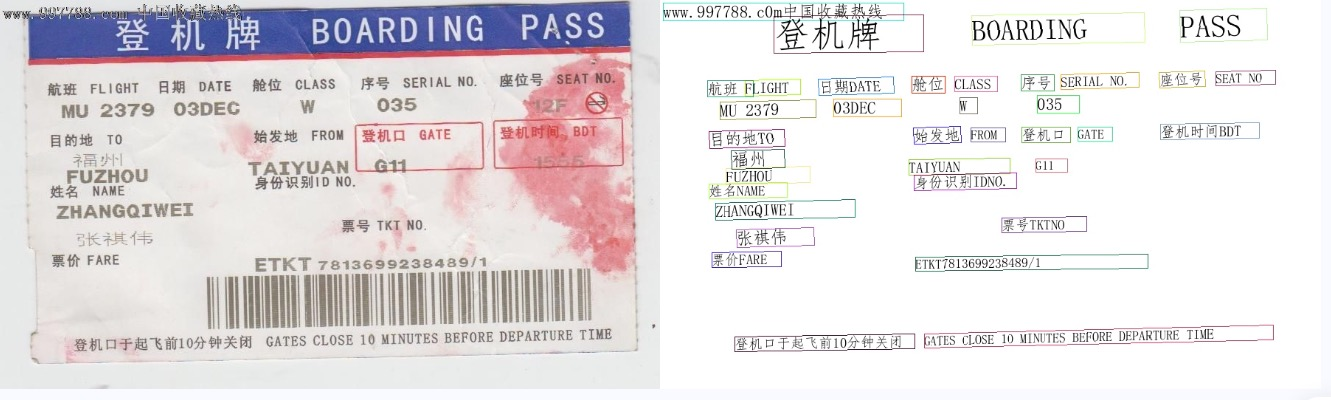

paddlex --pipeline OCR --input https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/general_ocr_002.png --device gpu:0👉 Click to view the running result

{

'input_path': '/root/.paddlex/predict_input/general_ocr_002.png',

'dt_polys': [array([[161, 27],

[353, 22],

[354, 69],

[162, 74]], dtype=int16), array([[426, 26],

[657, 21],

[657, 58],

[426, 62]], dtype=int16), array([[702, 18],

[822, 13],

[824, 57],

[704, 62]], dtype=int16), array([[341, 106],

[405, 106],

[405, 128],

[341, 128]], dtype=int16)

...],

'dt_scores': [0.758478200014338, 0.7021546472698513, 0.8536622648391111, 0.8619181462164781, 0.8321051217096188, 0.8868756173427551, 0.7982964727675609, 0.8289939036796322, 0.8289428877522524, 0.8587063317632897, 0.7786755892491615, 0.8502032769081344, 0.8703346500042997, 0.834490931790065, 0.908291103353393, 0.7614978661708064, 0.8325774055997542, 0.7843421347676149, 0.8680889482955594, 0.8788859304537682, 0.8963341277518075, 0.9364654810069546, 0.8092413027028257, 0.8503743089091863, 0.7920740420391101, 0.7592224394793805, 0.7920547400069311, 0.6641757962457888, 0.8650289477605955, 0.8079483304467047, 0.8532207681055275, 0.8913377034754717],

'rec_text': ['登机牌', 'BOARDING', 'PASS', '舱位', 'CLASS', '序号 SERIALNO.', '座位号', '日期 DATE', 'SEAT NO', '航班 FLIGHW', '035', 'MU2379', '始发地', 'FROM', '登机口', 'GATE', '登机时间BDT', '目的地TO', '福州', 'TAIYUAN', 'G11', 'FUZHOU', '身份识别IDNO', '姓名NAME', 'ZHANGQIWEI', 票号TKTNO', '张祺伟', '票价FARE', 'ETKT7813699238489/1', '登机口于起飞前10分钟关闭GATESCLOSE10MINUTESBEFOREDEPARTURETIME'],

'rec_score': [0.9985831379890442, 0.999696917533874512, 0.9985735416412354, 0.9842517971992493, 0.9383274912834167, 0.9943678975105286, 0.9419361352920532, 0.9221674799919128, 0.9555020928382874, 0.9870321154594421, 0.9664073586463928, 0.9988052248954773, 0.9979352355003357, 0.9985110759735107, 0.9943482875823975, 0.9991195797920227, 0.9936401844024658, 0.9974591135978699, 0.9743705987930298, 0.9980487823486328, 0.9874696135520935, 0.9900962710380554, 0.9952947497367859, 0.9950481653213501, 0.989926815032959, 0.9915552139282227, 0.9938777685165405, 0.997239887714386, 0.9963340759277344, 0.9936134815216064, 0.97223961353302]}The visualization result is as follows:

To use the command line for other pipelines, simply adjust the pipeline parameter to the name of the corresponding pipeline. Below are the commands for each pipeline:

👉 More CLI usage for pipelines

| Pipeline Name | Command |

|---|---|

| Image Classification | paddlex --pipeline image_classification --input https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/general_image_classification_001.jpg --device gpu:0 |

| Object Detection | paddlex --pipeline object_detection --input https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/general_object_detection_002.png --device gpu:0 |

| Instance Segmentation | paddlex --pipeline instance_segmentation --input https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/general_instance_segmentation_004.png --device gpu:0 |

| Semantic Segmentation | paddlex --pipeline semantic_segmentation --input https://paddle-model-ecology.bj.bcebos.com/paddlex/PaddleX3.0/application/semantic_segmentation/makassaridn-road_demo.png --device gpu:0 |

| Image Multi-label Classification | paddlex --pipeline multi_label_image_classification --input https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/general_image_classification_001.jpg --device gpu:0 |

| Small Object Detection | paddlex --pipeline small_object_detection --input https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/small_object_detection.jpg --device gpu:0 |

| Image Anomaly Detection | paddlex --pipeline anomaly_detection --input https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/uad_grid.png --device gpu:0 |

| Pedestrian Attribute Recognition | paddlex --pipeline pedestrian_attribute --input https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/pedestrian_attribute_002.jpg --device gpu:0 |

| Vehicle Attribute Recognition | paddlex --pipeline vehicle_attribute --input https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/vehicle_attribute_002.jpg --device gpu:0 |

| OCR | paddlex --pipeline OCR --input https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/general_ocr_002.png --device gpu:0 |

| Table Recognition | paddlex --pipeline table_recognition --input https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/table_recognition.jpg --device gpu:0 |

| Layout Parsing | paddlex --pipeline layout_parsing --input https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/demo_paper.png --device gpu:0 |

| Formula Recognition | paddlex --pipeline formula_recognition --input https://paddle-model-ecology.bj.bcebos.com/paddlex/demo_image/general_formula_recognition.png --device gpu:0 |

| Seal Recognition | paddlex --pipeline seal_recognition --input https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/seal_text_det.png --device gpu:0 |

| Time Series Forecasting | paddlex --pipeline ts_fc --input https://paddle-model-ecology.bj.bcebos.com/paddlex/ts/demo_ts/ts_fc.csv --device gpu:0 |

| Time Series Anomaly Detection | paddlex --pipeline ts_ad --input https://paddle-model-ecology.bj.bcebos.com/paddlex/ts/demo_ts/ts_ad.csv --device gpu:0 |

| Time Series Classification | paddlex --pipeline ts_cls --input https://paddle-model-ecology.bj.bcebos.com/paddlex/ts/demo_ts/ts_cls.csv --device gpu:0 |

A few lines of code can complete the quick inference of the pipeline, the unified Python script format is as follows:

from paddlex import create_pipeline

pipeline = create_pipeline(pipeline=[Pipeline Name])

output = pipeline.predict([Input Image Name])

for res in output:

res.print()

res.save_to_img("./output/")

res.save_to_json("./output/")The following steps are executed:

create_pipeline()instantiates the pipeline object- Passes the image and calls the

predictmethod of the pipeline object for inference prediction - Processes the prediction results

For other pipelines in Python scripts, just adjust the pipeline parameter of the create_pipeline() method to the corresponding name of the pipeline. Below is a list of each pipeline's corresponding parameter name and detailed usage explanation:

👉 More Python script usage for pipelines

⬇️ Installation

🔥 Pipeline Usage

-

📝 Information Extracion

-

🎥 Computer Vision

- 🖼️ Image Classification Pipeline Tutorial

- 🎯 Object Detection Pipeline Tutorial

- 📋 Instance Segmentation Pipeline Tutorial

- 🗣️ Semantic Segmentation Pipeline Tutorial

- 🏷️ Multi-label Image Classification Pipeline Tutorial

- 🔍 Small Object Detection Pipeline Tutorial

- 🖼️ Image Anomaly Detection Pipeline Tutorial

- 🖼️ Image Recognition Pipeline Tutorial

- 🆔 Face Recognition Pipeline Tutorial

- 🚗 Vehicle Attribute Recognition Pipeline Tutorial

- 🚶♀️ Pedestrian Attribute Recognition Pipeline Tutorial

-

🔧 Related Instructions

⚙️ Module Usage

-

🔍 OCR

- 📝 Text Detection Module Tutorial

- 🔖 Seal Text Detection Module Tutorial

- 🔠 Text Recognition Module Tutorial

- 🗺️ Layout Parsing Module Tutorial

- 📊 Table Structure Recognition Module Tutorial

- 📄 Document Image Orientation Classification Tutorial

- 🔧 Document Image Unwarp Module Tutorial

- 📐 Formula Recognition Module Tutorial

-

🏞️ Image Features

🏗️ Pipeline Deployment

🖥️ Multi-Hardware Usage

📝 Tutorials & Examples

-

📑 PP-ChatOCRv3 Model Line —— Paper Document Information Extract Tutorial

-

📑 PP-ChatOCRv3 Model Line —— Seal Information Extract Tutorial

-

🖼️ General Image Classification Model Line —— Garbage Classification Tutorial

-

🧩 General Instance Segmentation Model Line —— Remote Sensing Image Instance Segmentation Tutorial

-

👥 General Object Detection Model Line —— Pedestrian Fall Detection Tutorial

-

👗 General Object Detection Model Line —— Fashion Element Detection Tutorial

-

🚗 General OCR Model Line —— License Plate Recognition Tutorial

-

✍️ General OCR Model Line —— Handwritten Chinese Character Recognition Tutorial

-

🗣️ General Semantic Segmentation Model Line —— Road Line Segmentation Tutorial

-

🛠️ Time Series Anomaly Detection Model Line —— Equipment Anomaly Detection Application Tutorial

For answers to some common questions about our project, please refer to the FAQ. If your question has not been answered, please feel free to raise it in Issues.

We warmly welcome and encourage community members to raise questions, share ideas, and feedback in the Discussions section. Whether you want to report a bug, discuss a feature request, seek help, or just want to keep up with the latest project news, this is a great platform.

The release of this project is licensed under the Apache 2.0 license.