
add DeepFM knowledge #734

base: master


Changes from 11 commits: d8a7040, 51a0d28, d82ae39, 3bf764b, 45586d6, 3da5837, 4b78bbd, 2a6d4de, d972a60, 8077d93, 2c602b7, ad3e385, 7fadb2d, 7015a3c, 50d7ec8, ef21ced


@@ -0,0 +1,59 @@

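DeepFM Model

## Model Overview

CTR (click-through rate) prediction is a core technique in modern recommender systems; its goal is to estimate the probability that a user clicks a recommended item. DeepFM consists of two parts, an FM component and a DNN component. The FM component captures low-order feature interactions (individual features and pairwise feature combinations), while the DNN captures high-order interactions among more than two features. Unlike Wide&Deep, whose wide part depends on manually engineered cross features, DeepFM needs no manual feature engineering. Because its input consists only of raw features, and the FM and DNN components share the same input embeddings, DeepFM trains efficiently.

### 1) DeepFM Model

To exploit both low-order and high-order features, DeepFM combines an FM component and a DNN component that share the same input. For feature $i$, the scalar $w_i$ is its first-order weight, and its interactions with other features are represented by a latent vector $V_i$. $V_i$ is fed into the FM component to obtain second-order feature representations, and into the DNN component to learn high-order features.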

$$
\hat{y} = \mathrm{sigmoid}(y_{FM} + y_{DNN})
$$
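The output formula can be sketched in a few lines of Python. This is a minimal illustration, assuming `y_fm` and `y_dnn` are already-computed scalar outputs for one sample. The function names `sigmoid` and `deepfm_output` are hypothetical and do not come from any library.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, rewrite as e^z / (1 + e^z) so exp() cannot overflow.
    e = math.exp(z)
    return e / (1.0 + e)


def deepfm_output(y_fm: float, y_dnn: float) -> float:
    """Click probability y_hat = sigmoid(y_FM + y_DNN)."""
    return sigmoid(y_fm + y_dnn)


print(deepfm_output(0.0, 0.0))  # 0.5
print(deepfm_output(1.2, -0.4))
```

The two parts are summed before a single sigmoid, so they are trained jointly against one loss rather than as two separate models.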

|

|

||

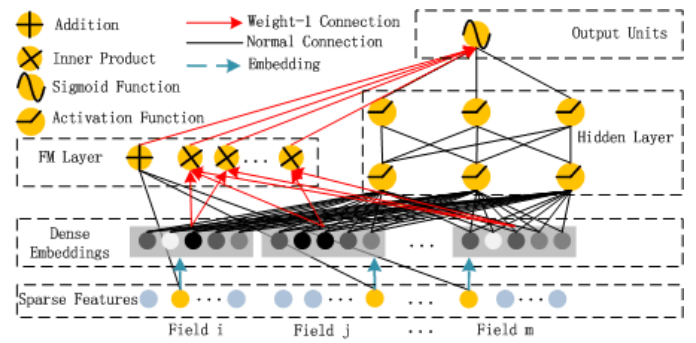

| DeepFM模型结构如下图所示,完成对稀疏特征的嵌入后,由FM层和DNN层共享输入向量,经前向反馈后输出。 | ||

|

|

||

|  | ||
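The shared-input idea can be sketched as follows. This is a minimal illustration, not the PR's implementation. It assumes:

* each sample holds one active `(feature_id, value)` pair per field;
* parameters live in plain dictionaries;
* `shared_embedding`, `w_table`, and `v_table` are hypothetical names.

The point is that the same latent vectors $V_i$ feed both the FM second-order term and the DNN input.

```python
from typing import Dict, List, Tuple

# One (feature_id, value) pair per field; value is 1.0 for a categorical feature.
Sample = List[Tuple[int, float]]


def shared_embedding(
    sample: Sample,
    w_table: Dict[int, float],
    v_table: Dict[int, List[float]],
) -> Tuple[List[float], List[List[float]], List[float]]:
    """Look up the parameters that the FM and DNN components both consume."""
    # First-order contributions w_i * x_i, used by the FM part only.
    first_order = [w_table[i] * x for i, x in sample]
    # Field embeddings e = V_i * x_i, shared by the FM second-order term and the DNN.
    field_embeddings = [[v * x for v in v_table[i]] for i, x in sample]
    # DNN input a^(0): the field embeddings concatenated into one dense vector.
    dnn_input = [value for e in field_embeddings for value in e]
    return first_order, field_embeddings, dnn_input


# Hypothetical tables: 3 active features from 3 fields, latent size k = 2.
w_table = {0: 0.1, 5: -0.2, 9: 0.05}
v_table = {0: [0.1, 0.2], 5: [0.3, -0.1], 9: [0.0, 0.4]}
sample = [(0, 1.0), (5, 1.0), (9, 0.5)]  # the last field carries a continuous value

first_order, field_embeddings, dnn_input = shared_embedding(sample, w_table, v_table)
print(len(dnn_input))  # 6: three fields times k = 2
```

For a categorical feature the value is 1.0, so its field embedding is just $V_i$. Scaling by the value is one common way, assumed here, to embed a continuous field.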

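### 2) FM

Besides modeling first-order features, FM efficiently obtains second-order feature representations through dot products of latent vectors. This works even when a feature combination is extremely sparse in the dataset or never appears at all. The reason is that the weight of each pair is the dot product of two latent vectors, and each vector is learned from every other pair its feature takes part in. This is the main strength of FM.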

Review comment: Describe FM in more detail; right now there is only a formula. Borrow from this source and give a similar application example: https://www.biaodianfu.com/ctr-fm-ffm-deepfm.html

$$
y_{FM} = \langle w, x \rangle + \sum_{j_1=1}^{d}\sum_{j_2=j_1+1}^{d} \langle V_{j_1}, V_{j_2} \rangle \, x_{j_1} x_{j_2}
$$

Here $x$ is the $d$-dimensional input feature vector, $w$ holds the first-order weights, and $V_j$ is the latent vector of feature $j$.

Review comment: The introduction is rather brief; the principle of FM should be explained in plain, accessible terms. I looked up some material you can refer to:
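The double sum above visits every feature pair, which costs $O(kd^2)$ when each $V_j$ has $k$ components. A standard identity for FM rewrites the pairwise term in $O(kd)$. Here $v_{j,f}$, notation introduced only for this derivation, denotes the $f$-th component of $V_j$:

$$
\begin{aligned}
\sum_{j_1=1}^{d}\sum_{j_2=j_1+1}^{d} \langle V_{j_1}, V_{j_2} \rangle x_{j_1} x_{j_2}
&= \frac{1}{2}\left(\sum_{j_1=1}^{d}\sum_{j_2=1}^{d} \langle V_{j_1}, V_{j_2} \rangle x_{j_1} x_{j_2} - \sum_{j=1}^{d} \langle V_j, V_j \rangle x_j^2\right) \\
&= \frac{1}{2}\sum_{f=1}^{k}\left(\sum_{j_1=1}^{d}\sum_{j_2=1}^{d} v_{j_1,f}\, v_{j_2,f}\, x_{j_1} x_{j_2} - \sum_{j=1}^{d} v_{j,f}^2 x_j^2\right) \\
&= \frac{1}{2}\sum_{f=1}^{k}\left(\left(\sum_{j=1}^{d} v_{j,f} x_j\right)^2 - \sum_{j=1}^{d} v_{j,f}^2 x_j^2\right)
\end{aligned}
$$

The first step uses the symmetry of $\langle V_{j_1}, V_{j_2} \rangle$. The full $d \times d$ sum counts every unordered pair twice and also includes the diagonal $j_1 = j_2$, which is subtracted. With sparse inputs, only the nonzero $x_j$ contribute to either sum.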

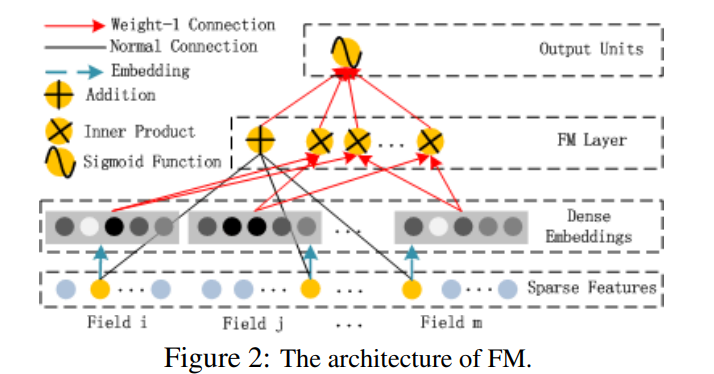

| 单独的FM层结构如下图所示: | ||

|

|

||

|  | ||

|

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. 目前FM的部分描述比较简单,考虑补充FM的学习过程,FM的优缺点等等 |

||

|

|

||
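A direct sketch of $y_{FM}$ in plain Python. It places the naive double sum next to the standard $O(kd)$ sum-of-squares form of the pairwise term, so the two can be checked against each other. It assumes a dense input vector `x` of length $d$ and a $d \times k$ list of latent vectors `V`; `fm_naive` and `fm_fast` are hypothetical names.

```python
from typing import List


def fm_naive(w: List[float], V: List[List[float]], x: List[float]) -> float:
    """y_FM via the double sum over feature pairs: O(k * d^2)."""
    d = len(x)
    linear = sum(w_j * x_j for w_j, x_j in zip(w, x))  # <w, x>
    pairwise = 0.0
    for j1 in range(d):
        for j2 in range(j1 + 1, d):
            dot = sum(a * b for a, b in zip(V[j1], V[j2]))  # <V_j1, V_j2>
            pairwise += dot * x[j1] * x[j2]
    return linear + pairwise


def fm_fast(w: List[float], V: List[List[float]], x: List[float]) -> float:
    """Same value via the sum-of-squares rewrite: O(k * d)."""
    linear = sum(w_j * x_j for w_j, x_j in zip(w, x))
    pairwise = 0.0
    for f in range(len(V[0])):
        s = sum(V[j][f] * x[j] for j in range(len(x)))  # sum_j v_{j,f} x_j
        s_sq = sum((V[j][f] * x[j]) ** 2 for j in range(len(x)))  # sum_j v_{j,f}^2 x_j^2
        pairwise += 0.5 * (s * s - s_sq)
    return linear + pairwise


# Hypothetical parameters: d = 4 features, k = 2; features 0, 2, 3 are active.
w = [0.1, -0.2, 0.3, 0.0]
V = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.4], [0.5, 0.5]]
x = [1.0, 0.0, 1.0, 1.0]
print(fm_naive(w, V, x), fm_fast(w, V, x))  # both approximately 0.83
```

Each pair's weight is $\langle V_{j_1}, V_{j_2} \rangle$ rather than a free parameter. So a pair that never co-occurs in training still receives a score built from vectors learned through other pairs.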

### 3) DNN

Review comment: Explain Wide&Deep in more detail; not everyone is familiar with it.

Review comment: Consider explaining why a DNN was chosen to combine with FM. Can an RNN be combined with FM? Analyze the benefits of combining a DNN with FM.

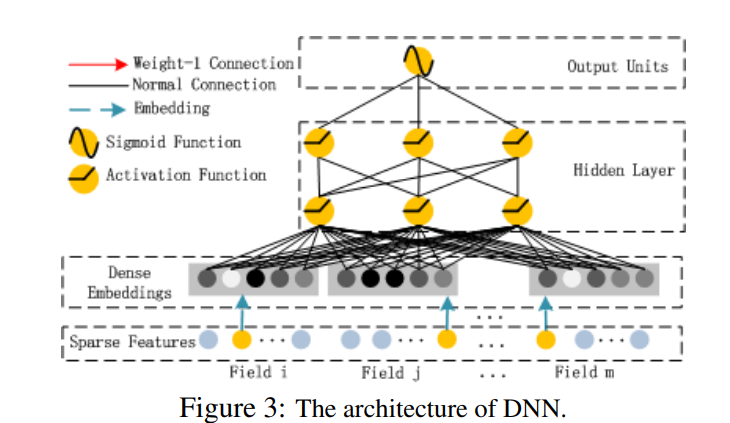

| 该部分和Wide&Deep模型类似,是简单的前馈网络。在输入特征部分,由于原始特征向量多是高纬度,高度稀疏,连续和类别混合的分域特征,因此将原始的稀疏表示特征映射为稠密的特征向量。 | ||

|

|

||

| 假设子网络的输出层为: | ||

| $$ | ||

| a^{(0)}=[e1,e2,e3,...en] | ||

| $$ | ||

| DNN网络第l层表示为: | ||

| $$ | ||

| a^{(l+1)}=\sigma{(W^{(l)}a^{(l)}+b^{(l)})} | ||

| $$ | ||

| 再假设有H个隐藏层,DNN部分的预测输出可表示为: | ||

| $$ | ||

| y_{DNN}= \sigma{(W^{|H|+1}\cdot a^H + b^{|H|+1})} | ||

| $$ | ||

| DNN深度神经网络层结构如下图所示: | ||

|

|

||

|  | ||

|

|

||
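A minimal sketch of the DNN forward pass in plain Python. It makes the following assumptions, none of which come from the PR:

* `dense`, `relu`, and `dnn_forward` are hypothetical names, and the weights are made-up values.
* ReLU stands in for the hidden-layer activation $\sigma$.
* The output unit is left linear, so the final sigmoid in $\hat{y}$ is the only squashing step. The formula above writes a generic $\sigma$ there as well.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # shape (out_dim, in_dim)


def dense(W: Matrix, a: Vector, b: Vector) -> Vector:
    """Affine map W a + b."""
    return [sum(w_ij * a_j for w_ij, a_j in zip(row, a)) + b_i for row, b_i in zip(W, b)]


def relu(v: Vector) -> Vector:
    return [max(0.0, z) for z in v]


def dnn_forward(
    a0: Vector,
    hidden: List[Tuple[Matrix, Vector]],
    out_W: Matrix,
    out_b: Vector,
) -> float:
    """Apply a^(l+1) = relu(W^(l) a^(l) + b^(l)) per hidden layer, then one linear output unit."""
    a = a0
    for W, b in hidden:
        a = relu(dense(W, a, b))
    return dense(out_W, a, out_b)[0]  # scalar y_DNN


# Hypothetical weights: a^(0) is two fields with k = 2 embeddings, one hidden layer of width 2.
a0 = [0.1, 0.2, 0.3, -0.1]
hidden = [([[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 1.0]], [0.0, 0.0])]
y_dnn = dnn_forward(a0, hidden, out_W=[[1.0, -2.0]], out_b=[0.5])
print(y_dnn)  # approximately 0.4
```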

### 4) Loss and AUC Computation

* The prediction is obtained by summing the first-order FM term, the second-order FM term, and the DNN output, then applying the sigmoid activation. To get, for each sample, the probabilities of belonging to the positive and the negative class, we combine the prediction with 1 - predict into predict_2d, which is then used to compute AUC.

Review comment: Add the AUC and the DeepFM loss function.

* The loss of each sample is the log loss (negative log-likelihood), and the label is cast to float before being fed in.
* The batch loss avg_cost is the sum of the per-sample losses.

Review comment: When explaining the theory, there is no need to describe the concrete implementation; just explain the principle clearly. Delete these sentences.

* We also compute the AUC of the predictions.
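The loss and metric described above can be sketched in plain Python. This is a minimal illustration, not the PR's code:

* the function names are hypothetical;
* the clipping constant `eps` is an assumed safeguard;
* AUC uses its pairwise-ranking definition rather than any framework's AUC operator;
* the `[1 - p, p]` column order of `predict_2d` is likewise an assumption.

```python
import math
from typing import Sequence


def log_loss(label: float, p: float, eps: float = 1e-7) -> float:
    """Per-sample negative log-likelihood of a binary label under probability p."""
    p = min(max(p, eps), 1.0 - eps)  # clip so log() never sees 0
    return -(label * math.log(p) + (1.0 - label) * math.log(1.0 - p))


def batch_loss(labels: Sequence[int], preds: Sequence[float]) -> float:
    """Sum of per-sample losses over a batch; labels are cast to float first."""
    return sum(log_loss(float(y), p) for y, p in zip(labels, preds))


def auc(labels: Sequence[int], pos_scores: Sequence[float]) -> float:
    """Probability that a random positive outscores a random negative; ties count 0.5."""
    pos = [s for y, s in zip(labels, pos_scores) if y == 1]
    neg = [s for y, s in zip(labels, pos_scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            wins += 1.0 if sp > sn else (0.5 if sp == sn else 0.0)
    return wins / (len(pos) * len(neg))


labels = [1, 0, 1, 0]
preds = [0.9, 0.3, 0.6, 0.7]  # hypothetical sigmoid outputs y_hat
# Two-column form [1 - p, p]; the (negative, positive) column order is an assumption.
predict_2d = [[1.0 - p, p] for p in preds]
print(batch_loss(labels, preds))
print(auc(labels, [row[1] for row in predict_2d]))  # 0.75
```

The pairwise loop is O(P·N) in the numbers of positives and negatives. For large evaluation sets, a sort-based (rank-statistic) computation gives the same value more cheaply.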

Review comment: Explain what low-order and high-order mean.