-

Notifications

You must be signed in to change notification settings - Fork 15

Drafting a new Resource module layout & build #16

base: master

Are you sure you want to change the base?

Conversation

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Reviewable status: 0 of 45 files reviewed, 21 unresolved discussions

.build.ps1, line 1 at r1 (raw file):

[CmdletBinding()]

This file is the point of entry of the automation.

It allows for calling all type of 'workflow' (group of tasks) used during testing: restore dependencies, build, test, or doing it all at once.

as an example, you would use

C:\DscResource.Template\ PS > .build.ps1 -ResolveDependency -Tasks buildTo restore the dependencies (RequiredModules.psd1) and compiling the module (going into the output folder, more on that later).

The dependencies are saved into the output\RequiredModules folder, and that path is pre-pended to the $Env:PSModulePath, so that the required modules don't pollute or conflict with your environment (I don't want to be able to pin versions per project). That said, it is also possible to override this behaviour and install in either scope.

The rest of this file would probably not change from a project to another, instead the Build.psd1 configuration might be changed.

Build.psd1, line 2 at r1 (raw file):

@{ # Pending PR on ModuleBuilder

Waiting on my PR to modulebuilder to be merged so that all configuration to the project can be done here.

I'll explain the principles here.

This build configuration allows to configure the Build behaviour and preferences.

Build.psd1, line 7 at r1 (raw file):

Suffix = "./suffix.ps1" BuildWorkflow = @{

You can define 'custom' workflow for Invoke-Build by creating a list a task to run for a key.

for instance, if I run .build.ps1 -tasks build it will run the Clean, Set_Build_Environment_Variables and Build_Module_ModuleBuilder tasks.

Build.psd1, line 28 at r1 (raw file):

) }

Defines parameters to use when restoring dependencies. This allows you to bootstrap based on your corporate configuration. I should probably extract some of those to be defined (overridden) in another file, not checked in source control.

RequiredModules.psd1, line 2 at r1 (raw file):

@{ # Set up a mini virtual environment...

This is using Warren's PSDepend module.

I also have a work in progress PR to support pinning of versions such as -gt 2.0 -and -lt 3.0

RequiredModules.psd1, line 9 at r1 (raw file):

} }

These are the Modules required for running the build

RequiredModules.psd1, line 19 at r1 (raw file):

#required for DSC authoring xDscResourceDesigner = 'latest' 'PowerShell/DscResource.Tests' = @{

This is an example of using PSDepend to pull and extract the DscResource.Tests.

In this case, it's using a git repository, but we could do the same with a PowerShell module if (or when) DscResource.Tests was released.

.build/tasks/Build-Module.ModuleBuilder.build.ps1, line 1 at r1 (raw file):

Param (

This is just a file with Invoke-Build Build Tasks.

There are many features to Invoke-Build which makes the tasks easier to maintain.

Eventually, the tasks should probably be released along with a Module (maybe xDscResourceDesigner, along with the template and import the tasks from that module to not leave them with the ResourceModule project).

Specifically, this is using Build-Module from @Jaykul's ModuleBuilder module.

.build/tasks/Build-Module.ModuleBuilder.build.ps1, line 18 at r1 (raw file):

if (Get-Command gitversion) { Write-Verbose "Using ModuleVersion as resolved by gitversion" (gitversion | ConvertFrom-Json).InformationalVersion

We should definitely use SemVer for the modules going forward, also enabling pre-releases for people to try out, and having quick pre-releases just after a merge.

GitVersion is a great tool to make that happening.

.build/tasks/Build-Module.ModuleBuilder.build.ps1, line 22 at r1 (raw file):

else { Write-Verbose "Command gitversion not found, defaulting to 0.0.1" '0.0.1'

this probably should change

.vscode/settings.json, line 14 at r1 (raw file):

"files.insertFinalNewline": true, "powershell.scriptAnalysis.settingsPath": ".vscode\\analyzersettings.psd1", "cSpell.language": "en",

I've added cSpell to help users using vscode + cSpell add-on. (helps me a lot)

Source/Build.psd1, line 1 at r1 (raw file):

@{

This is only required for now until my PR to ModuleBuilder is accepted (soon, I'm sure).

Source/DscResource.Template.psm1, line 1 at r1 (raw file):

#Get public and private function definition files.

This file is only an example of what's possible when loading the module in development.

In theory, you should always build the module first and then import it. That file is overridden completely during the build.

Source/suffix.ps1, line 2 at r1 (raw file):

# Suffix to add at the end of the PSM1 $script:localizedData = Get-LocalizedData

This is an example to add a command at the end of your built module not within a function.

In that case it's for loading the localized Data

Source/DSCResources/MSFT_Folder/MSFT_Folder.psm1, line 9 at r1 (raw file):

} $script:MasterModule = Import-Module -Name (Join-Path $ModuleBasePath "$ModuleName.psd1") -PassThru -force

A key difference is that I use the 'hosting' or 'master' module here, because using sort-of-nested-module is not very PowerShell-y, and leaves too much code in the DSC Resources.

I think the functions we develop for DSC Resources should be available in interactive sessions for configuration (and testing), and only the logic enabling idempotency should live in the Dsc Resource's PSM1.

DSC is just a model (interface) used to manage resources with imperative code (functions).

Source/DSCResources/MSFT_Folder/MSFT_Folder.psm1, line 240 at r1 (raw file):

) $testTargetResourceResult = &$Script:TestDscParameterState `

I show here how to call Test-DscParameterState while leaving it as a Private function in the host module.

However, I'm not a fan of Test-DscParameterState because I think it obfuscate a bit too much the test of what we consider 'compliant'...

At the same time I agree that avoids re-writing code every time, so I left it.

Source/private/Get-LocalizedData.ps1, line 11 at r1 (raw file):

each DscResource odule can load its specific Language file, along with one for the parent module. #> function Get-LocalizedData

Because there is now much less code in the DSC Resources, I think there should not be a file per Resource (most of the work & verbosity will be done in the module, that we may need to improve at some point).

That said, this implementation will load the .strings.psd1 localized data based on the caller.

If you call Get-LocalizedData from a Resource module's psm1, it will load the psd1 of similar baseName (MSFT_Folder.psm1 --> ModuleBase\en\MSFT_Folder.strings.psd1).

So in each localization folder you can have one language file for your module, and one per DSC Resource, but they would be in the same language folder of your module.

It also defaults to en-US, and should work without parameters thanks to its default.

Source/private/Get-LocalizedData.ps1, line 59 at r1 (raw file):

else { # For Development purposes

Allows for it to work when the function is called from a .ps1 when the module is in development and not yet compiled. It's a nice to have, not necessary IMO

Source/public/Get-Folder.ps1, line 1 at r1 (raw file):

<#

The Get-Folder, Set-Folder, Test-Folder are standard PowerShell advanced function, that anyone should be able to use in scripts or with the CLI.

The DSC Resources only proxy the DSC inputs to those commands.

Source/DscResource.Template.psd1, line 1 at r1 (raw file):

@{

The source files have been moved to a Source subfolder (that could also be named src or take the Module's name) to highlight the code of the project, and separate from the build-specific files.

It helps for clarity.

Source/DscResource.Template.psd1, line 9 at r1 (raw file):

# PSM1 to load with module RootModule = "DscResource.Template.psm1"

You see that the RootModule is now needed, because I've changed the source files to be a single function per file, currently separated by Private & Public to define whether they are exported or not.

The build can be configured for more advanced configuration if needed, but that's subject for another time.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

My first impression, it is really a lot of code per repo just to test and/or build.

This need a lot more detailed practical review on how it behave when developing a new resource and running unit tests locally for a specific resource (not all) or just unit testing one helper function locally.

Have you run this in AppVeyor so it works there too? Or are you planning at looking at that later?

How is this gonna practically work when a resource module is released? Will the build script concatenate all the split functions?

Reviewed 17 of 45 files at r1.

Reviewable status: 17 of 45 files reviewed, 22 unresolved discussions (waiting on @gaelcolas)

.vscode/settings.json, line 14 at r1 (raw file):

Previously, gaelcolas (Gael) wrote…

I've added cSpell to help users using vscode + cSpell add-on. (helps me a lot)

Suggest using cSpell.json instead for a repo. VS Code extension Code Spell Checker will pick it up and use it, and also if opt-in to the spell checking test that will use the same file. Example https://github.com/PowerShell/DscResource.Tests/blob/dev/.vscode/cSpell.json

Source/DSCResources/MSFT_Folder/MSFT_Folder.psm1, line 11 at r1 (raw file):

$script:MasterModule = Import-Module -Name (Join-Path $ModuleBasePath "$ModuleName.psd1") -PassThru -force # Calling the MasterModule's private function Get-LocalizedData to load data based on current UI culture $script:localizedData = &$script:MasterModule { Get-LocalizedData }

I think the is gonna come of where complex to new contributors. Why do we need this? Why not import the modules and call the helper functions normally?

Source/public/Get-Folder.ps1, line 1 at r1 (raw file):

Previously, gaelcolas (Gael) wrote…

The Get-Folder, Set-Folder, Test-Folder are standard PowerShell advanced function, that anyone should be able to use in scripts or with the CLI.

The DSC Resources only proxy the DSC inputs to those commands.

This will add another level to the resource and breaking changes that maybe we should not have? Now we also need to take in account if users are using what was previously a helper function as a cmdlet in other production code.

|

To use this we have to refactor the test framework and change the release process. Is you suggestion that all existing modules in the DSC Resource Kit should change to this model? |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

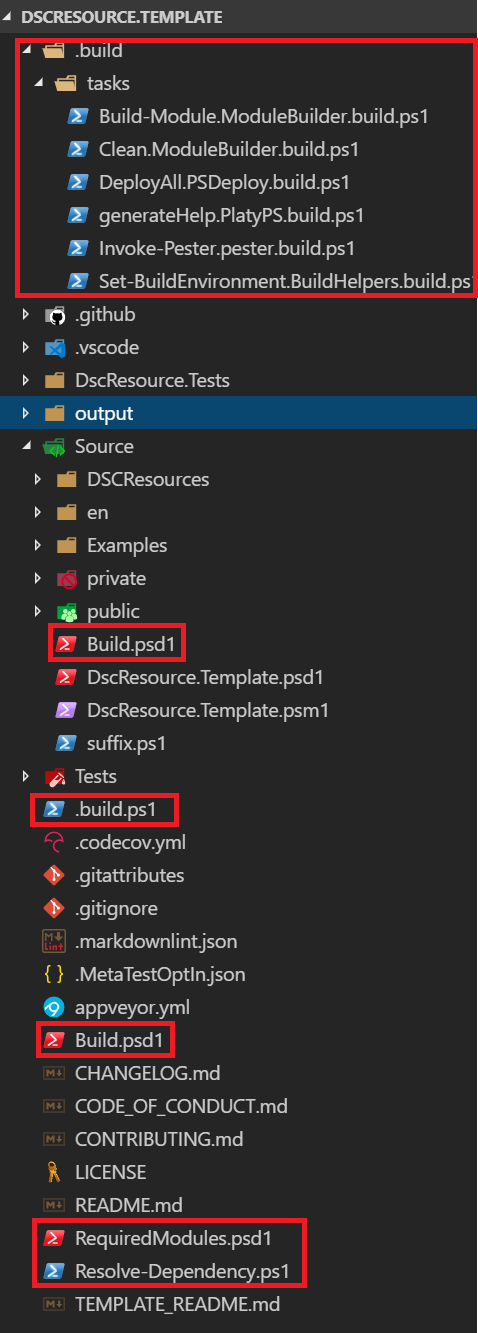

There's actually not much more code in each repo, see below in red.

Also, As I've commented (but I understand that it's not the best way to present the change), the build tasks would most likely be embedded in a PowerShell module also used as a dependency (xDscResourceDesigner would be a good candidate).

The Resolve-Dependency will be replaced by a script in the gallery, that I was waiting for to be released. There are plans for this to also go in PackageManagement, so it should soon be even simpler.

With this we could (not sure we should, but we can debate that later), remove the need for PSDepend.

So that would leave us with .build.ps1, build.psd1 (soon de-duplicated), RequiredModules.psd1 as extra file, and the rest being 'dynamically loaded' modules (if not available already on the system).

Then it's good to remind that those Dynamically loaded modules are somewhat similar to what's happening with the test harness already. It's just a bit more declarative in the PSD1, and done upfront.

It also enable to have a model that works with composites, where you can declare the required Modules for the composite.

This need a lot more detailed practical review on how it behave when developing a new resource and running unit tests locally for a specific resource (not all) or just unit testing one helper function locally.

Agreed, it needs more examples and explanation, but I haven't had time yet to adapt the tests (next step).

In short, it is very similar to what's currently done, where you can test the file, or test the built module (best practice).

As it's now using InvokeBuild as a task runner, you can easily create 'shortcut' for specific jobs you want.

Currently you have .build.ps1 -tasks build or .build.ps1 -tasks test or just .build.ps1 for doing both...

It's also easy to add tasks because they are decoupled and can be loaded from modules, so we can extend with, say, Test-Kitchen specific tasks.

Have you run this in AppVeyor so it works there too? Or are you planning at looking at that later?

This model, and most of the code, is coming from my SampleModule project that I've been working on and perfecting for a couple of years. You can see this as a v2 (or probably v3), but it's been working for me and others in AppVeyor for a while, we're just extending it to DSC resources & configurations. It has the benefit to be working on any CI tool (I've used it in Jenkins, Team City, AppVeyor, VSTS), behind firewalls and proxy with private galleries (by changing the psd1), and be extensible (thanks to InvokeBuild).

It's also what's powersing my DSC infra pipeline, as demo'ed in the AutomatedLab DscWorkshop.

For this specific draft, I haven't yet tested it in appveyor (need to sort out the testing first), but it won't be a problem as soon as it runs locally.

How is this gonna practically work when a resource module is released? Will the build script concatenate all the split functions?

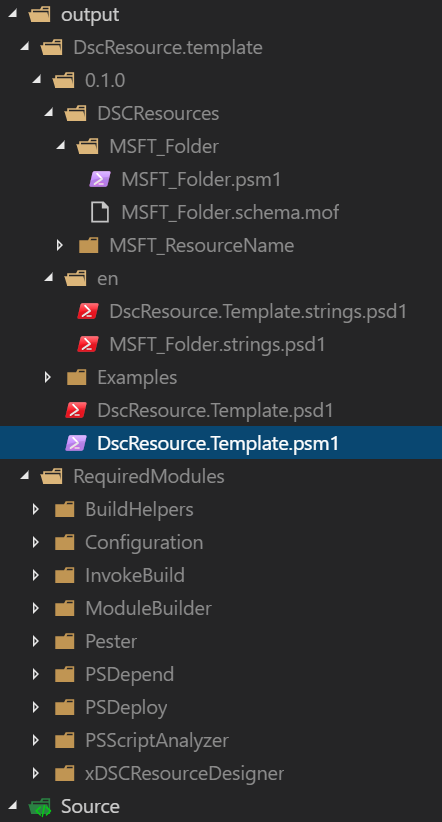

Absolutely, here's the result of the built module, created in the output folder:

you can see that the module only have 1 PSM1 left, and the other usual folders copied.

In that screenshot, you can also see the RequiredModules that have been pulled for that repository. The output is not version controlled in git.

To use this we have to refactor the test framework and change the release process. Is you suggestion that all existing modules in the DSC Resource Kit should change to this model?

Eventually, not necessarily overnight. Obviously that's a decent effort (but to be fair, once we get the right template, moving 1 repository is probably between 2 hours (small module) to 2 days. That's still up to 120 days of work, which would probably take years.

For now, I'm focusing on what it should be looking like, making it closer to PS Module Development best practices, and adding a bit of automation as the icing on the cake.

Given that:

- there's an interest in moving off AppVeyor towards AzDO

- there's a great need in repeatable, real-life, integration testing

- need to have a process that can help people getting into this and be successful quickly

We're bound to refactor what we've done so far, decouple from AppVeyor, create a great testing experience and practices.

Not to mention what's coming next, it's good to have a flexible 'framework' that can be directly adapted for it.

We can talk later about how to approach the change, but I want us to agree on a North star to aim for.

Most likely we'll need to double down on Integration testing first, introducing TK, and then only change the layout.

The way to remove code from the resources into functions, putting 1 function per file, hand avoiding multi-nested modules makes the Unit testing easier (encourages atomic functions).

Reviewable status: 17 of 45 files reviewed, 22 unresolved discussions (waiting on @johlju)

.vscode/settings.json, line 14 at r1 (raw file):

Previously, johlju (Johan Ljunggren) wrote…

Suggest using cSpell.json instead for a repo. VS Code extension Code Spell Checker will pick it up and use it, and also if opt-in to the spell checking test that will use the same file. Example https://github.com/PowerShell/DscResource.Tests/blob/dev/.vscode/cSpell.json

Yep, totally right. I did it somewhere and forgot to do it there too.

Source/DSCResources/MSFT_Folder/MSFT_Folder.psm1, line 11 at r1 (raw file):

Previously, johlju (Johan Ljunggren) wrote…

I think the is gonna come of where complex to new contributors. Why do we need this? Why not import the modules and call the helper functions normally?

if you just talk about invoking Get-LocalizedData in the MasterModule context, it's not something they will need to change, or care much about. We can discuss the specifics though.

The idea is avoiding having many large PSM1 to work with. Single, atomic, ps1 for functions. Simple PSM1 for DSC Resources 'proxying' to functions, are easier to maintain, test, and apprehend. It's also easier to then migrate to Class Based DSC resources.

Source/public/Get-Folder.ps1, line 1 at r1 (raw file):

I don't understand the 'another level'. It actually simplifies the layout by 'flattening' multiple PSM1s with easy to organise functions (similar to what's done in C#, Java and many many more languages.

Need to take into account if users are using what was previously a helper function as a cmdlet

Yes, and it's actually a desired outcome. It forces managing interface, which is a key element of managing complexity and writing testable code. It also reduces the chances for breaking changes (paradoxically!) at it encourages better abstraction and decoupling. It also helps with greater testing (people will use those even if they don't use DSC resources), greater contributions, and better transition to Imperative to DSC automation.

If you want a feel for it, look at cChoco & my Chocolatey module... (that I should bring to HQRM as well and suggest to DscResources)

|

Hey guys, sorry I haven't had a chance to engage on this, but I think we also need to consider auto-documentation resources in this. IMHO we should he encouraging the use of this model because it reduces the amount of work required to maintain and reduces risk of documentation not being the source of truth. This would go well with PlatyPs. I also haven't seen any mention of helper modules specific to the resource. The folder doesnt appear in the template. |

|

Also, shouldn't Requiredmodules folder be in the .gitignore? |

|

One final thought on this we need to carefully consider: we are introducing significant amounts of technical debt to every repository when ever we change the template/structure. Maintainers have a choice:

IMHO we are going to end up having large divergence in approach to these which is actually going to make things more difficult for maintaineemrs and contributors in the long run. I'm not saying don't do this or that it is not a good idea, but we haven't even got our major modules (including xPSDesiredStateConfiguration, xActiveDirectory,xWebAbmimistration etc) all using an existing template standard. I believe like any change it should be made incrementally. We also would need to provide a massive amount of effort into the other repos to try and get them up to speed. But again, I support and agree wity improvements in this area, I just know without the right level of investment and support from all maintainers we will just have another divergent standard that will require additional code in DSCResource.Tests to maintain. Sorry to be mr. Negative today- it's just that I've been through several of the major structural changes to the templates and it usually takes me a solid year to catch up on the tech debt it generates. Many other maintainers dont bother. |

|

I agree with @PlagueHO on the technical debt part, that was my thought with if this should be used in all repos. I’m not seeing that happening in the short term, since there are no contributors (well, in 2,5 years I have seen 1, no counting maintainers) interested in moving a resource module to a different model. That’s leaves the maintainers that are already busy reviewing PRs and fixing already existing technical debt on their free time. Personally I’m not seeing me moving those resources I maintain over to this any time soon. I have still not yet moved to the auto-documentation, but that is one priority to simplify documentation for contributors and myself. In the short term, I see the need for a way to integration test on multiple nodes (having and AD to test clustering, and setting up a AD on multiple nodes, SQL Server multiple modes, etc.). For example test-kitchen, or Azure DevOps (automatically setting up a resource group while testing). As a maintainer I want a way for integration test the xActiveDirectory resources when a contributor sends in a PR. I don’t mind running a test suite in azure DevOps or Tast-Kitchen if it is automatic. A user that have the need to use private repositories etc. probably have the knowledge of manually download the prerequisite from there approved local resources. I’m only seeing the need for documentation what is needed where. You did a very nice job about this, but not sure this is gonna be used in the DSC resource Kit unless someone can put in some serious time implementing this in the repos. I don’t think this template will help get contributors to the DSC resource Kit. It has always for me been more of a style guideline of how the project structure should look like. If this template changes to much at once, it cannot be used like that anymore. |

|

Btw I agreed with all that @PlagueHO said in his comment, not just the technical debt part 😃 |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

My day job is technical leadership in adoption of Continuous Delivery, so I'm always looking to break changes down into smaller pieces to reduce impact and therefore risk: So, can I suggest we break down this change into individual change proposals and identify the problem statement and user stories for each that we're trying to solve. We then could use some realistic repo examples (e.g. SQLServerDsc and NetworkingDsc) to see how much time/effort/consequences to implementing?

I'm 100% in support of these improvements though - just not looking forward to being in tech debt hell for the next year or so (note, I'm still in tech debt hell anyway, but I'd like to not get deeper 😁 ).

Reviewed 1 of 45 files at r1.

Reviewable status: 18 of 45 files reviewed, 22 unresolved discussions (waiting on @gaelcolas and @johlju)

Source/DSCResources/MSFT_Folder/MSFT_Folder.psm1, line 9 at r1 (raw file):

Previously, gaelcolas (Gael) wrote…

A key difference is that I use the 'hosting' or 'master' module here, because using sort-of-nested-module is not very PowerShell-y, and leaves too much code in the DSC Resources.

I think the functions we develop for DSC Resources should be available in interactive sessions for configuration (and testing), and only the logic enabling idempotency should live in the Dsc Resource's PSM1.DSC is just a model (interface) used to manage resources with imperative code (functions).

FYI, using positional parameters here in the Join-Pth.

Source/public/Get-Folder.ps1, line 1 at r1 (raw file):

Previously, gaelcolas (Gael) wrote…

I don't understand the 'another level'. It actually simplifies the layout by 'flattening' multiple PSM1s with easy to organise functions (similar to what's done in C#, Java and many many more languages.

Need to take into account if users are using what was previously a helper function as a cmdlet

Yes, and it's actually a desired outcome. It forces managing interface, which is a key element of managing complexity and writing testable code. It also reduces the chances for breaking changes (paradoxically!) at it encourages better abstraction and decoupling. It also helps with greater testing (people will use those even if they don't use DSC resources), greater contributions, and better transition to Imperative to DSC automation.

If you want a feel for it, look at cChoco & my Chocolatey module... (that I should bring to HQRM as well and suggest to DscResources)

I absolutely understand why adding levels of abstraction can be extremely useful and powerful (Yay SOLID Principles), but I'd make the following comments here:

-

I disagree with always making these functions public because:

a. unless they are absolutely implementing something that does not exist elsewhere and is needed (e.g. is Get-Folder actually really needed as a function by the public - and if so, would you want a whole DSC Resource with it? "Do you want a side of hamburger with your fries?")

b. we are adding another public interface that we must now always support. It is prevents us from changing out the Get-Folder implementation without raising this a "Breaking Change" - even though the breaking change isn't to the DSC Resource.

c. We will very often conflict with user specific implementations of common functions - who dosesn't already have a Get-Folder function of their own? 😁The exception is things like the PowerShelGet module that contains DSC Resources (thanks @johlju) but it's primary purpose is to provide Install-Module etc - so making these interfaces public makes sense.

-

Many DSC Resource kit resources already implement this pattern to some degree (without making the function public). They might differ in specific implementation details, but we should not be concerning ourselves with implementation details.

-

(relates to 2 sort of): Many (at least many of mine) are already wrappers/abstractions over top of existing functions. For example FirewallRule abstractions Get-NetFirewallRule (and many others). If I were to add a new function Get-FirewallRule (which I already have, but it is an internal implementation detail that doesn't get exposed to user) then a user would rightly ask: Should I use Get-FirewallRule or Get-NetFirewallRule? IMHO we would always want users to use the MSFT implementation of Get-NetFirewallRule - not the helper wrapper function.So by doing this we have actually added confusion for users.

I guess what I'm saying is that I think this template is now moving down into the realm of governing implementation details rather than interfaces and behaviors.

I still absolutely see huge value here and think this is all very work implementing.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Another thought: If we could break all these changes down and list them as "benefit vs. effort" and then prioritize them, we could pick up some low hanging fruit, implement them in our repos and adjust the template accordingly?

Reviewable status: 18 of 45 files reviewed, 22 unresolved discussions (waiting on @gaelcolas and @johlju)

|

I agree with @PlagueHO on breaking down the changes so we can add them individually, so we can see the benefits and the assumptions, like “module X is released in gallery”, or “function/module Y must have implemented Z”. I didn’t mean to sound negative in my previous comments, I’m all for change to the better, and the work here is intended to be for the better. I’m just afraid changing everything at once will never work, as the changes will never make it outside the template. Slow pace (=smaller pieces) is better, and also easier for the community as a whole to grasp it (for me too) and be part of the discussion. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

we also need to consider auto-documentation resources in this

Totally agree, I just haven't been that far yet.

shouldn't Requiredmodules folder be in the .gitignore?

Its parent folder (output) is already there.

we are introducing significant amounts of technical debt to every repository when ever we change the template/structure

I see what you mean, but I'd like to point out that's a bit inaccurate. The tech debt might already exist even if we haven't agreed on any change. Compliance to a standard, or in this case divergence, only makes it visible, the tech debt might already exist, as it's the case for integration testing.

That said, I fully appreciate the risks of changing the template, and the impact it could have. Truth is, we already have the problem, and it's not fully converged (it's mostly).

I believe like any change it should be made incrementally. We also would need to provide a massive amount of effort into the other repos to try and get them up to speed

I'm 100% with you here, the fact that I used a draft PR to the existing template was only for convenience in collaborating. The aim is to introduce the concepts I think are best practices early on, so we can discuss, flesh out together WHAT we want, and HOW we address the change.

Jumping on @johlju 's comment:

since there are no contributors (well, in 2,5 years I have seen 1, no counting maintainers) interested in moving a resource module to a different model.

I'm afraid that's also part of the problem. The approach taken in the DSC resources within the resource kit is so specific, that it's hard for people to transition. That started very early (with WMF4, long before our time I think), but because of the effort needed to change, it hasn't evolved as much as the PowerShell modules have, meaning it will be harder to have contributor the further we drift away from the PowerShell community practices.

Personally I’m not seeing me moving those resources I maintain over to this any time soon.

I hear you, and that's a different problem than the one I try to expose here. That does not mean you should not aim for this, eventually. Just that it's impractical and unlikely.

In the short term, I see the need for a way to integration test on multiple nodes. [...] For example test-kitchen, or Azure DevOps

Yep, I'm working on this in parallel. It might even be both, trying to find the right people to help ;) I'll chat to you about this.

You did a very nice job about this, but not sure this is gonna be used in the DSC resource Kit unless someone can put in some serious time implementing this in the repos.

Don't discard this that early. I hear you, let's see if it makes sense first before we find out whether or how we can get there.

I don’t think this template will help get contributors to the DSC resource Kit.

I think the automation, the improved alignment with PowerShell Module practices, and encouraging approaches that makes maintaining module easier will help getting new contributors. Whether it's this template or another. It's only part of what we need, but an important one (and I'm also working on the rest, which is less visible for now. I'll fix that).

It has always for me been more of a style guideline of how the project structure should look like. If this template changes to much at once, it cannot be used like that anymore.

It's already bigger than style guidelines, so I don't see why that can't "be used like that anymore".

Back on @PlagueHO comments:

break down this change into individual change proposals and identify the problem statement and user stories for each that we're trying to solve

A bit early for now IMO to do that, but that'll come next, yes.

Showing you this approach and confronting your experience with mine helps getting a better picture of the problems & challenges.

I did expect, and for good reasons, for you two to react hastily with "we'll never have time". But we all know that's only part of the picture, hence your request for breaking things down.

For now it's about discussing what it should be like (if we had a ginormous magic wand), this draft only illustrates concepts on an over-simplified resource, so we can reduce the number of variables.

So far you both mainly picked on:

- Amount of work changes to the template layout would create

- The potential pain of using publicly exposed functions

- Agreeing on the Integration test part

To finish because it was last comment:

Slow pace (=smaller pieces) is better and also easier for the community as a whole to grasp it (for me too) and be part of the discussion

Actually it's mainly easier on you, because you are already doing so much... Again, I know we're not ready for all those changes. I'm merely trying to see what's a good idea, what I might be missing, and what can help smooth the transition (some can be scripted!).

I'm taking your feedback away, think about it, and will get back to you.

Reviewable status: 18 of 45 files reviewed, 22 unresolved discussions (waiting on @gaelcolas and @johlju)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Reviewable status: 18 of 45 files reviewed, 22 unresolved discussions (waiting on @johlju and @PlagueHO)

Source/DSCResources/MSFT_Folder/MSFT_Folder.psm1, line 9 at r1 (raw file):

Previously, PlagueHO (Daniel Scott-Raynsford) wrote…

FYI, using positional parameters here in the Join-Pth.

So is the Split-Path :) It's a draft after all... Will fix, thanks!

Source/public/Get-Folder.ps1, line 1 at r1 (raw file):

1.a DSC is an interface enabling a declarative approach. The functions also apply to people not doing it through DSC (we're not an exclusive club). Same purpose (managing resource), different approach. We should recommend the approach though, not enforce it (especially from day 1).

The main point is not to bundle code within the Resource's PSM1 which should only 'implement idempotency', which is already done in some Dsc Resources or modules (but not many, because it wasn't a design best practice when it started with WMF4)...

1.b You're saying that's a bad thing, I think it drives better modules, quality and community which should take priority over 'comfort'.

1.c Implementation detail. That's what module prefix are for ;)

-

Agreed, and I'm glad it's not all gloom ;) I'm just saying we should encourage and promote as it foster better design & quality. This draft shows how to make it public (which I recommend), leaving it as private pseudo-nested module is trivial change.

-

If you abstract and not 'purely' proxy, that's because you add value over existing implementations (even simplification, look at the npm ecosystem).

we would always want users to use the MSFT implementation of Get-NetFirewallRule

Actually, when possible we want to contribute upstream, failing that we want to offer alternative making it clear on how it differs.

I guess what I'm saying is that I think this template is now moving down into the realm of governing implementation details rather than interfaces and behaviors.

Nope, just the lack of time makes it seem like so ;) . I'd argue that the existing template does not forbid the principles I explained above, but is rarely (never?) implemented because it is not what's recommended.

This draft template can allow similar patterns, but it should not encourage them if we think they're not the best practices (which is what we're also discussing, let's just not mix them up).

I think it's again a tooling problem, I'll think about it and talk around to get a better idea of what we should be doing.

Pull Request (PR) description

This is an early preview on a new template I'm working on, introducing some different concepts than what's been used historically.

It is still work in progress but submitting this draft for starting the discussion and getting early feedback.

The reasons for changing are multiple, and I'll explain in-line in a review, but the TLDR is:

/cc @PlagueHO @johlju @mhendric

This change is