
Possible memory leak when using DaskExecutor in Kubernetes #3966

Comments


I think dask and kubernetes are unlikely to be the culprits here; rather, I suspect your

```python
from prefect.executors import LocalDaskExecutor

# Swapping out the executor for this should do it
executor = LocalDaskExecutor(num_workers=6)
```
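For context, here is a minimal standard-library sketch of why a thread-based local executor can be enough for this kind of flow. If each task mostly waits on an external Kubernetes job, a small local thread pool gives the same parallelism without a separate Dask cluster. This uses `concurrent.futures.ThreadPoolExecutor` as an analogy only; it is not Prefect's or Dask's implementation, and `wait_for_job` is a hypothetical stand-in for submitting and polling a job.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def run_all(num_jobs: int = 15, num_workers: int = 6) -> Tuple[List[str], int]:
    """Run num_jobs simulated job waits on a local thread pool.

    Returns the per-job results (in submission order) and the peak number
    of jobs that were being waited on at the same time.
    """
    lock = threading.Lock()
    state = {"running": 0, "peak": 0}

    def wait_for_job(job_id: int) -> str:
        # Hypothetical stand-in for submitting a Kubernetes job and polling it
        # until it finishes: the thread mostly sleeps (I/O-bound waiting).
        with lock:
            state["running"] += 1
            state["peak"] = max(state["peak"], state["running"])
        time.sleep(0.05)
        with lock:
            state["running"] -= 1
        return f"job-{job_id}: done"

    # Every task runs as a thread inside this single process; there is no
    # separate scheduler or worker pod to start.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        results = list(pool.map(wait_for_job, range(num_jobs)))
    return results, state["peak"]


results, peak = run_all()
print(f"{len(results)} jobs finished, peak concurrency {peak}")
```

The trade-off is that all tasks share one process, and under CPython's GIL threads help mainly when tasks wait on external work like these jobs rather than computing in Python themselves.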


Hmmm, that's interesting. I'd still be surprised if this is a bug in dask. A larger reproducible example I could run locally would definitely help. If you drop the

For your flow above, I'd expect using a
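One way to build the kind of locally runnable reproduction asked for above is to run the suspect workload repeatedly and record memory after each round. The sketch below uses the standard library's `tracemalloc`; `leaky_workload` and `clean_workload` are hypothetical stand-ins that would be replaced by an actual flow run. Note that `tracemalloc` only sees Python-level allocations, so growth inside native code would need an RSS-based measurement instead.

```python
import gc
import tracemalloc
from typing import Callable, List

_cache: list = []  # module-level list that the hypothetical leaky workload keeps growing


def leaky_workload() -> None:
    # Hypothetical: retains ~100 KB per call, like state that is never released.
    _cache.append(bytearray(100_000))


def clean_workload() -> None:
    # Hypothetical: allocates the same amount but lets it go out of scope.
    _ = bytearray(100_000)


def memory_per_round(workload: Callable[[], None], rounds: int = 20) -> List[int]:
    """Run `workload` repeatedly; return traced bytes still allocated after each round."""
    tracemalloc.start()
    samples: List[int] = []
    try:
        for _ in range(rounds):
            workload()
            gc.collect()  # rule out garbage that simply has not been collected yet
            current, _peak = tracemalloc.get_traced_memory()
            samples.append(current)
    finally:
        tracemalloc.stop()
    return samples


def grows_steadily(samples: List[int], min_growth: int = 50_000) -> bool:
    # Skip the first round as warm-up, then flag growth larger than min_growth bytes.
    return samples[-1] - samples[1] > min_growth


if __name__ == "__main__":
    for fn in (leaky_workload, clean_workload):
        print(fn.__name__, "grows steadily:", grows_steadily(memory_per_round(fn)))
```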


👋 We have a similar setup: we run Prefect in Kubernetes and use DaskExecutor for our flow with 8 workers. All workers keep consuming more memory until every one of them runs out of memory (OOM). After some trial and error, we added these env vars to the Dask workers' YAML, which slowed the leak down considerably. Credit to https://stackoverflow.com/questions/63680134/growing-memory-usage-leak-in-dask-distributed-profiler/63680548#63680548 Other related links:
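The specific variables from this comment are not reproduced here. For reference, Dask reads configuration from environment variables prefixed with `DASK_`, where a double underscore marks a level of nesting, so a variable such as `DASK_DISTRIBUTED__WORKER__PROFILE__INTERVAL` maps to the config key `distributed.worker.profile.interval` (presumably the kind of worker-profiler setting the linked answer is about, given its title). The sketch below mimics that mapping in plain Python to make the naming convention concrete; it is a simplified approximation written for illustration, not Dask's own `dask.config` code, and the values in it are illustrative rather than recommendations.

```python
import ast
from typing import Any, Dict, Mapping


def env_to_dask_config(env: Mapping[str, str]) -> Dict[str, Any]:
    """Approximate how DASK_* environment variables become nested config keys.

    Simplified illustration: strip the DASK_ prefix, lowercase, split on '__',
    and try to parse the value as a Python literal, falling back to a string.
    """
    config: Dict[str, Any] = {}
    for name, raw in env.items():
        if not name.startswith("DASK_"):
            continue  # unrelated variables are ignored
        path = name[len("DASK_"):].lower().split("__")
        try:
            value: Any = ast.literal_eval(raw)
        except (SyntaxError, ValueError):
            value = raw  # e.g. "10000ms" is not a Python literal, so it stays a string
        node = config
        for key in path[:-1]:
            node = node.setdefault(key, {})
        node[path[-1]] = value
    return config


print(env_to_dask_config({"DASK_DISTRIBUTED__WORKER__PROFILE__INTERVAL": "10000ms"}))
```

In the real library, `dask.config.get("distributed.worker.profile.interval")` returns the resolved value, which is a quick way to check on a worker that such variables were actually picked up.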


This issue is stale because it has been open 30 days with no activity. To keep this issue open, remove the stale label or comment.


This issue was closed because it has been stale for 14 days with no activity. If this issue is important or you have more to add, feel free to re-open it.


Hello, I think I am seeing this issue, and it does not seem to be solved by using the environment variables. We are using Prefect 1.4.

A minimal working example:

I tried doubling and halving the values of

We are observing a steady increase in memory up to the point where the container gets OOMKilled.
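When memory climbs steadily until the container is OOMKilled, it can help to quantify the trend from periodic samples (for example, the pod's memory usage read at fixed intervals). The sketch below fits an ordinary least-squares line to (minutes, MiB) samples and projects when that line would cross a memory limit. The sample values and the 2048 MiB limit used in the usage lines are made up for illustration, and a straight-line fit is an assumption that only holds if growth really is roughly linear.

```python
from typing import List, Optional, Tuple


def fit_line(samples: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Ordinary least-squares fit; returns (slope, intercept) for y = slope * t + intercept."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in samples)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in samples)
    if sxx == 0:
        raise ValueError("samples need distinct timestamps")
    slope = sxy / sxx
    return slope, mean_y - slope * mean_t


def minutes_until_limit(samples: List[Tuple[float, float]], limit_mib: float) -> Optional[float]:
    """Projected minutes after the last sample until the fitted line reaches limit_mib.

    Returns None if memory is flat or shrinking, i.e. no projected OOM.
    """
    slope, intercept = fit_line(samples)
    if slope <= 0:
        return None
    t_limit = (limit_mib - intercept) / slope
    return max(0.0, t_limit - samples[-1][0])


# Illustrative samples: (minutes since start, memory in MiB).
samples = [(0, 500), (10, 600), (20, 700), (30, 800)]
print(fit_line(samples), minutes_until_limit(samples, limit_mib=2048))
```

With these made-up numbers the fit gives 10 MiB per minute, so the 2048 MiB limit would be reached roughly two hours after the last sample.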


When we use the flow without the


Hi @charalamm, Prefect 1.x is no longer under active development; please upgrade to 2.x or 3.x.


@cicdw Thanks for your response. Is this, though, a known problem with Prefect 1.x, and is there a known solution?

Description

I’m running Prefect in Kubernetes. I have a flow that spawns 15 CPU-intensive Kubernetes jobs. To run them in parallel, the flow uses a DaskExecutor configured with 6 workers and 1 thread per worker.
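As a rough worked example of the parallelism this setup allows: with 6 single-threaded workers, at most 6 of the 15 jobs can be awaited at once, so if the jobs take roughly equal time they run in ceil(15 / 6) = 3 waves (6, 6, then 3). The sketch below assumes equal job durations and simple first-come scheduling; real Dask scheduling and actual job runtimes will vary, and `job_minutes` is a hypothetical parameter.

```python
import math
from typing import List


def waves(num_jobs: int, num_slots: int) -> List[int]:
    """Split num_jobs into successive batches of at most num_slots concurrent jobs.

    Assumes equal job durations, so each wave starts when the previous one ends.
    """
    if num_slots < 1:
        raise ValueError("need at least one slot")
    full, rest = divmod(num_jobs, num_slots)
    return [num_slots] * full + ([rest] if rest else [])


def total_time(num_jobs: int, num_slots: int, job_minutes: float) -> float:
    # Wall-clock time is the number of waves times the duration of one job.
    return math.ceil(num_jobs / num_slots) * job_minutes


print(waves(15, 6), total_time(15, 6, job_minutes=10))
```

The last wave leaves half the slots idle, which is why adding a seventh or eighth worker would not shorten the run here, while 8 workers would cut it to 2 waves.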

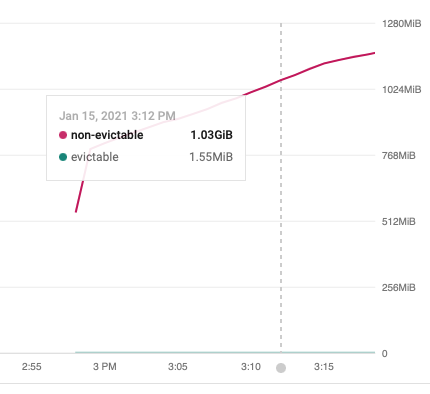

What I see is that the prefect-job created by the Kubernetes agent uses a considerable amount of resources, and its usage grows over time. See the attached screenshot.

Note: I’m talking about the job created by the Prefect agent. The job actually executing the code uses only 188 MiB.

It seems there may be a memory leak in either Prefect or Dask. Is there a better way to deploy this that uses fewer resources?

Expected Behavior

First of all, I wouldn't expect memory usage this high. Above all, though, I wouldn't expect it to keep growing indefinitely. In this instance Kubernetes hasn't (yet) killed the flow for high memory usage, but I have run into that before.

Reproduction

Reproduction is a bit tricky because the flow is generated from a config file when the script runs. I think I've included all the relevant parts below:

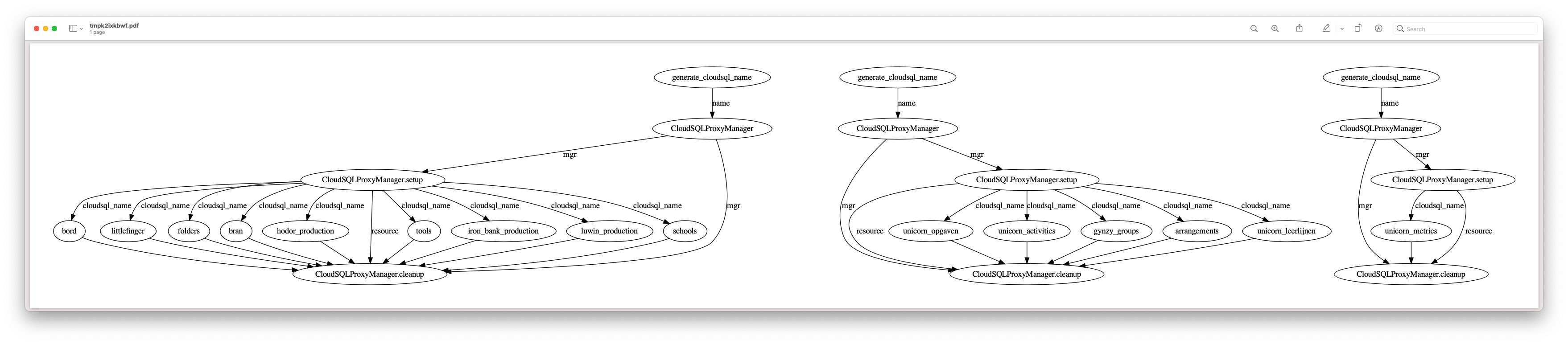

Visualizing one of the flows shows:

Environment

Running Prefect Server 0.14.0 on Kubernetes 1.18.x in Google Cloud.

Originally reported on slack: https://prefect-community.slack.com/archives/CL09KU1K7/p1610706804087800
