-

Notifications

You must be signed in to change notification settings - Fork 152

Kernel Parameters

Warning

This wiki is obsolete. For the latest documentation, go to rocm.docs.amd.com/projects/Tensile

- LoopDoWhile: True=DoWhile loop, False=While or For loop

- LoopTail: Additional loop with LoopUnroll=1.

- EdgeType: Branch, ShiftPtr or None

- WorkGroup: [dim0, dim1, LocalSplitU]

- ThreadTile: [dim0, dim1]

- MatrixInstruction: Type of matrix instruction used for the calculation, and wave tiling parameters [InstructionM, InstructionN, InstructionK, InstructionB, BlocksInMDir, WaveTileM, WaveTileN, WaveGroupM, WaveGroupN]

- GlobalSplitU: Split up summation among work-groups to create more concurrency. This option launches a kernel to handle the beta scaling, then a second kernel where the writes to global memory are atomic.

- PrefetchGlobalRead: True means outer loop should prefetch global data one iteration ahead.

- PrefetchLocalRead: True means inner loop should prefetch lds data one iteration ahead.

- WorkGroupMapping: In what order will work-groups compute C; affects cacheing.

- LoopUnroll: How many iterations to unroll inner loop; helps loading coalesced memory.

- MacroTile: Derrived from WorkGroup*ThreadTile.

- DepthU: Derrived from LoopUnroll*SplitU.

- NumLoadsCoalescedA,B: Number of loads from A in coalesced dimension.

- GlobalReadCoalesceGroupA,B: True means adjacent threads map to adjacent global read elements (but, if transposing data then write to lds is scattered).

- GlobalReadCoalesceVectorA,B: True means vector components map to adjacent global read elements (but, if transposing data then write to lds is scattered).

- VectorWidth: Thread tile elements are contiguous for faster memory accesses. For example VW=4 means a thread will read a float4 from memory rather than 4 non-contiguous floats.

- KernelLanguage: Whether kernels should be written in source code (HIP, OpenCL) or assembly (gfx803, gfx900, ...).

The exhaustive list of solution parameters and their defaults is stored in Common.py.

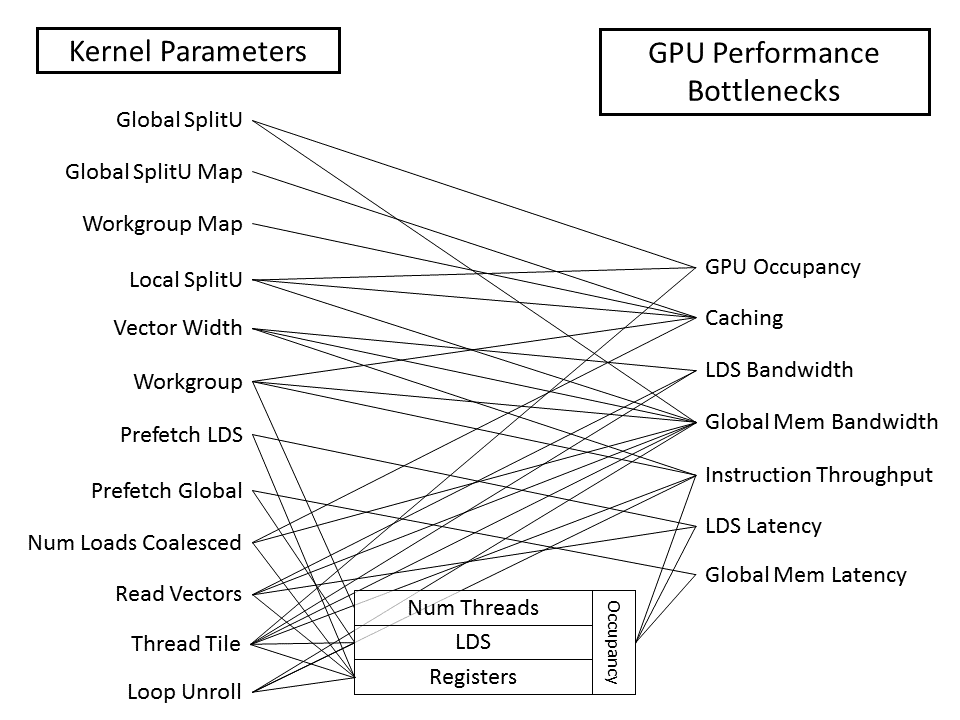

The kernel parameters affect many aspects of performance. Changing a parameter may help address one performance bottleneck but worsen another. That is why searching through the parameter space is vital to discovering the fastest kernel for a given problem.

For a traditional GEMM, the 2-dimensional output, C[i,j], is mapped to launching a 2-dimensional grid of work groups, each of which has a 2-dimensional grid of work items; one dimension belongs to i and one dimension belongs to j. The 1-dimensional summation is represented by a single loop within the kernel body.

To handle arbitrary dimensionality, Tensile begins by determining 3 special dimensions: D0, D1 and DU.

D0 and D1 are the free indices of A and B (one belongs to A and one to B) which have the shortest strides. This allows the inner-most loops to read from A and B the fastest via coalescing. In a traditional GEMM, every matrix has a dimension with a shortest stride of 1, but Tensile doesn't make that assumption. Of these two dimensions, D0 is the dimension which has the shortest tensor C stride which allows for fast writing.

DU represents the summation index with the shortest combined stride (stride in A + stride in B); it becomes the inner most loop which gets "U"nrolled. This assignment is also mean't to assure fast reading in the inner-most summation loop. There can be multiple summation indices (i.e. embedded loops) and DU will be iterated over in the inner most loop.

OpenCL allows for 3-dimensional grid of work-groups, and each work-group can be a 3-dimensional grid of work-items. Tensile assigns D0 to be dimension-0 of the work-group and work-item grid; it assigns D1 to be dimension-1 of the work-group and work-item grids. All other free or batch dimensions are flattened down into the final dimension-2 of the work-group and work-item grids. Withing the GPU kernel, dimensions-2 is reconstituted back into whatever dimensions it represents.

Kernel names contain abbreviations of relevant parameters along with their value. A kernel name might look something like the following:

Cijk_Ailk_Bjlk_SB_MT64x256x16_<PARAMETERS>

The first part (C***_A***_B***) indicates the type of operation the kernel performs. This example is a GEMM.

Next, is the data type supported by the kernel. In the example, S indicates single precision floating point numbers. B indicates the kernel can use beta values. The table below lists supported data types and their corresponding code name:

| Code | Type |

|---|---|

| S | Single-precision float |

| D | Double-precision float |

| C | Single-precision complex float |

| Z | Double-precision complex float |

| H | Half-precision float |

| 4xi8 | 4 x 8-bit integer (deprecated, use I8) |

| I | 32-bit integer |

| B | Bfloat16 |

| I8 | 8-bit integer |

MT stands for macro tile. In the example, the macro tile is 64x256. The third number listed with macro tile (16 in the example) is the unroll depth, specified by the DepthU parameter.

After these standard name segments comes an alphabetized list of abbreviations of relevant kernel parameters. The table below lists parameters, their kernel name abbreviations, and their default values to help interpret the meaning of a kernel name:

| Code | Parameter | Default |

|---|---|---|

| 1LDSB | 1LDSBuffer | 0 |

| APM | AggressivePerfMode | 1 |

| AAV | AssertAlphaValue | False |

| ABV | AssertBetaValue | False |

| ACED | AssertCEqualsD | False |

| AF0EM | AssertFree0ElementMultiple | 1 |

| AF1EM | AssertFree1ElementMultiple | 1 |

| AMAS | AssertMinApproxSize | -1 |

| ASE | AssertSizeEqual | {} |

| ASGT | AssertSizeGreaterThan | {} |

| ASLT | AssertSizeLessThan | {} |

| ASM | AssertSizeMultiple | {} |

| ASAE | AssertStrideAEqual | {} |

| ASBE | AssertStrideBEqual | {} |

| ASCE | AssertStrideCEqual | {} |

| ASDE | AssertStrideDEqual | {} |

| ASEM | AssertSummationElementMultiple | 1 |

| AAC | AtomicAddC | False |

| BL | BufferLoad | True |

| BS | BufferStore | True |

| CDO | CheckDimOverflow | 0 |

| CTDA | CheckTensorDimAsserts | False |

| CustomKernelName | ||

| DU | DepthU | -1 |

| DULD | DepthULdsDivisor | 1 |

| DTL | DirectToLds | False |

| DTVA | DirectToVgprA | False |

| DTVB | DirectToVgprB | False |

| DAF | DisableAtomicFail | 0 |

| DKP | DisableKernelPieces | 0 |

| DVO | DisableVgprOverlapping | False |

| ET | EdgeType | Branch |

| EPS | ExpandPointerSwap | True |

| R | Fp16AltImpl | False |

| FL | FractionalLoad | 0 |

| GR2A | GlobalRead2A | True |

| GR2B | GlobalRead2B | True |

| GRCGA | GlobalReadCoalesceGroupA | True |

| GRCGB | GlobalReadCoalesceGroupB | True |

| GRCVA | GlobalReadCoalesceVectorA | True |

| GRCVB | GlobalReadCoalesceVectorB | True |

| GRPM | GlobalReadPerMfma | 1 |

| GRVW | GlobalReadVectorWidth | -1 |

| GSU | GlobalSplitU | 1 |

| GSUA | GlobalSplitUAlgorithm | SingleBuffer |

| GSUSARR | GlobalSplitUSummationAssignmentRoundRobin | True |

| GSUWGMRR | GlobalSplitUWorkGroupMappingRoundRobin | False |

| GLS | GroupLoadStore | False |

| ISA | ISA | |

| IU | InnerUnroll | 1 |

| IA | InterleaveAlpha | 0 |

| KL | KernelLanguage | Source |

| LEL | LdcEqualsLdd | True |

| LBSPP | LdsBlockSizePerPad | -1 |

| LPA | LdsPadA | 0 |

| LPB | LdsPadB | 0 |

| LDL | LocalDotLayout | 1 |

| LRVW | LocalReadVectorWidth | -1 |

| LWPM | LocalWritePerMfma | -1 |

| LR2A | LocalRead2A | True |

| LR2B | LocalRead2B | True |

| LW2A | LocalWrite2A | True |

| LW2B | LocalWrite2B | True |

| LDW | LoopDoWhile | False |

| LT | LoopTail | True |

| MAD or FMA | MACInstruction | FMA |

| MT | MacroTile | |

| MTSM | MacroTileShapeMax | 64 |

| MTSM | MacroTileShapeMin | 1 |

| MDA | MagicDivAlg | 2 |

| MI | MatrixInstruction | [] |

| MO | MaxOccupancy | 40 |

| MVN | MaxVgprNumber | 256 |

| MIAV | MIArchVgpr | False |

| MVN | MinVgprNumber | 0 |

| NTA | NonTemporalA | 0 |

| NTB | NonTemporalB | 0 |

| NTC | NonTemporalC | 0 |

| NTD | NonTemporalD | 0 |

| NR | NoReject | False |

| NEPBS | NumElementsPerBatchStore | 0 |

| NLCA | NumLoadsCoalescedA | 1 |

| NLCB | NumLoadsCoalescedB | 1 |

| ONLL | OptNoLoadLoop | 1 |

| OPLV | OptPreLoopVmcnt | True |

| PBD | PackBatchDims | 0 |

| PFD | PackFreeDims | 1 |

| PG | PackGranularity | 2 |

| PSD | PackSummationDims | 0 |

| PSL | PerformanceSyncLocation | -1 |

| PWC | PerformanceWaitCount | -1 |

| PWL | PerformanceWaitLocation | -1 |

| PK | PersistentKernel | 0 |

| PKAB | PersistentKernelAlongBatch | False |

| PAP | PrefetchAcrossPersistent | 0 |

| PAPM | PrefetchAcrossPersistentMode | 0 |

| PGR | PrefetchGlobalRead | True |

| PLR | PrefetchLocalRead | 1 |

| RK | ReplacementKernel | False |

| SGR | ScheduleGlobalRead | 1 |

| SIA | ScheduleIterAlg | 1 |

| SLW | ScheduleLocalWrite | 1 |

| SS | SourceSwap | False |

| SU | StaggerU | 32 |

| SUM | StaggerUMapping | 0 |

| SUS | StaggerUStride | 256 |

| SCIU | StoreCInUnroll | False |

| SCIUE | StoreCInUnrollExact | False |

| SCIUI | StoreCInUnrollInterval | 1 |

| SCIUP | StoreCInUnrollPostLoop | False |

| SPO | StorePriorityOpt | False |

| SRVW | StoreRemapVectorWidth | 0 |

| SSO | StoreSyncOpt | 0 |

| SVW | StoreVectorWidth | -1 |

| SNLL | SuppressNoLoadLoop | False |

| TSGRA | ThreadSeparateGlobalReadA | 0 |

| TSGRB | ThreadSeparateGlobalReadB | 0 |

| TT | ThreadTile | [4, 4] |

| TLDS | TransposeLDS | 0 |

| UIIDU | UnrollIncIsDepthU | 0 |

| UMF | UnrollMemFence | False |

| U64SL | Use64bShadowLimit | 1 |

| UIOFGRO | UseInstOffsetForGRO | 0 |

| USFGRO | UseSgprForGRO | -1 |

| VAW | VectorAtomicWidth | -1 |

| VS | VectorStore | True |

| VW | VectorWidth | -1 |

| WSGRA | WaveSeparateGlobalReadA | 0 |

| WSGRB | WaveSeparateGlobalReadB | 0 |

| WS | WavefrontSize | 64 |

| WG | WorkGroup | [16, 16, 1] |

| WGM | WorkGroupMapping | 8 |

| WGMT | WorkGroupMappingType | B |