Lightweight CLI and OpenAI-compatible server for querying multiple Large Language Model (LLM) providers.

Configure additional providers and models in llms.json

- Mix and match local models with models from different API providers

- Requests automatically routed to available providers that supports the requested model (in defined order)

- Define free/cheapest/local providers first to save on costs

- Any failures are automatically retried on the next available provider

- Lightweight: Single llms.py Python file with single

aiohttpdependency - Multi-Provider Support: OpenRouter, Ollama, Anthropic, Google, OpenAI, Grok, Groq, Qwen, Z.ai, Mistral

- OpenAI-Compatible API: Works with any client that supports OpenAI's chat completion API

- Configuration Management: Easy provider enable/disable and configuration management

- CLI Interface: Simple command-line interface for quick interactions

- Server Mode: Run an OpenAI-compatible HTTP server at

http://localhost:{PORT}/v1/chat/completions - Image Support: Process images through vision-capable models

- Audio Support: Process audio through audio-capable models

- Custom Chat Templates: Configurable chat completion request templates for different modalities

- Auto-Discovery: Automatically discover available Ollama models

- Unified Models: Define custom model names that map to different provider-specific names

- Multi-Model Support: Support for over 160+ different LLMs

Simple ChatGPT-like UI to access ALL Your LLMs, Locally or Remotely!

Read the Introductory Blog Post.

pip install llms-py- Download llms.py

curl -O https://raw.githubusercontent.com/ServiceStack/llms/main/llms.py

chmod +x llms.py

mv llms.py ~/.local/bin/llms- Install single dependency:

pip install aiohttpSet environment variables for the providers you want to use:

export OPENROUTER_FREE_API_KEY="..."| Provider | Variable | Description | Example |

|---|---|---|---|

| openrouter_free | OPENROUTER_FREE_API_KEY |

OpenRouter FREE models API key | sk-or-... |

| groq | GROQ_API_KEY |

Groq API key | gsk_... |

| google_free | GOOGLE_FREE_API_KEY |

Google FREE API key | AIza... |

| codestral | CODESTRAL_API_KEY |

Codestral API key | ... |

| ollama | N/A | No API key required | |

| openrouter | OPENROUTER_API_KEY |

OpenRouter API key | sk-or-... |

GOOGLE_API_KEY |

Google API key | AIza... |

|

| anthropic | ANTHROPIC_API_KEY |

Anthropic API key | sk-ant-... |

| openai | OPENAI_API_KEY |

OpenAI API key | sk-... |

| grok | GROK_API_KEY |

Grok (X.AI) API key | xai-... |

| qwen | DASHSCOPE_API_KEY |

Qwen (Alibaba) API key | sk-... |

| z.ai | ZAI_API_KEY |

Z.ai API key | sk-... |

| mistral | MISTRAL_API_KEY |

Mistral API key | ... |

Enable the providers you want to use:

# Enable providers with free models and free tiers

llms --enable openrouter_free google_free groq

# Enable paid providers

llms --enable openrouter anthropic google openai mistral grok qwenStart the UI and an OpenAI compatible API on port 8000:

llms --serve 8000Launches the UI at http://localhost:8000 and an OpenAI Endpoint at http://localhost:8000/v1/chat/completions.

llms "What is the capital of France?"The configuration file llms.json is saved to ~/.llms/llms.json and defines available providers, models, and default settings. Key sections:

headers: Common HTTP headers for all requeststext: Default chat completion request template for text prompts

Each provider configuration includes:

enabled: Whether the provider is activetype: Provider class (OpenAiProvider, GoogleProvider, etc.)api_key: API key (supports environment variables with$VAR_NAME)base_url: API endpoint URLmodels: Model name mappings (local name → provider name)

# Simple question

llms "Explain quantum computing"

# With specific model

llms -m gemini-2.5-pro "Write a Python function to sort a list"

llms -m grok-4 "Explain this code with humor"

llms -m qwen3-max "Translate this to Chinese"

# With system prompt

llms -s "You are a helpful coding assistant" "How do I reverse a string in Python?"

# With image (vision models)

llms --image image.jpg "What's in this image?"

llms --image https://example.com/photo.png "Describe this photo"

# Display full JSON Response

llms "Explain quantum computing" --rawBy default llms uses the defaults/text chat completion request defined in llms.json.

You can instead use a custom chat completion request with --chat, e.g:

# Load chat completion request from JSON file

llms --chat request.json

# Override user message

llms --chat request.json "New user message"

# Override model

llms -m kimi-k2 --chat request.jsonExample request.json:

{

"model": "kimi-k2",

"messages": [

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": ""}

],

"temperature": 0.7,

"max_tokens": 150

}Send images to vision-capable models using the --image option:

# Use defaults/image Chat Template (Describe the key features of the input image)

llms --image ./screenshot.png

# Local image file

llms --image ./screenshot.png "What's in this image?"

# Remote image URL

llms --image https://example.org/photo.jpg "Describe this photo"

# Data URI

llms --image "data:image/png;base64,$(base64 -w 0 image.png)" "Describe this image"

# With a specific vision model

llms -m gemini-2.5-flash --image chart.png "Analyze this chart"

llms -m qwen2.5vl --image document.jpg "Extract text from this document"

# Combined with system prompt

llms -s "You are a data analyst" --image graph.png "What trends do you see?"

# With custom chat template

llms --chat image-request.json --image photo.jpgExample of image-request.json:

{

"model": "qwen2.5vl",

"messages": [

{

"role": "user",

"content": [

{

"type": "image_url",

"image_url": {

"url": ""

}

},

{

"type": "text",

"text": "Caption this image"

}

]

}

]

}Supported image formats: PNG, WEBP, JPG, JPEG, GIF, BMP, TIFF, ICO

Image sources:

- Local files: Absolute paths (

/path/to/image.jpg) or relative paths (./image.png,../image.jpg) - Remote URLs: HTTP/HTTPS URLs are automatically downloaded

- Data URIs: Base64-encoded images (

data:image/png;base64,...)

Images are automatically processed and converted to base64 data URIs before being sent to the model.

Popular models that support image analysis:

- OpenAI: GPT-4o, GPT-4o-mini, GPT-4.1

- Anthropic: Claude Sonnet 4.0, Claude Opus 4.1

- Google: Gemini 2.5 Pro, Gemini Flash

- Qwen: Qwen2.5-VL, Qwen3-VL, QVQ-max

- Ollama: qwen2.5vl, llava

Images are automatically downloaded and converted to base64 data URIs.

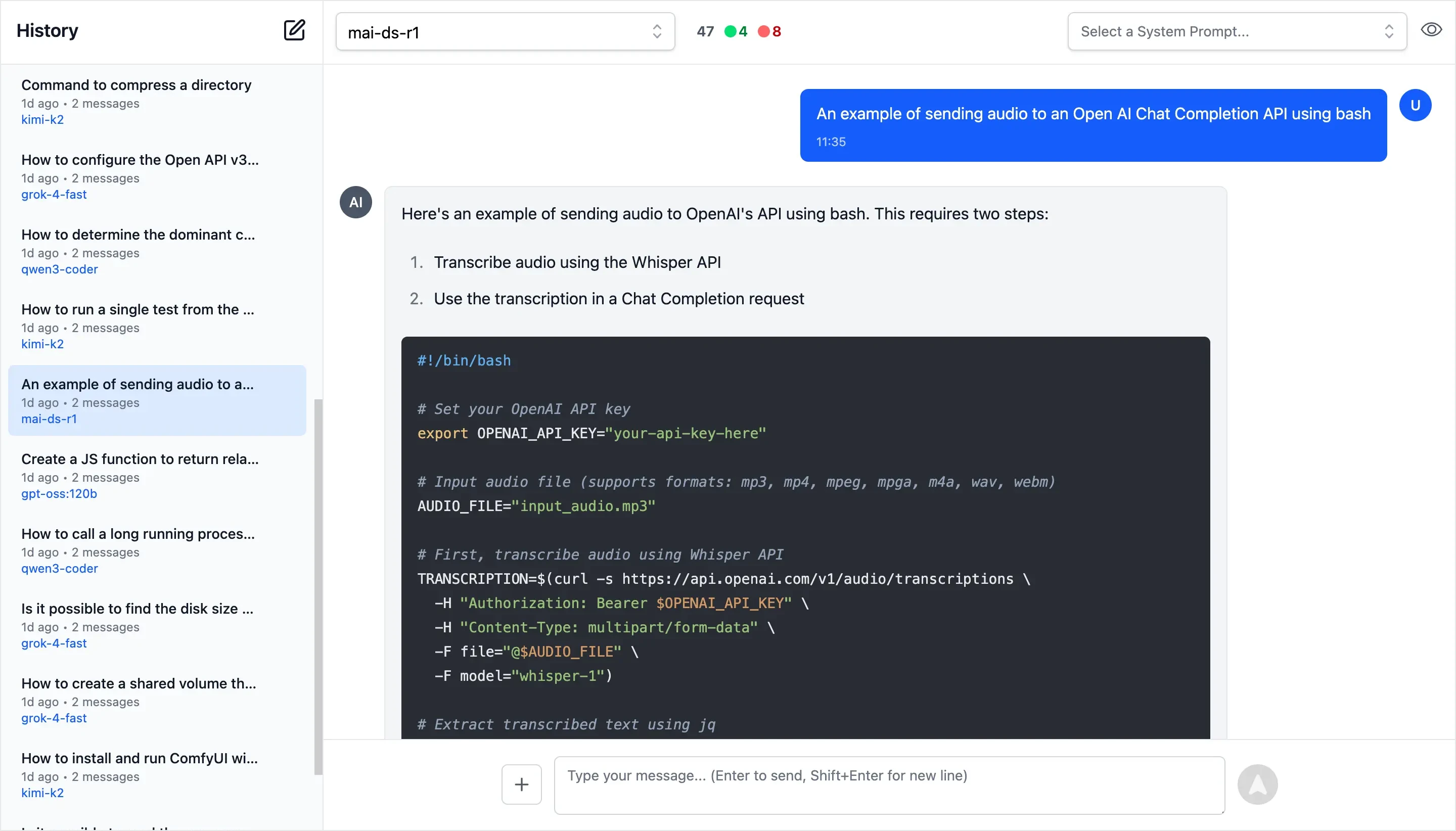

Send audio files to audio-capable models using the --audio option:

# Use defaults/audio Chat Template (Transcribe the audio)

llms --audio ./recording.mp3

# Local audio file

llms --audio ./meeting.wav "Summarize this meeting recording"

# Remote audio URL

llms --audio https://example.org/podcast.mp3 "What are the key points discussed?"

# With a specific audio model

llms -m gpt-4o-audio-preview --audio interview.mp3 "Extract the main topics"

llms -m gemini-2.5-flash --audio interview.mp3 "Extract the main topics"

# Combined with system prompt

llms -s "You're a transcription specialist" --audio talk.mp3 "Provide a detailed transcript"

# With custom chat template

llms --chat audio-request.json --audio speech.wavExample of audio-request.json:

{

"model": "gpt-4o-audio-preview",

"messages": [

{

"role": "user",

"content": [

{

"type": "input_audio",

"input_audio": {

"data": "",

"format": "mp3"

}

},

{

"type": "text",

"text": "Please transcribe this audio"

}

]

}

]

}Supported audio formats: MP3, WAV

Audio sources:

- Local files: Absolute paths (

/path/to/audio.mp3) or relative paths (./audio.wav,../recording.m4a) - Remote URLs: HTTP/HTTPS URLs are automatically downloaded

- Base64 Data: Base64-encoded audio

Audio files are automatically processed and converted to base64 data before being sent to the model.

Popular models that support audio processing:

- OpenAI: gpt-4o-audio-preview

- Google: gemini-2.5-pro, gemini-2.5-flash, gemini-2.5-flash-lite

Audio files are automatically downloaded and converted to base64 data URIs with appropriate format detection.

Send documents (e.g. PDFs) to file-capable models using the --file option:

# Use defaults/file Chat Template (Summarize the document)

llms --file ./docs/handbook.pdf

# Local PDF file

llms --file ./docs/policy.pdf "Summarize the key changes"

# Remote PDF URL

llms --file https://example.org/whitepaper.pdf "What are the main findings?"

# With specific file-capable models

llms -m gpt-5 --file ./policy.pdf "Summarize the key changes"

llms -m gemini-flash-latest --file ./report.pdf "Extract action items"

llms -m qwen2.5vl --file ./manual.pdf "List key sections and their purpose"

# Combined with system prompt

llms -s "You're a compliance analyst" --file ./policy.pdf "Identify compliance risks"

# With custom chat template

llms --chat file-request.json --file ./docs/handbook.pdfExample of file-request.json:

{

"model": "gpt-5",

"messages": [

{

"role": "user",

"content": [

{

"type": "file",

"file": {

"filename": "",

"file_data": ""

}

},

{

"type": "text",

"text": "Please summarize this document"

}

]

}

]

}Supported file formats: PDF

Other document types may work depending on the model/provider.

File sources:

- Local files: Absolute paths (

/path/to/file.pdf) or relative paths (./file.pdf,../file.pdf) - Remote URLs: HTTP/HTTPS URLs are automatically downloaded

- Base64/Data URIs: Inline

data:application/pdf;base64,...is supported

Files are automatically downloaded (for URLs) and converted to base64 data URIs before being sent to the model.

Popular multi-modal models that support file (PDF) inputs:

- OpenAI: gpt-5, gpt-5-mini, gpt-4o, gpt-4o-mini

- Google: gemini-flash-latest, gemini-2.5-flash-lite

- Grok: grok-4-fast (OpenRouter)

- Qwen: qwen2.5vl, qwen3-max, qwen3-vl:235b, qwen3-coder, qwen3-coder-flash (OpenRouter)

- Others: kimi-k2, glm-4.5-air, deepseek-v3.1:671b, llama4:400b, llama3.3:70b, mai-ds-r1, nemotron-nano:9b

Run as an OpenAI-compatible HTTP server:

# Start server on port 8000

llms --serve 8000The server exposes a single endpoint:

POST /v1/chat/completions- OpenAI-compatible chat completions

Example client usage:

curl -X POST http://localhost:8000/v1/chat/completions \

-H "Content-Type: application/json" \

-d '{

"model": "kimi-k2",

"messages": [

{"role": "user", "content": "Hello!"}

]

}'# List enabled providers and models

llms --list

llms ls

# List specific providers

llms ls ollama

llms ls google anthropic

# Enable providers

llms --enable openrouter

llms --enable anthropic google_free groq

# Disable providers

llms --disable ollama

llms --disable openai anthropic

# Set default model

llms --default grok-4- Installed from PyPI

pip install llms-py --upgrade- Using Direct Download

# Update to latest version (Downloads latest llms.py)

llms --update# Use custom config file

llms --config /path/to/config.json "Hello"

# Get raw JSON response

llms --raw "What is 2+2?"

# Enable verbose logging

llms --verbose "Tell me a joke"

# Custom log prefix

llms --verbose --logprefix "[DEBUG] " "Hello world"

# Set default model (updates config file)

llms --default grok-4

# Update llms.py to latest version

llms --update

# Pass custom parameters to chat request (URL-encoded)

llms --args "temperature=0.7&seed=111" "What is 2+2?"

# Multiple parameters with different types

llms --args "temperature=0.5&max_completion_tokens=50" "Tell me a joke"

# URL-encoded special characters (stop sequences)

llms --args "stop=Two,Words" "Count to 5"

# Combine with other options

llms --system "You are helpful" --args "temperature=0.3" --raw "Hello"The --args option allows you to pass URL-encoded parameters to customize the chat request sent to LLM providers:

Parameter Types:

- Floats:

temperature=0.7,frequency_penalty=0.2 - Integers:

max_completion_tokens=100 - Booleans:

store=true,verbose=false,logprobs=true - Strings:

stop=one - Lists:

stop=two,words

Common Parameters:

temperature: Controls randomness (0.0 to 2.0)max_completion_tokens: Maximum tokens in responseseed: For reproducible outputstop_p: Nucleus sampling parameterstop: Stop sequences (URL-encode special chars)store: Whether or not to store the outputfrequency_penalty: Penalize new tokens based on frequencypresence_penalty: Penalize new tokens based on presencelogprobs: Include log probabilities in responseparallel_tool_calls: Enable parallel tool callsprompt_cache_key: Cache key for promptreasoning_effort: Reasoning effort (low, medium, high, *minimal, *none, *default)safety_identifier: A string that uniquely identifies each userseed: For reproducible outputsservice_tier: Service tier (free, standard, premium, *default)top_logprobs: Number of top logprobs to returntop_p: Nucleus sampling parameterverbosity: Verbosity level (0, 1, 2, 3, *default)enable_thinking: Enable thinking mode (Qwen)stream: Enable streaming responses

The --default MODEL option allows you to set the default model used for all chat completions. This updates the defaults.text.model field in your configuration file:

# Set default model to gpt-oss

llms --default gpt-oss:120b

# Set default model to Claude Sonnet

llms --default claude-sonnet-4-0

# The model must be available in your enabled providers

llms --default gemini-2.5-proWhen you set a default model:

- The configuration file (

~/.llms/llms.json) is automatically updated - The specified model becomes the default for all future chat requests

- The model must exist in your currently enabled providers

- You can still override the default using

-m MODELfor individual requests

The --update option downloads and installs the latest version of llms.py from the GitHub repository:

# Update to latest version

llms --updateThis command:

- Downloads the latest

llms.pyfromhttps://raw.githubusercontent.com/ServiceStack/llms/refs/heads/main/llms.py - Overwrites your current

llms.pyfile with the latest version - Preserves your existing configuration file (

llms.json) - Requires an internet connection to download the update

Pipe Markdown output to glow to beautifully render it in the terminal:

llms "Explain quantum computing" | glowAny OpenAI-compatible providers and their models can be added by configuring them in llms.json. By default only AI Providers with free tiers are enabled which will only be "available" if their API Key is set.

You can list the available providers, their models and which are enabled or disabled with:

llms lsThey can be enabled/disabled in your llms.json file or with:

llms --enable <provider>

llms --disable <provider>For a provider to be available, they also require their API Key configured in either your Environment Variables

or directly in your llms.json.

| Provider | Variable | Description | Example |

|---|---|---|---|

| openrouter_free | OPENROUTER_FREE_API_KEY |

OpenRouter FREE models API key | sk-or-... |

| groq | GROQ_API_KEY |

Groq API key | gsk_... |

| google_free | GOOGLE_FREE_API_KEY |

Google FREE API key | AIza... |

| codestral | CODESTRAL_API_KEY |

Codestral API key | ... |

| ollama | N/A | No API key required | |

| openrouter | OPENROUTER_API_KEY |

OpenRouter API key | sk-or-... |

GOOGLE_API_KEY |

Google API key | AIza... |

|

| anthropic | ANTHROPIC_API_KEY |

Anthropic API key | sk-ant-... |

| openai | OPENAI_API_KEY |

OpenAI API key | sk-... |

| grok | GROK_API_KEY |

Grok (X.AI) API key | xai-... |

| qwen | DASHSCOPE_API_KEY |

Qwen (Alibaba) API key | sk-... |

| z.ai | ZAI_API_KEY |

Z.ai API key | sk-... |

| mistral | MISTRAL_API_KEY |

Mistral API key | ... |

- Type:

OpenAiProvider - Models: GPT-5, GPT-5 Codex, GPT-4o, GPT-4o-mini, o3, etc.

- Features: Text, images, function calling

export OPENAI_API_KEY="your-key"

llms --enable openai- Type:

OpenAiProvider - Models: Claude Opus 4.1, Sonnet 4.0, Haiku 3.5, etc.

- Features: Text, images, large context windows

export ANTHROPIC_API_KEY="your-key"

llms --enable anthropic- Type:

GoogleProvider - Models: Gemini 2.5 Pro, Flash, Flash-Lite

- Features: Text, images, safety settings

export GOOGLE_API_KEY="your-key"

llms --enable google_free- Type:

OpenAiProvider - Models: 100+ models from various providers

- Features: Access to latest models, free tier available

export OPENROUTER_API_KEY="your-key"

llms --enable openrouter- Type:

OpenAiProvider - Models: Grok-4, Grok-3, Grok-3-mini, Grok-code-fast-1, etc.

- Features: Real-time information, humor, uncensored responses

export GROK_API_KEY="your-key"

llms --enable grok- Type:

OpenAiProvider - Models: Llama 3.3, Gemma 2, Kimi K2, etc.

- Features: Fast inference, competitive pricing

export GROQ_API_KEY="your-key"

llms --enable groq- Type:

OllamaProvider - Models: Auto-discovered from local Ollama installation

- Features: Local inference, privacy, no API costs

# Ollama must be running locally

llms --enable ollama- Type:

OpenAiProvider - Models: Qwen3-max, Qwen-max, Qwen-plus, Qwen2.5-VL, QwQ-plus, etc.

- Features: Multilingual, vision models, coding, reasoning, audio processing

export DASHSCOPE_API_KEY="your-key"

llms --enable qwen- Type:

OpenAiProvider - Models: GLM-4.6, GLM-4.5, GLM-4.5-air, GLM-4.5-x, GLM-4.5-airx, GLM-4.5-flash, GLM-4:32b

- Features: Advanced language models with strong reasoning capabilities

export ZAI_API_KEY="your-key"

llms --enable z.ai- Type:

OpenAiProvider - Models: Mistral Large, Codestral, Pixtral, etc.

- Features: Code generation, multilingual

export MISTRAL_API_KEY="your-key"

llms --enable mistral- Type:

OpenAiProvider - Models: Codestral

- Features: Code generation

export CODESTRAL_API_KEY="your-key"

llms --enable codestralThe tool automatically routes requests to the first available provider that supports the requested model. If a provider fails, it tries the next available provider with that model.

Example: If both OpenAI and OpenRouter support kimi-k2, the request will first try OpenRouter (free), then fall back to Groq than OpenRouter (Paid) if requests fails.

{

"defaults": {

"headers": {"Content-Type": "application/json"},

"text": {

"model": "kimi-k2",

"messages": [{"role": "user", "content": ""}]

}

},

"providers": {

"groq": {

"enabled": true,

"type": "OpenAiProvider",

"base_url": "https://api.groq.com/openai",

"api_key": "$GROQ_API_KEY",

"models": {

"llama3.3:70b": "llama-3.3-70b-versatile",

"llama4:109b": "meta-llama/llama-4-scout-17b-16e-instruct",

"llama4:400b": "meta-llama/llama-4-maverick-17b-128e-instruct",

"kimi-k2": "moonshotai/kimi-k2-instruct-0905",

"gpt-oss:120b": "openai/gpt-oss-120b",

"gpt-oss:20b": "openai/gpt-oss-20b",

"qwen3:32b": "qwen/qwen3-32b"

}

}

}

}{

"providers": {

"openrouter": {

"enabled": false,

"type": "OpenAiProvider",

"base_url": "https://openrouter.ai/api",

"api_key": "$OPENROUTER_API_KEY",

"models": {

"grok-4": "x-ai/grok-4",

"glm-4.5-air": "z-ai/glm-4.5-air",

"kimi-k2": "moonshotai/kimi-k2",

"deepseek-v3.1:671b": "deepseek/deepseek-chat",

"llama4:400b": "meta-llama/llama-4-maverick"

}

},

"anthropic": {

"enabled": false,

"type": "OpenAiProvider",

"base_url": "https://api.anthropic.com",

"api_key": "$ANTHROPIC_API_KEY",

"models": {

"claude-sonnet-4-0": "claude-sonnet-4-0"

}

},

"ollama": {

"enabled": false,

"type": "OllamaProvider",

"base_url": "http://localhost:11434",

"models": {},

"all_models": true

}

}

}Run `llms` without arguments to see the help screen:

usage: llms.py [-h] [--config FILE] [-m MODEL] [--chat REQUEST] [-s PROMPT] [--image IMAGE] [--audio AUDIO]

[--file FILE] [--raw] [--list] [--serve PORT] [--enable PROVIDER] [--disable PROVIDER]

[--default MODEL] [--init] [--logprefix PREFIX] [--verbose] [--update]

llms

options:

-h, --help show this help message and exit

--config FILE Path to config file

-m MODEL, --model MODEL

Model to use

--chat REQUEST OpenAI Chat Completion Request to send

-s PROMPT, --system PROMPT

System prompt to use for chat completion

--image IMAGE Image input to use in chat completion

--audio AUDIO Audio input to use in chat completion

--file FILE File input to use in chat completion

--raw Return raw AI JSON response

--list Show list of enabled providers and their models (alias ls provider?)

--serve PORT Port to start an OpenAI Chat compatible server on

--enable PROVIDER Enable a provider

--disable PROVIDER Disable a provider

--default MODEL Configure the default model to use

--init Create a default llms.json

--logprefix PREFIX Prefix used in log messages

--verbose Verbose output

--update Update to latest version

Config file not found

# Initialize default config

llms --init

# Or specify custom path

llms --config ./my-config.jsonNo providers enabled

# Check status

llms --list

# Enable providers

llms --enable google anthropicAPI key issues

# Check environment variables

echo $ANTHROPIC_API_KEY

# Enable verbose logging

llms --verbose "test"Model not found

# List available models

llms --list

# Check provider configuration

llms ls openrouterEnable verbose logging to see detailed request/response information:

llms --verbose --logprefix "[DEBUG] " "Hello"This shows:

- Enabled providers

- Model routing decisions

- HTTP request details

- Error messages with stack traces

llms.py- Main script with CLI and server functionalityllms.json- Default configuration filerequirements.txt- Python dependencies

OpenAiProvider- Generic OpenAI-compatible providerOllamaProvider- Ollama-specific provider with model auto-discoveryGoogleProvider- Google Gemini with native API formatGoogleOpenAiProvider- Google Gemini via OpenAI-compatible endpoint

- Create a provider class inheriting from

OpenAiProvider - Implement provider-specific authentication and formatting

- Add provider configuration to

llms.json - Update initialization logic in

init_llms()

Contributions are welcome! Please submit a PR to add support for any missing OpenAI-compatible providers.