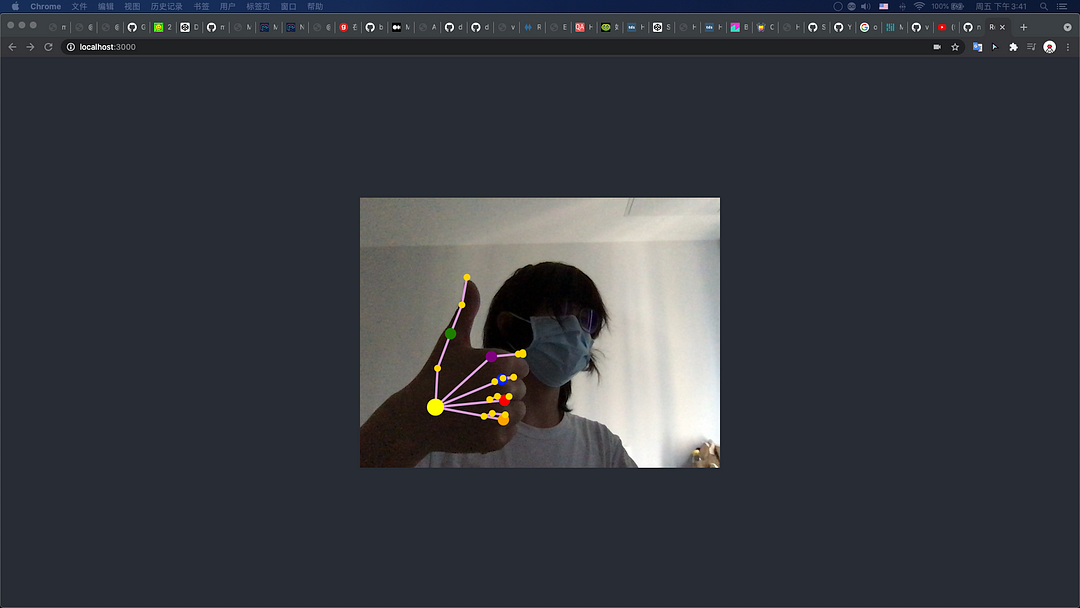

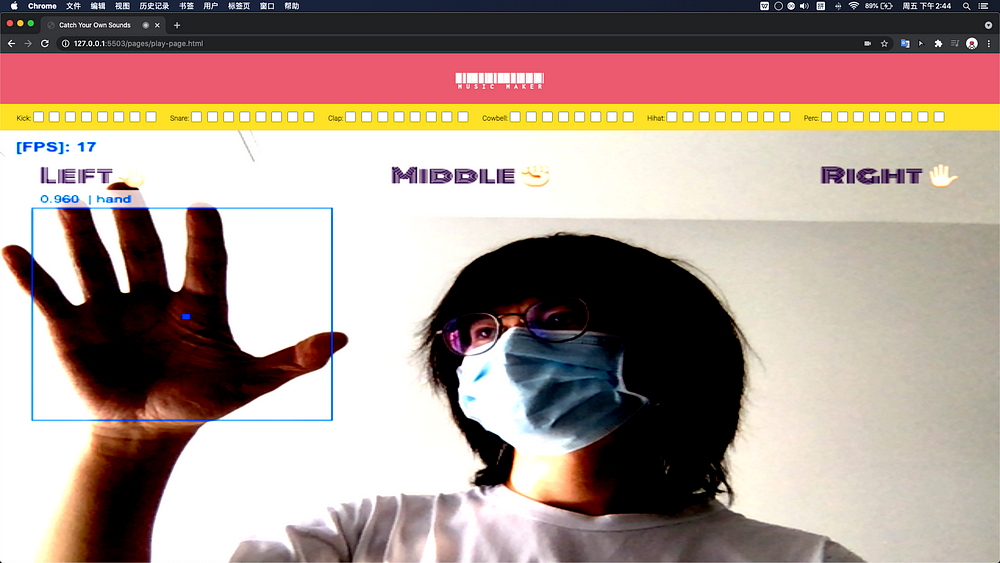

Introduction: This is a simple demo that uses Handtrack.js and Tone.js. By tracking the position of the hand, it switches the background music and changes a simple filter on the canvas. It also includes a simple drum machine whose loops are built with Tone.js, so you can mix different sounds together.

You can try my online demo:

CodeSandbox: https://ggddh.csb.app

You can also watch the demo video online:

YouTube: https://youtu.be/8Pg5hY85aWA

You can find this project in my code repository:

GitHub: https://github.com/ShuSQ/CCI_ACC_FP2_handMusic

When I was a high school student, I tried audio production software such as FruityLoops, and I became very interested in audio production methods. At that time, I wondered whether users could control audio through bodily interaction. I came into contact with TensorFlow this semester, so I decided to explore and experiment in this direction.

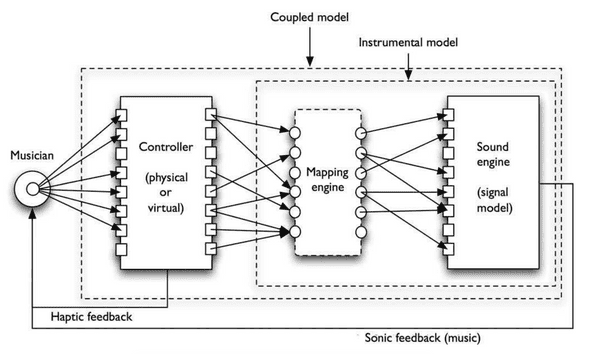

Image from: 34.4.magnusson.pdf

First, I needed a way to recognize the hand in the video captured by the webcam and use it as a control. There are many tools to choose from, such as TensorFlow, OpenCV and MediaPipe. In the end, I chose Handtrack.js as the hand-detection solution.

What is Handtrack.js?

Handtrack.js is a library for prototyping realtime hand detection (bounding box), directly in the browser. It frames handtracking as an object detection problem, and uses a trained convolutional neural network to predict bounding boxes for the location of hands in an image.

Why did I choose Handtrack.js?

Because I am more familiar with JavaScript, and case studies for Handtrack.js are easy to find online. In terms of speed and accuracy it is not as good as the Python + MediaPipe solution, but given the scale of the project, the current performance is acceptable.

Alternatively, we can process the webcam frames directly in JavaScript, compare consecutive frames, and detect motion from the differences. This is a clever method, and it also produces video with a style of its own.

You can learn more from this code repository here:

https://github.com/jasonmayes/JS-Motion-Detection
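To make the frame-comparison idea concrete, here is a minimal sketch of my own (it is not taken from the repository above). It assumes frames arrive as RGBA byte arrays, which is the layout returned by `getImageData().data` on a canvas; the function name `motionRatio` and the default threshold of 30 are illustrative choices.

```javascript
// Sketch of frame differencing on RGBA pixel buffers.
// Assumption: both frames have the same size and use 4 bytes per pixel
// (R, G, B, A). All names here are illustrative.

/**
 * Returns the fraction of pixels whose brightness changed by more than
 * `threshold` (on a 0-255 scale) between two frames.
 */
function motionRatio(prevFrame, currFrame, threshold = 30) {
  if (prevFrame.length !== currFrame.length) {
    throw new Error("Frames must have the same dimensions");
  }
  const pixelCount = prevFrame.length / 4;
  if (pixelCount === 0) return 0;

  let changed = 0;
  for (let i = 0; i < prevFrame.length; i += 4) {
    // Average the RGB channels as a cheap brightness estimate.
    const prevLuma = (prevFrame[i] + prevFrame[i + 1] + prevFrame[i + 2]) / 3;
    const currLuma = (currFrame[i] + currFrame[i + 1] + currFrame[i + 2]) / 3;
    if (Math.abs(currLuma - prevLuma) > threshold) changed++;
  }
  return changed / pixelCount;
}

// Two tiny 2-pixel "frames": the first pixel is unchanged,
// the second goes from black to white.
const before = new Uint8ClampedArray([10, 10, 10, 255, 0, 0, 0, 255]);
const after = new Uint8ClampedArray([10, 10, 10, 255, 255, 255, 255, 255]);
console.log(motionRatio(before, after)); // 0.5
```

In a browser, the two buffers would come from drawing the video onto a canvas on successive animation frames; a ratio above some small value could then be treated as "motion detected".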

The combination of TensorFlow.js and fingerpose is also a great solution to test, but we don't need it at the moment.

Handtrack.js is very convenient to use; we can quickly configure its parameters:

```javascript
const modelParams = {
  // Load the default Handtrack.js model parameters
  flipHorizontal: true, // flip e.g. for video
  imageScaleFactor: 0.7, // reduce input image size for gains in speed
  maxNumBoxes: 1, // maximum number of boxes to detect
  iouThreshold: 0.5, // IoU threshold for non-max suppression
  scoreThreshold: 0.9, // confidence threshold for predictions
};
```

I learned a lot from these materials:

- Real Time AI GESTURE RECOGNITION with Tensorflow.JS

- Handtrack.js: Hand Tracking Interactions in the Browser using Tensorflow.js

- Controlling 3D Objects with Hands

- Machine learning for everyone: How to implement pose estimation in a browser using your webcam

- Teachable Machine

- Air guitar tutorial

- A modern approach for Computer Vision on the web

- Motion Tracking & Music in < 100 lines of JavaScript

- MediaPipe in JavaScript
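Returning to the `modelParams` object: the `scoreThreshold`, `iouThreshold` and `maxNumBoxes` settings are easier to reason about with a small example. The sketch below is a generic greedy non-max suppression written for illustration; it is not Handtrack.js's internal code, and the function names `iou` and `filterDetections` are my own. It only assumes that boxes use the `[x, y, width, height]` layout that Handtrack.js reports in `bbox`.

```javascript
// Intersection over Union for two boxes in [x, y, width, height] form.
function iou(a, b) {
  const [ax, ay, aw, ah] = a;
  const [bx, by, bw, bh] = b;
  const overlapW = Math.max(0, Math.min(ax + aw, bx + bw) - Math.max(ax, bx));
  const overlapH = Math.max(0, Math.min(ay + ah, by + bh) - Math.max(ay, by));
  const intersection = overlapW * overlapH;
  const union = aw * ah + bw * bh - intersection;
  return union === 0 ? 0 : intersection / union;
}

// Illustrative greedy non-max suppression:
// 1. drop detections below scoreThreshold,
// 2. walk the rest from highest to lowest score,
// 3. skip any box that overlaps an already kept box by more than iouThreshold,
// 4. stop once maxNumBoxes boxes are kept.
function filterDetections(detections, { scoreThreshold, iouThreshold, maxNumBoxes }) {
  const candidates = detections
    .filter((d) => d.score >= scoreThreshold)
    .sort((d1, d2) => d2.score - d1.score);

  const kept = [];
  for (const candidate of candidates) {
    if (kept.length >= maxNumBoxes) break;
    const overlapsKept = kept.some((k) => iou(k.bbox, candidate.bbox) > iouThreshold);
    if (!overlapsKept) kept.push(candidate);
  }
  return kept;
}

// Made-up detections: two heavily overlapping boxes and one weak one.
const sampleDetections = [
  { bbox: [0, 0, 100, 100], score: 0.95 },
  { bbox: [10, 10, 100, 100], score: 0.92 }, // overlaps the first box
  { bbox: [300, 0, 100, 100], score: 0.5 }, // below the score threshold
];
const keptBoxes = filterDetections(sampleDetections, {
  scoreThreshold: 0.9,
  iouThreshold: 0.5,
  maxNumBoxes: 2,
});
console.log(keptBoxes.length); // 1
```

With `scoreThreshold: 0.9`, as in this project, only very confident detections survive, which reduces false triggers at the cost of occasionally missing the hand.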

Create an empty audio tag in the .html file:

```html
<audio></audio>
```

We need to get the page element in handplay.js:

```javascript
const audio = document.getElementsByTagName("audio")[0];
```

When the length of predictions is greater than zero, a hand has been detected, and bbox holds the coordinates of the bounding box for the detection result. We can read the x and y coordinates of the hand's bounding box through bbox[0] and bbox[1], and then divide the frame into 3 recognition areas:

```javascript
if (predictions.length > 0) {
  let x = predictions[0].bbox[0];
  let y = predictions[0].bbox[1];

  // Match the position and play the corresponding audio source
  if (y <= 100) {
    if (x <= 150) {
      // Left
      audio.src = genres.left.source;
      applyFilter(genres.left.filter);
    } else if (x >= 250 && x <= 350) {
      // Middle
      audio.src = genres.middle.source;
      applyFilter(genres.middle.filter);
    } else if (x >= 450) {
      // Right
      audio.src = genres.right.source;
      applyFilter(genres.right.filter);
    }

    // Play the sound
    audio.volume = 1;
    audio.play(); // play the audio
  }
}
```

The following articles were very helpful to me:

- Making Music In A Browser: Recreating Theremin With JS And Web Audio API

- How To Create A Responsive 8-Bit Drum Machine Using Web Audio, SVG And Multitouch
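The three recognition areas can also be expressed as a small pure function, which makes the thresholds easy to test without a webcam. This is a sketch of my own, not the project's code: the thresholds are copied from the snippet above, while `zoneFor` and `createZoneSwitcher` are hypothetical names. It also only reacts when the zone changes. Assigning `audio.src` makes the browser reload the media resource, so doing it on every detection frame may restart the track repeatedly.

```javascript
// Map the top-left corner of the hand's bounding box to a zone name.
// Thresholds are the ones used in the original snippet.
function zoneFor(x, y) {
  if (y > 100) return null; // hand is below the trigger strip
  if (x <= 150) return "left";
  if (x >= 250 && x <= 350) return "middle";
  if (x >= 450) return "right";
  return null; // the gaps between zones do nothing
}

// Returns an update function that calls onZoneChange only when the hand
// enters a zone different from the current one.
function createZoneSwitcher(onZoneChange) {
  let current = null;
  return function update(predictions) {
    if (predictions.length === 0) return current;
    const [x, y] = predictions[0].bbox;
    const zone = zoneFor(x, y);
    if (zone !== null && zone !== current) {
      current = zone;
      onZoneChange(zone);
    }
    return current;
  };
}

// Usage with fake predictions instead of a live camera feed.
const switches = [];
const updateZone = createZoneSwitcher((zone) => switches.push(zone));
updateZone([{ bbox: [100, 50, 80, 80] }]); // enters "left"
updateZone([{ bbox: [120, 60, 80, 80] }]); // still "left": no callback
updateZone([{ bbox: [500, 20, 80, 80] }]); // enters "right"
console.log(switches); // [ 'left', 'right' ]
```

In the demo, the callback would be the place to set `audio.src`, call `applyFilter(...)` and start playback for the new zone.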

In addition to switching music with gestures, I also added simple filters that change the look of the video. They are implemented through the CSS filter property, using three effects: blur, brightness and contrast. Initially I considered implementing an effect similar to TensorFlow's NST (Neural Style Transfer), but considering browser performance and implementation cost, I chose the more convenient filter solution.
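As a rough illustration of the CSS approach, the sketch below builds a value for the `filter` property from the three effects. The function name `buildFilter` and the settings object are assumptions made for this example; the project's own `applyFilter` may be structured differently.

```javascript
// Build a CSS `filter` value from blur, brightness and contrast settings.
// Defaults are the neutral values, so omitted settings leave the image as is.
function buildFilter({ blur = 0, brightness = 1, contrast = 1 } = {}) {
  return `blur(${blur}px) brightness(${brightness}) contrast(${contrast})`;
}

console.log(buildFilter({ blur: 4, contrast: 1.5 }));
// blur(4px) brightness(1) contrast(1.5)

// In the browser, the result would be assigned to an element's style, e.g.:
//   canvas.style.filter = buildFilter({ blur: 4, contrast: 1.5 });
```

Because the browser applies the filter during rendering, this is much cheaper than running a style-transfer model on every frame.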

Tone.js is a Web Audio framework for creating interactive music in the browser. Earlier, I compared Tone.js and Magenta.js. The two have many similarities, and Magenta.js can additionally apply machine learning through MusicRNN and MusicVAE. I really wanted to do this and let ambient sound, background sound and samples mix! However, there are not enough materials online about making music with Magenta.js, and after studying it I found that I could not achieve this in a short time, so I chose Tone.js. To build the drum machine, Tone.js loads the audio samples and uses scheduleRepeat() to implement the loop, and the steps to play are set through <input type="checkbox"> tags.

```html
<!-- .html -->
...
<div class="kick">
  <input type="checkbox">
  <input type="checkbox">
  <input type="checkbox">
  <input type="checkbox">
  <input type="checkbox">
  <input type="checkbox">
  <input type="checkbox">
  <input type="checkbox">
</div>
...
```

```javascript
function sequencer() {
  // Load the audio samples
  ...
  const kick = new Tone.Player('src/kick-electro01.wav').toMaster();
  ...
}

function repeat() {
  // Play back a single drum
  ...
  let kickInputs = document.querySelector(
    `.kick input:nth-child(${step + 1})`
    ...
  );
  ...
  if (kickInputs.checked) {
    kick.start();
  }
  ...
}
```

In addition, because browsers block automatic audio playback, we need to call resume() in response to a user gesture to get around this:

```javascript
document.documentElement.addEventListener("mousedown", function () {
  mouse_IsDown = true;
  // Resume the audio context on the first user gesture
  if (Tone.context.state !== "running") {
    Tone.context.resume();
  }
});
```
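The step logic of the drum machine can be sketched separately from the DOM and from Tone.js. In this illustrative version, each drum row is a boolean array that stands in for the eight checkboxes; `STEPS`, `drumsAtStep` and the pattern object are names I made up for the example, and the wiring to Tone.js is only outlined in the comments.

```javascript
const STEPS = 8; // matches the eight checkboxes per drum row

// pattern: one boolean array per drum, where true means "checkbox ticked".
// index: a counter that increases by one on every repeat of the loop.
// Returns the names of the drums that should sound on this step.
function drumsAtStep(pattern, index) {
  const step = index % STEPS; // wrap around so the loop repeats
  return Object.keys(pattern).filter((drum) => pattern[drum][step]);
}

// Example pattern (made up for illustration).
const pattern = {
  kick: [true, false, false, false, true, false, false, false],
  snare: [false, false, true, false, false, false, true, false],
  hihat: [true, true, true, true, true, true, true, true],
};

console.log(drumsAtStep(pattern, 0)); // [ 'kick', 'hihat' ]
console.log(drumsAtStep(pattern, 10)); // [ 'snare', 'hihat' ]  (10 % 8 = step 2)

// In the browser, a callback registered with
// Tone.Transport.scheduleRepeat(callback, "8n") could increment `index`,
// call drumsAtStep(), and start the matching Tone.Player for each name.
```

Keeping the pattern in plain arrays also makes it straightforward to save, load or randomize loops later.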

After finishing the whole demo, I have a better understanding of Web Audio and webcam input, but there is still more I would like to add, such as:

- Richer GUI elements for the music machine, so that you can control the steps and volume and choose from more drum types;

- Consider using a tracking library with better performance to achieve more detailed interaction, such as using fingers to play audio sources and click checkboxes;

- Do more experiments with the filter effects, and link their parameters to the mixed audio;

- Use MusicRNN, MusicVAE, etc. to process the audio, so that audio can be mixed in more modes;

- Consider tracking targets other than hands, such as the face with facemesh.